Abstract

Patents, which encapsulate crucial technical and legal information in text form and referenced drawings, present a rich domain for natural language processing (NLP). As NLP technologies evolve, large language models (LLMs) have demonstrated outstanding capabilities in general text processing and generation tasks. However, the application of LLMs in the patent domain remains under-explored and under-developed due to the complexity of patents, particularly their language and legal framework. Understanding the unique characteristics of patent documents and related research in the patent domain becomes essential for researchers to apply these tools effectively. Therefore, this paper aims to equip NLP researchers with the essential knowledge to navigate this complex domain efficiently. We introduce the relevant fundamental aspects of patents to provide solid background information. In addition, we systematically break down the structural and linguistic characteristics unique to patents and map out how NLP can be leveraged for patent analysis and generation. Moreover, we demonstrate the spectrum of text-based and multimodal patent-related tasks, including nine patent analysis and four patent generation tasks.

Similar content being viewed by others

Explore related subjects

Discover the latest articles and news from researchers in related subjects, suggested using machine learning.Avoid common mistakes on your manuscript.

1 Introduction

Patents, a form of intellectual property (IP), grant the holder temporary rights to suppress competing use of an invention in exchange for a complete disclosure of the invention. The concept was once established to promote and/or control technical innovation and progress (Frumkin 1947). The surge in global patent applications and the rapid technological progress pose formidable challenges to patent offices and related practitioners (Krestel et al. 2021). These challenges overwhelm traditional manual methods of patent drafting and analysis. Consequently, there is a significant need for advanced computational techniques to automate patent-related tasks. Such automation not only enhances the efficiency of patent and IP management but also facilitates the extraction of valuable information from this extensive knowledge base (Abbas et al. 2014).

Researchers have investigated machine learning (ML) and natural language processing (NLP) methods for the patent field with highly technical and legal texts (Krestel et al. 2021). In addition, the recent large language models (LLMs) have demonstrated outstanding capabilities across a wide range of general domain tasks (Zhao et al. 2023; Min et al. 2023). Moreover, the expansion of the latest general LLMs with a graphical component to form multimodal models (Huang et al. 2024) may further enhance the capabilities in processing patents, which include text and drawings. These models are promising to become valuable tools in managing and drafting patent literature, the crucial resource that documents technological advances.

However, compared to the significant success of LLMs in the general domain, the application of LLMs in patent-related tasks remains under-explored due to the texts’ and the field’s complexity. NLP and multimodal model researchers need to deeply understand the unique characteristics of patent documents to develop useful models for the patent field. Therefore, we aim to equip researchers with the essential knowledge by presenting this highly auspicious but widely neglected field to the NLP community.

Previous surveys reported the early stages of smart and automated methods for patent analysis (Abbas et al. 2014), first deep learning methods, which opened a wider range of still simpler patent tasks (Krestel et al. 2021), or specific individual patent tasks, such as patent retrieval (Shalaby and Zadrozny 2019). The recent advancements in language and multimodal models were unforeseen, particularly the performance boost when models are massively scaled up (Kaplan et al. 2020). Accordingly, we specifically delineate a survey of popular methodologies for patent documents with a special focus on the most recent and evolving techniques. We have included the two applications of patent analysis and generative patent tasks (Fig. 1). Whereas analysis focuses on understanding and usage of individual patent documents or a group of patents, generation tasks aim at automatically generating patent texts.

We provide a systematic survey of NLP applications in the patent domain, including fundamental concepts, insights on patent texts, development trends, datasets, tasks, and future research directions to serve as a reference for both novices and experts. Specifically, we cover the following topics:

-

1.

We provide an introduction to the fundamental aspects of patents in Sect. 2, including the composition of patents and the patent life cycle. This section is particularly for those readers still unfamiliar with it and a refresher for others.

-

2.

We analyze the unique structural and linguistic characteristics of patent texts with language processing and multimodal techniques to process these texts in Sect. 3. Readers will readily understand the challenges of automated patent processing and the development trends of NLP in the patent field.

-

3.

We present a detailed synthesis of patent data sources, alongside tailored datasets specifically designed for different patent tasks, in Sect. 4. With the collection of these ready-to-use datasets, we aim to eliminate the extensive time and effort for data preparation in the patent domain.

-

4.

We evaluate nine patent analysis tasks (subject classification, patent retrieval, information extraction, novelty prediction, granting prediction, litigation prediction, valuation, technology forecasting, and innovation recommendation) in Sect. 5 and four generation tasks (summarization, translation, simplification, and patent writing) in Sect. 6. Specifically, we systematically demonstrate task definitions and relevant methodologies in detail. We show a comprehensive yet accessible insight into this area, which should enable readers to grasp the nuances of the field more effectively.

-

5.

We identify current challenges and point out potential future research directions in Sect. 7. By highlighting these areas, we hope to encourage further research and development in automated patent tasks to stimulate more efficient and effective methods in the future.

2 Brief background

2.1 Patent document

Patent documents are central elements for the protection of intellectual property and also document inventions. Patents require applicants and/or inventors to publicly disclose their inventions in detail to secure exclusive rights and obtain benefits in return. Patent documents describe new inventions and delineate the scope of patent rights granted to patent holders. These documents are key parts of the patenting process and are publicly accessible typically 18 months after the application or the first filing date. The format and content can vary by jurisdiction but normally include the following elements. Figure 2 displays an example patent document.

Publication information includes the file number and date of patent (application) publication.

Title is the concise description of the invention.

Bibliometric information includes details about applicants, inventors, assignees, examiners, attorneys, etc.

Patent classification code defines the category of the patent. We introduce detailed information on patent classification schemes in Sect. 5.1.2.

Citations are lists of prior art and other patents referenced in the document or by examiners.

Abstract is a brief summary of the invention and its purpose.

Background contains basic information on the field of the invention and is supposed to list and appreciate the prior art, particularly in the patent literature.

Detailed description provides comprehensive details about the invention and specific embodiments, typically discussing the drawings.

Claims define the legal scope of the patent. Each claim is a single sentence, which describes the invention in specific features that make the invention novel and not easily derivable (obvious) from the state of the art (described by any source, not only patent documents).

Drawings are visual representations of the invention, disclosing important aspects of the invention as well as embodiments to support the textual description. Figure 3 shows an example patent drawing. Apart from patent texts, researchers also use patent drawings for patent analysis, which is introduced in Appendix A.

Example drawing from patent US 10,854,933. Many figures in patents are generic without the corresponding description. Drawings tend to be less generic in patents on pharmaceuticals or mechanics, often consisting of graphs, images, or models. Some drawings are also of poor resolution, pixelation, or low quality. The reference numbers indicate specific elements introduced in the patent description. The reference numbers have to be named by the same term consistently throughout the patent application, which can also substantially deviate from language conventions in the field. The reference numbers are typically used for invention features and listed in the claims

2.2 Patent life cycle

The patent life cycle encompasses several stages, from the initial conception of an invention to its eventual expiration. It can be broadly divided into pre-grant and post-grant phases (Fig. 4).

Pre-grant stage. In the beginning, inventors conceptualize, design, and develop their invention. If inventors hope to obtain patent protection for their invention, they need to apply to the patent office. To ensure novelty and inventiveness (non-obviousness), inventors may preventively search for existing patents and public disclosures to avoid unnecessary cost and effort in case of prior disclosures. Additionally, patent documents need drafting to describe the invention in detail, including its specifications, claims, abstract, and accompanying drawings. The drafting process typically requires the expertise of a patent professional, such as a patent engineer, attorney, or agent. After the patent application is submitted to the patent office, examiners at the office will screen and evaluate the application for compliance with formal, legal, and technical requirements. This examination typically involves correspondence of the examiner with the inventor or their representation, where clarifications, amendments, or arguments are submitted.Footnote 1 Notably, an assessment will determine if the invention meets the criteria for patentability, including novelty, inventiveness (non-obviousness), and typically less strictly commercial utility. The requirement of utility may be less strict as offices may see that as the applicant’s problem, except for certain cases. The substantive examination compares the invention with similar documents from the patent literature or any other public source dated earlier, which were found in an initial search by the office. Upon examination and potential resolution of any objections, the patent is either granted—rarely in its original, more frequently in a restricted form based on the identified prior art—or rejected. If the original priority date should be maintained, the invention must not be extended during the examination.Footnote 2

Post-grant stage. The granted patent is published, disclosing the details of the invention to the public potentially accompanying a previous application publication. As a remnant of previous pre-computer and pre-AI-search times, the offices classify patents by field of technology for easier search and management. Maintenance fees need to be paid regularly to keep the patent in force. The patent owner can enforce patent rights through legal action when infringement occurs. In addition, third parties can question the validity of patents, and patent owners would need to defend the challenges. Furthermore, companies can analyze patents for technology insights and derive strategies. Finally, the invention enters the public domain after the expiration of the related patents, allowing anyone to use it without infringement if no other rights cover those aspects.

2.3 Related surveys

Patent analysis. Various surveys in the past summarized certain aspects of patent analysis from a knowledge or procedural perspective. The work of Abbas et al. (2014) represents early research on patent analysis. These early methods included text mining and visualization approaches, which paved the way for future research. Deep learning for knowledge and patent management started more recently. Krestel et al. (2021) identified eight types of sub-tasks attractive for deep learning methods, specifically supporting tasks, patent classification, patent retrieval, patent valuation, technology forecasting, patent text generation, litigation analysis, and computer vision tasks. Some surveys reviewed specific topics of patent analysis. For example, Shalaby and Zadrozny (2019) surveyed patent retrieval, i.e., the search for relevant patent documents, which may appear similar to a web search but has substantially different objectives as well as constraints and has a legally-defined formal character. A concurrent survey introduced the latest development of patent retrieval and posed some challenges for future work (Ali et al. 2024). In addition, Balsmeier et al. (2018) highlighted how machine learning and NLP tools could be applied to patent data for tasks such as innovation assessment. It focused more on methodological advancement in patent data analysis that complements the broader survey of tools and models used in NLP for patent processing.

Patent usage. The patent literature records major technological progress and constitutes a knowledge database. It is well known that the translation of methods from one domain to another or the combination of technology from various fields can lead to major innovation. Thus, the contents of the patent literature appear highly attractive for systematic analysis and innovation design. However, the language of modern patents has substantially evolved and diverged from normal technical writing. Recent patent documents are typically hardly digestible for the normal reader and also contain deceptive elements added for increasing the scope or to camouflage important aspects. Appropriate data science techniques need to consider such aspects to mine patent databases for engineering design (Jiang et al. 2022). Data science applied to this body of codified technical knowledge cannot only inform design theory and methodology but also form design tools and strategies. Particularly in early stages of innovation, language processing can help with ideation, trend forecasting, or problem–solution matching (Just 2024).

Patent-related language generation. Whereas the above-listed techniques harvest the existing patent literature for external needs, the strong text-based patent field suggests the use of language models in a generative way. Language processing techniques can, for instance, translate the peculiar patent language to more understandable technical texts, summarize texts, or generate patent texts based on prompts (Casola and Lavelli 2022). The recent rapid development of generative language models, especially large language models (LLMs) (Zhao et al. 2023) may stimulate more patent-related generation tasks.

Related topics. A variety of other tasks and applications also have close ties to the patent domain. For example, intellectual property (IP) includes copyrights, trademarks, designs, and a number of more special rights beyond patents. The combined intellectual property literature allows concordant knowledge management, technology management, economic value estimation, and information extraction (Aristodemou and Tietze 2018). The patent field is closely related to the general legal domain, because they share procedural aspects and the precision of language. Likewise, most patent professionals have substantial legal training or a law degree, and their work often involves legal aspects. Accordingly, NLP techniques in the more general field of law may influence patent-related techniques in the future (Katz et al. 2023).

3 Insights into patent texts

Patent language can differ from normal text in multiple aspects, which stimulates a variety of research and entails challenges for the field.

3.1 Long context

Most research so far has focused on short texts, such as patent abstracts and claims. However, titles and abstracts of patents can be surprisingly generic. Therefore, using patent descriptions that provide comprehensive details and specific embodiment of the invention for patent analysis is highly important, which is neglected by most of the current research. A possible reason is that previous language models cannot handle such long inputs. According to a recently proposed patent dataset (Suzgun et al. 2023), the average number of tokens of a patent description exceeds 11,000, which is longer than the context limit of many previous language models. For example, Llama-2 supports the context length of 4,000 tokens (Touvron et al. 2023). The reason for the limited context length of many language models is the rapid growth of computational complexity associated with self-attention. It grows because the number of attention relationships increases with the context length. As self-attention often considers every pair of tokens, the computational complexity can grow with the context length squared (\({\mathcal {O}}(n^2)\)). The long context of patent descriptions causes critical challenges for patent analysis.

Notably, researchers have investigated increasing or otherwise handling the context length of LLMs (Chen et al. 2023; Jiang et al. 2023; Xiong et al. 2023). Xiong et al. (2023), for example, introduced a series of long-context LLMs, which can support context windows up to 32,768 tokens. A mix of reduced short-range and long-range attention instead of exhaustive attention can reduce the computational and memory burden (Kovaleva et al. 2019; Beltagy et al. 2020). Moreover, the latest very large models, such as GPT-4Footnote 3 (OpenAI 2023) and Llama-3.1Footnote 4 (Dubey et al. 2024) even naturally support a context length of 128,000 tokens, which appears promising to process long patent descriptions. Other LLMs also indicate a trend towards long context capabilities, such as Falcon-180B (Almazrouei et al. 2023), Gemini 1.5Footnote 5 (Reid et al. 2024) and Claude 3.5Footnote 6 (Anthropic 2024).

3.2 Technical language

The patent language is highly technical and artificial, including specialized terminology, legal phrases, and sometimes newly coined terms to describe new concepts that may not yet have been widely recognized. Patents regularly define their own terms. As those terms are often coined by an attorney who needs to name an element, they can substantially deviate from everyday language usage and also the specific technical field. Such self-defined terms are often highly artificial and not likely to occur in other relevant documents or even in any dictionary.

Hence, the technical language causes significant challenges for general LLMs for patent analysis, which are trained on normal texts and a large share of more colloquial language. Thus, LLMs may not capture the patent context information effectively, because the important technical terms can be completely new to the LLMs or have different meanings from its pre-training corpora. Embeddings based on distance metrics for synonymity or semantic relationships of terms may not work if terms are defined contrary to their normal use or are entirely new.

3.3 Precision requirement

The precision requirement and information density of patent texts are higher than in everyday language.Footnote 7 The patent language focuses more on precision and accuracy than on readability. Patent texts must be precise and meticulously described to ensure the patent is both defensible and enforceable. Such precision requirement typically leads to high repetitiveness in both terminology and structure of sentences, paragraphs, and sections. Furthermore, sentences are often overburdened because they use relative or adverbial clauses to include specifications for precision or add examples for a wider scope. Additionally, each terminology must be used consistently throughout the document. That means a technical term must not be replaced by other words unless the patent explicitly states that both are identical. In contrast, everyday texts and academic literature tend to vary and paraphrase the wording for better readability. In addition, the patent claim is carefully crafted to define the precise scope and boundaries of the invention’s protection, ensuring that the patent can withstand legal scrutiny.

The precision requirement of patent texts complicates the patent generation tasks because LLMs are likely to generate slightly different words or phrases. Due to the requirement for large quantities of data, most pre-training corpora for LLMs tend to be colloquial and relatively informal. Another smaller portion comes from literary texts, which may be of higher quality but typically prioritize style and linguistic originality over precision and accuracy.

3.4 LLMs for patent processing

Patent texts are distinct from everyday texts with respect to long context length, in-depth technical complexity, and high precision to ensure the patent can be granted, defended, and enforced. This type of language often necessitates specific training and experience in patents for accurate reading and interpretation. However, human readers usually struggle with certain aspects of patent texts, such as ambiguous names, phrases that conflict with the readers’ prior understanding, or numerous terms that the description redefines within the context of the specific patent itself. The dense, specialized terminology and unconventional syntactic structures common in patents often pose significant barriers to comprehension, even for those with experience in technical fields. In contrast, LLMs trained on datasets specifically tailored to patent language are theoretically well-equipped to handle these challenges. They are designed to process complex syntactic structures, manage long-range dependencies, and incorporate newly coined terms or domain-specific jargon that diverges from everyday language. By leveraging the specialized vocabulary of experts in the field, these models can navigate the nuanced requirements of patent texts with a level of consistency and scope that may exceed human capabilities. Therefore, the recent advancements in LLMs (Zhao et al. 2023) for generative tasks and language processing appear ideally suited for the unique characteristics of patent literature. They promise large benefits in areas such as patent drafting, prior art search, and examination.

Despite the apparent fundamental compatibility of LLMs for knowledge extraction and language processing, the application of LLMs in the patent domain remains underdeveloped and not yet highly prominent. Previous studies used word embeddings (e.g., Word2Vec (Mikolov et al. 2013)) and deep learning models (e.g., LSTM (Hochreiter and Schmidhuber 1997)) for patent analysis tasks. As transformers (Vaswani et al. 2017) showed significant potential in text processing, researchers started to develop transformer-based language models, such as BERT (Devlin et al. 2018) and GPT (Radford et al. 2018). The recent large-sized models with outstanding capabilities have not been extensively investigated in the patent field. Some representative general LLMs that are worth exploring include the Llama-3 family (Dubey et al. 2024), Mistral (Jiang et al. 2023), Mixtral (Jiang et al. 2024), GPT-4 (OpenAI 2023), Claude 3 (Anthropic 2024), DeepSeek-V3 (Liu et al. 2024), and Gemini 1.5 (Reid et al. 2024). Researchers have also developed patent-specific LLMs, such as PatentGPT-J (Lee 2023) and PatentGPT (Bai et al. 2024). However, PatentGPT-J has shown limited performance in patent text generation tasks (Jiang et al. 2024), and PatentGPT, though promising, is a recent development that is not yet publicly available. Significant work related to patent-specific LLMs remains to be done. Moreover, since patents represent a type of legal document, law-specific LLMs are also worth investigating, such as SaulLM (Colombo et al. 2024).

Previous research efforts have included patent analysis, data extraction, and automation of procedures. However, the lack of benchmark tests, such as reference datasets and established metrics, hinders performance evaluation and comparison across different methods. The effectiveness of LLMs depends on the quality of training data. To this end, we have compiled sources and databases of patents with curated datasets tailored for various patent-related tasks in Sect. 4. Although patent offices have released raw documents for years, publicly available datasets for specific tasks remain scarce. Numerous studies continue to use closed-source data for training and evaluation. Furthermore, patent offices do not provide pre-processed data or broad access to well-structured documents from the process around patents. Although in many countries, patent documents as the disclosure of inventions are considered public domain, they offer only the manual review of individual documents.Footnote 8 The pre-processing steps to formulate structured patent datasets generally involve: segmenting patent documents into clearly defined sections to enhance downstream tasks, such as abstract, claims, description, etc., and rectifying irregularity issues, such as missing fields, erroneous characters, or formatting issues.

Previous research prominently focuses on short text parts of patents, such as titles and abstracts. However, these texts are typically highly generic and include the least specific texts with little information about the actual invention. These texts also take little time during drafting, which renders the automated creation of these parts less helpful. By contrast, patent descriptions need to include all details of an invention, and patent claims clearly define the legal boundaries of the invention’s protection, the so-called scope. Since these texts are longer and contain much more useful information, they are worth more attention for both patent analysis and generation.

3.5 Multimodal techniques

A patent is not merely a text document but can include drawings, i.e., visual components. Thus, multimodal methods such as CLIP (Radford et al. 2021) and vision transformers (Dosovitskiy 2020) may unlock this potential. Multimodal methods in patent processing integrate diverse data types, such as text, images, and quantitative information, to enhance tasks such as classification and retrieval. The combination of their complementary strengths of different modalities may lead to more comprehensive and accurate results. Lee et al. (2022) for instance introduced a multimodal deep-learning model that combined textual content with quantitative patent information, which resulted in improved performance for patent classification tasks. Additionally, multimodal approaches that integrate textual descriptions with visual content have shown promise in enhancing patent retrieval (Lo et al. 2024). Furthermore, Lin et al. (2023) proposed multimodal methods to extract structural and visual features to effectively measure patent similarity. Such multimodal similarity detection may improve the efficiency of patent examination. However, as illustrated in Fig. 3, many drawings in patents are generic without the corresponding description, and some drawings suffer from poor resolution, pixelation, or low quality. This may be one of the reasons why current multimodal methods in patent processing are scarce. Moreover, textual elements, especially the claims and descriptions, are the primary carriers of legally binding information in patents, particularly features. While drawings provide valuable support and document embodiments, the text is essential for defining the scope, novelty, and context of each invention. Consequently, the survey particularly examines NLP approaches and includes representative multimodal methods where available.

4 Data

4.1 Data sources

Patent applications are submitted to and granted by patent offices. To stimulate innovation and serve the society, patent offices provide detailed information about existing patents, patent applications, and the legal status of patents—previously on paper, nowadays online. Large patent offices include the United States Patent and Trademark Office (USPTO) and the European Patent Office (EPO). These offices also provide access to download bulk datasets or tools to explore and analyze patent data. For example, PatentsView is a platform developed by the USPTO, which provide accessible and user-friendly interfaces to explore US patent data, with various tools for visualization and analysis. Apart from patent offices, there are searchable databases that contain patents from multiple countries and offices, such as Google Patents. We list a broad range of patent offices and databases in Table 1.

4.2 Curated data collections

Datasets. Patent offices provide large-scale raw data in the patent domain. Developers and researchers rely on well-curated datasets for development and research. We summarize representative publicly available curated patent datasets in Table 2. We aim to reduce the time and effort for data searching in the patent domain by presenting these ready-to-use datasets.

The number of curated datasets for patent classification and patent retrieval is typically larger than others because the data collection process is simple. Each granted patent is assigned classification codes and contains referenced patents. Hence, researchers can formulate the datasets by collecting and filtering patents without further labeling. For patent novelty quantification and prediction, Arts et al. (2021) considered patents connected to awards such as a Nobel Prize as novel because they radically impacted technological progress and patenting. In contrast, patents were considered lacking novelty if they were granted by the United States Patent and Trademark Office but simultaneously rejected by both the European Patent Office and Japan Patent Office. However, this collection may deviate from the formal definition of novelty introduced in Sect. 5.4.1. For patent simplification, there is only a silver standard dataset (Casola et al. 2023). In text generation tasks, texts written by humans are usually considered the gold standard. Since patent simplification requires extensive expertise and effort, it is expensive and time-consuming to obtain a gold standard for patent simplification. Thus, Casola et al. (2023) adopted automated tools to generate simpler texts for patents and named it the silver standard.

Notably, the Harvard USPTO Patent Dataset (HUPD) (Suzgun et al. 2023) is a recently presented large-scale multi-purpose dataset. It contains more than 4.5 million patent documents with 34 data fields, providing opportunities for various tasks. The corresponding paper demonstrates four types of usages of this dataset, including granting prediction, subject classification, language modeling, and summarization.

Shared tasks. Some organizations proposed shared tasks and workshops in the patent domain to facilitate related research. Every participant worked on the same task with the same dataset to enable comparisons between different approaches.

The intellectual property arm of the Conference and Labs of the Evaluation Forum (CLEF-IP)Footnote 9 focuses on evaluation tasks related to intellectual property, particularly in patent retrieval and analysis. Each task usually contains a curated patent dataset for desired aims, including various information such as text, images, and metadata (Piroi and Hanbury 2017).

The Japanese National Institute of Informatics Testbeds and Community for Information access Research (NTCIR)Footnote 10 provides datasets and organizes shared tasks to facilitate research in information retrieval, natural language processing, and related areas. Patent-related tasks at the NTCIR range from patent retrieval and classification to text mining and machine translation (Utiyama and Isahara 2007; Lupu et al. 2017).

TREC-CHEMFootnote 11 is a part of the Text REtrieval Conference (TREC) series, specializing in chemical information retrieval. It contains patent datasets that are rich in chemical information. For example, the dataset from TREC-CHEM 2009 contains 2.6 million patent files registered at the European Patent Office, United States Patent and Trademark Office, and World Intellectual Property Organization (Lupu et al. 2009).

5 Patent analysis tasks

Patent analysis tasks focus on understanding and usage of patents. We divide patent analysis tasks into four main types, subject classification, information retrieval, quality assessment, and technology insights. Patent subject classification (Sect. 5.1) is one of the most widely studied topics in the patent domain, where the categories of patents are predicted based on their content. Information retrieval tasks consist of two sub-tasks, specifically patent retrieval (Sect. 5.2) and information extraction (Sect. 5.3). While patent retrieval aims at retrieving target documents from databases, information extraction focuses on extracting desired information from patent texts for further applications. Quality assessment refers to evaluating the quality of patents, which includes novelty prediction (Sect. 5.4), granting prediction (Sect. 5.5), litigation prediction (Sect. 5.6), and patent valuation (Sect. 5.7). As novelty is essential for patents, early prediction of novelty and auxiliary methods for novelty assessments could ensure patent quality before filing and improve efficiency. Granting prediction forecasts whether the patent office will grant a patent application. The process involves further aspects beyond novelty and inventiveness, such as formal requirements of the language, figures, and documents. A low-quality patent is likely to be rejected by the examiner. Litigation prediction measures the odds that the file may at some point become the subject of litigation. For example, patents with unclear or ambiguous claims or very scarce descriptions tend to be more likely to cause litigation cases. Patent valuation refers to measuring the value of the patents, which is a reflection of patent quality and scope. Technology insights are the usage of patents, consisting of technology forecasting (Sect. 5.8) and innovation recommendation (Sect. 5.9). Since patents contain extensive emerging technology information, researchers can analyze patents to predict future technological development trends or suggest new ideas for technological innovation.

5.1 Automated subject classification

5.1.1 Task definition of subject classification

The automated subject classification task is a multi-label classification task. The aim is to predict patents’ specific categories or classes based on patent content, including title, abstract, and claims. Given a sequence of inputs \(x=[w_1, w_2, \ldots, w_n]\), the objective is to predict the label \(y \in \{y_1, y_2, \ldots, y_m\}\). Since patents may belong to multiple classes, sometimes more than one label needs predicting. This classification is crucial for organizing patent databases, facilitating patent searches, and assisting patent examiners in evaluating the novelty.

5.1.2 Classification scheme

Two of the most popular classification schemes are the International Patent Classification (IPC) and Cooperative Patent Classification (CPC) systems. These IPC/CPC codes are hierarchical and divided into sections, classes, sub-classes, main groups, and sub-groups. For example, we list the breakdown of the F02D 41/02 label using the IPC scheme in Table 3.

5.1.3 Evaluation

Most research uses one or more evaluation measures originated from Fall et al. (2003). As Fig. 5 illustrates, there are three different evaluation methods, namely top prediction, top N guesses, and all categories. Top prediction only checks whether the top-1 prediction is the same as the main class. In top N guesses, the result is successful if one of the top-n predictions matches the main class, which is more flexible compared to the top-1 prediction. On the other hand, the all-categories method checks whether the top-1 prediction is included in the set of the main class and all incidental classes.

Illustration of three evaluation methods for patent classification, where 1, 2,..., n are the top-n predictions, MC stands for main class, and IC is incidental class (Fall et al. 2003)

5.1.4 Methodologies for automated subject classification

We summarize the mainstream techniques for patent classification and categorize them into three types, including feature extraction and classification, fine-tuning transformer-based language models, and hybrid methods (Fig. 6).

Feature extraction and classifier. Researchers extract various features from patent documents and adopted a classifier for prediction based on the features (Shalaby et al. 2018; Hu et al. 2018; Abdelgawad et al. 2019; Zhu et al. 2020).

Most of the research used text content for prediction. Before the prevalence of deep learning, researchers explored text representations, such as unigrams, bigrams, and syntactic phrases, for automated patent classification (D’hondt et al. 2013), but the performance was limited. Nowadays, the commonly used text representation is word embedding, a deep learning-based pre-trained model that establishes a quantitative space and paradigmatic relationships between words. Roudsari et al. (2021) compared five different text embedding approaches, including bag-of-words, GloVe, Skip-gram, FastText, and GPT-2. Bag-of-words is based on word frequency and ignores semantic information. GloVe, Skip-gram, and FastText are deep learning methods that capture word meaning but not word contexts. In contrast, GPT-2 is based on transformer architecture and is capable of capturing complex contextual information. The results demonstrated that GPT-2 performed the best with a precision of 80.52%, indicating that transformer-based embedding approaches may perform better than other traditional or deep-learning embeddings. In addition, word embeddings are typically pre-trained on heterogeneous corpora, such as Wikipedia, which lets them lack domain awareness. Therefore, Risch and Krestel (2019) trained a domain-specific word embedding for patents and incorporated recurrent neural networks for patent classification. This method improved the precision by 4% compared to normal word embedding, demonstrating the effectiveness of domain adaptation. Apart from word embeddings, researchers also adopted sentence embedding for feature extraction (Bekamiri et al. 2021). Other research calculated the semantic similarity between patent embeddings obtained from Sentence-BERT (Reimers and Gurevych 2019) and used the k-nearest neighbors (KNN) method for classification with a precision of 74%. Although the performance was not strong enough, the use of similarity and KNN provided a different view of the classification approach.

In addition, some research studied variations of network architecture and optimization methods. Shalaby et al. (2018) improved original paragraph vectors (Le and Mikolov 2014) to represent patent documents. The authors used the inherent structure to derive a hierarchical description of the document, which was more suitable for capturing patent content. In addition, Abdelgawad et al. (2019) analyzed hyper-parameter optimization methods for different neural networks, such as CNN, RNN, and BERT. The results illustrate that optimized networks could sometimes yield 6% accuracy improvement. Similarly, Zhu et al. (2020) used a symmetric hierarchical convolutional neural network and improved the F1 score by approximately 2% on the Chinese short text patent classification task compared to a conventional convolutional neural network.

Alternatively, images can also serve for automated patent classification, which is introduced in Appendix A.1.

Fine-tuning transformer-based language models. Fine-tuning tailors the pre-trained model to the patent classification task. Lee et al. fine-tuned the BERT model for the patent classification task and achieved 81.75% precision (Lee and Hsiang 2020b), which was more than 7% higher than traditional machine learning under the same setting, using word embedding and classifiers (Li et al. 2018). Moreover, Haghighian et al. (2022) compared multiple transformer-based models, including BERT, XLNet, RoBERTa, and ELECTRA, where XLNet performed the best regarding precision, recall, and F1 score. Furthermore, research found that incorporating further training approaches can optimize the fine-tuning process. For example, Christofidellis et al. (2023) integrated domain-adaptive pre-training and used adapters during fine-tuning, which improved the final classification results by about 2% of F1 score. Transformer-based language models have demonstrated better effectiveness than traditional text embedding and become the mainstream method for text-based problems.

Another study treated this hierarchical classification task as a sequence generation task. Risch et al. (2020) implemented transformer-based models to follow the sequence-to-sequence paradigm, where the input was patent texts and the output was the class, such as F02D 41/02. However, the highest accuracy tested on their datasets reached only 56.7%, which demonstrated that the sequence-to-sequence paradigm for patent classification was mediocre.

Hybrid methods. Hybrid methods refer to combining different approaches to infer predictions. TechDoc (Jiang et al. 2022) is a multimodal deep learning architecture, which synthesizes convolutional, recurrent, and graph neural networks through an integrated training process. Convolutional and recurrent neural networks served to process image and text information respectively, while graph neural networks served for the relational information among documents. This multimodal approach was able to reach greater classification accuracy than previous unimodal methods. The advantage of multimodal models is to leverage different modalities for a comprehensive prediction.

Additionally, Zhang et al. (2022) used a multi-view learning method (Zhao et al. 2017) for patent classification. Multi-view learning integrates and learns from multiple distinct feature sets or views of the same data, aiming to improve model performance by leveraging the complementary information available in different views. In a general multi-view learning pipeline, developers specify a model in each view. They aggregate and train all models collaboratively based on multi-view learning algorithms. Zhang et al. (2022) used the two views of patent titles as well as patent abstracts and tested multi-view learning on a Chinese patent dataset to demonstrate its effectiveness and reliability.

Moreover, a recent study investigated ensemble models that can combine multiple classifiers (Kamateri et al. 2023). While multi-view learning exploits diverse information from different data sources for a more comprehensive understanding, ensemble methods focus on combining predictions from multiple models to improve prediction accuracy and robustness by reducing the models’ variance and bias, which may all use the same data view. The authors experimented with different ensemble methods and finally achieved 70.71% accuracy, which was higher than using only one classifier.

For a synopsis, we list some representative papers in Table 4, including data sources, dataset size, parts used for training, number of classes, methods, and results. The table shows that researchers use different datasets and metrics to test their models, which complicates the comparison of various methods. Hence, we call for standard benchmarks for patent classification to facilitate the development. In addition, LLMs demonstrate more promising results compared to traditional ML models. However, most research is still based on outdated models, such as GPT-2. The application of recent large-sized LLMs to this task would enhance the effectiveness. Additionally, most research has focused on short texts for patent classification, such as abstracts and claims. Nonetheless, titles and abstracts of patents are generic and do not disclose much relevant information. We recommend that future research focuses more on detailed patent descriptions that contain detailed and specific information about an invention. Furthermore, domain-adaptive methods that adapt standard LLMs to the patent domain are worth investigating to optimize the performance.

5.2 Patent retrieval

There are three types of retrieval tasks, prior-art search, patent landscaping, and freedom to operate search. Since previous research mainly focused on prior-art search, we introduce this task first in Sect. 5.2.1 with its corresponding methods in Sect. 5.2.2, followed by patent landscaping in Sect. 5.2.3 and freedom to operate search in Sect. 5.2.4.

5.2.1 Task definition of prior-art search

Prior-art search refers to, given a target patent document X, automatically retrieving K documents that are the most relevant to X from a patent database.Footnote 12 This process is crucial for patent examiners to assess the patentability of a new patent application. Prior-art search is not trivial, due to the intricate patent language and the different terms used in various patent descriptions. In principle, documents from distant fields, including both patent literature and other sources, can compromise the novelty of a new application if they disclose the same combination of patent features. However, these different fields often use distinct nomenclature across all word classes, including nouns, verbs, and adjectives. Additionally, many patents and patent applications create their own terms, which can significantly differ from everyday language and even from the terminology used in the technical field. This creation of terms is not always intentional. Attorneys and patent professionals, who may not be deeply familiar with a specific field, might need precise terms for their descriptions and claims and thus decide to invent names spontaneously. These self-defined terms are often highly artificial and may not appear in any other relevant documents. Such terms do not need to be listed in any dictionary.

5.2.2 Methodologies for prior-art search

Researchers have intensively invested in patent retrieval tasks and achieved some encouraging accomplishments. We summarize the general retrieval process in Fig. 7. Data types for further pre-processing include text, metadata, and images. Multiple methods can transform patent data into numerical features, such as the statistical method term frequency-inverse document frequency (TF-IDF), which is widely known from text-based search systems, and deep-learning-based word embedding. Relevance ranking algorithms to retrieve the most relevant documents include best-matching BM25, which is a bag-of-word-type method, and cosine similarity derived from the inner vector product.

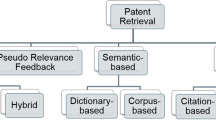

We focus on text-based patent retrieval in this section and introduce other methods in Appendix A.2. Traditional methods are keyword-based search and statistical approaches that can rank document relevance, such as the BM25 algorithm (Robertson et al. 2009). Keyword-based methods refer to a search for exact matches in the target corpus according to the input query. A previous survey summarized all keyword-based methods into three categories, namely query expansion, query reduction, and hybrid methods (Shalaby and Zadrozny 2019). On the other hand, statistical methods exploit a document’s statistics, such as the frequency of specific terms, to calculate a relevance score based on the inputs. For example, the BM25 algorithm calculates the sore using the occurrence of the query terms in each document. These methods are straightforward but the significant semantic and context information has not been explored. Furthermore, each patent document may use its own nomenclature, which may be defined in the description counter-intuitively to daily use.

Researchers proposed two types of improvement to enhance the patent retrieving performance, from keyword-based to full-text-based and from statistical methods to deep-learning methods. Errors occur inherently in keyword-based methods because different keywords can represent the same technical concepts across various disciplines. Hence, Helmers et al. (2019) adopted entire patent texts for similarity comparison and evaluated various feature extraction methods, such as bag-of-words, Word2Vec, and Doc2Vec. The results demonstrated that full-text similarity search could bring better retrieval quality. Moreover, a large body of research studied different deep learning-based embeddings that transfer texts to numerical values for similarity calculation (Sarica et al. 2019; Hain et al. 2022; Hofstätter et al. 2019; Deerwester et al. 1990; Althammer et al. 2021; Trappey et al. 2021; Vowinckel and Hähnke 2023). Since word embeddings cannot capture contextual information at a higher level, Hofstätter et al. (2019) improved the Word2Vec model by incorporating global context, which yielded up to 5% increase in mean average precision (MAP). At the paragraph level, Althammer et al. (2021) evaluated the BERT-PLI (paragraph-level interactions), which is specifically designed for legal case retrieval (Shao et al. 2020), in both patent retrieval and cross-domain retrieval tasks. However, the authors observed that the performance did not surpass the BM25 baseline and indicated that BERT-PLI was not beneficial for patent document retrieval. In another work, Trappey et al. (2021) trained the Doc2Vec model, an embedding that can capture document-level semantic information, based on patent texts for patent recommendation. Patent recommendation aims to retrieve target patent documents from the database. The results showed that Doc2Vec led to more than 10% improvement compared to bag-of-words methods or word embeddings. This research suggests that document-level embeddings are more promising for document retrieval because they can effectively capture context information.

LLMs have demonstrated effectiveness in text retrieval tasks (Ma et al. 2023). Thus, LLMs for patent prior-art search are a promising research direction. In addition, studies have shown that integrating retrieval into LLMs can improve factual accuracy (Nakano et al. 2021), downstream task performance (Izacard et al. 2023), and in-context learning capabilities (Huang et al. 2023). These retrieval-augmented LLMs are well-established for handling question-answering tasks (Xu et al. 2024). The application of retrieval-augmented generation in the patent domain is another interesting research direction.

5.2.3 Patent landscaping

Patent landscaping aims to retrieve patent documents related to a particular topic. Landscaping might have a larger overall strategic value for companies and be more related to the machine-learning topic. However, patent landscaping is much less investigated compared to prior-art search.

It is straightforward to relate landscaping to prior-art search in two ways. First, we can consider the target topic as a keyword for patent retrieval and use keyword-based methods to retrieve documents from the database. Alternatively, we can find seed patents to represent the topic and retrieve documents that are related to the seed patents as the result (Abood and Feltenberger 2018).

Furthermore, researchers developed classification models for patent landscaping, which classify whether a patent belongs to a given topic (Choi et al. 2022; Pujari et al. 2022). Choi et al. (2022) concatenated text embedding of abstracts and graph embedding of subject categories for patent representations. Subsequently, the authors added a simple output layer to conduct the necessary binary classification task. To stimulate the research on patent-landscaping-oriented classification, Pujari et al. (2022) released three labeled datasets with qualitative statistics.

5.2.4 Freedom-to-operate search

The freedom-to-operate (FTO) search, also known as the clearance search, is a specific type of patent-related research. The aim is to determine if a particular technology or product would be covered by any active intellectual property rights of another party. This search is critical for companies before launching a new product or service in the market and may be requested by investors as part of a due-diligence process.

This task shares similarities with a prior-art search but entails an important difference: The search for prior art takes the technology in question and checks if any prior record (not limited to patent documents or active patents) alone (novelty) or in combination (inventiveness/obviousness) contains all the features of the technology. Thus, the search analyzes if the technology is entirely part of the prior art. The documents or other records of the prior art anticipating the technology may also contain more features in their claims. In contrast, the search for freedom to operate checks if there is any active patent (or application still in examination), where an independent claim has fewer features (constituting a more general invention) than the technology in question requires. Thus, individual claims of relevant prior-art documents are practically in their entirety included in the technology. Accordingly, although a technology might be patentable as well as granted due to its novelty and inventiveness, it could still be covered by an earlier patent or pending application if it incorporates the features of the prior art alongside some additional nonobvious features. Therefore, the new invention would be classified as a more specific dependent invention. Owners of such overlapping earlier patents could therefore interfer with the use of such dependent IP.

Following the task definition, a freedom-to-operate search needs to analyze the claims of potentially relevant patents (applications) in detail and break down the technology in question. Hence, the automated retrieval of targeted patent claims is the core of this task. Few studies have investigated this type of retrieval task. Freunek and Bodmer (2021) trained BERT for the freedom-to-operate search process. They cut patent descriptions into pieces and use BERT to retrieve relevant claims from a constructed dataset. Their report demonstrated that BERT was able to identify relevant claims in small-scale experiments. As this task is important but widely neglected by the community, we introduce it here and suggest it for future research.

5.3 Information extraction

5.3.1 Task definition of information extraction

The process of extracting specific information from a text corpus is called information extraction. The goal of information extraction is to transform textual data into a more structured format that can be easily processed for various applications, such as data analysis. Hence, researchers usually use information extraction as a support task for patent analysis. Figure 8 demonstrates how information extraction is applied in the patent domain. Rule-based and deep-learning-based methods are two main streams of information extraction. The extracted information can be entities, relations, or knowledge graphs, which are constructed based on entities and relations. This information can serve for further tasks, such as patent analysis and patent recommendations for companies.

5.3.2 Methodologies for information extraction

Rule-based methods. Traditional extraction methods are rule-based. Researchers manually pre-define a set of rules and extract desired information based on the rules. Chiarello et al. (2019) designed a rule-based system to extract affordances from patents. For example, one of the rules was “The term user followed by can and adverbs, such as readily efficiently, quickly and easily.” The authors used the extracted results to evaluate the quality of engineering design. Another study devised rules according to the syntactic and lexical features of claims to extract facts (Siddharth et al. 2022). The authors integrated and aggregated these facts to obtain an engineering knowledge graph, which could support inference and reasoning in various engineering tasks.

Well-defined rules can lead to precise extraction so that this process is transparent and interpretable. However, creating and maintaining rules can be time-consuming, difficult, and biased. Moreover, rule-based methods may struggle with the variability and complexity of natural language.

Deep-learning-based methods. Deep learning methods require labeled datasets that include entities or relations for training. There are different network architectures for model training, such as long short-term memory (LSTM) (Chen et al. 2020) and transformers (Son et al. 2022; Puccetti et al. 2023; Giordano et al. 2022). Notably, Son et al. (2022) stated that most patent analysis research focused on claims and abstracts but neglected description parts that contain essential technical information. The reason may be the notably larger length of the description, which requires appropriate models that can load such text length. Thus, the authors proposed a framework for information extraction through patent descriptions based on the T5 model.

Deep-learning methods can handle a wide variety of language expressions and are easily scalable with more data and computational power. Moreover, deep learning can capture complex patterns and dependencies in language. Nonetheless, deep learning requires large quantities of high-quality annotated data and computation resources for training.

5.3.3 Applications of information extraction

Patent analysis. Patent analysis involves the analysis of patents with respect to multiple aspects, such as evaluating patent novelty or quality, and forecasting technology trends. For example, Chiarello et al. (2019) extracted sentences with a high likelihood of containing affordance from patents to evaluate the quality of engineering design, for example: “The user can easily navigate a set of visual representations of the earlier views.” Puccetti et al. (2023) identified technologies mentioned in patents to anticipate trends for an accurate forecast and effective foresight.

Patent recommendation. Patent recommendation refers to suggesting relevant patents to users based on their interests, research, or portfolio. Deng and Ma (2021) extracted the semantic information between keywords in the patent domain and constructed weighted graphs of companies and patents. The authors compared the distance based on weighted graphs to generate recommendations. Chen and Deng (2023) extracted connectivity and quality attributes for pairs of patents and companies. Based on knowledge graphs and deep neural networks, the authors designed an interpretable recommendation model that improved the mean average precision of best baselines by 8.6%.

Engineering design. Engineering design is a creative process that involves defining a problem, conceptualizing ideas, and implementing solutions. The goal is to develop functional, efficient, and innovative solutions to meet specific requirements. Designers can gain insight by analyzing problems and principal solutions extracted from patent documents. Giordano et al. (2022) adopted transformer-based models to extract technical problems, solutions, and advantageous effects from patent documents and achieved an F1 score of 90%. The extracted information helps reveal valuable information hidden in patent documents and generate novel engineering ideas. Similarly, Jiang et al. (2023) used pre-trained models to identify the motivation, specification, and structure of inventions with the accuracy of 63%, 56%, 44% respectively compared to expert analysis. From design intent to specific solutions, designers can review patents from a systematic perspective to gain better design insights.

The recent LLMs have shown outstanding capabilities in information extraction, such as in complex scientific texts (Dunn et al. 2022) and medical domain (Goel et al. 2023). Therefore, we anticipate the application of LLMs in the patent field to improve the quality of information extraction.

5.4 Novelty and inventiveness prediction

5.4.1 Definition of patent feature, novelty, and inventiveness

Novelty and inventiveness have a clear legal definition in most jurisdictions,Footnote 13 which may strongly deviate from common associations (European Patent Office 2000; United States Patent and Trademark Office 2022). An invention is conceived as a combination of features. It is novel if there is no older document or other form of disclosureFootnote 14 that alone includes and/or describes all essential features of the invention. In contrast, an invention may be considered inventive for two reasons: (1) All prior disclosures combined do not reveal each of its essential features. (2) Experts in the field would not find it obvious to integrate any missing features, for instance, according to their standard practice within the field.

Thus, the assessment of novelty critically relies on the concept of features. The features are the elements the invention needs to comprise to be the invention and are outlined in the patent claims. The independent claims list the essential features. For example, features can be physical elements and objects (typically nouns together with further specifiers), properties (often adjectives), or processing steps in a method.

Importantly, features in many jurisdictions cannot be implicitly negative, i.e., a missing property. Some offices allow the exclusion of a specific technology from the prior art through explicit negative limitations, but only if the description clearly states the absence of the feature as a property of the invention. Later exclusion of features in the claims based on merely an absence of the feature in the description is not possible. For example, a claim could specify “not using cloud storage” to clarify that the claimed invention operates solely on local servers. This limitation would be valid if the description explicitly states an advantage or purpose for not allowing cloud storage. The false negative rate (FPR) is particularly useful to measure the model’s performance in handling negative limitations, as it tracks instances where the model incorrectly interprets negative limitations. A low FPR is ideal and would indicate that the model rarely misinterprets positive statements as negative limitations.

The same feature can have very different names in different documents or even be denoted by a neologism well-defined in corresponding invention descriptions. The high variability of terminology between different documents is a major challenge for the examination and also for LLMs. Therefore, novelty prediction is a well-specified task by law and exhibits a high level of mathematical precision atypical of other language-related tasks. In contrast, inventiveness, determining whether the addition of certain features to existing technology is obvious to an expert, can often be ambiguous.

5.4.2 Task definition of novelty and inventiveness prediction

Novelty and inventiveness prediction is a binary classification task, aiming to determine whether a new patent is novel, given the existing patent database.Footnote 15 Novelty is one of the essential requirements of patent applications and takes a vast of resources and time for human assessment. In addition, the process of reviewing patents is complex and detail-oriented. Even experienced examiners can overlook critical information or fail in judgment. Automated patent novelty evaluation systems can be used as an auxiliary tool for novelty examination. Therefore, this system is critical, because it can not only improve the quality of patents but also enhance the efficiency of patent examination. Since novelty prediction is substantially based on text analysis, the recent LLMs appear well-suited for this complex task.

5.4.3 Methodologies for novelty and inventiveness prediction

Figure 9 illustrates key strategies for novelty prediction, which particularly include indicator-based methods, outlier detection, similarity measurement, and supervised learning.

Indicator-based methods. Indicator-based approaches rely on pre-defined indicators to measure patent novelty compared to prior art (Verhoeven et al. 2016; Plantec et al. 2021; Sun et al. 2022; Wei et al. 2024; Schmitt and Denter 2024). Researchers define indicators from various aspects, such as the citations, and assign each indicator a score or weight based on its importance to calculate novelty scores.

For example, Verhoeven et al. (2016) proposed three dimensions to evaluate technological novelty, including novelty in recombination, novelty in technological origins, and novelty in scientific origins. They involved patent classification codes as well as citation information to analyze each indicator and demonstrated that technological novelty in each dimension was interrelated but conveyed different information. Additionally, Plantec et al. (2021) investigated technological originality, which was defined as the degree of divergence between underlying knowledge couplings embedded in the invention and the predominant design. The authors used proximity indicators of direct citations, co-citation, cosine similarity, co-occurrence, and co-classification, with normalization methods to balance different indicators.

Notably, most research focused on evaluating technological novelty and originality, which was different from the formal definition of patent novelty. While patent novelty is assessed based on prior art and disclosures in a legal context, technological novelty and originality are analyzed in a technical context, focusing on the advancement and uniqueness of the technological contribution. In addition, indicator-based methods also pose some limitations. The selection and weighting of indicators can be subjective, and the indicator may not fully capture the nuanced aspects of inventions.

Outlier detection. Outlier detection is based on the assumption that novel inventions can be seen as outliers within the landscape of existing patents (Wang and Chen 2019; Zanella et al. 2021; Jeon et al. 2022). Researchers used text embeddings to represent patents and applied outlier detection algorithms to identify patents that are different from the majority.

Researchers used the local outlier factor (LOF) for novelty outlier detection, which measured how isolated the object is with respect to the surrounding neighborhood (Breunig et al. 2000). For example, Zanella et al. (2021) used Word2Vec (Mikolov et al. 2013) to obtain text embedding based on patent titles and abstracts. Wang and Chen (2019) extracted semantic information by using latent semantic analysis (LSA) (Deerwester et al. 1990) based on patent titles, abstracts, and claims. These embeddings served as the input for LOF to measure the novelty of patents. However, this method deviates from the definition of patent novelty, because it does not measure outliers strictly by the features as suggested by the legal definition. Thus, these methods may correlate with the formal patent novelty but are not equivalent and therefore not necessarily useful for an automated examination process.

Furthermore, Jeon et al. (2022) used Doc2Vec (Le and Mikolov 2014) to process patent claims as input for LOF. Since patent claims describe features of inventions, this method seems plausible according to the legal definition of novelty. Nonetheless, there are still some questions that are not explained. For instance, the difference between text embeddings may not stand for a feature difference of inventions. Therefore, using LLMs to explicitly extract and compare features between the target patent and the prior art is a more sensible approach for novelty prediction.

Another limitation of LOF is that it sometimes flags patents that are unusual but not necessarily novel or original in a meaningful way. For example, a patent combining existing technologies in a statistically rare way may be classified as an outlier. However, such an outlier for statistical reasons without actual technical novelty does not represent a significant technological advancement.

Similarity measurements. Researchers calculate similarities between the target patent and existing patents for novelty assessment (Siddharth et al. 2020; Beaty and Johnson 2021; Arts et al. 2021; Shibayama et al. 2021). They used word embeddings based on the patent text, such as abstracts, and adopted various metrics to calculate the similarities, for example, cosine similarity and Euclidean distance. A patent with a low similarity score is considered more novel.

Previous studies have investigated different text representation methods for similarity calculation. For example, Arts et al. (2021) extracted keywords that related to the technical content of patents from titles, abstracts, and claims. Each patent is represented in a 1,362,971-dimensional vector, where each dimension was the frequency of a keyword. However, the authors considered patents with major impacts on technological progress as novel, which is different from the legal definition.

Additionally, Shibayama et al. (2021) compared Word2Vec (Mikolov et al. 2013) embeddings between the target patent and its cited patents based on abstracts, keywords, and titles. Nonetheless, patent titles and abstracts are generic and do not disclose patent features, thereby failing to assess patent novelty in the legal sense. It is worth noting that Lin et al. (2023) proposed multimodal methods that combine text and image analysis to leverage both structural and visual features to measure patent similarity, which enhanced performance.

Supervised learning. Supervised learning refers to training models to classify whether a given patent is novel, based on target patent texts and the prior art (Chikkamath et al. 2020; Jang et al. 2023).

Chikkamath et al. (2020) investigated a series of machine learning models for novelty detection. The authors conducted comprehensive empirical studies to evaluate the performance, including various text embeddings (e.g., Word2Vec (Mikolov et al. 2013), GloVe (Pennington et al. 2014)), different classifiers (e.g., support vector machine, naive Bayes), and multiple network architectures (e.g., LSTM (Hochreiter and Schmidhuber 1997), GRU (Chung et al. 2014)). The inputs are target patent claims and cited paragraph texts from prior art that are related to the target patent. This process makes sense because cited paragraphs possibly include features relevant to target patent claims, providing an implicit ground check for novelty. However, it is questionable whether the model detects novelty by comparing features rather than other aspects as deep learning models are typically black-box methods and lack explainability.

To address the problem, Jang et al. (2023) proposed an explainable model based on BERT (Devlin et al. 2018) to evaluate novelty. The authors aimed to follow the legal definition by comparing the claims of a target patent with its prior art. The model could output the novelty prediction result, along with claim sets with high relevance as an explanation, which achieved 79% accuracy under the experimental settings. This paper presented a great idea, but BERT is nowadays outdated and outperformed by larger and more powerful LLMs.

Suggestions for future work. Based on the review of previous studies, we provide three suggestions for future research. (1) Researchers should differentiate between patent novelty and other similar terms, such as technology originality. Patent novelty focuses on the invention’s features compared to the prior art in a legal context, whereas technological originality refers to the advancement and uniqueness of the technological contribution in the technical context. (2) Researchers should concentrate on specific patent content for assessing novelty. Using patent claims for prediction is a sensible approach as they include essential features for comparison. In contrast, studies that leverage titles and/or abstracts are not necessarily useful for an automated examination process, because they are usually generic and vain. (3) Researchers should explore the effectiveness of powerful LLMs, such as GPT-4, in this field. While LLMs have revolutionized the field of NLP, current studies on novelty prediction are still largely based on old-fashioned methods, such as word embeddings. Using LLM-based methods may significantly stimulate the automation of novelty prediction.

5.4.4 Patentability assessment

Patentability refers to a set of criteria that an invention must meet to be eligible for a patent. The key criteria typically include novelty, inventiveness/non-obviousness, and utility. Novelty that has been discussed above means the invention has not been known or used in the prior art. Non-obviousness indicates that a patent should include a sufficient inventive step beyond what is already known, and should not be obvious to an expert in the field. Utility means the invention is useful and has some practical applications. Therefore, patentability assessment is a more comprehensive and challenging task compared to novelty prediction.

While most works focused on novelty prediction, Schmitt et al. (2023) investigated patentability assessment by examining both novelty and non-obviousness following the legal definition. The authors used a mathematical-logical approach to decompose patent claims into features and formulate a feature combination. They compared the target patent feature combination with its prior art to evaluate novelty and non-obviousness based on the legal definition. The authors tested their approach on patent application US 2009/0134108. The independent claim was parsed into different features. The model compared each feature to text fragments in prior-art patents and calculated a similarity score for each comparison, which measures how closely the wording, context, and technical concepts in the feature match with those in prior patents. If a single prior patent includes all features with high similarity scores, it could mean the new patent lacks novelty. For non-obviousness, the model examined combinations of features across multiple prior documents. This example demonstrated this method’s efficacy in identifying critical overlaps between claimed and prior features. Since the authors only processed one example patent for a proof of concept, the effectiveness and efficiency of this method on large-scale patent applications are unknown. They pointed out another limitation, specifically that this method could not detect homonyms or synonyms. The recent LLMs with outstanding capability would undoubtedly contribute to this task both effectively and efficiently.

5.5 Granting prediction

5.5.1 Task definition of granting prediction

Patent granting prediction refers to predicting whether a patent application will be granted or terminally rejected by examiners (Fig. 10).Footnote 16 Previous works named this task patent acceptance prediction (Suzgun et al. 2023), but we correct it to granting prediction in this paper because patents are granted at best but not accepted. Such an automated method could support patent examiners, patent applicants, and external investors. Due to the complexity of patent examination, this process typically requires a long time. Some cases have still not achieved the final decision after two decades, when they typically expire at last. Thus, the automation of this process can help patent offices manage their workload more efficiently. If automated examination procedures achieve to be bias-free, artificial intelligence could reduce the existing examiner dependence and also likely speed up the procedure. Furthermore, such automated examination promises to increase the quality of examination, particularly its existing bias and variability. The quality of examination has been and is such a severe issue that it, for instance, led to the America Invents Act of 2011. In addition, patent applicants can gain valuable insight, be less exposed to bias and individuality of patent examiners, and improve the invention based on the prediction of the outcome. NLP may reduce the excessive cost, particularly for small companies, to participate in the intellectual property system. Currently, drafting applications and sending them for examination can generate overwhelming costs of thousands of dollars every round for small businesses without internal resources. Such bills further increase if an examiner shows little support and a hearing needs to be arranged in the process. Thus, automation of the intellectual property process has the chance to stimulate technology development and increase the overall technological competitiveness of a society. Similarly, investors can make better-informed decisions and create strategic plans according to the predicted outcome of patent applications.

5.5.2 Methodologies for granting prediction