Abstract

Koopmans spectral functionals are a powerful extension of Kohn-Sham density-functional theory (DFT) that enables the prediction of spectral properties with state-of-the-art accuracy. The success of these functionals relies on capturing the effects of electronic screening through scalar, orbital-dependent parameters. These parameters have to be computed for every calculation, making Koopmans spectral functionals more expensive than their DFT counterparts. In this work, we present a machine-learning model that—with minimal training—can predict these screening parameters directly from orbital densities calculated at the DFT level. We show in two prototypical use cases that using the screening parameters predicted by this model, instead of those calculated from linear response, leads to orbital energies that differ by less than 20 meV on average. Since this approach dramatically reduces run times with minimal loss of accuracy, it will enable the application of Koopmans spectral functionals to classes of problems that previously would have been prohibitively expensive, such as the prediction of temperature-dependent spectral properties. More broadly, this work demonstrates that measuring violations of piecewise linearity (i.e., curvature in total energies with respect to occupancies) can be done efficiently by combining frozen-orbital approximations and machine learning.

Introduction

Predicting the spectral properties of materials from first principles can greatly assist the design of optical and electronic devices1. Among the various techniques one can employ, Koopmans spectral functionals are a promising approach due to their accuracy and comparably low computational cost2,3,4,5,6,7,8,9,10,11,12. These functionals are a beyond-DFT extension explicitly designed to predict spectral properties and have shown success across a range of both molecules and materials13,14,15,16,17,18,19,20,21. One of the crucial quantities involved in the definition of Koopmans functionals is the set of so-called screening parameters. These parameters account for the effect of electronic screening in a basis of localized orbitals. There is one screening parameter per orbital in the system, and each screening parameter can be computed fully ab initio using finite differences3,8 or with linear-response theory9. Obtaining reliable screening parameters is essential to the accuracy of Koopmans spectral functionals while also being the main reason why these functionals are more expensive than their KS-DFT counterparts.

Meanwhile, machine learning (ML) is proving capable of predicting an ever-increasing range of quantum mechanical properties22,23. Inspired by this success, the objective of this work is to replace the calculation of screening parameters with a machine-learning model, thereby drastically reducing the cost of Koopmans functional calculations and making it possible to apply them more widely. These screening parameters are intermediate quantities, not physical observables, and in this regard, this work shares parallels with attempts to learn the U parameter for DFT+U functionals24,25,26,27 and the dielectric screening when solving the Bethe-Salpeter equation28 (in the latter case the dielectric screening is a physical observable, but in that work it served as an ingredient for subsequent calculations of optical spectra). This is in contrast to the majority of ML strategies in computational materials science and quantum chemistry, which often seek to relate structural information directly to observable quantities29,30,31,32,33,34,35,36,37,38. Note that these differences have practical consequences: in our case, first-principles calculations will not be bypassed completely.

More specifically, this work presents a machine-learning framework that can predict screening parameters from real-space orbital densities. The model is trained and tested on the same chemical system (i.e., we do not develop a general model to predict the screening parameters for an arbitrary chemical system). As a result, the framework requires very little training data, at the expense of transferability. The two use cases we will study are liquid water and the halide perovskite CsSnI3 (Fig. 1). In the case of liquid water, one might want to calculate spectral properties averaged along a molecular dynamics trajectory. In the case of the halide perovskite, one might want to calculate the temperature-renormalized band structure by performing calculations on an ensemble of structures with the atoms displaced in such a way as to appropriately sample the ionic energy landscape39,40. In both cases, these methods require Koopmans spectral functional calculations on many copies of the same chemical system, just with different atomic displacements. This process can be made much faster by training a machine-learning model on a subset of these copies and then using this model to predict the screening parameters for the remaining copies.

Before discussing the machine-learning framework in more detail, let us first review Koopmans spectral functionals and the associated screening parameters that we ultimately want to predict. Koopmans spectral functionals are a class of orbital-density dependent functionals that accurately predict spectral properties by imposing the condition that the quasi-particle energies of the functional must match the corresponding total-energy difference when an electron is explicitly removed from/added to the system. This quasi-particle/total-energy difference equivalence is trivially satisfied by the exact one-particle Green’s function (as can be seen by the spectral representation, with poles located at energies corresponding to particle addition/removal), but it is violated by standard Kohn-Sham density functionals and leads to, among other failures, semi-local DFT’s underestimation of the band gap. Given that the exact Green’s function describes one-particle excitations exactly, the idea behind Koopmans functionals is that enforcing this condition on DFT will improve its description of one-particle excitations.

An orbital energy, defined as \({\varepsilon }_{i}=\left\langle {\varphi }_{i}\right\vert \hat{H}\left\vert {\varphi }_{i}\right\rangle\), will be equal to the total-energy difference of electron addition/removal if it is independent of that orbital’s occupation fi. (This follows because if εi—which is equal to \(\frac{dE}{d{f}_{i}}\) by Janak’s theorem—is independent of fi, then the total-energy difference \({{\Delta }}E=E({f}_{i}=1)-E({f}_{i}=0)=\frac{dE}{d{f}_{i}}={\varepsilon }_{i}\)41.) Equivalently, the orbital energies will match the corresponding total-energy differences if the total energy itself is piecewise linear with respect to orbital occupations:

\[\frac{{d}^{2}E}{d{f}_{i}^{2}}=0.\tag{1}\]

This condition is referred to as the “generalized piecewise linearity” (GPWL) condition. It is related to the more well-known “piecewise linearity” condition, which states that the exact total energy is piecewise linear with respect to the total number of electrons in the system42.

Koopmans functionals impose this GPWL condition on a (typically semi-local) DFT functional via tailored corrective terms as follows:

where {fj} are the orbital occupancies and ρ the total electronic density of the N-electron ground state, and \({\rho }^{{f}_{i}\to f}\) denotes the electronic density of the (f + ∑j≠ifj)-electron system with the orbital occupancies constrained to be equal to {…, fi−1, f, fi+1,…} i.e., \({\rho }^{{f}_{i}\to 1}\) and \({\rho }^{{f}_{i}\to 0}\) correspond to charged excitations of the ground state where we fill/empty orbital i. In the final line of Equation (2) we have introduced the shorthand \({\Pi}_{i}^{\rm{KI}}\) for the Koopmans correction to orbital i. By construction, this correction removes the non-linear dependence of the DFT energy EDFT on the occupation of orbital i (the second line in Equation (2)) and replaces it with a term that is explicitly linear in fi (the third line), with a slope that corresponds to the finite energy difference between integer occupations of orbital i. We note that other choices are possible for the slope of this linear term, which gives rise to different variants of Koopmans spectral functionals. In this paper, we will exclusively focus on this “Koopmans integral” (KI) variant.
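
The structure of this correction can be illustrated with a toy model. The sketch below assumes a hypothetical single-orbital energy that is quadratic in the occupation f (the coefficients `a` and `b` are invented for illustration and do not come from any calculation). It then applies a correction of the kind described above: the non-linear dependence on f is subtracted and replaced by a term linear in f whose slope is the energy difference between integer occupations.

```python
def toy_energy(f, a=-5.0, b=2.0):
    """Hypothetical 'DFT-like' energy (eV): quadratic, so not piecewise linear, in f."""
    return a * f + 0.5 * b * f**2


def koopmans_like_correction(f, energy=toy_energy):
    """Remove the non-linear f-dependence and add back a term linear in f."""
    nonlinear_part = energy(f) - energy(0.0)
    linear_part = f * (energy(1.0) - energy(0.0))
    return -nonlinear_part + linear_part


def corrected_energy(f, energy=toy_energy):
    """Toy energy plus the Koopmans-like correction."""
    return energy(f) + koopmans_like_correction(f, energy)


def slope(func, f, h=1e-6):
    """Central finite-difference derivative with respect to the occupation."""
    return (func(f + h) - func(f - h)) / (2.0 * h)


# Total-energy difference between the filled and empty orbital
delta_e = toy_energy(1.0) - toy_energy(0.0)

for f in (0.25, 0.5, 0.75):
    # The uncorrected slope varies with f; the corrected slope equals delta_e
    print(f, slope(toy_energy, f), slope(corrected_energy, f), delta_e)
```

In this toy model the corrected energy has the same slope at every occupation, so the orbital energy (the derivative with respect to f) coincides with the total-energy difference, which is the property the correction is designed to enforce.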

While Equation (2) formally imposes the GPWL condition as desired, practically, it cannot be used as a functional because we cannot construct the constrained densities \({\rho }^{{f}_{i}\to f}\) without explicitly performing constrained DFT calculations.

In order to convert Equation (2) into a tractable form, we instead evaluate these constrained densities in the frozen-orbital approximation i.e. we neglect the dependence of the orbitals {φj} on the occupancy of orbital i, obtaining

\[{\rho }^{{f}_{i}\to f}({\bf{r}})\approx f| {\varphi }_{i}^{N}({\bf{r}}){| }^{2}+\sum\limits_{j\ne i}{f}_{j}| {\varphi }_{j}^{N}({\bf{r}}){| }^{2}=f{n}_{i}({\bf{r}})+\rho ({\bf{r}})-{\rho }_{i}({\bf{r}}),\tag{3}\]

where \(\{{\varphi }_{j}^{N}\}\) are the orbitals of the unconstrained N-electron ground state, and we have introduced the shorthand \({n}_{i}({\bf{r}})=| {\varphi }_{i}^{N}({\bf{r}}){| }^{2}\) for the normalized density of orbital i and ρi(r) = fini(r). These frozen densities—unlike their unfrozen counterparts—are straightforward to evaluate because they are constructed purely from quantities corresponding to the N-electron ground state.

Evaluating the KI correction on frozen-orbital densities gives rise to the unscreened KI corrections \({{{\Pi }}}_{i}^{{\rm{uKI}}}\). The orbital relaxation that is absent in these terms can be accounted for by appropriately screening the Koopmans corrections, i.e.

\[{{{\Pi }}}_{i}^{{\rm{KI}}}\approx {\alpha }_{i}{{{\Pi }}}_{i}^{{\rm{uKI}}},\tag{4}\]

where αi is some as-of-yet unknown scalar coefficient that is a measure of how much electronic interactions between orbitals are screened by the rest of the system. These parameters {αi} are the screening parameters that are the central topic of this work, and they will be discussed in more detail later.

Having introduced the frozen-orbital approximation and compensated for it via the screening parameters, we arrive at the final form of the KI functional:

where in the Koopmans correction only the Hartree-plus-exchange-correlation term appears because all the other terms in EDFT are linear in the orbital occupations and therefore cancel.

In contrast to standard DFT energy functionals, this energy functional depends not only on the total density but also on the individual orbital densities {ρi}. Therefore, one has to minimize the total-energy functional with respect to the entire set of orbital densities {ρi} to obtain the ground-state energy, and not just with respect to the total density ρ (this is not so different from Kohn-Sham DFT, where one minimizes the functional with respect to a set of Kohn-Sham orbitals).

The orbitals {φi} that minimize the Koopmans energy functional are called the variational orbitals. They are found to be localized in space6,43,44,45,46, closely resembling Boys orbitals in molecules and, equivalently, maximally localized Wannier functions (MLWFs) in solids47. In the specific case of the KI functional, and unlike most orbital-density-dependent functionals, the total energy is invariant with respect to unitary rotations of the occupied orbital densities. This means that once the variational orbitals are initialized (typically as MLWFs), they require no further optimization. The matrix elements of the KI Hamiltonian are given by

where the orbital-dependent Koopmans potential \({\hat{v}}_{j}^{{\rm{KI}}}\) is given by a derivative of the unscreened KI correction \({{{\Pi }}}_{i}^{{\rm{uKI}}}\), and is discussed in detail in ref. 5. At the energy minimum, the matrix \({\lambda }_{ij}^{{\rm{KI}}}\) becomes Hermitian7,48,49 and can be diagonalized. The corresponding eigenfunctions are called canonical orbitals and the eigenvalues \({\varepsilon }_{j}^{{\rm{KI}}}\) canonical energies. These canonical orbitals are different from the variational orbitals, but they are related via a unitary transformation, and both give rise to the same total density. Contrast this with KS-DFT functionals, which are invariant with respect to unitary rotations of the set of occupied Kohn-Sham orbitals, and thus the same orbitals both minimize the total energy and diagonalize the Hamiltonian. Koopmans spectral functionals follow the widely adopted approach of interpreting canonical orbitals as Dyson orbitals and their energies as quasi-particle energies43,50,51,52.

Koopmans corrections of the form of Equation (5) have proven to be remarkably effective across a range of isolated and periodic systems. For isolated systems, Koopmans functionals accurately predict the ionization potentials and electron affinities—and more generally, the orbital energies and photoemission spectra—of atoms3, small molecules5,9,13, organic photovoltaic compounds14,15, DNA nucleobases16, and toy models17. The method has also been extended to predict optical (i.e., neutral) excitation energies in molecules18. For three-dimensional systems, Koopmans functionals have been shown to accurately predict the band structure and band alignment of prototypical semiconductors and insulators8,10,11, systems with large spin-orbit coupling19, and a vacancy-ordered double perovskite20, as well as the band gap of liquid water21.

That said, it should be noted that while the Koopmans correction is designed to impose an exact condition, the correction itself is not formally derived from an exact theory such as generalized Kohn-Sham theory (cf. hybrid functionals53). In this sense, Koopmans functionals are not fully ab initio. Work is ongoing to provide a more formally rigorous basis for the Koopmans correction. It should also be noted that Koopmans functionals share many similarities with other methods that use the concept of piecewise linearity to either parametrize or correct density functionals. These include DFT+U54,55,56,57 and its various extensions58,59,60, optimally-tuned hybrid functionals61,62,63, global and localized orbital scaling corrections64,65,66,67,68,69,70, and the Wannier-Koopmans method71,72,73. There are also connections between Koopmans functionals and reduced density matrix functional theory74,75, the screened extended Koopmans’ theorem76, and ensemble density-functional theory77. In the Discussion we will return to explore how the results of this work might be applied to these related methods.

Let us now return to the screening parameters {αi}, which were introduced above without explaining what they are or how one might compute them. As per their definition in Equation (4), the screening parameters are responsible for relating the unscreened Koopmans correction \({{{\Pi }}}_{i}^{{\rm{uKI}}}\) (which we can directly evaluate) to the screened Koopmans correction \({{{\Pi }}}_{i}^{{\rm{KI}}}\) (which we cannot). The degree to which these two quantities differ will depend on how accurate the approximation of Equation (3) is, i.e., how closely the frozen density fni + ρ − ρi (where the occupation of orbital i is set to f and all the other orbitals are frozen in the N-electron solution) matches the true constrained density \({\rho }^{{f}_{i}\to f}\) (where all the orbitals are allowed to change in order to minimize the total energy of the (N − fi + f)-electron system). Consequently, the αi → 1 limit corresponds to an orbital whose occupation can change without the rest of the electronic density responding at all, i.e., the frozen density matches the true constrained density. This limit arises when orbital i interacts very weakly with the rest of the system/the permittivity is very small. In contrast, the αi → 0 limit corresponds to an orbital for which any change in occupation is screened by the rest of the system: the other orbital densities change such that the total electronic density remains unchanged, i.e., \({\rho }^{{f}_{i}\to f}\to \rho\) and thus \({{{\Pi }}}_{i}^{{\rm{KI}}}\to 0\). This limit arises when orbital i interacts very strongly with the rest of the system/the permittivity is very large.

But how might we calculate the screening parameters? They cannot be system-agnostic (à la mixing parameters in typical hybrid functionals), because they depend on the screening of electronic interactions between orbital densities, which will clearly change from one orbital to the next, let alone one system to the next. To obtain system-specific screening parameters, one can calculate them ab initio by finding the value that guarantees that the generalized piecewise linearity condition (Equation (1)) is satisfied. It can be shown that for the KI functional, the screening parameter αi that will satisfy the GPWL condition for orbital i is given by

where \({\lambda }_{ii}^{{\rm{KI}}}[\{{\alpha }_{i}^{0}\}]\) is the ith diagonal element of the KI Hamiltonian matrix obtained with an initial guess \(\{{\alpha }_{i}^{0}\}\) for the screening parameters, and \({\lambda }_{ii}^{{\rm{DFT}}}\) is the diagonal matrix element corresponding to orbital i of the base DFT Hamiltonian. \({{\Delta }}{E}_{i}^{{\rm{DFT}}}\) is the difference in total energy for a charge-neutral calculation and a constrained DFT calculation where orbital i is explicitly emptied4,8. (Equation (7) only applies to occupied orbitals; an analogous formulation exists for unoccupied orbitals.) If we take Equation (7) to second order, then the screening coefficients become

where fHxc is the Hartree-plus-exchange-correlation kernel and ϵ is the non-local microscopic dielectric function, i.e., the screening parameters are an orbital-resolved measure of how much the electronic interactions are screened by the rest of the system. Equation (8) can be evaluated using density-functional perturbation theory9,11.

Thus, to obtain the screening parameters ab initio for a given system one must evaluate either Equation (7) (via finite-difference calculations8,10) or Equation (8) (via density-functional perturbation theory11). The former must, in principle, be solved iteratively, to account for the dependence of the variational orbitals on {αi}. However, for the particular case of the KI functional, the occupied orbitals are independent of the screening parameters (this is not true for the empty orbitals in theory, but in practice, the dependence of these orbitals on the screening parameters is sufficiently weak that it can be neglected). We stress that in this approach the screening parameters are not fitting parameters. They are determined via a series of DFT calculations and are not adjusted to fit experimental data nor results from higher-order computational methods.
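
The finite-difference route can be sketched in a few lines. Because the screened correction is taken to be linear in αi, the diagonal matrix element of the KI Hamiltonian moves linearly away from its DFT value as αi grows, and the value of αi at which it equals the total-energy difference follows from a single evaluation at a trial α0. The function below assumes Equation (7) has this secant-like form and that the total-energy difference is expressed with the same sign convention as an orbital energy; the function name and the numbers are hypothetical.

```python
def screening_parameter(alpha0, delta_e, lambda_dft, lambda_ki):
    """Screening parameter that makes the KI matrix element equal delta_e.

    alpha0     : trial screening parameter used to evaluate lambda_ki
    delta_e    : total-energy difference for emptying the orbital (eV),
                 assumed to use the same sign convention as an orbital energy
    lambda_dft : diagonal matrix element of the base DFT Hamiltonian (eV)
    lambda_ki  : diagonal matrix element of the KI Hamiltonian at alpha0 (eV)
    """
    correction_at_alpha0 = lambda_ki - lambda_dft
    if correction_at_alpha0 == 0.0:
        raise ValueError("KI and DFT matrix elements coincide; alpha is undefined")
    return alpha0 * (delta_e - lambda_dft) / correction_at_alpha0


# Made-up numbers: an unscreened correction of -3 eV on top of a DFT value of -6 eV
lambda_dft = -6.0
unscreened_correction = -3.0
alpha0 = 0.6
lambda_ki = lambda_dft + alpha0 * unscreened_correction
delta_e = -7.5

alpha = screening_parameter(alpha0, delta_e, lambda_dft, lambda_ki)
print(alpha, lambda_dft + alpha * unscreened_correction)
```

With these illustrative inputs the resulting α brings the screened matrix element onto the total-energy difference. When the orbitals themselves depend on the screening parameters, the same update would be repeated until it stops changing.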

Given that (a) Koopmans spectral functionals are orbital-density-dependent and (b) one must compute the set of screening parameters, a Koopmans functional calculation involves a few additional steps compared to a typical semi-local DFT calculation. In brief, the procedure for calculating quasi-particle energies for periodic systems using the KI functional involves the following four-step workflow:

- an initial Kohn-Sham DFT calculation is performed to obtain the ground-state density;
- a Wannierization of the DFT ground state is performed to obtain a set of localized orbitals that are used to initialize/define the variational orbitals {φi} (ref. 47);
- the screening parameters for the variational orbitals are calculated via a series of DFT (or DFPT) calculations;
- the KI Hamiltonian

is constructed and diagonalized to obtain the canonical eigenenergies \({\varepsilon }_{i}^{{\rm{KI}}}\).

The third step—that is, calculating the screening parameters—typically dominates the computational cost of the workflow. This is because one has to compute one screening parameter per variational orbital, and for each orbital, one must perform a finite difference or DFPT calculation. Of course, if two variational orbitals are symmetrically equivalent, then the same screening parameter can be used for both, and thus, for highly symmetric, ordered systems, the cost of calculating the screening parameters can be substantially reduced. Nevertheless, for large and/or disordered systems the computation of the screening parameters remains by far the most expensive step of the entire Koopmans workflow.

Results

The machine-learning model

To accelerate Koopmans spectral functional calculations, this work introduces a machine-learning (ML) model to predict the screening parameters, thereby avoiding the expensive step of having to calculate them explicitly. Since this ML model only predicts the screening parameters and not the quasi-particle energies directly, the initial DFT and Wannier calculations are still required to define the variational orbitals, as is the final calculation of the quasi-particle energies. This workflow is compared against a conventional Koopmans functional calculation in Fig. 2.

In order to design a machine-learning model, the first thing to do is to define a set of descriptors, for which there are many possible choices. A preliminary study on acenes (presented in Supplementary Discussion 1) suggested that there is a strong correlation between the self-Hartree energy of each orbital

\[{E}_{{\rm{sH}}}[{n}_{i}]=\frac{1}{2}\int d{\bf{r}}\,d{{\bf{r}}}^{{\prime} }\,\frac{{n}_{i}({\bf{r}}){n}_{i}({{\bf{r}}}^{{\prime} })}{| {\bf{r}}-{{\bf{r}}}^{{\prime} }| }\tag{10}\]

and its corresponding screening parameter. This scalar quantity is a measure of how localized an orbital density is, and the fact that the screening parameters were correlated with the self-Hartree energies indicated that it might be possible to predict the screening parameters based on a more complete descriptor of the orbital density.
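
The link between localization and self-Hartree energy can be checked numerically. The sketch below assumes the standard definition of the self-Hartree energy (half the Coulomb self-repulsion of the normalized orbital density, in Hartree atomic units) and, purely for illustration, a spherically symmetric Gaussian model density in place of a real Wannier function, which reduces the double integral to radial quadratures. The function names and grid settings are hypothetical.

```python
import numpy as np


def gaussian_density(r, sigma):
    """Normalized spherical Gaussian density with width sigma (bohr)."""
    return np.exp(-r**2 / (2.0 * sigma**2)) / (2.0 * np.pi * sigma**2) ** 1.5


def cumulative_trapezoid(y, x):
    """Running trapezoidal integral of y(x), starting from zero."""
    segments = 0.5 * (y[1:] + y[:-1]) * np.diff(x)
    return np.concatenate(([0.0], np.cumsum(segments)))


def self_hartree_radial(r, n):
    """Self-Hartree energy (Hartree) of a spherically symmetric density n(r)."""
    # Charge enclosed within radius r
    q_enclosed = cumulative_trapezoid(4.0 * np.pi * r**2 * n, r)
    # Potential contribution from the charge outside radius r
    inner_to_r = cumulative_trapezoid(4.0 * np.pi * r * n, r)
    outer = inner_to_r[-1] - inner_to_r
    potential = q_enclosed / r + outer
    integrand = 4.0 * np.pi * r**2 * n * potential
    return 0.5 * cumulative_trapezoid(integrand, r)[-1]


r = np.linspace(1e-6, 12.0, 24001)  # radial grid; starts just above zero to avoid 1/0
e_diffuse = self_hartree_radial(r, gaussian_density(r, sigma=1.0))
e_compact = self_hartree_radial(r, gaussian_density(r, sigma=0.5))
print(e_diffuse, e_compact)
```

For a Gaussian of width σ the exact result is 1/(2σ√π) Hartree, so halving the width doubles the self-Hartree energy: the more localized the density, the larger the value.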

To convert a real-space orbital density ni(r) into a compact but information-dense descriptor, we define a local decomposition of the normalized orbital density around the orbital’s center Ri:

where gnl(r) are Gaussian radial basis functions and Ylm(θ, φ) are real-valued spherical harmonics (following the choice of ref. 78 and many others). For more details regarding these basis functions, refer to Supplementary Discussion 2.

This choice of basis functions means that the model has four hyperparameters: a maximum order for the radial basis functions \({n}_{\max }\), a maximum orbital angular momentum \({l}_{\max }\), and two radii \({r}_{\min }\) and \({r}_{\max }\) that quantify the radial extent of the Gaussian basis functions. By design, these basis functions will capture orbital densities most accurately in the vicinity of the orbital’s center Ri, and progressively less accurately at larger radii and higher angular momenta. Because the variational orbitals are localized, it is reasonable to assume that most of the relevant information is captured with this local expansion. Choosing these hyperparameters will be influenced by the expected degree of transferability: descriptors that are too large will lead to more trainable weights and, hence, usually require more training data. On the other hand, descriptors that are too small might not capture the information required to accurately predict screening parameters.

In addition to the orbital density ni(r), one expects from physical intuition that the screening parameter of orbital i, αi, will also depend on the surrounding electronic density because these electrons will also contribute to the local electronic screening. For this reason, we construct an analogous local descriptor of the total electronic density around the orbital’s center Ri:

Finally, it is important to ensure that the descriptors are invariant with respect to translations and rotations of the entire system, so that the network does not consume training data learning that these operations will not affect the screening parameters. The coefficient vectors {ci} defined above are already invariant with respect to translation, but not with respect to rotation. To obtain rotationally invariant input vectors, we construct a power spectrum from these coefficient vectors, explicitly coupling coefficients belonging to different shells n, as well as coefficients belonging to the total and to the orbital density. The resulting input vector pi for each orbital i is given by the vector containing the coefficients

corresponding to all possible combinations of n1, n2, k1 and k2 (ref. 79).
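
A minimal sketch of such a power spectrum is given below. It assumes the expansion coefficients are stored as one array per angular momentum l, with shape (n_max, 2l + 1), separately for the orbital and the total density; this layout and the function names are hypothetical. Any l-dependent normalization prefactor is omitted, and only the unique (symmetric) index pairs are kept. Since a rotation of the system acts on real spherical-harmonic coefficients as an orthogonal matrix within each l block, the example uses random orthogonal matrices as a stand-in for a physical rotation.

```python
import numpy as np


def power_spectrum(c_orbital, c_total):
    """Rotationally invariant features from coefficient arrays.

    c_orbital, c_total : lists over l of arrays with shape (n_max, 2l + 1)
    """
    features = []
    for c_orb_l, c_tot_l in zip(c_orbital, c_total):
        # Stack orbital- and total-density radial channels for this l
        channels = np.vstack([c_orb_l, c_tot_l])
        # Contract over m: couples all pairs of shells and density types
        gram = channels @ channels.T
        upper = np.triu_indices(gram.shape[0])
        features.append(gram[upper])
    return np.concatenate(features)


def random_orthogonal(dim, rng):
    """Random orthogonal matrix from a QR decomposition."""
    q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))
    return q


rng = np.random.default_rng(0)
n_max, l_max = 3, 2
c_orbital = [rng.normal(size=(n_max, 2 * l + 1)) for l in range(l_max + 1)]
c_total = [rng.normal(size=(n_max, 2 * l + 1)) for l in range(l_max + 1)]
p = power_spectrum(c_orbital, c_total)

# Apply the same orthogonal transformation to both densities in each l block
rotations = [random_orthogonal(2 * l + 1, rng) for l in range(l_max + 1)]
c_orbital_rot = [c @ q for c, q in zip(c_orbital, rotations)]
c_total_rot = [c @ q for c, q in zip(c_total, rotations)]
p_rot = power_spectrum(c_orbital_rot, c_total_rot)

print(p.shape, np.allclose(p, p_rot))
```

The individual coefficients change under the transformation, but the contracted features do not, which is what allows the regression to be trained without learning rotational invariance from data.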

Having defined a descriptor, we must now decide on a machine-learning model with which to map the power spectrum of each orbital to its screening parameter. In this work, we use ridge-regression80. Despite its simplicity, we found that it achieved sufficient accuracy for the case studies with very little training data. By contrast, complex neural networks have more trainable parameters and, therefore, typically would require more training data (of course, this work does not preclude the possibility of employing more sophisticated models in the future).
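
For concreteness, ridge regression can be written in closed form in a few lines. The sketch below uses plain NumPy rather than any particular library, and synthetic data as a stand-in for power-spectrum vectors and screening parameters; the regularization strength `reg`, the array sizes, and the function names are assumptions made for illustration.

```python
import numpy as np


def fit_ridge(features, targets, reg=1e-3):
    """Closed-form ridge regression with an unpenalized intercept."""
    x_mean = features.mean(axis=0)
    y_mean = targets.mean()
    xc = features - x_mean
    yc = targets - y_mean
    # Solve (X^T X + reg * I) w = X^T y for the weights
    gram = xc.T @ xc + reg * np.eye(features.shape[1])
    weights = np.linalg.solve(gram, xc.T @ yc)
    intercept = y_mean - x_mean @ weights
    return weights, intercept


def predict(features, weights, intercept):
    """Predict one scalar (e.g. a screening parameter) per feature vector."""
    return features @ weights + intercept


# Synthetic stand-in: 40 training orbitals, 5 descriptor components each
rng = np.random.default_rng(0)
true_weights = np.array([0.05, -0.02, 0.03, 0.0, 0.01])
x_train = rng.normal(size=(40, 5))
x_test = rng.normal(size=(10, 5))
y_train = x_train @ true_weights + 0.6
y_test = x_test @ true_weights + 0.6

weights, intercept = fit_ridge(x_train, y_train)
y_pred = predict(x_test, weights, intercept)
print(np.max(np.abs(y_pred - y_test)))
```

The regularization term penalizes large weights, which keeps the fit well conditioned when the number of descriptor components is comparable to, or larger than, the number of training orbitals.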

Test systems

This machine-learning framework was tested on two systems: liquid water and the halide perovskite CsSnI3.

Despite its simple molecular structure, water exhibits very complex behavior. Understanding it better would help us to improve our ability to explain and predict a variety of phenomena in nature and technology, and it is therefore an active area of research21,81. For example, accurate values for the ionization potential (IP) and the electron affinity (EA) of water are necessary for a precise description of redox reactions in aqueous systems. These, in turn, are key to many applications such as (photo-)electrochemical cells.

Perovskite solar cells, meanwhile, are one of the most promising candidates for next-generation solar cells, with reported efficiencies higher than conventional silicon-based solar cells82. One of the most prominent perovskite materials for solar cell applications is caesium lead iodide (CsPbI3) due to its suitable band gap of 1.73 eV and its excellent electronic properties. The main drawback of CsPbI3 is that lead is toxic, and it is desirable to find more environmentally friendly metals whose substitution does not compromise performance83. CsSnI3 is one such candidate.

Koopmans calculations were performed on 20 uncorrelated snapshots (i.e., different copies of the system with different atomic geometries) for each of the two test systems. Screening parameters were computed ab initio for all 20 snapshots; the first 10 snapshots were used when training the models, and the remaining 10 were exclusively reserved for validation. Further details can be found in the “Methods” section. The water test case follows what one could do in order to calculate the spectral properties of water with Koopmans functionals, where one must average across a molecular dynamics trajectory, while the perovskite test case represents how one could calculate the temperature-dependence of the spectral properties of this system, accounting for anharmonic nuclear motion.

Accuracy

To evaluate the accuracy of a model that predicts screening parameters, we can examine several different metrics. The most obvious quantities to compare are the predicted and the calculated screening parameters. However, these parameters are not physical observables: they are intermediate parameters internal to the Koopmans spectral functional framework. Ultimately, it is much more important that the canonical eigenenergies are accurately predicted. This is because all spectral properties derive from the eigenenergies, and spectral properties are of central interest whenever Koopmans spectral functionals are used.

That said, the eigenenergies are closely related to the screening parameters. As described previously, the eigenenergies are the eigenvalues of the matrix \({\lambda }_{ij}^{{\rm{KI}}}\), whose elements contain the screening parameters (Equation (9)). If all of the orbitals had the same screening parameter α, then the difference between the Koopmans and DFT eigenvalues would be linear in α. For a system with non-uniform screening parameters, the relationship between the eigenvalues and the screening parameters is more complex, with the difference between the Koopmans and DFT eigenvalues becoming a linear mix of the screening parameters of variational orbitals that constitute the canonical orbital in question. More concretely, given that the variational and canonical orbitals are related via a unitary rotation (i.e., \(\left\vert {\psi }_{i}\right\rangle =\sum\limits_{j}{U}_{ij}\vert {\varphi }_{j}\rangle\)), it follows that the Koopmans correction shifts DFT quasi-particle energies by

which is proportional to αj, with a constant of proportionality corresponding to the degree of overlap between canonical-variational orbital pairs, as well as Koopmans potential matrix elements. In general, the matrix element \(\langle {\varphi }_{k}| \hat{v}_{j}^{{\rm{KI}}}| {\varphi }_{j}\rangle\) is non-diagonal and non-local, but for occupied orbitals, it is diagonal and scalar, and the above expression simplifies to

For a quantitative example, sweeping the screening parameters across the range of ab initio values observed in liquid water changes the eigenvalues by approximately 1 eV (see Supplementary Discussion 3).
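
How errors in the screening parameters propagate to the eigenvalues can be explored with a small numerical experiment. The sketch below assumes the occupied-orbital case described above, in which the Koopmans potential is a scalar, so that in the variational-orbital basis the KI Hamiltonian is the DFT Hamiltonian plus αj times that scalar on the diagonal. The matrices and parameter values are random stand-ins, not data from this work, and eigenvalues are paired by energy ordering.

```python
import numpy as np


def ki_eigenvalues(h_dft, v_ki, alpha):
    """Eigenvalues of h_dft + diag(alpha_j * v_j) for scalar Koopmans potentials."""
    lam = h_dft + np.diag(alpha * v_ki)
    return np.linalg.eigvalsh(lam)


def eigenvalue_errors(h_dft, v_ki, alpha_ref, alpha_pred):
    """Mean and maximum absolute eigenvalue error caused by using alpha_pred."""
    reference = ki_eigenvalues(h_dft, v_ki, alpha_ref)
    predicted = ki_eigenvalues(h_dft, v_ki, alpha_pred)
    errors = np.abs(predicted - reference)
    return errors.mean(), errors.max()


rng = np.random.default_rng(1)
n_orb = 6
a = rng.normal(size=(n_orb, n_orb))
h_dft = 0.5 * (a + a.T) - 8.0 * np.eye(n_orb)        # symmetric toy Hamiltonian (eV)
v_ki = rng.uniform(-3.0, -1.0, size=n_orb)           # toy scalar potentials (eV)
alpha_ref = rng.uniform(0.5, 0.7, size=n_orb)        # stand-in "ab initio" values
alpha_pred = alpha_ref + rng.normal(scale=0.01, size=n_orb)

mae, max_err = eigenvalue_errors(h_dft, v_ki, alpha_ref, alpha_pred)
bound = np.max(np.abs((alpha_pred - alpha_ref) * v_ki))
print(mae, max_err, bound)
```

Because the perturbation is diagonal, Weyl's inequality guarantees that no eigenvalue moves by more than the largest |δαj vj|, which gives a quick estimate of the eigenvalue error to expect from a given error in the screening parameters.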

The accuracy of the predicted screening parameters and eigenenergies is shown in Fig. 3 for water and in Fig. 4 for CsSnI3. In these figures, we compare the performance of the ML model against two simplistic benchmark models:

- the “one-shot” model, in which the screening parameters are computed ab initio for one snapshot, and then this set of screening parameters is used on other snapshots (i.e., neglecting the dependence of the screening parameters on the atomic positions);
- the “average” model, which takes the average of the ab initio screening parameters of the training snapshots as the prediction for all screening parameters of the testing snapshots.

Given an arbitrary training dataset, these are the simplest possible models for predicting screening parameters. Any successful alternative model must, therefore, substantially outperform them, and as such, they serve as useful benchmarks.
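
Both benchmarks are simple enough to state in code. The sketch below assumes that orbitals are listed in the same order in every snapshot (needed for the one-shot model) and averages occupied and empty orbitals separately, as is done for the average model in this work; the function names and numerical values are hypothetical.

```python
import numpy as np


def one_shot_model(alpha_reference_snapshot):
    """Reuse the screening parameters of one snapshot, orbital by orbital."""
    return np.array(alpha_reference_snapshot, dtype=float, copy=True)


def average_model(alpha_train, occupied_train, occupied_test):
    """Predict the mean training value, separately for occupied and empty orbitals."""
    alpha_train = np.asarray(alpha_train, dtype=float)
    occupied_train = np.asarray(occupied_train, dtype=bool)
    occupied_test = np.asarray(occupied_test, dtype=bool)
    mean_occupied = alpha_train[occupied_train].mean()
    mean_empty = alpha_train[~occupied_train].mean()
    return np.where(occupied_test, mean_occupied, mean_empty)


# Made-up screening parameters for three occupied and two empty orbitals
alpha_train = [0.60, 0.62, 0.64, 0.20, 0.24]
occupied_train = [True, True, True, False, False]
occupied_test = [True, False, True]

print(one_shot_model(alpha_train))
print(average_model(alpha_train, occupied_train, occupied_test))
```

Neither benchmark uses any information about the orbital densities of the test snapshot, so any improvement of a descriptor-based model over them measures how much that information is worth.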

Fig. 3 (liquid water): The left-hand panels compare the predicted screening parameters against those computed ab initio (binned and colored by frequency). The right-hand panels, meanwhile, show logarithmically scaled histograms of the absolute error in the eigenvalues obtained using predicted screening parameters compared to eigenvalues obtained using the ab initio screening parameters. The blue lines show the corresponding cumulative distributions of these absolute errors.

Fig. 4 (CsSnI3): See Fig. 3 for further explanation.

We will also compare against a “self-Hartree” (sH) model: a linear regression model with the self-Hartree energies of each orbital (Equation (10)) as input and the screening parameters of each orbital as output (inspired by the preliminary study discussed in Supplementary Discussion 1). Both the average and self-Hartree models treated occupied and empty states separately because this gave better results than using one model for the occupied and the empty states together. We note that this treatment of empty states is only possible because the empty states are localized, and thus, their self-Hartree energies are well-defined. (This was not the case for the preliminary study on acenes presented in the Supplementary Information.) Figures 3 and 4 show the accuracy of the screening parameters and eigenvalues predicted by six different models: the one-shot model, the average model (trained on 10 snapshots), the self-Hartree model (trained on 10 snapshots), and the ridge-regression model (trained on 1, 3, and 10 snapshots). The accuracy of all six models was assessed against 10 unseen test snapshots. The mean and maximum absolute errors in the eigenvalues are also tabulated in Table 1.

The ridge-regression model outperformed the one-shot, average, and sH models for all systems, both for the screening parameters and the eigenenergies. The mean absolute error of the eigenenergies of the ridge-regression model is below 25 meV for all test systems already after 1 training snapshot. The one-shot model performs poorly for water, with an average error over four times that of the ridge-regression model, while for CsSnI3 it performs much better, aided by the fact that the variational orbitals fall into groups of orbitals of the same character that do not change from one snapshot to the next. That said, the one-shot model does not capture any of the trends of the screening parameters between orbitals of the same character (as can be seen in the scatter plot of the screening parameters, in which each cluster of points shows no internal correlation). Meanwhile, the average and self-Hartree models predict eigenenergies with an average error above 40 meV for water and even above 200 meV for CsSnI3. The fact that the 1-snapshot ridge-regression model outperforms the one-shot model in both cases demonstrates that even if one only calculates screening parameters for a single snapshot, it is better to construct a ridge-regression model from this data rather than using the computed screening parameters directly for other snapshots.

In most applications, one is interested in specific orbital energies and not in quantities averaged over all eigenenergies. Therefore, it is important for the error distribution of the eigenenergies not to have a long tail. In this regard, the ridge-regression model also performs very well. After three training snapshots, ridge-regression predicts no single eigenenergy with an error larger than 150 meV. In comparison, the average model and the sH model predict many eigenenergies with an error larger than 200 meV; for CsSnI3 many have errors larger than even 500 meV.

The most important eigenenergies for many applications are the highest occupied molecular orbital (HOMO) and the lowest unoccupied molecular orbital (LUMO) energies, or in bulk systems (such as CsSnI3) the valence band maximum (VBM) and the conduction band minimum (CBM); in this work, we will treat these terms synonymously. Another important quantity is the band gap (the difference between the VBM and CBM). While the error of the CBM is below the average error in all cases, the error in the VBM is above average in all cases. This is not a fault of the models but suggests that the screening parameters have a larger influence on the VBM than the CBM. Even so, for most applications, the VBM is predicted sufficiently accurately by the ridge-regression model after 2 or 3 training snapshots. Why the self-Hartree model fails for these two systems after showing promise in the preliminary study of acenes is analyzed in Supplementary Discussion 1.

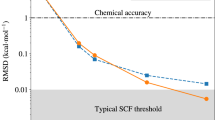

Finally, we examine the convergence of the ridge-regression model with respect to the number of training snapshots in greater detail. Figure 5 shows the convergence of the eigenvalues and the band gap as a function of the number of training snapshots. As we have already seen, the errors of the HOMO energy and, correspondingly, of the band gap are larger than the mean absolute error. Nevertheless, both quantities become small (i.e., less than 20 meV) already after a few (2 to 4) training snapshots. To put this in perspective, Koopmans functionals typically predict orbital energies and band gaps within 200 meV of experiment8,13, i.e., the error introduced by the ML model is acceptably small, and the accuracy of the predicted band gaps remains state-of-the-art.

The top panel shows the mean absolute error in the eigenvalues, while the bottom panel shows the band gap. To get a statistical average, both quantities were obtained for 10 test snapshots. The dotted line indicates the mean, while the shaded region is the standard deviation across the 10 test snapshots.

Speed-up

The main goal in developing the ML model is to speed up the calculations with Koopmans spectral functionals while maintaining high accuracy. In the preceding section, we saw that the model achieves a satisfactory level of accuracy after a few training snapshots. In this section, we turn to look at the corresponding speedups.

We find that the ratio of the time required for an entire Koopmans calculation when (a) all screening parameters are computed ab initio relative to when (b) all screening parameters are predicted using the ML model is 80 for CsSnI3 and 11 for water. However, this does not factor in the cost of training the model in the first place. Figure 6 shows the anticipated speed-ups for performing Koopmans calculations on a given number of snapshots, assuming that training requires three snapshots (which must therefore be computed ab initio). For performing Koopmans calculations on 20 snapshots, one obtains a speed-up of roughly 4.4 for the water system and of roughly 6.2 for the CsSnI3 system. Because training the model is a one-off cost, for infinitely many configurations, we approach the aforementioned 80-fold and 11-fold speed-ups.

The timings show the speed-up depending on the total number of snapshots for which Koopmans functional calculations must be performed, assuming three snapshots are required for training, and the remaining snapshots use the ridge-regression model to predict the screening parameters. All calculations were performed on an Intel Xeon Gold 6248R machine with 48 cores.
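The speed-ups quoted for 20 snapshots follow from simple bookkeeping, sketched below. The function name `overall_speedup` is hypothetical, and the sketch assumes that the cost of fitting the ridge-regression model itself is negligible compared to a single Koopmans calculation.

```python
def overall_speedup(n_total, n_train, ratio):
    """Speed-up relative to computing every snapshot ab initio.

    Costs are in units of one fully ab initio Koopmans calculation:
    the n_train training snapshots cost 1 each, and each remaining
    snapshot (with predicted screening parameters) costs 1 / ratio.
    """
    if n_total < 1 or not 0 <= n_train <= n_total:
        raise ValueError("need n_total >= 1 and 0 <= n_train <= n_total")
    cost_ab_initio = float(n_total)
    cost_with_ml = n_train + (n_total - n_train) / ratio
    return cost_ab_initio / cost_with_ml

# Per-snapshot ratios taken from the text: 11 for water, 80 for CsSnI3
for name, ratio in [("water", 11.0), ("CsSnI3", 80.0)]:
    print(name, round(overall_speedup(20, 3, ratio), 1))
# prints "water 4.4" and "CsSnI3 6.2"
```

As the total number of snapshots grows, the fixed cost of the three training snapshots is amortized and the result tends towards the per-snapshot ratio.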

We note that there are scenarios in which the ML model will provide even greater speed-ups than these. For example, the model could be trained on a small supercell and then employed for calculations on larger supercells. Because the cost of DFT calculations scales cubically with system size (cf. linearly with the number of snapshots), machine-learning promises even greater speed-ups for such schemes. Note that this approach is only possible because the descriptors are spatially localized and therefore invariant with respect to the system size.

Transferability

Thus far the ridge-regression models have only been applied to the same system that they were trained on. In this section, we seek to quantify the models’ transferability. Due to the simplicity of the ridge-regression model and the small amount of training data, we expect these models to not be very transferable.

The transferability of the ridge-regression model trained on 10 snapshots of liquid water was tested on two other phases of water: ice XI and isolated water molecules. The ice XI geometries correspond to a crystalline hydrogen-bonding network of water molecules, with atomic positions displaced from the pristine crystal structure using the self-consistent harmonic approximation in order to accurately reflect the quantum zero-point motion of the atoms at 0 K (and to thereby introduce some inhomogeneity into the data for the model to try and capture)84. Meanwhile, the test set of isolated water molecules corresponds to 200 individual molecules that were extracted from the liquid water snapshots reserved for testing. More details about the ice XI and isolated water molecule structures can be found in the Methods section below and Supplementary Fig. 6.

The results of applying the model trained on liquid water to these two test systems are shown in Fig. 7. The model performs relatively well for ice XI: the electrons in ice XI still find themselves in a system of hydrogen-bonded water molecules with local atomic environments and a level of electronic screening not dissimilar to liquid water. This is not the case, however, for the isolated water molecules, for which the model catastrophically fails. This is expected because the electronic screening is totally different for isolated water molecules (where there are no surrounding electrons to screen the formation of a charged state when an electron is removed from/added to the system) than in liquid water (where the surrounding electrons screen the formation of such a charged state).

The transferability of the ridge-regression model for CsSnI3 was also tested against two test systems. In this case, the two systems corresponded to (a) the same phase, but with atomic displacements corresponding to 450 K (cf. the systems on which the model was trained, which correspond to 250 K) and (b) the yellow phase of CsSnI3. This second phase contains local atomic environments that qualitatively differ from those found in the black phase of CsSnI3; for example, the caesium atoms go from a twelve-fold to a nine-fold coordination environment with much shorter bond lengths. For more details, see the Methods and Supplementary Fig. 7. As shown in Fig. 8, the model predicts the screening parameters for the 450 K snapshots well with only a handful of outliers, but the model does not transfer well to the novel atomic environments found in the yellow phase.

The top panel shows the accuracy of the model when applied to snapshots at 450 K, and the bottom panel shows the accuracy of the model when applied to the yellow phase at 300 K. Refer back to Fig. 3 for further explanation.

These two tests demonstrate that for the model to be transferable, the macroscopic screening ought to be similar (as was not the case for isolated water molecules), and the atomic environments should be familiar (as was not the case for the yellow phase of CsSnI3). If one wanted a machine-learning model to be more transferable, more training data and/or a more sophisticated ML model would be required. It would also be possible to employ ensemble and/or active learning approaches to detect and correct failures85,86. Ultimately, the choice of machine-learning model needs to strike a balance between transferability and the cost of training, which will differ from one application to the next.
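To illustrate how an ensemble could flag unfamiliar inputs, the sketch below fits ridge models to bootstrap resamples of a training set and uses the spread of their predictions as a warning signal. It is not part of this work: the helper names `fit_ridge` and `ensemble_predict` are hypothetical, the data are synthetic, and the "unfamiliar" inputs are simply shifted copies of familiar ones.

```python
import numpy as np

def fit_ridge(X, y, lam=1.0):
    """Closed-form ridge regression with an unpenalized intercept."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc = X - x_mean
    w = np.linalg.solve(Xc.T @ Xc + lam * np.eye(X.shape[1]), Xc.T @ (y - y_mean))
    return w, y_mean - x_mean @ w

def ensemble_predict(X_train, y_train, X_new, n_models=50, lam=1.0, seed=0):
    """Mean and spread of predictions from bootstrap-resampled ridge models."""
    rng = np.random.default_rng(seed)
    preds = []
    for _ in range(n_models):
        # Resample the training set with replacement
        idx = rng.integers(0, len(y_train), size=len(y_train))
        w, b = fit_ridge(X_train[idx], y_train[idx], lam)
        preds.append(X_new @ w + b)
    preds = np.array(preds)
    return preds.mean(axis=0), preds.std(axis=0)

# Synthetic stand-in data (not from this work)
rng = np.random.default_rng(1)
n_train, n_features = 200, 10
X_train = rng.normal(size=(n_train, n_features))
w_true = rng.normal(scale=0.02, size=n_features)
y_train = 0.6 + X_train @ w_true + rng.normal(scale=0.01, size=n_train)

X_familiar = rng.normal(size=(50, n_features))
X_unfamiliar = X_familiar + 5.0  # far outside the training distribution

_, spread_familiar = ensemble_predict(X_train, y_train, X_familiar)
_, spread_unfamiliar = ensemble_predict(X_train, y_train, X_unfamiliar)
print(spread_familiar.mean(), spread_unfamiliar.mean())
```

A large spread only signals that a descriptor lies far from the training data; correcting the prediction would still require computing the corresponding screening parameters ab initio and retraining.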

Discussion

This work presents a machine-learning framework to predict electronic screening parameters via ridge regression performed on translationally and rotationally invariant power spectrum descriptors of orbital densities. This framework is able to predict the screening parameters for Koopmans spectral functionals with sufficient accuracy and with sufficiently little training data so as to dramatically decrease the computational cost of these calculations while maintaining their state-of-the-art accuracy, as demonstrated on the test cases of liquid water and the halide perovskite CsSnI3.

It is somewhat surprising that it is possible to accurately predict screening parameters directly from orbital densities because the screening parameters are not explicitly determined by orbital densities themselves but instead are related to the response of these densities (refer back to Equation (8)). Nevertheless, for the systems studied in this work, the relationship between the orbital’s density (plus the surrounding electron density) and its screening parameter learned by a ridge-regression model gives very accurate predictions of the screening parameters for orbitals it has never seen before—albeit for the same system; we do not expect the model to be transferable across different systems.

Work is already ongoing to use this machine-learning framework to predict the temperature-dependent spectral properties of materials of scientific interest.

In this work, the strategy of learning the mapping from orbital densities to screening parameters has been tailored for use with Koopmans functionals. However, the potential applications of this method are not limited to these functionals. For methods that are related to Koopmans functionals (as introduced earlier), the applicability of this strategy varies. For example, the localized orbital scaling correction has a matrix κij (i and j being the indices of localized orbitals), whose elements are calculated in a very similar manner to Koopmans screening parameters67,70. In this instance, the present machine-learning framework could be applied virtually unchanged (and would be even more beneficial, given that there are now \({\mathcal{O}}({N}_{{\rm{orb}}}^{2})\) parameters to compute). In contrast, this framework would be less useful in the context of optimally-tuned hybrid functionals61,62,63, because only a solitary parameter (the range separation parameter) is determined via a piecewise linearity criterion. More broadly, if one wants to construct a model that can predict energy curvatures of the form \(\frac{{d}^{2}E}{d{f}^{2}}\) for some generic orbital occupation f, then this work demonstrates that it is efficient to instead construct its frozen-orbital counterpart \(\frac{{\partial }^{2}E}{\partial {f}^{2}}\) and then learn the mapping from this quantity to the screened quantity \(\frac{{d}^{2}E}{d{f}^{2}}\).

Methods

All of the Koopmans functional calculations presented in this work were performed using Quantum ESPRESSO87,88 and the koopmans package12. The most relevant details of these calculations can be found below, while full input and output files can be found in the Materials Cloud Archive89.

Water

Liquid water

The liquid water system studied in this work is a simple cube with a side length of 9.81 Å containing 32 water molecules. 20 snapshots were taken from ab initio molecular dynamics trajectories at 300 K with the nuclei treated classically, as presented in ref. 90 (and as previously studied using Koopmans functionals in ref. 21). Those molecular dynamics calculations used the revised Vydrov and Van Voorhis (rVV10) van der Waals exchange-correlation functional91, a kinetic energy cutoff of 85 Ry, and SG15 optimized norm-conserving Vanderbilt pseudopotentials92. The 20 snapshots on which we then performed Koopmans calculations were taken from the 10 ps production run, each 0.5 ps apart, to ensure that the snapshots were not autocorrelated. (While this information is worth repeating, note that the details of how the snapshots were generated ultimately have little relevance to the task of predicting the screening parameters.)

The Koopmans functional calculations on water used PBE as a base functional93, an energy cutoff of 80 Ry (selected as the result of a convergence analysis), and the Makov-Payne periodic image correction scheme94. The system is spin-unpolarized, and therefore, the 32 water molecules give rise to 128 occupied orbitals. We also included 64 empty orbitals (which are typically included in Koopmans functional calculations in order to be able to describe excitations involving electron addition, i.e., photoabsorption). As such, each snapshot represents 192 datapoints (i.e., 192 pairs of orbital density descriptors and the corresponding screening parameter calculated ab initio), with the entire dataset corresponding to 3840 datapoints.

Ice XI

For the purpose of testing the transferability of the ridge-regression model, it was tested on two further water systems. The first of these was ice XI, an ordered phase of ice that forms below 72 K in the presence of a small amount of alkali-metal hydroxide95. The structure of ice XI is shown in Supplementary Fig. 6. The atomic positions used in this study correspond to a T = 0 K calculation with the self-consistent harmonic approximation, as reported in ref. 84. In this scheme, the atoms are displaced from their equilibrium positions in a specific pattern to accurately capture the effects of zero-point quantum fluctuations.

The calculations were performed on a 2 × 2 × 2 supercell of the eight-molecule, 4.39 Å-by-7.59 Å-by-7.59 Å orthorhombic cell. The supercell, therefore, contains 64 water molecules, giving 256 occupied orbitals; 192 empty orbitals were also included. The simulation cell aside, the calculations used the same settings as those of liquid water.

Isolated water molecules

The second water system that was used to test the transferability of the ridge-regression model was a set of isolated water molecules. In order to generate a set of non-identical water molecules with varying geometries, 200 water molecules were taken from the liquid water snapshots and considered in isolation, each placed in a cubic cell with a side length of 15 Å. These calculations used the same settings as those of liquid water but with the macroscopic dielectric constant (used in the Makov-Payne correction) set to 1.

CsSnI3

The black α phase of CsSnI3

The perovskite system studied in this work was a 2 × 2 × 2 supercell of the 5-atom primitive cell of the black α phase of CsSnI3, i.e., a cubic cell with a side length of 12.35 Å. The snapshots used in this work were generated via the stochastic self-consistent harmonic approximation40. This method simulates the thermodynamic properties originating from the quantum and thermal anharmonic motion of the ions40, where the ionic energy landscape is stochastically sampled and then evaluated within DFT. Unless otherwise noted, the configurations correspond to 250 K96.

The Koopmans functional calculations on CsSnI3 used a kinetic energy cutoff of 70 Ry (determined as the result of a convergence analysis), norm-conserving pseudopotentials from the Pseudo-Dojo library97, PBEsol as the base exchange-correlation functional98, and Makov-Payne periodic image corrections94. The system is spin-unpolarized and has 176 occupied bands, and 24 empty bands were included, i.e., each snapshot represents 200 datapoints, with the entire dataset corresponding to 4000 datapoints.

The yellow phase of CsSnI3

The second “yellow” phase of CsSnI3 was used to assess the transferability of the ridge-regression model. This phase appears to be the ground state of CsSnI3, with the black α phase metastable under ambient conditions96. The calculations on the yellow phase were performed on a 2 × 2 × 2 supercell of the 5-atom orthorhombic primitive cell, leading to a 10.49 Å-by-9.49 Å-by-17.77 Å simulation cell containing 40 atoms. The atoms were displaced according to the stochastic self-consistent harmonic approximation to correspond to nuclear motion at 300 K. Otherwise, the calculations used the same settings as those of the black phase.

The atomic environments found in black and yellow CsSnI3 at different temperatures are compared in Supplementary Fig. 7.

The ridge-regression model

The ridge-regression model was implemented using the scikit-learn library99. The descriptor hyperparameters were set to \({n}_{\max }=6\), \({l}_{\max }=6\), \({r}_{\min }=0.5\,{{\rm{a}}}_{0}\), and \({r}_{\max }=4.0\,{{\rm{a}}}_{0}\). An exhaustive grid search over the hyperparameters (with all possible combinations of \({n}_{\max }\in \{1,2,4,8\}\), \({l}_{\max }\in \{1,2,4,8\}\), \({r}_{\min }/{a}_{0}\in \{0.5,1,2\}\), and \({r}_{\max }/{a}_{0}\in \{2,4,8\}\)) revealed that for \(4\le {n}_{\max }\le 8\), \(4\le {l}_{\max }\le 8\), and \(0.5\le {r}_{\min }\le 1\), the mean absolute error in the predicted screening parameters was not very sensitive to the choice of hyperparameters. For the ridge-regression model, the regularization parameter was set to 1 as the result of 10-fold cross-validation, and the input vectors pi were standardized.
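A minimal sketch of such a setup with scikit-learn is given below. It is illustrative only: the descriptors and screening parameters are synthetic stand-ins, the 30-component descriptor length and the grid of candidate regularization strengths are assumptions, and only the standardization step, the ridge regressor, and the 10-fold cross-validation are taken from the description above.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV, KFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic stand-in data (not from this work): 192 "orbitals", each with
# a 30-component descriptor vector and a noisy, linearly related target.
n_orbitals, n_features = 192, 30
descriptors = rng.normal(size=(n_orbitals, n_features))
true_weights = rng.normal(scale=0.02, size=n_features)
screening = 0.6 + descriptors @ true_weights + rng.normal(scale=0.005, size=n_orbitals)

# Standardize the input vectors, then apply ridge regression
model = make_pipeline(StandardScaler(), Ridge())

# Choose the regularization strength by 10-fold cross-validation;
# the candidate values below are hypothetical.
candidates = [0.01, 0.1, 1.0, 10.0, 100.0]
search = GridSearchCV(
    model,
    param_grid={"ridge__alpha": candidates},
    cv=KFold(n_splits=10, shuffle=True, random_state=0),
    scoring="neg_mean_absolute_error",
)
search.fit(descriptors, screening)

best_regularization = search.best_params_["ridge__alpha"]
predicted = search.predict(descriptors)
mean_abs_error = float(np.mean(np.abs(predicted - screening)))
```

Note that scikit-learn calls the regularization strength `alpha`, which should not be confused with the screening parameters themselves. Placing the scaler inside the pipeline ensures that the standardization is refitted on each training fold, so the held-out fold does not leak into the preprocessing.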

Data availability

The data underpinning this work can be downloaded from the Materials Cloud Archive89.

Code availability

The koopmans code used to generate the results of this paper is open-source and is available at github.com/epfl-theos/koopmans. Versions 1.0.0 and 1.1.0 of the code were used.

References

Marzari, N., Ferretti, A. & Wolverton, C. Electronic-structure methods for materials design. Nat. Mater. 20, 736–749 (2021).

Dabo, I., Cococcioni, M. & Marzari, N. Non-Koopmans corrections in density-functional theory: self-interaction revisited. https://arxiv.org/abs/0901.2637 (2009).

Dabo, I. et al. Koopmans’ condition for density-functional theory. Phys. Rev. B 82, 115121 (2010).

Dabo, I., Ferretti, A. & Marzari, N. Piecewise linearity and spectroscopic properties from Koopmans-compliant functionals. In Di Valentin, C., Botti, S. & Cococcioni, M. (eds.) First Principles Approaches to Spectroscopic Properties of Complex Materials, 193–233 (Springer, Berlin, Heidelberg, 2014).

Borghi, G., Ferretti, A., Nguyen, N. L., Dabo, I. & Marzari, N. Koopmans-compliant functionals and their performance against reference molecular data. Phys. Rev. B 90, 075135 (2014).

Ferretti, A., Dabo, I., Cococcioni, M. & Marzari, N. Bridging density-functional and many-body perturbation theory: Orbital-density dependence in electronic-structure functionals. Phys. Rev. B 89, 195134 (2014).

Borghi, G., Park, C. H., Nguyen, N. L., Ferretti, A. & Marzari, N. Variational minimization of orbital-density-dependent functionals. Phys. Rev. B 91, 155112 (2015).

Nguyen, N. L., Colonna, N., Ferretti, A. & Marzari, N. Koopmans-compliant spectral functionals for extended systems. Phys. Rev. X 8, 021051 (2018).

Colonna, N., Nguyen, N. L., Ferretti, A. & Marzari, N. Screening in orbital-density-dependent functionals. J. Chem. Theory Comput. 14, 2549–2557 (2018).

De Gennaro, R., Colonna, N., Linscott, E. & Marzari, N. Bloch’s theorem in orbital-density-dependent functionals: band structures from Koopmans spectral functionals. Phys. Rev. B 106, 035106 (2022).

Colonna, N., De Gennaro, R., Linscott, E. & Marzari, N. Koopmans spectral functionals in periodic boundary conditions. J. Chem. Theory Comput. 18, 5435–5448 (2022).

Linscott, E. B. et al. koopmans: an open-source package for accurately and efficiently predicting spectral properties with Koopmans functionals. J. Chem. Theory Comput. 19, 7097–7111 (2023).

Colonna, N., Nguyen, N. L., Ferretti, A. & Marzari, N. Koopmans-compliant functionals and potentials and their application to the GW100 test set. J. Chem. Theory Comput. 15, 1905–1914 (2019).

Dabo, I. et al. Donor and acceptor levels of organic photovoltaic compounds from first principles. Phys. Chem. Chem. Phys. 15, 685–695 (2013).

Nguyen, N. L., Borghi, G., Ferretti, A., Dabo, I. & Marzari, N. First-principles photoemission spectroscopy and orbital tomography in molecules from Koopmans-compliant functionals. Phys. Rev. Lett. 114, 166405 (2015).

Nguyen, N. L., Borghi, G., Ferretti, A. & Marzari, N. First-principles photoemission spectroscopy of DNA and RNA nucleobases from Koopmans-compliant functionals. J. Chem. Theory Comput. 12, 3948–3958 (2016).

Schubert, Y., Marzari, N. & Linscott, E. Testing Koopmans spectral functionals on the analytically solvable Hooke’s atom. J. Chem. Phys. 158, 144113 (2023).

Elliott, J. D., Colonna, N., Marsili, M., Marzari, N. & Umari, P. Koopmans meets Bethe-Salpeter: excitonic optical spectra without GW. J. Chem. Theory Comput. 15, 3710–3720 (2019).

Marrazzo, A. & Colonna, N. Spin-dependent interactions in orbital-density-dependent functionals: noncollinear Koopmans spectral functionals. Phys. Rev. Res. 6, 033085 (2024).

Ingall, J. E., Linscott, E., Colonna, N., Page, A. J. & Keast, V. J. Accurate and efficient computation of the fundamental bandgap of the vacancy-ordered double perovskite Cs2TiBr6. J. Phys. Chem. C 128, 9217–9228 (2024).

de Almeida, J. M. et al. Electronic structure of water from Koopmans-compliant functionals. J. Chem. Theory Comput. 17, 3923–3930 (2021).

Carleo, G. et al. Machine learning and the physical sciences. Rev. Mod. Phys. 91, 045002 (2019).

Ceriotti, M., Clementi, C. & Anatole von Lilienfeld, O. Introduction: machine learning at the atomic scale. Chem. Rev. 121, 9719–9721 (2021).

Yu, M., Yang, S., Wu, C. & Marom, N. Machine learning the Hubbard U parameter in DFT+U using Bayesian optimization. npj Comput. Mater. 6, 1–6 (2020).

Yu, W. et al. Active learning the high-dimensional transferable Hubbard U and V parameters in the DFT+U+V scheme. J. Chem. Theory Comput. 19, 6425–6433 (2023).

Cai, G. et al. Predicting structure-dependent Hubbard U parameters via machine learning. Mater. Futures 3, 025601 (2024).

Uhrin, M., Zadoks, A., Binci, L., Marzari, N. & Timrov, I. Machine learning Hubbard parameters with equivariant neural networks. https://arxiv.org/abs/2406.02457 (2024).

Dong, S. S., Govoni, M. & Galli, G. Machine learning dielectric screening for the simulation of excited state properties of molecules and materials. Chem. Sci. 12, 4970–4980 (2021).

Montavon, G. et al. Machine learning of molecular electronic properties in chemical compound space. New J. Phys. 15, 095003 (2013).

Brockherde, F. et al. Bypassing the Kohn-Sham equations with machine learning. Nat. Commun. 8, 872 (2017).

Welborn, M., Cheng, L. & Miller, T. F. III. Transferability in machine learning for electronic structure via the molecular orbital basis. J. Chem. Theory Comput. 14, 4772–4779 (2018).

Schleder, G. R., Padilha, A. C. M., Acosta, C. M., Costa, M. & Fazzio, A. From DFT to machine learning: recent approaches to materials science-a review. J. Phys. Mater. 2, 032001 (2019).

Ryczko, K., Strubbe, D. A. & Tamblyn, I. Deep learning and density-functional theory. Phys. Rev. A 100, 022512 (2019).

Noé, F., Tkatchenko, A., Müller, K.-R. & Clementi, C. Machine learning for molecular simulation. Annu. Rev. Phys. Chem. 71, 361–390 (2020).

Häse, F., Roch, L. M., Friederich, P. & Aspuru-Guzik, A. Designing and understanding light-harvesting devices with machine learning. Nat. Commun. 11, 4587 (2020).

Sutton, C. et al. Identifying domains of applicability of machine learning models for materials science. Nat. Commun. 11, 4428 (2020).

Bogojeski, M., Vogt-Maranto, L., Tuckerman, M. E., Müller, K.-R. & Burke, K. Quantum chemical accuracy from density functional approximations via machine learning. Nat. Commun. 11, 5223 (2020).

Ghosh, K. et al. Deep learning spectroscopy: neural networks for molecular excitation spectra. Adv. Sci. 6, 1801367 (2019).

Zacharias, M. & Giustino, F. Theory of the special displacement method for electronic structure calculations at finite temperature. Phys. Rev. Res. 2, 013357 (2020).

Monacelli, L. & Mauri, F. Time-dependent self-consistent harmonic approximation: anharmonic nuclear quantum dynamics and time correlation functions. Phys. Rev. B 103, 104305 (2021).

Janak, J. F. Proof that ∂E/∂ni = ϵ in density-functional theory. Phys. Rev. B 18, 7165–7168 (1978).

Cohen, A. J., Mori-Sánchez, P. & Yang, W. Challenges for density functional theory. Chem. Rev. 112, 289–320 (2012).

Pederson, M. R., Heaton, R. A. & Lin, C. C. Local-density Hartree-Fock theory of electronic states of molecules with self-interaction correction. J. Chem. Phys. 80, 1972–1975 (1984).

Pederson, M. R., Heaton, R. A. & Lin, C. C. Density-functional theory with self-interaction correction: application to the lithium molecule. J. Chem. Phys. 82, 2688–2699 (1985).

Pederson, M. R. & Lin, C. C. Localized and canonical atomic orbitals in self-interaction corrected local density functional approximation. J. Chem. Phys. 88, 1807–1817 (1988).

Heaton, R. A., Harrison, J. G. & Lin, C. C. Self-interaction correction for density-functional theory of electronic energy bands of solids. Phys. Rev. B 28, 5992–6007 (1983).

Marzari, N., Mostofi, A. A., Yates, J. R., Souza, I. & Vanderbilt, D. Maximally localized Wannier functions: theory and applications. Rev. Mod. Phys. 84, 1419–1475 (2012).

Stengel, M. & Spaldin, N. A. Self-interaction correction with Wannier functions. Phys. Rev. B 77, 155106 (2008).

Goedecker, S. & Umrigar, C. J. Critical assessment of the self-interaction-corrected-local-density-functional method and its algorithmic implementation. Phys. Rev. A 55, 1765–1771 (1997).

Körzdörfer, T., Kümmel, S. & Mundt, M. Self-interaction correction and the optimized effective potential. J. Chem. Phys. 129, 014110 (2008).

Vydrov, O. A. & Scuseria, G. E. Ionization potentials and electron affinities in the Perdew-Zunger self-interaction corrected density-functional theory. J. Chem. Phys. 122, 184107 (2005).

Ortiz, J. V. Dyson-orbital concepts for description of electrons in molecules. J. Chem. Phys. 153, 070902 (2020).

Garrick, R., Natan, A., Gould, T. & Kronik, L. Exact generalized Kohn-Sham theory for hybrid functionals. Phys. Rev. X 10, 021040 (2020).

Anisimov, V. I., Aryasetiawan, F. & Lichtenstein, A. I. First-principles calculations of the electronic structure and spectra of strongly correlated systems: the LDA+U method. J. Phys. Condens. Matter 9, 767 (1997).

Dudarev, S. L., Botton, G. A., Savrasov, S. Y., Humphreys, C. J. & Sutton, A. P. Electron-energy-loss spectra and the structural stability of nickel oxide: an LSDA+U study. Phys. Rev. B 57, 1505–1509 (1998).

Pickett, W. E., Erwin, S. C. & Ethridge, E. C. Reformulation of the LDA+U method for a local-orbital basis. Phys. Rev. B 58, 1201–1209 (1998).

Cococcioni, M. & de Gironcoli, S. Linear response approach to the calculation of the effective interaction parameters in the LDA+U method. Phys. Rev. B 71, 035105 (2005).

Leiria Campo Jr, V. & Cococcioni, M. Extended DFT+U+V method with on-site and inter-site electronic interactions. J. Phys. Condens. Matter 22, 055602 (2010).

Bajaj, A., Janet, J. P. & Kulik, H. J. Communication: recovering the flat-plane condition in electronic structure theory at semi-local DFT cost. J. Chem. Phys. 147, 191101 (2017).

Burgess, A. C., Linscott, E. & O’Regan, D. D. DFT+U-type functional derived to explicitly address the flat plane condition. Phys. Rev. B 107, L121115 (2023).

Stein, T., Eisenberg, H., Kronik, L. & Baer, R. Fundamental gaps in finite systems from eigenvalues of a generalized Kohn-Sham method. Phys. Rev. Lett. 105, 266802 (2010).

Kronik, L., Stein, T., Refaely-Abramson, S. & Baer, R. Excitation gaps of finite-sized systems from optimally tuned range-separated hybrid functionals. J. Chem. Theory Comput. 8, 1515–1531 (2012).

Wing, D. et al. Band gaps of crystalline solids from Wannier-localization-based optimal tuning of a screened range-separated hybrid functional. Proc. Natl. Acad. Sci. USA 118, e2104556118 (2021).

Zheng, X., Cohen, A. J., Mori-Sánchez, P., Hu, X. & Yang, W. Improving band gap prediction in density functional theory from molecules to solids. Phys. Rev. Lett. 107, 026403 (2011).

Li, C., Zheng, X., Cohen, A. J., Mori-Sánchez, P. & Yang, W. Local scaling correction for reducing delocalization error in density functional approximations. Phys. Rev. Lett. 114, 053001 (2015).

Zheng, X., Li, C., Zhang, D. & Yang, W. Scaling correction approaches for reducing delocalization error in density functional approximations. Sci. China Chem. 58, 1825–1844 (2015).

Li, C., Zheng, X., Su, N. Q. & Yang, W. Localized orbital scaling correction for systematic elimination of delocalization error in density functional approximations. Natl. Sci. Rev. 5, 203–215 (2018).

Mei, Y., Chen, Z. & Yang, W. Self-consistent calculation of the localized orbital scaling correction for correct electron densities and energy-level alignments in density functional theory. J. Phys. Chem. Lett. 11, 10269–10277 (2020).

Yang, X., Zheng, X. & Yang, W. Density functional prediction of quasiparticle, excitation, and resonance energies of molecules with a global scaling correction approach. Front. Chem. 8, 979 (2020).

Mahler, A., Williams, J., Su, N. Q. & Yang, W. Localized orbital scaling correction for periodic systems. Phys. Rev. B 106, 035147 (2022).

Ma, J. & Wang, L.-W. Using Wannier functions to improve solid band gap predictions in density functional theory. Sci. Rep. 6, 24924 (2016).

Weng, M. et al. Wannier Koopman method calculations of the band gaps of alkali halides. Appl. Phys. Lett. 111, 054101 (2017).

Weng, M., Pan, F. & Wang, L.-W. Wannier-Koopmans method calculations for transition metal oxide band gaps. npj Comput. Mater. 6, 1–8 (2020).

Pernal, K. & Cioslowski, J. Ionization potentials from the extended Koopmans’ theorem applied to density matrix functional theory. Chem. Phys. Lett. 412, 71–75 (2005).

Pernal, K. & Giesbertz, K. J. H. Reduced density matrix functional theory (RDMFT) and linear response time-dependent RDMFT (TD-RDMFT). In Ferré, N., Filatov, M. & Huix-Rotllant, M. (eds.) Density-Functional Methods for Excited States, 125–183 (Springer International Publishing, Cham, 2016).

Di Sabatino, S., Koskelo, J., Berger, J. A. & Romaniello, P. Screened extended Koopmans’ theorem: photoemission at weak and strong correlation. Phys. Rev. B 107, 035111 (2023).

Cernatic, F., Senjean, B., Robert, V. & Fromager, E. Ensemble density functional theory of neutral and charged excitations: exact formulations, standard approximations, and open questions. Top Curr. Chem. (Z) 380, 4 (2022).

Himanen, L. et al. DScribe: library of descriptors for machine learning in materials science. Comput. Phys. Commun. 247, 106949 (2020).

Bartók, A. P., Kondor, R. & Csányi, G. On representing chemical environments. Phys. Rev. B 87, 184115 (2013).

Hoerl, A. E. & Kennard, R. W. Ridge regression: biased estimation for nonorthogonal problems. Technometrics 12, 55–67 (1970).

Gaiduk, A. P., Pham, T. A., Govoni, M., Paesani, F. & Galli, G. Electron affinity of liquid water. Nat. Commun. 9, 247 (2018).

Min, H. et al. Perovskite solar cells with atomically coherent interlayers on SnO2 electrodes. Nature 598, 444–450 (2021).

Li, Z., Zhou, F., Wang, Q., Ding, L. & Jin, Z. Approaches for thermodynamically stabilized CsPbI3 solar cells. Nano Energy 71, 104634 (2020).

Monacelli, L., Errea, I., Calandra, M. & Mauri, F. Pressure and stress tensor of complex anharmonic crystals within the stochastic self-consistent harmonic approximation. Phys. Rev. B 98, 024106 (2018).

Settles, B. Active learning literature survey. Technical Report. University of Wisconsin-Madison Department of Computer Sciences https://minds.wisconsin.edu/handle/1793/60660 (2009).

Sagi, O. & Rokach, L. Ensemble learning: a survey. WIREs Data Min. Knowl. Discov. 8, e1249 (2018).

Giannozzi, P. et al. QUANTUM ESPRESSO: a modular and open-source software project for quantum simulations of materials. J. Phys. Condens. Matter 21, 395502 (2009).

Giannozzi, P. et al. Advanced capabilities for materials modelling with Quantum ESPRESSO. J. Phys. Condens. Matter 29, 465901 (2017).

Schubert, Y., Luber, S., Marzari, N. & Linscott, E. Predicting electronic screening for fast Koopmans spectral functional calculations. Materials Cloud Archive 2024, 182 (2024).

Chen, W., Ambrosio, F., Miceli, G. & Pasquarello, A. Ab initio electronic structure of liquid water. Phys. Rev. Lett. 117, 186401 (2016).

Vydrov, O. A. & Van Voorhis, T. Nonlocal van der Waals density functional: the simpler the better. J. Chem. Phys. 133, 244103 (2010).

Hamann, D. R. Optimized norm-conserving Vanderbilt pseudopotentials. Phys. Rev. B Condens. Matter Mater. Phys. 88, 085117 (2013).

Perdew, J. P., Burke, K. & Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 77, 3865–3868 (1996).

Makov, G. & Payne, M. C. Periodic boundary conditions in ab initio calculations. Phys. Rev. B 51, 4014–4022 (1995).

Tajima, Y., Matsuo, T. & Suga, H. Phase transition in KOH-doped hexagonal ice. Nature 299, 810–812 (1982).

Monacelli, L. & Marzari, N. First-principles thermodynamics of CsSnI3. Chem. Mater. 35, 1702–1709 (2023).

van Setten, M. J. et al. The PseudoDojo: training and grading a 85 element optimized norm-conserving pseudopotential table. Comput. Phys. Commun. 226, 39–54 (2018).

Perdew, J. P. et al. Restoring the density-gradient expansion for exchange in solids and surfaces. Phys. Rev. Lett. 100, 136406 (2008).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Acknowledgements

The authors thank Nicola Colonna, Lorenzo Monacelli, and Martin Uhrin for helpful discussions. E.L. gratefully acknowledges financial support from the Swiss National Science Foundation (grant numbers 179138 and 213082). This research was also supported by the NCCR MARVEL, a National Centre of Competence in Research, funded by the Swiss National Science Foundation (grant number 205602).

Author information

Contributions

Y.S.: methodology, software, validation, investigation, writing—original draft. S.L.: supervision, writing—review & editing. N.M.: conceptualization, supervision, writing—review & editing. E.L.: conceptualization, methodology, software, investigation, supervision, writing—review & editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schubert, Y., Luber, S., Marzari, N. et al. Predicting electronic screening for fast Koopmans spectral functional calculations. npj Comput Mater 10, 299 (2024). https://doi.org/10.1038/s41524-024-01484-3

DOI: https://doi.org/10.1038/s41524-024-01484-3
