Abstract

A multi-modal dataset was developed for palm oil Fresh Fruit Bunch (FFB) assessment in natural plantation environments. Data collection occurred across four diverse locations in Johor, Malaysia, representing variations in environmental conditions. The dataset includes 400 high-resolution RGB images captured with a 50 MP Sony IMX766V sensor, along with 400 depth maps and corresponding point clouds obtained using an Intel RealSense D455f camera. Images account for varying illumination, viewing angles, and distances, simulating real-world field conditions. Binary ripeness annotations adhere to Malaysian Palm Oil Board standards, with spatial registration between RGB and depth data achieving a mean error of 1.8 cm at 3 meters. Expert validation resulted in 92.5% inter-rater agreement. This dataset enables the development of advanced machine learning models for automated ripeness classification and localization, contributing to precision agriculture implementation, harvest optimization, and sustainable production practices in the oil palm industry. The dataset, stored in standardized formats with rich metadata, supports the development of advanced systems for automated ripeness classification and localization in precision agriculture.

Similar content being viewed by others

Background & Summary

Palm oil is a vital component of the global vegetable oil industry, contributing approximately 35% of total production worldwide1,2. With an annual output of 80.3 million tonnes as of 2023, palm oil serves as a cornerstone of agricultural economies and industrial supply chains3,4,5,6,7. Among the top producers, Malaysia plays a pivotal role, accounting for 25.8% of global production, which translates to a substantial 18.6 million tonnes annually8,9,10. This underscores the critical importance of efficient plantation management practices to maximize yields, ensure sustainability, and maintain global competitiveness in this sector. A key determinant of palm oil yield and quality lies in the precise assessment of Fresh Fruit Bunch (FFB) ripeness. Harvesting FFBs at their optimal ripeness stage has been shown to increase oil yield by 28–30%, while reducing the free fatty acid content by up to 15%, thereby improving oil quality and shelf life11. This ripeness assessment is not merely a procedural requirement but a significant factor influencing the profitability and operational efficiency of the industry. Conventionally, plantations have relied on manual inspection methods for evaluating FFB ripeness. These methods are heavily dependent on human expertise and involve visual inspection of indicators such as color changes and the presence of loose fruits12. While these traditional approaches have been the standard for decades, they are increasingly inadequate for modern large-scale operations. Studies have highlighted error rates of 15–25% in manual ripeness classification, with accuracy deteriorating as plantation areas expand beyond 500 hectares per inspector13. The labour-intensive nature of manual assessments further exacerbates the challenges, as consistent evaluations are difficult to achieve across vast plantation areas and diverse environmental conditions. These limitations have spurred the development of automated systems for FFB ripeness assessment. Automated systems aim to improve efficiency, accuracy, and scalability. However, the advancement of these technologies has been constrained by a critical bottleneck: the lack of comprehensive datasets that accurately represent field conditions. A systematic review of existing datasets revealed significant shortcomings, with 87% collected under artificial lighting conditions and only 13% reflecting the natural environmental variations encountered in real-world plantations14. This imbalance limits the generalizability and robustness of machine learning models trained on such datasets.

Oil palm plantation environments present unique challenges for data collection and system deployment. These include variable illumination conditions throughout the day, occlusion caused by dense vegetation, and non-uniform presentation angles of FFBs on trees. Accurate localization is further complicated by the irregular positioning of FFBs on tall, densely foliated palm trees, requiring datasets that capture these spatial complexities. Such factors significantly impact the accuracy of both manual and automated assessments, necessitating datasets that can capture the full range of these environmental complexities15. Previous datasets have exhibited several limitations that restrict their applicability to real-world scenarios. For instance, the dataset by Razali et al.16 was confined to controlled lighting conditions, providing little utility for models intended to operate in natural field settings. Similarly, the work by Hong et al.17 focused solely on RGB imagery, omitting depth information that could enhance spatial analysis and ripeness detection. Sabri et al.18 offered a dataset collected in natural environments but restricted data collection to specific times of the day, limiting its temporal coverage. Additionally, many existing datasets suffer from inconsistent annotation methodologies and incomplete environmental metadata documentation, further reducing their utility for robust system development. Notably, no publicly available datasets include depth maps or point cloud data specifically captured in outdoor environments for oil palm trees. This absence creates a significant gap in the resources needed for developing precise and reliable localization systems in natural plantation settings. The lack of such multi-modal data limits the ability to accurately analyze spatial relationships and structural complexities of FFBs, which are essential for both ripeness classification and localization. Without depth or point cloud data, automated systems face substantial challenges in adapting to real-world plantation environments where lighting, occlusion, and irregular tree structures vary significantly. The dataset presented in this study was specifically developed to address these limitations through a systematic and comprehensive approach to multi-modal data acquisition under actual plantation conditions. The experimental design was carefully structured to capture the full range of environmental variations encountered in commercial oil palm plantations. Emphasis was placed on achieving temporal distribution across daily cycles and seasonal changes, ensuring that the dataset is representative of real-world conditions. The dataset integrates RGB images, depth maps, and point clouds, facilitating both ripeness classification and precise localization of FFBs on the tree. Data collection methods adhered to established agricultural monitoring practices, while leveraging recent advances in multi-modal sensing technology to enhance data quality and utility. The resulting dataset offers comprehensive coverage of FFB presentation variations, environmental conditions, and spatial relationships. By addressing critical gaps in existing resources, it provides a robust foundation for the development of advanced automated systems for ripeness classification and localization, enabling significant improvements in efficiency, accuracy, and scalability for the oil palm industry.

Methods

Experimental design

The experimental design was structured to capture comprehensive FFB data across diverse plantation environments. Four distinct locations were selected in Johor, Malaysia, encompassing sites in two areas in Batu Pahat (1°55′04.8″N 103°02′23.0″E; 2°02′57.1″N 102°53′08.0″E), and two areas in Yong Peng (1°58′04.9″N 103°00′27.6″E; 2°02′57.1″N 102°53′08.0″E). The selection of sites was primarily influenced by plantation accessibility. Four locations in Batu Pahat and Yong Peng were chosen to enhance the variability of the collected data with respect to FFB ripeness, pruning conditions, and tree growth. Specifically, the Batu Pahat sites exhibited greater variability in FFB ripeness and more rigorous pruning practices, whereas the Yong Peng locations demonstrated a broader range of tree heights with moderate pruning conditions. The selected sites primarily consist of Elaeis guineensis, the dominant oil palm species cultivated in Malaysia and other major palm oil producing regions. Given that Johor, Malaysia is recognized as a one of the representative regions for oil palm cultivation. The agronomic conditions of the variability in FFB ripeness, pruning practices, and tree growth are consistent with those documented in major oil palm producing areas worldwide. The selected sites exhibited a range of management practices and environmental factors that align with industry standards observed in Southeast Asia, Africa, and Latin America. This selection strategy ensured that the dataset comprehensively represented the diverse agronomic conditions encountered in oil palm cultivation. Environmental parameters were systematically recorded at each site using Google Weather App Services, monitoring temperature (24–35 °C), relative humidity (65–95%), solar radiation (100–1200 W/m²), and precipitation levels (0–25 mm/day). The selected plantations comprised trees aged 3–7 years, stratified across sites to ensure representation of critical growth stages. Planting density across all sites was maintained at 138–148 palms per hectare, following standard industrial cultivation practices. The experimental plots were arranged in triangular planting patterns with 8.8-meter spacing, ensuring representation of typical commercial plantation layouts. The data acquisition setup on site is shown on Fig. 1.

Data collection methodology

The imaging system architecture was developed to address the specific challenges of FFB documentation in plantation environments. At its core, the system utilized a 50 MP Sony IMX766V sensor and f/1.75-aperture lens. This sensor demonstrated superior performance in dynamic range handling and color accuracy, particularly crucial for distinguishing subtle variations in FFB ripeness stages. The optical system was extensively modified to optimize performance in tropical environments, incorporating custom weather sealing and thermal management solutions developed in collaboration with agricultural imaging specialists. The sensor’s native resolution of 8688 × 5792 pixels provided a ground sampling distance of 0.3 mm at 2 m working distance, enabling detection of fine surface features characteristic of FFB ripeness stages. Custom firmware modifications were implemented to enable precise exposure control and high-speed burst capture, facilitating comprehensive FFB documentation under varying illumination conditions. The imaging pipeline was optimized through the implementation of a custom color processing algorithm, developed specifically for palm oil FFB photography and validated against spectrophotometric measurements of FFB samples. The comprehensive specifications of the imaging system are presented in Table 1, highlighting the key technical parameters that enabled high-quality data capture under varying field conditions.

The depth sensing system architecture was designed to overcome the specific challenges presented by plantation environments, particularly the complex interaction between IR projection patterns and vegetative surfaces as tabulated in Table 2. The Intel RealSense D455f platform was selected following comprehensive evaluation of multiple depth sensing technologies, including structured light and time-of-flight systems. The selected platform demonstrated superior performance in handling varying ambient illumination and complex surface geometries characteristic of FFB clusters. The depth sensing system underwent significant customization to optimize performance in tropical plantation conditions.

The integration of RGB and depth sensing systems required the development of a sophisticated hardware and software synchronization framework as represented in Table 3. A custom microcontroller-based synchronization unit was developed using an Nvidia Jetson Nano, utilizing a high-precision timing crystal (±0.1 ppm stability) to maintain precise temporal alignment between sensors. The synchronization system implemented a phase-locked loop mechanism to maintain stable timing relationships despite varying environmental conditions and processing loads. To ensure seamless switching and consistent data acquisition between different sensors, a simultaneous triggering mechanism was employed, enabling both sensors to capture data at precisely the same moment. Field deployment of the integrated system utilized tripod mounting for the physical registration between RGB and depth sensors. Spatial registration accuracy was continuously monitored through automated detection and matching of fiducial markers placed within the capture volume, preventing misalignment and ensuring data consistency. Furthermore, all sensor data streams were timestamped and periodically cross-verified to guarantee data integrity throughout the collection process.

Data Annotation and Segmentation

In developing this dataset, particular attention was paid to rigorous data acquisition protocols and calibration procedures to ensure high-fidelity alignment between RGB imagery and corresponding depth data. The annotation pipeline integrates domain expertise with computational techniques, providing both a robust ground truth for ripeness classification in RGB images and precise three-dimensional localization via depth maps and point clouds. This section outlines (i) the instance segmentation of Fresh Fruit Bunches (FFBs) in RGB images, (ii) the semi-automated clustering of FFBs in the 3D domain, and (iii) the calibration strategies employed to guarantee accurate co-registration of these data modalities.

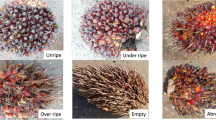

High-resolution RGB images (8688 × 5792 pixels) were collected using a 50 MP Sony IMX766V sensor. Each image typically contains multiple FFBs, some of which are partially occluded by fronds or overlapping bunches. The instance segmentation task focused on delineating individual FFBs to support binary ripeness classification. The classification of FFB ripeness was conducted based on the standard criteria established by the Malaysian Palm Oil Board (MPOB)14. FFBs were categorized into two classes: ripe and unripe, based on visual characteristics such as color, fruit detachment, and surface texture. Ripe FFBs exhibited a deep orange-to-reddish hue with visible loose fruits around the bunch, indicating optimal oil content. In contrast, unripe FFBs displayed a predominantly dark purple-to-black coloration with minimal or no loose fruit detachment. The classification process was performed by trained plantation experts to ensure consistency with industry standards, and inter-rater agreement was validated to confirm annotation reliability. Agricultural experts, trained according to Malaysian Palm Oil Board guidelines, manually labeled each FFB as “ripe” or “unripe,” ensuring that field-based domain knowledge informed the ground truth annotations. Manual segmentation was performed with specialized annotation software (e.g., CVAT, LabelMe), allowing annotators to create closed polygonal contours with sub-pixel precision. Each polygon \(\Pi \) was stored as an ordered set of two-dimensional coordinates:

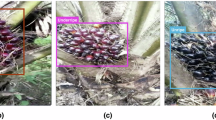

where \(\left({x}_{i},{y}_{i}\right)\) represents the \(i\)-th vertex of the polygon boundary in pixel coordinates. To capture variations in confidence or visibility, annotators appended metadata (including occlusion state and lighting conditions) in the same annotation files. Final annotations were saved in a JSON-based schema that includes the image naming with unique identifier referencing the raw image file, the ripeness labels for ripe and unripe as well as the annotation metadata with polygons coordinates. This flexible data structure supports a wide range of supervised learning frameworks. For instance, models that rely on bounding boxes (e.g., YOLO, Faster R-CNN) can be derived by computing minimal bounding rectangles from the polygons, whereas semantic or instance segmentation networks (e.g., Mask R-CNN) can use the polygons directly. Example RGB images and their corresponding annotation are shown in Fig. 2, enabling dataset users to verify and replicate the segmentation pipeline.

While ripeness determination largely depends on color and texture, spatial localization benefits from three-dimensional information. An Intel RealSense D455f camera captured 400 depth images (1280 × 720 pixels) alongside point clouds for each scene. To annotate FFB positions in 3D, a semi-automated workflow was implemented that combines clustering algorithms with expert validation. The Density-Based Spatial Clustering of Applications with Noise (DBSCAN) algorithm served as the primary tool for identifying clusters corresponding to individual FFBs. Given a set of points \(\{{p}_{1,}{p}_{2,\ldots ,}{p}_{N,}\,\}\subset {{\mathbb{R}}}^{3}\), DBSCAN partitions the point cloud into clusters \({C}_{j}\) based on two user-defined parameters: \(\epsilon \)(the neighborhood radius) and \(minPts\) (the minimum number of neighbors to form a dense region). For each point \(p\), the algorithm checks its \(\epsilon \) -neighborhood:

and designates \(p\) as a core point if \({|{N}}_{\epsilon }\left(p\right)|\ge {\min }{Pts}\). Points that do not meet this criterion but lie within the neighborhood of a core point are labeled as reachable and thus belong to the same cluster. Points not assigned to any cluster are classified as noise. In practical terms, DBSCAN is well-suited for plantation data because FFB clusters typically exhibit high local density, whereas foliage and background often remain sparser or form separate structures.

Parameter tuning was performed through iterative experimentation, employing cluster quality metrics such as the silhouette coefficient \(s\) for each cluster assignment:

where \(a\) is the average intra-cluster distance (to points within the same cluster) and \(b\) is the average nearest-cluster distance (to the closest external cluster). Optimal \(\epsilon \) and \(min{Pts}\) values were selected to maximize cluster separation while minimizing false positives caused by leaves or tree trunks. Following automatic clustering, domain experts inspected each cluster to confirm that it indeed represented a single FFB, merging or splitting clusters as necessary. This hybrid approach, wherein algorithmic speed is complemented by human oversight, allowed rapid processing of large point clouds while preserving accuracy in complex cases (e.g., occluded or overlapping bunches). Each validated cluster \({C}_{j}\) was assigned a unique identifier \({{id}}_{j}\) and annotated with centroid coordinates:

orientation data, and confidence scores, which reflect both the cluster density and expert validation. These annotations were stored in CSV or PLY formats, supplemented by JSON files that map each cluster ID to its spatial attributes and any relevant descriptive metadata (e.g., cluster size, annotation date). A key technical contribution of this dataset is its careful calibration, ensuring that annotations in the RGB images precisely align with the corresponding points in 3D space. Both intrinsic and extrinsic calibration steps were executed. Intrinsic calibration minimized lens distortion for each camera (RGB and depth), using checkerboard patterns captured from multiple vantage points. Extrinsic calibration estimated a rigid transformation \({\rm{{\rm T}}}\in {\rm{SE}}\left(3\right)\) that maps coordinates from the depth camera’s reference frame to that of the RGB camera, ensuring a mean registration error of approximately 1.8 cm at a 3 m distance. This alignment is formally expressed as:

where \({{\rm{{\rm P}}}}_{{depth}}\) and \({{\rm{{\rm P}}}}_{{rgb}}\) are homogeneous coordinate representations of the same 3D point in the depth and RGB frames, respectively. The transformation matrix \({\rm{{\rm T}}}\) was derived by matching known fiducial markers observed in both camera views. Researchers using this dataset may exploit these calibration parameters to project 3D cluster centroids onto RGB images for cross-modal data augmentation, sensor fusion, or multi-view geometry tasks. The mapped point cloud data example is shown in Fig. 3, and the depth maps example shown in Fig. 4.

Data Preprocessing and Quality Assurance

The preprocessing pipeline was developed to enhance data quality while preserving the essential characteristics needed for FFB assessment. For RGB images, this process began with basic calibration adjustments to account for varying illumination conditions. Color normalization was applied with careful consideration to maintain the natural appearance of FFBs, as color characteristics play a crucial role in ripeness assessment. To address variations in RGB images caused by different lighting conditions, a color normalization procedure was applied to ensure data consistency and comparability. White balance correction was implemented using a reference white surface captured under each lighting condition, allowing for adaptive adjustments based on scene illumination. Additionally, a color calibration target with known reflectance values was included in a subset of images to establish a standard reference for color correction. Histogram matching was performed using a master reference image to align color distributions across all samples, reducing discrepancies caused by varying environmental lighting. These preprocessing steps minimize illumination-induced variability, ensuring that ripeness classification remains robust across different lighting conditions. The preprocessing steps were validated through comparative analysis of processed images against reference color standards. Point cloud preprocessing focuses on optimizing the spatial data for subsequent analysis while maintaining geometric accuracy. Initial filtering removed noise points and outliers that could affect the accuracy of FFB localization. The point clouds underwent density normalization to ensure consistent spatial representation across the dataset, facilitating more reliable automated analysis. Ground plane removal was implemented to isolate elevated FFB clusters, improving the efficiency of subsequent processing steps. Quality assurance measures were implemented throughout the data collection and processing pipeline. Regular calibration checks were performed on both RGB and depth sensing equipment to maintain measurement accuracy. Environmental conditions were monitored and recorded to provide context for data quality assessment.

Data Records

The dataset is available at Figshare19, with this section being the primary source of information on the availability and content of the data being described. The dataset has been structured to facilitate accessibility and usability while maintaining data integrity. All data files are stored in standardized formats and organized according to a hierarchical structure that reflects the relationships between different data modalities. The RGB image collection comprises high-resolution images stored in uncompressed format to preserve image quality. Each image is accompanied by corresponding annotation files containing instance segmentation masks, ripeness classifications, and relevant metadata. The metadata includes capture conditions, camera parameters, and environmental measurements recorded during data collection. Depth data is stored in standard point cloud formats, accompanied by calibration parameters and transformation matrices necessary for spatial registration with RGB data. Each point cloud file is paired with annotation files containing FFB cluster identifications and spatial coordinates. The organization of depth data maintains the temporal and spatial relationships with corresponding RGB images. Supplementary data includes detailed documentation of collection protocols, annotation guidelines, and validation results. Environmental measurements and site characteristics are recorded in structured formats, providing context for data interpretation and analysis. The dataset architecture follows a standardized hierarchical organization optimized for scientific reproducibility and accessibility. The main branch maintains the current stable version, while development branches facilitate ongoing data refinement and additions. The primary repository structure, detailed in Table 4, encompasses distinct directories for data, documentation, and processing implementations.

Technical Validation

The validation of this dataset was conducted through systematic quality control procedures focusing on data consistency, expert verification, and basic technical measurements. The validation process encompassed three main aspects: image quality assessment, depth data verification, and annotation reliability.

Image quality validation

Image quality was assessed through systematic visual inspection and basic quantitative measurements. Each RGB image was evaluated for focus quality, proper exposure, and absence of motion blur. Images were captured during optimal daylight conditions (between 0700–1900 hours) to ensure adequate illumination. The camera settings were standardized across all collection sessions, with automatic exposure compensation permitted within predetermined limits to handle varying illumination conditions. Table 5 presents the basic quality metrics used for image validation.

Depth data validation

Depth data validation was performed using basic measurement tools and visual inspection as tabulated in Table 6. A standard measuring tape was used to verify physical distances between the camera and FFB samples, with measurements taken at the time of capture. The depth sensor’s performance was verified through comparison of these physical measurements with the captured depth data. The validation process focused on ensuring consistent depth measurement across the operating range commonly encountered in plantation environments.

Annotation reliability

The reliability of FFB ripeness classification was established through a consensus-based approach involving three experienced plantation inspectors. Each inspector independently classified the FFBs as ripe or unripe based on standard industry criteria. Cases where disagreement occurred were resolved through group discussion and consensus. The process was documented and validated through repeated assessments of randomly selected samples as tabulated in Table 7.

Environmental context validation

Environmental conditions during data collection were recorded using standard plantation weather monitoring equipment. Basic parameters such as time of day, weather conditions (sunny, cloudy, or overcast), and general environmental observations were documented for each capture session shown in Table 8. This information was used to validate the representativeness of the collected data across typical plantation conditions. Variations in natural lighting and weather conditions were observed to primarily affect ripeness classification results, as changes in illumination altered the perceived color and texture of FFBs, and fluctuating weather conditions impacted sensor performance. These environmental factors introduced variability in sensor exposure, contrast, and overall image quality, thereby increasing uncertainty in automated ripeness classification. Comprehensive calibration protocols and standardized data acquisition methods were implemented during data collection to mitigate these effects. This variability underscores the necessity of considering such factors in both dataset design and analysis, ultimately resulting in a dataset that demonstrates enhanced robustness and generalizability compared to existing datasets limited to controlled conditions.

These validation procedures, while basic, ensure the dataset’s reliability for its intended purpose of supporting FFB ripeness classification and localization research. The methods employed represent practical approaches that can be readily replicated by other researchers in similar settings.

Code availability

The code for image capturing and processing has been released along with the dataset and can be accessed through our Figshare19 dataset link. The primary data processing pipeline was implemented in Python (version 3.8 or later), utilizing standard scientific computing libraries including NumPy (1.21.0), OpenCV (4.5.3), and Open3D (0.13.0) for image and point cloud processing. The implementation provides essential functionality for preprocessing both RGB and depth data, supporting standard data formats (PNG for images, PLY for point clouds). Image processing routines handle color correction, noise reduction, and format standardization, while point cloud processing includes basic filtering and registration capabilities. The codebase also includes tools for verifying data integrity through hash checking and automated validation routines. Git Large File Storage (LFS) is utilized for managing large data files, with documentation provided for repository cloning and data synchronization. The source code has been tested on Ubuntu 20.04 operating systems to ensure broad compatibility. System requirements and installation instructions are detailed in the repository’s documentation.

References

United States Department of Agriculture. Oilseeds: World Markets and Trade. https://public.govdelivery.com/accounts/USDAFAS/subscriber/new (2023).

United States Department of Agriculture. World Agricultural Production. (2023).

Anggi Aprilia Palupi, Ahmad Ramadhan Siregar, Mahyuddin & Sitti Nurani Sirajuddin. Supply Chain Analysis Fresh Fruit Bunches Of Palm Oil. Adv Environ Biol 13, 19–22 (2019).

Malaysia Palm Oil Board. Overview of the Malaysian Oil Palm Industry 2022 Foreword. (2022).

Indonesian Palm Oil Association. G20 - Indonesia Palm Oil Facts. https://www.indonesiapalmoilfacts.com/g20/.

Malaysian Palm Oil Council. The Oil Palm Tree. https://mpoc.org.my/the-oil-palm-tree/.

Grand View Research. Palm Oil Market Size, Share & Trends Analysis Report, 2030. https://www.grandviewresearch.com/industry-analysis/palm-oil-market#.

Goh, J. Y., Md Yunos, Y. & Mohamed Ali, M. S. Synthetic Data Generation for Fresh Fruit Bunch Ripeness Classification. in Communications in Computer and Information Science 276–288, https://doi.org/10.1007/978-981-99-7240-1_22 (2024).

Palm Oil - Our World in Data. https://ourworldindata.org/palm-oil#article-citation.

Kushairi, A. et al. Oil Palm Economic Performance in Malaysia and R&D Progress in 2017. Journal of Oil Palm Research 30 163–195, https://doi.org/10.21894/jopr.2018.0030 (2018).

Lai, J. W., Ramli, H. R., Ismail, L. I. & Wan Hasan, W. Z. Oil Palm Fresh Fruit Bunch Ripeness Detection Methods: A Systematic Review. Agriculture (Switzerland), 13(1), 156, https://doi.org/10.3390/agriculture13010156 (2023).

Sunilkumar, K. & Sparjan Babu, D. S. Surface color based prediction of oil content in oil palm (Elaeis guineensis Jacq.) fresh fruit bunch. African Journal of Agricultural Research 8 (2013).

Ibrahim, Z., Sabri, N. & Isa, D. Palm Oil Fresh Fruit Bunch Ripeness Grading Recognition Using Convolutional Neural Network. Journal of Telecommunication, Electronics and Computer Engineering 10 (2018).

Goh, J. Y., Md Yunos, Y. & Mohamed Ali, M. S. Fresh Fruit Bunch Ripeness Classification Methods: A Review. Food Bioproc Tech https://doi.org/10.1007/s11947-024-03483-0 (2024).

Khan, N., Kamaruddin, M. A., Sheikh, U. U., Yusup, Y. & Bakht, M. P. Oil palm and machine learning: Reviewing one decade of ideas, innovations, applications, and gaps. Agriculture (Switzerland), 11(9), 832, https://doi.org/10.3390/agriculture11090832 (2021).

Ishak, W., Ismail, W. & Razali, M. H. Hue Optical Properties to Model Oil Palm Fresh Fruit Bunches Maturity Index. in The 14th World Multi-Conference on Systemics, Cybernetics and Informatics (2010).

Hong, T. S., Hanim Hashim, F., Raj, T. & Huddin, A. B. Classification of Oil Palm Fruit Ripeness Using Artificial Neural Network. 2021 IEEE International Conference on Automatic Control and Intelligent Systems, I2CACIS 2021 - Proceedings 358–363, https://doi.org/10.1109/I2CACIS52118.2021.9495857 (2021).

Sabri, N., Ibrahim, Z., Syahlan, S., Jamil, N. & Mangshor, N. N. A. Palm Oil Fresh Fruit Bunch Ripeness Grading Identification Using Color Features. Journal of Fundamental and Applied Sciences 9, 563–579 (2017).

Goh, J. Y. oilpalm-ffb-dataset. https://doi.org/10.6084/m9.figshare.28574489.v2 (2025).

Acknowledgements

APC funding provided by the Qatar National Library and Qatar University. This work was partially supported by Qatar University under International Research Collaboration Co-Fund (IRCC-700), Ministry of Higher Education under Fundamental Research Grant Scheme FRGS/1/2022/TK07/UTM/02/42 and Universiti Teknologi Malaysia under UTM Fundamental Research Grant Q.J130000.3823.22H55.

Author information

Authors and Affiliations

Contributions

Jin Yu Goh performed conceptualization, investigation, analysis, manuscript writing, editing and validation. Mohamed Sultan Mohamed Ali performed supervision, conceptualization, manuscript writing, review, editing, methodology, investigation, analysis, validation, and funding acquisition. Yusri Md Yunos contributed to analysi’s, validation, and review. Usman Ullah Sheikh contributed to analysis, validation, editing, and review. Muhammad Salman Khan contributed to analysis, validation, editing, and review.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Goh, J.Y., Mohamed Ali, M.S., Md Yunos, Y. et al. Outdoor RGB and Point Cloud Depth Dataset for Palm Oil Fresh Fruit Bunch Ripeness Classification and Localization. Sci Data 12, 687 (2025). https://doi.org/10.1038/s41597-025-04953-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41597-025-04953-6