Abstract

For finely representation of complex reservoir units, higher computing overburden and lower spatial resolution are limited to traditional stochastic simulation. Therefore, based on Generative Adversarial Networks (GANs), spatial distribution patterns of regional variables can be reproduced through high-order statistical fitting. However, parameters of GANs cannot be optimized under insufficient training samples. Also, a higher computing consumption and overfitting issue easily occurred by stacking Convolutional Neural Networks (CNNs). Therefore, a hybrid framework combined with octave convolution and multi-stage GAN (OctSinGAN) is proposed to perform reservoir simulation. Specifically, a pyramid structure is introduced to perform multiscale representation based on single Training Image (TI), with feature information being captured under different scales. Then, the octave convolution is used to perform multi-frequency feature representation on different feature maps. Finally, a joint loss function is defined to optimize network parameters to improve simulation qualities. Three different kinds of TIs are used to verify the simulation performance of OctSinGAN. Results show that different simulations are similar to the corresponding TIs in terms of spatial variability, channel connectivity and spatial structures, with a relatively high simulation performance overall.

Similar content being viewed by others

Introduction

Geological reservoir models usually represent spatial distribution patterns of different physical parameters, with better characterization of internal heterogeneity and anisotropy, which plays an important role for reservoir exploration, numerical simulation, and underground visualization1. Generally speaking, high-quality reservoir units usually develop in various sedimentary microfacies, with complex geological environments and structures. When the real observation data is limited, construction of reservoir units and heterogeneity characterization is always impacted, with a relatively low modeling efficiency, which is difficult to reflect the geological backgrounds and regularities accurately2,3. Therefore, confronting with complex geological settings, 3D reservoir structural-attribute visualization and heterogeneity characterization have been a hot issue in the fields of reservoir exploration and development3,4.

Reservoir modeling can be divided into deterministic and stochastic. For the former one, based on real observations, attribute information within undetermined regions can be inferred by various interpolation algorithms (such as Kriging interpolation)5,6. However, it cannot better characterize the complex reservoir units, and modeling uncertainties cannot be overlooked due to biases among observation data, mathematical logics and geological cognition7,8. Therefore, stochastic simulation has been introduced into different application scenarios. Based on geological conceptual models and prior knowledges, spatial distributions of regional variables can be better presented through random function, with further decision making and risk assessment being performed among multiple simulations9,10. As a mainstream stochastic simulation, Multiple-Point Statistics (MPS) has been paid wide attention for complex reservoir simulation11,12,13. For instance, a multiscale representation in Fourier space was integrated into the cross-correlation-based simulation algorithm and geological spatial patterns under different scales can be fully considered, with computational efficiencies being improved14. Bai and Mariethoz15 integrated edge-based two-stage strategy into Direct Sampling (DS) algorithm, which can improve the simulation process and modeling quality simultaneously. Levy et al.16 integrated both direct geological observation and indirect geophysical data into MPS so that intricate spatial patterns and heterogeneity of subsurface can be better reproduced. During MPS simulation, however, corresponding parameters cannot be stored in RAM and needs to be calculated repeatedly, with relatively low computing efficiencies for large-scale, successively modeling process. Furthermore, traditional MPS are performed based on the assumption of stationarity, which is not suitable for the reservoir units with relatively significant nonstationary patterns17. Therefore, some new techniques should be developed to better construct and characterize reservoir units under complex geological backgrounds.

Reservoir modeling can be viewed as a generation task, therefore, a series of deep generative models, such as Variational Autoencoder (VAE)18, Generative Adversarial Networks (GANs)19 and Recurrent Neural Networks (RNN)20, can be introduced to finely characterize distribution patterns of physical parameters, thereby accelerating the reservoir modeling efficiency and quality21,22,23. Among them, due to adversarial learning strategy, high-order statistical information of training samples can be better fit by GANs, and based on different geological conditioning data, spatial distribution patterns of reservoir physical parameters can be reproduced in 3D domains effectively24,25,26. For instance, a hybrid framework combined with GANs and self-attention mechanism, named SA-RelayGANs, was proposed by Cui et al.27. The involved two sub-modules can achieve the reconstruction of both heterogeneous structure and attribute information respectively, with better simulation performance for nonstationary delta models. Fan et al.28 introduced the BicycleGAN framework to perform continuous reservoir simulation under conditioning data constraints, with reconstruction diversities being ensured based on bijective consistency29. Similarly, Li et al.30 integrated both dictionary learning and diversity preservation strategy into BicycleGAN to further improve simulation diversities. It can be used for super-resolution reconstruction of porous media, and connectivity of pore spaces under different scales is preserved effectively. Referring to the advantages of Progressive Growing GAN (PGGAN)31, GANSim3D32 is successfully used in karst cave modeling, and all the simulations are better conformed with various geological constraints.

However, some shortcomings for the current GAN-based modeling approaches cannot be overlooked. Firstly, simulation performance of GANs strongly rely on the amount of training samples. For reservoir modeling fields, these training samples refer to the physical parameter distribution patterns, which can be regarded as the geological prior knowledge expression. But such prior knowledge is difficult to be quantified in real application scenarios. Although data augmentation can overcome the insufficiency of prior knowledge expression, corresponding latent distributions might be impacted, with modeling uncertainties and complexities being increased. Then, most of GANs rely on Convolutional Neural Network (CNN), when the number of stacking CNN layers is too deep, larger GPU computing consumption and overfitting issues might easily occur. Finally, training instability and mode collapse are common for most of GANs due to the unreasonable similarity metrics between generative and real distributions. Based on limited geological prior knowledge, a light-weighted GAN framework with stable training pattern should be designed to achieve the finely reservoir simulation.

Therefore, a multi-stage GAN integrated with octave convolution, named OctSinGAN, is proposed in this paper. Specifically, the pyramid structure involved in OctSinGAN can perform multiscale representation based on single TI. By sequentially connecting networks, corresponding simulations under different scales can be obtained. Also, instead of traditional CNN, an octave convolution will be mainly introduced to avoid higher computing consumption. Finally, a joint loss function combined with both adversarial and reconstruction loss is defined to optimize network parameters so that training stability and optimum simulation structures can be ensured. Three different kinds of 3D Training Images (TIs) are mainly used to verify the simulation performance of OctSinGAN, thereby providing theoretical support for stochastic reservoir modeling techniques.

The rest of the paper is organized as follows: Section “Methodologies” mainly introduces the OctSinGAN network structure, theory behind octave convolution, joint loss function definition and simulation process based on OctSinGAN. Section “Numerical simulation examples” includes three reservoir simulation examples and corresponding qualitative analysis, with conclusions and further outlooks being given in Section “Discussion and conclusion”.

Methodologies

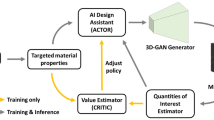

The flow chart of the experiment is shown in Fig. 1, which includes 3 parts: multiscale representation based on pyramid structure, OctSinGAN optimization and simulation performance evaluation. Specific instructions will be given as follows.

Network architecture of OctSinGAN

OctSinGAN is improved based on single-image GAN (SinGAN)33 (Fig. 2). Specifically, a single TI can be downsampled into different scales through pyramid structure. Assume the length, width and height of 3D TI are \(\:L\), \(\:W\) and \(\:H\) respectively, and the total training stage is \(\:n\). For both \(\:i\)th \(\:\left(i=1,\dots\:,n-1\right)\) and \(\:n\)th training stage, the size of corresponding TIs can be defined as \(\:{y}_{i}=\left({L}_{i},\:{W}_{i},\:{H}_{i}\right)\) and \(\:{y}_{n}=\left(L,\:W,\:H\right)\) respectively (for the final training stage, the size of simulations are equal to the ones of TI). Assuming \(\:{L}_{s}\), \(\:{W}_{s}\) and \(\:{H}_{s}\) are pre-defined, for each training stage, corresponding TI size can be defined as:

where \(\:m\) can be written as:

where \(\:min\left(\cdot\:\right)\) and \(\:max\left(\cdot\:\right)\) are used to calculate the minimum and maximum value of variables inside brackets respectively, \(\:m\) and \(\:\delta\:\) are the scaling factors that control the size of simulations during different training stages. Therefore, global nonstationary patterns and structural information can be initially captured under small scale and at this moment, distribution of regional variables can be approximately memorized by OctSinGAN, thereby a small-scale 3D reservoir cube with low-resolution are constructed. With scale increasing, the detailed information, such as channel’s geometric shape, width and tendencies in 3D reservoir domain, will be gradually restored and spatial resolution will be gradually improved, thereby reservoir models can be finely characterized.

Multiple Generators (\(\:G\)s) and Discriminators (\(\:D\)s) are included in OctSinGAN, which can achieve reservoir multiscale reconstruction through connecting mutliple \(\:G\)s sequentially. Octave convolution layers are mainly used in \(\:G\), and comparing with traditional CNN, it can reduce GPU computational resources during parameter optimization. Especially when the network layers are deeply stacked, mode collapse can be avoided effectively. Theory of octave convolution will be illustrated in the next chapter. Meanwhile, PatchGAN34 is mainly considered in \(\:D\), which is composed of multiple CNN layers following by activation function, and corresponding output is a matrix contained different scalar. Comparing with single scalar output of \(\:D\) in traditional GAN, such scalar field can be denoted as an implicit Markov random field, in which each scalar represents the corresponding discriminative results at local patch, with closer correlation between two adjacent ones. Also, for various \(\:D\)s in each training stage, the size of corresponding input and output are different with each other. Therefore, feature information among different patches can be extracted to perform discrimination, with better assistance for reservoir multiscale reconstruction.

Based on multiscale representations, the training process of OctSinGAN is shown as follows: for the first training stage, a random noise \(\:{z}_{1}\) is input into \(\:{G}_{1}\) to obtain the corresponding reconstructions \(\:{\stackrel{\sim}{x}}_{1}\). Then, both \(\:{\stackrel{\sim}{x}}_{1}\) and reference \(\:{x}_{1}\) are input into \(\:{D}_{1}\) to perform discrimination. Network parameters for both \(\:{G}_{1}\) and \(\:{D}_{1}\) are optimized simultaneously by stochastic gradient descent and backpropagation until the loss function is converged in a certain range. When the first training stage is finished, \(\:{\stackrel{\sim}{x}}_{1}\) will be up-sampled as \(\:{\stackrel{\sim}{x}}_{1}\uparrow\:\), which will be combined with random noise \(\:{z}_{2}\) and input into \(\:{G}_{2}\) to get the reconstruction in the second training stage \(\:{\stackrel{\sim}{x}}_{2}\). Meanwhile, discrimination is performed by \(\:{D}_{2}\) through inputting both \(\:{\stackrel{\sim}{x}}_{2}\) and \(\:{x}_{2}\). After repeating many times, until \(\:n\)th training stage, \(\:{\stackrel{\sim}{x}}_{n-1}\uparrow\:\) obtained from \(\:\left(n-1\right)\)th training stage will be combined with \(\:{z}_{n}\) and input into \(\:{G}_{n}\) so that reconstructions for final training stage keep the same size as the ones of original TI.

Theory of octave convolution

Information involved in 3D TI is always conveyed at different frequency levels. Among them, higher frequencies refer to the rapidly changing components in 3D domains, with detailed information being reflected, while lower frequencies represent the smoothly variation structures, which mainly focuses on the global information extraction. Though the input and output feature maps share the same spatial resolution in vanilla convolution, spatially redundant for some low-frequency information is not essential, which can be further compressed to improve calculation performances35,36. Therefore, in this paper, octave convolution is mainly introduced, and the involved multi-frequency feature representation can store high- and low-frequency information into different groups. Through sharing and updating feature information among these groups, both spatial resolution of low-frequency feature maps and spatial redundancies can be reduced. Below we detail the theory of octave convolution.

Assume feature tensor of convolutional layer \(\:X\in\:{\mathbb{R}}^{c\times\:l\times\:w\times\:h}\), where \(\:c\) denotes the number of channels, \(\:l\), \(\:w\) and \(\:h\) denotes the length, width, and height of \(\:X\) respectively. Explicitly factorizing \(\:X\) along channel directions \(\:X=\left\{{X}^{H},{X}^{L}\right\}\), where \(\:{X}^{H}\) and \(\:{X}^{L}\) are the high-frequency and low-frequency feature maps, with fine details and global structures being encoded respectively. Meanwhile, the number of channels with ratio \(\:\alpha\:\in\:\left[\text{0,1}\right]\) is assigned to low-frequency part, thus both \(\:{X}^{H}\) and \(\:{X}^{L}\) can be rewritten as \(\:{X}^{H}\in\:{\mathbb{R}}^{\left(1-\alpha\:\right)l\times\:w\times\:h}\) and \(\:{X}^{L}\in\:{\mathbb{R}}^{\alpha\:c\times\:\frac{l}{2}\times\:\frac{w}{2}\times\:\frac{h}{2}}\) respectively, which are used as the octave convolution process. Let \(\:X\) and \(\:Y\) be the input and output tensors after factorizing, then \(\:Y=\left\{{Y}^{H},{Y}^{L}\right\}\) can be denoted as:

where \(\:{Y}^{A\to\:B}\) represents the feature maps being updated from group \(\:A\) to group \(\:B\) through convolutional operator, \(\:{Y}^{H\to\:H}\) and \(\:{Y}^{L\to\:L}\) are intra-frequency update, \(\:{Y}^{H\to\:L}\) and \(\:{Y}^{L\to\:H}\) are inter-frequency communication. For calculating these terms, a convolutional kernel \(\:W\) with size of \(\:k\times\:k\times\:k\) will be divided into \(\:{W}^{H}\) and \(\:{W}^{L}\) two parts, which are used for convolving with \(\:{X}^{H}\) and \(\:{X}^{L}\) respectively. For \(\:{W}^{H}\), it can be divided into \(\:{W}^{H\to\:H}\) and \(\:{W}^{L\to\:H}\), which is written as: \(\:{W}^{H}=\left[{W}^{H\to\:H},{W}^{L\to\:H}\right]\). Similarly, \(\:{W}^{L}\) can be written as \(\:{W}^{L}=\left[{W}^{L\to\:L},{W}^{H\to\:L}\right]\). Then, for high-frequency feature maps, regular convolution is used at location \(\:\left(p,q\right)\) to perform intra-frequency update. For inner-frequency communication, the up-sampled \(\:{X}^{L}\) will be folded into convolution. The whole process can be summarized as:

where \(\left\lfloor *\right\rfloor\) is the floor operation. From Eqs. (6)-(9), for low-frequency feature maps, intra-frequency update is performed through regular convolution, and after downsampling, \(\:{X}^{H}\) will be folded into convolution, which can be formulated as:

Since the index of \(\:{X}^{H}\) must be the integer type, so corresponding value can be estimated as \(\:\left(2*p+i,2*q+i\right)\), which is similar to the stride convolution, or calculating averages of 4 adjacent locations as \(\:\left(2*p+0.5+i,2*q+0.5+i\right)\), which can be regarded as average pooling. Comparing with stride convolution, misalignments during information fusion under different scales can be relatively alleviated through average pooling, with relatively high calculating accuracy. In summary, the whole process of octave convolution is shown in Fig. 3, with mathematical formulation writing as follows:

Schematic diagram of octave convolution process: (a) The octave convolution kernel are divided into 4 parts, which are used in different frequency domains; (b) multi-frequency feature representation, including both feature information update (green arrows) within same frequencies and exchange between two frequencies (red arrows).

where \(\:f\left(X;W\right)\) is the convolving process with a set of parameters \(\:W\), \(\:pool\left(X,k\right)\) is the average pooling with kernel size \(\:k\times\:k\times\:k\) and stride \(\:k\), \(\:upsample\left(X,k\right)\) is the up-sampling operator with scale factor \(\:k\), and trilinear interpolation is mainly used for 3D tensors. Therefore, through enlarging receptive fields of octave convolution, both high- and low-frequency feature information in 3D domains can be processed to perform multi-frequency communication, with both pattern recognition and contextual caption being significantly improved. Meanwhile, spatial redundancy can be alleviated under the influence of multi-frequency feature representation. Therefore, instead of CNN, octave convolution can be integrated into GANs to reduce computing overburden and improve simulation quality simultaneously.

Joint loss function definition and learning rate adjustment

A joint loss function is defined to optimize the parameters of OctSinGAN, which can be written as:

where \(\:{\mathcal{L}}_{adv}\) and \(\:{\mathcal{L}}_{rec}\) denote the adversarial loss and reconstruction loss respectively, \(\:\alpha\:\) is the coefficient term of \(\:{\mathcal{L}}_{rec}\). For \(\:{\mathcal{L}}_{adv}\), Wasserstein GAN with gradient penalty37,38 is mainly considered. For each training stage, discrepancies between generative and real distributions can be estimated effectively through Wasserstein distance, and gradients can be better preserved due to its smoothing properties, with mode collapse being avoided. Meanwhile, gradient penalty term can make loss functions conform with 1-Lipschitz constraints, which can ensure the training stability and correlation between reconstructions and TIs39. \(\:{\mathcal{L}}_{adv}\) can be defined as:

where \(\:{x}_{i}\) represents the TI in \(\:i\)th training stage, \(\:{\stackrel{\sim}{x}}_{i}\) is the corresponding simulations. \(\:{P}_{*}\) is the probability distribution, and \(\:{E}_{*}\) is the mathematical expectation. \(\:{\lambda\:}_{gp}\) is the coefficient of gradient penalty term, \(\:\nabla\:\) is the gradient calculator. \(\:\widehat{x}\) is a mixed tensor deriving from the element-wise addition of both real and generative data with a certain proportion. \(\:{z}_{i}\) is the random noise, and \(\:\uparrow\:\) is the up-sampling process.

Meanwhile, mean square error is introduced into \(\:{\mathcal{L}}_{rec}\) to ensure the optimal reconstruction structures, which can be written as:

Furthermore, learning rate (\(\:lr\)) is crucial for the simulation performance of OctSinGAN. In order to avoiding overfitting issues during simulation, a linear decay strategy is mainly performed on \(\:lr\) in each training stage, which can be defined as:

where \(\:{\theta\:}_{n}\) is the pre-defined value, \(\:T\) is the threshold related to the training times \(\:epoch\), and \(\:\mu\:\) is the scaling factor. Therefore, through the joint loss function and \(\:lr\) dynamic adjustment, network parameters in various training stages can be fully optimized, thereby further improving the simulation performance of OctSinGAN.

Training process of OctSinGAN

Algorithm 1 shows the stochastic reservoir simulation based on OctSinGAN, which mainly includes 4 steps:

-

1.

Multiscale representation based on pyramid structure: input original TI into pyramid structure to perform multiscale representation. TIs with different scales can be used for OctSinGAN training.

-

2.

OctSinGAN initialization: multiple \(\:G\)s and \(\:D\)s are all initialized for different training stages, with some hyperparameters being pre-defined.

-

3.

OctSinGAN training: for each training stage, referring to Eq. (14), corresponding network parameters for both \(\:G\) and \(\:D\) are optimized by using stochastic gradient descent and backpropagation. All the \(\:G\)s and \(\:D\)s in OctSinGAN needs to be saved when the whole processes are finished.

-

4.

Simulation performance evaluation: All the saved \(\:G\)s are loaded and concatenated sequentially. By inputting a Gaussian random noise to obtain multiscale reconstructions so that simulation performance of OctSinGAN can be evaluated. If simulation performance is better enough, then the saved OctSinGAN can be utilized to construct reservoir models repeatedly. Otherwise, OctSinGAN should be trained once again.

Numerical simulation examples

Three different kinds of 3D TIs were selected from the open-source geo-modeling libraries (https://github.com/GAIA-UNIL/trainingimages) to verify the simulation performance of OctSinGAN. Meanwhile, variogram function, connectivity function40 and Multiscale Sliced-Wasserstein Distance with Multi-Dimensional Scaling (MS-SWD-MDS) metrics32,41 were used to perform qualitative evaluation in terms of spatial variability, channel connectivity and spatial structures respectively. These experiments ran with Python 3.9 and PyTorch 1.10.1 on the PyCharm IDE, which deployed on a workstation with Intel Xeon Gold 5117 CPU with 64GB RAM and a NVIDIA GeForce RTX 3090 GPU.

Training images and hyperparameter settings

The original 3D TIs is shown in Fig. 4. Among them, Fig. 4a denotes the aquifer structural model. Subsurface aquifers have a relatively better sealing and permeability, which is important for resources accumulation and natural gas storage construction. Figure 4b is the Berea sandstone. As is a typical pores media, heterogeneous pore space involved in sandstone can provide better conditions for oil-gas migration and accumulation. Figure 4c is the sedimentary delta system with a relatively high porosity and permeability. It is an important media for oil and gas generation, which is significant for revealing regional paleogeography and paleogeomorphology.

Meanwhile, hyperparameter settings directly impact the OctSinGAN simulation performance. As is shown in Table 1, some important hyperparameters needs to be pre-defined before network training, which includes: TI size of initial training stage (\(\:min\_size\)), total training times (\(\:num\_train\)), number layer of octave convolution (\(\:{Oct}_{layer}\)), learning rate for each training stage (\(\:lr\)), coefficient of both gradient penalty term (\(\:{\lambda\:}_{gp}\)) and reconstruction loss term (\(\:\alpha\:\)), times for single training stage (\(\:epoch\)) and optimizer categories. Among them, both \(\:min\_size\) and \(\:num\_train\) are closely related to the TI scales, which directly impact the final simulation quality. Referring to the parameter sensitive analysis proposed by Fan et al.22, if the \(\:min\_size\) is too large, complex distribution patterns in reservoir domain cannot be extracted effectively. Similarly, setting for \(\:num\_train\) cannot be too large or small, otherwise, poor simulation performance or overfitting issues might be existed. Therefore, these hyperparameters needs to be limited in a suitable value interval after trial-and-error repeatedly.

Reconstructions for 3D aquifer structural model

For 3D aquifer structural model, after network training, 6 simulation results were randomly generated, with both entire structures and attribute filter results being shown in Fig. 5. As is seen, both geometric shape and trends of aquifer among different simulations are similar with the ones of TI. Also, from corresponding filter visualizations, distribution patterns of heterogeneous aquifers are more continuous at different dimensions, without any noise or artifacts being existed in 3D reservoir domains. It indicates that heterogeneity of reservoir physical parameters can be effectively reproduced by OctSinGAN.

Variogram function, connectivity function and MS-SWD-MDS analytical results are created between 20 different simulations and TI. Among them, variogram and connectivity functions can characterize the spatial variability and channel connectivity of regional variables respectively. For MS-SWD-MDS analysis, both simulations and corresponding references will be input into Laplacian pyramid to perform multiscale representation. Then, patches with different scale will be randomly extracted and normalized so that Wasserstein distance between two groups of data can be calculated, which will be mapped into 2D space through MDS to further compare corresponding discrepancies. Qualitative assessment based on aquifer structural model simulations are shown from Figs. 6, 7 and 8:

In Fig. 6, variogram function curves for different simulations are closely distributed around reference line. When the lag distance is relatively small, spatial variability increased significantly then it gradually stabilized at a large lag distance value, with stationary patterns being presented in the reservoir domain. In Fig. 7, along \(\:x\)-directions, multiple point connectivity probability are decreased gradually with lag distances increasing, while in \(\:y\)-directions, channel connectivity degrees are slightly fluctuated at middle lag distance values. All the simulated curves resemble with the reference line. In Fig. 8, all the red points are distributed within the range of \(\:\left[-\text{400,400}\right]\) and they are located within the contour regions. It indicates that these patches randomly extracted from various simulations are very similar with the reference ones, with simulation diversities being preserved overall. In summary, OctSinGAN is suitable for the heterogeneous aquifer model reconstruction, and each simulation is similar with the reference ones in terms of spatial variability, channel connectivity and spatial structures.

Reconstructions for 3D Berea sandstone

Berea sandstone reconstructions based on OctSinGAN is shown in Fig. 9. Specifically, distribution pattern of pore spaces for each simulation is similar to the ones of original TI. Pore spaces connectivity is relatively higher, and only small parts of independent pore spaces are randomly distributed in 3D reservoir domain. It indicates that for the Berea sandstone, OctSinGAN can effectively reproduce the internal heterogeneous characteristics. 20 simulation results were randomly selected to perform variogram function, connectivity function and MS-SWD-MDS analysis, which are shown from Figs. 10, 11 and 12:

In Fig. 10, the trend of variogram function for each simulation is similar to the reference line, and when the lag distance increases, spatial variabilities are fluctuated within the range of \(\:\left[\text{0.14,0.18}\right]\), with relatively stable pattern being presented overall. In Fig. 11, along both \(\:x\)- and \(\:y\)-directions, the connectivity degree is significantly decreased at a small lag distance then it slightly fluctuated within a certain interval at a large lag distance. Curve tendencies for different simulations resemble with the reference ones. As is the red points distribution shown in Fig. 12, the sliced-Wasserstein distances for different simulations have a relatively small discrepancy with the ones of TI, and all of them are included in the contour region \(\:\left[-\text{300,300}\right]\). It indicates that the simulated pore spaces structures resemble with the one of original TI. Therefore, OctSinGAN is appliable for the reconstruction of reservoir models with significant heterogeneity, with complex structural information being effectively reproduced in 3D reservoir domain.

Reconstructions for 3D sedimentary delta

Four 3D sedimentary delta models were randomly constructed by OctSinGAN (Fig. 13). Nonstationary patterns of sedimentary delta can be better reproduced. From the observation of slices along \(\:z\)-direction, the same facies types are concentrated, and the transition for all facies is from distributary channel to mouth bar then to mud, with a relatively higher connectivity degree overall. Statistical information in terms of variogram function, connectivity function and MS-SWD-MDS were also created based on 20 reconstructions, which are shown from Figs. 14, 15, 16 and 17:

In Fig. 14, with lag distances expanding, spatial variabilities for all the simulations and TI are all increased gradually, with significant nonstationary tendencies being presented overall. Along two directions in Fig. 15, the connectivity of mouth bar in various simulations are slightly far away from the reference ones, possibly as a result of an apparent decreasing of facies density with respect to the reference ones. Comparing with the reference value, for all simulation curves in Fig. 16, distributary channel connectivity along \(\:x\)-direction is significantly fluctuated at different lag distances, while for the one along \(\:y\)-direction, it is closer to 0 at a larger lag distance. All the facies connectivity for various simulations are similar with the reference ones. In Fig. 17, all the red points (denoted the patches randomly extracted from the simulations) are closely distributed within \(\:\left[-\text{300,300}\right]\), with a high structural similarity being preserved comparing with TIs. In summary, OctSinGAN is suitable for the sedimentary delta reconstruction, and both complex structural information and nonstationary patterns are better reproduced in 3D reservoir domain.

Reservoir simulation performance comparison

Simulation performance among DS42, Variational AutoEncoder (VAE) and OctSinGAN was also compared in the experiment. Parameter settings for DS simulation are defined in Table 2, and VAE structure is shown in Table 3. Comparisons in terms of modeling quality and efficiency are presented in Fig. 18; Table 4 respectively.

For aquifer model reconstruction, the heterogeneous patterns can be better reproduced by OctSinGAN, while for the reconstruction based on VAE, the aquifer distributions are more disordered, with less significant geometric shapes. And for the reconstruction based on DS, the aquifer structures cannot be reproduced effectively, with too many noises and artifacts. For Berea sandstone simulation, pore spaces constructed by OctSinGAN are more continuous and closer to the ones of TI. For VAE-based simulation, however, such heterogeneities cannot be better reproduced in 3D reservoir domain, and there are too many independent and cracked pore spaces being contained in the DS-based reconstruction, with relatively poor simulation performance. For sedimentary delta model constructed by OctSinGAN, both mouth bar and distributary channel maintain a high continuity degree, with nonstationary patterns being reproduced overall. However, for the models constructed by both VAE and DS, the complex distribution patterns and channel continuity cannot be effectively preserved. From three groups of variogram function evaluations, at different location, spatial variabilities simulated by OctSinGAN is closer to the reference value than the ones simulated by VAE and DS. Both OctSinGAN and VAE can realize automatic reservoir modeling, but from visual appearance, simulation quality of OctSinGAN is higher than the one of VAE, which indicates that OctSinGAN is more suitable for categorical reservoir reconstruction under insufficient geological prior knowledge expression.

Discussion and conclusion

Finely representation of reservoir units plays a significant role for characterizing subsurface structural information, heterogeneity and anisotropy. Comparing with the deterministic modeling, stochastic simulation can quantify the uncertainties of regional variables distribution, and equiprobable reservoir models can be established according to the random function theory, thereby providing further decision-making reservoir exploration. However, traditional stochastic modeling is performed based on the assumption of stationarity, with some limitations and large computing consumption for the reservoir units under nonstationary conditions. Therefore, based on a series of deep generative models, it can overcome these issues and is gradually paid attention in the reservoir modeling fields. Among them, though the GANs-based modeling can achieve reservoir reconstruction by fitting latent distribution of training samples, for the insufficient expression of geological knowledge, training process of GANs is hardly to be supported. Also, a higher GPU computing overburden might be caused by stacking multiple CNN layers, and the whole network structure is more complicated, with mode collapse issues being easily occurred. Therefore, a OctSinGAN framework is proposed to achieve the stochastic reconstruction of reservoir units based on single TI. Specific discussions are given as follows:

-

1.

The pyramid structure involved in OctSinGAN can perform multiscale representation based on original TI, and receptive fields can capture global information under small-scale. With scale increasing, it mainly focuses on local information extraction, and corresponding detailed features can be gradually restored. Therefore, based on multiscale TIs, stochastic reconstruction and finely characterization of reservoir units can be achieved under limited geological prior knowledge expression.

-

2.

SinGAN is constructed through multiple CNN layers, when the network becomes deeper, it might cause bottlenecks in terms of simulation diversity and computing efficiency. Therefore, the octave convolution is introduced to replace CNN. Among them, feature maps under different scales can be divided into low-frequency and high-frequency feature information through multi-frequency feature representation. And spatial resolution of low-frequency feature map is reduced by information exchanging in frequency domain, with spatial redundancies being alleviated effectively, and global information among different frequency maps can be easily captured. In addition, octave convolution can reduce computing overburden during training, with overfitting and mode collapse issues being avoided.

-

3.

Distribution evaluation metric is one of the important factors for network parameter optimization. Therefore, a joint loss function combined with adversarial loss and reconstruction loss is introduced. Specifically, Wasserstein distance is mainly used in adversarial loss to better evaluate discrepancies between generative and real distributions, and gradient penalty regularization term can ensure training stability, with correlations between output modes and TIs being ensured. For reconstruction loss term, mean square error is considered to ensure the facies optimal structures during simulation. Both of two loss terms are combined with different weight, and under learning rate linear decay strategy, network parameters can be fully optimized to improve the simulation performance of OctSinGAN.

Three different kinds of TIs, including aquifer structural model, Berea sandstone model and sedimentary delta model, are selected to verify the geo-modeling performance. Results show that different simulations are similar with corresponding TIs in terms of spatial variability, channel connectivity and spatial structures. Meanwhile, comparing with DS and VAE, OctSinGAN is more suitable for complex reservoir units simulation. Furthermore, for the saved OctSinGAN, different simulation results can be obtained rapidly within a few seconds by inputting random noise with different size once only, thereby realizing automatic reconstruction and finely representation of reservoir units.

It is worth mentioning that reservoir simulation based on OctSinGAN is still lack of conditioning data constraints. And corresponding hyperparameters can hardly be defined without multiple experiments. Therefore, by modifying network structures and fine-tuning hyperparameters adaptively to achieve reservoir conditioning simulation is a key objective in the future research.

Data availability

The analysis datasets during the current study are available from the corresponding author on reasonable request.

References

Schwindt, S. et al. Bayesian Calibration Points to Misconceptions in Three-Dimensional Hydrodynamic Reservoir Modeling. Water Resour. Res. 59(3), eWR033660 (2023). (2022).

Pacina, J., Lenďáková, Z., Štojdl, J., Grygar, M., Dolejš, M. & T. & Dynamics of sediments in reservoir inflows: a case study of the Skalka and nechranice reservoirs, Czech Republic. ISPRS Int. Geo-Inf. 9(4), 258 (2020).

Grana, D., Mukerji, T. & Doyen, P. Seismic Reservoir Modeling: Theory, Examples, and Algorithms (Wiley, 2021).

Jacquemyn, C., Jackson, M. D. & Hampson, G. J. Surface-based geological reservoir modelling using grid-free NURBS curves and surfaces. Math. Geosci. 51, 1–28 (2021).

Schaaf, A. & Bond, C. E. Quantification of uncertainty in 3-D seismic interpretation: implications for deterministic and stochastic geomodeling and machine learning. Solid Earth. 10(4), 1049–1061 (2019).

Li, Y. et al. CSD-RKNN: reverse k nearest neighbors queries with conic section discriminances. Int. J. Geogr. Inf. Sci. 37(10), 2175–2204 (2023).

Caers, J. Modeling Uncertainty in the Earth Sciences (Wiley, 2011).

Refsgaard, J. C. et al. Review of strategies for handling geological uncertainty in groundwater flow and transport modeling. Adv. Water Resour. 36, 36–50 (2012).

Zhang, T. Incorporating geological conceptual models and interpretations into reservoir modeling using multiple-point geostatistics. Earth Sci. Front. 15(1), 26–35 (2008).

Hassan, T., Basal, A. M., Omran, M. A., Mowafy, M. H. & Sarhan, M. A. An advanced workflow to compress the uncertainties of stochastic distribution of Bahariya reservoir properties using 3D static modeling: an example from Heba Oil Fields, Western Desert, Egypt. Petroleum Res. 8(2), 205–216 (2023).

Chen, Q., Mariethoz, G., Liu, G., Comunian, A. & Ma, X. Locality-based 3-D multiple-point statistics reconstruction using 2-D geological cross sections. Hydrol. Earth Syst. Sci. 22(12), 6547–6566 (2018).

Wang, L. et al. A MPS-based novel method of reconstructing 3D reservoir models from 2D images using seismic constraints. J. Pet. Sci. Eng. 209, 109974 (2022).

Chen, Q. et al. pyMPSLib: a robust and scalable open-source Python library for mutiple-point statistical simulation. Earth Sci. Inf. 16, 3179–3190 (2023).

Tahmasebi, P., Sahimi, M. & Caers, J. MS-CCSIM: accelerating pattern-based geostatistical simulation of categorical variables using a multi-scale search in Fourier space. Comput. Geosci. 67, 75–88 (2014).

Bai, H. & Mariethoz, G. A fast edge-based two-stage direct sampling method. Comput. Geosci. 150, 104742 (2021).

Levy, S., Friedli, L., Mariéthoz, G. & Linde, N. Conditioning of multiple-point statistics simulations to indirect geophysical data. Comput. Geosci. 105581 (2024).

Zhang, D., Zhang, H., Ren, Q. & Zhao, X. Multiple-point geostatistical simulation of nonstationary sedimentary facies models based on fuzzy rough sets and spatial-feature method. SPE J. 28(5), 2240–2255 (2023).

Kingma, D. P. & Welling, M. Auto-encoding variational bayes. arXiv Preprint arXiv:13126114. (2013).

Goodfellow, I. et al. Generative adversarial networks. Commun. ACM. 63(11), 139–144 (2020).

Medsker, L. R. & Jain, L. C. Recurrent neural networks. Des. Appl. 5(64–67), 2 (2001).

Yang, Z. et al. Automatic reconstruction method of 3D geological models based on deep convolutional generative adversarial networks. Comput. Geosci. 26(5), 1135–1150 (2022).

Cui, Z., Chen, Q. & Liu, G. A two-stage downscaling hydrological modeling approach via convolutional conditional neural process and geostatistical bias correction. J. Hydrol. 620, 129498 (2023).

Fan, W. et al. Stochastic reconstruction of geological reservoir models based on a concurrent multi-stage U-Net generative adversarial network. Comput. Geosci. 105562 (2024).

Creswell, A. et al. Generative adversarial networks: an overview. IEEE Signal. Process. Mag. 35(1), 53–65 (2018).

Song, S., Mukerji, T. & Hou, J. Geological facies modeling based on progressive growing of generative adversarial networks (GANs). Comput. Geosci. 25, 1251–1273 (2021).

Sun, C., Demyanov, V. & Arnold, D. Geological realism in fluvial facies modelling with GAN under variable depositional conditions. Comput. Geosci. 27(2), 203–221 (2023).

Cui, Z., Chen, Q., Liu, G., Xun, L. & SA-RelayGANs: A Novel Framework for the characterization of complex hydrological structures based on GANs and self‐attention mechanism. Water Resour. Res. 60(1), e2023WR035932 (2024).

Fan, W. et al. Automatic reconstruction of geological reservoir models based on conditioning data constraints and BicycleGAN. Geoenergy Sci. Eng., 212690 (2024).

Zhu, J. Y. et al. Toward multimodal image-to-image translation. Adv. Neural. Inf. Process. Syst., 30 (2017).

Li, Y., Han, G. & Jian, P. 3D reconstruction of unlimited-size real-world porous media by combining a BicycleGAN-based multimodal dictionary and super-dimension reconstruction. Geoenergy Sci. Eng. 212005(2023).

Karras, T., Aila, T., Laine, S. & Lehtinen, J. Progressive growing of gans for improved quality, stability, and variation. arXiv Preprint arXiv :171010196 (2017).

Song, S., Mukerji, T., Hou, J., Zhang, D. & Lyu, X. GANSim-3D for conditional geomodeling: theory and field application. Water Resour. Res. 58(7), eWR031865 (2022). (2021).

Shaham, T. R., Dekel, T., Michaeli, T. & Singan Learning a generative model from a single natural image. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 4570–4580 (2019).

Isola, P., Zhu, J. Y., Zhou, T. & Efros, A. A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 1125–1134 (2017).

Lindeberg, T. Scale-space Theory in Computer Vision Vol. 256 (Springer Science & Business Media, 2013).

Chen, Y. et al. Drop an octave: Reducing spatial redundancy in convolutional neural networks with octave convolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 3435–3444 (2019).

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V. & Courville, A. C. Improved training of Wasserstein Gans. Adv. Neural. Inf. Process. Syst., 30 (2017).

Ye, C. et al. Differential evolution with alternation between steady monopoly and transient competition of mutation strategies. Swarm Evol. Comput. 83, 101403 (2023).

Fan, W. et al. Geological model automatic reconstruction based on conditioning Wasserstein generative adversarial network with gradient penalty. Earth Sci. Inf. 16, 2825–2843 (2023).

Wellmann, F. & Caumon, G. 3-D structural geological models: concepts, methods, and uncertainties. Adv. Geophys. 59, 1–121 (2018).

Tan, X., Tahmasebi, P. & Caers, J. Comparing training-image based algorithms using an analysis of distance. Math. Geosci. 46, 149–169 (2014).

Mariethoz, G., Renard, P. & Straubhaar, J. The direct sampling method to perform multiple-point geostatistical simulations. Water Resour. Res. 46(11) (2010).

Acknowledgements

This work is supported by the Major Research Plan of Hubei Province (2023BAA027), Science and Technology Strategic Prospecting Project of Guizhou Province ([2022]ZD003). Meanwhile, we are grateful to the two anonymous referees for their insightful comments and suggestions towards improving the research enclosed in this paper.

Author information

Authors and Affiliations

Contributions

Xuechao Wu: review, editing, supervision, and funding acquisition. Wenyao Fan: conceptualization, methodology, investigation, formal analysis, software, writing-original draft and editing. Shijie Peng: supervision, investigation, and funding acquisition. Bing Qin: investigation and visualization. Qing Wang: review and editing. Mingjie Li: supervision and investigation; Yang Li: supervision and visualization.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wu, X., Fan, W., Peng, S. et al. Reservoir Stochastic Simulation Based on Octave Convolution and Multistage Generative Adversarial Network. Sci Rep 14, 31618 (2024). https://doi.org/10.1038/s41598-024-80317-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-80317-1

Keywords

This article is cited by

-

Dual Storage-Based Cascade Reservoir Simulation Under Changing Climate: A Case Study on Sunkoshi Hydropower Projects in Nepal

Remote Sensing in Earth Systems Sciences (2025)