Abstract

Based on deep mediatization theory and artificial intelligence (AI) technology, this study explores how Convolutional Neural Network (CNN) technology can improve museums’ social media communication. First, social media content from four different museums is collected, a dataset containing tens of thousands of images is constructed, and a CNN-based model is designed for automatic identification and classification of image content. The model is trained and tested through a series of experiments that evaluate its performance in enhancing museums’ social media communication. Experimental results indicate that the CNN model significantly enhances user participation, content coverage, user retention, and content sharing. Specifically, user participation increased from 15% to 25%, a relative rise of 66.7%. Content coverage increased from 20% to 35%, a relative rise of 75%. User retention rate rose from 10% to 20%, a relative rise of 100%. Content sharing rate increased from 5% to 15%, a relative rise of 200%. Additionally, the study discusses the model’s performance across various museum types, batch sizes, and learning rate settings, verifying its robustness and wide applicability.

Introduction

Research background and motivations

The advancement of new media technology has made digital technology a core component of politics, economy, culture, and society, ushering in the era of deep mediatization1. In this process, all societal elements are closely linked to digital media and its underlying architecture2. Algorithms, data, and artificial intelligence (AI) have become crucial topics in studying the complex relationship between media, daily life, and societal functioning3. Digital technology empowers individuals, intertwining the dynamics of social interaction. The public utilizes social media as a virtual habitat for communication, consumption, performance, and other media behaviors. The communication network constructed by social media, through the optimization and integration of technology, has become the key communication form in the era of deep mediatization4. In this digital media environment, cultural institutions such as museums face unprecedented communication challenges and opportunities. With the rise of social media, the way the public obtains and interacts with cultural content has changed fundamentally. Because museums are important places for cultural inheritance and education, the effectiveness of their social media communication strategies directly affects their social influence and cultural reach. Social media offers museums a new platform to showcase their cultural values and expand their social influence. By optimizing and integrating technology, museums can interact with the public more effectively, attract more young audiences, spread cultural knowledge through social media platforms, and enhance the public’s cultural experience and sense of participation. 
For example, through social media channels such as Weibo, official WeChat accounts and short video platforms, museums can spread knowledge of the cultural relics in their collections and enhance cultural experience and interactive participation. The challenge lies in highlighting unique characteristics amid vast amounts of information and in managing and utilizing extensive digital resources. Social media content is rich and varied, and users’ needs are becoming increasingly personalized and diverse, which sets higher standards for museums’ content creation and promotion strategies. Moreover, real-time interactive feedback on social media requires museums to address public needs and feedback promptly, increasing operational complexity and difficulty.

The development of AI technology, especially in the fields of image recognition, text analysis and multi-label classification, provides new technical means for optimizing and disseminating museum social media content. For example, Omar et al.5 verified the effectiveness of semi-supervised tagging technology in short text classification and sentiment analysis by constructing a standard multi-label Arabic dataset, and found a relationship between topics posted on social media and hate speech. Omar et al.6 compared the performance of machine learning and deep learning algorithms in Arabic hate speech detection, and found that the recurrent neural network (RNN) achieved outstanding accuracy. These studies showed that deep learning technology had significant potential in processing social media content. However, existing research mostly focuses on evaluating the communication effect of social media content, and there is still a lack of in-depth, systematic discussion of how to use advanced AI technology, especially CNN, to optimize the creation and promotion strategy of museum social media content. This is the starting point and innovation of this study. Among many AI technologies, this study chooses CNN as its core, mainly because of its advantages in image recognition and processing. CNN can effectively capture the local features of images and build more complex and abstract feature representations layer by layer, which is especially important for the rich visual elements in museum social media content. Through CNN, image content can be automatically identified and classified, thus providing technical support for content recommendation and optimization. In addition, CNN’s high efficiency in processing large-scale datasets gives it a significant advantage in real-time interaction and feedback on social media content7,8,9. 
Video processing technology enables the automatic analysis and understanding of videos from sources ranging from surveillance cameras to social media, while natural language processing technology enables machines to understand and generate human language. The development of these two fields is profoundly changing how people live and work. In video processing, deep learning models, especially CNNs, have been widely used in video content recognition, classification and analysis. These technologies can automatically identify objects, scenes and events in video, which has brought revolutionary changes to video surveillance, autonomous driving, health care and other fields. For instance, in security monitoring, video processing technology can detect abnormal behaviors in real time, enhancing public safety. The development of natural language processing technology enables machines to perform tasks such as language translation, sentiment analysis and automatic summarization. With the appearance of pre-trained language models such as BERT, machines’ ability to understand language has improved significantly, which not only promotes the development of intelligent assistants and chatbots, but also provides strong technical support for applications such as content creation and customer service automation. However, although CNN performs well in image processing, it also faces many challenges in practical application. Examples include how to ensure the accuracy and fairness of the recommendation system and avoid algorithmic bias, how to balance automatic content generation with manual curation while maintaining the creativity and depth of content, and how to use user data reasonably for analysis while protecting user privacy. These problems concern not only the effectiveness of the technology, but also ethical and legal boundaries. 
Therefore, this study not only pays attention to the application of technology, but also discusses the ethical, legal and social problems faced by applying AI technology in museum social media communication.

As an important place for cultural inheritance and education, museums are also facing the needs and challenges of transformation and upgrading in the digital wave10,11,12. On the one hand, traditional exhibition methods and means of communication are difficult to meet the increasingly diversified and personalized needs of modern audiences. On the other hand, with the popularity of social media and mobile Internet, museums need new media platforms, such as social networks, official websites and mobile applications, to expand their influence and attract more audiences, especially the younger generation13,14,15. Therefore, museums are no longer limited to exhibitions in physical space, but actively expand online channels, spread the knowledge of cultural relics in their collections through social media forms such as Weibo, WeChat official account and short video platforms, and enhance the cultural experience and interactive participation of the public16,17,18. However, museums face several challenges in creating and accurately delivering social media content, such as handling vast amounts of information, meeting diverse user needs, and providing real-time interactive feedback.

Museums face multiple challenges in this process. These include effectively managing and utilizing massive digital resources, improving content attractiveness and communication efficiency, and enhancing audience participation and interaction19,20,21. Traditional content creation and promotion methods are often time-consuming and labour-intensive, and it is difficult to accurately meet the needs of users22,23,24. In this context, based on the deep mediatization theory and AI technology, the exploration and application of advanced technical means, especially AI technology such as CNNs, has become an important way for the digital transformation of museums25,26,27. Utilizing CNN for image identification and processing, museums can automatically generate high-quality visual content and enhance exhibition appeal. Additionally, by analyzing user behaviors and preferences through deep learning, museums can offer personalized content recommendations and displays, significantly improving the effectiveness and efficiency of social media communication28,29,30. The application of this technology actually provides a new impetus and direction for the digital transformation of museums. Firstly, CNN’s ability in image recognition and processing enables museums to automatically create fascinating visual content. These contents can not only attract the attention of the audience, but also show the details of the exhibits and the stories behind them in a more vivid and intuitive way, which enhances the educational and entertainment value of the exhibition. Secondly, the application of deep learning technology in user behavior analysis provides the museum with a way to deeply understand the needs and preferences of the audience. By analyzing the interactive data of users on social media, museums can better understand the target audience to design exhibitions and educational projects that are more suitable for users’ interests. 
Furthermore, implementing personalized recommendation systems enables museums to suggest relevant content to users on social media. This customized content push not only improves users’ participation and satisfaction, but also enhances the interaction and contact between users and museums. However, realizing the application of these technologies presents several challenges for museums. These include ensuring the accuracy and fairness of the recommendation system, balancing the creativity and depth of automatic content generation with manual curation, and making rational use of user data for analysis while protecting user privacy. Additionally, museums must consider how to integrate these technologies with existing exhibitions and educational projects to achieve a balance between technology and humanities. This may involve the cooperation of interdisciplinary teams and the training and education of museum staff in new technologies. In a word, CNN and deep learning technology provide powerful tools for museums to enhance the effectiveness and efficiency of social media communication. But meanwhile, museums need to explore and learn constantly to meet the challenges in the process of technology application and give full play to the potential of these tools. Through these efforts, museums can better serve the public and promote the spread of culture and the popularization of education.

By constructing and applying a CNN model, this study aims to fill the gaps in existing research and explore the application potential of AI technology in museum social media communication. Social media content from different museums is collected and analyzed, and a CNN model designed specifically for museum social media content is built and tested. The results show that applying the CNN model can significantly improve user participation, content coverage, user retention rate and content sharing rate, thus verifying the value of deep learning technology in improving the social media communication effect of museums. This finding not only provides a new communication strategy and technical path for museums, but also offers a new perspective and practical reference for the application of AI in cultural communication and education promotion.

Research objectives

Based on deep mediatization theory and AI technology, this study aims to automatically identify and classify the image content of museum social media. The goal is to enhance user participation and the effectiveness of content dissemination. Specifically, it focuses on how to optimize the content creation and promotion strategy of museum social media through CNN technology to improve its communication effect and influence among the public. Specific research objectives include: (1) Explore the application of CNN technology in museum image recognition and content classification, so as to automatically generate higher quality and more attractive visual content. (2) Analyze the behavior data of museum users on social media, and use CNN for in-depth mining and learning to achieve more accurate content recommendation and personalized display. (3) Evaluate the practical application value of CNN technology in improving the social media communication effect of museums, including enhancing user participation, increasing the number of interactions and improving user satisfaction.

Based on these objectives, the main contribution of this study is a CNN-based multimodal content recommendation system that integrates visual and text data to optimize the content dissemination of museum social media. First, through the deep learning model, this study addresses the shortcomings of traditional content recommendation systems in diversity and personalization. Second, it provides a new framework that pushes content according to users’ interests, thus improving user interaction and satisfaction. In addition, it shows how multimodal data analysis technology can be used to achieve wider application and more efficient content management in the field of cultural communication.

Literature review

In recent years, the development of AI technology has significantly expanded its application in cultural communication. Zhao and Zhao31 proposed that in a deeply mediatized society, the communication of traditional Chinese culture was increasingly influenced by various social technologies, forming a new mode of social communication. Egarter Vigl et al.32 showed that AI technology, particularly natural language processing and machine learning, could effectively analyze user behaviors and preferences, providing data support for promoting cultural products. Pisoni et al.33 discussed AI applications in museum exhibition design and found that using machine learning algorithms to analyze audience feedback could significantly improve interaction and educational effectiveness. Jiao and Zhao34 introduced a CNN model, initiating research into the application of deep learning in image processing. Lodo et al.35 successfully enhanced image recognition accuracy in complex backgrounds using an improved CNN model, providing a new technique for image classification and retrieval. As key institutions for cultural communication, museums are undergoing a transformation in media communication modes in the digital age. Kamariotou et al.36 noted that while museums increasingly used social media to interact with the public, effectively managing and optimizing digital content and improving user participation and satisfaction remained major challenges. Additionally, Cuomo et al.37 suggested that analyzing large volumes of user data to achieve personalized recommendations was another challenge museums must address.

As society develops and technology progresses, museums, as custodians of cultural heritage and key venues for knowledge dissemination, are experiencing evolving communication modes. Social media, as a new communication tool, has become crucial for museums to enhance their communication effectiveness due to its extensive user base and efficient communication capabilities. Deep mediatization theory offers a framework for understanding this transformation, while AI technology, particularly convolutional neural network (CNN), provides technical support for optimizing social media communication content. Trunfio et al.38 highlighted that the interactivity and immediacy of social media allowed museums to engage with the public more directly, thereby enhancing user participation. However, they noted that traditional social media strategies often lacked specificity and failed to effectively attract and retain users. Amanatidis et al.39 examined the characteristics of museum social media content, emphasizing the crucial role of visual content in capturing users’ attention. Esposito et al.40 analyzed several museums’ social media accounts and found that posts featuring high-quality images typically received more likes and shares. Deep mediatization theory posits that all aspects of modern society are profoundly influenced by media, and museums, as pivotal institutions of cultural communication, are no exception. Vesci et al.41 demonstrated that museums must leverage media capabilities to transmit information more innovatively and enhance communication effectiveness. CNNs have demonstrated remarkable capabilities in image recognition and classification. Sun et al.42 found that CNNs outperformed traditional methods in large-scale image classification tasks, with their deep structures and convolutional operations providing significant advantages in processing image data. Research on optimizing social media content using CNNs is growing. 
Chandrasekaran et al.43 developed a CNN-based model to analyze and classify visual content on social media, with experimental results indicating that the model significantly enhanced user interaction rates and content dissemination. Kuppusamy et al.44 put forward a hybrid model combining sentiment analysis and image recognition, and further optimized the content recommendation system of social media by combining text sentiment and image content. The research showed that this method not only improved the relevance of content, but also increased the participation of users.

Against the social background of deep mediatization, social media has become an important platform for cultural communication and education promotion. However, how to effectively manage and optimize this digital content and improve user participation and satisfaction is still an important challenge for museums. In recent years, AI technology, especially CNN, has performed well in image recognition and classification. Hassan et al.45 explored the application of language models and deep learning technology in disease prediction, and verified the ability of Medical Concept Normalization-Bidirectional Encoder Representations from Transformers (MCN-BERT) and Bidirectional Long Short-Term Memory (BiLSTM) models to predict diseases from symptom descriptions. Mamdouh Farghaly et al.46,47 put forward feature selection methods based on frequent and related item sets respectively, emphasized the importance of feature selection in pattern recognition, and verified the effectiveness of these methods in text classification tasks. In addition, Khairy et al.48 surveyed the automatic detection of cyberbullying and abusive language in Arabic content, and pointed out the scarcity of Arabic resources and the challenges of detection technology. Farghaly et al.49 proposed an efficient method of automatic threshold detection based on a mixed feature selection method, and demonstrated the role of feature selection in data dimensionality reduction. Omar et al.50 compared the performance of quantum computing and machine learning in emotion classification of Arabic social media, and found that quantum computing had high accuracy when dealing with large datasets. Finally, Lotfy et al.51 examined the privacy of public Wi-Fi networks and classified the websites visited by users through a machine learning model, revealing the risk of privacy leakage that users might face when using public hotspots. 
These studies not only provide a new perspective and technical path for the optimization of museum social media content, but also explore new possibilities for the application of deep learning in the field of cultural communication and education promotion.

According to He et al.52, CNN achieved remarkable success in large-scale image classification tasks, which provided a solid foundation for subsequent research. Chen et al.53 showed that the accuracy of image classification could be greatly improved by training deep learning algorithms on large amounts of image data, which prompted many scholars to explore the application of CNN in other fields. In the field of culture, Zhao et al.54 studied the application of AI technology in the digital management of museums. By constructing an image classification model based on deep learning, the study automatically classified and labelled museum collections, which improved collection management efficiency and audience experience. The results showed that using CNN to analyze and classify images could not only reduce labour costs, but also improve the diversity and richness of collections and further enhance the audience’s sense of participation.

In addition, Li et al.55 discussed the application of CNN in education, especially in the analysis of classroom teaching behavior. They found that CNN technology could automatically recognize and analyze behavior in classroom videos, thus providing data support for teachers and improving teaching methods. The research pointed out that CNN had shown high accuracy and efficiency in processing large amounts of video data, which provided a new direction for the intelligent development of education. Different from the above research, Alamdari et al.56 focused on the application of AI technology in social media content management. The research team used a CNN model to classify and recommend image content on social media, thus improving users’ participation and satisfaction. The results showed that CNN technology could significantly improve user interaction and content dissemination on social media platforms, and verified the broad application prospects of AI technology in social media management. As another example, Wang et al.57 advocated the digitalization of museums in the “Smart Museum Project”, which promoted a paradigm based on digital museums, combining new technologies with comprehensive perception, ubiquitous interconnection and fusion applications.

In previous studies, AI, especially CNN, has shown great potential in the management of museum social media content. Research shows that AI technology can effectively improve user participation and content dissemination, but some limitations remain. First, although the CNN models discussed above have opened a new direction for deep learning applications, they lack effective extraction of multi-scale information when dealing with complex images. Second, although some studies have raised image recognition accuracy with improved CNNs, they have not fully solved the vanishing gradient problem in deep networks, which limits how deep the model can effectively learn. Moreover, museums still face challenges in content management and user interaction when using social media, which shows that existing models remain insufficient to respond to user needs.

To fill these gaps, the MuseumCNN model in this study combines the advantages of ResNet and Inception modules and proposes solutions to these limitations. The residual structure of ResNet alleviates the vanishing gradient problem in deep networks and enables the model to learn deeper features. The Inception module captures multi-scale information through parallel convolution branches of different scales, which improves the processing of complex images. This combination not only improves classification accuracy, but also enhances user participation, which responds to the needs of museums in social media content management. In addition, previous studies mostly focused on a single deep learning model and lacked comparison with non-deep learning methods. This study provides more comparative results to further verify the superiority of the proposed method and show its application prospects in optimizing museum social media content. These innovations not only advance the technology, but also provide a new solution for the cultural communication of museums in the digital age. To sum up, this study provides a new way to improve the communication effect of museum social media by combining deep mediatization theory with advanced AI technology. This exploration not only enriches academic research, but also provides technical solutions for practical application.

Research methodology

CNN model architecture and adaptation

In this study, a hybrid CNN (named MuseumCNN) is constructed, which combines the core ideas of ResNet58,59 and the Inception network60,61,62 into a multi-level, multi-scale feature extraction and deep learning system. The residual block design of ResNet is selected to address the vanishing gradient problem in deep network training. Each residual block contains a series of convolution layers, batch normalization layers and skip connections. Skip connections promote the forward propagation of information in the deep network. This is especially useful for museum-related images, where the network must extract subtle and hidden visual features such as the texture, shape and color changes of cultural relics.
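The effect of the skip connection on gradient flow can be made explicit with a short derivation. This is a sketch that assumes identity shortcuts and no nonlinearity after the addition. The symbols $x_l$ (input to block $l$), $F$ (the residual branch), $W_l$ (its weights) and $\mathcal{L}$ (the loss) are notation introduced here for illustration and are not taken from the study.

```latex
\begin{align}
x_{l+1} &= x_l + F(x_l, W_l), \\
x_L &= x_l + \sum_{i=l}^{L-1} F(x_i, W_i), \\
\frac{\partial \mathcal{L}}{\partial x_l}
  &= \frac{\partial \mathcal{L}}{\partial x_L}
     \left( 1 + \frac{\partial}{\partial x_l} \sum_{i=l}^{L-1} F(x_i, W_i) \right).
\end{align}
```

Under these assumptions, the additive term 1 means that part of the gradient from a deeper block $L$ reaches block $l$ directly, however small the derivatives of the residual branches are.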

In addition, the Inception module extracts multi-scale features by running convolution kernels of different sizes in parallel, thus enhancing the model’s ability to understand complex scenes. Specifically, the Inception module includes two elements. (1) Multi-scale convolution kernels: kernels of sizes 1 × 1, 3 × 3 and 5 × 5 are applied in parallel so that local and global features can be captured simultaneously. (2) A maximum pooling layer: this runs in parallel with the convolution kernels to further enhance feature extraction. In ResNet, the residual learning framework addresses the vanishing gradient problem in deep network training by introducing residual blocks. Each residual block has three elements. (1) Multiple convolution layers, used for deep feature extraction. (2) A batch normalization layer, which accelerates training. (3) A skip connection, which adds the input directly to the output and lets the gradient pass through effectively. This design enables the model to identify and understand important visual information more accurately when dealing with complex museum images.
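The parallel-branch idea can be illustrated with a minimal pure-Python sketch on a single-channel image. It is not the MuseumCNN implementation: the fixed averaging kernels stand in for learned weights, and the helper names (`pad2d`, `conv2d_same`, `maxpool2d_same`, `inception_branches`) are hypothetical. A real Inception module works on many channels and typically adds 1 × 1 bottleneck convolutions, which are omitted here.

```python
def pad2d(img, p, value=0.0):
    """Pad a 2-D list of floats with p cells of `value` on every side."""
    width = len(img[0]) + 2 * p
    rows = [[value] * p + list(r) + [value] * p for r in img]
    border = [[value] * width for _ in range(p)]
    return border + rows + [row[:] for row in border]


def conv2d_same(img, kernel):
    """Stride-1 convolution (cross-correlation) with zero padding,
    so the output has the same height and width as the input."""
    k = len(kernel)
    padded = pad2d(img, k // 2)
    h, w = len(img), len(img[0])
    return [[sum(kernel[i][j] * padded[y + i][x + j]
                 for i in range(k) for j in range(k))
             for x in range(w)] for y in range(h)]


def maxpool2d_same(img, k=3):
    """Stride-1 max pooling; padding with -inf keeps the spatial size."""
    padded = pad2d(img, k // 2, value=float("-inf"))
    h, w = len(img), len(img[0])
    return [[max(padded[y + i][x + j] for i in range(k) for j in range(k))
             for x in range(w)] for y in range(h)]


def mean_kernel(k):
    """Placeholder k x k averaging kernel (an assumption; real kernels are learned)."""
    return [[1.0 / (k * k)] * k for _ in range(k)]


def inception_branches(img):
    """Run the four parallel branches and stack their outputs as channels."""
    return [
        conv2d_same(img, mean_kernel(1)),  # 1 x 1 branch: point-wise features
        conv2d_same(img, mean_kernel(3)),  # 3 x 3 branch: local context
        conv2d_same(img, mean_kernel(5)),  # 5 x 5 branch: wider context
        maxpool2d_same(img, 3),            # parallel max-pooling branch
    ]


image = [[float(x + y) for x in range(6)] for y in range(6)]
features = inception_branches(image)
print(len(features), len(features[0]), len(features[0][0]))
```

Because every branch preserves the spatial size, the outputs can be concatenated along the channel axis, which is what allows the branches to run in parallel and be merged afterwards.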

MuseumCNN model combines the residual block of ResNet with the Inception module, and realizes the depth feature learning and multi-scale feature extraction of the image through serial combination. When designing MuseumCNN model, other alternatives have been considered, such as using more complex network structure or introducing other types of deep learning technology. For example, the Transformer model is excellent in dealing with sequence data, so it can be considered to be applied to the analysis of text content. In addition, Generative Adversarial Network (GAN) has potential in image generation and enhancement, which may provide new ideas for the innovation of museum social media content. Although these methods may have advantages in some aspects, considering the computational resources and model complexity, MuseumCNN model is finally chosen as the main method of this study. This study aims to meet the diverse image recognition, understanding and content analysis needs of museums in the media communication environment. The specific structure is shown in Fig. 1.

Figure 1 shows that the MuseumCNN model retains the traditional CNN architecture while combining the Inception module and ResNet into a multi-level, multi-scale feature extraction and deep learning system that meets the needs of understanding and analyzing complex scenes in museum social media communication. From ResNet, it takes the residual connection, which alleviates the vanishing gradient problem in deep networks. From the Inception module, it takes the ability to capture local and global image features at different scales. The combination therefore covers both detail and global feature extraction when processing museum images, improving classification accuracy and generalization ability. First, the input layer receives multimedia content from museum social media. Images are preprocessed into fixed-size tensors. Text, if present, is first converted into a vector representation by word embedding. ResNet is known for its residual block design, in which each residual block contains a series of convolution layers, batch normalization layers and a shortcut connection. The shortcut connection allows information to propagate forward more easily in deep networks63,64. It lets signals directly “skip” some layers in the network rather than propagating layer by layer, which effectively alleviates the vanishing gradient problem as network depth increases65. This is particularly important for museum-related images, because extracting subtle and hidden visual features, such as the texture, shape and color changes of cultural relics, often requires a deep network.

By introducing the ResNet structure, a deeper network can be built and a finer feature expression can be obtained, which is very important for distinguishing different types of cultural relics and artworks and their display effects in various environments66,67. Following the input layer is the residual block design of ResNet. The residual block consists of several key parts, including a convolution layer, a batch normalization layer and a shortcut connection, which work together to extract and transmit the local features of the image. After the ResNet residual block comes the Inception module, which extracts multi-scale features through convolution kernels of different sizes running in parallel. Finally, the output layer pools the features extracted by the residual blocks and the Inception modules, and predicts the category through a classifier and a softmax function to complete the final classification of the input content.

On this basis, it is considered that although the existing research results have proved the potential of deep learning technology in the classification of cultural relics and works of art, more factors still need to be considered in practical application. For example, cultural relics in different cultural backgrounds may have unique visual elements and aesthetic standards, which requires the model not only to have high recognition ability, but also to have certain cultural adaptability and flexibility. In addition, changes in the exhibition environment, such as light, angle and background, will also affect the visual presentation of cultural relics and artworks, so the model needs to be able to adapt to these changes in external conditions to ensure accurate identification and classification in different situations.

An innovative hybrid CNN model is designed that combines the advantages of ResNet and the Inception network to recognize complex scenes efficiently. The model adopts ResNet’s residual learning framework to construct a deep network, introducing residual blocks to address the vanishing gradient problem in deep network training. Each residual block stacks multiple convolution layers and batch normalization layers, and a skip connection adds the block’s input directly to its output, allowing gradients to propagate backward effectively. On top of ResNet, the idea of the Inception network is integrated: parallel convolutional branches capture features at different scales simultaneously. Each Inception module contains convolution kernels of several sizes (1 × 1, 3 × 3, 5 × 5) and a max pooling layer, so that its branches extract local and global image features at the same time68,69.

The ResNet residual blocks and the Inception modules are combined in series. Specifically, the input image first passes through a shared convolution layer and a batch normalization layer and then enters several residual blocks for feature extraction. An Inception module is inserted after each residual block to further extract multi-scale features. In this way, the model captures information ranging from fine detail to the whole image at different levels. After several residual blocks and Inception modules, a global average pooling layer reduces the feature map to a fixed-length feature vector. This vector is passed to a fully connected layer and finally classified by a softmax layer to identify the scenes and objects in the image. With this design, the hybrid CNN model can learn deep image features while processing visual information at different scales, which is particularly important for identifying complex scenes in museum social media content.
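The serial arrangement described above can be made concrete by tracing tensor shapes through the stack. The following is a minimal sketch, not the authors' implementation: the input size, channel counts, number of stages, and number of classes are illustrative assumptions, and the functions only compute output shapes rather than performing convolutions.

```python
from typing import List, Tuple

Shape = Tuple[int, ...]  # (channels, height, width) for feature maps


def stem(shape: Shape, out_channels: int) -> Shape:
    """Shared convolution + batch normalization applied to the input image.

    Assumes 'same' padding and stride 1, so height and width are unchanged.
    """
    _, height, width = shape
    return (out_channels, height, width)


def residual_block(shape: Shape) -> Shape:
    """Stacked conv/batch-norm layers F(x) plus a skip connection: y = F(x) + x.

    The element-wise addition requires F(x) to have the same shape as x,
    so the block leaves the shape unchanged.
    """
    return shape


def inception_module(shape: Shape, branch_channels: List[int]) -> Shape:
    """Parallel 1x1, 3x3, 5x5 convolution branches and a max-pooling branch.

    Each branch is assumed to preserve height and width through padding;
    branch outputs are concatenated along the channel axis.
    """
    _, height, width = shape
    return (sum(branch_channels), height, width)


def trace_hybrid_cnn(
    input_shape: Shape,
    stem_channels: int,
    branch_channels: List[int],
    num_stages: int,
    num_classes: int,
) -> List[Tuple[str, Shape]]:
    """Return (layer name, output shape) pairs for the serial stack."""
    trace: List[Tuple[str, Shape]] = []
    shape = stem(input_shape, stem_channels)
    trace.append(("stem", shape))
    for stage in range(1, num_stages + 1):
        shape = residual_block(shape)
        trace.append((f"residual_{stage}", shape))
        shape = inception_module(shape, branch_channels)
        trace.append((f"inception_{stage}", shape))
    # Global average pooling keeps one value per channel.
    trace.append(("global_avg_pool", (shape[0],)))
    # Fully connected layer + softmax: one probability per class.
    trace.append(("fc_softmax", (num_classes,)))
    return trace


# Hypothetical configuration: RGB 224x224 input, 3 stages, 5 categories.
layers = trace_hybrid_cnn((3, 224, 224), 64, [32, 64, 16, 16], 3, 5)
for name, out_shape in layers:
    print(f"{name:>16}: {out_shape}")
```

The trace shows why global average pooling yields a fixed-length vector: its length equals the channel count of the last Inception module, regardless of the spatial size of the input.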

Model design and workflow

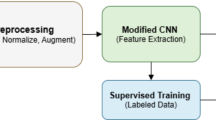

When applying CNNs to museum social media communication, the model design and data processing flow are refined to process and analyze multimodal data from museum social media platforms efficiently. The model performs three prediction tasks: (1) Image classification: the model assigns images to categories such as sculpture, painting, and cultural relics. (2) Sentiment analysis: by analyzing the text associated with an image, the model identifies whether the user’s emotional response to the content is positive or negative. (3) User interaction prediction: the model predicts the probability that users interact with specific content through likes, comments, and shares. The specific workflow is shown in Fig. 2.

Figure 2 shows that the workflow starts from the image and text data collected from the museum’s social media platforms. The specific steps are as follows:

- Data input: Data are first collected from the museum’s social media platforms, including but not limited to high-definition pictures of cultural relics, article titles and texts, user comments, and likes.

- Data preprocessing stage: Image preprocessing standardizes the collected pictures through normalization, cropping, and resizing, so that the images fed to the CNN have a uniform size and pixel range. Text preprocessing analyzes the text content through word segmentation, stop word removal, and stemming, and then uses a pre-trained word embedding model to convert the text into vector representations, transforming human language into a machine-readable form.

- Feature extraction stage: For images, the CNN model automatically learns visual features such as color, texture, and shape through a series of convolution and pooling layers. These features capture the visual content and aesthetic elements of the image. For text, natural language processing is applied: the text is cleaned by word segmentation, stop word removal, and stemming, and word embedding (Word2Vec) then transforms it into vectors that capture meaning and context. After image and text features are extracted, an attention mechanism fuses the information from the two modalities. Specifically, an attention network assigns weights to different areas of the image and different words in the text, and these weights reflect their importance for understanding the whole social media post. For text embedded in images, optical character recognition (OCR) converts it into analyzable text data, so that text features can be extracted from images and combined with features extracted directly from text data.

- Fusion strategy: Suppose there is a picture of a museum exhibit and a text describing it. The image feature extraction model identifies visual features such as the shape and color of the exhibit, while the text feature extraction model captures the description of its historical and cultural background. Through the attention mechanism, the model identifies the most important part of the image and the most relevant information in the text and combines them into a comprehensive feature representation. The fused representation provides a fuller understanding of the content, which is important for personalized recommendation and for improving user participation. For example, when users are interested in exhibits from a certain historical period, the model can recommend exhibit pictures and related stories with similar visual styles and historical backgrounds.

- Feature fusion stage: The independently extracted image and text features are combined by the fusion strategy above to form a comprehensive multimodal feature representation.

- Classification and prediction stage: The fused features are fed to the fully connected layer. After dimensionality reduction and nonlinear transformation, the softmax function maps them to class probabilities to classify and predict the data.
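The fusion and classification steps above can be sketched in a few lines. This is an illustrative simplification under stated assumptions, not the paper's attention network: attention weights come from a dot product with a query vector (in practice, a learned parameter), the pooled image and text vectors are simply concatenated, and all feature values, queries, and classifier weights are made-up numbers.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def softmax(scores: Sequence[float]) -> Vector:
    """Map raw scores to probabilities that sum to 1 (numerically stable)."""
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention_pool(features: List[Vector], query: Vector) -> Tuple[Vector, Vector]:
    """Weight each feature vector by softmax(feature . query) and sum them.

    Returns the pooled vector and the attention weights.
    """
    weights = softmax([dot(f, query) for f in features])
    dim = len(features[0])
    pooled = [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]
    return pooled, weights


def fuse(image_regions: List[Vector], text_tokens: List[Vector],
         image_query: Vector, text_query: Vector) -> Vector:
    """Attention-pool each modality, then concatenate the two pooled vectors."""
    image_vec, _ = attention_pool(image_regions, image_query)
    text_vec, _ = attention_pool(text_tokens, text_query)
    return image_vec + text_vec


def classify(fused: Vector, weights: List[Vector], biases: Vector) -> Vector:
    """Fully connected layer followed by softmax over the classes."""
    logits = [dot(row, fused) + b for row, b in zip(weights, biases)]
    return softmax(logits)


# Hypothetical 2-D features for two image regions and three text tokens.
image_regions = [[1.0, 0.0], [0.0, 1.0]]
text_tokens = [[0.5, 0.5], [1.0, 0.0], [0.0, 1.0]]
image_query = [1.0, 0.0]
text_query = [0.0, 1.0]

fused = fuse(image_regions, text_tokens, image_query, text_query)
class_weights = [[0.5, -0.2, 0.1, 0.3], [-0.4, 0.6, 0.2, -0.1]]
probabilities = classify(fused, class_weights, [0.0, 0.0])
print(fused, probabilities)
```

Concatenation is only one possible fusion choice; a weighted sum or a cross-modal attention layer would fit the same interface.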

Application of the model in museum media communication scene

The established model is then used to assist museums in producing high-quality, personalized, and attractive social media content. The specific workflow is shown in Fig. 3.

Figure 3 shows that the MuseumCNN model first obtains and updates information from the museum’s media platform in real time, including the latest exhibition pictures, detailed text descriptions, and user interaction data. From this information, the system extracts deep features of images and texts: a CNN identifies key elements in the images, while natural language processing analyzes keywords and sentiment in the text.

After obtaining the data, the model provides content creation and optimization functions based on the extracted deep features, including image editing suggestions and text summary generation. The system recommends the best image editing scheme according to user feedback and provides a concise text summary to help the museum attract users’ attention. The model also analyzes the likely communication effect of upcoming content through simulation and prediction, measures the performance of different types of content on social media using historical data, and provides content publishing strategy suggestions for museums.

In the personalized recommendation and content publishing stage, the system pushes suitable content according to user profiles and interest preferences. Detailed user profiles are constructed from user interaction data, and collaborative filtering and content-based recommendation algorithms are applied to recommend related exhibitions, artworks, and museum activities, improving user stickiness and satisfaction. Finally, the system continuously improves content planning and promotion strategy through ongoing tracking analysis and model optimization. It analyzes user feedback on recommended content in real time to adjust the content creation and publishing strategy and to guide the museum’s content innovation and theme planning. Through this process, museums can use social media platforms more efficiently, increase user participation and satisfaction, and strengthen cultural communication.

For example, for image editing, given an original image described as “This is a photo of ancient pottery with a messy background,” the system provides an optimization suggestion: “It is suggested to blur the background of the image to highlight the details of the pottery and make it more visually impactful.” For text summarization, given the original text “This exhibition shows ceramic art in many periods, including classic works of Tang Dynasty, Song Dynasty and Ming Dynasty,” the optimized summary is: “The exhibition features ceramic masterpieces from the Tang, Song and Ming dynasties, showing the evolution and essence of Chinese ceramic art.” For personalized recommendation, the system generates a recommendation based on a user’s interests: “According to your interest preferences, we recommend the Ming Dynasty Pottery Art Exhibition, where wonderful works are waiting for your exploration!” Cases like these are used to optimize the museum’s social media communication.
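The content-based part of the recommendation step described above can be illustrated as follows. This is a minimal sketch under assumptions: each item is represented by a small hand-made feature vector (in the system described, these would come from the fused image and text features), the user profile is the mean of the vectors of liked items, and candidates are ranked by cosine similarity. Item names and values are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def user_profile(liked_ids: List[str], item_features: Dict[str, List[float]]) -> List[float]:
    """Average the feature vectors of the items the user interacted with."""
    vectors = [item_features[item_id] for item_id in liked_ids]
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def recommend(liked_ids: List[str], item_features: Dict[str, List[float]],
              top_k: int = 2) -> List[str]:
    """Rank items the user has not seen by similarity to the user profile."""
    profile = user_profile(liked_ids, item_features)
    candidates = [item_id for item_id in item_features if item_id not in liked_ids]
    ranked = sorted(
        candidates,
        key=lambda item_id: cosine_similarity(profile, item_features[item_id]),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical features: [Ming period, ceramic, painting].
item_features = {
    "ming_vase": [1.0, 1.0, 0.0],
    "ming_bowl": [1.0, 0.9, 0.0],
    "tang_figurine": [0.0, 1.0, 0.0],
    "modern_painting": [0.0, 0.0, 1.0],
}
recommendations = recommend(["ming_vase"], item_features)
print(recommendations)
```

Collaborative filtering, which the text also mentions, would instead compare users with each other through their interaction histories; it is not shown here.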

User research method

In order to verify the effectiveness of MuseumCNN model in practical application, this study designs and implements a user study to evaluate the influence of the model on user participation and satisfaction. The specific research process is as follows:

First, this study randomly selects 500 users from the museum’s social media platform as research participants. These users cover different age groups, professional backgrounds, and cultural interests to ensure sample diversity. Participants range in age from 18 to 60, and the ratio of male to female participants is close to 1:1. They are mainly active users of the museum’s social media platform, which helps ensure the validity of the data.

The experiment is divided into two parts. The first part is the user’s participation in the pushed content, which is mainly evaluated by recording interactive behaviors such as likes, comments and sharing. These pushed contents are automatically generated by MuseumCNN model, and compared with the unoptimized contents to see whether the content optimized by the model can effectively improve user participation. The second part is the evaluation of users’ satisfaction with the pushed content. In this study, users are invited to rate the personalization, attractiveness, information quality and other aspects of the content through questionnaire survey (the rating range is 1–5) to obtain their satisfaction data.

The research lasted for one month, with 500 tweets, and each user received an average of 10 tweets. In the questionnaire section, a total of 350 users submitted effective feedback. According to the data of participation and satisfaction, this study evaluates the role of MuseumCNN in improving user participation and content satisfaction through statistical analysis.

Experimental design and performance evaluation

Experimental design

In this experiment, four representative museums are selected, and the images and related descriptive texts in their online collection databases are collected. The datasets are introduced in Table 1. The pictures have been scanned in high definition and are accompanied by detailed interpretation and historical research materials from experts. Each image carries detailed collection information, such as age, author, and cultural background. Text data are collected from the official blogs, press releases, and social media accounts of these museums, including exhibition introductions, stories of specific collections, and historical background. User interaction data related to the museums, such as comments, likes, and shares, are collected through the public interfaces of the museums’ social media platforms. An example of descriptive text is: “This Song Dynasty porcelain is famous for its exquisite patterns and elegant shapes, reflecting the technological level and cultural taste at that time.” An example of user interaction is the comment “This porcelain is really beautiful! I like its color and design very much!”, which received 10 likes.

Table 1 shows that Dataset A, collected from the social media platform of Museum A, contains about 2,000 high-definition pictures of sculptures and porcelain from different cultural periods. Each picture is accompanied by detailed descriptive information, such as age, author, and cultural background. This dataset covers many cultural periods from ancient to modern times, showing the artistic features and cultural connotations of different historical stages. Dataset B contains about 1,500 pictures of works of art collected from Museum B, including paintings, sculptures, and historical relics. These pictures show the visual characteristics of the works and are accompanied by related exhibition introductions and art reviews. This dataset spans many cultural themes, from ancient art to contemporary art. Dataset C contains about 1,800 pictures provided by Museum C, covering modern art, ancient art, decorative art, and other fields. These pictures have been professionally shot and are accompanied by in-depth academic interpretation and analysis of artistic value, reflecting different periods and artistic schools. Dataset D, from the online collection database of Museum D, contains about 1,700 pictures of ancient artworks, such as paintings, calligraphy, ceramics, bronzes, and jades. These pictures are scanned in high definition and accompanied by experts’ detailed interpretation and historical research materials, showing the evolution of artistic style and craftsmanship from ancient times to feudal times.

In addition, the text data comes from the official blogs, news releases and social media accounts of these museums, covering exhibition introductions, stories and historical backgrounds of specific collections. This study also collects relevant user interaction data, including comments, likes and sharing, which reflects users’ participation and feedback on different cultural periods and theme backgrounds. These data fully reflect the potential of social media platforms in promoting cross-cultural communication and user participation. Through the analysis of these diverse datasets, this study can explore the effect and influence of social media communication in museums more deeply.

Each museum’s image dataset is randomly divided into a training set and a test set at a ratio of 80/20, so that both sets are representative and balanced. Random selection reduces bias and enhances the robustness of the model. To confirm that performance is consistent across different training and test sets, the experiment is repeated for multiple rounds (here, 10 rounds). In each round, the dataset is randomly divided, the CNN model is trained on the training set, and its performance is evaluated on the test set. This further verifies the reliability and applicability of the results.
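The repeated random 80/20 division can be sketched as below. The helper names, the fixed seed, and the use of integer IDs in place of image files are assumptions made for illustration; training and evaluation would be called once per returned split.

```python
import random
from typing import List, Sequence, Tuple

Split = Tuple[List[int], List[int]]  # (training IDs, test IDs)


def split_once(items: Sequence[int], train_fraction: float, rng: random.Random) -> Split:
    """Shuffle a copy of the items and cut it into training and test parts."""
    shuffled = list(items)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def repeated_splits(items: Sequence[int], rounds: int = 10,
                    train_fraction: float = 0.8, seed: int = 0) -> List[Split]:
    """Produce one independent random split per experimental round."""
    rng = random.Random(seed)  # seeded so the rounds are reproducible
    return [split_once(items, train_fraction, rng) for _ in range(rounds)]


# About 2,000 images, as in Dataset A; integer IDs stand in for image files.
image_ids = list(range(2000))
splits = repeated_splits(image_ids)
for round_index, (train_ids, test_ids) in enumerate(splits, start=1):
    print(round_index, len(train_ids), len(test_ids))
```

Reporting the mean and spread of each metric over the rounds then shows how sensitive the results are to the particular division.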

The technical configuration of the experimental environment is shown in Table 2:

The parameter settings are shown in Table 3:

In the data preprocessing stage, the collected pictures of cultural relics are first standardized through normalization, cropping, and resizing, so that the images fed to the CNN have a uniform size and pixel range. Text data are processed with natural language processing techniques, including word segmentation, stop word removal, and stemming. In addition, optical character recognition is used to convert text embedded in images into analyzable text data, so that image and text characteristics can be analyzed together. In the feature extraction stage, the CNN model automatically learns visual features such as color, texture, and shape through a series of convolution and pooling layers, capturing the visual content and aesthetic elements of each image. For text data, word embedding (Word2Vec) transforms the text into vectors that capture its meaning and context. Through the attention mechanism, the model identifies the most important parts of the image and the most relevant information in the text and combines them into a comprehensive feature representation.
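The preprocessing steps can be illustrated with a small, dependency-free sketch. Several simplifications are assumed: the stop word list is a tiny hand-picked set, the stemmer is a naive suffix stripper standing in for a real stemming algorithm, the image is a grayscale grid of 0–255 integers, and resizing, OCR, and word embedding are omitted.

```python
import re
from typing import List

# Tiny illustrative stop word list; a real pipeline would use a fuller one.
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "and", "in", "for", "this", "its"}


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def naive_stem(word: str) -> str:
    """Strip one common English suffix, keeping at least three characters."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess_text(text: str) -> List[str]:
    """Word segmentation, stop word removal, then stemming."""
    return [naive_stem(token) for token in tokenize(text) if token not in STOP_WORDS]


def center_crop(image: List[List[int]], size: int) -> List[List[int]]:
    """Cut a size x size window from the middle of a 2-D pixel grid."""
    top = (len(image) - size) // 2
    left = (len(image[0]) - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]


def normalize_pixels(image: List[List[int]]) -> List[List[float]]:
    """Scale 0-255 intensities into the range [0, 1]."""
    return [[pixel / 255.0 for pixel in row] for row in image]


tokens = preprocess_text("The exhibits are showing painted vases")
image = [
    [0, 0, 0, 0],
    [0, 255, 51, 0],
    [0, 102, 204, 0],
    [0, 0, 0, 0],
]
cropped = center_crop(image, 2)
normalized = normalize_pixels(cropped)
print(tokens, cropped, normalized)
```

The resulting tokens would then be mapped to vectors by a word embedding model, and the normalized crops would be stacked into fixed-size tensors for the CNN.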

Performance evaluation

To comprehensively evaluate the performance of the MuseumCNN model in identifying and classifying museum-related images and content, accuracy, precision, recall, AUC, and F1 score are adopted as the main evaluation indexes. The calculation equations are as follows:
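In their standard form, the four count-based metrics can be written as shown below. The definitions are the conventional ones built from TP, TN, FP, and FN; the numbering (1) to (4), chosen so that the AUC expression is Eq. 5, and the order of the equations are assumptions.

```latex
\begin{align}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \tag{1}\\
\text{Precision} &= \frac{TP}{TP + FP} \tag{2}\\
\text{Recall}    &= \frac{TP}{TP + FN} \tag{3}\\
F1 &= \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}
\end{align}
```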

TP denotes true positives, TN true negatives, FP false positives, and FN false negatives. The AUC index is based on randomly drawing a pair of samples (one positive and one negative) and using the trained classifier to predict both. In this study, positive samples refer to users’ positive feedback on museum social media content, usually expressed as appreciation of exhibits, activities, or the museum itself. Such samples show the user’s interest in and engagement with the content. Negative samples refer to users’ negative feedback on museum social media content, usually expressed as criticism or disappointment. Such samples reflect users’ dissatisfaction with or lack of interest in the content. Table 4 shows some examples:

The AUC is the probability that the classifier assigns the positive sample a higher predicted probability than the negative sample. Equation 5 shows the calculation:

In Eq. 5, \(P_p\) is the probability of predicting positive samples, \(P_n\) is the probability of predicting negative samples, \(M\) is the number of positive samples, \(N\) is the number of negative samples, and \(I\) is the number of real and false positive samples used to calculate the model under a specific threshold.
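The metrics above can be computed directly from confusion counts and predicted probabilities. The sketch below uses the conventional definitions. For AUC it assumes the usual pairwise form, \(\mathrm{AUC} = \frac{1}{MN}\sum I(P_p > P_n)\) over all positive-negative pairs, with \(I\) read as an indicator that equals 1 when the positive sample receives the higher probability; counting ties as 0.5 is a common convention and also an assumption here. The counts and scores are made-up values.

```python
from typing import Dict, Sequence


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def pairwise_auc(positive_scores: Sequence[float], negative_scores: Sequence[float]) -> float:
    """Fraction of (positive, negative) pairs ranked correctly.

    M = len(positive_scores), N = len(negative_scores); ties count as 0.5.
    """
    wins = 0.0
    for p in positive_scores:
        for n in negative_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positive_scores) * len(negative_scores))


# Illustrative counts and predicted probabilities (not taken from the paper).
metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)
auc = pairwise_auc([0.9, 0.8, 0.4], [0.7, 0.3])
print(metrics, auc)
```

In this example five of the six positive-negative pairs are ordered correctly, so the AUC is 5/6.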

Figure 4 shows the model performance comparison of different museum datasets:

Figure 4 shows that the dataset of Museum C achieves the highest accuracy, precision, recall, F1 score, and AUC value, which may indicate that its art and design content has distinctive visual characteristics that are easier for the model to learn and identify. The dataset of Museum B shows lower performance on all indicators, which may be due to the visual diversity and complexity of its exhibits, which include paintings, sculptures, and historical relics. These factors may increase the difficulty of recognition. The datasets of Museum A and Museum D are balanced across all indicators, which suggests that the model has good generalization ability and adaptability. These results emphasize the importance of understanding the characteristics of the target dataset when preparing a museum’s social media content. Especially in the field of art and design, using a CNN model for feature extraction and recognition can improve the recognition rate and communication effect of content.

The model performance under different batch sizes is shown in Fig. 5:

In Fig. 5, the model performs best on all evaluation indexes when the batch size is 32, which indicates that a moderate batch size helps the model achieve a better performance balance during learning. This means that museums processing social media content can use this parameter setting when optimizing content preparation and release, so as to attract and hold audience attention more effectively. When the batch size is 16 or 64, performance decreases slightly. A possible explanation is that a batch size that is too small produces frequent, noisy parameter updates, while a batch size that is too large yields fewer updates per epoch and may generalize less well. This result shows that adjusting batch size is an effective means of optimizing model performance in practical application. Figure 6 shows the influence of different learning rates on model performance.

In Fig. 6, the choice of learning rate directly affects the recognition and classification performance of museum social media content. When the learning rate is 0.001, the model performs best, which shows that when dealing with museum social media content, adopting a smaller learning rate is helpful for the model to identify and classify images and video content more accurately. This is of great significance to enhance the interactive experience of the audience and the attractiveness of the content.

Figure 7 shows the performance indicators of the model in different experimental rounds.

These results show that the model is stable and consistent across several rounds of random experiments. Accuracy fluctuates between 87.5% and 89.2%, precision and recall change relatively little, and the F1 score remains between 85% and 87.9%. This indicates that the model is robust in recognizing and classifying image content and provides reliable results under different random divisions. Two factors may contribute. First, the dataset contains diverse images and descriptions from different museums, which enables the model to learn a wider range of artistic styles and cultural backgrounds. This diversity improves robustness, because the model can adapt to different types of input data and avoid overfitting to a particular type of image. Second, model training also considers user interaction data (such as comments and likes). These data enrich the text input and give the model valuable contextual information about the popularity of museum exhibits and the preferences of the audience. This multimodal input makes the model’s decisions more comprehensive, thus improving recognition accuracy.

To measure how much the CNN model improves the dissemination of the museum’s social media content, indicators related to user interaction and content dissemination are introduced: user participation, content access rate, user retention rate, and content sharing rate. The comparison results are shown in Fig. 8.

In Fig. 8, the significant increase in user participation (66.7%) shows that social media content optimized with the CNN model attracts the audience’s interest and participation. This may be because the model improves the relevance and attractiveness of the content and makes the audience more willing to interact with it, for example by commenting, liking, or participating in online activities. This increase in participation is crucial for strengthening the connection between museums and the public. The increase in content access rate (75%) means that more people have seen the museum’s social media content. This may be because the CNN model tags and classifies content more accurately, so that recommendation algorithms can deliver it to interested users more effectively. In addition, a higher access rate may promote wider word-of-mouth communication and further expand the audience base of the museum’s social media content. The doubling of the user retention rate shows that the audience is not only interested in the museum’s content but also willing to follow the museum’s social media platform over the long term.

Table 5 shows the changes in social media communication effect indicators before and after the application of the CNN model. All indicators improve significantly after the model is applied. User engagement is defined as the proportion of users who interact with social media content, including comments, likes, and shares. The experimental data show that user participation increased from 15% to 25% after the CNN model was applied to optimize the content, a growth rate of 66.7%. Content coverage refers to the proportion of all users that the content reaches. The optimized content coverage increased from 20% to 35%, a growth rate of 75%. User retention rate refers to the proportion of users who continue to engage with social media content over a period of time. In the experiment, the user retention rate increased from 10% to 20%, a growth rate of 100%. Content sharing rate refers to the proportion of users who share content with others. The content sharing rate increased from 5% to 15%, a growth rate of 200%. These results demonstrate the effectiveness of the model in improving the communication effect of museum social media content.
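The growth rates quoted above are relative increases, computed as (after − before) / before, which is why a rise from 15% to 25% (10 percentage points) is reported as 66.7%. The short check below reproduces the four figures from the before and after values given in the text; the function and dictionary names are illustrative.

```python
def relative_increase(before: float, after: float) -> float:
    """Relative growth in percent: (after - before) / before * 100."""
    return (after - before) / before * 100.0


# (before %, after %) pairs as reported in the text.
indicators = {
    "user participation": (15.0, 25.0),
    "content coverage": (20.0, 35.0),
    "user retention": (10.0, 20.0),
    "content sharing": (5.0, 15.0),
}

growth = {
    name: round(relative_increase(before, after), 1)
    for name, (before, after) in indicators.items()
}
for name, rate in growth.items():
    before, after = indicators[name]
    print(f"{name}: {before:.0f}% -> {after:.0f}% "
          f"(+{after - before:.0f} points, {rate}% relative)")
```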

To evaluate the performance of the proposed hybrid CNN model, existing deep learning models are introduced for comparative experiments, including the standard ResNet model and the Inception network. The performance comparison results of the different models on the four museum datasets are shown in Table 6. Comparing against both basic and advanced models makes it possible to quantify the advantages of MuseumCNN in processing multimodal data more clearly. This comparison not only helps identify the potential of the model but also provides a baseline reference for follow-up research.

Table 6 shows that the proposed MuseumCNN model performs significantly better than the ResNet70, Inception71, GAN, and RNN models on all museum datasets. The MuseumCNN model achieves more than 90% accuracy on the datasets of all four museums, which shows its ability to identify and classify social media content. For example, on the dataset of Museum C, its accuracy reaches 94.1%, while the ResNet and Inception models reach only 88.3% and 89.2%, respectively. This shows that MuseumCNN is more reliable in dealing with complex and diverse museum content. Precision reflects the ability of the model to correctly predict positive samples (such as users’ favorite content), while the F1 value takes both precision and recall into account. The F1 value of MuseumCNN is generally higher than that of the other models across museums. For example, it reaches 93.9% on Museum C, which further demonstrates its advantage in balancing precision and recall.

The closer the AUC value is to 1, the stronger the judgment ability of the model is. Among all models, MuseumCNN has the highest AUC value, especially 0.93 in Museum A, indicating that it shows the highest sensitivity and accuracy in the effective classification of social media content. In contrast, although the performance of GAN model is close to MuseumCNN in some cases, it is still not as good as the latter in terms of precision and recall rate, and GAN may face the problem of unstable training in the processing of high-dimensional data. At the same time, the limitations of RNN model in image processing make it weak on all datasets, which once again verifies the importance of choosing a suitable model. Table 7 shows the performance of different models on these indicators:

Table 7 shows the effects of different models in improving user interaction indicators. On these indicators, the MuseumCNN model also performs well and improves significantly on the other models. MuseumCNN improves user engagement by 66.7%, compared with 52.3% for ResNet and 58.7% for Inception. This shows that MuseumCNN attracts and maintains user participation more effectively and improves the interactivity of social media content. The improvement in content access rate is a key index of users’ interest in and attention to museum content. MuseumCNN’s 75.0% improvement on this indicator is much higher than that of the other models, which shows that more users are willing to visit and browse the museums’ social media content and supports the effectiveness of the model. The user retention rate directly reflects users’ willingness to keep following and interacting. With MuseumCNN, the user retention rate increases by 100%, which shows its strong ability to attract and retain users. The improvement in content sharing rate has a direct impact on the exposure and dissemination of museums. The MuseumCNN model improves this indicator by 200%, indicating that users are more willing to share their favorite content, thus broadening the influence of museums on social media platforms.

In contrast, the GAN model is satisfactory in improving user participation and content access rate but remains lower than MuseumCNN overall. Although the RNN model improves the user sharing rate, its performance on the other indicators is still unsatisfactory, especially the participation rate and retention rate. These data further emphasize the importance of choosing the right model to improve user experience and participation. To sum up, MuseumCNN’s performance in accuracy, user interaction, and content communication makes it a powerful tool for museum social media communication, with important guiding significance for museums that use social media to enhance public participation and cultural communication. Future work will further explore how to improve the social media effect of museums.

The experimental time of different models on different datasets is shown in Table 8. The MuseumCNN model shows a clear efficiency advantage on all datasets. Specifically, the experimental time of the MuseumCNN model is 3.81 GPU seconds on dataset A, 4.65 GPU seconds on dataset B, 2.14 GPU seconds on dataset C, and 4.33 GPU seconds on dataset D. In contrast, the ResNet model takes 8.22 GPU seconds on dataset A, 8.91 GPU seconds on dataset B, 7.43 GPU seconds on dataset C, and 8.46 GPU seconds on dataset D. The Inception model takes 6.20 GPU seconds on dataset A, 6.71 GPU seconds on dataset B, 5.86 GPU seconds on dataset C, and 6.34 GPU seconds on dataset D. Comparing these data shows that the MuseumCNN model is faster than both the ResNet and Inception models on every dataset. The gap is largest on dataset C, where the MuseumCNN model needs only 2.14 GPU seconds, significantly less than the other two models. This indicates that the MuseumCNN model is more efficient when dealing with visual content. The experimental times of the ResNet and Inception models also vary across datasets: both are slowest on dataset B (8.91 and 6.71 GPU seconds) and fastest on dataset C (7.43 and 5.86 GPU seconds). This may be related to the characteristics of the visual content in each dataset.
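The timing comparison can be checked with a few lines of arithmetic. The sketch below takes the GPU-second figures quoted in the paragraph above and computes each baseline's time relative to MuseumCNN, along with each model's fastest and slowest dataset; the variable and function names are illustrative.

```python
from typing import Dict

# Experimental times in GPU seconds, as quoted in the text.
times: Dict[str, Dict[str, float]] = {
    "MuseumCNN": {"A": 3.81, "B": 4.65, "C": 2.14, "D": 4.33},
    "ResNet": {"A": 8.22, "B": 8.91, "C": 7.43, "D": 8.46},
    "Inception": {"A": 6.20, "B": 6.71, "C": 5.86, "D": 6.34},
}


def speedup(baseline: str, model: str, dataset: str) -> float:
    """How many times faster `model` is than `baseline` on one dataset."""
    return times[baseline][dataset] / times[model][dataset]


def fastest_dataset(model: str) -> str:
    return min(times[model], key=times[model].get)


def slowest_dataset(model: str) -> str:
    return max(times[model], key=times[model].get)


for baseline in ("ResNet", "Inception"):
    ratios = {d: round(speedup(baseline, "MuseumCNN", d), 2) for d in "ABCD"}
    print(baseline, ratios)

for model in times:
    print(model, "fastest:", fastest_dataset(model), "slowest:", slowest_dataset(model))
```

On these figures, MuseumCNN is roughly 1.9 to 3.5 times faster than ResNet and 1.4 to 2.7 times faster than Inception, with the largest ratios on dataset C.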

Overall, the MuseumCNN model performs well on all datasets, which verifies its effectiveness in disseminating museum social media content and shows its potential in practical application.

This study also compares the proposed MuseumCNN model with recent deep learning models, including recent CNN variants and networks with enhanced attention mechanisms. The performance comparison on dataset C is shown in Table 9. MuseumCNN performs well on all indicators, especially accuracy, precision, recall, and F1 value, which shows its effectiveness in identifying and classifying museum social media content. Its AUC reaches 0.93, indicating that the model discriminates well across classification thresholds. The Transformer model is slightly lower than MuseumCNN in accuracy and F1 value, but its recall is slightly higher, which may indicate an advantage in identifying positive samples. However, its overall performance is still below that of MuseumCNN. The GAN-based model is weaker than MuseumCNN and the other two models on all indicators. A possible reason is that adversarial training allows a GAN-based model to generate high-quality images, but the model may lack sufficient discriminative ability for classification tasks. The Attention-CNN model is close to MuseumCNN in accuracy and AUC but slightly lower in precision and recall. This shows that Attention-CNN can capture key features in visual content but, in some cases, may not fully distinguish positive from negative samples. Overall, MuseumCNN performs best in the identification and classification of museum social media content, and its high accuracy, precision, recall, and F1 value indicate its potential in practical application. The Transformer and Attention-CNN models also perform well but need further optimization in some aspects, and the GAN-based model needs to strengthen its discriminative ability in classification tasks.
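To make the indicators in this comparison concrete, the following minimal sketch computes accuracy, precision, recall, and F1 value from confusion-matrix counts for a binary (positive/negative) task. The counts are hypothetical and chosen only for illustration; they are not taken from Table 9, and the function name `classification_metrics` is an assumption. AUC is omitted because it requires per-sample scores rather than counts.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard binary classification metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts: model_b finds more positives (higher recall)
# at the cost of more false alarms (lower precision, accuracy, and F1).
model_a = classification_metrics(tp=90, fp=5, fn=10, tn=95)
model_b = classification_metrics(tp=94, fp=12, fn=6, tn=88)

for name, m in (("model_a", model_a), ("model_b", model_b)):
    print(name, {k: round(v, 3) for k, v in m.items()})
```

The two hypothetical models show how one model can have higher recall than another while still scoring lower on accuracy and F1 value, the pattern described above for the Transformer model relative to MuseumCNN.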

To further verify the scalability and performance of the MuseumCNN model, experiments are carried out on larger and more diverse datasets: the MODEST Museum Dataset, the Tourism Dataset, the BeijingImageByLabel Dataset, and the datasets used earlier in this study. Testing on these datasets allows a more comprehensive evaluation of the model’s generalization ability and practical application potential. The experimental results are shown in Table 10. MuseumCNN achieves high accuracy on all datasets, especially on the MODEST Museum Dataset and the Tourism Dataset, with accuracy rates of 92.3% and 90.1%, respectively. This shows that MuseumCNN not only has advantages in the identification and classification of museum social media content but also adapts well to other types of cultural and tourism-related image data. ResNet and Inception are also relatively stable across datasets, but their overall performance is below that of MuseumCNN, which further supports the advantage of MuseumCNN in dealing with complex visual content. On the BeijingImageByLabel Dataset, the accuracy of MuseumCNN reaches 89.0%, which shows its strong performance on image data with geographical and cultural characteristics. The experiments also indicate that the model may need to be adjusted and optimized for image data from different sources and of different types. For example, on the Tourism Dataset, the model may need to better capture tourism-related background and contextual information in order to identify and classify image content more accurately. Overall, the performance of MuseumCNN on diverse datasets demonstrates its good generalization ability and practical application potential.

Discussion

The experimental results show that the model achieves high accuracy and precision for different types of museum social media content, especially on the dataset of Museum C. This finding highlights the advantages of the CNN model in processing and analyzing visually rich content. However, the model’s performance fluctuates under different batch size and learning rate settings, suggesting that these parameters need to be carefully tuned in practical application to achieve optimal performance. In addition, a comparison of social media communication before and after the application of the model shows that CNN technology can not only enhance the attractiveness of content and user participation but also significantly improve the user retention rate and content sharing rate by optimizing the content recommendation mechanism. As Mitcham et al.72 and Ohara73 note, content sharing on social media is not only a way to spread information but also a way to build and maintain social relations. These results are consistent with the recent findings of Rejeb et al.74 and Chen et al.75 and emphasize the potential of deep learning technology in improving the efficiency and effectiveness of social media content dissemination. To sum up, although implementing the CNN model faces a series of challenges, its potential for improving the social media communication of museums cannot be ignored.
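The before-and-after comparison can be expressed as a relative increase. The short sketch below recomputes the percentage rises reported in the abstract from the before and after values given there; the function name `relative_increase` and the dictionary layout are illustrative assumptions.

```python
def relative_increase(before, after):
    """Percentage rise from `before` to `after`, relative to `before`."""
    return (after - before) / before * 100


# (before %, after %) pairs as reported in the abstract.
indicators = {
    "user participation": (15, 25),
    "content coverage": (20, 35),
    "user retention rate": (10, 20),
    "content sharing rate": (5, 15),
}

for name, (before, after) in indicators.items():
    rise = relative_increase(before, after)
    print(f"{name}: {before}% -> {after}% ({rise:.1f}% relative rise)")
```

Note that these are relative rises; the absolute changes are 10 to 15 percentage points for each indicator.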

Conclusion

Research contribution

Based on deep mediatization theory and AI technology, this study analyzes and optimizes the communication effectiveness of museum social media content through the construction and application of a CNN model. The main findings are as follows. First, the CNN model can effectively identify and classify the key visual features of museum social media content and provide reliable technical support for automatic content recommendation and optimization. Second, after the CNN model is applied, the user participation, access rate, retention rate, and sharing rate of museum social media content improve significantly, which verifies the application value of deep learning technology in improving museum social media communication. Third, the experimental results further demonstrate the applicability and effectiveness of the CNN model for content dissemination in different types of museums (such as Museum A and Museum D). These findings not only provide a new perspective and technical path for museums’ social media communication but also explore new possibilities for the application of deep learning in cultural communication and education promotion.

Limitation and future work

This study optimizes the communication effect of museum social media content through CNN technology and achieves some results, but it still has limitations. Firstly, this study is mainly based on the social media datasets of four museums. Although these datasets contain rich visual and textual information, they may not fully represent the diversity of all museums. In addition, although the MuseumCNN model performs well in the experiments, it has its own limitations and biases. For example, the model may not accurately identify the characteristics of some cultural heritage items or artworks, which is related to the diversity and representativeness of the training data. Future research will address these problems by increasing the diversity of the datasets and adopting more complex model structures.

Secondly, the GPU resources available for the experiments limit the scale of model training and testing, which in turn constrains the optimization and expansion of the model. The application of the model in an actual museum environment also faces practical challenges. The CNN model performs well in experiments, but it requires substantial computing resources when dealing with large-scale datasets, which is an obstacle for cultural institutions such as museums with limited hardware support. Therefore, future research should consider developing lightweight models or exploring the effective use of cloud services and edge computing technologies to reduce dependence on local computing resources.

Finally, the traditional working mode of museums needs to adapt to the integration of new technologies, which involves personnel training, workflow adjustment, and the adaptation of policies and regulations. User privacy and data security also need special attention. In terms of technology promotion, although this study shows the potential of CNN technology in museum social media communication, its wide application still faces many obstacles. Future research can explore how to apply these technologies to other cultural and educational settings, such as libraries and art exhibitions, to promote cultural communication and education.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author Chao Song on reasonable request via e-mail sc463895@163.com.

References

Zhang, C. The expansion path of government affairs openness in China in the era of deep mediatization. Chin. Admi. 11, 157–159 (2023).

Couldry, N. & Hepp, A. The Mediated Construction of Reality Vol. 7, 34 (Polity Press, Cambridge, 2017).

Hepp, A. Deep Mediatization 5th edn. (Routledge, London & New York, 2020).

Zollo, L., Rialti, R., Marrucci, A. & Ciappei, C. How do museums foster loyalty in tech-savvy visitors? The role of social media and digital experience. Curr. Issues Tour. 25(18), 2991–3008 (2022).

Omar, A., Mahmoud, T. M. & Abd-El-Hafeez, T. Comparative performance of machine learning and deep learning algorithms for Arabic hate speech detection in OSNs. In Proceedings of the International Conference on Artificial Intelligence and Computer Vision (AICV2020) 247–257 (Springer, 2020).

Omar, A., Mahmoud, T. M., Abd-El-Hafeez, T. & Mahfouz, A. Multi-label Arabic text classification in online social networks. Inf. Syst. 100, 101785 (2021).

Naranjo-Torres, J. et al. A review of convolutional neural network applied to fruit image processing. Appl. Sci. 10(10), 3443 (2020).

Abdou, M. A. Literature review: Efficient deep neural networks techniques for medical image analysis. Neural Comput. Appl. 34(8), 5791–5812 (2022).

Nirthika, R., Manivannan, S., Ramanan, A. & Wang, R. Pooling in convolutional neural networks for medical image analysis: A survey and an empirical study. Neural Comput. Appl. 34(7), 5321–5347 (2022).

Kefi, H., Besson, E., Zhao, Y. & Farran, S. Toward museum transformation: From mediation to social media-tion and fostering omni-visit experience. Inf. Manag. 61(1), 103890 (2024).

Oztig, L. I. Holocaust museums, Holocaust memorial culture, and individuals: A constructivist perspective. J. Mod. Jew. Stud. 22(1), 62–83 (2023).

Soulard, J., Stewart, W., Larson, M. & Samson, E. Dark tourism and social mobilization: Transforming travelers after visiting a Holocaust museum. J. Travel Res. 62(4), 820–840 (2023).

Zou, Y., Xiao, H. & Yang, Y. Constructing identity in space and place: Semiotic and discourse analyses of museum tourism. Tour. Manag. 93, 104608 (2022).

Park, E., Kim, S. & Xu, M. Hunger for learning or tasting? An exploratory study of food tourist motivations visiting food museum restaurants. Tour. Recreat. Res. 47(2), 130–144 (2022).

Ruggiero, P., Lombardi, R. & Russo, S. Museum anchors and social media: Possible nexus and future development. Curr. Issues Tour. 25(18), 3009–3026 (2022).

Suh, J. Revenue sources matter to nonprofit communication? An examination of museum communication and social media engagement. J. Nonprofit Public Sect. Mark. 34(3), 271–290 (2022).

Balcells, L., Palanza, V. & Voytas, E. Do transitional justice museums persuade visitors? Evidence from a field experiment. J. Polit. 84(1), 496–510 (2022).

Agostino, D. & Costantini, C. A measurement framework for assessing the digital transformation of cultural institutions: The Italian case. Meditari Account. Res. 30(4), 1141–1168 (2022).

Vacalopoulou, A., Markantonatou, S., Toraki, K. & Minos, P. Openly available resource for the management and promotion of museum exhibits: The case of Greek museums with folk exhibits. Int. J. Comput. Methods Herit. Sci. (IJCMHS) 3(1), 33–51 (2019).

Taormina, F. & Baraldi, S. B. Museums and digital technology: A literature review on organizational issues. Eur. Plan. Stud. 30(9), 1676–1694 (2022).

Marini, C. & Agostino, D. Humanized museums? How digital technologies become relational tools. Mus. Manag. Curatorship 37(6), 598–615 (2022).

Fernandez-Lores, S., Crespo-Tejero, N. & Fernández-Hernández, R. Driving traffic to the museum: The role of the digital communication tools. Technol. Forecast. Soc. Change 174, 121273 (2022).

Wu, Y., Jiang, Q., Liang, H. E. & Ni, S. What drives users to adopt a digital museum? A case of virtual exhibition hall of National Costume Museum. Sage Open 12(1), 21582440221082104 (2022).

Xu, W., Dai, T. T., Shen, Z. Y. & Yao, Y. J. Effects of technology application on museum learning: A meta-analysis of 42 studies published between 2011 and 2021. Interact. Learn. Environ. 31(7), 4589–4604 (2023).

Patrucco, G. & Setragno, F. Multiclass semantic segmentation for digitisation of movable heritage using deep learning techniques. Virtual Archaeol. Rev. 12(25), 85–98 (2021).

Sizyakin, R. et al. Crack detection in paintings using convolutional neural networks. IEEE Access 8, 74535–74552 (2020).

Younis, S. et al. Taxon and trait recognition from digitized herbarium specimens using deep convolutional neural networks. Bot. Lett. 165(3–4), 377–383 (2018).

Joshi, M. R. et al. Auto-colorization of historical images using deep convolutional neural networks. Mathematics 8(12), 2258 (2020).

Cetinic, E., Lipic, T. & Grgic, S. Learning the principles of art history with convolutional neural networks. Pattern Recognit. Lett. 129, 56–62 (2020).

Ioannakis, G., Bampis, L. & Koutsoudis, A. Exploiting artificial intelligence for digitally enriched museum visits. J. Cult. Herit. 42, 171–180 (2020).