Abstract

This article details the development of a next-word prediction model utilizing federated learning and introduces a mechanism for detecting backdoor attacks. Federated learning enables multiple devices to collaboratively train a shared model while retaining data locally. However, this decentralized approach is susceptible to manipulation by malicious actors who control a subset of participating devices, thereby biasing the model’s outputs on specific topics, such as a presidential election. The proposed detection mechanism aims to identify and exclude devices with anomalous datasets from the training process, thereby mitigating the influence of such attacks. By using the example of a presidential election, the study demonstrates a positive correlation between the proportion of compromised devices and the degree of bias in the model’s outputs. The findings indicate that the detection mechanism effectively reduces the impact of backdoor attacks, particularly when the number of compromised devices is relatively low. This research contributes to enhancing the robustness of federated learning systems against malicious manipulation, ensuring more reliable and unbiased model performance.

Introduction

Federated learning enables decentralized machine learning across many devices while maintaining user privacy, a method used by companies such as Google for applications such as input method editors (IMEs). However, the training process requires connections to numerous devices, which makes it susceptible to attacks in which malicious actors corrupt the model by introducing tainted datasets; these are also known as adversarial attacks. Research has highlighted the vulnerabilities inherent in federated learning, particularly when a subset of devices is compromised to inject harmful data, potentially leading to misleading outcomes in the trained models and on client devices. Thus, it is crucial to strike a balance between ensuring data privacy and maintaining the integrity, accuracy, and efficiency of the model.

In the context of political events like presidential elections, this research proposes constructing a next-word prediction model using federated learning to simulate a backdoor attack detection mechanism. This approach aims to evaluate the performance of the next-word prediction model effectively.

The implications of federated learning extend beyond technical challenges; they also intersect with broader issues such as cybersecurity and employment opportunities in an increasingly automated world. As highlighted in various studies, there is a pressing need to address these vulnerabilities while considering the socio-technological landscape shaped by advancements in artificial intelligence and robotics1,2,3,4,5. Additionally, understanding the security challenges within the Internet of Things (IoT) is vital, as these devices often lack robust security measures, exposing them to potential exploitation3. The interplay between cybercrime and cybersecurity strategies further complicates this landscape, emphasizing the necessity for comprehensive research and proactive measures in both technology and policy domains4,5.

Contributions

The following are the significant contributions of this study:

- Construct a novel next-word prediction model, built on Recurrent Neural Networks (RNNs), for detecting backdoor attacks.
- Propose a backdoor attack detection mechanism.
- Investigate the efficiency and performance of the model in a real-world setting through experiments.

The organization of this work is as follows: Section “Literature review” surveys the literature on current cyber threats related to federated learning. Section “Background” provides the foundational context, encompassing the model for next-word prediction, federated learning, backdoor federated learning, and the dataset sources categorized as “Good” and “Bad.” Section “Implementation” illustrates the implementation using an example from a presidential election. Section “Verification and results” details the verification process and the results of the proposed banning mechanism. Finally, Section “Conclusion” presents the conclusion of the proposed banning mechanism and discusses potential future work.

Literature review

Recent studies have proposed linguistic and sentiment analysis as methods to detect and mitigate fake information and hate speech, as shown in Table 1. Linguistic features and word connections have been utilized to identify fake information6,7, while sentiment analysis has been applied to detect bots spreading hate speech on platforms like Twitter8. Extensive research has highlighted the prevalence and impact of cyberbullying, linking it to adverse mental health outcomes such as anxiety, depression, and low self-esteem9,10. Addressing cyberbullying requires a comprehensive approach encompassing education, prevention, and intervention strategies10. Machine learning algorithms have been explored for their potential to detect cyberbullying on social media platforms11,12,13,14,15.

Federated learning is a promising approach to maintaining privacy in machine learning applications, with research extending its use to healthcare, secure internet of vehicles, drones, and blockchain16,17,18,19,20,21. Nevertheless, maintaining data privacy while ensuring model accuracy and efficiency remains a challenge. Solutions such as differential privacy and secure aggregation have been proposed to address these issues22,23,24,25. This research examines the efficacy of a sentiment analysis-based banning mechanism in mitigating the impact of malicious devices on federated learning models. The proposed mechanism is evaluated by preparing devices with both “good” and “bad” datasets and incorporating them into the training stages, where the banning mechanism proposed in this article is tested. The effectiveness of the banning mechanism is assessed based on the sentiment of sentences generated by the aggregated model, with and without the banning mechanism in place. Results indicate that the banning mechanism successfully detects negative words and protects the model from abnormal dataset attacks when a small proportion of devices are compromised. The parameters of the proposed algorithms were chosen primarily on the basis of accuracy.

Background

Threats to validity

Researchers in26 investigated 57 antimalware products using approximately 5,000 malware samples and found that most tools struggled to detect transformed variants of known malware. This highlights a significant gap in identifying new or modified threats. Additionally, web-based attacks, such as SQL injections, which are often of lesser concern and overlooked by traditional antimalware solutions, can introduce vulnerabilities to web applications27,28. According to29, contemporary cybersecurity faces two major challenges: the use of machine learning to create novel, dynamic malware and the emergence of new attacks with distinct features that evade current classifiers, reducing detection rates and increasing false alarms. This paper proposes a possible solution to address this problem.

Model used to build the next-word prediction

RNNs are widely used in machine learning for tasks involving sequential data, such as next-word prediction, due to their ability to leverage information from previous outputs. Long Short-Term Memory (LSTM) models, a specialized type of RNN, are particularly effective for these tasks. LSTMs use mechanisms to incorporate previous output results as parameters, improving the prediction of subsequent words.

LSTM models outperform standard RNNs due to their unique architecture, which addresses the limitations of traditional RNNs, such as vanishing and exploding gradients. These issues hinder an RNN’s ability to retain information over long sequences. In contrast, LSTMs utilize memory cells and gating mechanisms to manage information over extended periods, making them ideal for tasks requiring the preservation of long-term dependencies.

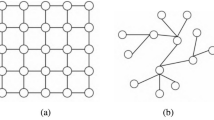

Federated learning

Traditional machine learning relies on a centralized server for data storage and model training. In contrast, federated learning is a decentralized approach where client devices participate in model training, thereby reducing privacy concerns2,3. The central server aggregates locally trained models from client devices and performs model averaging. Google utilizes federated learning in applications like Gboard, an input method editor (IME) for Android. This approach enhances predictive capabilities while preserving user privacy by minimizing the transfer of sensitive data to a centralized server.

Backdoor federated learning

Federated learning entails a collaborative learning process involving multiple client devices. Recent research has explored the vulnerability of federated learning models to backdoor attacks. Specifically, in4, an empirical study investigated the susceptibility of a deep neural network used for image classification to such an attack.

Dataset sources for “Good” and “Bad”

In recent presidential elections, the candidates’ words were widely commented on in forum discussions, news headlines, commentaries, and social media. In this article, a presidential election is selected, and the actual speeches of the candidates are extracted and prepared as the learning datasets used on the devices. One candidate is selected and used as an example.

Implementation

Dataset preparation and preprocessing

Fundamental and abnormal datasets were selected, as delineated in Table 2. The fundamental datasets primarily enable the predictive model to learn basic English word structures and concatenation patterns. These datasets include passages of sentences that provide sufficient data for the model to discern patterns and common linkages among words.

The abnormal datasets draw from two distinct sources. The first source comprises transcripts from a target candidate (“Target”), which predominantly contain positive, complimentary messages about the “Target.” These datasets are utilized to introduce positive sentiment linkages toward the “Target.” The second source includes two news pieces that are critical of the “Target.” Unlike overtly negative statements such as “Target is poor” or “Target is a bad guy,” which can easily be flagged as spam, these news articles contain more nuanced, meaningful sentences to mimic typical human language. These datasets are employed to introduce negative sentiment linkages toward the “Target.”

The following stages outline the process for cleaning and integrating datasets into the system to enhance learning efficiency:

- Convert the numbers to %number% to weaken the relationships that arise from differences between numbers.
- Remove punctuation marks, for example, commas (,), full stops (.), and brackets ((), [], {}), to eliminate the word variation caused by punctuation attached to a word. Apostrophes (’) are not removed because they carry meaning in contractions and possessives, for example, “I’m” and “Target’s.” Dashes (-) are also not removed because they sometimes carry meaning, as in hyphenated words such as “well-remembered.”
- Change all words to lower-case characters to remove the difference between capital and small letters.

Algorithm 1 The algorithm for every communication round in the federated learning model.

Algorithm 2 The algorithm for detection and banning when performing model aggregation on the main server.

Because the datasets are large, memory usage and training time must be limited. Only the first 500 lines of each text file are considered, and 300 of those lines are randomly sampled for each dataset so that the datasets are independent of one another.
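The cleaning stages and the line sampling described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' code: the function names `clean_line` and `sample_dataset`, the exact punctuation set, and the regular expression used to recognise numbers are assumptions made for the example.

```python
import random
import re

# Assumed punctuation set: only the marks named in the text are stripped.
# Apostrophes and dashes are deliberately left in place.
PUNCTUATION = re.compile(r"[,.()\[\]{}]")
# Assumed number pattern: integers and simple decimals.
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def clean_line(line: str) -> str:
    """Apply the three cleaning stages to one line of text."""
    line = NUMBER.sub("%number%", line)  # 1. mask numbers
    line = PUNCTUATION.sub("", line)     # 2. drop the listed punctuation
    return line.lower()                  # 3. lower-case everything


def sample_dataset(lines, head=500, keep=300, seed=None):
    """Randomly keep `keep` lines taken from the first `head` lines."""
    pool = lines[:head]
    rng = random.Random(seed)
    return rng.sample(pool, min(keep, len(pool)))
```

Numbers are masked before punctuation is removed so that a decimal such as 3.5 becomes a single %number% token rather than being merged into 35.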

Next-word prediction model

In the model, a word is treated as the fundamental unit, given that words have stronger connections to subsequent words compared to sequences of characters. Tokenization is performed on the words, and an initial dictionary for tokenization is prepared, which remains static during the learning process. The language model learned from Webster’s dictionaries serves as the starting point.

The dictionary is divided equally into 20 divisions in preparation. Each division undergoes training for 2500 epochs, and this process is repeated for all 20 divisions. The prepared dictionary is subsequently used in the experiment. For the next-word prediction model, an LSTM model is employed as a type of RNN. The previous output is utilized for the next prediction. The model comprises 12 hidden states and one recurrent layer, with both input and output sizes set to eight. For each sentence, every eight words are segmented for learning. For instance, the sentence “It would be one thing if Target misspoke one or two” is divided into eight-word segments and processed by the system.
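To make the eight-word segmentation concrete, the sketch below splits a sentence into windows of eight words. The text does not say whether the segments overlap, so the sliding window with a configurable `stride` is an assumption, as is the function name `eight_word_segments`.

```python
def eight_word_segments(sentence: str, size: int = 8, stride: int = 1):
    """Split a sentence into word windows of length `size`.

    stride=1 gives overlapping (sliding) windows; stride=size gives
    non-overlapping chunks. Which one the original system uses is not
    stated, so both are supported here.
    """
    words = sentence.split()
    if len(words) < size:
        return []  # too short to form a full segment
    return [words[i:i + size] for i in range(0, len(words) - size + 1, stride)]


example = "It would be one thing if Target misspoke one or two"
segments = eight_word_segments(example)
```

With the 11-word example sentence from the text, a stride of one yields four overlapping segments, whereas a stride of eight yields a single segment and leaves the last three words unused.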

In the federated learning round, 10 out of an initial 50 clients are selected for training (each client possesses distinct datasets, which are elaborated upon later) with parameters set at 5 epochs and a learning rate of 0.001. The client models are then transmitted to the server for aggregation. The server averages the ten client models and disseminates the updated model to all clients for the subsequent round. The federated learning algorithm is detailed in Algorithm 1. The time complexity of Algorithm 1 is approximately O(total_r × (10 + Algorithm 2 + num_d)).
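A single communication round of this kind can be sketched as follows. The sketch assumes that a model is represented as a flat list of floating-point parameters and that all clients are weighted equally; `local_train` is a hypothetical callable standing in for the five local epochs at a learning rate of 0.001, and the function names are illustrative only.

```python
import random


def average_models(models):
    """Element-wise mean of equally weighted client parameter vectors."""
    n = len(models)
    return [sum(values) / n for values in zip(*models)]


def communication_round(global_model, pool, local_train, rng, per_round=10):
    """Select clients, train each one locally, and average the results.

    `local_train(model_copy, client)` is a hypothetical callable that
    returns the client's locally trained parameters.
    """
    selected = rng.sample(pool, min(per_round, len(pool)))
    client_models = [local_train(list(global_model), client) for client in selected]
    return average_models(client_models), selected


# Toy usage: 50 clients, each "training" by shifting the weights by its id.
rng = random.Random(0)
new_model, chosen = communication_round(
    [0.0, 1.0], list(range(50)), lambda m, c: [w + c for w in m], rng
)
```

In a PyTorch setting the same averaging would be applied to each tensor in the clients' state dictionaries rather than to a flat list.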

Fig. 1 Flowchart of Algorithm 1.

The flowchart in Fig. 1 delineates a systematic approach for training a global model utilizing data from selected devices within a specified training devices pool, referred to as tdp. The process commences with the initialization of a counter variable i set to zero and another variable dev_num initialized to one. Subsequently, ten devices are randomly selected from the pool. Local training is then assigned based on the data from the selected device. A check follows to determine whether dev_num exceeds ten; if not, dev_num is incremented by one, prompting the repetition of the training assignment. Conversely, upon surpassing this threshold, the next phase involves constructing a global model derived from the accumulated results of the device datasets. An algorithm is subsequently employed to assess the model for any abnormalities. The validated global model is then stored in a dataset denoted as \(\overline{dts}\). Lastly, a check is conducted to ascertain whether the counter i matches a predetermined total count, total_r; if not, i is incremented, and the process reiterates. This iterative framework ensures that the global model is continuously refined and updated until the predetermined number of rounds, total_r, has been completed.

Detection and banning mechanism

For each selected client model, the difference between the original model (before server aggregation) and the client model is calculated and averaged, which gives the mean deviation of that client. The absolute values of the differences are used, and each client’s mean deviation is compared with the average of all mean deviations. If a client’s mean deviation is greater than (average of all mean deviations + k), where k is the ban threshold, set to 0.009 in the experiment, the device is banned from joining the learning process in the next round. To avoid banning all devices from the learning process in a round, at most (Number_of_client_list_remaining-1)/10 machines are allowed to be banned. The banning mechanism is described in Algorithm 2. The time complexity of Algorithm 2 is approximately O(1).
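The banning rule can be illustrated with a short Python sketch. Several details are assumptions made for the example rather than facts from Algorithm 2: models are flat lists of floats, the mean deviation is taken as the mean absolute parameter difference, the cap (Number_of_client_list_remaining-1)/10 is rounded down, and when more clients exceed the threshold than the cap allows, those with the largest deviation are banned first.

```python
def mean_deviation(global_model, client_model):
    """Mean absolute difference between two parameter vectors."""
    diffs = [abs(g - c) for g, c in zip(global_model, client_model)]
    return sum(diffs) / len(diffs)


def clients_to_ban(global_model, client_models, pool_size, k=0.009):
    """Return the ids of clients whose deviation exceeds (average + k).

    client_models maps a client id to its parameter list; pool_size is
    the number of clients still in the training pool.
    """
    deviations = {
        cid: mean_deviation(global_model, model)
        for cid, model in client_models.items()
    }
    threshold = sum(deviations.values()) / len(deviations) + k
    flagged = sorted(
        (cid for cid, dev in deviations.items() if dev > threshold),
        key=lambda cid: deviations[cid],
        reverse=True,  # assumed tie-break: worst offenders first
    )
    max_bans = (pool_size - 1) // 10  # assumed integer division
    return flagged[:max_bans]
```

Because the threshold is relative to the average deviation of the selected clients, a round in which most clients are compromised raises the average and can push honest clients above it instead, which matches the limitation reported in the results.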

The flowchart in Fig. 2 illustrates a systematic approach for detecting and banning devices based on how far their models deviate from the global model during the model aggregation process on the main server. The algorithm begins with the initialization of an array named dif to store differences between the global model and individual device models. Following this, a variable dev_num is set to one. For each selected device, the difference between the global model and that device’s model is stored in the corresponding entry of dif. A check is then performed to determine if dev_num has reached ten; if not, dev_num is incremented, and the process repeats until all devices have been assessed. Once the loop completes, the mean of the values stored in the dif array is assigned to a variable dev_threshold. The algorithm then reinitializes dev_num to one and checks whether the difference for each device exceeds a calculated threshold (defined as dev_threshold + k). If a device’s difference surpasses this threshold, it is removed from the training devices pool tdp. The algorithm iterates this process until all devices have been evaluated, concluding the operation. This ensures that only devices meeting the criterion remain in the training pool for subsequent model updates.

Fig. 2 Flowchart of Algorithm 2.

Verification and results

Testing setup and expectations

Federated learning was carried out for 80 communication rounds by selecting ten machines from the pool (which contains different datasets representing different user behaviors) in each round. Local learning was carried out on each of the ten devices. Next, the ten models from the selected devices were averaged on the server. After each communication round, the “Target” was used as the starting point from which the model predicted the next eight words. This was performed five times, so that, starting with the “Target,” five sentences with eight words each were predicted. Subsequently, a sentiment analysis library was used to determine whether the predicted sentences contained positive, negative, or neutral feelings. The percentage of positive, negative, and neutral feelings was recorded from the 0th round up to the 79th round, out of a total of 80 communication rounds.

To test the trained model, a sentiment analysis using the Natural Language Toolkit (NLTK) with the “Valence Aware Dictionary and sEntiment Reasoner” (VADER) sentiment lexicon30 was performed to assess the perception of the generated output sentences. The experiment was conducted in the following environment:

- PyTorch was used as the library.
- A server with two RTX 2080 graphics cards was used.
- The devices in the model do not differ from one another, except in the datasets they carry.
- It is assumed that there is no network latency or data loss due to the network.
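The per-round sentiment tally described in the testing setup can be sketched as follows. The article does not state how a sentence is assigned to the positive, negative, or neutral class, so the ±0.05 cutoff on VADER's compound score (the convention commonly suggested for VADER) is an assumption, and `compound_score` is a hypothetical callable. With NLTK it could be `lambda s: SentimentIntensityAnalyzer().polarity_scores(s)["compound"]` once the `vader_lexicon` resource has been downloaded.

```python
from collections import Counter


def label_from_compound(compound, cutoff=0.05):
    """Map a compound score in [-1, 1] to a sentiment label (assumed cutoff)."""
    if compound >= cutoff:
        return "positive"
    if compound <= -cutoff:
        return "negative"
    return "neutral"


def sentiment_percentages(sentences, compound_score):
    """Percentage of positive, negative, and neutral sentences.

    `compound_score` is a hypothetical callable returning a compound
    sentiment score for one sentence.
    """
    if not sentences:
        raise ValueError("at least one sentence is required")
    counts = Counter(label_from_compound(compound_score(s)) for s in sentences)
    total = len(sentences)
    return {
        label: 100.0 * counts[label] / total
        for label in ("positive", "negative", "neutral")
    }
```

In the experiment this tally would be computed over the five eight-word sentences generated from the “Target” after each communication round.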

Distribution of the datasets

Four setups with 50 machines were used for the model. In each communication round, 10 of the 50 machines were randomly selected and used for averaging. In Set 1, the percentage of the “Hate-Target” data was limited to 20% to test whether the proposed banning mechanism can spot the devices with more non-neutral feelings and ban them from joining the communication round. The abnormal datasets exhibited a higher deviation compared to the other datasets. The percentage of “Hate-Target” data was then increased gradually across the sets, and bad feelings in the generated sentences were predicted to increase as the percentage of “Hate-Target” data increased. The distribution of the dataset in the device sets is shown in Table 3.

The effect of the “Hate-Target” data on the perception of the generated sentences was observed. Identical sets (Sets 1–4) were run with and without the banning mechanism to assess the efficiency of the mechanism. The percentage of bad feelings toward the generated sentence differences on the bad devices remained the same, and it was also used to assess the efficiency of the banning mechanism.

Results

The training was performed with 143 trials in total. The average running time for a trial was 12 h and 12 min (43918.142 s). On average, each set was run 17 to 18 times. During training, the curve representing the percentage of bad feelings stabilizes around Round 50. Figure 3a, b show the variation in the bad feelings of the sentences generated by the trained model during the training period. Figure 3a shows the results without the banning mechanism, whereas Fig. 3b shows the results when the mechanism was applied. The curve stabilizes after approximately 80 rounds of communication, indicating that the trained model is stable.

Figure 3a, b show that after 80 communication rounds, the average percentage of the bad feelings generated by the model has increased. This suggests a positive relationship between the percentage of machines containing bad datasets and the percentage of bad feelings generated by the model. The versions with and without the banning mechanism both support the hypothesis that increasing the percentage of “Hate-Target” data also increases the bad feeling of the generated sentences that start from the “Target.”

Figure 4a presents the average percentage of sentences exhibiting negative sentiment generated by the models in the final round. Figure 4b illustrates the percentage reduction in devices containing undesirable datasets, comparing scenarios with and without the banning mechanism alongside control sets. The results depicted in Fig. 4 validate the efficacy of the banning mechanism. In particular, the banning mechanism reduces the proportion of compromised devices within the learning pool when compared to scenarios without its application. However, when the percentage of “Hate-Target” data increases, the banning mechanism tends to ban more devices with normal datasets, even though the initial models were trained using the “Word explanation and example sentence parts in the Webster’s dictionaries.” This aggravates the influence of the bad devices, which aim to attack the whole model, and makes it generate more “Hate-Target” sentences than the model without the banning mechanism, but fewer than the one trained with a majority of normal datasets. Therefore, if most of the devices contain bad datasets, this method has limitations (Table 4).

The WFB method exhibits limitations in its accuracy when detecting trigger forgery attacks, as evidenced by the associated p-values35. This limitation ultimately results in verification outcomes being categorized as “False,” indicating that the method was ineffective in identifying trigger forgery attacks under the examined conditions. In contrast, the model proposed in this research demonstrates the efficacy of the banning mechanism in significantly reducing the presence of malicious devices within datasets. Furthermore, when compared to the MM-BD approach, the findings presented in Fig. 4a,b suggest that the mechanism developed in this study is capable of effectively mitigating backdoor attacks, even in complex all-to-all scenarios.

Conclusion

This study demonstrates the positive correlation between the proportion of attack datasets and negative sentiment toward targeted words in federated learning. Federated learning is susceptible to data poisoning, where attackers manipulate datasets on participating devices. To counteract this, a banning mechanism is proposed to exclude compromised devices, effectively reducing their influence when their proportion is small.

However, the mechanism may mistakenly ban legitimate devices if the number of compromised devices becomes too large. Enhancements such as storing more datasets on the aggregated server, validating the global model against these datasets, and incorporating advanced tools for detecting word embeddings and sentiment analysis can improve the mechanism’s effectiveness. These refinements will bolster the integrity and reliability of federated learning systems.

Combining deep learning and machine learning techniques can significantly enhance the performance, robustness, and security of federated learning systems. By leveraging advanced models for word embeddings, sentiment analysis, anomaly detection, and privacy preservation, the proposed banning mechanism can effectively mitigate the impact of malicious devices, making federated learning systems more resilient to attacks and capable of providing accurate and trustworthy results.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Shaukat, K. et al. A review of time-series Anomaly detection techniques: a step to future perspectives. Adv. Intell. Syst. Comput. 865–877. https://doi.org/10.1007/978-3-030-73100-7_60 (2021).

Palthya, R., Barmavat, B., Prakash, K. & Bellevue, S. The impact Artificial Intelligence (A.I) on healthcare: opportunities & future challenges. Int. J. Sci. Res. (IJSR) 10, 1655–1661 (2021).

Shaukat, K. et al. A Review on Security Challenges in Internet of Things (IoT). 2021 26th International Conference on Automation and Computing (ICAC), Portsmouth, United Kingdom 1–6. https://doi.org/10.23919/ICAC50006.2021.9594183 (2021).

Shaukat, K., Rubab, A., Shehzadi, I. & Iqbal, R. A socio-technological analysis of cyber crime and cyber security in Pakistan. Transylv. Rev. (2017).

Tariq, U. et al. A critical cybersecurity analysis and future research directions for the internet of things: A comprehensive review. Sensors 23, 4117 (2023).

Juvonen, J. & Gross, E. F. Extending the school grounds?- Bullying experiences in cyberspace. J. Sch. Health 78(9), 496–505 (2008).

Yang, Q., Liu, Y., Chen, T. & Tong, Y. Federated machine learning: concept and applications. ACM Trans. Intell. Syst. Technol. 10(2), 1–19 (2019).

Gu, T., Liu, K., Dolan-Gavitt, B. & Garg, S. BadNets: Evaluating backdooring attacks on deep neural networks. IEEE Access 7, 47230–47244 (2019).

Li, Z., Sharma, V. & Mohanty, S. P. Preserving data privacy via federated learning: challenges and solutions. IEEE Consum. Electron. Mag. 9(3), 8–16 (2020).

Verma, P. K., Agrawal, P., Amorim, I. & Prodan, R. WELFake: Word embedding over linguistic features for fake news detection. IEEE Trans. Comput. Soc. 8(4), 881–893 (2021).

Seddari, N. et al. A hybrid linguistic and knowledge-based analysis approach for fake news detection on social media. IEEE Access 10, 62097–62109 (2022).

Bailurkar, R. & Raul, N. Detecting bots to distinguish hate speech on social media, presented at the 12th Int. Conf. Computing Communication and Networking Technologies (ICCCNT) 1–5. https://doi.org/10.1109/ICCCNT51525.2021.9579883 (2021).

Kowalski, R. M., Giumetti, G. W., Schroeder, A. N. & Lattanner, M. R. Bullying in the digital age: a critical review and meta-analysis of cyberbullying research among youth. Psychol. Bull. 140(4), 1073–1137 (2014).

Ning, H., Dhelim, S., Bouras, M. A., Khelloufi, A. & Ullah, A. Cyber-syndrome and its formation, classification, recovery and prevention. IEEE Access 6, 35501–35511 (2018).

Patchin, J. W. & Hinduja, S. Deterring teen bullying: assessing the impact of perceived punishment from police, schools, and parents. Youth Violence Juv Justice 16(2), 190–207 (2018).

Murshed, B. A. H., Abawajy, J., Mallappa, S., Saif, M. A. N. & Al-Ariki, H. D. E. DEA-RNN: a hybrid deep learning approach for cyberbullying detection in Twitter social media platform. IEEE Access. 10, 25857–25871 (2022).

Akhter, M. P., Jiangbin, Z., Naqvi, I. R., AbdelMajeed, M. & Zia, T. Abusive language detection from social media comments using conventional machine learning and deep learning approaches. Multimed. Syst. 28(6), 1925–1940 (2022).

Mullah, N. S. & Zainon, W. M. N. W. Advances in machine learning algorithms for hate speech detection in social media: a review. IEEE Access 9, 88364–88376 (2021).

Fazil, M. & Abulaish, M. A hybrid approach for detecting automated spammers in Twitter. TIFS 13(11), 2707–2719 (2018).

Faraz, A., Mounsef, J., Raza, A. & Willis, S. Child safety and protection in the online gaming ecosystem. IEEE Access 10, 115895–115913 (2022).

Qayyum, A., Ahmad, K., Ahsan, M. A., Al-Fuqaha, A. & Qadir, J. Collaborative federated learning for healthcare: multi-Modal COVID-19 diagnosis at the edge. IEEE Open J. Comput. Soc. 3, 172–184 (2022).

Thakur, A., Sharma, P. & Clifton, D. A. Dynamic neural graphs based federated reptile for semi-supervised multi-tasking in healthcare applications. IEEE J. Biomed. Health. Inform. 26(4), 1761–1772 (2022).

Ferrag, M. A., Friha, O., Maglaras, L., Janicke, H. & Shu, L. Federated deep learning for cyber security in the internet of things: concepts, applications, and experimental analysis. IEEE Access. 9, 138509–138542 (2021).

Majeed, U., Khan, L. U., Hassan, S. S., Han, Z. & Hong, C. S. FL-incentivizer: FL-NFT and FL-tokens for federated learning model trading and training. IEEE Access 11, 4381–4399 (2023).

Huang, W. et al. FedDSR: daily schedule recommendation in a federated deep reinforcement learning framework. IEEE Trans. Knowl. Data Eng. 35(4), 3912–3924 (2023).

Zhang, X. et al. Efficient federated learning for cloud-based AIoT applications. IEEE TCAD 40(11), 2211–2223 (2021).

Yi, Z. et al. A stackelberg incentive mechanism for wireless federated learning with differential privacy. IEEE Wirel. Commun. Lett. 11(9), 1805–1809 (2022).

Zhang, L., Zhu, T., Xiong, P., Zhou, W. & Yu, P. S. A robust game-theoretical federated learning framework with joint differential privacy. IEEE Trans. Knowl. Data Eng. 35(4), 3333–3346 (2023).

Kalapaaking, A. P. et al. Blockchain-based federated learning with secure aggregation in trusted execution environment for internet-of-things. IEEE Trans. Industr Inf. 19(2), 1703–1714 (2023).

Domingo-Ferrer, J., Blanco-Justicia, A., Manjón, J. & Sánchez, D. Secure and privacy-preserving federated learning via co-utility. IEEE Internet Things J. 9(5), 3988–4000 (2022).

Canfora, G., Di Sorbo, A., Mercaldo, F. & Visaggio, C. A. Obfuscation techniques against signature-based detection: a case study. 2015 Mob. Syst. Technol. Workshop (MST). https://doi.org/10.1109/mst.2015.8 (2015).

Shaukat, K., Luo, S. & Varadharajan, V. A novel machine learning approach for detecting first-time-appeared malware. Eng. Appl. Artif. Intell. 131, 107801 (2024).

Bokolo, B. G., Chen, L. & Liu, Q. Detection of web-attack using Distilbert, RNN, and LSTM. 11th International Symposium on Digital Forensics and Security (ISDFS) 1–6. https://doi.org/10.1109/isdfs58141.2023.10131822 (2023).

Shaukat, K., Luo, S., Varadharajan, V., Hameed, I. A. & Xu, M. A survey on machine learning techniques for cyber security in the last decade. IEEE Access. 8, 222310–222354 (2020).

Hutto, C. & Gilbert, E. VADER: a parsimonious rule-based model for sentiment analysis of social media text. ICWSM 8(1), 216–225 (2014).

Shao, S. et al. WFB: watermarking-based copyright protection framework for federated learning model via blockchain. Sci. Rep. 14, 19453. https://doi.org/10.1038/s41598-024-70025-1 (2024).

Wang, H. et al. MM-BD: post-training detection of backdoor attacks with arbitrary backdoor pattern types using a maximum margin statistic. 2024 IEEE Symp. Secur. Priv. (SP) 1994–2012. https://doi.org/10.1109/sp54263.2024.00015 (2024).

Funding

The work was supported by the Research Matching Grant Scheme (RMGS) from the Research Grants Council of the Hong Kong Special Administrative Region, China.

Author information

Contributions

All authors contributed to the study conception and design. Jimmy K. W. Wong: Methodology, Software, Investigation, Formal Analysis, Writing. Ki Ki Chung: Formal Analysis, Writing. Yuen Wing Lo: Methodology, Investigation, Visualization, Writing. Chun Yin Lai: Verification. Steve W. Y. Mung: Investigation, Resources, Funding Acquisition, Supervision. Jimmy K. W. Wong, Ki Ki Chung and Yuen Wing Lo contributed equally to the manuscript and shared first authorship.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wong, J.K.W., Chung, K.K., Lo, Y.W. et al. Practical Implementation of Federated Learning for Detecting Backdoor Attacks in a Next-word Prediction Model. Sci Rep 15, 2328 (2025). https://doi.org/10.1038/s41598-024-82079-2

DOI: https://doi.org/10.1038/s41598-024-82079-2