Abstract

We introduce efficient tensor network models for sequence processing motivated by correspondence to probabilistic graphical models, interpretability and resource compression. Inductive bias is introduced via network architecture as motivated by correlation and compositional structure in the data. We create expressive networks utilising tensors that are both complex and unitary. As such they may be represented by parameterised quantum circuits and describe physical processes. The relevant inductive biases result in networks with logarithmic treewidth which is paramount for avoiding trainability issues in these spaces. For the same reason, they are also efficiently contractable or ‘quantum-inspired’. We demonstrate experimental results for the task of binary classification of bioinformatics and natural language, characterised by long-range correlations and often equipped with syntactic information. This work provides a scalable route for experimentation on the role of tensor structure and syntactic priors in NLP. Since these models map operationally to the qubits of a quantum processor, unbiased sampling equates to taking measurements on the quantum state encoding the learnt probability distribution. We demonstrate implementation on Quantinuum’s H2-1 trapped-ion quantum processor, showing the potential of near-term quantum devices.

Similar content being viewed by others

Introduction

In recent years data has become a plentiful resource that has contributed to monumental advances in artificial intelligence (AI). Application of large language models which are trained on TBs of data, such as GPT-31,2, GPT-43, and LAMDA4, have entered the public sphere and have been met with justifiable awe. However, critiques of this unstructured approach remain prominent and the large amounts of redundancy in these energy-hungry models further encourage us to ask if, despite impressive results, alternative routes are worth exploring. It is believed that naturally occurring learning systems utilise priors, or biases, which provide scaffolding to neural wiring5,6,7,8,9,10. A motivating question regards the brain’s ability to generalise from sparse examples when artificial neural networks typically require such large amounts of data. Compositional generalisation argues that the presence of innate wiring rules predisposes the brain with a model for reasoning and concept creation. Such ideas paved the way for the development of structured learning models. The contemporary example is graph neural networks (GNNs)11,12 which achieve state-of-the-art performance with improved sample complexity11,12,13,14,15,16.

Tensor networks provide low-dimensional representations of high-dimensional data. They are a natural framework for learning as they are equivalent to probabilistic graphical models26, where we simply replace factorisations of probability distributions into marginals with factorisations of high-rank tensors into lower-rank tensors. They have created some recent excitement in the machine learning community as they can address some of the fields key criticisms including large redundancy, lack of interpretability17,18,19,20,21,22,23 and lack of rigorous theoretical results e.g. in comparing expressive power of different architectures24,25. Tensor networks also naturally describe quantum processes35. As such there has been excitement to find ways to use quantum spaces as feature space36, which in principle may lead to superpolynomial advantage for machine learning37,38. Such quantum tensor networks39 have valid operational interpretations as quantum computations, for example realised with parameterised quantum circuits (PQCs)40,41. A tensor network is said to be efficiently contractible when the cost of contraction is a polynomial in the bond dimension and network size. A more rigorous definition can be given in terms of tree width and in57 this is connected directly to the ability to simulate the corresponding quantum circuit. Since all our tensor networks have bounded tree width we refer to them as quantum-inspired models since we do not require a quantum device for execution. While it’s still true that for large enough bond dimension classical simulation would not be efficient, even in the case of low tree width, it is likely that training such a model would also not be feasible. As will be discussed in further detail, even in the case of efficiently contractable networks there is however scope for polynomial speed-up in sampling the probability distribution over a tensor network on a quantum device.

The data we study in the work is bioinformatics and natural language, but these models may be applied to any sequence data. The task of sequence modelling in general reduces to learning a probability distribution over symbols. Tensor networks natively fuse the successful distributional approaches for encoding meaning, or ‘semantics’27,28, with the compositional structures rooted in the field of theoretical linguistics29, or ‘syntax’. In the DisCoCat framework30, the first to introduce such an approach to modelling the semantics of sentences, word embeddings take the form of tensors which are composed according to the syntactic structure of the sentence in which they participate, resulting in a syntax-aware tensor network. Specifically, and in contrast to our work, the compositional schemes used follow parses of typeological grammars, such as pregroup grammar31,32, Lambek-calculus33, or context-free grammar34. The syntactic frameworks we utilise do not require post-selection to be a part of our models as is required for scalability with real quantum devices. An additional difference is that we allow syntactic operations to be trainable rather than have only the semantics embeddings be parameterised. Beyond the shared naturality of tensor networks in both language modelling and quantum, previous work has been motivated by possible polynomial quantum speedup42 and the relevance of contextuality where for example in43,44 minimal contextual extensions of Bayesian language models show advantage even for this ‘classical’ data. Quantum DisCoCat models have been constructed for syntax-aware quantum machine learning tasks45, as has been demonstrated in small-scale proof-of-concept experiments46,47.

There has already been some success using one-dimensional tensor networks for probabilistic sequence modelling25,48,49. However, from a physical point of view, the presence of long-range correlations (known as Zipf’s law) in language and biological data50,51,52,53,54,55 motivates the use of tree-like or hierarchical tensor network architectures56,57,58. Key examples of hierarchical tensor networks are the tree tensor network (TTN)59 and multi-scale entanglement renormalisation ansatz (MERA)60, initially introduced to capture power-law correlations in critical quantum many-body systems. In direct analogue to condensed matter physics we leverage the flexibility in architecture design of tensor networks to restrict the exponentially large feature space according to the correlation structure relevant to a given problem61. That is, we introduce an inductive bias on the space of models according to any known structure in the data and learning task at hand. Finally, noteworthy explorations of syntax-aware neural-based models include the recursive neural network of Ref.62, defined for the task of sentiment analysis, where the authors motivate the use of syntactic structure from the point of view of interpretability and explainability. Reference63 introduces syntax-aware neural-based models where even more linguistic information from a combinatory categorial grammar (CCG)64 is used, a grammar on which we will base our syntax-aware models in this work. Additionally, improvement in inference and machine translation tasks have been found using syntax-aware neural models in65,66.

In this work, we present a rich family of learning models in the common language of tensor networks. Another main contributions of this work is the introduction of methods for efficient implementation of large-scale quantum tree tensor network models. Hence this work provides tools for testing the benefits of including strong inductive biases, including syntactic structure, in tensor-based models for NLP. Additionally and as a first at this scale, we run several of our trained models on Quantinuum’s state-of-the-art ion-trap quantum computer showing its potential power even in the near-term.

Models

We introduce our models via a two-step process. To instantiate a model, first, a compositional scheme is defined for a sentence. In order to define a compositional scheme, we make use of the graphical language of process theories. The processes are depicted by boxes, with input and output wires. The compositional schemes we use throughout this work are such that wires carry one of two types, the ‘internal’ type \(\tau\) and the ‘sentence’ type \(\sigma\), as shown in Fig. 1. Freely composing the boxes, while respecting the types, allows for any process diagram to be generated, representing a scheme, to be defined. Given a vocabulary comprising a finite set of words, or more generally tokens, \(V=\{w_i\}_i\), we consider compositional schemes for sentences, or more generally sequences, S, of finite length over this vocabulary.

Then, semantics is given via a semantic functor, F, i.e. a structure-preserving map. The semantic function maps the object of words to vectors and grammatical reductions to algebraic operations on these vectors. We may define F for arbitrary vector spaces. We will define F to give Hilbert space semantics to our compositional schemes, realising quantum tensor network models. This is done by assigning a number of qubits q to \(\tau\) and \(q'\)-many bits to \(\sigma\), and a parameterised quantum circuit (PQC) of a suitable size to each box, where the set of control parameters depends on the box:

-

The w-box, which specifically prepares a word-state, typed \(\tau ^{\otimes 0} \rightarrow \tau ^{\otimes 1}\), is mapped to a parameterised quantum state prepared by applying the circuit \(U(\theta _w)\) on the fixed input state \(|0\rangle ^{\otimes q}\). Note that to every word corresponds a parameter set.

-

The filter f-box, with type \(\tau ^{\otimes 2} \rightarrow \tau ^{\otimes 2}\), is mapped to \(U(\theta _f)\) with 2q input and 2q output qubits.

-

The merge m-box, typed \(\tau ^{\otimes 2} \rightarrow \tau ^{\otimes 1}\), is mapped to a \(U(\theta _m)\) with 2q input qubits and q output qubits, where the other q qubits have been either discarded or postselected by the \(\bot\)-effect.

-

The classifier c, with type \(\tau ^{\otimes 1} \rightarrow \sigma ^{\otimes 1}\), is mapped to a \(U(\theta _c)\) which accepts a q-qubit state as input and outputs a \(q'\)-qubit state (by applying the \(\bot\) effect) with \(q' < q\) determined by the number of classes. This output is then measured in the Z basis to get a vector in \([0, 1]^{q'}\) which represents the probability of each outcome (as determined by the Born rule) and maps the output from quantum bits to classical bits.

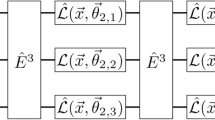

Note \(\tau ^{\otimes n}\) means n wires of type \(\tau\) while for example \(|0\rangle ^{\otimes q}\) denotes a q qubit state assigned to a wire after functor F is applied.The action of F on the scheme generators is shown in Fig. 2. In general, a more general class of quantum processes can be considered by introducing ancillae qubits to the parameterised quantum circuits.

Definition of the semantic functor F which assigns parameterised quantum circuits \(U(\theta _b)\) to boxes of type b. Thick black wires carry \(\tau\) types and via F they are mapped to thin black wires carrying q-many qubits. The thick blue wire carries the \(\sigma\) type and is mapped to a thin blue wire carrying b-many bits. For the \(\bot\)-effect, we can choose either to postselect on the all-zeros state or to discard.

For the \(\bot\)-effect, we consider two options. In the first case, \(\bot =\texttt {postselect}\), it represents postselection, ie conditioning on the outcome of a nondeterministic measurement, by convention and without loss of generality to the all-zeros state. In the second case, \(\bot =\texttt {discard}\), it represents discarding of that dimension, or a partial trace (marginalisation). The tensor network topology of the models we consider, inherited by the structure of the compositional schemes we define, allows for efficient tensor contraction strategies, thus making them classically simulable. This holds for both choices regarding the \(\bot\)-effect. We can therefore consider our models as ‘quantum-inspired’. At the same time, both versions have a valid operational interpretation as quantum circuits, so they could also be evaluated on quantum processors. However, while discarding is a free operation in quantum theory, postselection is not, as it incurs an exponential sampling overhead in number of qubits being postselected.

The number of qubits per wire q, the specific form, or ansatz, of each PQC realising a unitary U, and the choice for the \(\bot\)-effect are hyperparameters of our models. The ansätze we use are composed of a number of repeating layers of parameterised quantum gates. For simplicity, our functor is defined such that all PQCs involved are defined in terms of the same ansatz \(U(\theta _b)\) and have the same number of layers D; the type of box b affects the control parameters \(\theta _b\) as well as the size of the circuit. In the following, we taxonomise our tensor network models first by the compositional scheme defining their architecture, and then by the choice of semantic functor \(F(\theta )\) and its hyperparameters, where \(\theta = \cup _b \theta _b\) is the set of all control parameters (in the following we will suppress notation for F as it should be inferable by context). Rules and constraints we impose on the parameters that control PQCs define a model species.

Path

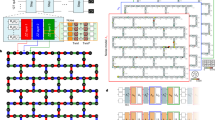

The first compositional scheme we study follows a sequential architecture which follows the reading order of the words in the sentence. Applying the semantic functor F defined in Fig. 2 to this scheme, we obtain the path tensor network model \(F(\theta )[\texttt {path}] =\textrm{PTN}\), as shown in Fig. 3.

We denote the length of the input sequence S by |S|. If we allow a ‘hierarchical’ dependence of the parameter sets \(\theta _{m_i}\) on their position \(i\in \{1,2,\ldots ,|S|-1\}\), then we realise the hPTN models. Then imposing that all m-circuits share one parameter set \(\theta _m=\theta _{m_i}, \forall i\), which makes the model recurrent, we realise the ‘uniform’ models uPTN. The uPTN can be interpreted as a vanilla version of a recurrent quantum model67, or a matrix product state (MPS) model where all the ‘physical dimensions’ are either discarded or postselected, save the last one which encodes the meaning of the sentence.

This compositional scheme does not take syntax nor long-range correlations into account and can thus serve as a baseline model against which syntax-aware models can be compared, but still satisfies the property that it is constructed by local application of a composition rule. Also, as it is effectively an MPS, it is not expected to be able to capture long-range correlations for a fixed bond dimension, constituting it a useful baseline against other models we construct below.

Tree

The simplest model that is able to capture long-range correlations uses a compositional scheme with the topology of a balanced binary tree. We call this scheme tree. At every branching, an m-box combines two input wires into one. The number of branchings, and so the number of m-boxes is \(|S|-1 = \sum _{i=0}^{{\log (|S|)-1}}2^i\), where the log is in base 2 throughout. This scheme has depth \(\log (|S|)\), since at the i-th layer there are half as many parallel m-boxes than that in layer \(i+1\). Figure 3 shows the result of applying the semantic functor to tree in order to obtain a tree tensor network (TTN) model.

We explore the ‘hierarchical’ species, hTTN, where circuits that belong to the i-th layer share a parameter set \(\theta _{m_i}\). Furthermore, we define the ‘uniform’ species, uTTN, where all m-circuits share one parameter set \(\theta _m=\theta _{m_i},\forall i\). The general form of quantum TTN is what is defined in Ref.41, where the authors allowed the m-circuits to have different parameter sets and used this model to classify images of handwritten digits (MNIST).

Syntactic

We now present the first model that is syntax-aware, which we call the syntax. A CCG parser returns a syntax tree over text input which can be used directly as our compositional scheme. Structure-wise, it can be viewed as an intermediate scheme between path and tree, in the sense that the CCG parser may output binary trees that include path (right-branching or maximally-imbalanced binary tree) and tree (ie balanced binary tree), with path the limit case of maximal depth and minimal width and tree the limit case of minimal depth and maximal width. The number of m-boxes in this scheme is also \(|S|-1\), but the depth depends on the syntactic structure. In Fig. 3 we provide an example of an instantiation of a syntactic tensor network (STN).

More specifically, we define three species of STNs. The \(m_i^r\)-circuits depend on the layer \(i\in \{1,2,\dots ,|S|-1\}\), ie their distance from the leaves, as well as the CCG rule \(r\in \textrm{R}\) as annotated by the parser. Similarly to the case of models based on the tree scheme, we can also, in this case, define a hierarchical species (hSTN), such that the m-circuits that are at depth i, where depth is defined by their distance from the leaves, share a parameter set \(\theta _{m_i}=\theta _{m_i^r}, \forall r\). Also, since we have syntactic information, we can define a ‘rule-based’ species (rSTN), where the m-circuits annotated with the same CCG rule r share the same parameter set \(\theta _{m^r} = \theta _{m_i^r}, \forall i\). Finally, we define the uniform species (uSTN), where all m-circuits share a single parameter set \(\theta _{m}=\theta _{m_i^r}, \forall i,r\).

Convolutional

We now introduce a compositional scheme that can be viewed as an enhancement of the tree, which we call conv. It is constructed by adding layers of f-boxes to the tree scheme, such that they act before the m-boxes on neighbouring wires that are not input to the same m-box. The number of m-boxes is \(|S|-1\) and the depth is \(\log (|S|)\) as is the case for tree. The number of f-boxes is \(|S|-(\log (|S|)+1) = \sum _{i=0}^{\log (|S|)-1}(2^i-1)\), since at every layer there is one less f-box than there are m-boxes. The application of the semantic functor to this scheme in order to obtain convolutional tensor network \(F[\texttt {conv}] = \textrm{CTN}\) is shown in Fig. 4. At the i-th layer, the layer of f-circuits filter out unnecessary entanglement and then the layer of m-circuits reduces the number of qubit wires, effectively coarse-graining the sentence and retaining only the relevant information for the task.

We define the ‘hierarchical’ models hCTN by having their parameter sets, \(\theta _{f_i}, \theta _{m_i}\), be shared if they belong to the same layer. Finally, by making them be shared throughout the model, \(\theta _f = \theta _{f_i}, \theta _m = \theta _{m_i}, \forall i\), we realise the self-similar ‘uniform’ uCTN. The CTN with \(\bot = \texttt{discard}\) has been introduced in Refs.68,69 and implemented on quantum computers in Ref.70. In Ref.68 the authors put no constraints on the parameter sets \(\theta _f, \theta _m\) and the model was used to classify MNIST, and in Ref.69 the authors introduce a special case of hCTN, where measurement-and-feedforward is used to keep the state pure. Finally, we define a ‘sliding’ variant which allows us to apply the convolutional model to large sequences efficiently. We call this the CTNS which consists of a sliding window of fixed size w that scans along the sequence. At each position in the sequence, i, a CTN is applied to the sub-sequence \([w_i,...,w_{i+w}]\). The outputs obtained from all sub-sequences are then aggregated by a function of our choosing, and here we choose the average.

Syntactic convolutional

Finally, we introduce a compositional scheme that combines the features of syntax and conv, i.e. it is a syntax-aware coarse-grainer of a sentence, which we call syntaxconv. It is constructed by endowing syntax with f-boxes, following the convention that before every m-box we place f-boxes. The rule for placing an f-box is that it does not act on two wires that are inputs to the same m-box. In general, f-boxes do not necessarily commute, so we follow the convention that if two f-boxes act on the same wire, then the one that involves words that appear earliest in the sentence is applied first. The application of the semantic functor which returns the syntactic convolutional tensor network, \(F[\texttt {syntaxconv}] = \textrm{SCTN}\), is shown in Fig. 5.

The m-circuits and f-circuits are controlled by parameter sets that depend on the CCG rule annotating the tree at each branching, as well as the depth (distance from the leaves), \(\theta _{m_i^r}, \theta _{f_i^r}\). We define the ‘hierarchical’ models hSCTN, where circuits of the same depth share the same parameter sets \(\theta _{m_i} = \theta _{m_i^r}\) and \(\theta _{f_i} = \theta _{f_i^r}\), \(\forall r\). We also define the ‘rule-based’ models rSCTN, in which case the parameter sets only depend on the CCG rule \(\theta _{m^r} = \theta _{m_i^r}\) and \(\theta _{f^r} = \theta _{f_i^r}\), \(\forall i\). Finally, the uniform models uSCTN have two parameter sets shared across all m-circuits and f-circuits, \(\theta _{m} = \theta _{m_i^r}\) and \(\theta _{f} = \theta _{f_i^r}\), \(\forall i,r\).

Experimental results for binary classification

The models as we have defined them in section “Models” follow an ‘encoder’ architecture, in that they take words from a sentence as input, and return an output. Thus, they are designed to naturally act as classifiers. We focus on tasks of binary classification, ie only a single qubit (\(q'=1\)) is measured from the output quantum state of the \(U_c\) circuit, resulting in the probabilities over two measurement outcomes, \(p_0\) and \(p_1\), as given by the Born rule. Each outcome is interpreted as the label of a class. Multiclass classification can be straightforwardly defined by setting \(q' = \lceil \log L \rceil\) for a number of class labels L. Since all the model species we have introduced have a tree-like architecture, they can be evaluated efficiently; we provide the upper bounds to their contraction complexities in the Supplementary Material along with additional model details. Importantly, tree-like models are immune by construction to the phenomenon of barren plateaus during training71,72,73.

The models are defined using the DisCoPy library for monoidal categories74,75. Where applicable, the syntactic structures were obtained using the CCG parser Bobcat76, which is available in the lambeq package77. We simulate the models using the tensornetwork library78. Training is done using the library JAX79; by defining the forward pass as a pure JAX function, we are able to exploit Just-In-Time (JIT) compilation to batch compute the outputs of multiple sentences, even if the tensor networks were produced from schemes with different syntactic structures. While we experimented with different families of quantum ansätze, the results we present use the expressive ansatz 14 from80, as it had the best test performance. The parameters of a model are optimised using AdamW81 such that the labels of the train set \(l_i\in \{0,1\}\) are predicted correctly according to the binary cross-entropy loss, \(H = - \frac{1}{|\mathrm {train ~set}|} \sum _{i=1}^{|\mathrm {train ~set}|} l_i \log _2({p_1}_i) + (1-l_i) \log _2(1-{p_1}_i)\). If one were to train on a quantum computer, one would use the parameter-shift rule for estimating analytic gradients82, or use gradient-free optimisers such as SPSA83, which requires much less overhead and has been shown to perform well when training PQCs on near-term hardware85.

A validation set is used for model selection with early stopping. The model hyperparameters are the embedding qubit number and ansatz depth, (q, D), and learning rate. The optimiser is given a fixed random seed for all models. Finally, an unseen test set is used to evaluate the model’s generalisation performance as measured by the prediction accuracy. All test accuracy results are taken at best validation accuracy over the stated hyperpameters and learning rate with fixed seed rather than an averaged behaviour.

We work with three datasets comprising labelled sequences: two NLP datasets comprised of short news titles (Clickbait)86 and longer movie reviews (Rotten Tomatoes)87 and one bioinformatic dataset consisting of DNA sequences88. The classes are clickbait/non-clickbait, positive/negative sentiment and binding affinity respectively. The NLP data is lemmatised, which occurs post-parsing for the syntactic models. While PTN, TTN, STN are all efficiently batchable and scalable, CTN and SCTN remain harder to scale in simulation, as discussed in the Supplementary Material.

Table 1 show results only for the scalable models on the full datasets. Additionally, we provide a baseline LSTM model in a comparable parameters regime implemented using Keras84 and again using AdamW. We found that on the equivalent data the simple LSTM models considered consistently overfit showing significantly worse accuracy on validation and test set data than on the train set. This is especially true for the movie reviews which had a train accuracy of around 100 but a test set accuracy of random guessing.

In order to obtain results from SCTN, we extracted a ‘reduced’ news titles dataset by implementing batching of sentences with the same syntactic structure and utilising vectorisation. These results are shown in Table 2 along with the CTN model for comparison and the restriction \(q=1\) only.

We find that all our models train well in the low parameter regime. As is already known in NLP, it is not possible to show significant advantage for including syntax for sentiment analysis tasks. As shown in Table 1, for the simplest dataset consisting of news titles all models show good generalisation performance with improvement on test score over the LSTM. For the case of the movie reiews, the CTNS performs the best but this is only significant in the case of discarding. The most significant advatage of this model can be seen in the genetic data set with models restricted to \(q=1\). For this dataset, the CTNS performs well but the complete CTN significantly outperforms all other models and the baselines. For the uCTN achieving 89.0\(\%\) test, the number of trainable parameters is 3/token and 11 in the remaining architecture. For the hCTN achieving 94.0\(\%\) test, the number of trainable parameters is 3/token and 8/layer in the remaining architecture (with 4 tokens and 6 layers in total) and again 3 for the final classification box. See Table 2 in Supplementary Material for exact parameter values for all cases. For the training with significantly reduced data shown in Table 2, all convolutional models significantly outperform the comparable LSTM with the uniform CTN scoring the best. The CTN model’s performance supports the importance of an architecture’s bias towards the correct correlations in achieving consistent resource compression even for relatively simple tasks.

Finally, we ran a larger scale 50,000 review IMDb dataset using the uCTNS model with \(\bot =\texttt {postselect}\), window size 4 and ansatz depth 1, achieving a test set accuracy of 88\(\%\). We did not do a full hyperparmeter grid search for this data but this is comparable to recent baseline results89 again with only 3 trainable parameters per word and 11 in the remaining architecture. Together these results support the potential of tensor networks for reducing resources in training models. In the future, experiments utilising the scalable machinery developed in this work should be applied to more complex tasks to test the robustness of these arguments.

Execution on a trapped-ion quantum processor

Finally, we execute a representative selection of test set examples from each dataset, utilising the uSTN, rSTN and uCTN models with \(\bot = \texttt {discard}\), on Quantinuum’s 32-qubit H2-1 quantum processor with reported quantum volume of \(2^{16}\)90. The sampled averages over 300 shots are shown in Fig. 6 which are in good agreement with the simulations. For the shorter circuits relevant to classifying the new title dataset, we find that the estimated probability \(p_1\) that determines the classification label agrees with its exact classical noiseless simulation, considering the variance arising from shot noise. We find that only 1 disagrees with its classical simulation regarding the classification label, due to both shot noise and the fact that for that circuit the exact result for \(p_1\) is very close to the classification threshold. For the selected larger circuits corresponding to the movie reviews and protein sequences, we find that noise effects beyond shot noise, such as gate noise, have affected the estimated value of \(p_1\). However, after considering the size of these circuits, it is notable that the classification label is not affected, since noise has not altered \(p_1\) enough for it to cross the classification threshold. Before the quantum circuits corresponding to these models can be run on a specific backend, they need to be compiled, for which we used Quantinuum’s TKET compiler91. This entails translating the circuit’s gates into the native operations available to the device. The quality of the experimental results in relation to exact classical simulation is particularly impressive for the DNA binding sequences comprising 64 nucleotides each. In particular, the uCTN model, post-compilation, is a 64-qubit circuit that contains 1207 gates, of which 360 are two-qubit entangling gates, which are two orders of magnitude noisier than single-qubit gates. Furthermore, H2-1’s mid-circuit measurement and qubit-reset features allow for qubit reuse92. This allows us to reset a qubit that is no longer in use such that when we ‘discard’ the output in one part of our circuit we may use this qubit as a fresh input to a later operations. Remarkably, with qubit-reuse strategies, which essentially follow the logic of good tensor contraction strategies, the 64-qubit uCTN circuit is compressed down to an 11-qubit circuit.

Test-time results for representative examples from the datasets used in this work for some choice of models. The sampled average \(\tilde{M} = \frac{1}{300} \sum _{i=1}^{300} M_i\) (blue), obtained by execution on the H2-1 quantum processor for 300 shots, and the expected value \(\mathbb {E}(M)\) (orange), obtained by exact classical noiseless simulation, of the measurement outcome M, which is a Bernoulli random variable. Specifically, \(p_1 = \mathbb {P}(M = 1) = \mathbb {E}(M)\) represents the probability of measuring 1, and the red line represents the decision threshold (0.5). Error bars show the range of 2 standard deviations of M (95% confidence interval) away from the expected value \(\mathbb {E}(M)\), considering only shot noise.

Discussion and outlook

We have introduced machine learning models for sequence classification constructed using the techniques of tensor networks. The architecture of the models plays the role of an inductive bias motivated by the inherent correlation and compositional structures present in the data. Moreover, we utilise unitary complex tensors which allows for their instantiation as parameterised quantum circuits. We have demonstrated both in simulation and by execution on a state-of-the-art trapped-ion quantum processor the efficient implementation of our models for datasets relevant to natural language processing as well as bioinformatics, showing good performance. These models show particular promise in generalisation power in the low parameter and small data regime. The models and methods defined in this work enable for the first time the experimentation with a variety of quantum-inspired tensor network models for sequence processing. Importantly, with the methods presented here, one can employ large-scale and real-world data, and explore the question of for what kinds of tasks and datasets is a syntax-aware structure beneficial, as well as explore more sophisticated model-selection strategies. To this end, we make our code and results available in this repository https://github.com/CQCL/classification-with-qttn.

In analogy to standard NLP techniques we can train quantum-inspired word embeddings and test their performance in a downstream task, such as the classification tasks tackled in this work. This can be done with the usual objectives such as the skipgram93 or glove94 methods, and it would be interesting to evaluate such embeddings in tasks such as word analogy. Creating embeddings in this way constitutes the creation of quantum data to be classified; interestingly, it is in this context in which quantum convolutional neural networks (CTN with discard in our work) were introduced69. In addition, our methods can be straightforwardly extended so that a syntactic structure is learned using adaptive methods applicable to our STN or SCTN models95, which could be used to infer syntactic structures in bioinformatics data96. Our models can also be applied to other highly-structured data similarly displaying long-range correlations, such as neuron firing networks97.

Beyond classification, our simple setup can be extended to accommodate generative modelling for sequences, using tree-like Born machines with a basis encoding of the vocabulary (\(q=\log _2(|V|)\). In general, one can create a quantum language model using one of our non-syntactic models, which is trained in a masking task to capture the conditional probability distribution of tokens in text corpora. Additionally, there is scope for polynomial advantage in sampling from such models using a quantum device. To do this we simply have ancilla on the isometries, run the circuit with O(log|S|) gates and perform a measurement on the q|S| qubit output state to obtain a sample from the \(2^{q|S|}\) possible strings. In simulation we need to contract the tensor network in order to obtain the probabilities over the possible strings where the complexity of contraction is O(poly(log|S|)). We note that for even for the sequences considered in this work we would need to resort to weak simulation to extract samples over a full q|S| qubit output sequence. Here, weak simulation refers to calculating the marginal probability distribution of a subsystem, updating the state of the subsystem with a sample (or x samples if following multiple probabilistic paths) and then extracting the marginal over the next subsystem, sampling and so on.

Finally, we consider two general directions, guided by the efficiency of contracting tensor network models. In this work we have only used the Born rule as a nonlinearity for obtaining the classification label, since we designed our models as to have valid quantum operational semantics. But one could use any element-wise nonlinearities one desires, acting on the complex-valued tensors98. Alternatively, it would be interesting to consider text-level syntactic structures99, which are conjectured to lead to hard-to-contract tensor networks. In that case, given quantum semantics, an efficient implementation would necessarily require quantum processors.

Data availability

All code and datasets required to recreate the results of this paper can be found in the repository https://github.com/CQCL/classification-with-qttn.

References

Vaswani, A. et al. Attention is all you need. Adv. Neural. Inf. Process. Syst. 2017, 30 (2017).

OpenAI (2023, accessed 19 Jan 2023). https://openai.com/.

OpenAI. Gpt-4 technical report, 2023 (2023).

Thoppilan, R. et al. Lamda: Language models for dialog applications. arXiv:2201.08239 (2022).

Zaadnoordijk, L., Besold, T. R. & Cusack, R. Lessons from infant learning for unsupervised machine learning. Nat. Mach. Intell. 4(6), 510–520 (2022).

Zador, A. M. A critique of pure learning and what artificial neural networks can learn from animal brains. Nat. Commun. 10(1), 1–7 (2019).

Mitchell, T. M. The Need for Biases in Learning Generalizations 184–191 (Springer, 1980).

Michalski, R. S. A theory and methodology of inductive learning. Mach. Learn. 1983, 83–134 (1983).

Lehmann, F. Semantic networks. Comput. Math. Appl. 23(2–5), 1–50 (1992).

Minsky, M. A framework for representing knowledge. Psychol. Comput. Vis. 1975, 586 (1975).

Zhou, J. et al. Graph neural networks: a review of methods and applications. AI Open 1, 57–81 (2020).

Bronstein, M. M. et al. Geometric deep learning: grids, groups, graphs, geodesics, and gauges. arXiv:2104.13478 (2021).

Garcia, V. & Bruna, J. Few-shot learning with graph neural networks. Int. Conf. Learn. 2018, 58 (2018).

Chen, C., Li, K., Wei, W., Zhou, J. T. & Zeng, Z. Hierarchical graph neural networks for few-shot learning. IEEE Trans. Circ. Syst. Video Technol. 32(1), 240–252 (2021).

Yu, T., He, S., Song, Y. & Xiang, T. Hybrid graph neural networks for few-shot learning. Proc. AAAI Conf. Artif. Intell. 36, 3179–3187 (2022).

Song, K., Yue, K., Duan, L., Yang, M. & Li, A. Mutual information based bayesian graph neural network for few-shot learning. In Uncertainty in Artificial Intelligence 1866–1875 (PMLR, 2022).

Stoudenmire, E. M. & Schwab, D. J. Supervised learning with tensor networks. Adv. Neural Inf. Process. Syst. 2016, 29 (2016).

Stoudenmire, E. M. Learning relevant features of data with multi-scale tensor networks. Quant. Sci. Technol. 3(3), 034003 (2018).

Novikov, A., Podoprikhin, D., Osokin, A. & Vetrov, D. P. Tensorizing neural networks. Adv. Neural Inf. Process. Syst. 28, 8596 (2015).

Novikov, A., Trofimov, M. & Oseledets, I. Exponential machines. arXiv:1605.03795 (2016).

Hallam, A., Grant, E., Stojevic, V., Severini, S. & Green, A. G. Compact neural networks based on the multiscale entanglement renormalization ansatz. arXiv preprint arXiv:1711.03357 (2017).

Liu, D. et al. Machine learning by unitary tensor network of hierarchical tree structure. New J. Phys. 21(7), 073059 (2019).

Patel, R. et al. Quantum-inspired tensor neural networks for partial differential equations. arXiv preprint arXiv:2208.02235 (2022).

Cohen, N., Sharir, O. & Shashua, A. On the expressive power of deep learning: a tensor analysis. In Conference on Learning Theory 698–728 (PMLR, 2016).

Glasser, I. et al. Expressive power of tensor-network factorizations for probabilistic modeling. Adv. Neural Inf. Process. Syst. 2019, 32 (2019).

Glasser, I., Pancotti, N. & Cirac, J. From probabilistic graphical models to generalized tensor networks for supervised learning. IEEE Access 8, 68169–68182 (2020).

Mikolov, T., Chen, K., Corrado, G. & Dean, J. Efficient estimation of word representations in vector space. In International Conference on Learning Representations (2013).

Mikolov, T., Yih, W. & Zweig, G. Linguistic regularities in continuous space word representations. In Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies 746–751 (Association for Computational Linguistics, 2013).

Chomsky, N. Syntactic Structures (Mouton and Co., 1957).

Coecke, B., Sadrzadeh, M. & Clark, S. Mathematical foundations for a compositional distributional model of meaning. arXiv:1003.4394 (2010).

Lambek, J. The mathematics of sentence structure. Am. Math. Mon. 1958, 154–170 (1958).

Lambek, J. From Word to Sentence: A Computational Algebraic Approach to Grammar (Polimetrica sas, 2008).

Coecke, B., Grefenstette, E. & Sadrzadeh, M. Lambek vs. lambek: functorial vector space semantics and string diagrams for lambek calculus. Ann. Pure Appl. Logic 164(11), 1079–1100 (2013).

Gogioso, S. A corpus-based toy model for discocat. Electron. Proc. Theor. Comput. Sci. 221, 20–28 (2016).

Coecke, B. & Kissinger, A. Picturing Quantum Processes: A First Course in Quantum Theory and Diagrammatic Reasoning (Cambridge University Press, 2017).

Havlíček, V. et al. Supervised learning with quantum-enhanced feature spaces. Nature 567(7747), 209–212 (2019).

Liu, Y., Arunachalam, S. & Temme, K. A rigorous and robust quantum speed-up in supervised machine learning. Nat. Phys. 17(9), 1013–1017 (2021).

Huang, H. et al. Power of data in quantum machine learning. Nat. Commun. 12(1), 456 (2021).

Rieser, H. M., Köster, F. & Raulf, A. P. Tensor networks for quantum machine learning, 2023. Proc. R. Soc. A 479(2275), 20230218 (2023).

Benedetti, M., Lloyd, E., Sack, S. & Fiorentini, M. Parameterized quantum circuits as machine learning models. Quant. Sci. Technol. 4(4), 043001 (2019).

Huggins, W., Patil, P., Mitchell, B., Whaley, K. B. & Stoudenmire, E. M. Towards quantum machine learning with tensor networks. Quant. Sci. Technol. 4(2), 024001 (2019).

Zeng, W. & Coecke, B. Quantum algorithms for compositional natural language processing. Electron. Proc. Theor. Comput. Sci. 221, 67–75 (2016).

Gao, X., Anschuetz, E. R., Wang, S., Cirac, J. I. & Lukin, M. D. Enhancing generative models via quantum correlations. Phys. Rev. X 12(2), 12589 (2022).

Eric, R. A., Hong-Ye, H., Jin-Long, H. & Xun, G. Interpretable Quantum Advantage in Neural Sequence Learning (Springer, 2022).

Meichanetzidis, K. et al. Quantum natural language processing on near-term quantum computers. Electron. Proc. Theor. Comput. Sci. 340, 213–229 (2021).

Meichanetzidis, K., Toumi, A., de Felice, G. & Coecke, B. Grammar-aware question-answering on quantum computers. arXiv:2012.03756 (2020).

Lorenz, R. et al. Qnlp in practice: running compositional models of meaning on a quantum computer. J. Artif. Intell. Res. 76, 1305–1342 (2023).

Stokes, J. & Terilla, J. Probabilistic modeling with matrix product states. Entropy 21(12), 1236 (2019).

Miller, J., Rabusseau, G. & Terilla, J. Tensor networks for probabilistic sequence modeling. In International Conference on Artificial Intelligence and Statistics 3079–3087 (PMLR, 2021).

Ebeling, W. & Pöschel, T. Entropy and long-range correlations in literary english. EPL (Europhys. Lett.) 26(4), 241 (1994).

Ebeling, W. & Neiman, A. Long-range correlations between letters and sentences in texts. Phys. A 215(3), 233–241 (1995).

Altmann, E. G., Cristadoro, G. & Esposti, M. D. On the origin of long-range correlations in texts. Proc. Natl. Acad. Sci. 109(29), 11582–11587 (2012).

Montemurro, M. A. & Pury, P. A. Long-range fractal correlations in literary corpora. Fractals 10(04), 451–461 (2002).

Lin, H. W. & Tegmark, M. Criticality in formal languages and statistical physics. arXiv:1606.06737 (2016).

Mora, T. & Bialek, W. Are biological systems poised at criticality?. J. Stat. Phys. 144(2), 268–302 (2011).

Pestun, V. & Vlassopoulos, Y. Tensor network language model. arXiv:1710.10248 (2017).

Gallego, A. J. & Orus, R. Language design as information renormalization. SN Comput. Sci. 3(2), 1–27 (2022).

Wright, L. et al. Deterministic tensor network classifiers. arXiv:2205.09768 (2022).

Cheng, S., Wang, L., Xiang, T. & Zhang, P. Tree tensor networks for generative modeling. Phys. Rev. B 99(15), 78596 (2019).

Vidal, G. Class of quantum many-body states that can be efficiently simulated. Phys. Rev. Lett. 101(11), 7845 (2008).

Orús, R. Tensor networks for complex quantum systems. Nat. Rev. Phys. 1(9), 538–550 (2019).

Socher, R. et al. Recursive deep models for semantic compositionality over a sentiment treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing 1631–1642 (2013).

Hermann, K. M. & Blunsom, P. The role of syntax in vector space models of compositional semantics. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) 894–904 (Association for Computational Linguistics, 2013).

Steedman, M. The Syntactic Process (The MIT Press, 2001).

Liu, P., Qiu, X. & Huang, X. Syntax-based attention model for natural language inference. arXiv:1607.06556 (2016).

Gómez, S. E., McGill, E. & Saggion, H. Syntax-aware transformers for neural machine translation: the case of text to sign gloss translation. In Proceedings of the 14th Workshop on Building and Using Comparable Corpora (BUCC 2021) 18–27 (2021).

Bausch, J. Recurrent quantum neural networks. Adv. Neural Inf. Process. Syst. 33, 1368–1379 (2020).

Grant, E. et al. Hierarchical quantum classifiers. npj Quant. Inf. 4(1), 7859 (2018).

Cong, I., Choi, S. & Lukin, M. D. Quantum convolutional neural networks. Nat. Phys. 15(12), 1273–1278 (2019).

Herrmann, J. et al. Realizing quantum convolutional neural networks on a superconducting quantum processor to recognize quantum phases. Nat. Commun. 13(1), 41442021 (2022).

Pesah, A. et al. Absence of barren plateaus in quantum convolutional neural networks. Phys. Rev. X 11(4), 041011 (2021).

Martín, E. C., Plekhanov, K. & Lubasch, M. Barren plateaus in quantum tensor network optimization. Quantum 7, 974 (2023).

Zhao, C. & Gao, X. S. Analyzing the barren plateau phenomenon in training quantum neural networks with the zx-calculus. Quantum 5, 466 (2021).

de Felice, G., Toumi, A. & Coecke, B. Discopy: monoidal categories in python. arXiv:2005.02975 (2020).

Toumi, A., de Felice, G. & Yeun, R. Discopy for the quantum computer scientist. arXiv:2205.05190 (2022).

Clark, S. Something old, something new: grammar-based ccg parsing with transformer models. arXiv preprint arXiv:2109.10044 (2021).

Kartsaklis, D. et al. lambeq: an efficient high-level Python library for quantum NLP. arXiv:2110.04236 (2021).

Roberts, C. et al. Tensornetwork: a library for physics and machine learning. arXiv:1905.01330 (2019).

Bradbury, J. et al. JAX: composable transformations of Python+NumPy programs (2018).

Sim, S., Johnson, P. D. & Guzik, A. A. Expressibility and entangling capability of parameterized quantum circuits for hybrid quantum-classical algorithms. Adv. Quant. Technol. 2(12), 1900070 (2019).

Loshchilov, I. & Hutter, F. Decoupled Weight Decay Regularization (Springer, 2017).

Schuld, M., Bergholm, V., Gogolin, C., Izaac, J. & Killoran, N. Evaluating analytic gradients on quantum hardware. Phys. Rev. A 99(3), 48523 (2019).

Spall, J. C. Multivariate stochastic approximation using a simultaneous perturbation gradient approximation. IEEE Trans. Autom. Control 37(3), 332–341 (1992).

Keras, Chollet, François et al. https://keras.io (2015).

Monroig, X. B. et al. Performance comparison of optimization methods on variational quantum algorithms. Phys. Rev. A 107(3), 74859 (2023).

Chakraborty, A., Paranjape, B., Kakarla, S. & Ganguly, N. Stop clickbait: detecting and preventing clickbaits in online news media. In Advances in Social Networks Analysis and Mining (ASONAM), 2016 IEEE/ACM International Conference on 9–16 (IEEE, 2016).

Pang, B. & Lee, L. Seeing stars: exploiting class relationships for sentiment categorization with respect to rating scales. In Proceedings of the 43rd Annual Meeting of the Association for Computational Linguistics (ACL’05) 115–124 (Association for Computational Linguistics, 2005).

Zou, J. et al. A primer on deep learning in genomics. Nat. Genet. 2018, 1 (2018).

IMDb baselines (2023, accessed 19 Jan 2023). https://paperswithcode.com/sota/sentiment-analysis-on-imdb.

Moses, S. A. et al. A race track trapped-ion quantum processor. Phys. Rev. X 13(4), 041052 (2023).

Sivarajah, S. et al. t\(|\)ket\(\rangle\): a retargetable compiler for nisq devices. Quant. Sci. Technol. 6(1), 014003 (2020).

Matthew, D., Eli, C., Megan, K. & Michael, F.-F. Qubit-reuse compilation with mid-circuit measurement and reset. Phys. Rev. X 13(4), 041057 (2023).

Mikolov, T. et al. Distributed representations of words and phrases and their compositionality. Adv. Neural Inf. Process. Syst. 26, 7859 (2013).

Pennington, J., Socher, R. & Manning, C. GloVe: global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) 1532–1543 (Association for Computational Linguistics, 2014).

Lourens, M. et al. Hierarchical quantum circuit representations for neural architecture search. NPJ Quant. Inf. 9(1), 79 (2023).

Searls, D. B. The language of genes. Nature 420(6912), 211–217 (2002).

Brochini, L. et al. Phase transitions and self-organized criticality in networks of stochastic spiking neurons. Sci. Rep. 6(1), 35831 (2016).

Scardapane, S. et al. Complex-valued neural networks with nonparametric activation functions. IEEE Trans. Emerg. Top. Comput. Intell. 4(2), 140–150 (2020).

Coecke, B. The mathematics of text structure. Outstand. Contrib. Logic 2021, 181–217 (2021).

Acknowledgements

CH acknowledges funding from grant EP/S023607/1, and RY thanks Simon Harrison for his generous support for the Wolfson Harrison UK Research Council Quantum Foundation Scholarship that he enjoys. We thank Marcello Benedetti, Michael Lubasch, Michael Foss-Feig, Steve Clark, Dimitri Kartsaklis for discussions and feedback on the manuscript.

Author information

Authors and Affiliations

Contributions

C.H. and R.Y contributed equally to the codebase cited. C.H. ran the simulated experiments and R.Y. handled collected data from the real device runs. All authors contributed to writing and reviewing the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Harvey, C., Yeung, R. & Meichanetzidis, K. Sequence processing with quantum-inspired tensor networks. Sci Rep 15, 7155 (2025). https://doi.org/10.1038/s41598-024-84295-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-84295-2