Abstract

The Advanced Persistent Threat (APT) poses significant security challenges to the availability and reliability of government and enterprise information systems. Due to the high concealment and long duration characteristic of APT, industry and academia typically adopt active defense methods to combat APT. By deploying deception defense (DD) methods such as honeypots, the attacker’s target can be effectively confused. However, in reality, honeypots are usually deployed in resource-constrained systems. Therefore, how to effectively deploy deception resources is an urgent problem that needs to be addressed. This paper proposes a multi-type deception resources deployment strategy generation algorithm based on reinforcement learning for the network reconnaissance stage of APT. Through the analysis of network assets and attack process, the algorithm balances the two dimensions of defense effectiveness and defense cost, generating the deployment strategy for deception resources. The experimental results show that compared to other baselines, the proposed algorithm achieves defensive success probability of 97.09% while reducing the attack probability of the target asset by at least 10.34%. This effectively reduces defense costs while ensuring defense efficiency. In addition, the algorithm demonstrates good convergence and stability.

Similar content being viewed by others

Introduction

Nowadays, Advanced Persistent Threat (APT) has become one of the core challenges in the field of cyberspace security. APT is a long-term, covert and systematic network intrusion against government agencies, critical infrastructure, etc., initiated by the state or highly organized groups. For example, in 2010, Iran’s nuclear industry facilities were destroyed by Stuxnet, and in 2015, Ukraine’s power facilities were subjected to large-scale power outages caused by blackenergy attacks. APT has the characteristics of long attack duration and high attack concealment. It makes traditional network defense methods such as intrusion detection and vulnerability patch difficult to deal with.

Among the seven stages of APT attack: reconnaissance, weaponization, delivery, exploitation, installation, command and control, and actions on objectives, network reconnaissance is the first and most critical step of APT attack1. Relevant research shows that up to 70% of network attacks come from the network reconnaissance stage2. The effective response at this stage can prevent or mislead the attacker’s subsequent operations, and significantly improve the level of network security3. Deception defense (DD) technology is the main means to deal with network reconnaissance threats4. It aims to mislead attackers by deploying a variety of deception resources in the network. Among them, honeypot is the most widely used one, which is widely used by existing research5.

For the honeypot deployment process, we divide it into three stages: important asset evaluation, honeypot selection and deployment strategy generation. In the stage of important asset evaluation, the existing research focuses more on the vulnerability distribution6,7 and abnormal alarm8 of network assets, ignoring the communication relationship between assets. It also plays a vital role in the subsequent attack path formulation and lateral moving target selection of attackers9. This leads to a deviation between the protection target and the attack target during the subsequent honeypot deployment. During the honeypot selection stage, honeypots can be categorized into three types based on the level of interaction: low-interaction, medium-interaction, and high-interaction honeypots. The deception and deployment costs of different types of interaction honeypots are different. In order to ensure the deception of attackers, the existing research mostly uses high-interaction honeypots for deployment8,10,11. However, the high cost of high-interaction honeypots makes large-scale deployment challenging in resource-constrained environments, resulting in inadequate defense effectiveness for honeypot deployment strategies. In this regard, although some studies have mixed use of multiple interactive types of honeypots12,13, the cooperation between different interactive honeypots has been neglected in the deployment process. In the stage of deployment strategy generation, it is the main research trend to use graph model14,15 and game theory16,17 to generate honeypot deployment strategy. However, the construction of graph models is usually complex and heavily dependent on expert experience, and there is a problem of insufficient flexibility. With the increase of the network size, the strategy space of the game theory method will become extremely complex. This makes it more difficult to verify the nash equilibrium and find the optimal solution in the game model. To this end, some scholars have introduced reinforcement learning (RL) to achieve intelligent deception resources deployment8. RL is a machine learning method based on environmental interaction. It uses agents to interact with the environment continuously, and can realize strategy self-optimization through independent exploration and feedback mechanism. With the change of network environment, the optimal strategy will be adjusted accordingly, which has strong flexibility. In addition, RL can better deal with high-dimensional and complex state space by introducing neural network. It focuses on maximizing long-term benefits, making it suitable for solving long-term programming problems such as network attack and defense scenarios18.

In order to solve the problems of insufficient evaluation of important assets, single selection of honeypot and insufficient flexibility of strategy generation methods in the above research. This paper focuses on improving the accuracy, effectiveness and adaptability of honeypot deployment, and proposes an intelligently driven deception resources deployment method. Different from the existing schemes, we consider the traffic correlation between devices and fully evaluate the importance of network assets. At the same time, the multi-interaction type honeypots is used to improve the effectiveness of the honeypot deployment strategy. In addition, we use the RL to improve the flexibility of the honeypot deployment strategy generation method.

To sum up, the main contributions of this paper is summarized as:

-

1.

We quantify the network assest importance evaluation process, consider the communication between network devices, and propose a novel asset importance evaluation method. Based on the Common Vulnerability Scoring System (CVSS), the traffic correlation factor is introduced.

-

2.

Considering the limited defense effectiveness of high-interaction honeypots in resource-constrained scenarios, we design a hybrid deception defense model. This model is based on high-interaction honeypot (H-honeypot) and false traffic interaction honeypot (F-honeypot). By introducing F-honeypots, the effectiveness of defense strategies is improved.

-

3.

By introducing RL, we propose an intelligent generation method for a multi-type deception resources deployment strategy based on Proximal Policy Optimization (DRD-PPO). This method aims to achieve a balance between the cost of the deployment strategy and the effectiveness of defense. In addition, we reflect the cooperative relationship between different types of honeypots in state and action space.

The rest of the paper is organized as follows. Section 2 introduces the ralated work. Section 3 introduces the system network model, attack model and defense model. Section 4 introduces the design idea of the intelligent generation algorithm of multi-type deception resources deployment strategy based on PPO. The experimental simulation results of this scheme in Sect. 5. Section 6 concludes the whole work and draws future research.

Related work

In order to provide targeted protection for the network, asset evaluation is the most critical part. Liu6 quantitatively evaluates the availability and impact of vulnerabilities on power equipment in the power scenario to predict the possible transfer path of the threat. Gabirondo7 combines CVSS to quantify the three dimensions of confidentiality, integrity and availability of vulnerabilities on devices in the target network to predict the potential attack targets of attackers. Wang8 studies the alarm situation of the equipment in the current network to predict the trend of the attack path, and then guide the deployment of honeypots. However, the above research mainly evaluates assets from the aspects of vulnerability distribution and alarm situation, ignoring the impact of communication relationship between devices on the asset evaluation process. The communication relationship between devices is also the core element that attackers pay attention to in the process of network detection and lateral movement9. The introduction of this element can improve the accuracy of asset evaluation and provide strong guidance for honeypot deployment.

In order to confuse the attacker’s perception of the real assets of the network, the appropriate honeypot selection plays a decisive role. Wang8 uses elaborate deception resources to replace real devices to attract attackers’ traffic. Zhou10 deploys a high-interaction server honeypot to transmit error information to manipulate the attacker’s perception. Anwar11 misleads the attacker’s passive monitoring and active detection by deploying a single type of honeypot. Most of the above studies use high-interaction honeypots to enhance the authenticity of interaction with attackers, but with the improvement of honeypot interaction ability, its deployment cost also increases. In resource-constrained network scenarios, only a small number of high-interaction honeypot deployments are usually supported, and it is difficult to form an effective defense. A hybrid honeypot system is considered, high-interaction honeypots and low-interaction honeypots are used to achieve a balance between defense cost and defense effectiveness12. Ge13 uses simulation-based honeypots and full operating system-based honeypots, to deceive the attacker differently, and evaluates the interaction probability of the two honeypots to the attacker. However, in the deployment of the aforementioned research, various types of honeypots operate independently of each other. This independence makes it difficult to form an effective deception protection system, which hinders the improvement of the deployment strategy’s effectiveness.

In the honeypot deployment strategy generation phase, a lot of research work mainly focuses on the two categories of graph model and game theory. Shakarian14 utilized a graph model to analyze network topology and introduced a probability model to infer attacker behavior, guiding defenders in deploying deception resources within the target network. Polad15 proposed using attack graphs to simulate attackers’ penetration paths in the target network, formalized the combinatorial optimization problem, and developed a heuristic search algorithm to determine the optimal honeypot configuration strategy. Anwar16 proposed a scalable honeypot deployment algorithm based on attack graph. The attack and defense process is described as a static game model with incomplete information, and the equilibrium strategy is used to deploy the honeypot. Jajodia17 employed an attack graph to characterize the relationships among vulnerability exploitations and utilized a Stackelberg game to design an adversarial reasoning engine, guiding defenders in selecting the appropriate deception strategy. Although the deception resources deployment strategy generated by the above method can effectively alleviate network attacks, the flexibility and scalability of the strategy are limited. For the graph model, as the network size increases, the difficulty of constructing the graph model will also increase rapidly. At the same time, as the network scene changes, the graph model also needs to be reconstructed. For the game theory, it is difficult to handle the large-scale strategy space created by complex networks, and the focus is typically on single-stage or local games. This approach separates the long-term interaction process of the attack and defense scenario, causing the strategy to tend toward being locally optimal.

As one of the most attractive research hotspots of artificial intelligence, RL has made major breakthroughs in many fields such as robotics, autonomous driving, and chess games. In particular, the emergence of AlphaGo19 and AlphaGo Zero20 has verified the effectiveness of RL. RL is also used in many works related to network security and network performance optimization. Aiming at optimizing the utility function of defender, Grammatikis21 formulated the deployment process as a Multi-Armed Bandit problem, and proposed a honeypots deployment method based on RL in a smart grid environment. Li22 proposed to use RL to simulate the process in which defenders choose to carry deception resources with different operating systems in a cloud computing environment. The simulation results show that the proposed method reduces the defense cost while improving the system security. Guo23 proposed a network routing clustering strategy for complex network environments, using RL to learn the benefits of different strategies in different network states. Wang8 proposed an intelligent deployment strategy for deception resources, using RL and threat penetration graph (TPG) models to adjust the location of deception nodes according to security conditions. RL can learn its own experience by exploring unknown environments, and then take the best sequential action without or with limited prior knowledge of the environment. In the above research, RL shows its adaptability and practicability in both real-time environment and adversarial environment. Although the existing research has introduced RL into honeypot deployment strategy, it often focuses on the deployment of a single interactive type of honeypot. This approach ignores the utilization of different interactive types of honeypots, reducing the overall defense effectiveness of the network. In addition, existing research on the design of the state space and action space of RL typically focuses on the number and location of honeypots. This focus often overlooks the cooperative relationships between honeypots, resulting in each honeypot operating independently and making it difficult to form an effective protection system.

Compared to the existing research mentioned above, this paper first addresses the incomplete evaluation of network key assets by introducing communication factors between devices based on vulnerability analysis. Additionally, it designs a more accurate quantitative method for asset evaluation. Secondly, aiming at the problem that the existing research deploys a single interactive honeypot, it is difficult to balance the defense utility and defense cost. This paper introduces H-honeypots and F-honeypots, and designs a hybrid deception defense model. Finally, in order to improve the flexibility of defense strategy, this paper introduces RL and designs a multi-type deception resources deployment strategy generation method based on PPO. In addition, considering the weak cooperation between different types of honeypots, we take into account the honeypot types and their relationships during the design process of the action space and state space. This approach effectively improves the defense efficiency of the deployment strategy.

System model

In this section, we introduce the problem setting of multi-type deception resources deployment. We introduce the target network model, attack model and defense model in detail. This section will focus on the attacker’s method of evaluating the importance of network assets in conjunction with reconnaissance information. It will also cover the functions and characteristics of the two types of honeypots used by defenders.

Network model

In this work, we consider an enterprise office network scenario, including host, router, switch, and server. Among them, these hosts and servers are in different subnets, and the hosts in different office areas are also divided into different subnets. Simultaneously, the host will interact with different servers due to office business requirements. Routers and switchs are mainly responsible for traffic forwarding and traffic management of devices within and between subnets. In addition, in order to cope with the network reconnaissance of attackers, we introduce two types of deception devices, F-honeypots and H-honeypots, on the basis of the above network model. Among them, the F-honeypot is deployed in the subnet of the office area. The H-honeypot is usually a clone of a server and is deployed in the same subnet with the target server. Simultaneously, there is an irregular fake traffic interaction between the F-honeypot and the H-honeypot. The enterprise office network topology is shown in Fig. 1.

We describe the attributes of device nodes in the network through five tuples:

where \(s_i\) is the current state of the node i, If the node i is broken, the value is 0, otherwise the value is 1. \(t_i\) is the current device type of node i, if node i is the host, the value is 0, if it is the server, the value is 1, if node i is the H-honeypot, the value is 2, if it is the F-honeypot, the value is 3. \(r_i\) is the current device authenticity of node i, If node i is a real device, the value is 0, and if it is a honeypot, the value is 1. \(v_i\) denotes the device vulnerability serial number currently contained in node i. \(l_i\) exists in the form of a list, indicats the current relationship between node i and other devices.

Attack model

We consider the network reconnaissance attack phase, assuming that the attack has the following attack behavior:

Network reconnaissance: In this process, attackers obtain information from the target network by active probing and passive monitoring. The attacker can obtain the open ports and operating system types of network assets through active probing, and then obtain the vulnerability information of network assets by combining open source intelligence. The attacker realizes the monitoring of the communication traffic of the network assets through passive monitoring, and then obtains the network topology information and the correlation information between the assets of the network.

It is assumed that after obtaining the vulnerability information and the correlation information of network assets, the attacker will combine these two types of information using Eq. (2) to evaluate the importance of the assets. The attacker will then select the most important network asset for vulnerability exploitation to facilitate further attacks. Among them, Eq. (2) refers to the definition and description method of equipment vulnerability impact score in Reference7.

where \(Importance_i\) represents the importance of node i, \(VC_i\) indicates the confidentiality of the vulnerability of node i, \(VI_i\) represents the integrity of the vulnerability of node i,\(VA_i\) indicates the availability of carrying vulnerabilities on node i, \(NL_i\) represents the number of associated devices of node i, w represents the weight of the assets associated with each node, which is a constant, N represents the total number of nodes in the current target network.

It is assumed that the probability of successful exploitation of the vulnerability is 100% after the attacker obtains the vulnerability information of the network assets and the correlation information of the network assets. If the target device selected by the attacker is a real asset, the attack is successful; if the target device selected by the attacker is a honeypot, the defense is successful.

Defense model

In this paper’s research work, we consider the differences in network information obtained through the two attack methods: active probing and passive monitoring. We employ two different types of honeypots to deliberately confuse the network information acquired by the attacker, in order to lure them into further attacking the honeypot. The two types of honeypots used in the scheme are as follows:

H-honeypot: This type of honeypot has a high device simulation capability and can effectively cope with the active probing behavior initiated by the attacker. The H-honeypot achieves a high degree of restoration of the target device by performing a high degree of clone mirroring of the target server, including open services, operating system type, and vulnerability type.

F-honeypot: This type of honeypot has a low deployment cost, supports large-scale deployment, and can effectively cope with the passive monitoring behavior initiated by attackers. F-honeypot is usually a low-level clone of a conventional host. The main function is to generate traffic interaction behaviors such as traffic requests to H-honeypots from time to time to enhance the device correlation degree of H-honeypots.

Further, through RL, a multi-type honeypot deployment strategy is generated, including the number of H-honeypot, the number of F-honeypot, the clone objects of each H-honeypot, and the communication objects of each F-honeypot. Combined with Eq. (2), it can be seen that the importance score of the device is affected by two factors. On the one hand, it is the score of each dimension of the device vulnerability, and on the other hand, it is the correlation score of the device. Because there are exactly the same vulnerabilities between the H-honeypot and its cloned target service, and the selection of the communication target of the F-honeypot will affect the correlation score of the H-honeypot itself. Through different deployment strategies, the correlation scores of different devices are affected to induce attackers to attack the wrong target, so as to achieve the purpose of attack mitigation.

Algorithm design

In this section, we will briefly introduce the RL model framework and the intelligent generation algorithm of multi-type deception resources deployment strategy based on PPO.

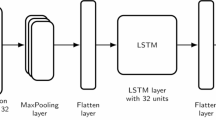

Reinforcement learning model

According to the classical RL theory, the model framework of multi-type deception resources deployment strategy generation based on RL is shown in Fig. 2.

As an important branch of machine learning, the core of RL lies in the dynamic interaction between agents and the environment. In the RL framework, the agent selects actions based on the environmental state, and adjusts the strategy by continuously exploring the reward feedback to maximize the long-term cumulative incentive. In Fig. 2, the defender is the agent, the target network and the attacker are the environment. Usually, the defender will select the deception resources to deploy the action (Action) according to the current network security state (State). Due to the joint action of attacker, defender and target network, the network security status will change. At the same time, defender will receive environmental feedback as a reward (Reward). In this process, the defender continuously interacts with both the target network and the attacker, ultimately seeking to select the optimal strategy. This strategy can serve as a guideline for defenders to implement deception resources deployment in any network security state. By implementing the optimal strategy, defender can achieve the goal of capturing attacker with maximum probability.

In the process of RL, the better the defense strategy chosen by the defender, the higher the reward. The definition of reward is closely related to the goal in RL problems. The optimal strategy is selected to maximize the total reward obtained by the defender in the long time. In addition to the defender, attacker and target network, we also need to define the six main elements of the RL model: state, action, reward, strategy, constraint and objective function.

State: The network state reflects the number, correlation and security states of the four types of devices in the target network: host, server, H-honeypot and F-honeypot. At a given time t, the state of the network is defined as follows:

where \(n_{h}^{t},\) \(n_{s}^{t},\) \(n_{d}^{t}\) and \(n_{v}^{t}\) represent the number of hosts, servers, H-honeypots and H-honeypots in the network at time t, \(L_{1}^{t}\) is stored in the form of matrix, denotes the relationship between the host and the server in the network at time t. \(L_{2}^{t}\) is stored in the form of matrix, denotes the relationship between the H-honeypot and the F-honeypot in the network at time t, \(Aim^t\) denotes the simulation target information of the H-honeypot in the network at time t, which is reflected in the form of matrix, \(T^{t}\) is the security states information of each device node in the network at time t.

Action: The defense action reflects the change of the number of H-honeypots and F-honeypots in the network and the correlationship between them at the current time. At a given time t, the defense action is defined as follows:

where \(d_{d}^{t}\) represents the change of the number of H-honeypots by the defender, \(d_{v}^{t}\) indicates the change in the number of F-honeypots, \(m_{1}^{t}=\left\{ m_{1,1},...,m_{1,j},...,m_{1,n_{s}^{t}} \right\}\) represents the protection target of the H-honeypot that the defender changes, \(m_{2}^{t}=\left\{ m_{2,1},...,m_{2,k},...,m_{2,n_{d}^{t}} \right\}\) represents the correlationship of the F-honeypot that the defender chooses to change.

Reward: The reward reflect the feedback given to the defender by observing the responses of the network and attacker after the defender selects the defense action, and then guide the defender to select better action in the subsequent moments. The reward function is defined as follows:

where \(R_t\) indicates the feedback obtained by the defender according to the next state of the network after performing the selected defense action, \(R_1\) and \(R_2\) represent defense reward value constraints for the agent in different cases, \(c_d\) represents the cost of deploying a single H-honeypot. Generally, the deployment cost of H-honeypot is higher than that of F-honeypots. \(c_v\) represents the cost of deploying a single F-honeypot, \(State_{end}^{success}\) denotes the defender successfully lures the attacker to exploit the vulnerability in the honeypot through the deployment of the defense strategy, that is, the defense is successful. \(State_{end}^{fail}\) means that although the defender has deployed the defense strategy, the attacker still exploits the vulnerability in the important asset, that is, the defense is failure.

Strategy: Strategy \(\pi\) reflects the behavior of the defender at a given time. That is to say, the strategy \(\pi\) is the actions mapping that the defender will take in these network states. In our model, at time t, the policy function is defined as follows:

In addition, the discount factor \(\gamma\) is introduced by Eq. (8) to calculate the long-term cumulative reward to evaluate the pros and cons of the strategy. Among them, the strategy with the highest long-term cumulative reward is the optimal action selection strategy, as shown in Eq. (9).

Constraint: Since there are still many infeasible actions in the action space, we will introduce in detail how to model the relationship and number of servers, H-honeypots, and F-honeypots according to actual constraints to remove inactions. The specific constraints are as follows.

Capacity Logic Constraints: The number of changes in H-honeypots and F-honeypots cannot exceed the upper and lower limits of capacity, and the number of F-honeypots can be changed only when H-honeypots exist. This constraint is formalized as:

Where \(\alpha\) and \(\beta\) represent the maximum deployment number of H-honeypots and F-honeypots, respectively.

Relationship Logic Constraints: The change of H-honeypots need to satisfy the rationality of the relationship with the server, and the change of F-honeypots need to satisfy the rationality of the relationship with the H-honeypot. This constraint is formalized as:

Objective Function: From the defender’s perspective, selecting the optimal deception resources deployment scheme and transforming it into the reward from the environment is the core goal. To this end, we express the objective function of the optimization problem as:

In theory, the above optimization problem can be solved by the traditional dynamic programming method. However, it is difficult to mathematically track the state transition probability of the deception resources deployment strategy process. The introduction of RL solves the above problems well24. We regard P1 as a multi-constraint online problem. In order to solve this problem, we develop a PPO learning algorithm that can adaptively generate a deceptive resources deployment strategy.

Deception resources deployment strategy based on PPO

In this section, we use RL to solve the optimization problem of deception resources deployment strategy. Among many RL algorithms, we select PPO as the algorithm model. Among them, PPO is a mainstream policy-based RL algorithm. It limits the strategy update range by introducing the objective function clipping mechanism to ensure that the difference between the old and new strategies is in a controllable range, so as to avoid the drastic fluctuation of the strategy. In addition, by introducing the importance sampling mechanism, PPO reuses the training data to improve the training speed and data utilization of the model. Compared with Q-Learning, DQN and other models, PPO has both algorithm simplicity and robustness, and shows significant advantages in continuous or discrete action space. Since the PPO algorithm is an algorithm with the Actor-Critic framework, we give the loss function of the actor network and the critic network, the loss function of the actor network is as follows:

Where \(\hat{A}_t\) represents the dominance function at time t, \(r_t\left( \theta \right)\) represents the probability ratio of the old and new strategies, and \(\delta\) is a hyperparameter that controls the clipping range of the clipping function. The dominance function and the probability ratio are defined as:

Where \(\lambda\) is used to balance the variance and deviation, and \(V\left( State_t,\psi \right)\) is the approximation of the state value function. Then the state value target is \(\hat{V}_t\left( State_t \right) =\hat{A}_t\left( State_t,Action_t \right) +V\left( State_t,\psi \right)\), so the loss function of the critic network is:

Finally, the Adam optimizer is used to update the parameter \(\theta\) of the actor network and the parameter \(\psi\) of the critic network through backward gradient propagation.

The pseudo-code of the intelligent generation algorithm of multi-type deception resources deployment strategy based on PPO is shown in Algorithm 1. First, initialize the parameters, the replay buffer, actor and critic networks (line 1-3). Line 4 starts the main body of our algorithm, which is divided into two parts. The first part describes how to generate empirical data samples by interacting with the environment (line 5-17). By observing the current network state, performing deception resources placement actions, obtaining rewards and the next network state (line 7-10), and storing the samples in the experience replay pool, and finally calculating the advantage function and the action-value function (line 11-15). The second part is the network parameter update phase (line 18-22). According to Eqs. (17) and (21), the gradient is calculated and the actor and critic networks are updated (line 19 and 20). And update the action selection strategy (line 22).

Simulation study

In this part, we carry out a series of simulations and give the experimental results of the proposed algorithm. We further prove the superiority of the algorithm by analyzing and comparing with the other baseline algorithms and combining with the multi-dimensional performance metrics analysis.

Experiment setup

In order to verify the effectiveness of the proposed method, we use NetworkX25 to establish an experimental network simulation topology. The topology of the experimental network is shown in Fig. 3.

The experimental network consists of three subnets, subnet1 is composed of two hosts, subnet2 contains three hosts, and subnet3 contains two servers. The attacker controls a host outside the network and uses tools such as Nmap and Nessus to collect information from the experimental network. The attacker then combines this information with CVSS to evaluate the importance of key assets in the experimental network. The simulation steps of the attack are as follows:

-

1.

The attacker scans the experimental network by using Nmap, and determines the IP range of the three subnets based on the ICMP Echo technology in the tool.

-

2.

At the same time, Nmap will have more response characteristics of each device to the ICMP protocol, and return the operating system type and service version information of each device.

-

3.

Based on the operating system type and service version information obtained above, Nessus is used to automatically associate the vulnerability numbers on each device.

-

4.

According to the vulnerability number of each device, the confidentiality, integrity and availability indicators of the vulnerability are obtained through CVSS.

-

5.

Using Wireshark to capture the traffic data in the network, and through the source IP address and destination IP address of the message header, the communication relationship between the devices is sorted out.

-

6.

The importance score of each device is calculated by Eq. (2), and the device with the highest importance score is selected for DDOS attack.

The vulnerability configuration of each asset is shown in Table 1. Considering the size of the network and network resources, we limit the number of two types of honeypots that defenders can deploy. The maximum number of H-honeypots is 2, and the maximum number of F-honeypots is 10. Aiming at the difference in deployment cost between low- and high-interaction honeypots, we study the method of reference12, quantify the deployment cost of a single H-honeypot to 0.5, and the deployment cost of a F-honeypot to 0.1.

Next, we will introduce the hyperparameter setting of DRD-PPO. At present, some hyper-parameter configurations have been widely used in many studies, and these configurations have been proved to have good training performance. For example, the discount factor is 0.9, the clip factor is 0.2 and so on. In addition, we also have more experimental results to adjust some parameters appropriately. For example: DRD-PPO trained 500 episodes, each episode has 30 timesteps. In actor network, we set up one hidden layer with the width of 32. Considering that our action space is discrete, we introduce the Softmax function in the output layer. The important parameters related to the experiment are shown in Table 2.

Baselines and evaluation metrics

To demonstrate the effectiveness of the proposed algorithm in incorporating communication relationship factors in the asset evaluation process, as well as the advantages of using multiple interactive types of honeypots in the selection process, we conduct a performance comparison. This comparison is made between the proposed algorithm and two other baselines:

DRD-PPO (only base vulnerability analysis): For the evaluation method used in the reference7, we introduce it into the proposed algorithm to highlight the advantages of combining communication relationships for evaluation. This method tends to provide protection for devices with poor vulnerability analysis results during honeypot deployment.

DRD-PPO (without F-honeypot): For the high-interaction honeypot used in the reference11, it is introduced into the proposed algorithm to highlight the role of using multi-interaction type honeypots. This method uses only high-interaction honeypots for deployment in the honeypot selection process.

In order to confirm the effectiveness of RL in the deployment strategy, we analyze and compare the performance of the proposed algorithm with the other three baselines:

Deployment policy random generation (Algorithm1): For the comparison algorithm used in reference18, we introduce Algorithm1 to highlight the self-learning ability of the RL model. The method randomly configures the number of various types of honeypots in the generated strategy, the association relationship, and the protection target device of the H-honeypot.

Intelligent generation of full deployment strategy (Algorithm2): In order to reflect that the proposed algorithm balances the consideration between defense benefits and defense costs in the process of honeypot deployment, we introduce the honeypot ratio setting method used in the reference21. The number of various types of honeypot defaults to the largest proportion, but the relationship between each type of honeypot and the H-honeypot protection target device is intelligently generated based on the PPO algorithm.

Random generation of full deployment strategy (Algorithm3): In order to study the influence of cooperation between honeypots on the performance of the algorithm, based on the random deployment method used in reference18, we introduce the method of setting the proportion of honeypots used in reference21. The number of each type of honeypot in the strategy is the same as that in algorithm 2, which defaults to the largest proportion, but the relationship between each type of honeypot and the H-honeypot protection target device is randomly configured.

In order to highlight the advantages of the PPO model in the algorithm, we compare the PPO model used in the proposed algorithm with other mainstream RL models:

DRD-Q, DRD-DQN, DRD-DDQN, DRDAC, DRD-PG, DRD-SAC: This method generates the number and correlation of various types of honeypots in the strategy and the protection target device of H-honeypots through Q-Learning8, DQN26, DDQN27, Actor-Critic28, policy gradient29, soft Actor-Critic30 model.

The evaluation of the proposed algorithm and the other three baseline algorithms is based on the following metrics:

The attack probability of important assets: This metric is used to measure the effectiveness of the algorithm, which indicates the effect of the attack probability of the target device after the defender selects the defense strategy. Usually, the smaller the metric is, the better the effect of the defense strategy generated by the algorithm is.

Defense cost: This metric is used to measure the deployment cost of H-honeypot and F-honeypot in the process of deception resources deployment strategy generation using the corresponding algorithm. Usually, the smaller the metric is, the smaller the resource occupation of the defense strategy generated by the algorithm is.

Defensive success probability (DSP): This metric is used to measure the effectiveness of the algorithm. It indicates the ratio of the number of successful defenses to the total number of experimental simulations. Usually, the larger the metric is, the better the effect of the defense strategy generated by the algorithm is.

Defensive utility (Reward): This metric is used to measure the effectiveness of the algorithm and the occupancy of resources. It indicates how the defender maintains a lower resource occupancy overhead while maintaining the same defensive effect. Usually, the larger the metric is, the better the defense strategy generated by the algorithm is.

Experiment results

In this part, we have carried out a lot of simulation experiments on the proposed algorithm and the baseline algorithms. After averaging, the experimental results of each algorithm are obtained, and the simulation results are analyzed with four evaluation metrics. The purpose of this experiment is as follows: Firstly, the advantages of considering the communication relationship between devices are analyzed by comparing the asset evaluation methods based on vulnerability analysis. Secondly, compared with the deployment strategy using a single type of deception resources, the improvement brought by the introduction of multi-interaction type deception resources is analyzed. Finally, compared with the traditional random generation and full generation methods, the advantages of the deception resources deployment strategy after introducing RL and the cooperation deception resources are analyzed.

Experiment 1: The impact of asset evaluation differences on honeypot deployment strategy:

Figure 4 shows the performance changes of the proposed algorithm in the two cases of using vulnerability analysis-based asset evaluation and using vulnerability analysis and communication relationship-based asset evaluation. The simulation results show that when only vulnerability analysis is considered in the asset evaluation process, the reward obtained by the honeypot deployment strategy decreases, but the convergence speed increases rapidly. In terms of attack probability, the attack probability of the target device increased by 26.7%. This is due to the neglect of the communication relationship between equipment, which makes the evaluation of key assets not accurate enough, which leads to the deviation of the equipment protected by the honeypot. In addition, due to the strategy generation process, the communication relationship between the devices is ignored, which reduces the action space of the RL and effectively improves the convergence speed of the algorithm.

Experiment 2: The impact of diversified selection of honeypots on deployment strategy:

Figure 5 shows the performance changes of the proposed algorithm in the case of using a single type of honeypot and using multiple types of honeypot. The simulation results show that when only H-honeypot is used, the network feedback obtained by the honeypot deployment strategy is reduced, and the attack probability of the target device is significantly improved. This is because leaving the false traffic interaction support of F-honeypot makes the fidelity of H-honeypot less deceptive from the attacker’s perspective. In addition, due to the limited network resources, it is difficult to support large-scale deployment, resulting in a limited number of H-honeypots and can not play a good role in protecting the target device.

Experiment 3: The influence of policy generation method and cooperation between honeypots on deployment strategy:

Figure 6a shows the changes of the defense utility of the four algorithms. The simulation results show that the defense utility of the generated defense strategy is optimal after the proposed algorithm converges through iterative learning. This is because the proposed algorithm trade off the defense cost and defense effectiveness in the strategy generation process. In addition, in the case of the same number of honeypots, the performance of Algorithm2 is better than Algorithm3, indicating that the introduction of collaboration between honeypots helps to improve the effectiveness of the deployment strategy.

Figure 6b shows the impact of the defense strategies generated by the four algorithms on the attack probability of the target asset. The simulation results show that compared with the random deployment strategy, the proposed algorithm reduces the attack probability of the target asset by at least 10.34%, which reflects that the introduction of PPO model provides a strong guidance for the deployment process. Among them, Algorithm2 has a greater reduction in the attack probability of the target device, because the algorithm generates more honeypots for the strategy, which also leads to a greater defense cost overhead of the algorithm.

Figure 6c shows the cost overhead of the defense strategies generated by the four algorithms. The simulation results indicate that, despite the high cost of the deception resources deployment strategy identified in the early stages through continuous attempts, a minimum cost strategy is ultimately achieved. Because Algorithm2 and Algorithm3 use the maximum proportion setting in the process of determining the number of honeypots, the defense overhead of both is the largest.

Table 3 shows the probability of successful defense of the four algorithms in the process of 500 attack-defense confrontations. The simulation results show that the DSP of the proposed algorithm and the full deployment strategy intelligent generation algorithm is better, which is 97.09% and 98.31% respectively. Among them, the DSP of Algorithm2 is higher than the proposed algorithm, because the number of honeypots used by the former is more than the latter. The DSP of Algorithm1 and Algorithm3 is poor, which is 28.43% and 55.19% respectively. The reason is that although the random deployment strategy can confuse the attacker’s attack target by deploying honeypot resources, it lacks pertinence and has poor effect.

By comparing and analyzing the four methods using four evaluation metrics, we find that the proposed algorithm effectively balances the effectiveness and cost of the defense strategy. It minimizes the occupation of network resources while ensuring high defense performance. In addition, the simulation results of the intelligent method show that the performance curve is far better than the other two random strategies in terms of stability.

Experimental analysis of PPO model

In this part, we compare the PPO model used by the proposed algorithm with other mainstream reinforcement learning models. The purpose of this experiment is to show the advantages of using the PPO model for deceptive resources deployment strategies compared to other reinforcement learning models.

Figure 7a shows the defense utility changes of the defense deployment strategies generated under different reinforcement learning models. The simulation results show that the defense utility of the defense strategy generated by the algorithm using the PPO model is optimal after iterative learning convergence. This is because PPO can effectively use the collected samples to improve the strategy through multiple updates. This sample efficiency allows PPO to learn effective strategies in fewer interactions, thereby obtaining higher rewards in the environment. Although the defensive utility of the Q-Learning model can reach the level of the proposed algorithm after convergence, in terms of convergence speed, the convergence speed of the proposed method is the fastest, and the curve fluctuation range is the smallest. This is because the PPO model uses the cliping technique to limit the magnitude of each update, preventing policy updates from being too large to cause instability. At the same time, this small update can make more effective use of the collected samples, so that the model can converge quickly and reduce the number of samples required.

Figure 7b shows the impact of the defense deployment strategy generated under different reinforcement learning models on the attack probability of the target asset. The simulation results show that the algorithm using the policy gradient model can minimize the attack probability after its convergence, followed by the PPO model and Q-Learning. However, the strategy gradient model will lead to instability in the training process due to the gradient estimation of high variance, which is also the reason for the large fluctuation of the curve.

Figure 7c shows the overhead of the defense deployment strategy generated under different reinforcement learning models. The simulation results show that the algorithm using PPO, Q-Learning and SAC model has much lower defense strategy overhead than other algorithms after convergence. Table 4 shows the probability of successful defense of the reinforcement learning model algorithm in the 1500 attack-defense confrontation process. The simulation results indicate that the DSP performance of the DRD-PPO algorithm and DRD-Q is superior, achieving 98.61% and 95.32%, respectively. They are followed by DRD-PG and DRD-SAC, with performances of 87.13% and 78.89%, respectively. DRD-AC, DRD-DQN and DRD-DDQN have the worst DSP performance. This is because AC model lacks constraints in the policy update process, and there will lead to the policy update range is too large. The DQN and DDQN models rely heavily on the experience replay mechanism, and lack the sample sampling mechanism of PPO, which will lead to slow convergence speed and easy to fall into the local optimum.

In the generation of the deception resources deployment strategy, we observe that DRD-PPO performs well in four key areas: defense utility, attack probability impact, defense cost, and defense success rate. Additionally, its convergence speed and stability are excellent. DRD-Q, DRD-PG, DRD-SAC and DRD-AC are second, but their convergence speed is slow. Among them, the curve fluctuation of DRD-SAC is smaller, which is due to its use of soft update method, introducing an entropy term in the process of updating the value function to balance exploration and utilization. The remaining two algorithms are not ideal in terms of defense effect, algorithm convergence or stability.

Conclusion and future work

To address the network reconnaissance of APT, this study proposes DRD-PPO, based on the concept of deception defense. The aim is to integrate the analysis of network assets and attack processes to generate the deployment strategy for deception resources. This method seeks to maximize the utilization efficiency of honeypots while minimizing unnecessary resource overhead. Experimental results demonstrate that, compared to other algorithms, the proposed algorithm achieves defensive success probability of 97.09% and significantly reduces the cost of deployment. In addition, compared with other reinforcement learning methods, the proposed algorithm improves defensive success probability by at least 3.29%, while also exhibiting faster convergence speed and smaller fluctuations. However, the definitions of defense benefit and defense cost in this study are relatively simplistic, and the static deployment characteristics of the proposed algorithm struggle to adapt to the subsequent attack stages of APT. Furthermore, in large-scale distributed networks, the proposed algorithm relies on a single reinforcement learning agent, which faces challenges such as limited computing power and data silos during the defense strategy generation phase.

In view of the limitations, future research will focus on introducing Moving Target Defense (MTD) technology to design the dynamic active defense method based on hybrid defense strategy, while also optimizing the definitions of defense benefit and defense cost in the objective function. Additionally, we will implement the federated learning framework, deploying distributed reinforcement learning agents in large-scale network and designing the multi-agent based honeypot deployment strategy generation method. Furthermore, considering the threats posed by inference attacks31 and poisoning attacks32 in federated learning, we will develop the differential privacy protection mechanism33 for network asset data collection, along with the blockchain-based reinforcement learning model security aggregation mechanism34.

Data availability

All data generated or analysed during this study are included in this published article.

References

Hutchins, E. M. et al. Intelligence-driven computer network defense informed by analysis of adversary campaigns and intrusion kill chains. Lead. Issues Inf. Warfare Secur. Res. 1, 80 (2011).

Panjwani, S., Tan, S., Jarrin, K. M. & Cukier, M. An experimental evaluation to determine if port scans are precursors to an attack. In 2005 International Conference on Dependable Systems and Networks (DSN’05), 602–611 (IEEE, 2005).

Achleitner, S. et al. Deceiving network reconnaissance using sdn-based virtual topologies. IEEE Trans. Netw. Serv. Manage. 14, 1098–1112 (2017).

Wang, C. & Lu, Z. Cyber deception: Overview and the road ahead. IEEE Secur. Priv. 16, 80–85 (2018).

Spitzner, L. The honeynet project: Trapping the hackers. IEEE Secur. Priv. 1, 15–23 (2003).

Liu, X., Ospina, J. & Konstantinou, C. Deep reinforcement learning for cybersecurity assessment of wind integrated power systems. IEEE Access 8, 208378–208394 (2020).

Gabirondo-Lopez, J., Egana, J., Miguel-Alonso, J. & Urrutia, R. O. Towards autonomous defense of sdn networks using muzero based intelligent agents. IEEE Access 9, 107184–107199 (2021).

Wang, S. et al. An intelligent deployment policy for deception resources based on reinforcement learning. IEEE Access 8, 35792–35804 (2020).

Qin, X., Jiang, F., Cen, M. & Doss, R. Hybrid cyber defense strategies using honey-x: A survey. Comput. Netw. 230, 109776 (2023).

Zhou, Y., Cheng, G. & Yu, S. An sdn-enabled proactive defense framework for ddos mitigation in iot networks. IEEE Trans. Inf. Forensics Secur. 16, 5366–5380 (2021).

Anwar, A. H., Kamhoua, C. A., Leslie, N. O. & Kiekintveld, C. Honeypot allocation for cyber deception under uncertainty. IEEE Trans. Netw. Serv. Manage. 19, 3438–3452 (2022).

Anwar, A. H. et al. Honeypot-based cyber deception against malicious reconnaissance via hypergame theory. In GLOBECOM 2022-2022 IEEE Global Communications Conference, 3393–3398 (IEEE, 2022).

Ge, M., Cho, J.-H., Kim, D., Dixit, G. & Chen, I.-R. Proactive defense for internet-of-things: moving target defense with cyberdeception. ACM Trans. Internet Technol. 22, 1–31 (2021).

Shakarian, P., Kulkarni, N., Albanese, M. & Jajodia, S. Keeping intruders at bay: A graph-theoretic approach to reducing the probability of successful network intrusions. In International Conference on E-Business and Telecommunications, 191–211 (Springer, 2014).

Polad, H., Puzis, R. & Shapira, B. Attack graph obfuscation. In Cyber Security Cryptography and Machine Learning: First International Conference, CSCML 2017, Beer-Sheva, Israel, June 29-30, 2017, Proceedings 1, 269–287 (Springer, 2017).

Anwar, A. H., Kamhoua, C. & Leslie, N. A game-theoretic framework for dynamic cyber deception in internet of battlefield things. In Proceedings of the 16th EAI International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, 522–526 (2019).

Jajodia, S., Park, N., Serra, E. & Subrahmanian, V. Share: A stackelberg honey-based adversarial reasoning engine. ACM Trans. Internet Technol. 18, 1–41 (2018).

Anwar, A. H., Kamhoua, C. A., Leslie, N. O. & Kiekintveld, C. Honeypot allocation for cyber deception under uncertainty. IEEE Trans. Netw. Serv. Manage. 19, 3438–3452 (2022).

Silver, D. et al. Mastering the game of go with deep neural networks and tree search. Nature 529, 484–489 (2016).

Silver, D. et al. Mastering the game of go without human knowledge. Nature 550, 354–359 (2017).

Radoglou-Grammatikis, P. et al. Trusty: A solution for threat hunting using data analysis in critical infrastructures. In 2021 IEEE International Conference on Cyber Security and Resilience (CSR), 485–490 (IEEE, 2021).

Li, H., Guo, Y., Huo, S., Hu, H. & Sun, P. Defensive deception framework against reconnaissance attacks in the cloud with deep reinforcement learning. Sci. China Inf. Sci. 65, 170305 (2022).

Guo, J. et al. ICRA: An intelligent clustering routing approach for UAV Ad Hoc networks. IEEE Trans. Intell. Transp. Syst. 24, 2447–2460 (2022).

Xu, C., Zhang, T., Kuang, X., Zhou, Z. & Yu, S. Context-aware adaptive route mutation scheme: A reinforcement learning approach. IEEE Internet Things J. 8, 13528–13541 (2021).

Hagberg, A., Swart, P. J. & Schult, D. A. Exploring network structure, dynamics, and function using networkx (Tech. Rep, Los Alamos National Laboratory (LANL), Los Alamos, NM (United States), 2008).

Mnih, V. et al. Playing atari with deep reinforcement learning. arXiv preprint arXiv:1312.5602 (2013).

Van Hasselt, H., Guez, A. & Silver, D. Deep reinforcement learning with double q-learning. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 30 (2016).

Konda, V. & Tsitsiklis, J. Actor-critic algorithms. Adv. Neural Inf. Process. Syst.12 (1999).

Sutton, R. S., McAllester, D., Singh, S. & Mansour, Y. Policy gradient methods for reinforcement learning with function approximation. Adv. Neural Inf. Process. Syst.12 (1999).

Haarnoja, T., Zhou, A., Abbeel, P. & Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In International Conference on Machine Learning, 1861–1870 (PMLR, 2018).

Yuan, W. et al. Interaction-level membership inference attack against federated recommender systems. Proc. ACM Web Conf. 2023, 1053–1062 (2023).

Wang, Z. et al. Poisoning attacks and defenses in recommender systems: A survey. arXiv preprint arXiv:2406.01022 (2024).

Yao, A. et al. Fedshufde: A privacy preserving framework of federated learning for edge-based smart UAV delivery system. Futur. Gener. Comput. Syst. 166, 107706 (2025).

Dong, C. et al. A blockchain-aided self-sovereign identity framework for edge-based uav delivery system. In 2021 IEEE/ACM 21st International Symposium on Cluster, Cloud and Internet Computing (CCGrid), 622–624 (IEEE, 2021).

Acknowledgements

This work was supported by the Fundamental Research Funds for the Central Universities under project 2022JBQY004, in part by the Fundamental Research Funds for the Central Universities under project 2024QYBS019.

Author information

Authors and Affiliations

Contributions

Changsong Li, Ning Zhao and Hao Wu wrote the main text of the manuscript. Changsong Li prepared figures. All authors reviewed the manuscript. All authors confirm that neither the article nor any part of its content is currently under consideration or published in another journal. The authors agree to publish their work in the journal.

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, C., Zhao, N. & Wu, H. Multiple deception resources deployment strategy based on reinforcement learning for network threat mitigation. Sci Rep 15, 16830 (2025). https://doi.org/10.1038/s41598-025-00348-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-00348-0