Abstract

As digital media grows, there is an increasing demand for engaging content that can captivate audiences. At the same time, the revenue earned from engaging videos has also increased, paving the way for more content-driven videos that can generate revenue. YouTube is the most popular platform that shares advertising revenue with video publishers. This paper focuses on predicting video popularity from YouTube data. We present the idea of mapping video features into a low-dimensional space to obtain latent features. This mapping is achieved by a novel multi-branch child-parent Long Short-Term Memory (LSTM) network. The latent features are used to train a Convolutional Neural Network (CNN) fused with an LSTM, and the trained network then predicts the popularity of unseen videos. We compared our results against Linear Regression (LR), Support Vector Regression (SVR) and Fully Convolutional Networks (FCN) with LSTM. Compared with existing methods, the proposed Multi-branch LSTM Encoded Features with a fused Deep Learning predictor (MLEF-DL predictor) achieves a significant improvement: a 50% reduction in mean absolute error (MAE) and a 0.61% increase in the coefficient of determination (R²).


Introduction

Nowadays, people spend notably more time on digital media. According to the MindShare India report, YouTube gained 13% more viewership in the first quarter of 2020 than in the previous year. This shift created new opportunities for social media platforms like YouTube to grow their viewership and advertising revenue. However, only a small fraction of uploaded videos attract viewers, while billions of others go unwatched. If the popularity of an uploaded video can be predicted, revenue can be increased, so popularity prediction is worth considering as a means to gain subscribers and advertising revenue. Popularity prediction is not trivial, because selecting the features and the predictor is a complex task1. In the past, many researchers have worked on extracting various features from videos, whether temporal features such as views and engagement, or visual, textual and social features. It is accepted that not all of these features are substantial, and based on this observation, a few researchers have focused on selecting features by their mutual correlation, or on combining and generating features with their proposed schemes. Most of them used regression analysis for prediction, and a few suggested deep learning sequential models such as LSTM2. The design of a good predictor remains comparatively underexplored, because work on video features is so complex that this area has received less attention. This motivates the authors to design a framework that gives a better understanding of the features and improves popularity predictions, to the benefit of the targeted audience.

Popularity prediction has been studied using various features such as view duration, video length and category. However, view count is the most commonly used feature. Many researchers have considered heterogeneous features such as textual, visual, acoustic and social features3. The list of features in this domain is never-ending, but do all of these features contribute to popularity prediction? Jeon et al.4 even pointed out view-count volatility as a hurdle for future prediction. Bao et al.5 were also concerned with view-count volatility and used an adaptive peak window to consider historical view counts for prediction; however, they selected the window length by trial and error. Assessing the impact of every feature on the prediction is not feasible. Bielski et al.6 studied the impact of textual and visual features of micro-videos. We also explored the view counts of videos. It can be observed from Fig. 1 that view counts alone are not a reliable parameter, as users may open a video but not watch it if its content is not engaging. Figure 1 plots the view counts and watch time of a video over 100 days. The view counts saturate, flattening to their smallest slope after 2 months, whereas the watch time remains considerable.

Researchers have explored several video attributes for prediction, and a few have also focused on the predictor, but mapping latent features to a lower-dimensional semantic space is unexplored, and this gap remains. Lin et al.7 worked on autoencoder-based clustering for prediction. An autoencoder learns latent features by transforming the input into a lower-dimensional feature vector. A similar concept of latent feature representation is adopted in the Tree-LSTM8. A chain LSTM allows only sequential information flow. The LSTM can be generalized by using the hidden states of child LSTMs, together with the input, to compute the current state. In this way, the latent information of the data is propagated through a Tree-LSTM. Tai et al.8 and other researchers have applied Tree-LSTMs to natural language processing; however, the approach is equally applicable to video popularity prediction.
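To make the idea of propagating child hidden states concrete, the following is a minimal NumPy sketch of one node of the Child-Sum Tree-LSTM variant described by Tai et al.8. It is an illustration under stated assumptions, not the method proposed in this paper: here the child hidden states are summed, whereas the multi-branch design in Sect. 3 cascades them. The parameter names (`Wi`, `Uf`, and so on) and the sizes are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def child_sum_node(x, child_h, child_c, p):
    """One Child-Sum Tree-LSTM node.

    x: node input, shape (input_size,)
    child_h, child_c: child hidden/cell states, shape (n_children, hidden)
    p: dict of hypothetical parameters W*, U*, b* for gates i, o, f, u
    """
    h_sum = child_h.sum(axis=0)  # summed child hidden states
    i = sigmoid(p["Wi"] @ x + p["Ui"] @ h_sum + p["bi"])  # input gate
    o = sigmoid(p["Wo"] @ x + p["Uo"] @ h_sum + p["bo"])  # output gate
    u = np.tanh(p["Wu"] @ x + p["Uu"] @ h_sum + p["bu"])  # candidate update
    # One forget gate per child, so each child's memory is kept or dropped separately.
    f = sigmoid(p["Wf"] @ x + child_h @ p["Uf"].T + p["bf"])  # (n_children, hidden)
    c = i * u + (f * child_c).sum(axis=0)
    h = o * np.tanh(c)
    return h, c

rng = np.random.default_rng(0)
IN, HID = 2, 3  # hypothetical input and hidden sizes
p = {}
for g in "iofu":
    p[f"W{g}"] = rng.normal(scale=0.5, size=(HID, IN))
    p[f"U{g}"] = rng.normal(scale=0.5, size=(HID, HID))
    p[f"b{g}"] = np.zeros(HID)

children_h = rng.normal(size=(4, HID))  # four child nodes
children_c = rng.normal(size=(4, HID))
h, c = child_sum_node(rng.normal(size=IN), children_h, children_c, p)
print(h.shape, c.shape)  # (3,) (3,)
```

A leaf node can be evaluated with the same function by passing empty child arrays of shape `(0, HID)`, in which case the summed state and forget-gated memory are both zero.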

In this paper, video attributes such as engagement features and view counts are mapped into lower dimensions, and their latent information is further generalized by a modified Tree-LSTM. These generalized latent features are the input to a fused FCN-LSTM predictor. We call this novel algorithm the MLEF-DL predictor. Our contributions are:

-

A novel approach that maps video attributes into a lower-dimensional space using multi-branch sequential LSTMs, which are connected to a parent LSTM to produce a latent feature vector.

-

A fused FCN-LSTM predictor to predict the video popularity.

The Tree-LSTM network consists of several child LSTMs and a parent LSTM. Each child LSTM takes a single attribute as input, and the hidden-layer vectors of all child LSTM branches are cascaded to form the input of the parent LSTM. This child-parent relationship helps represent the dependencies among the video attributes. The multi-branch child LSTMs analyze and extract latent features of the video, which strengthens the model's capacity to learn from diverse data. Multi-branching provides a deeper understanding and better utilization of the input video attributes, which ultimately enhances the accuracy and efficiency of the network.

These improved predictions can significantly help online video publishers and channel owners increase their revenue and number of subscribers. The rest of this paper is organized as follows: previous work is discussed in Sect. 2, the proposed approach is detailed in Sect. 3, the experimental evaluation is presented in Sect. 4, and Sect. 5 concludes the work.

Literature review

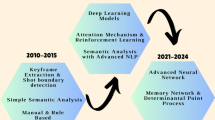

There has been a lot of work in this field. One method is built on knowledge base (KB) entities and leverages KB information9. Another is the Lifetime Aware Regression Model (LARM), reported as the first model designed to leverage lifetime information10. A large-scale benchmark, dubbed the Fine-grained Video Attractiveness Dataset (FVAD), tracks fine-grained video attractiveness and is accompanied by a prediction model11. An adversarial training model, PreNets, searches for an equilibrium between feature-driven and point-process models12. Wasserstein Generative Adversarial Networks (WGAN), an alternative to the conventional GAN, improve learning stability by making the model free of the mode-collapse problem13. Another approach uses an SVM with a Radial Basis Function kernel to predict online popularity14. Machine learning methods have been applied to the prediction15, and an interactive visualization system based on the Hawkes Intensity Process (HIP) has been developed for the same purpose16. A set of aggregated metrics, such as average watch time, average watch percentage and a new metric, relative engagement, has been considered for analysis17. In18, a time series classification task is performed by an FCN with hybrid LSTM-Recurrent Neural Network (RNN) submodules, and a multivariate LSTM-RNN variant was subsequently designed19. Predicting popularity also helps to highlight sensitive issues and to increase awareness among the audience. Many other methods predict video popularity: a fuzzy-based model assigns a score to each video and classifies it on various scales of popularity20, and GraphInf clusters short videos using a graph-based method21.
Next, three well-known popularity prediction models, namely Szabo-Huberman (SH), multivariate linear (ML) and multivariate linear Radial Basis Functions (MRBF), have been enhanced: small groups are created with hierarchical clustering, an unsupervised grouping technique, and an optimal cut-off is then decided based on the total entropy of the video requests made by users in these groups22. Another concept was introduced in23, where the CatBoost framework is employed to explore feature generalization and short-term dependency. A further model targets content shared in online social networks (OSNs) and takes its main features into consideration24. Other studies have investigated and modelled the actual reasons for popularity25,26.

Many efforts have been made to predict the popularity of different data formats on the web, such as text27,28, images29,30,31 and, lastly, videos14,24,32,33, owing to their considerable impact on business34,35. In general, prediction is carried out by simply comparing content and finding correlations in the social data. Predicting the popularity of new hashtags on Twitter has been formulated as a classification problem28, using seven content features and 11 contextual features extracted from the hashtag strings and the social graph formed by users. Image popularity is predicted using content-, context- and social-based feature extraction. The significance of each of multiple visual features and social context features for the popularity prediction task is presented in36. Aesthetic features such as contrast, sharpness and brightness, together with semantics, can be used to predict the popularity of an image14. The popularity of social images is predicted by combining context features, user features and image sentiment information31.

Video popularity is normally predicted by integrating different visual and textual modalities. The popularity of video content has been investigated based on engagement24,32,37. The correlation between user-generated content and video popularity has been determined through a large-scale data-driven analysis32. Impressive video content and social response are considered as inputs to predict the popularity of social media content24. Temporal and visual features are also used to predict the popularity of social media content14. The performance of popularity prediction does not depend only on the content itself; user response and context information can also enhance it. Several studies15,16,24 have explored video popularity by fusing information from different patterns. Traditional popularity prediction methods have a higher misclassification rate, which machine learning approaches reduce. Methods such as k-Nearest Neighbors (KNN), Multiple Linear Regression (MLR) and Autoregressive Integrated Moving Average (ARIMA) have been tested for the popularity prediction task. A combination of intrinsic video features and propagation structure has been used to develop a popularity prediction scheme38. In39, a Dual Sentimental Hawkes Process (DSHP) that effectively identifies the underlying factors is proposed for video popularity prediction. A learning model can capture the social response to video content25. The noise and insufficiency issues of micro-video popularity prediction are resolved by transductive multimodal learning, which describes features from separate categories in a common latent space40,41.

A multilevel regression-based method is proposed to model and capture the relationship between different features of social media posts and their group-level popularity42. Authors in43 explored the application of tensor factorization techniques to predict the popularity of social media posts. A deep learning model with stacked Bidirectional Long Short Term Memory (BiLSTM) layers is suggested to predict the popularity of videos based on various features, including content-related information, user engagement, and temporal factors44. An advanced recommendation system has been devised that utilizes hypergraph-based modeling, user segmentation, and item similarity learning to enhance prediction and recommendation accuracy45.

An AI-driven solution has been proposed to identify and rank the key features that determine whether a YouTube video becomes trending46. The analysis uses a dataset containing both trending and non-trending videos with 12 distinct video features. The model leverages sentiment analysis and classification techniques, along with feature selection, to improve the accuracy of predicting video popularity. A framework that incorporates deep learning within a meta-heuristic-based wrapper approach has been suggested to identify the optimal feature subset for cancer prediction47. This framework employs an ensemble of three filter methods to minimize the chance of missing important features. A supervised machine learning-based framework for emotion recognition in email text was developed, incorporating three feature selection methods, three classification algorithms, two clustering techniques, and several cluster validity indices48. The framework extracted 14 features from the datasets and then applied three feature selection techniques, namely Principal Component Analysis (PCA), Mutual Information (MI), and Information Gain (IG), to identify the most relevant features for classification. Subsequently, three classifiers, namely Artificial Neural Network (ANN), Random Forest (RF), and Support Vector Machines (SVM), were used to predict the emotions in the emails. Additionally, clustering techniques such as k-means, k-medoids, and fuzzy c-means were applied to examine the distribution of various emotional categories. Temporal, spectral and Mel Frequency Cepstral Coefficient (MFCC) methods have been used to extract features from signals, and their correlation with the depression, anxiety and stress emotional states of humans has been examined49. A BiLSTM network is used to classify the generated feature vectors.
A novel metaheuristic optimizer, the Binary Chaotic Genetic Algorithm (BCGA), has been proposed to improve Genetic Algorithm (GA) performance for feature selection50. Chaotic maps are applied to the initial population, followed by the reproduction operations; in this way, BCGA enhances the mutation and population-initialization steps of the raw GA. An enhanced GA-based feature selection method, GA-Based Feature Selection (GBFS), has been proposed to improve the detection accuracy of classifiers in an Intrusion Detection System51.

This literature survey shows that a wide variety of algorithms have been proposed by different researchers to predict the future popularity of videos. Researchers have explored several video attributes for the prediction, as well as the predictor itself. As per the literature surveyed by the authors, mapping latent features to a lower-dimensional semantic space was unexplored. Therefore, the authors worked on extracting latent features of the videos, which are then fed to the fused deep learning predictor.

Proposed approach

In this paper, we cast popularity prediction as a time series prediction problem: the objective is to predict the popularity of YouTube videos at time \(t_t\), given the video attributes up to time \(t_r\), where \(t_t > t_r\). The collected data is time-series data, and in the literature the LSTM model has been found to be very efficient for time series prediction. It is a good choice for sequential data, but in all of its variants, whether a chained LSTM or any other arrangement, the information propagates strictly sequentially. We use the sequential power of the LSTM to map the latent features into a low-dimensional space, in the manner of an autoencoder in deep learning. We exploit this strength of the sequential LSTM both in feature mapping and in popularity prediction, in conjunction with fully convolutional layers.

The volume, veracity and velocity of data from the many devices connected to the internet have created a need to process this information. One of the most efficient tools to have emerged for real-time processing is the RNN52, which brings neural networks to sequential data processing. Unlike feedforward neural networks, RNNs have feedback connections and can process inputs of any length; however, training them is the most difficult part. A simple RNN is a neural network that exhibits temporal behavior by connecting its individual layers over time. The authors in53 implement an RNN that maintains a hidden vector \(h\), which is updated at time step \(t\) as shown in Eq. 1.

In Eq. 1, \(\tanh\) is the hyperbolic tangent function, \(x_T\) represents the input vector at time step \(T\), \(W\) is the recurrent weight matrix and \(I\) denotes the projection matrix. Equation 2 shows how the prediction \(y_T\) is evaluated from the hidden state \(h\) and the weight matrix \(W\).

The output of the prediction model is normalized by the softmax function to provide a valid probability distribution. \(\sigma\) represents the logistic sigmoid function, and a deeper network is built by using the RNN hidden states \((h_{l-1}, h_l)\) of different layers:
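Eqs. 1 to 3 are not reproduced in this text. Assuming Eq. 1 takes the common form \(h_T = \tanh(W h_{T-1} + I x_T)\) and that the prediction of Eq. 2 is a linear projection of \(h_T\) normalized by softmax, a simple RNN forward pass can be sketched in NumPy as follows. The output matrix `V` is a hypothetical name, used to keep it distinct from the recurrent matrix `W`; this is an illustrative sketch, not the implementation used in53.

```python
import numpy as np

def rnn_forward(xs, W, I, V):
    """Simple RNN over a sequence xs of shape (time_steps, input_size).

    Assumed recurrence: h_T = tanh(W @ h_{T-1} + I @ x_T), y_T = softmax(V @ h_T).
    V is a hypothetical output weight matrix.
    """
    h = np.zeros(W.shape[0])
    hs, ys = [], []
    for x_t in xs:
        h = np.tanh(W @ h + I @ x_t)        # recurrent update (assumed Eq. 1)
        logits = V @ h                      # projection to outputs (assumed Eq. 2)
        p = np.exp(logits - logits.max())   # softmax, shifted for numerical stability
        ys.append(p / p.sum())
        hs.append(h)
    return np.array(hs), np.array(ys)

rng = np.random.default_rng(0)
xs = rng.normal(size=(5, 3))             # 5 time steps, 3 input features
W = rng.normal(scale=0.5, size=(4, 4))   # recurrent weights (4 hidden units)
I = rng.normal(scale=0.5, size=(4, 3))   # input projection
V = rng.normal(scale=0.5, size=(2, 4))   # hypothetical output weights (2 classes)
hs, ys = rnn_forward(xs, W, I, V)
print(hs.shape, ys.shape)  # (5, 4) (5, 2)
```

Each row of `ys` sums to 1, which is the "valid probability distribution" property the softmax provides.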

Weight updates in an RNN are mainly gradient based, which leads to either the vanishing or the exploding gradient problem. This problem is addressed by the LSTM, a special type of RNN with internal memory and multiplicative gates. The LSTM was first introduced in 199754, and to date more than 1000 works have been reported in this field. Many new approaches have been explained regarding LSTM functionality55,56,57 and its effectiveness58. Three gates, the forget gate, the input gate and the output gate, control the function of the LSTM memory cell. The input gate controls which input information is stored, and the output gate similarly controls which information is released. The forget gate performs the delete operation on the memory cell by comparing past and present stored values, allowing the cell to remember or forget its previous information. This special structure gives the LSTM an advantage over the traditional RNN in many applications, such as feature extraction and semantic analysis, especially for long data sequences. LSTM approaches normally use one of two simple strategies to further extract local features from the LSTM output: (1) max pooling or (2) average pooling. To improve efficiency, the LSTM is combined with a CNN, and on this basis the problem formulation is modelled below. Some recent works combine LSTM and CNN with 3D video analysis in a Multi-Task Learning (MTL) mechanism tailored to information sharing across video inputs55. Other works used a 3D CNN to capture temporal motion information, combined with an LSTM for better video analysis58,59,60,61,62.
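The two pooling strategies mentioned above reduce an LSTM output sequence of shape (time steps, hidden units) to a single fixed-size vector. A minimal NumPy sketch, with a hypothetical helper `pool_over_time` and toy values:

```python
import numpy as np

def pool_over_time(lstm_outputs, mode="max"):
    """Collapse an LSTM output sequence (time_steps, hidden) to (hidden,)."""
    if mode == "max":
        return lstm_outputs.max(axis=0)   # strongest activation per hidden unit
    if mode == "average":
        return lstm_outputs.mean(axis=0)  # mean activation per hidden unit
    raise ValueError(f"unknown pooling mode: {mode!r}")

outputs = np.array([[0.1, -0.4],
                    [0.7,  0.2],
                    [0.3,  0.5]])          # 3 time steps, 2 hidden units
print(pool_over_time(outputs, "max"))      # [0.7 0.5]
print(pool_over_time(outputs, "average"))
```

Max pooling keeps the most salient activation of each unit over time, while average pooling summarizes the whole sequence. In Keras, comparable reductions are provided by the `GlobalMaxPooling1D` and `GlobalAveragePooling1D` layers.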

Latent features mapping

This paper introduces a generalized LSTM that extracts latent features from every video attribute and maps them to a hidden feature space using a multi-branch LSTM. The hidden state of every standard LSTM is composed from its current input and the hidden state at the previous time step. In the proposed multi-branch LSTM, the hidden state is composed from many child LSTM branches, and the number of child LSTMs equals the number of video attributes. Every child LSTM is trained on a univariate attribute, with the attribute value at the next timestamp as the target, and its trained hidden states are the latent features of that attribute. The input gate equation of the standard LSTM is

Where \(X_t\) is the video attribute at time instant \(t\), and \(W_i\), \(b_i\) are the weights and bias of the LSTM input gate. The superscript \(ch\) denotes the child LSTM, and the previous hidden state is represented by \(h_{t-1}\). The forget gate and output gate of the LSTM are formulated in Eqs. 5 and 6, respectively.

The hidden state at time \(t\) is the elementwise product of \(o_t\) and the cell state at that time, as shown in Eq. 7.
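Eqs. 4 to 7 are not reproduced in this text. The following NumPy sketch shows one step of a child LSTM, assuming the standard gate formulation: each gate applies a sigmoid to \(W x_t + U h_{t-1} + b\), the cell state is updated as \(c_t = f_t \odot c_{t-1} + i_t \odot g_t\), and \(h_t = o_t \odot \tanh(c_t)\). The \(\tanh\) on the cell state in Eq. 7, the candidate term \(g_t\), and all helper names are assumptions of this sketch.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, params):
    """One step of a standard LSTM cell (assumed form of Eqs. 4 to 7).

    params maps each gate name to (W, U, b): input weights, recurrent weights, bias.
    """
    def affine(gate):
        W, U, b = params[gate]
        return W @ x_t + U @ h_prev + b

    i_t = sigmoid(affine("input"))       # input gate (Eq. 4)
    f_t = sigmoid(affine("forget"))      # forget gate (Eq. 5)
    o_t = sigmoid(affine("output"))      # output gate (Eq. 6)
    g_t = np.tanh(affine("candidate"))   # candidate cell content (assumed)
    c_t = f_t * c_prev + i_t * g_t       # cell-state update
    h_t = o_t * np.tanh(c_t)             # hidden state, i.e. latent feature (Eq. 7)
    return h_t, c_t

def init_params(input_size, hidden_size, rng):
    """Random (untrained) weights for the four gates; for illustration only."""
    return {
        gate: (rng.normal(scale=0.3, size=(hidden_size, input_size)),
               rng.normal(scale=0.3, size=(hidden_size, hidden_size)),
               np.zeros(hidden_size))
        for gate in ("input", "forget", "output", "candidate")
    }

rng = np.random.default_rng(1)
params = init_params(input_size=1, hidden_size=4, rng=rng)  # univariate child LSTM
h, c = np.zeros(4), np.zeros(4)
for value in [0.2, 0.5, 0.1]:            # a short series of one attribute
    h, c = lstm_step(np.array([value]), h, c, params)
print(h.shape)  # (4,)
```

With `input_size=1` the cell consumes a single attribute per time step, mirroring the univariate child LSTMs described above.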

This hidden state vector is our latent feature for a video attribute. The data considered in this work has 9 temporal and engagement attributes, so the hidden-state matrix is \(H^{ch} = (h^{ch}_{t_1}, \dots, h^{ch}_{t_9})\), formed by cascading the hidden states of the child LSTMs. A parent LSTM takes the hidden states of every child LSTM as its input matrix \(H\) and receives this input at its input gate, as in Eq. 8.

Here \(I_t^P\) represents the parent LSTM input gate and \(H_t^{ch}\) is the input matrix formed from the child LSTMs' hidden states. The final latent features \(\tilde{h}_t\) are then calculated in the same way as for the child LSTM, starting from Eq. 4. Figure 2 shows the mapping of features into the latent state by the proposed multi-branch LSTM. Figure 3 shows the layer diagrams of the child LSTM model and the parent LSTM model, plotted with the Python deep learning package Keras. Each child LSTM takes a single attribute as input, and all hidden-layer vectors are cascaded at the input of the parent LSTM, giving a matrix of size \(samples\times 9\), as shown in Fig. 3(b). The '?' in Fig. 3 denotes the (unspecified) number of samples at the input and output. Each LSTM returns three output vectors at its output layer: the predicted attribute value for the testing label (\(y_t\)), the hidden state vector (\(h_t\)) and the last memory cell vector (\(c_t\)).
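The child-parent arrangement described above can be sketched as a forward pass in NumPy. This is a minimal illustration under assumptions, not the authors' Keras implementation: the weights are random rather than trained (the paper trains each child LSTM on next-timestamp targets), each child has a hypothetical hidden size `CHILD_UNITS = 1` so that the cascaded vector has 9 entries per sample, matching the \(samples\times 9\) input in Fig. 3(b), and the cascaded vector is presented to the parent LSTM as a single time step. All function and variable names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def make_lstm(input_size, hidden_size, rng):
    """Random weights for one LSTM; the four gates are stacked row-wise."""
    return (rng.normal(scale=0.3, size=(4 * hidden_size, input_size)),   # W: input weights
            rng.normal(scale=0.3, size=(4 * hidden_size, hidden_size)),  # U: recurrent weights
            np.zeros(4 * hidden_size))                                   # b: biases

def run_lstm(seq, weights):
    """Run an LSTM over seq of shape (time_steps, input_size); return the final (h, c)."""
    W, U, b = weights
    n = U.shape[1]
    h, c = np.zeros(n), np.zeros(n)
    for x_t in seq:
        z = W @ x_t + U @ h + b
        i, f, o = sigmoid(z[:n]), sigmoid(z[n:2 * n]), sigmoid(z[2 * n:3 * n])
        g = np.tanh(z[3 * n:])
        c = f * c + i * g
        h = o * np.tanh(c)
    return h, c

def multi_branch_latent(window, child_weights, parent_weights):
    """Latent vector for one window of shape (time_steps, n_attributes).

    Each attribute column goes through its own child LSTM; the final child hidden
    states are cascaded (concatenated) and fed to the parent LSTM as one step.
    """
    child_h = [run_lstm(window[:, [k]], w)[0] for k, w in enumerate(child_weights)]
    H = np.concatenate(child_h)            # shape (n_attributes * child_units,)
    latent, _ = run_lstm(H[np.newaxis, :], parent_weights)
    return latent

rng = np.random.default_rng(2)
N_ATTR, CHILD_UNITS, PARENT_UNITS = 9, 1, 8    # hypothetical sizes
children = [make_lstm(1, CHILD_UNITS, rng) for _ in range(N_ATTR)]
parent = make_lstm(N_ATTR * CHILD_UNITS, PARENT_UNITS, rng)
window = rng.normal(size=(7, N_ATTR))          # 7 time steps of 9 attributes
latent = multi_branch_latent(window, children, parent)
print(latent.shape)  # (8,)
```

Keeping one LSTM per attribute lets each branch specialize in a single time series before the parent combines them, which is the motivation given for the multi-branch design.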

We have a time series dataset with attributes \(a_i\), \(i = 1, 2, \dots, 9\), for every video. This data cannot be fed directly to a sequential LSTM, because training the LSTM model for latent feature extraction requires target values, which a raw time series does not provide. We therefore convert the data into frames, using the attributes at the next time instant as the target values for the current attributes. A sample in the data matrix \(X_t\) would then be
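The framing step described above pairs the attributes at each time instant with those at the next instant. A minimal NumPy sketch follows; the helper name `make_frames` and the `horizon` parameter (which generalizes the one-step target towards \(t_t > t_r\)) are hypothetical, and the toy values are invented for illustration.

```python
import numpy as np

def make_frames(series, horizon=1):
    """Pair each row of a (time_steps, n_attributes) series with the row
    `horizon` steps later, turning the series into (input, target) samples."""
    series = np.asarray(series)
    if not 1 <= horizon < len(series):
        raise ValueError("horizon must be at least 1 and shorter than the series")
    X = series[:-horizon]   # attributes at time t
    y = series[horizon:]    # attributes at time t + horizon (targets)
    return X, y

# Toy daily series with two attributes (e.g. views and watch time).
daily = np.array([[10, 3.0], [14, 2.5], [9, 4.0], [20, 3.5]])
X, y = make_frames(daily)
print(X.shape, y.shape)  # (3, 2) (3, 2)
```

A series of length \(n\) yields \(n - \text{horizon}\) samples, so the last day of each video only ever appears as a target.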

The data is randomly divided into training and testing sets, and latent features are extracted for each. Data standardization is also applied to avoid bias during LSTM training. The pseudo-code for latent feature extraction by the proposed multi-branch parent-child LSTM is shown in Algorithm 1.
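A sketch of the random train/test split followed by standardization, assuming an 80/20 split (the proportion reported in the Discussion) and z-score standardization. Fitting the mean and standard deviation on the training portion only is a design choice of this sketch that keeps test statistics out of training; the paper does not state the order of the two steps. The function name and data are hypothetical.

```python
import numpy as np

def split_and_standardize(X, y, test_fraction=0.2, seed=0):
    """Randomly split samples, then z-score both sets with training-set statistics."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_test = int(round(test_fraction * len(X)))
    test_idx, train_idx = idx[:n_test], idx[n_test:]

    mean = X[train_idx].mean(axis=0)
    std = X[train_idx].std(axis=0)
    std[std == 0] = 1.0  # guard against constant attributes

    def standardize(A):
        return (A - mean) / std

    return ((standardize(X[train_idx]), y[train_idx]),
            (standardize(X[test_idx]), y[test_idx]))

rng = np.random.default_rng(3)
X = rng.normal(loc=50.0, scale=10.0, size=(100, 9))  # 100 samples, 9 attributes
y = rng.normal(size=100)
(X_train, y_train), (X_test, y_test) = split_and_standardize(X, y)
print(X_train.shape, X_test.shape)  # (80, 9) (20, 9)
```

After this step each training attribute has zero mean and unit variance, so no single attribute dominates the LSTM inputs because of its scale.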

Prediction of video popularity

The CNN has emerged as an alternative to the LSTM for multivariate time series prediction. A CNN captures the spatial and temporal characteristics of the input data, while an LSTM models the temporal information of the time series. This is why a CNN combined with an LSTM performs better than a standalone LSTM: the noise-free features extracted by the CNN layers are fed into the LSTM input. We also tested a standalone LSTM configuration, as discussed in Sect. 4. The mean absolute error of the LSTM is higher than that of the CNN-LSTM, because in the CNN-LSTM the CNN extracts spatial features from the latent features \(\tilde{h}_t\) and propagates them to the LSTM. The CNN also convolutionally filters noise from the temporal \(\tilde{h}_t\) features.

The CNN consists of an input layer that takes three-dimensional input, because CNNs were originally designed for image processing applications. The input layer is followed by hidden layers and an output layer that passes the extracted features to the LSTM. The hidden layers consist of a convolutional layer, a ReLU layer and a pooling layer. The convolutional layer convolves the latent features and passes the result to the next layer. To make the CNN deeper, we used two convolutional layers. The convolved outcome of the first convolutional layer is mathematically represented as:

Here \(y_t^1\) is the outcome of the first convolutional layer. To reduce overfitting and control the computational cost, a max-pooling layer is used in the CNN. Equation 11 shows the max-pooling process, where \(T\) is the stride that controls how the filter slides during time series prediction. If \(T = 1\), the filter slides by one timestamp.

Where \(R\) is the pooling size. The output of the max-pooling layer is flattened before being presented to the LSTM layers. The proposed architecture, with each layer's input and output, is shown in Fig. 4. The filter size and stride length were chosen experimentally, and Table 1 lists the CNN-LSTM configuration. The input to the CNN-LSTM is the latent vector \(\tilde{h}_t\) from the multi-branch LSTM, so the filter size at the input layer is 1, as shown in Table 1 and Fig. 4. Two convolutional layers with \(1\times 16\) filters are used with a stride of 2, and their output is \(1\times 16\times 1\). The flatten layer reshapes this output to \(1\times 16\) for the LSTM layer. The LSTM has 20 hidden neurons, so its output matrix has shape \(1\times 9\times 20\). Finally, dense layers output the predicted popularity value for the next day.
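Eqs. 10 and 11 are not reproduced in this text. Assuming the first layer computes a ReLU-activated one-dimensional convolution and the pooling layer takes the maximum over windows of size \(R\) moved by stride \(T\), the two operations can be sketched in NumPy as below. As in deep-learning libraries, the "convolution" is implemented as cross-correlation (no kernel flip). The helper names and toy values are hypothetical; in Keras the corresponding layers are `Conv1D` and `MaxPooling1D`.

```python
import numpy as np

def conv1d_relu(x, kernel, bias=0.0, stride=1):
    """Valid 1-D convolution (cross-correlation) followed by ReLU."""
    k = len(kernel)
    n_out = (len(x) - k) // stride + 1
    out = np.array([x[t * stride:t * stride + k] @ kernel + bias for t in range(n_out)])
    return np.maximum(out, 0.0)  # ReLU

def max_pool1d(y, pool_size, stride):
    """Maximum over windows of length pool_size (R), moved stride (T) steps at a time."""
    n_out = (len(y) - pool_size) // stride + 1
    return np.array([y[t * stride:t * stride + pool_size].max() for t in range(n_out)])

latent = np.array([0.5, -1.0, 2.0, 0.0, 1.5, -0.5, 1.0])  # toy latent sequence
features = conv1d_relu(latent, kernel=np.array([1.0, -1.0]))
pooled = max_pool1d(features, pool_size=2, stride=2)
print(features, pooled)
```

Here the 7-step input gives 6 convolution outputs (\(7 - 2 + 1\)), and pooling with \(R = 2\), \(T = 2\) halves that to 3 values, illustrating how pooling reduces computational cost.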

Experimental evaluation

Dataset

The video dataset presented in17 is used for the popularity prediction task. The video data was collected from the YouTube platform using the YouTube API, which provides metadata such as channel ID, duration, upload time, description, video ID and title. A software package was developed to retrieve three daily series of video attention dynamics: daily shares, watch time and view counts. Video content is an important factor in predicting popularity, because user interest depends on it: if the content is good, users watch for longer, which also increases the number of users per day.

Three user activities, namely daily watch time, number of shares and view counts, are essential for video popularity prediction. Engagement between users and video content can increase the watch duration and view counts of a particular video, and a higher engagement factor can improve the popularity prediction of a video. Figure 1 shows the inconsistency between daily watch time and daily view counts for automobile videos.

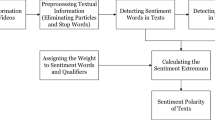

As shown in Fig. 1, daily watch time decreases after the content is uploaded, but view counts do not behave in the same way. Figure 5(a) shows Gaussian \(pdf\) curves of the average watch percentage and video duration for automobile videos. The black curve marks 50% of the watch-duration data, and the blue curves mark 10%, 30%, 70% and 90%. Figure 5(b) plots the correlation matrix of the available features: watch percentage, view counts and watch time. Daily watch time and daily views have a correlation of 0.55, and the average watch duration over 30 days shows a correlation of 0.68. The correlation matrix also shows that watch duration is not affected by video quality. This article uses 9 features, which include daily views, duration, average views over 30 days, average watch duration over 30 days, definition, category, average watch percentage and days. These features fall into three categories, namely the control variable, contextual features and engagement features, which are discussed below:

-

Control variable: Duration of the watch time is the key control variable in the prediction. It is the primary feature of the engagement. Other engagement features stated in the literature are indirectly mapped to the engagement. The watch duration is a globally accepted direct engagement feature. So, this article includes it as the control variable.

-

Contextual features: these features, category and definition, are provided by the video publisher. The video category is specified by the uploader, while the video definition, i.e., the picture quality, is detected automatically by YouTube and can also be specified by the publisher. High-quality videos (1080p, 720p) are denoted as 1 and low-quality videos (480p, 360p, 240p) as 0.

-

Engagement features: the primary engagement feature is the average amount of time a user spends on the video; if the content is good, the watch duration is higher. The average watch percentage \(\bar{\mu}_t\) is such a feature, calculated as the average watch time divided by the duration, as given in Eq. 12.

Here \(\bar{\omega}_t\) is the average watch time and \(D\) is the duration. The average watch time is calculated as the total watch time divided by the total view count, as in Eq. 13.

Here \(\:{x}_{w}\) and \(\:{x}_{v}\) are the watch time and views.
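Eqs. 12 and 13 translate directly into code. The sketch below assumes watch time and duration are expressed in the same time unit (minutes in the toy example) so that the watch percentage is a dimensionless ratio; the function names and numbers are hypothetical.

```python
def average_watch_time(watch_times, views):
    """Eq. 13 as described: total watch time divided by total view count."""
    total_views = sum(views)
    if total_views == 0:
        raise ValueError("no views recorded")
    return sum(watch_times) / total_views

def average_watch_percentage(watch_times, views, duration):
    """Eq. 12 as described: average watch time divided by video duration.

    Assumes watch_times and duration use the same time unit.
    """
    return average_watch_time(watch_times, views) / duration

daily_watch_minutes = [300, 240, 60]   # x_w over three days (toy values)
daily_views = [100, 80, 20]            # x_v over the same days
print(average_watch_time(daily_watch_minutes, daily_views))                   # 3.0
print(average_watch_percentage(daily_watch_minutes, daily_views, duration=6))  # 0.5
```

With 600 minutes of total watch time over 200 views, the average watch time is 3 minutes, and for a 6-minute video the average watch percentage is 0.5, i.e. viewers watch half the video on average.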

Baselines

The proposal is to extract latent information from the sequential propagation of video information through the LSTM model, with temporal and engagement features as input. Our work started by looking for features more informative than view counts, which do not present complete information over time. Researchers in3,4,5,6 followed the same ideology; however, instead of exploring a low-dimensional feature space, they enlarged the feature set with diverse features such as textual and visual ones.

Because the datasets differ, our solution cannot be compared directly against their feature sets; instead, we use the predictors employed in those studies, namely LR, SVR and deep neural networks such as LSTM. LR is also used as the primary predictor in14, and Wu et al.17 used LR and SVR for comparison, reporting that SVR performed worse than LR. Since we test the MLEF-DL predictor on the same dataset as17, a more valid comparison is possible.

Evaluation parameters

YouTube popularity prediction is made on time series data, for which standard cross-validation is not possible because the samples are not independent. We reshape our multivariate data for time series analysis, and the predictions are evaluated by Mean Absolute Error (MAE) and the coefficient of determination (\(R^2\)).

MAE is more robust to outliers, and the YouTube dataset is prone to outliers because the data captures user behavior only to a limited extent. Additional metrics could capture user events, such as whether a user skips parts of the content and watches only certain intervals of the video, but such events are not defined in the data used. A video's popularity is also affected by external conditions, such as an overnight spike in its views; these are treated as outliers in the prediction. MAE is a suitable regression evaluation parameter in the presence of outliers63. However, MAE has limitations because it uses the arithmetic mean (AM): the AM can bias it toward overrating, and the AM is severely affected when the number of samples is small, which is possible when predicting for videos of any genre. For such cases, the coefficient of determination (\(R^2\)) is used, as it measures how much of the variability in one time series is explained by another. The two measures are briefly described below:

MAE

MAE is a scale-dependent measure expressed in the same units as the predicted quantity. It is widely used to measure the accuracy of univariate and multivariate time-series forecasts from the prediction errors between forecasted and observed values. It is calculated as in Eq. 14.

Coefficient of Determination (\(R^{2}\)): It is a statistical measure of how well the predicted time-series values agree with the observed values. Its value typically lies between 0 and 1, and the higher the value, the better the predicted values fit the observed ones. It is calculated as in Eq. 15:

Here \(x\) and \(y\) are the observed and predicted time-series values, respectively.
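For reference, the standard forms of the two measures are sketched below, assuming \(x_i\) and \(y_i\) are the observed and predicted values at time step \(i\), \(n\) is the number of test samples and \(\bar{x}\) is the mean of the observed values; the notation of the original Eqs. 14 and 15 may differ.

```latex
\begin{aligned}
\mathrm{MAE} &= \frac{1}{n}\sum_{i=1}^{n}\left|x_i - y_i\right| && (14)\\
R^{2} &= 1 - \frac{\sum_{i=1}^{n}\left(x_i - y_i\right)^{2}}{\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^{2}} && (15)
\end{aligned}
```

Under this form \(R^{2}\) equals 1 for a perfect fit and can drop below 0 when the predictions fit worse than the constant \(\bar{x}\); some works instead report the squared Pearson correlation, which is bounded by 0 and 1.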

Discussion

We implemented the MLEF-DL predictor in Python, using the Keras package for deep learning, and Google Colaboratory was primarily used for training. The comparison with the state-of-the-art algorithms discussed in Sect. 4.2 is tabulated in Table 2. LR, SVR and other LSTM deep learning configurations were tested directly on the processed video attributes and compared with the proposed MLEF-DL predictor. In machine learning, evaluation is performed on unseen data on which the network has not been trained, since a network may perform well during training but differently on unseen data. We therefore trained the network on 80% of the data and tested it on the remaining 20%, which was not used in training.
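The 80/20 hold-out can be sketched as below. Because the samples are time-ordered and not independent, this sketch assumes the split is chronological (no shuffling), so every test day comes after every training day; the paper does not state how the split was drawn, and the function name `chronological_split` and the toy data are hypothetical.

```python
def chronological_split(samples, train_fraction=0.8):
    """Split time-ordered samples into train/test sets without shuffling.

    Assumption: keeping the original order means the model is never
    evaluated on days that precede (or coincide with) its training days.
    """
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be strictly between 0 and 1")
    cut = int(len(samples) * train_fraction)
    return samples[:cut], samples[cut:]


# Hypothetical: 10 daily (day, engagement) records in time order.
days = [(day, day * 10) for day in range(10)]
train, test = chronological_split(days)

print(len(train), len(test))  # 8 2
print(test[0])                # (8, 80)
```

A shuffled split would mix future days into the training set, which is the leakage that the non-independence of the samples makes problematic.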

Results are compared with two single-layered LSTM configurations (LSTM1 and LSTM2) with normalization and dropout layers, having 32 and 128 hidden units, respectively. The CNN-LSTM configuration fed with the original video attributes is also compared with the proposed MLEF-DL. The schema of the comparison is shown in Fig. 6. The proposed mapping of features into a lower-dimensional space is also tested with the LR, SVR and LSTM predictors. Table 2 records the results of LR, SVR and LSTM both with the original video attributes and with the latent features extracted by MLEF. MLEF-DL achieves the minimum MAE of 0.0002, which is 50% lower than that of the model without latent features; extracting spatial features from the latent temporal features thus reduces the error significantly. The \(R^{2}\) also improves by 0.61% compared with18. As discussed in Sect. 3.2, a stack of LSTM layers propagates only the temporal information of the time-series data, whereas the spatial information of the features also plays a significant role. For this reason, the MAE of the two single-layered LSTMs with different numbers of hidden units is not comparable with that of MLEF-DL. Although Table 2 shows a better MAE with fewer hidden units, the statistical analysis indicates that the predicted values are lower than the actual values. With only the video attributes at the input, however, the LSTM model with more hidden units performs better. This indicates that the latent features make a measurable contribution to the time-series prediction. The MAE of LR and SVR is approximately the same with and without MLEF, but, as Table 2 shows, the statistical analysis improves with MLEF for all state-of-the-art schemes. All results in Table 2 are for testing on unseen data. The training progress curves of LSTM and CNN-LSTM for the MLEF vector are compared in Fig. 7.

MAE was used as the loss function during training. The curves show that the MAE of MLEF-DL at the last epoch equals the MAE obtained on the testing data.
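A minimal sketch of the coefficient of determination in its standard form, \(1 - SS_{res}/SS_{tot}\), together with the mean signed error, which makes a systematic under-prediction like the one noted above visible. The function names and toy values are hypothetical, and the paper's exact computation of \(R^{2}\) may differ.

```python
def r2_score(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    n = len(observed)
    mean_obs = sum(observed) / n
    ss_res = sum((x - y) ** 2 for x, y in zip(observed, predicted))
    ss_tot = sum((x - mean_obs) ** 2 for x in observed)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when the observed values are constant")
    return 1.0 - ss_res / ss_tot


def mean_signed_error(observed, predicted):
    """Average of (y_i - x_i); a negative value means systematic under-prediction."""
    return sum(y - x for x, y in zip(observed, predicted)) / len(observed)


# Hypothetical series where every prediction is 1 unit below the observation.
observed = [2.0, 4.0, 6.0, 8.0]
predicted = [1.0, 3.0, 5.0, 7.0]

print(r2_score(observed, predicted))           # about 0.8
print(mean_signed_error(observed, predicted))  # -1.0
```

Here the constant offset costs 0.2 in \(R^{2}\), while the mean signed error of -1.0 states the direction of the bias directly.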

Popularity Prediction vs. a Week Horizon: The popularity of a video may change over time. The above analysis is for next-day prediction. With the same training set, popularity is also evaluated 1 to 7 days ahead of the current recording date. For this time-series prediction analysis, the data are reframed so that the feature matrices of the previous 1 to 7 days are concatenated with the training data. The LSTM takes past observations as input and learns to predict the next output: for one-day-ahead prediction, the next day's feature matrix acts as the output for the current day's input, and similarly, for two-days-ahead prediction, the features of the next two days act as the output. In this way, the CNN-LSTM model learns the observations. Figure 8 compares the popularity predictions of MLEF-DL trained at the different timestamps.
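The reframing into horizon-specific training pairs can be sketched as follows. This is a minimal sketch under stated assumptions: each sample's input is a window of the previous `n_lags` daily feature vectors, and its target is the single feature vector `horizon` days after the window's last day (one reading of the description above). The function `make_supervised` and the toy series are hypothetical. The resulting inputs follow the (samples, timesteps, features) layout that Keras recurrent layers expect.

```python
def make_supervised(series, n_lags, horizon):
    """Reframe a daily multivariate series into (input window, target) pairs.

    series  : list of per-day feature vectors, oldest first
    n_lags  : number of past days in each input window
    horizon : days between the window's last day and the target day
              (1 = next day, 7 = one week ahead)
    """
    if n_lags < 1 or horizon < 1:
        raise ValueError("n_lags and horizon must be at least 1")
    inputs, targets = [], []
    # `end` is one past the window's last day; stop while a target day still exists.
    for end in range(n_lags, len(series) - horizon + 1):
        inputs.append(series[end - n_lags:end])
        targets.append(series[end - 1 + horizon])
    return inputs, targets


# Hypothetical 10-day series of [views, engagement] feature vectors.
series = [[day, 10 * day] for day in range(10)]

x1, y1 = make_supervised(series, n_lags=3, horizon=1)
x7, y7 = make_supervised(series, n_lags=3, horizon=7)
print(len(x1), y1[0])  # 7 [3, 30]
print(len(x7), y7[0])  # 1 [9, 90]
```

Note that longer horizons leave fewer training pairs from a series of the same length.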

Figure 8 is consistent with the general observation that short-term forecasting is more accurate than the more challenging long-term forecasting: algorithms typically perform better over a smaller time horizon, and the MAE in Fig. 8 increases as the window size grows. The comparison covers the proposed MLEF-DL, CNN-LSTM, SVR and LR. For MLEF-DL, a change of -2.52% is obtained between the 7-day and 1-day window predictions.
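The percentages quoted in this section are relative changes. A minimal sketch of that arithmetic, assuming the convention (new - old) / old × 100 so that a negative value denotes a decrease; the paper does not state its sign convention, and the function name and all numbers below are hypothetical.

```python
def percent_change(old, new):
    """Relative change from `old` to `new`, in percent (negative = decrease)."""
    if old == 0:
        raise ValueError("relative change is undefined for a zero baseline")
    return (new - old) / old * 100.0


# Hypothetical: an MAE that halves from 0.0004 to 0.0002 is a 50% reduction.
print(round(percent_change(0.0004, 0.0002), 6))  # -50.0

# Hypothetical R^2 values: 0.90 -> 0.9055 is about +0.61% relative,
# although the absolute difference is only 0.0055.
print(round(percent_change(0.90, 0.9055), 2))    # 0.61
```

Stating whether a reported percentage is a relative or an absolute difference matters most for bounded measures such as \(R^{2}\), where the two readings diverge.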

Conclusions

The authors have proposed a novel multi-branch child-parent LSTM network that maps video attributes into a lower-dimensional space. The latent features thus extracted are used for prediction by a fused CNN-LSTM deep learning arrangement. The YouTube dataset of 5,331,204 videos of different categories is drawn from 1,257,412 YouTube channels, and the attributes collected from it are temporal and video engagement features. These attributes are propagated through the proposed MLEF deep learning arrangement to extract information-rich latent features, which are then used for prediction with various state-of-the-art schemes and with the proposed CNN-LSTM arrangement. A 50% reduction in MAE has been observed relative to the CNN-LSTM arrangement in18 with video attributes at the input, and on the statistical side, the proposed MLEF-DL shows a 0.61% improvement in \(R^{2}\). Compared with other deep learning models built from LSTM layers with different numbers of hidden units, MLEF-DL achieves a better coefficient of determination; owing to the lack of spatial information in the LSTM networks, their MAE does not match that of MLEF-DL. The other popular regression methods also performed worse than MLEF-DL. With MLEF-DL, the authors aim to help online video publishers and channel owners know the popularity of their videos in advance. Knowing the future popularity, publishers can upload videos with more engaging content, resulting in increased revenue and more subscribers.

Based on these observations, there is also scope for introducing latent features derived from visual information, and mapping such visual features into the latent space has many aspects to explore. The use of autoencoders in time-series prediction has also increased. Although our concept of latent feature extraction is inspired by the autoencoder, implementing an autoencoder with the proposed MLEF features remains to be explored.

Data availability

“The authors confirm that the data supporting the findings of this work are available/cited within the article.”

References

Huang, L. et al. User behavior analysis and video popularity prediction on a large-scale VoD system. ACM Trans. Multimedia Comput. Commun. Appl. (TOMM). 14 (3), 1–24 (2018).

Chen, G., Kong, Q., Xu, N. & Mao, W. NPP: a neural popularity prediction model for social media content. Neurocomputing 333, 221–230 (2019).

Bao, Z., Liu, Y., Zhang, Z., Liu, H. & Cheng, J. Predicting popularity via a generative model with the adaptive peeking window. Phys. A: Stat. Mech. its Appl. 522, 54–68 (2019).

Zhu, C., Guang, C. & Wang, K. Big data analytics for program popularity prediction in broadcast TV industries. IEEE Access. 5, 24593–24601 (2017).

Jeon, H., Seo, W., Park, E. & Choi, S. Hybrid machine learning approach for popularity prediction of newly released contents of online video streaming services. Technol. Forecast. Soc. Chang. 161, 120303 (2020).

Bielski, A. & Trzcinski, T. Understanding multimodal popularity prediction of social media videos with self-attention. IEEE Access. 6, 74277–74287 (2018).

Lin, Y. T., Yen, C. C. & Wang, J. S. Video popularity prediction: an Autoencoder Approach with Clustering. IEEE Access. 8, 129285–129299 (2020).

Tai, K. S., Socher, R. & Manning, C. D. Improved semantic representations from tree-structured long short-term memory networks. arXiv preprint arXiv:1503.00075 (2015).

Dou, H. et al. Predicting the popularity of online content with knowledge-enhanced neural networks. ACM KDD 1–8 (2018).

Ma, C., Yan, Z. & Chen, C. W. LARM: a lifetime aware regression model for predicting YouTube video popularity. In Proceedings of the ACM on Conference on Information and Knowledge Management. 467–476 (2017).

Chen, X. et al. Fine-grained video attractiveness prediction using multimodal deep learning on a large real-world dataset. In Companion Proceedings of the Web Conference. 671–678 (2018).

Wu, Q., Yang, C., Zhang, H., Gao, X. & Weng, P. Adversarial training model unifying feature-driven and point process perspectives for event popularity prediction. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management. 517–526 (2018).

Arjovsky, M., Chintala, S. & Bottou, L. Wasserstein generative adversarial networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia. 214–223 (2017).

Trzciński, T. & Rokita, P. Predicting popularity of online videos using support vector regression. IEEE Trans. Multimedia. 19 (11), 2561–2570 (2017).

Mekouar, S., Zrira, N. & Bouyakhf, E. H. Popularity prediction of videos in YouTube as case study: a regression analysis study. In Proceedings of the 2nd International Conference on Big Data, Cloud and Applications. 1–6 (2017).

Kong, Q., Rizoiu, M. A., Wu, S. & Xie, L. Will this video go viral: explaining and predicting the popularity of YouTube videos. In Companion Proceedings of the Web Conference. 175–178 (2018).

Wu, S., Rizoiu, M. A. & Xie, L. Beyond views: measuring and predicting engagement in online videos. In Proceedings of the International AAAI Conference on Web and Social Media. 12 (1), 1–10 (2017).

Karim, F., Majumdar, S., Darabi, H. & Chen, S. LSTM fully convolutional networks for time series classification. IEEE Access. 6, 1662–1669 (2017).

Karim, F., Majumdar, S., Darabi, H. & Harford, S. Multivariate LSTM-FCNs for time series classification. Neural Netw. 116, 237–245 (2019).

Sangwan, N. & Bhatnagar, V. Video popularity prediction based on fuzzy inference system. J. Stat. Manage. Syst. 23 (7), 1–13 (2020).

Zhang, Y., Li, P., Zhang, Z. & Wang, W. GraphInf: a GCN-based popularity prediction system for short video networks. In Proceedings of the International Conference on Web Services. Springer, Cham. 27, 61–76 (2020).

Hassanpour, M., Hoseinitabatabaei, S. A., Barnaghi, P. & Tafazolli, R. Improving the accuracy of the video popularity prediction models through user grouping and video popularity classification. ACM Trans. Web (TWEB). 14 (1), 1–28 (2020).

Wang, K., Wang, P., Chen, X., Huang, Q. & Mao, Z. A Feature Generalization Framework for Social Media Popularity Prediction. In proceedings of the 28th ACM International Conference on Multimedia. 4570–4574 (2020).

Li, H., Ma, X., Wang, F., Liu, J. & Xu, K. On popularity prediction of videos shared in online social networks. In proceedings of the 22nd ACM international conference on Information & Knowledge Management. 169–178 (2013).

Roy, S. D., Mei, T., Zeng, W. & Li, S. Towards cross-domain learning for social video popularity prediction. IEEE Trans. Multimedia. 15 (6), 1255–1267 (2013).

Figueiredo, F. On the prediction of popularity of trends and hits for user generated videos. In proceedings of the sixth ACM international conference on Web search and data mining. (2013).

Bae, Y. & Lee, H. Sentiment analysis of twitter audiences: measuring the positive or negative influence of popular twitterers. J. Am. Soc. Inform. Sci. Technol. 63 (12), 2521–2535 (2012).

Ma, Z., Sun, A. & Cong, G. On predicting the popularity of newly emerging hashtags in twitter. J. Am. Soc. Inform. Sci. Technol. 64 (7), 1399–1410 (2013).

McParlane, P. J., Moshfeghi, Y. & Jose, J. M. Nobody comes here anymore, it’s too crowded; predicting image popularity on flickr. In Proceedings of International Conference on Multimedia Retrieval. 385–391 (2014).

Cappallo, S., Mensink, T. & Snoek, C. G. M. Latent factors of visual popularity prediction. In Proceedings of International Conference on Multimedia Retrieval. 195–202 (2015).

Gelli, F., Uricchio, T., Bertini, M., Del Bimbo, A. & Chang, S. F. Image popularity prediction in social media using sentiment and context features. In Proceedings of ACM International Conference on Multimedia. 907–910 (2015).

Cha, M., Kwak, H., Rodriguez, P., Ahn, Y. Y. & Moon, S. I tube, you tube, everybody tubes: analyzing the world’s largest user generated content video system. In Proceedings of ACM SIGCOMM Conference on Internet Measurement. 1–14 (2007).

Liu, J., Yang, Y., Huang, Z. & Yang, Y. On the influence propagation of web videos. IEEE Trans. Knowl. Data Eng. 26 (8), 1961–1973 (2014).

Vasconcelos, M., Almeida, J. M. & Gonçalves, M. A. Predicting the popularity of micro-reviews: a Foursquare case study. Inf. Sci. 325, 355–374 (2015).

Wu, B. & Shen, H. Analyzing and predicting news popularity on twitter. Int. J. Inf. Manag. 35 (6), 702–711 (2015).

Khosla, A., Das Sarma, A. & Hamid, R. What makes an image popular? In Proceedings of ACM International Conference on World Wide Web. 867–876 (2014).

Wu, J., Zhou, Y., Chiu, D. M. & Zhu, Z. Modeling dynamics of online video popularity. IEEE Trans. Multimedia. 18 (9), 1882–1895 (2016).

Totti, L. C. et al. The impact of visual attributes on online image diffusion. In Proceedings of ACM Web Science Conference. 42–51 (2014).

Ding, W. et al. Video popularity prediction by sentiment propagation via implicit network. In Proceedings of ACM International on Conference on Information and Knowledge Management. 1621–1630 (2015).

Jing, P., Su, Y., Nie, L., Bai, X. & Liu, J. Low-rank multi-view embedding learning for micro-video popularity prediction. IEEE Trans. Knowl. Data Eng. 30 (8), 1519–1532 (2017).

Chen, J. et al. Micro tells macro: predicting the popularity of micro-videos via a transductive model. In Proceedings of ACM International Conference on Multimedia. 898–907 (2016).

Bohra, N. & Bhatnagar, V. Group level social media popularity prediction by MRGB and Adam optimization. J. Comb. Optim. 41, 328–347 (2021).

Bohra, N. et al. Popularity Prediction of Social Media Post using Tensor Factorization. Intell. Autom. Soft Comput. 36 (1), 205–221 (2023).

Sangwan, N. & Bhatnagar, V. Video popularity prediction using stacked BiLSTM layers. Malaysian J. Comput. Sci. 34 (3), 242–254 (2021).

Malik, N. et al. HyperSegRec: enhanced hypergraph-based recommendation system with user segmentation and item similarity learning. Cluster Comput. 27, 11727–11745 (2024).

Halim, Z., Hussain, S. & Ali, R. H. Identifying content unaware features influencing popularity of videos on YouTube: a study based on seven regions. Expert Syst. Appl. 206, 117836, 1–19 (2022).

Uzma, Al-Obeidat, F., Tubaishat, A., Shah, B. & Halim, Z. Gene encoder: a feature selection technique through unsupervised deep learning-based clustering for large gene expression data. Neural Comput. Appl. 34, 8309–8331 (2020).

Halim, Z., Waqar, M. & Tahir, M. A machine learning-based investigation utilizing the in-text features for the identification of dominant emotion in an email. Knowl. Based Syst. 208, 106443, 1–17 (2020).

Rahman, A. U. & Halim, Z. Identifying dominant emotional state using handwriting and drawing samples by fusing features. Appl. Intell. 53 (3), 2798–2814 (2023).

Tahir, M. et al. A novel binary chaotic genetic algorithm for feature selection and its utility in affective computing and healthcare. Neural Comput. Appl. 34, 11453–11474 (2022).

Halim, Z. et al. An effective genetic algorithm-based feature selection method for intrusion detection systems. Computers Secur. 110, 102448, 1–20 (2021).

Pascanu, R., Gulcehre, C., Cho, K. & Bengio, Y. How to construct deep recurrent neural networks. arXiv preprint arXiv:1312.6026 (2013).

Olah, C. Understanding LSTM networks. (2015).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9 (8), 1735–1780 (1997).

Ouyang, X. et al. A 3D-CNN and LSTM based multi-task learning architecture for action recognition. IEEE Access. 7, 40757–40770 (2019).

Rossi, L., Paolanti, M., Pierdicca, R. & Frontoni, E. Human trajectory prediction and generation using LSTM models and GANs. Pattern Recogn. 120, 108136 (2021).

Li, B. & Nong, X. Automatically classifying non-functional requirements using deep neural network. Pattern Recogn. 132, 108948 (2022).

Lu, N., Wu, Y., Feng, L. & Song, J. Deep learning for fall detection: three-dimensional CNN combined with LSTM on video kinematic data. IEEE J. Biomedical Health Inf. 23 (1), 314–323 (2018).

Chen, Z. et al. Time-Frequency Deep Metric Learning for Multivariate Time Series Classification. Neurocomputing 462, 221–237 (2021).

Zhang, X., Gao, Y., Lin, J. & Lu, C. T. TapNet: multivariate time series classification with attentional prototypical network. In Proceedings of the AAAI Conference on Artificial Intelligence. 34 (4), 6845–6852 (2020).

Liu, S. et al. COVID-19 estimation based on wristband heart rate using a contrastive convolutional auto-encoder. Pattern Recogn. 123, 108403 (2022).

Liang, G., Lv, Y., Li, S., Zhang, S. & Zhang, Y. Video summarization with a convolutional attentive adversarial network. Pattern Recogn. 131, 108840 (2022).

Davydenko, A. & Fildes, R. Forecast error measures: critical review and practical recommendations. Bus. Forecasting: Practical Probl. Solutions. 34, 1–12 (2016).

Funding

The authors confirm that no funding was received for this work.

Author information


Contributions

Neeti Sangwan wrote the main manuscript text and Dr. Vishal Bhatnagar reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval

The authors confirm that the results/data/figures in this manuscript have not been published elsewhere, nor are they under consideration by another publisher.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sangwan, N., Bhatnagar, V. Multi-branch LSTM encoded latent features with CNN-LSTM for Youtube popularity prediction. Sci Rep 15, 2508 (2025). https://doi.org/10.1038/s41598-025-86785-3


DOI: https://doi.org/10.1038/s41598-025-86785-3