Abstract

Medical image annotation is scarce and costly. Few-shot segmentation, which learns from only a few annotated examples, has been widely used in medical image analysis. However, research on few-shot lesion segmentation for lung diseases is still limited, especially for pulmonary aspergillosis. Lesion areas usually have complex shapes and blurred edges, so lesion segmentation must handle the diversity and uncertainty of lesions. To address this challenge, we propose MSPO-Net, a multilevel support-assisted prototype optimization network designed for few-shot lesion segmentation in computerized tomography (CT) images of lung diseases. MSPO-Net learns lesion prototypes from low-level to high-level features. A self-attention threshold learning strategy focuses on global information and obtains an optimal threshold for CT images. Our model refines prototypes through a support-assisted prototype optimization module, adaptively enhancing their representativeness for diverse lesions and adapting better to unseen lesions. In clinical examinations, CT is more practical than X-rays. To ensure the quality of our work, we have established a small-scale CT image dataset for three lung diseases, annotated by experienced doctors. Experiments demonstrate that MSPO-Net improves the performance and robustness of lung disease lesion segmentation. MSPO-Net achieves state-of-the-art performance in both single and unseen lung disease segmentation, indicating its potential to reduce doctors’ workload and improve diagnostic accuracy. This research therefore has clinical significance. Code is available at https://github.com/Tian-Yuan-ty/MSPO-Net.


Introduction

Interpreting medical images is the main task of radiologists and a crucial part of assisting clinicians in disease diagnosis and treatment. Its accuracy relies heavily on the doctors’ work experience, so misdiagnosis and missed diagnosis can occur and affect patients’ clinical outcomes. Semantic segmentation plays a crucial role in medical image interpretation tasks such as clinical diagnosis1, treatment planning2, and quantification of tissue volumes3. Deep learning has achieved strong performance in semantic segmentation of medical images4.

Medical image analysis faces three challenges: (1) heterogeneous medical data, e.g., there are many types of diseases and various treatment methods for each disease; (2) scarcity of labeled data, since annotating medical images requires clinical expertise; (3) restrictions on the use of medical data, e.g., strictly protecting patients’ privacy and following medical ethical principles. Deep learning depends heavily on large-scale, high-quality, fully pixel-wise annotations. Publicly available biomedical datasets are still limited, and they are mostly annotated for major human anatomic structures5 and common diseases6, which cannot meet the needs of customized research topics.

To this end, few-shot learning has been introduced into medical image segmentation; it aims to extract knowledge from small-scale datasets and achieve knowledge transfer. Few-shot segmentation (FSS), an extension of few-shot classification, predicts the label of each pixel in the image. FSS has produced many valuable works in the medical imaging field7, most of which focus on organ segmentation, such as liver8, kidney9, heart10, and brain11. A few studies address cell segmentation12,13 and lesion segmentation14. In medical images, human organs have clear boundaries and stable sizes, whereas lesion areas vary in size and shape and have blurred edges. Disease lesions are therefore diverse and uncertain, which makes lesion areas harder to annotate and segment. FSS research on lesion segmentation is still limited.

Secondary pulmonary tuberculosis, pulmonary aspergillosis and lung adenocarcinoma are three common lung diseases with typical lesion characteristics. In recent years, clinicians have tended to screen suspected cases of lung diseases through chest computerized tomography (CT) scans and diagnose them based on typical manifestations on CT images. However, in the research on detection and lesion segmentation of pulmonary tuberculosis15,16, the most commonly used datasets consist of chest X-ray (CXR) images. CXR has very high misdiagnosis and missed-diagnosis rates for tuberculosis, and even for most lung diseases17. For pulmonary aspergillosis, there is almost no relevant segmentation research at present, whether using deep learning or machine learning methods18. There are many segmentation studies on lung carcinoma based on chest CT images, but few specifically on lung adenocarcinoma19.

In this paper, we introduce FSS into the lesion segmentation of lung diseases. Doctors make diagnoses for patients through medical knowledge, and different patient information can also enrich their diagnostic experience. Doctors also repeatedly study confirmed cases to strengthen or fine-tune their prior knowledge and make more accurate diagnoses of such symptoms. Inspired by this, we develop a multilevel support-assisted prototype optimization network (MSPO-Net) for few-shot lesion segmentation of lung diseases. MSPO-Net fully utilizes the lesion information from a few support cases and emphasizes adaptively learning lesion features to address the diversity of lesions, especially those of “unseen” lung diseases. MSPO-Net can accurately mark lesion areas in chest CT images with only a small number of cases, which could reduce the workload of doctors and improve the accuracy of diagnosis.

Most public large-scale chest CT datasets target the detection of pulmonary abnormalities (such as lung nodules, emphysema, and lung opacity), and there are currently few public large-scale chest CT datasets for lesion segmentation of multiple lung diseases. To better evaluate the performance and generalization of our proposed MSPO-Net and other state-of-the-art (SOTA) models, we organized real cases of secondary pulmonary tuberculosis, pulmonary aspergillosis, and lung adenocarcinoma and established a small-scale CT image dataset. To ensure the quality of our work, all CT images are annotated fully pixel-wise by experienced doctors.

Our contributions:

1. We propose a novel multilevel support-assisted prototype optimization network to improve on prior FSS methods for medical segmentation of different lung lesions.

2. We develop a few-shot segmentation framework with multilevel prototype learning and a self-attention threshold to adaptively address lesion diversity and uncertainty.

3. We introduce a support-assisted prototype optimization module to enhance the representativeness of multilevel prototypes for different lesion characteristics and improve model generalization.

4. We construct and release a publicly available CT image dataset for three lung diseases with typical manifestations, annotated fully pixel-wise by experienced doctors.

Data description

Shandong Provincial Public Health Clinical Center is a first-class specialized hospital with sufficient experience in the diagnosis and treatment of respiratory diseases. In this paper, we reviewed the data of hospitalized patients with secondary pulmonary tuberculosis, pulmonary aspergillosis, and lung adenocarcinoma at Shandong Provincial Public Health Clinical Center from January 2020 to January 2024. We read these medical records in detail and screened these cases according to the following conditions:

(1) Exclude cases younger than 18 years or older than 90 years.

(2) Exclude cases with incomplete diagnosis.

(3) Exclude cases with incomplete medical records.

A total of 100 cases of secondary pulmonary tuberculosis, 51 cases of pulmonary aspergillosis and 51 cases of lung adenocarcinoma were selected. These patients have clear diagnoses, complete medical records, typical imaging manifestations, and complete pathogen culture results and pathology reports. Experienced doctors selected the most typical image of each case and annotated the lesion areas. The original size of the CT images is 512 × 512 pixels. On this basis, we established our chest CT image dataset of three lung diseases and shared it on GitHub20.

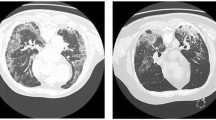

Secondary pulmonary tuberculosis, pulmonary aspergillosis and lung adenocarcinoma each have typical manifestations on chest CT, as shown in Fig. 1. Secondary pulmonary tuberculosis often occurs in the apical and posterior segments of the upper lobes and the superior segments of the lower lobes. Its most common CT manifestations are patchy and nodular shadows with multiple morphologies and densities, such as the typical tree-in-bud distribution, accompanied by satellite lesions, fibrous calcification lesions, etc.21. On CT, pulmonary aspergillosis often presents as a pulmonary cavity with a round or oval density formed within it22. Lung adenocarcinoma shows uneven tumor edges with CT characteristics such as lobulation, spiculation, and pleural indentation, because the degree of tumor cell differentiation differs and the growth rate varies across different parts of the tumor23. Doctors need to diagnose these diseases based on these radiological features, combined with the patient’s medical history, clinical manifestations, laboratory examinations, and relevant etiologic analysis.

Methodology

Problem statement

We aim at obtaining a segmentation model for few-shot lesion segmentation. The training set \(\:{D}_{train}\) is constructed from class set \(\:{C}_{train}\) and the test set \(\:{D}_{test}\) is constructed from class set \(\:{C}_{test}\). Generally speaking, \(\:{C}_{train}\) and \(\:{C}_{test}\) do not intersect.

We train the segmentation model on \(\:{D}_{train}\) and evaluate on \(\:{D}_{test}\). Both \(\:{D}_{train}={\left\{\left({S}_{i},{Q}_{i}\right)\right\}}_{i=1}^{{N}_{train}}\) and \(\:{D}_{test}={\left\{\left({S}_{i},{Q}_{i}\right)\right\}}_{i=1}^{{N}_{test}}\) are composed of several sampled episodes, where \(\:{S}_{i}\) is the support set with annotations and \(\:{Q}_{i}\) is the query set, \(\:{N}_{train}\) and \(\:{N}_{test}\) denote the number of episodes for training and testing respectively. Each training or testing episode \(\:\left({S}_{i},{Q}_{i}\right)\) includes \(\:K\) support images and some query images of \(\:C\) classes, that is, a \(\:C\)-way \(\:K\)-shot learning task. Specifically, the support set \(\:{S}_{i}\) has \(\:K\) pairs of ⟨image, mask⟩ per semantic class and there are in total \(\:C\) different classes from \(\:{C}_{train}\) and \(\:{C}_{test}\), i.e., \(\:{S}_{i}=\left\{\left({I}_{c,\:k},\:{M}_{c,\:k}\right)\right\}\), where \(\:k=1,\:2,\:\dots\:,\:K\) and \(\:c\in\:{C}_{i}\) with \(\:\left|{C}_{i}\right|=C\). The query set \(\:{Q}_{i}\) contains \(\:{N}_{query}\) ⟨image, mask⟩ pairs from the same class set \(\:{C}_{i}\) as the support set. After training, we make prediction masks for the query set by the learned segmentation model to evaluate the few-shot segmentation performance across the testing episodes. Following the previous works, we adopt 1-way 1-shot few-shot learning strategy.
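The episode construction described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' data loader: `sample_episode` and `toy_dataset` are hypothetical names, and strings stand in for CT slices and masks.

```python
import random

def sample_episode(dataset, n_way=1, k_shot=1, n_query=1, rng=None):
    """Sample one C-way K-shot episode.

    dataset maps a class name to a list of (image, mask) pairs.
    Returns (support, query), each a dict keyed by class name.
    """
    rng = rng or random.Random()
    classes = rng.sample(sorted(dataset), n_way)
    support, query = {}, {}
    for c in classes:
        # Draw support and query pairs together so they never overlap.
        pairs = rng.sample(dataset[c], k_shot + n_query)
        support[c] = pairs[:k_shot]
        query[c] = pairs[k_shot:]
    return support, query

# Toy stand-in data (hypothetical): strings replace real CT slices and masks.
toy_dataset = {"tuberculosis": [(f"img{i}", f"mask{i}") for i in range(5)]}
support, query = sample_episode(toy_dataset, n_way=1, k_shot=1, n_query=1,
                                rng=random.Random(0))
```

With `n_way=1` and `k_shot=1` this matches the 1-way 1-shot setting adopted in the text: each episode holds one annotated support pair and one query pair of the same class.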

Overall architecture

In this work, we propose a multilevel support-assisted prototype optimization network (MSPO-Net) for FSS and apply it to lesion segmentation of lung diseases. Figures 2 and 3 provide an overview of the proposed MSPO-Net during the training and testing stages for a 1-way 1-shot, two-level example.

In chest CT images, the lesion is the foreground. Both the training and testing pipelines first embed the chest CT images from the support set and query set into deep features with a shared backbone network and select multiple groups of feature maps output at different depths of the backbone network.

In the training stage, MSPO-Net applies masked average pooling to learn multilevel foreground lesion prototypes from the feature maps of the support set. Few-shot segmentation with the self-attention threshold is performed on the query images, and the predicted query segmentation is obtained by fusing the multilevel predicted masks. Following PANet24, the learned segmentation model is then used to perform segmentation in the reverse direction for prototype alignment regularization. MSPO-Net updates its parameters during training.

In the testing stage, the proposed MSPO-Net operates in four steps: (1) learn multilevel foreground prototypes from the support set; (2) perform segmentation over the support CT image; (3) update the foreground prototypes level by level through the support-assisted prototype optimization module; (4) use the optimized multilevel prototypes to make the final segmentation over the query CT image. The parameters of the MSPO-Net model remain fixed in the testing stage.

Multilevel prototypes learning

For medical images, the texture of the foreground object (e.g., one organ, a piece of lesion) is generally homogeneous, while the background is usually spatially inhomogeneous due to the existence of abundant tissues and objects of unseen classes25. The imbalance between the foreground object and the large, highly heterogeneous background class is more severe than for natural images. Recent work has shown that using a single foreground prototype for few-shot medical image segmentation avoids modeling the background, thus reducing the impact of the imbalance between foreground and background26. Therefore, we consider only the foreground prototype, unlike previous methods that obtain prototypes for both foreground and background24,27,28.

FSS requires a strong ability to capture class characteristics from little annotated data. For the few-shot lesion segmentation task for lung diseases in our work, the foreground prototype represents the features of the lesion area. As noted in the convolutional neural network (CNN) feature visualization literature, low-level features are typically related to low-level cues like edges and colors, while high-level features are related more to object-level concepts such as object categories29,30. A single prototype learned only from high-level features cannot reflect all the characteristics of lesion areas. Instead, we focus on the cooperation of multilevel prototypes. The lesions of different lung diseases have their own textures, and the lesion areas differ in size and shape. Multilevel prototypes can learn the lesion characteristics from low-level cues to high-level concepts.

The process of multilevel prototype learning is shown in Fig. 4. The CT image is embedded into deep features by the backbone networks, and the feature maps output by different convolutional blocks are selected. Each group of feature maps yields a lesion prototype at the corresponding level and, after segmentation, a predicted mask.

Let \(\:{F}^{s}\) be the support feature maps output by the backbone networks, \(\:{M}^{s}\) be the support foreground ground truth mask. The foreground prototype of the lesion class \(\:c\) is computed via masked average pooling31:

\[ p=\frac{\sum_{x,y}{F}^{s}\left(x,y\right)\odot \mathbb{1}\left({M}^{s}\left(x,y\right)=c\right)}{\sum_{x,y}\mathbb{1}\left({M}^{s}\left(x,y\right)=c\right)} \tag{1} \]

where \(\:\left(x,\:y\right)\) is the spatial location, \(\:{F}^{s}\left(x,y\right)\) is the feature vector at \(\:\left(x,\:y\right)\) in \(\:{F}^{s}\), \(\odot\) denotes the Hadamard product, and \(\:\mathbb{1}\left(\cdot\:\right)\) is an indicator function that outputs 1 if \(\:{M}^{s}\left(x,y\right)=c\) and 0 otherwise.

Assume that \(\:n\)-level prototypes of a CT image need to be learned. Applying Eq. (1) to the feature maps of each level yields a set of prototypes \(\:P=\left\{{p}_{1},\:{p}_{2},\dots\:,{p}_{n}\right\}\). Multilevel prototype learning can reduce the information loss of lesion areas in CT images.
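Masked average pooling over several feature levels can be sketched in plain Python as below. This is an illustrative sketch, not the released implementation: the function names are hypothetical, feature maps are nested lists rather than tensors, and each mask is assumed to have already been resized to the resolution of its feature level.

```python
def masked_average_pooling(features, mask, cls=1):
    """Average the feature vectors at locations where mask == cls.

    features: H x W grid of D-dimensional vectors (nested lists).
    mask:     H x W grid of integer class labels.
    """
    dim = len(features[0][0])
    total = [0.0] * dim
    count = 0
    for feat_row, mask_row in zip(features, mask):
        for vec, label in zip(feat_row, mask_row):
            if label == cls:  # indicator function: 1 if M(x, y) = c, else 0
                total = [t + v for t, v in zip(total, vec)]
                count += 1
    if count == 0:
        raise ValueError("mask contains no pixel of the requested class")
    return [t / count for t in total]

def multilevel_prototypes(feature_levels, mask_levels, cls=1):
    """Return one prototype per feature level, i.e. the set P = {p_1, ..., p_n}."""
    return [masked_average_pooling(f, m, cls)
            for f, m in zip(feature_levels, mask_levels)]

# Toy two-level example (hypothetical values).
level1 = [[[1.0, 0.0], [3.0, 2.0]],
          [[0.0, 0.0], [5.0, 4.0]]]
mask1 = [[1, 1],
         [0, 0]]
level2 = [[[4.0], [8.0]]]
mask2 = [[0, 1]]
prototypes = multilevel_prototypes([level1, level2], [mask1, mask2])
```

In the toy example the first-level prototype is the mean of the two foreground vectors, `[2.0, 1.0]`, and the second-level prototype is `[8.0]`.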

Few-shot segmentation with self-attention threshold

To segment the query image based on only foreground prototypes, we adopt a threshold-based metric learning approach to segmentation. The negative cosine similarity \(\:S\left(x,y\right)\) between the query feature maps at each spatial location and a learned prototype \(\:p\) in the prototype set \(\:P\) is calculated by

\[ S\left(x,y\right)=-\alpha \frac{{F}^{q}\left(x,y\right)\cdot p}{\left\Vert {F}^{q}\left(x,y\right)\right\Vert \left\Vert p\right\Vert } \tag{2} \]

where \(\:{F}^{q}\) denotes the query feature maps, \(\:{F}^{q}\left(x,y\right)\) is the feature vector at \(\:\left(x,\:y\right)\) in \(\:{F}^{q}\), and the scaling factor \(\:\alpha\:\) is fixed at 20 because learning it yields little performance gain32.

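Soft thresholding by a shifted Sigmoid is performed to generate the query predicted mask \(\:{\widehat{m}}^{q}\):

\[ {\widehat{m}}_{fg}^{q}\left(x,y\right)=1-\sigma \left(S\left(x,y\right)-T\right) \tag{3} \]

\[ {\widehat{m}}_{bg}^{q}\left(x,y\right)=1-{\widehat{m}}_{fg}^{q}\left(x,y\right) \tag{4} \]

where \(\:\sigma\:\left(\cdot\:\right)\) denotes the Sigmoid function, \(\:T\) is the learned soft threshold, and the subscripts \(\:fg\) and \(\:bg\) denote foreground and background, respectively.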

To adapt better to foreground classes, we propose a self-attention threshold learning strategy, as shown in Fig. 5. The threshold \(\:T\) is learned in the training stage and directly used in the testing stage to segment foreground objects. The parameters of the self-attention block are trained in the training stage so that the threshold \(\:T\) better separates the values of the negative cosine similarity \(\:S\left(x,y\right)\). When \(\:{F}^{q}\left(x,y\right)\) is similar to the foreground prototype, \(\:S\left(x,y\right)\) is less than \(\:T\) and the foreground probability is higher than 0.5.
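The relation between similarity, threshold and foreground probability can be checked with a small numerical sketch. It assumes the foreground probability takes the shifted-Sigmoid form 1 − σ(S − T) described above; the function names, the prototype, the feature vectors and the threshold value are all hypothetical.

```python
import math

ALPHA = 20.0  # scaling factor, fixed at 20 in the text

def neg_cosine_similarity(vec, prototype, alpha=ALPHA, eps=1e-8):
    """Scaled negative cosine similarity between a feature vector and a prototype."""
    dot = sum(a * b for a, b in zip(vec, prototype))
    norms = math.sqrt(sum(a * a for a in vec)) * math.sqrt(sum(b * b for b in prototype))
    return -alpha * dot / (norms + eps)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def foreground_probability(vec, prototype, threshold):
    """Soft thresholding: the result exceeds 0.5 exactly when S < T."""
    return 1.0 - sigmoid(neg_cosine_similarity(vec, prototype) - threshold)

prototype = [1.0, 0.0]
T = -10.0  # hypothetical threshold; in the paper T is predicted by the network
p_similar = foreground_probability([2.0, 0.1], prototype, T)      # nearly parallel
p_dissimilar = foreground_probability([0.0, 1.0], prototype, T)   # orthogonal
```

A feature nearly parallel to the prototype gives S close to −20, which is below T, so its foreground probability is above 0.5; an orthogonal feature gives S = 0, which is above T, so its foreground probability is below 0.5.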

In this strategy, a self-attention block \(\:g\left(\cdot\:\right)\) with a single head and a linear layer \(\:\mathcal{L}\) are embedded after the backbone networks \(\:{f}_{\theta\:}\) to map the deep features to a single value, the learned self-attention threshold \(\:T\). The threshold \(\:T\) for a chest CT image \(\:I\) obtained through the backbone networks \(\:{f}_{\theta\:}\) and the self-attention threshold learning strategy \(\:{g}_{\mathcal{L}}\left(\cdot\:\right)\) is

\[ T={g}_{\mathcal{L}}\left({f}_{\theta }\left(I\right)\right) \tag{5} \]

The self-attention block focuses on the global information of the deep feature maps and learns a more appropriate threshold \(\:T\). During the training process, \(\:T\) keeps updating through the self-attention threshold learning strategy and gradually stabilizes around a fixed value. Each CT image corresponds to its own self-attention threshold.
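One way to picture how a single-head self-attention block followed by a linear layer maps deep features to a scalar threshold is sketched below. Several details are assumptions made only for illustration and are not stated in the text: the feature map is flattened into a list of tokens, the attended tokens are mean-pooled before the linear layer, and the projection matrices are plain nested lists with hypothetical names.

```python
import math

def matvec(matrix, vec):
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a list of tokens."""
    queries = [matvec(w_q, t) for t in tokens]
    keys = [matvec(w_k, t) for t in tokens]
    values = [matvec(w_v, t) for t in tokens]
    scale = math.sqrt(len(queries[0]))
    attended = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        attended.append([sum(w * v[d] for w, v in zip(weights, values))
                         for d in range(len(values[0]))])
    return attended

def attention_threshold(tokens, w_q, w_k, w_v, w_lin, b_lin):
    """Map deep features to one scalar T (mean pooling is an assumption)."""
    attended = self_attention(tokens, w_q, w_k, w_v)
    pooled = [sum(column) / len(attended) for column in zip(*attended)]
    return sum(w * x for w, x in zip(w_lin, pooled)) + b_lin

# Toy example with identity projections (hypothetical parameter values).
identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0]]
T = attention_threshold(tokens, identity, identity, identity,
                        w_lin=[1.0, 1.0], b_lin=-10.0)
```

Because every attention row sums to one and the toy values are one-hot, each attended token has components summing to 1, so the toy threshold evaluates to 1 − 10 = −9. In a trained model the projections and the linear layer would be learned parameters.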

Suppose that we learn \(\:n\)-level prototypes for each CT image. The query predicted masks \(\:{\widehat{m}}_{i}^{q}\) are upsampled to the CT image size and denoted \(\:{\widehat{M}}_{i}^{q}\), \(\:i=1,\:2,\dots\:,\:n\). Then, the \(\:n\) query predicted masks are fused into the final predicted segmentation result \(\:{\widehat{M}}^{q}\) with a weight factor set \(\:w=\left\{{w}_{1},\:{w}_{2},\dots\:,{w}_{n}\right\}\):

\[ {\widehat{M}}^{q}=\sum_{i=1}^{n}{w}_{i}\,{\widehat{M}}_{i}^{q} \tag{6} \]

where \(\:{w}_{i}\in\:\left[0,1\right]\) and \(\:\sum\:{w}_{i}=1\). The weight factor represents the contribution of the different levels of prototypes to the final predicted mask.
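The weighted fusion of level-wise masks is a convex combination, which the following sketch makes concrete. The function name and mask values are hypothetical, and the masks are assumed to be already upsampled to a common size.

```python
def fuse_masks(masks, weights, tol=1e-9):
    """Pixel-wise weighted sum of equally sized probability masks."""
    if len(masks) != len(weights):
        raise ValueError("need exactly one weight per mask")
    if any(w < 0.0 or w > 1.0 for w in weights) or abs(sum(weights) - 1.0) > tol:
        raise ValueError("weights must lie in [0, 1] and sum to 1")
    height, width = len(masks[0]), len(masks[0][0])
    return [[sum(w * m[y][x] for w, m in zip(weights, masks))
             for x in range(width)]
            for y in range(height)]

# Two levels fused with the weights [0.8, 0.2] (toy mask values).
mask_a = [[0.9, 0.2]]
mask_b = [[0.5, 0.6]]
fused = fuse_masks([mask_a, mask_b], [0.8, 0.2])
```

Here the first pixel becomes 0.8 × 0.9 + 0.2 × 0.5 = 0.82 and the second 0.8 × 0.2 + 0.2 × 0.6 = 0.28, so the more heavily weighted level dominates the fused prediction.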

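The segmentation loss \(\:{L}_{seg}\) is calculated as the binary cross-entropy between the fused prediction and the ground truth:

\[ {L}_{seg}=-\frac{1}{N}\sum_{x,y}\sum_{j\in \left\{fg,bg\right\}}{M}_{j}^{q}\left(x,y\right)\log {\widehat{M}}_{j}^{q}\left(x,y\right) \tag{7} \]

where \(\:{M}^{q}\) is the ground truth segmentation mask of the query image, the subscript \(j\) selects its foreground or background channel, and \(\:N\) is the total number of spatial locations.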

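Further, via prototype alignment regularization, the query image and its predicted mask are taken as the new support to learn to segment the support images. Following the same process from Eq. (1) to Eq. (6), the loss \(\:{L}_{PAR}\) is calculated as follows:

\[ {L}_{PAR}=-\frac{1}{N}\sum_{x,y}\sum_{j\in \left\{fg,bg\right\}}{M}_{j}^{s}\left(x,y\right)\log {\widehat{M}}_{j}^{s}\left(x,y\right) \tag{8} \]

where \(\:{\widehat{M}}^{s}\) is the predicted segmentation mask of the support image and \(j\) indexes the foreground and background channels.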

The total loss \(\:L\) for training the MSPO-Net model is

\[ L={L}_{seg}+{L}_{PAR} \tag{9} \]

Support-assisted prototype optimization

In the testing stage, the support annotations are the only valid information we can obtain from the support set. Using the support annotations only for masking does not adequately exploit the support information for FSS. We prefer to extract more lesion information from the support set to adjust the lesion prototype, thereby improving its adaptability to lung disease lesions in the testing stage, especially in the case of “unseen” diseases. To improve the quality and adaptability of prototypes, we introduce a support-assisted prototype optimization module that refines the foreground prototypes with support information in the testing stage, so that the optimized prototypes better represent the foreground class features at different embedding depths. The simplified optimization process is shown in Fig. 6 and more details are shown in Fig. 3.

Given a support chest CT image with ground truth segmentation mask \(\:{M}^{s}\) and a query chest CT image, we first learn \(\:n\)-level foreground prototypes via masked average pooling. Before segmenting the query image, we use the learned prototypes to segment the support image, following Eqs. (1)-(5). For the \(\:i\)-level support foreground prototype \(\:{p}_{i}\), we compute the support predicted mask \(\:{\widehat{M}}_{i}^{s}\) by cosine similarity as in Eq. (6) and estimate the segmentation loss \(\:L\left({\widehat{M}}_{i}^{s},\:{M}^{s}\right)\) between the support predicted segmentation mask and the ground truth in the same way as Eq. (7). We then iteratively update \(\:{p}_{i}\) with the Adam optimizer; the update process is as follows:

\[ {p}_{i}\left(k+1\right)={p}_{i}\left(k\right)-\nu \,\nabla_{{p}_{i}\left(k\right)}L\left({\widehat{M}}_{i}^{s}\left(k\right),\:{M}^{s}\right),\quad k=0,\:1,\:\dots,\:K-1 \tag{10} \]

where \(\:\nu\:\) is the learning rate, \(\:K\) is the total number of iterations of gradient back-propagation, \(\:{p}_{i}\left(k\right)\) denotes the optimized prototype of \(\:{p}_{i}\) after \(\:k\) iterations, \(\:{\widehat{M}}_{i}^{s}\left(k\right)\) is the support predicted segmentation mask obtained by \(\:{p}_{i}\left(k\right)\), \(\:i=1,\:2,\:\dots\:,\:n\).

The gradient back-propagation is used only for prototype optimization and does not propagate further into MSPO-Net. The module does not participate in the training of MSPO-Net and does not increase the training burden. Its computational cost is far lower than that of updating all MSPO-Net parameters, so it does not significantly affect the test speed.
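The test-time refinement of a single prototype can be sketched in plain Python as follows. This is a simplified illustration, not the released code: it handles one level, treats each support pixel as one feature vector, keeps the threshold T fixed, uses a binary cross-entropy loss (an assumption), and replaces PyTorch autograd and `torch.optim.Adam` with a hand-derived gradient and a hand-written Adam step. All names and toy values are hypothetical.

```python
import math

ALPHA = 20.0  # similarity scaling factor from the text

def norm(v):
    return math.sqrt(sum(a * a for a in v))

def loss_and_grad(feats, labels, p, T, eps=1e-7):
    """Mean binary cross-entropy on the support pixels and its gradient w.r.t. p."""
    n = len(feats)
    loss, grad = 0.0, [0.0] * len(p)
    p_norm = norm(p)
    for f, m in zip(feats, labels):
        f_norm = norm(f)
        cos = sum(a * b for a, b in zip(f, p)) / (f_norm * p_norm)
        # Foreground probability 1 - sigmoid(S - T) with S = -ALPHA * cos.
        q = 1.0 / (1.0 + math.exp(-(T + ALPHA * cos)))
        q_safe = min(max(q, eps), 1.0 - eps)
        loss -= (m * math.log(q_safe) + (1 - m) * math.log(1.0 - q_safe)) / n
        for d in range(len(p)):
            # Derivative of the cosine similarity with respect to p[d].
            dcos = f[d] / (f_norm * p_norm) - cos * p[d] / (p_norm ** 2)
            grad[d] += (q - m) * ALPHA * dcos / n
    return loss, grad

def optimize_prototype(feats, labels, p, T, lr=1e-3, steps=100,
                       betas=(0.9, 0.999), eps=1e-8):
    """Refine the prototype only; no network parameter is touched."""
    p = list(p)
    b1, b2 = betas
    m1, m2 = [0.0] * len(p), [0.0] * len(p)
    for k in range(1, steps + 1):
        _, g = loss_and_grad(feats, labels, p, T)
        for d in range(len(p)):
            m1[d] = b1 * m1[d] + (1 - b1) * g[d]
            m2[d] = b2 * m2[d] + (1 - b2) * g[d] ** 2
            step = (m1[d] / (1 - b1 ** k)) / (math.sqrt(m2[d] / (1 - b2 ** k)) + eps)
            p[d] -= lr * step
    return p

# Toy support "image": two lesion pixels and one confusable background pixel.
feats = [[1.0, 0.0], [0.6, 0.8], [0.8, -0.6]]
labels = [1, 1, 0]
p0 = [0.8, 0.4]   # masked average of the two lesion features
T = -10.0         # hypothetical fixed threshold
loss_before, _ = loss_and_grad(feats, labels, p0, T)
p_opt = optimize_prototype(feats, labels, p0, T)
loss_after, _ = loss_and_grad(feats, labels, p_opt, T)
```

In this toy case the initial prototype is still fairly similar to the background pixel, and the optimization rotates it away from that pixel, so the support loss drops. The learning rate, betas and iteration count mirror the defaults reported in the implementation details.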

This proposed support-assisted prototype optimization module aims to improve the quality of the multilevel prototypes gradually. If the support foreground class has not appeared in the training stage, the multilevel prototypes can learn as much knowledge as possible from the support set during iteration and adapt to the unseen class better. Therefore, support-assisted prototype optimization module can make full use of supporting information to provide more accurate assistance to few-shot medical image segmentation and improve the model generalization.

Experiments

Evaluation metric

We adopt mean intersection-over-union (MIoU) as the primary evaluation metric, as it is the standard metric in image segmentation. MIoU measures the Intersection-over-Union (IoU) for each foreground class and averages over all the classes. Binary-IoU (BIoU) is also reported for further comparison. BIoU treats all object categories as one foreground class and averages the IoU of foreground and background.

The test results, MIoU and BIoU, are reported as the average and standard deviation over 5 experimental runs with different random seeds, each run containing 10 episodes. The average reflects the segmentation performance, and the standard deviation reflects the stability of the model.
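The two metrics can be illustrated on a single prediction as follows. This sketch computes them per image for clarity, which is an assumption; evaluation code often accumulates intersections and unions over all episodes before dividing. The function names and the toy label maps are hypothetical.

```python
def iou(pred, truth, label):
    """IoU of one label between two equally sized 2D label maps."""
    inter = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            inter += int(p == label and t == label)
            union += int(p == label or t == label)
    return inter / union if union else float("nan")

def mean_iou(pred, truth, foreground_classes):
    """Average the IoU over the foreground classes."""
    scores = [iou(pred, truth, c) for c in foreground_classes]
    return sum(scores) / len(scores)

def binary_iou(pred, truth):
    """Merge all foreground classes, then average foreground and background IoU."""
    def binarize(grid):
        return [[int(v != 0) for v in row] for row in grid]
    pred_b, truth_b = binarize(pred), binarize(truth)
    return (iou(pred_b, truth_b, 1) + iou(pred_b, truth_b, 0)) / 2

pred = [[1, 1, 0],
        [0, 0, 0]]
truth = [[1, 0, 0],
         [0, 0, 0]]
miou = mean_iou(pred, truth, foreground_classes=[1])
biou = binary_iou(pred, truth)
```

For this toy pair the foreground IoU is 1/2 and the background IoU is 4/5, so MIoU is 0.5 and BIoU is 0.65. In the 1-way setting MIoU reduces to the lesion IoU, while BIoU also rewards correctly predicted background.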

Implementation details

We initialize the VGG-1633 backbone networks with weights pre-trained on the ILSVRC dataset34, the same as the classic FSS method PANet24. We retain the first five convolutional blocks of VGG-16 and remove the layers after the fifth convolutional block. Consecutive convolutional blocks are connected by a maxpool layer; the stride of the maxpool1-3 layers is set to 2, and the stride of the maxpool4 layer is set to 1 to maintain a large spatial resolution. The convolutions in the conv5 block are replaced by dilated convolutions with dilation set to 2 to increase the receptive field.

Following common practice, we crop the input chest CT images to a unified size of 417 × 417 pixels and use random horizontal flipping for image augmentation. The model is trained end-to-end for 20k iterations with the stochastic gradient descent (SGD) optimizer. The default learning rate is 1e-3, the weight decay is 0.0005, the momentum is 0.9 and the batch size is 1. During the testing phase, the proposed support-assisted prototype optimization module adaptively optimizes prototypes with the Adam optimizer, with a default learning rate of 1e-3 and betas of (0.9, 0.999). The maximum number of iterations of the optimization module is set to 100. We implement the proposed method using PyTorch on an NVIDIA RTX 4060Ti GPU.

The experiments include two parts. The first part observes the performance of the proposed MSPO-Net on different lung diseases, compares it with other SOTA methods, and then compares the generalization of these models. The second part is an ablation study that verifies the effect of multilevel prototype learning and the support-assisted prototype optimization module, taking CT images of secondary pulmonary tuberculosis as an example. When MSPO-Net is trained and tested with CT images of one lung disease, objects of the test classes are implicitly involved in the training process, so the test classes are not truly “unseen” classes for the algorithm.

Result

Model performance, generalization and comparison with SOTA

We apply the proposed MSPO-Net to chest CT images of different lung diseases to observe the model generalization. We have prepared annotated CT images of three diseases: secondary pulmonary tuberculosis, pulmonary aspergillosis, and lung adenocarcinoma. Because their lesions have typical manifestations, multilevel prototypes can represent the lesion features more accurately. We report the segmentation results as well as the 95% confidence intervals.

Firstly, we train lesion segmentation models for each lung disease separately to verify that the proposed MSPO-Net is capable of performing well in few-shot lesion segmentation for all three diseases. The best lesion segmentation results of MSPO-Net with different prototypes and weight factors for the three lung diseases are shown in Table 1. The number of prototype optimization iterations is set to 100.

According to Table 1, for secondary pulmonary tuberculosis and lung adenocarcinoma, MSPO-Net achieves the best lesion segmentation with three-level prototypes, while for pulmonary aspergillosis, two-level prototypes perform best. We can choose different prototype combinations and weight factor allocations in the MSPO-Net lesion segmentation models for different diseases to achieve the best performance.

When training the segmentation model for lung adenocarcinoma lesions, the learning rate is changed to 2e-4. The default learning rate of 1e-3 is too high: it causes the loss to fluctuate and makes it difficult for the loss to decrease steadily during training, because the lesion area of lung adenocarcinoma is usually small and provides only limited features for foreground prototype learning.

We provide some qualitative examples obtained from the most effective segmentation model for each lung disease in Fig. 7. They demonstrate that the proposed MSPO-Net can extract robust prototypes from a few annotated samples for different lung disease lesions. They also confirm that MSPO-Net performs excellently in lesion segmentation of lung disease CT images.

In the comparison of qualitative results, our proposed MSPO-Net can accurately locate the lesion area and segment the main body. But in terms of lesion edges, some predicted masks are close to ground truth, while others are slightly different from ground truth, as shown in Fig. 8.

We compare the lesion segmentation results of MSPO-Net with those of some modern SOTA FSS models, including PANet24, ALPNet27, ADNet26 and Q-Net35, for single lung diseases, as shown in Table 2. Among them, PANet is a classic FSS method, and the other three networks are few-shot medical image segmentation models. We ran these models using their publicly available code to report their performance on lesion segmentation of chest CT images of the three lung diseases. All algorithms use default parameters and VGG-16 backbone networks and are trained for 20k iterations.

Table 2 shows that our MSPO-Net outperforms the SOTA methods on lesion segmentation for both secondary pulmonary tuberculosis and pulmonary aspergillosis. Its lesion segmentation performance on lung adenocarcinoma is slightly inferior to that of PANet but better than that of the others, because the lesion area of lung adenocarcinoma is usually small, which causes an imbalance between pixel categories. Without introducing other auxiliary methods, we can only reduce the initial learning rate to learn the lesion prototypes more carefully during training.

Then, we test the generalization of MSPO-Net and compare it with some SOTA FSS models. We select CT images of secondary pulmonary tuberculosis and lung adenocarcinoma as the training set, and CT images of pulmonary aspergillosis as the test set. There is almost no recent research on lesion segmentation of pulmonary aspergillosis, so pulmonary aspergillosis can be regarded as an “unseen” disease for exploring the generalization of MSPO-Net on rare lung diseases. The results are shown in Table 3.

When segmenting CT images of pulmonary aspergillosis in the test set, our proposed MSPO-Net has the best generalization performance on the unseen disease. Figure 9 shows the qualitative performance of our MSPO-Net in generalization testing. For every testing episode, MSPO-Net can further learn multilevel prototypes through the support-assisted prototype optimization module, which helps MSPO-Net achieve better generalization. The pathology and the lesion characteristics of the three lung diseases are different, which poses a great challenge for the generalization of MSPO-Net.

Ablation study

Effect of multilevel prototypes learning and weight factor

To verify the effect of multilevel prototype learning, we select the feature maps output by the third, fourth and fifth convolutional blocks, denoted as the conv3, conv4 and conv5 blocks. The corresponding prototypes learned from the different feature maps are denoted by \(\:{p}_{conv3}\), \(\:{p}_{conv4}\) and \(\:{p}_{conv5}\).

First, we conduct an ablation study on multilevel prototype learning to test how different combinations of prototypes and weights affect the segmentation results. The support-assisted prototype optimization module proposed in the subsection “Support-assisted prototype optimization” is not used in the testing stage of this part of the experiments.

When taking a single-level prototype, the default weight factor \(\:w\) in Eq. (6) is set to 1. The segmentation results on the dataset of secondary pulmonary tuberculosis chest CT images using the MIoU and BIoU metrics are shown in Table 4. The prototype \(\:{p}_{conv4}\) has more influence on the segmentation results than the other prototypes under this experimental setup.

When taking two-level prototypes, we evaluate the contribution of the weight factor \(\:w\) to the fusion of the final predicted mask. Based on the segmentation results in Table 4, we select the combination of prototypes \(\:{p}_{conv4}\) and \(\:{p}_{conv5}\). As shown in Table 5, the weight factor \(\:w\) has a certain impact on the performance of MSPO-Net, and the segmentation results of two-level prototypes improve on the single-level results. For this experimental setup, the optimal \(\:w\) is [0.8, 0.2].

When taking three-level prototypes, we explore the segmentation performance of the combination of \(\:{p}_{conv3}\), \(\:{p}_{conv4}\) and \(\:{p}_{conv5}\) with different weight factors \(\:w\). The segmentation results of three-level prototypes are shown in Table 6. The segmentation performance is the best when \(\:w=[0.2,\:0.6,\:0.2]\). The best segmentation result of three-level prototypes is better than that of two-level, but the improvement is relatively small.

From Tables 4, 5 and 6, the cooperation of multilevel prototypes learning improves segmentation performance of MSPO-Net. Multilevel prototypes learning can learn more abundant lesion features from low-level clues to high-level concepts of CNN features. The weight factor is not fixed and needs to be adjusted according to the specific experimental settings.

Effect of support-assisted prototype optimization module

We test the contribution of the proposed support-assisted prototype optimization module. Taking the model trained by \(\:{p}_{conv4}\) and \(\:{p}_{conv5}\) with weight factor \(\:w=\left[0.8,\:0.2\right]\) in Table 5 as an example, we prove the effect of the proposed support-assisted prototype optimization module and the impact of iteration times on segmentation results. The influence of the proposed support-assisted prototype optimization module is presented in Fig. 10; Table 7.

As the number of iterations increases, the performance of MSPO-Net gradually improves. The MIoU and BIoU increase by 0.0464 and 0.0238, respectively. During prototype optimization, the standard deviation of the segmentation results gradually decreases, which indicates that the proposed support-assisted prototype optimization module increases the stability of the segmentation model. The testing time in Table 7 is the total time over 5 test runs. In every testing episode, the proposed module takes about 0.36 s, which is acceptable.
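The way a mean score and its standard deviation over repeated test runs can be obtained is sketched below. This is only an illustration: it computes a plain foreground IoU for binary masks rather than the exact MIoU and BIoU definitions used in the experiments, it uses the population standard deviation as an assumption, and the per-run scores are made-up values, not results from Table 7.

```python
from statistics import mean, pstdev


def binary_iou(pred, target):
    """Foreground intersection-over-union of two binary masks (nested lists)."""
    inter = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Two empty masks agree perfectly, so return 1.0 in that case.
    return inter / union if union else 1.0


def summarize_runs(scores):
    """Mean and population standard deviation over repeated test runs."""
    return mean(scores), pstdev(scores)


# Toy masks: one of the two predicted foreground pixels is correct.
iou = binary_iou([[1, 1], [0, 0]], [[1, 0], [0, 0]])

# Hypothetical scores from 5 runs (illustrative only).
run_scores = [0.60, 0.62, 0.61, 0.59, 0.63]
avg, std = summarize_runs(run_scores)
print(iou, round(avg, 4), round(std, 4))
```

A smaller standard deviation across runs corresponds to the more stable behaviour reported for a larger number of optimization iterations.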

Effect of backbone networks

As a supplement, we compared the impact of backbone networks on the proposed MSPO-Net. ResNet-101, introduced by He et al.36, is also a commonly used backbone network in FSS. It is composed of five convolutional blocks: a 7 × 7 convolutional layer with a stride of 2 (conv1 block), followed by a max-pooling layer with a stride of 2 and four residual blocks, each built from multiple 3-layer bottleneck sub-blocks (conv2-5 blocks). Under the same experimental setup, the segmentation results of ResNet-101 are shown in Table 8.

In this comparison, VGG-16 performs better than ResNet-101. ResNet-101 has a large number of parameters and a deeper network structure, and the small-scale training set cannot support updating so many parameters during training.
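To make the ResNet-101 structure described above concrete, the sketch below traces the spatial size of the feature maps through the five blocks and counts the weighted layers. It assumes the standard ResNet design (padding of 3 for the 7 × 7 convolution, a 3 × 3 max-pooling window with padding of 1, bottleneck counts of 3, 4, 23 and 3, and no dilation) and a hypothetical 224 × 224 input; the input size and any dilation used in the experiments are not stated in this section.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution or pooling layer along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet_feature_sizes(input_size):
    """Spatial size after each block of a standard (non-dilated) ResNet."""
    sizes = {}
    s = conv_out(input_size, kernel=7, stride=2, padding=3)  # conv1 block
    sizes["conv1"] = s
    s = conv_out(s, kernel=3, stride=2, padding=1)  # max-pooling layer
    sizes["maxpool"] = s
    # conv2 keeps the resolution; conv3-5 each halve it once.
    for name, stride in [("conv2", 1), ("conv3", 2), ("conv4", 2), ("conv5", 2)]:
        s = conv_out(s, kernel=3, stride=stride, padding=1)
        sizes[name] = s
    return sizes


# Bottleneck sub-blocks per residual block in ResNet-101 (3 layers each).
bottlenecks = [3, 4, 23, 3]
# conv1 + all bottleneck layers + the final fully connected layer.
depth = 1 + 3 * sum(bottlenecks) + 1

sizes = resnet_feature_sizes(224)
print(sizes, depth)
```

Under these assumptions the conv5 features are 32 times smaller than the input along each dimension, and most of the 101 layers sit in the conv4 block.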

Discussion

Most patients with lung disease lack typical symptoms such as low fever, night sweats, cough, and expectoration in the early stages. Compared with other respiratory diseases, lung diseases are difficult to diagnose, and their diagnosis relies more on medical imaging and computer-aided diagnosis. In recent years, artificial intelligence (AI) based on deep learning has been increasingly applied to the interpretation of medical images37. Doctors who use AI in their practices are generally satisfied with their experience and find that AI provides value to them and their patients38.

Due to the limitations of its imaging principle, the role of X-ray in the diagnosis of lung diseases has been declining in recent years. Many clinical studies have confirmed that X-ray is significantly less accurate than CT in diagnosing lung diseases39,40. Currently, for many kinds of lung diseases, research on deep-learning-based lesion segmentation in chest CT images is limited and cannot yet provide further assistance to clinical practice.

We found that the lesion segmentation results for lung adenocarcinoma are not as good as those for the other two types of lung diseases, for both our MSPO-Net model and the other SOTA methods. This is because the small lesions of lung adenocarcinoma cause an imbalance between pixel categories. In future research, we will consider data augmentation and Slicing Aided Hyper Inference41 to improve the situation.
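The slicing idea mentioned above can be sketched as follows: the image is divided into overlapping tiles so that a small lesion occupies a larger share of each tile. This is a minimal sketch of the general tiling step only, not the API of the Slicing Aided Hyper Inference library; the function name, the 512 × 512 image size, the 256-pixel tile and the 20% overlap are hypothetical choices.

```python
def slice_windows(height, width, tile, overlap_ratio=0.2):
    """Return (top, left, bottom, right) boxes of overlapping tiles."""
    step = max(1, int(tile * (1 - overlap_ratio)))

    def starts(length):
        # A single tile suffices when the image is not larger than the tile.
        if length <= tile:
            return [0]
        positions = list(range(0, length - tile, step))
        # The last tile is aligned with the image border for full coverage.
        positions.append(length - tile)
        return positions

    return [
        (top, left, min(top + tile, height), min(left + tile, width))
        for top in starts(height)
        for left in starts(width)
    ]


windows = slice_windows(512, 512, tile=256, overlap_ratio=0.2)
print(len(windows), windows[0], windows[-1])
```

Each tile would then be segmented separately and the tile predictions merged back into the full image, for example by averaging the overlapping regions.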

Unlike organs and cells, lesions do not have precise boundaries. When different doctors annotate lesions on medical images, they may choose different lesion edges, but the main body of the lesion is consistent. From the doctors' point of view, it is sufficient for deep learning methods to segment the main body of the lesion, and the segmentation results can serve as a reference to assist diagnosis. In the future, we will pay more attention to lesion boundaries, e.g., by dividing the lesion area into superpixel blocks and segmenting them separately, and by introducing edge-detection algorithms and regularization techniques.

The diagnosis and treatment of lung-related diseases is a strength of the Shandong Provincial Public Health Clinical Center, which has accumulated rich case information. Therefore, our current research mainly focuses on lung diseases. We will collaborate with other departments in the future to expand our research scope to other medical imaging tasks.

Conclusion

In this work, we proposed MSPO-Net for FSS and applied it to lesion segmentation in chest CT images of lung diseases. It simulates the diagnostic and learning processes of doctors. To improve the segmentation of lesion areas, MSPO-Net adaptively learns a soft threshold through a self-attention strategy during training and optimizes multilevel prototypes with the assistance of the support set during testing.

In clinical practice, CT is more effective than X-ray in diagnosing lung diseases. We organized case information from the Shandong Provincial Public Health Clinical Center over the past 4 years and established a chest CT dataset for secondary pulmonary tuberculosis, pulmonary aspergillosis, and lung adenocarcinoma. Experienced doctors selected typical images and annotated the lesion areas.

Experimental results showed that MSPO-Net can improve the performance and robustness of lesion segmentation for lung diseases. When dealing with unseen diseases, MSPO-Net generalizes better than the SOTA models. The lesion segmentation results can help doctors understand the lesion directly and provide valuable references for analyzing the condition and making diagnosis and treatment plans for patients.

Data availability

This paper is a retrospective case-control study, not a secondary analysis of data from published papers. Chest CT imaging is part of routine diagnosis and treatment; it is not an additional examination for research purposes and does not require the approval of the medical ethics committee. The experimental data are anonymized, and the personal information of cases is not published. Data are available at https://github.com/Tian-Yuan-ty/Lung-Diseases-CT.git (Reference 20).

References

Tsochatzidis, L. et al. Integrating segmentation information into cnn for breast cancer diagnosis of mammographic masses. Comput. Methods Programs Biomed. 200 https://doi.org/10.1016/j.cmpb.2020.105913 (2021).

Tyagi, S. & Talbar, S. N. Predicting lung cancer treatment response from CT images using deep learning. Int. J. Imaging Syst. Technol. 33 (5), 1577–1592. https://doi.org/10.1002/ima.22883 (2023).

Bhatia, P. et al. Automated Quantification of Inflamed Lung Regions in Chest CT by UNet++ and SegCaps: A Comparative Analysis in COVID-19 Cases. 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC). pp. 3785–3788 (2022). https://doi.org/10.1109/EMBC48229.2022.9870901

Rayed, M. E. et al. Deep learning for medical image segmentation: state-of-the-art advancements and challenges. Inf. Med. Unlocked. 47, 101504. https://doi.org/10.1016/j.imu.2024.101504 (2024).

Wasserthal, J. et al. TotalSegmentator: robust segmentation of 104 anatomic structures in CT images. Radiology: Artif. Intell. 5 (5), e230024. https://doi.org/10.1148/ryai.230024 (2023).

Bernard, O. et al. Deep learning techniques for automatic MRI cardiac multi-structures segmentation and diagnosis: is the problem solved? IEEE Trans. Med. Imaging. 37 (11), 2514–2525. https://doi.org/10.1109/TMI.2018.2837502 (2018).

Pachetti, E. & Colantonio, S. A Systematic Review of Few-Shot Learning in Medical Imaging. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2023). https://doi.org/10.48550/arXiv.2309.11433

Feng, R. et al. Interactive few-shot learning: Limited Supervision, Better Medical Image Segmentation. IEEE Trans. Med. Imaging. 40 (10), 2575–2588. https://doi.org/10.1109/TMI.2021.3060551 (2021).

Kim, S. et al. Bidirectional RNN-based Few Shot Learning for 3D Medical Image Segmentation. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2020). https://doi.org/10.48550/arXiv.2011.09608

Gama, P. H. T., Oliveira, H. et al. Weakly supervised few-shot Segmentation Via Meta-Learning. IEEE Trans. Multimedia. 25, 1784–1797. https://doi.org/10.48550/arXiv.2109.01693 (2023).

Lu, Q. & Ye, C. Knowledge Transfer for Few-shot Segmentation of Novel White Matter Tracts. International Conference on Information Processing in Medical Imaging (IPMI). pp: 216–227 (2021). https://doi.org/10.1007/978-3-030-78191-0_17

Rutter, E. M., Lagergren, J. H. & Flores, K. B. A convolutional neural network method for boundary optimization enables few-shot learning for biomedical image segmentation. MICCAI Workshop on Domain Adaptation and Representation Transfer. pp: 190–198 (2019). https://doi.org/10.1007/978-3-030-33391-1_22

Yuan, Z., Esteva, A. & Xu, R. MetaHistoSeg: A Python Framework for Meta Learning in Histopathology Image Segmentation. Deep Generative Models, and Data Augmentation, Labelling, and Imperfections 13003, 268–275 https://doi.org/10.1007/978-3-030-88210-5_27 (2021).

Ma, S. et al. A zero-shot method for 3d medical image segmentation. IEEE International Conference on Multimedia and Expo (ICME) (2021). https://doi.org/10.1109/ICME51207.2021.9428261

Muhammad Rahman, Y. & Cao, X. Deep pre-trained networks as a feature extractor with XGBoost to detect tuberculosis from chest X-ray. Comput. Electr. Eng. 93, 107252. https://doi.org/10.1016/j.compeleceng.2021.107252 (2021).

Plessis, T. D. et al. Introducing a secondary segmentation to construct a radiomics model for pulmonary tuberculosis cavities. Radiologia Med. 128 (9), 1093–1102. https://doi.org/10.1007/s11547-023-01681-y (2023).

Lau, A. et al. A comparison of the chest radiographic and computed tomographic features of subclinical pulmonary tuberculosis. Sci. Rep. 12 https://doi.org/10.1038/s41598-022-21016-7 (2022).

Schalekamp, S., Klein, W. M. & van Leeuwen, K. G. Current and emerging artificial intelligence applications in chest imaging: a pediatric perspective. Pediatr. Radiol. 52 (11), 2120–2130. https://doi.org/10.1007/s00247-021-05146-0 (2022).

Poonkodi, S. & Kanchana, M. A review on lung carcinoma segmentation and classification using CT image based on deep learning. Int. J. Intell. Syst. Technol. Appl. 20, 394–413. https://doi.org/10.1504/ijista.2022.10050327 (2022).

Tian, Y. et al. Lung-Diseases-CT. https://github.com/Tian-Yuan-ty/Lung-Diseases-CT.git. Accessed 17 Jun 2024.

Skoura, E., Zumla, A. & Bomanji, J. Imaging in tuberculosis. Int. J. Infect. Dis. 32, 87–93. https://doi.org/10.1016/j.ijid.2014.12.007 (2015).

Chabi, M. L. et al. Pulmonary aspergillosis. Diagn. Interv. Imaging. 96 (5), 435–442. https://doi.org/10.1016/j.diii.2015.01.005 (2015).

Lee, E. & Kazerooni, E. A. Lung Cancer Screening. Semin Respir Crit. Care Med. 43 (6), 839–850. https://doi.org/10.1055/s-0042-1757885 (2022).

Wang, K. et al. PANet: Few-shot image semantic segmentation with prototype alignment. IEEE International Conference on Computer Vision (ICCV). pp: 9197–9206 (2019). https://doi.org/10.1109/ICCV.2019.00929

Sun, L. et al. Few-shot medical image segmentation using a global correlation network with discriminative embedding. Comput. Biol. Med. 140, 105067. https://doi.org/10.1016/j.compbiomed.2021.105067 (2021).

Hansen, S. et al. Anomaly detection-inspired few-shot medical image segmentation through self-supervision with super-voxels. Med. Image. Anal. 78, 102385. https://doi.org/10.1016/j.media.2022.102385 (2022).

Ouyang, C. et al. Self-supervision with superpixels: Training few-shot medical image segmentation without annotation. European Conference on Computer Vision (ECCV). pp: 762–780 (2020). https://doi.org/10.1007/978-3-030-58526-6_45

Lu, Q., Li, Y. & Ye, C. Volumetric white matter tract segmentation with nested self-supervised learning using sequential pretext tasks. Med. Image. Anal. 72 (1), 102094. https://doi.org/10.1016/j.media.2021.102094 (2021).

Zeiler, M. D. & Fergus, R. Visualizing and understanding convolutional networks. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2013). https://doi.org/10.48550/arXiv.1311.2901

Yosinski, J. et al. Understanding neural networks through deep visualization. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR). https://doi.org/10.48550/arXiv.1506.06579 (2015).

Zhang, X. et al. Sg-one: similarity guidance network for one-shot semantic segmentation. IEEE Trans. Cybernetics. 50 (9), 3855–3865. https://doi.org/10.1109/TCYB.2020.2992433 (2020).

Oreshkin, B. N., Rodriguez, P. & Lacoste, A. Tadam: task dependent adaptive metric for improved few-shot learning. Proc. 32nd Int. Conf. Neural Inform. Process. Syst. 719–729. https://doi.org/10.48550/arXiv.1805.10123 (2018).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2015). https://doi.org/10.48550/arXiv.1409.1556

Russakovsky, O. et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. (IJCV). 115 (3), 211–252. https://doi.org/10.1007/s11263-015-0816-y (2015).

Shen, Q. et al. Q-Net: Query-Informed Few-Shot Medical Image Segmentation. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2023). https://doi.org/10.48550/arXiv.2208.11451

He, K. et al. Deep Residual Learning for Image Recognition. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp: 770–778 (2016). https://doi.org/10.1109/CVPR.2016.90

Gleeson, F. et al. Implementation of artificial intelligence in thoracic imaging-a what, how, and why guide from the European Society of Thoracic Imaging (ESTI). Eur. Radiol. 33 (7), 5077–5086. https://doi.org/10.1007/s00330-023-09409-2 (2023).

Rajpurkar, P. & Lungren, M. P. The current and future state of AI interpretation of medical images. N. Engl. J. Med. 388 (21), 1981–1990. https://doi.org/10.1056/NEJMra2301725 (2023).

Borakati, A. et al. Diagnostic accuracy of X-ray versus CT in COVID-19: a propensity-matched database study. BMJ Open. 10 (11), e042946. https://doi.org/10.1136/bmjopen-2020-042946 (2020).

Lau, A. et al. The Radiographic and Mycobacteriologic correlates of Subclinical Pulmonary TB in Canada: a retrospective cohort study. Chest 162 (2), 309–320. https://doi.org/10.1016/j.chest.2022.01.047 (2022).

Akyon, F. C., Altinuc, S. O. & Temizel, A. Slicing Aided Hyper Inference and Fine-tuning for Small Object Detection. IEEE International Conference on Image Processing (ICIP). pp: 966–970 (2022). https://doi.org/10.1109/ICIP46576.2022.9897990

Funding

No funding was received to assist with the preparation of this manuscript.

Author information

Contributions

Y. T. mainly engaged in Methodology, Software, Formal analysis, Writing - Original Draft; Y. L. mainly engaged in Project design and administration, Methodology, Writing - Review & Editing; Y. C. mainly engaged in Conceptualization, Resources, Formal analysis, Writing - Original Draft; J. Z. mainly engaged in Conceptualization, Investigation, Resources, Writing - Review & Editing; H. B. mainly engaged in Investigation, Formal analysis, Data Curation, Validation.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tian, Y., Liang, Y., Chen, Y. et al. Multilevel support-assisted prototype optimization network for few-shot medical segmentation of lung lesions. Sci Rep 15, 3290 (2025). https://doi.org/10.1038/s41598-025-87829-4

DOI: https://doi.org/10.1038/s41598-025-87829-4