Abstract

There are serious security issues with the quick growth of IoT devices, which are increasingly essential to Industry 4.0. These gadgets frequently function in challenging environments with little energy and processing power, leaving them open to cyberattacks and making it more difficult to implement intrusion detection systems (IDS) that work. In order to address this issue, this study presents a unique feature selection algorithm based on basic statistical methods and a lightweight intrusion detection system. This methodology improves performance and cuts training time by 27–63% for a variety of classifiers. By utilizing the most discriminative features, the suggested methods lower the computational overhead and improve the detection accuracy. The IDS achieved over 99.9% accuracy, precision, recall, and F1-Score on the dataset IoTID20, with consistent performance on the NSLKDD dataset.

Similar content being viewed by others

Introduction

Context

Recently, the world has seen the COVID-19 pandemic, and each walk of life has been affected by an equivalent. Countries, governments, and native bodies emphasized the spread of the virus by intruding on human bodies. If we look at 2020, we will see that there has been an increase in cyberattacks in India and other geographic regions. Therefore, a reliable system that can sense the attack and take the required preventive actions is needed. Preventive actions might include alienating the machine, applying the antivirus, or informing the people that cyber-physical systems utilized in various operations are often saved. Ajitesh et al. highlighted the importance of securing wireless sensor networks in the face of increasing cyber threats1. The attack’s impact is magnified if there is an attack on the IoT systems of any industry. The typical methods have limitations in stopping such attacks because of continuously changing impact methods and vulnerabilities. These attacks on the IoT might be manual attacks, or the attack vector may be generated via some piece of code or any technology. The increasing magnitude of attack is often attributed to evolving hardware, software, algorithms, and dependence on cyber-related technologies for Industry 4.0. Better systems translate into more load on the system, more cyber traffic, and less time to categorize a malicious URL2. However, the rapid proliferation of IoT across nearly all areas of life has introduced a host of new cybersecurity threats. This stems from IoT devices often having limited computing and energy resources, making them more susceptible to attacks. As IoT deployment continues to grow, it brings significant security challenges, with malicious intrusions posing severe threats to the integrity and privacy of sensitive data.

An intrusion detection system (IDS) was conceptualized to stop these attacks. The IDS usually monitors the network and checks parameters such as flow and network packets or reads the logs to detect any malicious or suspicious activity, bringing the entire system down3. These IDSs are commonly associated with a high false-positive rate and poorly susceptible to unknown attacks4. The unknown attacks are due to changing network technology. It is imperative to make an IDS that may easily work efficiently upon new and unknown attacks. Ghadi et al. in5discussed the integration of federated learning with the IoT to address privacy and efficiency in smart city applications, suggesting that advanced AI techniques could increase IDS effectiveness. The ideal disadvantage of the normal IDS is that it does not self-learn and takes necessary action unless it is specifically told about the associated principles and actions6. Machine learning (ML) algorithms are found to address such issues within other problem areas; thus, they are considered the golden key that resolves the problems of handling unknown attacks and provides correct classification with minor errors7. Currently, this paper aims to identify good machine learning algorithms from commonly used algorithms and compare them. The input to the machine learning algorithm is typically a feature vector consisting of multiple features; therefore, the classification algorithm is typically supported by the varied weights assigned to the features during a vector. The author has been interested in using all the features during a vector and has tried to match the accuracy of the machine learning algorithms using only a few significant features selected through essential statistical techniques.

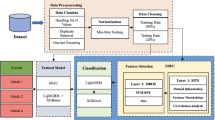

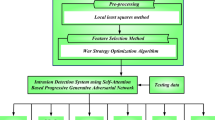

The normal IDS system classifies traffic as malicious or normal and is supported by the given ruleset. These rule sets are not frequently updated; hence, normal IDS systems cannot find the categorization, making an equivalent mistake until the rule sets are not updated. However, machine learning-based IDSs are sufficient for self-learning and categorizing unseen traffic with higher accuracy. The machine learning-based IDSs are not hooked into the given ruleset but rather categorize supported features and, therefore, weight-related features. Figure 1 shows a schematic diagram of the IDS.

Current ML based methodologies for network intrusion detection often assume that data packet types and feature patterns in traditional networks are analogous to those in IoT environments. However, IoT smart devices vary significantly in aspects such as computational capability, functionality, hardware characteristics, and the ability to generate diverse data features. Consequently, IDS designed for conventional networks are unsuitable for IoT environments due to these distinct differences.

Furthermore, IDS systems traverses through a high volume of data at the high paces, which unavoidably intensifies the computational cost to conclude whether it is benign or malicious. Therefore, there is a need for effective and efficient feature selection methods, particularly to detect zero-day attacks59. Anomaly based methodologies are found to be efficiently detect zero-day attacks by demonstrating normal (benign) network traffic such that any network that digresses from this predictable behaviour is considered an anomaly (containing zero-day attacks)59,60.

-

Key aspects and novel elements.

We also present a comparison of machine learning for intrusion detection, and show that machine learning techniques such as random forest (RF) and AdaBoost (AD) provide superior performance compared to traditional algorithms. As evident from the results, the best performing results are achieved by using the RF and AD based algorithms with almost best accuracy, precision, recall, and F1 score metrics for detecting the cyber-attacks category. This study takes a new approach in using statistical methods like the Pearson correlation coefficient for feature selection as well as chi-square tests, which helps the machine learning process since it threads out the actual features that need to be examined. This study highlights the lack of performance of traditional algorithms with a significantly enlarged feature set and its impact on feature space by comparing it to the ensemble technique that has guaranteed performance even with increased number of features. Through novel, basic statistical techniques for feature selection the study demonstrates that very significant improvements in IDS performance can be achieved at a much lowered computational cost. Moreover, it provides a new perspective on boosting the accuracy of shallow learning algorithms with a carefully selected feature subset for cybersecurity-related machine learning processes. Future studies should investigate the combined usage of state-of-the-art feature selection techniques along with deep learning models, advancing the accuracy as well as generalizability of IDSs.

The main contributions of the study are as follows:

-

The study applied basic statistical methods to create an effective feature selection algorithm. The study ranks the chi-square (CHI), Pearson correlation coefficient (Cor) and the analysis of variance (ANOVA) techniques against the worst performing machine learning or ML classifiers while also estimating the best number of features producing the most accurate performance from each classifier.

By combining the Cor and CHI approaches, this study suggests a stronger feature selection method. The suggested method is beneficial for inactivating essential signal features utilized by ML classifiers that produce high accuracy, thus lowering the computational costs. The proposed fusion scheme is simple, effective, reliable and acceptable in IoT environments. Also compared with other techniques it can attain greater performance with lower training time.

-

The authors combine the decision tree (DT) algorithm with suggested feature selection strategies to offer an IDS. An IDS with a maximum accuracy of 99.95% for the NSLKDD dataset and 99.89% for the IoTID20 dataset is demonstrated to be very intelligent and effective by the proposed device.

-

The results of this study are compared to those of other recent studies that used IoT datasets, such as the IoTID20 and NSLKDD datasets. In terms of accuracy and other aspects, the IDS using the CorChi algorithm produced comparable and comparable results.

Structure of the article

The paper opens by discussing the rising impact of cyberattacks on modern organizations and the essential role of IDSs in defending against these threats. The literature review explores prior research, provides a classification of IDSs, and examines the connection between feature selection and machine learning in cybersecurity. The third section outlines the dataset, algorithms, and statistical methods used for feature selection, along with a description of the experimental setup and research methodology. The results and discussion compare the performance of different algorithms and evaluate the effectiveness of various feature selection techniques. The paper concludes by summarizing the findings and recommending future research, focusing on advanced machine learning methods and in-depth feature analysis.

Literature Review

Current research

To prevent tracts, researchers have concentrated their attention on IDS systems over the last few decades and have adopted a broad range of techniques and approaches at different layers16. Several researchers have broken down IDSs into two types: signature-based and anomalous-based IDSs (AIDS)17. Among these two methods, signature-based IDSs are utilized extensively in business.

Signature-based IDSs (SIDSs), the first generation of IDSs18, incorporate a list of preconfigured attacks, and network patterns are checked against the attacks in the list; they fail severely if those attacks fail to match those on the list of attacks, and this list must be updated on an ongoing basis. AIDS generally has a list, and traffic with a signature that is inconsistent with the list is considered an attack. However, ML algorithms can assist AIDS patients in becoming capable of detecting unseen interactions as benign or harmful, and ML enables them to learn on their own.

IDSs are divided into two categories by a small number of academics19,20: host-based IDSs (HIDSs) and network-based IDSs (NIDSs). Both the NIDS and HIDS have benefits as well as drawbacks. For example, NIDSs are rapid; however, they typically incorrectly categorize traffic by relying on its encryption, whereas the HIDS system remains unharmed by network cryptography; nevertheless, it requires all configuration files to detect an attack. Multiple studies have demonstrated that self-learning technology could provide an acceptable option for a highly precise IDS.

According to Saheed et al.21, coupling an IDS with ML delivers numerous strategic benefits. Several studies4,9,10,14,21,23have employed deep algorithms, including DNNs, RNNs, and long short-term memory (LSTM), and have demonstrated excellent performance. Shallow ML algorithms such as the SVM, DT, and KNN algorithms for machine learning have been employed in a small number of studies17,21.

Bhavani et al.22reported that single ML classifiers are significantly less precise and accurate and have a worse misclassification rate, which was confirmed by23, who also demonstrated that the RF algorithm has higher accuracy and lower misclassification. A group of experts12,25,27 spanning multiple geographies reported that ensemble algorithms increase accuracy and simultaneously decrease misclassification rates. The accuracy of shallow ML algorithms can be increased further by employing regularization techniques and techniques such as boosting. After contrasting deep learning methods with shallow methods, Vinaykumar et al.23concluded that deep learning methods render a more effective approach for IDSs. According to12, deep learning approaches outperform shallow machine learning techniques. They also state that deep approaches with little or little preprocessing may quickly detect the features influencing the results and assign weights to them. On the other hand, machine learning employs semantic details, which may be challenging to recognize and therefore require considerable initial processing, such as translating an object to an integer or category value to values in ordinary values9,24,60.

Chua et al.25worked with both supervised and unsupervised machine learning techniques, such as SVM and KNN, to recognize dubious web sites by making use of static feature sets from linguistic content and URLs, by picking the appropriate features, ML methods may achieve high accuracy in categorizing the target variable in a binary with several classes12. Feature selection is a massive and difficult task because of the manual labor involved. Fortunately, deep learning with very little instruction, a lack of prior experience, and preparatory processing can determine the appropriate weighting for an attribute26,27. The weights of the significant attributes are significant, determining the output positively or detrimentally. Du et al.28 established a classification system for the IoT that makes use of both LSTM and CNN procedures using the KDDCup99 dataset to deliver the theoretical framework. For recognizing assaults on IoT gadgets, their model uses the CNN’s feature selection and long short-term memory abilities. The investigations they conducted revealed remarkable precision along with sensitivity.

Chakrawarti et al.29 applied an effective fully interconnected neural network combined with LSTM to categorize malicious and benign incidents on the KDDCup99 dataset. They reported an accuracy higher than 99%.

Wang et al.30 achieved 95% accuracy in categorizing system assaults by nonsupervising hierarchical clustering approaches - BIRCH and supervising techniques such as autoencoders. BIRCH is a fantastic technique for analyzing challenging and large datasets. Samunnisa et al.31 presented an intrusion detection system that is competent in addressing both known and unidentified dangers. They advise implementing the combination including the KNN clustering strategy and the random forest classifier as a reaction to day zero assaults. They reported an accuracy of 98.27%.

Satyanarayana and Chatrapathi32 built an IDS system dependent on the genetic algorithm (GA) with ensemble techniques, including random forest. The GA method contributed to identifying those that were most important and applicable characteristics, allowing the classifier to correctly identify normal and attack vectors. They were able to achieve an accuracy of approximately 98% when the UNSWNB15 dataset was used. Jadhav et al.33 developed a versatile and efficient IDS system leveraging a hybrid algorithm combining RNNs and LSTMs. They reached accuracies of 83.29% on the KDD Cup99 dataset and 68.59% on the NSLKDD dataset.

Alqarni34 employed ant colony optimization (ACO) to determine characteristics before the SVM classifier was used. The scientific results exhibited outstanding accuracy for assessing candidacy as a potential IDS. Sharma et al.35 employed techniques of filtering as well as deep neural networks to recognize hazards and weaknesses in IoT systems. They nevertheless managed to achieve an accuracy level of 84% on the dataset generated by the GAN and UNSWNB15.

Songma et al.36 conducted a series of tests employing principal component analysis (PCA) along with a number of classifiers, namely, XGBoost and random forest. The researchers utilized PCA to derive 11 crucial features, which yielded 99% accuracy with the CICIDS2018 dataset. Najafi et al.37 used three feature selection approaches, namely, ABC (artificial bee colony), ACO (ant colony optimization), and flower pollination (FP), for choosing significant features extracted from the CICIDS2018 dataset. The chosen attributes were thereafter evaluated for overall accuracy via various types of classification algorithms. A large number of classifiers displayed accuracies ranging from 89 to 99%. However, constructing the model and selecting the experimental features requires a long time.

Alzughaibi et al.38 used a CICIDS 2018 dataset to train a model utilizing multilayer perceptron (MLP) and backpropagation (BP) to detect assaults. They succeeded in achieving accuracy levels of 98% in a cloud setting. Qazi et al.39 designed a deep learning network by integrating an RNN and a CNN. The CNN contributed to the collection of local relevant features, whereas the RNN helped in sorting on the basis of the RNN-collected features. The innovative tactics proved effective, delivering an accuracy of 98% with the CICSIDS2018 dataset. Kshirsagar et al.40 adopted an array of feature selection approaches and a classification method to distinguish malware attack vectors from regular network vectors. The ensemble lists the 24 major characteristics used by the J48 classifier. J48 was able to correctly detect assaults and normal vectors in 99% of the situations.

Xu et al.41 recommended an IDS system built around a CNN and the BILSTM algorithm using the SoftMax classifier. The model was demonstrated to be extremely sensitive and accurate. The study demonstrated an accuracy of approximately 89% and a precision of 91%, with an F1 score exceeding 93%. Li et al.42 discovered that a model that uses a CNN and LSTM performs unsuccessfully for large datasets; therefore, they proposed a boosting policy through a GAN. They were able to improve the accuracy by 5%, even when facing unknown assaults.

Using the most recent datasets available, Table 1 displays the most recent research that have attained excellent accuracy. This study employs sophisticated feature selection techniques or extremely complex neural networks to increase accuracy. These sophisticated and complex methods frequently demand a large amount of processing power and memory. Therefore, our study focuses on examining and suggesting an intelligent intrusion detection system that uses a simple and suitably calibrated machine learning technique. Our thorough review of the literature and the noted research gaps served as the foundation for this. We want to reduce the processing and memory demands on the system classifier and eliminate any superfluous complexity in order to make the IDS appropriate for the Industrial IoT. This study’s main goal is to use straightforward and trustworthy machine learning-based intrusion detection system classifiers to lower training time complexity and false alarm rates considerably when compared to those reported in the literature.

For the past few decades, IDS systems have been the subject of research, and numerous researchers have used a range of methods and strategies at various stages to halt tracts2. IDSs have been divided into two categories by a small number of researchers: anomaly-based IDSs (AIDS) and signature-based IDSs8. Among those two, signature-based IDSs are prevalent within the industry. The signature-based IDS (SIDS), the oldest generation IDS8, has an inventory of predefined attacks, and traffic signatures are matched to the signatures present within the list; these signatures fail miserably if the attacks are not a part of the list and if the list must be updated frequently. AIDS traditionally desires an inventory, and traffic whose signature does not match the list is marked as an attack. Authors in9 demonstrated that machine learning could significantly improve predictive tasks in complex systems. Machine learning algorithms can help AIDS patients identify unseen traffic as benign or malicious, and machine learning helps AIDS patients self-learn.

Even while a single feature might not have much of an effect by itself, taken as a whole, they might affect how decisions are made. Furthermore, several qualities that are considered essential could be related to one another and have no bearing on the IDS’s ultimate accuracy. The application of basic statistical methods for feature selection, both alone and in hybrid frameworks, is not well documented in the literature32. According to a comprehensive evaluation of the literature, hybridization-based feature selection methods can improve classifier accuracy. Furthermore, the literature that is currently accessible shows that basic statistical feature selection strategies are less computationally and memory intensive than other approaches. Because it suggests a feature selection method that reduces computing expense and offers features that help classifiers correctly categorize attacks, this study is distinctive. Using basic statistical methods and a basic machine learning classifier (Decision Tree) to arrange data in the IoTID20 dataset, it provides a thorough study of IDS. The suggested intrusion detection system (IDS) quickly and accurately classifies threats.

Background

-

Machine Learning Algorithms.

Gaussian Naïve Bayes Algorithm (NB) - Using basic statistical methods and a basic machine learning classifier (Decision Tree) to arrange data in the IoTID20 dataset, it provides a thorough study of IDS. The suggested IDS quickly and accurately classifies threats.

The Algorithm of Gaussian Naïve Bayes (NB) The traditional Bayes theorem and probability theory serve as the foundation for this approach. Because it assumes that every characteristic in a dataset is independent of every other feature, this classification technique is naïve. The posterior probability is determined by this technique by multiplying the likelihood of evidence forgiveness by the prior knowledge.

Here, E1, E2, E3…… En are events in any random experiment, and A is any random event with event E.

Support Vector Machine Algorithm (SVM) - It is a nonprobability-based model attempting to classify events in a space separable by hyperplanes. The equation expresses the hyperplanes.

Here, β0 denotes the bias, and βt stands for the weight.

Logistic Regression Algorithm (LR) – This algorithm is used to classify the dataset into two classes, i.e., it is used for binary classification. Regression is one of the oldest algorithms used in classification problems and uses the sigmoid function (given in Eq. 3) as an activation function.

Decision Tree (DT) - This classification algorithm uses the information gained at each step to classify the tuple in a dataset (T) concerning a given feature (F) to a target class (C). The information gain (IG) can be easily known as a difference in entropy E. The information gain and entropy are given in Eqs. (4) and (5).

Random forest algorithm (RF) – The RF algorithm is an ensemble learning method that builds upon the Decision Tree (DT) framework. It enhances model performance by constructing multiple decision trees with controlled depth, where the maximum height of each tree is limited to N, representing the number of selected features. This constraint helps reduce overfitting and improves the model’s generalization capability, resulting in higher classification accuracy.

AdaBoost Algorithm (AD): This algorithm combines multiple weak classifiers and provides a robust classifier. It can be represented by Eq. (6).

where h(x) is the output of the weak classifier and where α is the weight associated with the classifier.

-

Statistical Techniques for Feature Selection.

Data scientists use multiple techniques to find the essential features of a dataset. However, the correlation coefficient is used the most in the experiments.

Pearson correlation coefficient – It is used for identifying the relationship between the two features of a dataset. It can be calculated as a product of the covariance and standard deviation of the features in a dataset. Equation (7) represents the mathematical representation as.

The correlation coefficient effectively measures the dependence of the target and features, but it has been associated with issues when a feature has missing data or noise.

The chi-square method (Chi2) is used to measure the independence, shown as χ2, of two variables or events. χ2 measures how much observation is different from the expected value and suggests that a lower χ2 is more independent or vice versa. χ2 can be calculated as given in Eq. 8.

… Eq. 8

ANOVA – This is referred to as a statistical test that measures the variance between two observed samples and within the samples. The ANOVA is calculated via the F score, which is expressed in the following equations:

Performance measures

-

Accuracy (PA)– Accuracy is the ratio of correctly classified data to the total number of observations.

-

Precision (PP)- Precision can be considered the ratio of actual observations to the observations predicted by the classifier.

-

Recall (PR)– This can be regarded as the ratio of observations classified as true in the sample.

-

The F1 score (PF) can be regarded as the harmonic means of precision and recall.

Research Methodology

This section details the methodology followed for the experiments. PySpark, which offers the ability to write Python code on Apache Spark, was utilized in the study via the Google Colab platform. Scikit-learn is used for machine learning and feature selection techniques. The computer used for the model’s training and testing has the following setup: The 13th generation Intel(R) Core(TM) i5-1345U has an 8-core CPU, 8-core GPU, 16 GB of RAM, and 512 GB of SSD. It runs at 1.60 GHz. The experiments were carried out for 10 times and the mean readings were taken. The results were statistically worried using t-test.

In this study, we considered IOTID20 dataset which is publicly existing network intrusion datasets to explore zero-day assaults. However, the dataset did not have zero-day assaults, so zero-day attacks were mimicked breaking a dataset into training (only benign) and testing (only malicious) network traffic59. This practice is in line with the techniques followed in various studies59. Further NSLKDD dataset was used because it had lesser number of instances. The variation in the size of dataset helps to determine that proposed feature selection technique works efficiently irrespective of the dataset size and is scalable.

The experiments were carried out using the complex classifiers RF and AD in addition to the simple ML algorithms, which are LR, SVM, NB and DT. All the classifiers were initially trained on the IoTID20 dataset and NSLKDD dataset. The details of these datasets are given in this section. Each dataset has multiple classification categories. To simplify the problem, the data labels were binarized and rearranged via Algorithm 1 Now, consider the attack vector F and the target response as T. T contains the binary values 0 and 1 only.

F = {f1,f2,……,fn}.

T = {t1,t2,……,tn}T.

The complete dataset D can be obtained by combining F and T and is given as.

D = {D1,D2,… ,Dn}.

The performance (PA, PR, PF, and Pp) of all the classifiers was measured with the full dataset via Algorithm 2 The three classifiers that exhibited very low performance were selected for the study via Algorithm 2 and were categorized as M.

M = {LR, SVM, NB}.

Algorithm 3

used these poor classifiers to the tweaked dataset, selecting the top 5, top 10, top 15, and top 20 features using straightforward but reliable feature selection methods including chi square, the Pearson correlation coefficient, and ANOVA. Mathematically, the output can be represented as.

For D with the feature space, F is pruned to obtain G, H, I, and J, which are given below and are calculated via Algorithm 3

G = {G1, G2,...,G5} | G ⊂ F.

H= {H1, H2,....,H10} | H ⊂ F.

J = {J1, J2,...,J15} | J ⊂ F.

K = {K1, K2,...,K20} | K ⊂ F.

The performance of each classifier was measured again to identify the optimum number of parameters via Algorithm 4

The top 15 parameters of the top two feature selection techniques, which consistently showed high performance with all the classifiers, were considered. A new dataset considering the top 15 parameters of each feature selection technique was considered. The features that appeared in both sets were considered only once. Thus, a new dataset with 17 features for IoTID20 and 16 features for NSLKDD was created.

The selected datasets, referred to as the Chico dataset, were applied to high-performing classifiers, namely, DT, RF and AD. The performances of these classifiers were measured and compared with those of the latest available methods. The research methodology is detailed in feature 2 and can be seen in Fig. 2.

For D with the feature space, F is pruned to obtain G, H, I, and J, which are given below and are calculated via Algorithm 3

G = {G1, G2,...,G5} | G ⊂ F.

H= {H1, H2,...,H10} | H ⊂ F.

J = {J1, J2,...,J15} | J ⊂ F.

K = {K1, K2,...,K20} | K ⊂ F.

The performance parameter for models in M for G, H, and J is calculated via the parameters given in Sect. 3.3, and all the performance parameters are compared. Algorithm 4describes the logic of the complete program set up, and the results obtained are defined and discussed in detail.

The ML model were regulated using GridSearchCV for picking models with excellent performance. The hyper parameters for ML models are given in Table 2.

Dataset description

Two Internet of Things datasets, IoTID20 and NSLKDD, were used in the study. The IoTID20 dataset was collected from an Internet of Things smart property that includes both normal (benign) traffic and a wide range of IoT threats, notably DDoS, DoS, Mirai, and ARP spoofing. The network mapper (Nmap) application was used to construct and gather the IoTID20 dataset inside the testing facility atmosphere, emulating several types of real-world attacks and threats44.

This work was validated via an additional dataset, NSL-KDD45. It outperforms the KDD99 dataset by addressing redundancy and redundancy problems with data in its initial dataset. The NSL-KDD dataset carries 32 continuous variables and 9 nominal features. These attributes represent multiple operations that occur in an Internet of Things system. Five target classes are added to the dataset: normal, probe, DoS, R2L, and U2R. The NSL-KDD dataset aims to reduce the extensiveness of the initial KDDcup99 dataset while still preserving its relevance for detecting intrusions and related applications. Standard scaling and normalization procedures were carried out for processing the datasets. The attack labels were binarized as additional processing of the datasets.

Dataset pre-processing

Various techniques have been proposed to address the problem of imbalanced datasets, with oversampling minority class instances being a common solution. One of the most widely used methods in recent years is the Synthetic Minority Over-sampling Technique (SMOTE). To create a balanced dataset, a sample containing an equal number of ‘benign’ and ‘attack’ labels was derived from the original dataset. This balanced approach helps to minimize the risk of any model overfitting or underfitting. The features, as described in the previous section, were individually evaluated and verified to ensure that their values conform to the definitions. Most features with null or undefined values were filled using the most frequent values.

Dataset normalization

Data normalization is a crucial step in preparing data for classification tasks. It helps reduce the range of data, aiding the objective function, which typically does not perform efficiently on wide-ranging data. In this study, min-max normalization was used to ensure that the data values remain within the range of [0, 1], where 0 represents the minimum and 1 represents the maximum value. This transformation was systematically applied across all features in the dataset to ensure uniformity and optimize the learning process.

Results and discussion

The basic machine learning algorithms—LR, NB, SVM, DT—and the ensemble algorithms—RF and AD—were run on IoTID20 and verified on the NSLKDD dataset. The performance baseline parameters, such as accuracy, precision, recall, and F1 score, were calculated by taking the mean of 10 iterations of each experiment. Figures 3, 4 and 5 show that the machine-learning algorithms achieved accuracies ranging from 60 to 70%. Thus, the basic ML classifier was found to be less accurate and efficient in classifying the attacks.

These basic classifiers were further studied by selecting a few top features via simple statistical techniques such as the CHI, the Pearson coefficient and ANOVA. For the studies, the top 5, top 10, top 15 and top 20 features were selected. For the experiments, the threshold of 0.55 is assigned to the test CHI statistics such that all features which fall above the value 0.55 are selected as the optimal subset. This threshold is calculated post multiple iterations of experiments with 5 iterations of each of IoTID20 and NSLKDD datasets. Hence, more than half of the attribute subsets fall below the threshold which means that the statistic is insignificant for those attributes. These selected features were used to extract the data from the IoTID20 dataset, and new datasets were created. The new datasets with the top selected features were individually applied to the ML classifiers, and the performance parameters were noted. Figure 4 shows the comparison of the ML classifiers with features selected via the CHI technique. Each classifier was subsequently applied to the new dataset via the features selected via Person correlation coefficient techniques. The details of the experiments are given in Fig. 4. Similar experiments were carried out to select features via ANOVA techniques and classifiers as demonstrated in Fig. 6.

Interestingly, all the classifiers achieved the lowest accuracy, with the top 5 parameters selected through any technique. This indicates that too few features do not help an IDS categorize malicious URLs. All the classifiers showed a drastic increase in accuracy, with the top 10 to top 15 features selected irrespective of the feature selection technique. SVM showed an accuracy of 70% with features selected via the Pearson correlation and more than 70% with features selected via the Chi-square method. However, NB outperforms the other methods, with an accuracy of approximately 75%, when the top 15 features selected by either method are used. The performance of classifiers deteriorated when the number of features was increased to 20. There was a sharp decrease in NB and LR, but the degradation of the SVM performance was not smoother than that of the other methods.

The critical analysis of the experimental results with various numbers of features and techniques helps to conclude that features selected through the simple techniques CHI and Corr helped to increase the accuracy of the classifier compared with the features selected via the ANOVA technique. The Pearson correlation coefficient and Chi2 methods determine relationships such as the dependence or independence of the target on a given feature; hence, both methods can yield higher accuracy with few impactful features and show the same pattern of accuracy with respect to the number of features as depicted in Figs. 4 and 5. However, ANOVA attempts to determine the variance between the target values and feature values and determine whether both the feature and target belong to the same statistically coherent dataset. Hence, ANOVA does not establish any relationship between the feature and target, which is the reason behind the low accuracy of the classification for vectors prepared with features selected via ANOVA. Furthermore, the evaluation suggests that all the simple ML classifiers achieved the highest accuracy when the top 15 features selected by any feature selection technique were used.

Thus, the top 15 features selected via CHI and Corr were considered to create a new dataset of features from the IoTID20 dataset as shown in Table 3. All the duplicate features were considered once, and 26 features were selected, and the new dataset is referred to as the Corchi dataset in this study. The Corchi dataset was applied to the ML classifiers DT, RF and AD. The configuration matrices of each classifier are given in Fig. 7. The Corchi database helps each of the classifiers achieve accuracies in the range of 98-99%. DT yielded the greatest increase in accuracy, i.e., approximately 29%, and achieved the highest accuracy of 99.8%, whereas RF and AD yielded accuracies of approximately 98%. These experiments were repeated 5 times, and the average accuracy was reported. The configuration matrices of the experiments are given in Fig. 7.

The validity of the experiments and proposed techniques was verified by repeating the experiments with the NSLKDD dataset. The results of each classifier and feature selection technique are given in Fig. 8. Interestingly, CHI and Corr helped increase the accuracy of the classifiers. Each classifier achieved the highest accuracy for approximately 15 top features. The accuracy of each classifier was much lower with the top 5 and top 20 features. The experiments on the NSLKDD dataset further justifies the superiority of the proposed technique. The proposed feature selection technique helped to create the new dataset with 18 features as summarized in Table 4. The DT classifier achieved the highest accuracy of 99.9% when the Corchi dataset created from the NSLKDD dataset was used. DT outperforms RF and AD, with accuracies of 99% and 98.2%, respectively. The configuration matrices of DT, RF and AD with the Corchi feature are given in Fig. 9. The results of validation and verification were found to be statistically significant as each had p value of less than 0.01 (0.0015 for IoTID20 and 0.0089 for NSLKDD) using the t-test.

Comparison with the Latest Published Research.

The work was compared against the most recent research on the issue, using the same dataset. Table 5 shows the different performance metrics reported in the research and previous investigations. The outcomes of the NSLKDD and IoTID20 datasets are compared with the most recent research findings in Fig. 10. The proposed study contrasts with prior studies that utilized the IoTDID20 dataset, whereby Albulayhi et al.49 and De Souza et al. established an accuracy of more than 99%. Song et al. and Qaddoura et al.46 obtained accuracies ranging from 97 to 98%. The suggested research additionally indicated an accuracy range of 96%, which is slightly lower but similar to investigations that delivered high-performance IDS systems. Notably, however, these investigations employed feature selection techniques as well as deep learning approaches. The feature learning algorithms and deep learning solutions are complicated solutions that impose unnecessary strain on the IDS system and demand considerable processing capacity. The proposed research proposes a lightweight and compact IDS solution based on basic but simple and robust statistics-based feature selection in machine learning techniques and tailored hyperparameters. Thus, the work demonstrates comparable outcomes with the most recent studies without introducing any complexity and with reduced computational power usage as demonstrated in Table 5.

Table 6 compares the findings of the planned study using the NSL KDD dataset to the results of other recently published studies with the NSLKDD dataset. Figure 11 depicts high accuracy as well as other performance criteria, including precision, F1 score, and recall. The study indicated that the performance criteria ranged from greater than 99%. The performance of IDSs ranks among the top two IDS systems published since 2019. The study achieves 99% accuracy and precision without the use of heavy classifiers or complicated feature selection techniques. The suggested IDS is unique since it uses simple and robust classifiers with good performance.

The analysis of the Table 7compares the computation time of latest published studies with the proposed IDS with IoTID20 and NSLKDD dataset. The proposed IDS system consumed lesser timing while compared to latest published studies. The computation time signifies the computational complexity and utilization of computational resources57.

Conclusion and future work

This research compared shallow learning techniques, ensemble learning techniques, and shallow learning techniques by statistically selecting the feature. This study proposed a new feature selection technique using the basic statistical techniques Chi2 and the Pearson coefficient to extract the top 5, 10, and 20 features. The vectors that use these features were applied to traditional machine learning algorithms. The study revealed that 15 top features are necessary to emulate the accuracy or better accuracy for the IoTID20 dataset. The new feature selection technique helps increase the accuracy and other performance parameters of low-performing classifiers. The proposed technique was further tested with the NSLKDD dataset. The proposed feature selection technique yielded the best results, with 99% accuracy for both datasets, when the DT classifier was used. The IDS using the Corchi technique with the DT classifier performed competitively with recently published studies. The study demonstrated the use of simple yet robust statistical techniques to create effective and efficient feature selection techniques for IDSs.

In the future, this work can be extended by applying a transfer learning (TL) approach and using domain-specific pre-trained models. TL leverages already extracted features to enhance the intrusion detection and addresses the limitations of small and imbalanced datasets. Furthermore, the exploitation of deep neural networks (DNN) may reduce the intrusion detection time. While DNNs typically have more parameters, increasing the model’s computation time, the goal is to manage a trade-off between achieving high detection accuracy and maintaining low computational costs. This balance will ensure the feasibility of applying intrusion detection models in real-time scenarios. It is crucial to optimize DNN structures for real-time streaming data processing. Effective strategies to reduce detection latency include model reduction, quantization, and parallelization with GPUs or TPUs. When combined, these techniques accelerate the detection process, allowing for rapid responses to emerging threats and enhancing overall cybersecurity resilience.

Data availability

The dataset used in this study is publicly available at https://ieee-dataport.org/documents/nsl-kdd-0 and https://ieee-dataport.org/open-access/iot-network-intrusion-dataset.

References

Kumar, A. et al. Mohit Bajaj, Ateeq Ur Rehman, Elsayed Tag-Eldin A Novel Decentralized Blockchain Architecture for the Preservation of Privacy and Data Security against Cyberattacks in Healthc. Sens. 2022, 22, Issue 15, 5921. pp: 1–14. https://doi.org/10.3390/s22155921

Akashdeep Bhardwaj, S. et al. Unmasking vulnerabilities by a pioneering approach to securing smart IoT cameras through threat surface analysis and dynamic metrics. Egypt. Inf. J. 27, 100513. https://doi.org/10.1016/j.eij.2024.100513 (2024).

Muhammad Sajid, K. R., Malik, A., Almogren, T. S., Malik, A. H. & Khan Jawad Tanveer, Ateeq Ur Rehman enhancing intrusion detection: a hybrid machine and deep learning approach. J. Cloud Comput. 13, 123. https://doi.org/10.1186/s13677-024-00685-x (2024).

Lee, I. Internet of things (IoT) cybersecurity: literature review and IoT cyber risk management. Future Internet. 12 (9), 157 (2020).

Ghadi, Y. Y. et al. Integration of Federated learning with IoT for smart cities applications, challenges, and solutions. PeerJ Comput. Sci. 9, e1657. https://doi.org/10.7717/peerj-cs.1657 (2023).

Wang, G. P. & Yang, J. X. SKICA: a feature extraction algorithm based on supervised ICA with kernel for anomaly detection. J. Intell. Fuzzy Syst. 36 (1), 761–773 (2019).

Saheed, Y. K., Abdulganiyu, O. H. & Tchakoucht, T. A. Modified genetic algorithm and fine-tuned long short-term memory network for intrusion detection in the internet of things networks with edge capabilities. Appl. Soft Comput. 155, 111434. https://doi.org/10.1016/j.asoc.2024.111434 (2024).

Ramadan, R. A. & Yadav, K. A Novel Hybrid Intrusion Detection System (IDS) for the Detection of Internet of Things (IoT) Network Attacks (Annals of Emerging Technologies in Computing (AETiC), 2020). Print ISSN, 2516 – 0281.

Verma, A. & Ranga, V. Machine learning based intrusion detection systems for IoT applications. Wireless Pers. Commun. 111, 2287–2310 (2020).

Mazhar, M. S. et al. Forensic Analysis on Internet of Things (IoT) Device using Machine to Machine (M2M) Framework. (2022). https://doi.org/10.3390/electronics11071126

Mukherjee, I., Sahu, N. K. & Sahana, S. K. Simulation and modeling for anomaly detection in IoT network using machine learning. Int. J. Wireless Inf. Networks. 30 (2), 173–189 (2023).

Zhou, M. et al. Robust rgb-t tracking via adaptive modality weight correlation filters and cross-modality learning. ACM Trans. Multimedia Comput. Commun. Appl. 20 (4), 1–20 (2023).

Idrissi, I., Azizi, M. & Moussaoui, O. IoT security with Deep Learning-based Intrusion Detection Systems: A systematic literature review. In 2020 Fourth international conference on intelligent computing in data sciences (ICDS) (pp. 1–10). IEEE. (2020), October.

Kaushik, S. et al. Efficient, lightweight cyber intrusion detection system for IoT ecosystems using mi2g algorithm. Computers 11 (10), 142 (2022).

Altulaihan, E., Almaiah, M. A. & Aljughaiman, A. Anomaly Detection IDS for detecting DoS attacks in IoT Networks based on machine learning algorithms. Sensors 24 (2), 713 (2024).

Nawaal, B., Haider, U., Khan, I. U. & Fayaz, M. Signature-based intrusion detection system for IoT. In Cyber Security for Next-Generation Computing Technologies (141–158). CRC. (2024).

Baccari, S., Hadded, M., Ghazzai, H., Touati, H. & Elhadef, M. Anomaly Detection in Connected and Autonomous Vehicles: A Survey, Analysis, and Research Challenges (IEEE Access, 2024).

Ni, C. & Li, S. C. Machine learning enabled Industrial IoT Security: challenges, trends and solutions. J. Industrial Inform. Integr., 100549. (2024).

Khatib, A., Hamlich, M. & Hamad, D. Machine learning based intrusion detection for cyber-security in IoT networks. In E3S Web of Conferences (Vol. 297, p. 01057). EDP Sciences. (2021).

Nanjappan, M., Pradeep, K., Natesan, G., Samydurai, A. & Premalatha, G. DeepLG SecNet: utilizing deep LSTM and GRU with secure network for enhanced intrusion detection in IoT environments. Cluster Comput., 1–13. (2024).

Saheed, Y. K., Usman, A. A., Sukat, F. D. & Abdulrahman, M. A novel hybrid autoencoder and modified particle swarm optimization feature selection for intrusion detection in the internet of things network. Front. Comput. Sci. 5, 997159. https://doi.org/10.3389/fcomp.2023.997159 (2023).

Bhavani, T. T., Rao, M. K. & Reddy, A. M. Network intrusion detection system using random forest and decision tree machine learning techniques. In First international conference on sustainable technologies for computational intelligence (pp. 637–643). Springer, Singapore. (2020).

Vinayakumar, R., Poornachandran, P. & Soman, K. P. Scalable framework for cyber threat situational awareness based on domain name systems data analysis. In Big data in Engineering Applications (113–142). Springer, Singapore (2018).

Chua, T. H. & Salam, I. Evaluation of Machine Learning algorithms in Network-based intrusion detection using progressive dataset. Symmetry 15 (6), 1251 (2023).

Chun, C. J. & Jeong, C. Data-Driven Resource Allocation for Deep Learning in IoT Networks (IEEE Internet of Things Journal, 2023).

Babayigit, B. & Abubaker, M. Toward a generalized hybrid deep learning model with optimized hyperparameters for malicious traffic detection in the Industrial Internet of things. Eng. Appl. Artif. Intell. 128, 107515 (2024).

Rabhi, S., Abbes, T. & Zarai, F. IoT routing attacks detection using machine learning algorithms. Wireless Pers. Commun. 128 (3), 1839–1857 (2023).

Du, J., Yang, K., Hu, Y. & Jiang, L. Nids-cnnlstm: network intrusion detection classification model based on deep learning. IEEE Access. 11, 24808–24821 (2023).

Chakrawarti, A. & Shrivastava, S. S. Intrusion detection system using long short-term memory and fully connected neural network on Kddcup99 and NSL-KDD dataset. Int. J. Intell. Syst. Appl. Eng. 11 (9 s), 621–635 (2023).

Wang, D., Nie, M. & Chen, D. BAE: Anomaly Detection Algorithm based on clustering and autoencoder. Mathematics 11 (15), 3398 (2023).

Samunnisa, K., Kumar, G. S. V. & Madhavi, K. Intrusion Detection System in Distributed Cloud Computing: Hybrid Clustering and Classification Methods25100612 (Sensors, 2023).

Satyanarayana, G. & Chatrapathi, K. S. Improving intrusion detection performance with genetic algorithm-based feature extraction and ensemble machine learning methods. Int. J. Intell. Syst. Appl. Eng. 11 (4), 100–112 (2023).

Jadhav, K. P., Arjariya, T. & Gangwar, M. Intrusion detection system using recurrent neural network-long short-term memory. Int. J. Intell. Syst. Appl. Eng. 11 (5 s), 563–573 (2023).

Alqarni, A. A. Toward support-vector machine-based ant colony optimization algorithms for intrusion detection. Soft. Comput. 27 (10), 6297–6305 (2023).

Sharma, B., Sharma, L., Lal, C. & Roy, S. Anomaly based network intrusion detection for IoT attacks using deep learning technique. Comput. Electr. Eng. 107, 108626 (2023).

Songma, S., Sathuphan, T. & Pamutha, T. Optimizing intrusion detection systems in three phases on the CSE-CIC-IDS-2018 dataset. Computers 12 (12), 245 (2023).

Najafi Mohsenabad, H. & Tut, M. A. Optimizing cybersecurity attack detection in computer networks: a comparative analysis of Bio-inspired optimization algorithms using the CSE-CIC-IDS 2018 dataset. Appl. Sci. 14 (3), 1044 (2024).

Alzughaibi, S., Khediri, E. & S A cloud intrusion detection systems based on DNN using backpropagation and PSO on the CSE-CIC-IDS2018 dataset. Appl. Sci. 13 (4), 2276 (2023).

Qazi, E. U. H., Faheem, M. H. & Zia, T. HDLNIDS: Hybrid Deep-Learning-Based Network Intrusion Detection System. Appl. Sci. 13 (8), 4921 (2023).

Kshirsagar, D. & Kumar, S. Toward an intrusion detection system for detecting web attacks based on an ensemble of filter feature selection techniques. Cyber-Physical Syst. 9 (3), 244–259 (2023).

Xu, H., Sun, L., Fan, G., Li, W. & Kuang, G. A Hierarchical Intrusion Detection Model Combining Multiple Deep Learning Models with Attention Mechanism (IEEE Access, 2023).

Li, S. et al. HDA-IDS: a hybrid DoS attacks intrusion detection system for IoT by using semisupervised CL-GAN. Expert Syst. Appl. 238, 122198 (2024).

Liu, H. & Lang, B. Machine learning and deep learning methods for intrusion detection systems: a survey. Appl. Sci. 9 (20), 4396 (2019).

Ullah, I. & Mahmoud, Q. H. A Scheme for Generating a Dataset for Anomalous Activity Detection in IoT Networks (Springer International Publishing, 2020).

Hussein, A. Y., Falcarin, P. & Sadiq, A. T. Enhancement performance of random forest algorithm via one hot encoding for IoT IDS. Period Eng. Nat. Sci. 9, 579–591 (2021).

Albulayhi, K. et al. IoT intrusion detection using machine learning with a novel high performing feature selection method. Appl. Sci. 2022 (12(10)), 5015 (2022).

Qaddoura, R., Faris, H. & Aljarah, I. An efficient evolutionary algorithm with a nearest neighbor search technique for clustering analysis. J. Ambient Intell. Humaniz. Comput. 12, 8387–8412 (2021).

Qaddoura, R., Al-Zoubi, A. M., Almomani, I. & Faris, H. A multistage classification approach for iot intrusion detection based on clustering with oversampling. Appl. Sci. 11 (7), 3022 (2021).

Song, Y., Hyun, S. & Cheong, Y. G. Analysis of autoencoders for network intrusion detection. Sensors 21 (13), 4294 (2021).

De Souza, C. A., Westphall, C. B. & Machado, R. B. Two-step ensemble approach for intrusion detection and identification in IoT and fog computing environments. Comput. Electr. Eng. 98, 107694 (2022).

Kumar Samriya, J., Kumar, S., Kumar, M., Wu, H. & Singh Gill, S. Machine Learning-Based Network Intrusion Detection Optimization for Cloud Computing Environments, in IEEE Transactions on Consumer Electronics, vol. 70, no. 4, pp. 7449–7460, Nov. (2024). https://doi.org/10.1109/TCE.2024.3458810

Chu, W. L., Lin, C. J. & Chang, K. N. Detection and classification of advanced persistent threats and attacks using the support vector machine. Appl. Sci. 9 (21), 4579 (2019).

Carrera, F. et al. Combining unsupervised approaches for near real-time network traffic anomaly detection. Appl. Sci. 12 (3), 1759 (2022).

Cao, B., Li, C., Song, Y., Qin, Y. & Chen, C. Network intrusion detection model based on CNN and GRU. Appl. Sci. 12 (9), 4184 (2022).

Fu, Y., Du, Y., Cao, Z., Li, Q. & Xiang, W. A deep learning model for network intrusion detection with imbalanced data. Electronics 11 (6), 898 (2022).

Imrana, Y. et al. χ 2-bidlstm: a feature driven intrusion detection system based on χ 2 statistical model and bidirectional lstm. Sensors 22 (5), 2018 (2022).

Kareem, S. S., Mostafa, R. R., Hashim, F. A. & El-Bakry, H. M. An effective feature selection model using hybrid metaheuristic algorithms for iot intrusion detection. Sensors 22 (4), 1396 (2022).

Soleymanzadeh, R., Aljasim, M., Qadeer, M. W. & Kashef, R. Cyberattack and fraud detection using ensemble stacking. AI 3 (1), 22–36 (2022).

Heidari, A., Navimipour, N. J. & Unal, M. A secure intrusion detection platform using blockchain and radial basis function neural networks for internet of drones. IEEE Internet Things J. 10 (10), 8445–8454 (2023).

Heidari, A., Shishehlou, H., Darbandi, M., Navimipour, N. J. & Yalcin, S. A reliable method for data aggregation on the industrial internet of things using a hybrid optimization algorithm and density correlation degree. Cluster Comput., 1–19. (2024).

Acknowledgements

This work was supported by King Saud University, Riyadh, Saudi Arabia, through Researchers Supporting Project number RSP2025R498.

Author information

Authors and Affiliations

Contributions

Sunil Kaushik: Conceptualization; Data curation; Formal analysis; Methodology; Writing - original draft; Software.Akashdeep Bhardwaj: Investigation; Methodology; Writing - original draft; Writing - review & editing.Ahmad Almogren: Project administration; Investigation; Methodology; Writing - review & editing.Salil bharany: Validation; Investigation; Writing - review & editing.Ayman Altameem: Writing - review & editing; Methodology; Conceptualization.Ateeq Ur Rehman: Writing - review & editing; Software; Resources; Methodology.Seada Hussen: Project administration; Writing - review & editing; Methodology; Conceptualization.Habib Hamam: Project administration; Writing - review & editing; Data curation; Formal analysis.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kaushik, S., Bhardwaj, A., Almogren, A. et al. Robust machine learning based Intrusion detection system using simple statistical techniques in feature selection. Sci Rep 15, 3970 (2025). https://doi.org/10.1038/s41598-025-88286-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-88286-9

Keywords

This article is cited by

-

Energy-efficient deep learning-based intrusion detection system for edge computing: a novel DNN-KDQ model

Journal of Cloud Computing (2025)