Abstract

The Parrot Optimizer (PO) has recently emerged as a powerful algorithm for single-objective optimization, known for its strong global search capabilities. This study extends PO into the Multi-Objective Parrot Optimizer (MOPO), tailored for multi-objective optimization (MOO) problems. MOPO retains the PO search mechanism, which is inspired by the behavior of Pyrrhura Molinae parrots, and integrates an external archive to preserve Pareto optimal solutions. Its performance is validated on the Congress on Evolutionary Computation 2020 (CEC’2020) multi-objective benchmark suite. Additionally, extensive testing on four constrained engineering design challenges and eight popular constrained and unconstrained test cases indicates MOPO’s superiority. Moreover, the real-world multi-objective optimization of helical coil springs for automotive applications is conducted to demonstrate the reliability of the proposed MOPO in solving practical problems. Comparative analysis was performed with seven recently published, state-of-the-art algorithms chosen for their proven effectiveness and representation of the current research landscape: Improved Multi-Objective Manta-Ray Foraging Optimization (IMOMRFO), Multi-Objective Gorilla Troops Optimizer (MOGTO), Multi-Objective Grey Wolf Optimizer (MOGWO), Multi-Objective Whale Optimization Algorithm (MOWOA), Multi-Objective Slime Mold Algorithm (MOSMA), Multi-Objective Particle Swarm Optimization (MOPSO), and Non-Dominated Sorting Genetic Algorithm II (NSGA-II). The results indicate that MOPO consistently outperforms these algorithms across several key metrics, including Pareto Set Proximity (PSP), Inverted Generational Distance in Decision Space (IGDX), Hypervolume (HV), Generational Distance (GD), spacing, and maximum spread, confirming its potential as a robust method for addressing complex MOO problems.

Introduction

Design problems are fundamentally optimization problems requiring appropriate optimization methods and algorithms. The increasing complexity of modern design challenges has rendered traditional optimization methods, rooted in mathematical concepts, insufficient for providing timely and effective solutions. One such conventional method is the gradient-based algorithm, which tackles optimization problems using the gradient of the objective function1. In recent decades, addressing the limitations of standard optimization algorithms and developing new, efficient optimization algorithms has garnered significant attention. Technological advancements have spurred the creation of new optimization algorithms that offer high efficiency, accuracy, and speed in handling complex optimization problems2. This research has been driven primarily by challenges such as non-convexity, non-smoothness, and the presence of local optima in the search space.

Due to these constraints, academics and experts have been inspired to develop novel metaheuristic algorithms to address a range of optimization problems3. As information technologies evolve, numerous optimization problems emerge across diverse fields, such as engineering, bioinformatics, and geophysics4,5. Many optimization problems are classified as NP-hard, meaning that unless \(NP = P\), they cannot be solved in polynomial time6. Consequently, exact mathematical methods are feasible only for small-scale instances.

Researchers have thus turned to approximation methods to find workable solutions within a reasonable time. These techniques are classified into heuristics and metaheuristics7. Heuristics are problem-specific, working well for particular problems but often failing in different contexts. In contrast, metaheuristics are higher-level, general algorithmic frameworks that act as black-box optimizers and can be applied to almost any optimization problem8. Recently, many metaheuristic (MH) algorithms have been successfully applied to tackle complex problems9,10. The strength of these algorithms is their capacity to produce solutions of reasonable quality for optimization problems of widely varying size and complexity. Numerous optimization problems, including continuous, discrete, single-objective, and multi-objective ones, have been solved using MH algorithms11.

MH algorithms leverage robust stochastic techniques and have gained significant recognition for their adaptability and performance in addressing complex challenges across various domains. Applications include global optimization problems12, production scheduling13, feature selection14,15, flex-route transit services16, wind energy conversion systems17, image segmentation18, and deep learning-based optimization10,19. A prominent category of metaheuristics is swarm intelligence, inspired by the collective behavior of animals, insects, and birds. Notable examples include liver cancer algorithm20, parrot optimizer21, slime mould algorithm22, Potter Optimization Algorithm (POA)23, Carpet Weaver Optimization (CWO)24, Fossa Optimization Algorithm (FOA)25, Addax Optimization Algorithm (AOA)26, and Dollmaker Optimization Algorithm (DOA)27. Other recently developed algorithms include the Sculptor Optimization Algorithm (SOA)28, Sales Training Based Optimization (STBO)29, Orangutan Optimization Algorithm (OOA)30, Tailor Optimization Algorithm (TOA)31, and Spider-Tailed Horned Viper Optimization (STHVO)32.

Real-world problems frequently have several, sometimes conflicting, objectives. Consequently, single-objective formulations are less appropriate for these situations than multi-objective optimization problems (MOPs). To solve MOPs, each objective is usually treated as a single-objective problem, and the objectives are solved in order of decreasing significance33. Alternatively, to increase overall performance, the single-objective solvers can exchange information between iterations. In MOO, two or more competing objectives are optimized concurrently, subject to constraints, to construct a Pareto front of optimal trade-off solutions. MOO algorithms are used to optimize MOPs in numerous fields34,35.

MOO techniques can be grouped into three general categories: a priori, a posteriori, and interactive techniques36. A priori approaches rely on prior knowledge of the problem and the objectives, supplied before the actual optimization process starts, to find Pareto optimal solutions. One popular technique in these approaches is to turn a multi-objective problem (MOP) into a single-objective problem by defining a single objective function as the weighted sum of the normalized costs for each objective. Although such approaches are simple, the desired weights must be established in consultation with the decision-maker37.
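The weighted-sum scalarization described above can be illustrated with a minimal Python sketch. The function name `weighted_sum`, the min-max normalization by per-objective `ideal` and `nadir` values, and the toy objectives are assumptions made for illustration only; they are not taken from the cited works.

```python
from typing import Callable, List, Sequence

Objective = Callable[[Sequence[float]], float]


def weighted_sum(objectives: List[Objective], weights: List[float],
                 ideal: List[float], nadir: List[float]) -> Objective:
    """Combine several minimized objectives into one scalar cost.

    Each objective is min-max normalized with its assumed best (ideal)
    and worst (nadir) value before the weights are applied.
    """
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights are expected to sum to 1")

    def scalar_cost(x: Sequence[float]) -> float:
        total = 0.0
        for f, w, lo, hi in zip(objectives, weights, ideal, nadir):
            normalized = (f(x) - lo) / (hi - lo)  # maps [lo, hi] to [0, 1]
            total += w * normalized
        return total

    return scalar_cost


# Hypothetical bi-objective problem on one variable x[0] in [0, 2].
f1 = lambda x: x[0] ** 2
f2 = lambda x: (x[0] - 2.0) ** 2
cost = weighted_sum([f1, f2], weights=[0.5, 0.5],
                    ideal=[0.0, 0.0], nadir=[4.0, 4.0])
```

Each choice of weights yields a single compromise solution, which is why the weights have to be agreed with the decision-maker before the run.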

A posteriori approaches use the solutions found throughout the optimization process to determine Pareto optimal solutions. By keeping the multi-objective formulation, these approaches eliminate the need to combine the objectives or establish a set of weights. A posteriori techniques encompass a variety of metaheuristic algorithms, such as simulated annealing, genetic algorithms, particle swarm optimization, NSGA-II, SPEA2, MOEA/D, and NSGA-III. These algorithms can identify Pareto optimal solutions in a single run, after which decision-making takes place38.

Human intervention occurs during the optimization process when using interactive approaches. By using these techniques, the decision-maker can communicate with the optimization method and offer input on the answers the algorithm has produced. Interactive particle swarm optimization, interactive evolutionary strategies, and interactive genetic algorithms are examples of interactive techniques39.

Multi-objective evolutionary algorithms (MOEAs) have been gaining popularity and are widely adopted for addressing MOPs because of their efficiency in producing multiple Pareto optimal solutions in a single execution. This effectiveness results from using a population-based search method with few distinct dominance relationships. When used to simultaneously construct a robust approximation to the Pareto front (PF) and Pareto set (PS), MOEAs are very effective as an a posteriori approach40. Most existing MOEAs can be organized into four classes depending on their preference techniques: domination-based, indicator-based, decomposition-based, and hybrid MOEAs. MOEAs typically evaluate the function values of all newly generated solutions to assess the quality of the selection process. As such, before these algorithms reach convergence, they must frequently undergo multiple function evaluations41.

However, traditional optimization strategies often fall short of delivering satisfactory long-term results. These conventional techniques face several limitations, including sensitivity to initial estimates, reliance on the accuracy of differential equation solvers, and a high probability of getting stuck in the local region42. Various advanced algorithms have been developed to address these complex optimization issues efficiently.

The PO, developed by T. Junbo Lian et al.43, is a strong algorithm that has been applied successfully to various engineering problems, manufacturing methods, and scientific models. Building on the strengths of PO, we were inspired to create a multi-objective version, termed MOPO, specifically designed to tackle MOO problems. The effectiveness of MOPO is rigorously evaluated on the CEC’2020 benchmarks. MOPO is also compared against seven well-established optimization algorithms: MOPSO44, NSGA-II45, MOGWO46, MOWOA47, MOSMA48, IMOMRFO49, and MOGTO50. This comparison pool was selected because it includes established reference algorithms in the MOO domain, such as MOPSO and NSGA-II, as well as very recent and strong algorithms, such as MOSMA, IMOMRFO, and MOGTO. Most importantly, these algorithms employ the same Pareto-based archiving mechanisms, using non-dominated ranking and crowding distance, that are incorporated in the proposed MOPO, which allows a fair comparison. Generally, comparisons should be made with state-of-the-art, recent, and strong algorithms. The proposed MOPO retains the best candidates among the Pareto optimal solutions. The individuals in the swarm update their positions in a manner akin to the original PO, with an additional crowding distance (CD) operator to enhance the diversity and coverage of the best solutions. Furthermore, the parrots’ host positions are drawn from the archive of non-dominated solutions (NDSs), supporting thorough exploration and exploitation throughout the optimization procedure.

To summarize, this paper has made the following contributions:

- The notable advantages of the PO inspired the development of its multi-objective version, MOPO, and its performance is benchmarked against seven cutting-edge MOO algorithms.
- The CEC’2020 benchmarks are used to assess the efficacy of MOPO.
- To demonstrate the robustness of MOPO, the PSP, IGDX, and HV indicators are employed.
- MOPO’s performance is assessed using four specific engineering design challenges and eight popular constrained and unconstrained test cases. Evaluation metrics include GD, spacing, and maximum spread.
- Moreover, the real-world MOO of helical coil springs for automotive applications is addressed to depict the reliability of the proposed MOPO to solve real-world challenges.
- The Wilcoxon test, Friedman test, and various performance metrics are utilized to estimate the significance of MOPO compared to seven well-established optimization algorithms.

The remainder of the paper is arranged as follows. “Related Work” Section includes an overview of the previous studies. In “Basic Concepts of Multi-Objective Optimization Problems (MOPs)” Section, the basic ideas of MOP are explained. “The Parrot Optimizer (PO)” Section contains the original PO. In “The Proposed Multi-Objective Parrot Optimizer (MOPO)” Section, the suggested MOPO is presented. “Experimental Results Analysis and Discussion” Section analyzes the experimental results. “Limitations and Advantages of the Proposed MOPO” Section presents the advantages and limitations of the proposed method. “Conclusion and Future Directions” Section presents the conclusions along with some suggestions for further investigation.

Related work

MOO is a crucial field of study in optimization, addressing problems where several objective functions must be optimized simultaneously. Unlike single-objective optimization, MOO produces a set of Pareto-optimal solutions, that is, a range of equally viable alternatives51. These solutions represent trade-offs among the different objectives, making MOO particularly relevant in real-world applications where multiple criteria must be considered. The field of MOO has evolved significantly since its inception. Early approaches primarily aggregated multiple objectives into a single composite objective function. However, these methods often fail to capture the complexity of trade-offs between different objectives. The introduction of Pareto-based methods marked a significant milestone, allowing for a more nuanced approach to handling multiple objectives. Several algorithms have been developed to address MOO problems, each with its advantages and limitations:

Genetic Algorithms (GAs): Among the earliest and most widely used approaches are the Non-dominated Sorting Genetic Algorithm (NSGA) and its enhanced variant NSGA-II45. NSGA-II is renowned for its efficient non-dominated sorting procedure and its ability to maintain diverse solutions. The method first employs a non-dominated sorting mechanism to label Pareto sets, starting from the first non-dominated front. The selection procedure is then guided by the CD metric assigned to each solution and applied through a crowded-comparison operator. Selection favors elitist solutions with lower domination ranks and, among solutions of equal rank, those in less crowded regions, hence maintaining diversity. To keep the population size equal to the initial size, the selection of non-dominated individuals is repeated until the population is filled. These steps continue until a predefined termination condition is met.
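As an illustration of the CD metric mentioned above, the following is a minimal Python sketch of the crowding-distance computation for one non-dominated front, following the commonly described NSGA-II procedure. The function name `crowding_distance` and the tuple-based representation of cost vectors are assumptions for this sketch, not details taken from the paper.

```python
from typing import List, Sequence


def crowding_distance(front: List[Sequence[float]]) -> List[float]:
    """Crowding distance of every cost vector in a single front.

    Boundary solutions of each objective receive an infinite distance;
    interior solutions accumulate the normalized gap between their two
    neighbours along each objective.
    """
    n = len(front)
    if n == 0:
        return []
    distance = [0.0] * n
    for k in range(len(front[0])):
        # Indices of the front sorted by the k-th objective value.
        order = sorted(range(n), key=lambda i: front[i][k])
        f_min, f_max = front[order[0]][k], front[order[-1]][k]
        distance[order[0]] = distance[order[-1]] = float("inf")
        if f_max == f_min:
            continue  # objective k cannot separate the solutions
        for pos in range(1, n - 1):
            gap = front[order[pos + 1]][k] - front[order[pos - 1]][k]
            distance[order[pos]] += gap / (f_max - f_min)
    return distance
```

A larger value means the solution lies in a less crowded part of the front, so a crowded-comparison operator would prefer it among solutions of equal rank.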

Particle Swarm Optimization (PSO): MOPSO adapts the principles of particle swarm optimization to handle multiple objectives. MOPSO has shown promising results in maintaining diversity and convergence52. Coello and Lechuga53 introduced the MOPSO algorithm, demonstrating a more time-efficient performance than NSGA-II. MOPSO uses a fixed-capacity repository to hold NDS that can be used in later stages. The algorithm partitions the search space into equal-sized hypercubes to find less-explored regions within the objective function’s search space. This methodology guarantees a wide distribution of solutions throughout the PF and contributes to preserving solution variety. Consequently, this gives designers many options rather than concentrating solutions in specific regions.
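The hypercube idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the grid is laid over the objective values of the archive members, each objective range is split into the same number of divisions, and the helper names `grid_index` and `hypercube_occupancy` are hypothetical.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def grid_index(point: Sequence[float], lower: Sequence[float],
               upper: Sequence[float], divisions: int) -> Tuple[int, ...]:
    """Index of the hypercube that contains one cost vector."""
    index = []
    for value, lo, hi in zip(point, lower, upper):
        if hi == lo:
            index.append(0)  # degenerate range: a single cell
            continue
        cell = int((value - lo) / (hi - lo) * divisions)
        index.append(min(max(cell, 0), divisions - 1))  # clamp the upper edge
    return tuple(index)


def hypercube_occupancy(archive: List[Sequence[float]],
                        divisions: int) -> Counter:
    """Count how many archive members fall into each hypercube."""
    m = len(archive[0])
    lower = [min(p[k] for p in archive) for k in range(m)]
    upper = [max(p[k] for p in archive) for k in range(m)]
    return Counter(grid_index(p, lower, upper, divisions) for p in archive)
```

Cells with a low count mark sparsely covered regions, which is the information a repository-based method can use when choosing leaders or deciding which members to discard once the repository is full.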

Differential Evolution (DE): Multi-objective Differential Evolution (MODE) is a widely recognized technique celebrated for its robustness and simplicity. MODE algorithms have effectively tackled various complex optimization problems54. In a comprehensive study by Gunantara55, the application of evolutionary MOO algorithms to engineering problems is thoroughly examined. Various MOO techniques have been created to optimize MOPs, including the multi-objective NSGA-III56, MODE57, MOGWO46, Dragonfly algorithm58, MO self-adaptive multi-population based Jaya algorithm (SAMP-Jaya)59, and MO moth flame optimization60. These studies highlight the efficacy of meta-heuristic algorithms in optimizing MOPs and in approximating the true Pareto optimal front for various complex problems.

Numerous comparative studies have evaluated the performance of different MOO algorithms across various benchmark problems. These studies provide valuable insight into the strengths and shortcomings of each approach. For instance, a decomposition-based MOO algorithm named MOCGO/D was suggested by Yacoubi et al.41. This algorithm divides the MOP into single-objective sub-problems during decomposition using a Normalized Boundary Intersection (NBI) technique. Furthermore, the Multi-Objective Exponential Distribution Optimizer (MOEDO), which integrates elite non-dominated sorting and CD approaches, was proposed by Kalita et al.11. MOEDO incorporates an information feedback mechanism (IFM) to balance exploration and exploitation. This enhances convergence and lessens the possibility of stalling in local optima, a common drawback in conventional optimization techniques. Zitzler et al.61 introduced the strength Pareto evolutionary algorithm (SPEA). Knowles and Corne62 created the Pareto archived evolution strategy (PAES-2). Tejani et al.63 presented an enhanced multi-objective symbiotic organisms search (MOSOS) designed to solve engineering optimization problems.

Furthermore, a new multi-objective version of the Geometric Mean Optimizer (GMO), called MOGMO, was presented by Pandya et al.64. MOGMO adopts a robust offspring production and selection process, combining the standard GMO with an elite, non-dominated sorting mechanism to locate Pareto optimal solutions successfully. A grid-based bacterial foraging optimization (BFO) method with multiple resolutions for MOPs, called MRBFO, was proposed by Junzhong Ji et al.65. MRBFO redesigns four optimization mechanisms: chemotaxis, conjugation, reproduction, and elimination and dispersal. Moreover, Houssein et al.66 introduced MO-self-EO, a multi-objective version of the self-adaptive Equilibrium Optimizer (self-EO). The MO-self-EO was validated on two categories of multi-objective problems: the CEC’2020 MO routines and MO engineering design challenges. The algorithm employs a selection strategy that combines CD with an IFM to enhance convergence and solution diversity. Meanwhile, Dhiman et al.67 suggested the Multi-Objective Spotted Hyena Optimizer (MOSHO), featuring a fixed-size archive to manage NDS. Additionally, Zarbakht et al.68 presented the Multi-Layer Ant Colony Optimization (MLACO) algorithm for solving multi-objective community detection problems. Using a parallel search method, MLACO improves convergence and demonstrates computational efficiency.

Moreover, Seyedali et al.9 suggested a multi-objective version of the Ant Lion Optimizer (ALO) to tackle engineering design problems. The Multi-Objective Seagull Optimization Algorithm (MOSOA) was introduced by Dhiman Gaurav et al.69 to solve various optimization problems. More recently, Shankar et al.70 created an enhanced multi-objective method to improve solution spread and convergence in engineering design challenges. Mozaffari et al.71 presented the Synchronous Self-Learning Pareto Strategy (SSLPS), a multi-objective algorithm for vector optimization. Also, Kumar et al.72 suggested the Multi-Objective Thermal Exchange Optimization (MOTEO) method for truss design. This multi-objective version extends the single-objective TEO algorithm, which is based on Newton’s law of cooling, by integrating non-dominated sorting and CD methods for improved performance.

Furthermore, a variant of the gorilla troops optimizer (GTO), referred to as MOGTO, was developed in50 to tackle MO optimization problems. Houssein et al.73 proposed a new approach that integrates a multi-objective technique with the bird swarm algorithm (BSA), resulting in the MBSA method. The MBSA successfully generates NDS while maintaining diversity among the optimal solutions. An MO form of advanced moth flame optimization (MFO) based on CD and NDS was presented by Saroj et al.74. To address the shortcomings of MFO, a weight strategy (WS) and a mathematical quasi-reflection-based learning (QRL) method were first introduced to the traditional MFO. Subsequently, this advanced MFO was extended into an MO variant called MOQRMFO. The MnMOMFO, a novel NDS and CD-based MO variant of the MFO method for MO optimization problems, was introduced in75. The method addresses the shortcomings of MFO and enhances its performance by incorporating notions from arithmetic and geometric means. This improved MFO was then configured into an MO version, and NDS and CD techniques were used to attain a uniformly distributed Pareto optimal front.

Recent advancements in MOO have increasingly focused on hybrid algorithms, which combine the strengths of various optimization techniques. Hybrid multi-objective evolutionary algorithms (MOEAs) have become particularly popular for addressing complex MOPs76. The two main design decisions for these hybrid algorithms are which algorithms to combine and how to integrate them. Recent work has combined several strategies to produce offspring populations77. However, further exploration is needed in other domains. Notable examples of hybrid MOEAs include the Hybrid Multi-Objective Evolutionary Algorithm based on the Search Manager Framework for big data optimization problems76, the Hybrid Selection Multi/Many-Objective Evolutionary Algorithm77, the Hybrid TOPSIS-PR-GWO Approach for multi-objective process parameter optimization78, and the Simulated Annealing Based Undersampling (SAUS) method for tackling class imbalance in multi-objective optimization79. Additionally, Moghdani et al.80 presented the MO volleyball premier league technique (MOVPL) to address global optimization problems with multiple objectives. This optimization approach was inspired by teams competing for positions in a volleyball premier league.

Other widely recognized MOO algorithms include the Multi-Objective Equilibrium Optimizer (MOEO)81, the MOSMA82, the Multi-Objective Arithmetic Optimization Algorithm (MOAOA)83, the multi-objective Coronavirus disease optimization algorithm (MOCOVIDOA)37, the Multi-Objective Evolutionary Algorithm based on Decomposition (MOEA/D)84, and the Multi-Objective Multi-Verse Optimization (MOMVO)85.

The Non-dominated Sorting Grey Wolf Optimizer (NS-GWO)86, the Multi-Objective Gradient-Based Optimizer (MOGBO)87, the Multi-Objective Plasma Generation Optimizer (MOPGO)88, the Multi-Objective Marine Predator algorithm for solving Multi-Objective Optimization problems (MOMPA)89, and the Non-dominated Sorting Harris Hawks Optimization (NSHHO)90 are some more significant contributions. The Decomposition-Based Multi-Objective Symbiotic Organism Search (MOSOS/D)91 and the Decomposition-Based Multi-Objective Heat Transfer Search (MOHTS/D)92 are two other noteworthy algorithms.

In the context of the recent related studies, the work in93 proposed a dynamic holographic modeling approach for the augmented visualization of digital twin scenarios for bridge construction. A dynamic segmentation technique with variable screen size was developed to generate holographic scenes more effectively, and a motion blur control approach was developed to increase holographic scene rendering efficiency based on human visual features. Finally, the prototype system was created, and the necessary experimental analysis was accomplished. Design for remanufacturing (DfRem) is an essential concept that tries to limit carbon emissions during the remanufacturing phase from the start. However, low-carbon standards and customer preferences are not fully considered when developing schemes, which may limit the potential to cut carbon emissions. To solve the issue, the study in94 developed a DfRem scheme generation approach that combines the quality function deployment for low-carbon (QFDC) model with the functional-motion-action-structure (FMAS) model. Furthermore, the work in95 also suggested a multihead attention self-supervised (MAS) representation model, which is a self-supervised learning-based sensor feature extraction network. Similarly, the work described in96 presented causal intervention visual navigation (CIVN), which is based on deep reinforcement learning (DRL) and causal intervention. Appliance types and power consumption patterns differ significantly across industries. This can lead to inconsistencies in the identification results of traditional appliance load monitoring methods across industries. For that, a non-intrusive appliance load monitoring (NIALM) solution for diverse industries based on multiscale spatio-temporal feature fusion has been proposed in97. The efficient tracking and capture of escaping targets using unmanned aerial vehicles (UAVs) is the focus of the UAV pursuit-evasion problem. 
This topic is crucial for public safety applications, especially when intrusion monitoring and interception are involved. The study in98 proposed a novel swarm intelligence-based UAV pursuit-evasion control framework, called the “Boids Model-based DRL Approach for Pursuit and Escape” (Boids-PE), which combines the advantages of swarm intelligence from bio-inspired algorithms and deep reinforcement learning (DRL) in order to address the difficulties of data acquisition, real-world deployment, and the limited intelligence of existing algorithms in UAV pursuit-evasion tasks. Moreover, a collaborative imaging technique using a reverse-time migration (RTM) algorithm and a zero-lag cross-correlation imaging condition was presented in99. It was developed independently from ground-penetrating radar (GPR) and pipe-penetrating radar (PPR) data. Likewise, in order to improve the degree of fairness while meeting the required priority requirements, the study in100 examined and defined the slice admission control (SAC) problem in 5G/B5G networks as a nonlinear and nonconvex MOO problem. Consequently, to address the issue, the study also suggested a heuristic approach known as prioritized slice admission control considering fairness (PSACCF).

Furthermore, recent studies have been introduced in the domain of optimization, such as in101, which suggested a novel solution for nonconvex problems involving multiple variables. This is particularly true for problems that are usually resolved by an alternating minimization (AM) strategy, which divides the original optimization problem into a number of subproblems that correspond to each variable and then iteratively optimizes each subproblem using a fixed updating rule. In102, a strategy for simultaneously optimizing mobile robot base position and cabin angle using a homogeneous stiffness domain (HSD) index for large spaceship cabins was presented. The work in103 looked at the joint design of a multiple-input multiple-output (MIMO) broadcast waveform and a receive filter bank for RSU-mounted radar in a spectrally packed vehicle-to-infrastructure communication environment. A non-convex problem was presented with the criterion of maximizing the average signal-to-interference-plus-noise ratio (SINR), which involves the weighted-sum waveform energy over the overlaid space-frequency bands, as well as energy and similarity constraints. In this work, an iterative approach was proposed for solving the joint optimization issue. Similarly, the study in104 developed a thermal-elastohydrodynamic mixed lubrication model for journal-thrust linked bearings that takes into account pressure, flow, and thermal continuity conditions and validated it with trials. Based on this, preliminary research was conducted into the effect of textural characteristics on lubrication under real-world heavy load situations. The orthogonal test design was then utilized to identify the texture parameter with the highest influence on optimization. The PSO approach was then used for synchronous texture design for both the journal and the thrust sections. Additionally, a method for identifying uneven industrial loads using optimized CatBoost with entropy characteristics was developed105. 
The study in106 addressed the refined oil distribution problem with shortages through a multi-objective optimization strategy from the perspective of oil marketing company decision-makers. The modeling and solving approach included creating a crisp multi-depot vehicle routing model, developing a robust optimization model, and proposing the MOPSO model. Moreover, in107, a multi-objective optimization model for an adaptive maintenance window-based opportunistic maintenance (OM) method was proposed.

Despite significant progress, several challenges remain in the field of MOO. One major challenge is the scalability of algorithms for high-dimensional problems. Another is the need to handle constraints efficiently in MOO problems. Furthermore, developing algorithms that can dynamically adapt to changing problem landscapes is an ongoing area of research. In this work, we propose a novel multi-objective optimization algorithm, the Multi-Objective Parrot Optimizer (MOPO), to address some of the existing gaps in the field. By leveraging strategies inspired by natural phenomena, MOPO aims to provide a reliable and effective method for solving challenging MOO problems.

Basic concepts of multi-objective optimization problems (MOPs)

Solving single-objective optimization problems is generally simpler than solving MOPs, mainly because there is only one objective function and, typically, one distinct optimal solution. In single-objective scenarios, an absolute optimal solution can be found, and having only one objective makes it easy to compare solutions. In MOO problems, on the other hand, there is generally a set of trade-off solutions rather than a single optimum, which complicates the comparison of solutions108.

The mathematical formula of a multi-objective optimization problem is presented as109:

Here, the symbols \(Q\), \(P\), and \(D\) stand for the number of variables, objective functions, equality constraints, and inequality constraints in that order. \(L_i\) and \(U_i\) denote the lower and upper bounds of the \(i\)-th parameter.

Pareto dominance

Let \(\vec {x}\) and \(\vec {y}\) be two solutions with cost functions represented as:

For a minimization problem, as shown in Fig. 1, solution \(\vec {x}\) is said to dominate solution \(\vec {y}\) (denoted \(\vec {x} \preceq \vec {y}\)) if no cost element of \(\vec {x}\) is greater than the corresponding cost element of \(\vec {y}\), and at least one cost element of \(\vec {x}\) is strictly smaller than that of \(\vec {y}\). This can be formally defined as:

Pareto optimality

The idea of Pareto optimality is dependent on Pareto dominance110:

A solution \(\vec {x} \in X\) is called Pareto-optimal if and only if there is no \(\vec {y} \in X\) such that \(\vec {y} \preceq \vec {x}\).

Pareto optimal set

The Pareto optimal set \(P_s\) comprises all NDS of a given problem. Mathematically, it is described as:

The Pareto optimal set is visually represented in Fig. 2, showing the ensemble of optimal solutions.

Pareto optimal front

The Pareto optimal front \(P_f\) denotes the set of Pareto-optimal solutions mapped into the objective space. It can be described as:

As Fig. 2 shows, the PF \(P_f\) is the image of the Pareto optimal set in the objective space.
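The definitions of Pareto dominance, the Pareto optimal set, and the Pareto optimal front given in this section can be made concrete with a minimal Python sketch. It assumes minimization and a finite list of candidate solutions; the function names `dominates` and `pareto_set_and_front` and the toy bi-objective problem are illustrative assumptions.

```python
from typing import Callable, List, Sequence, Tuple


def dominates(fx: Sequence[float], fy: Sequence[float]) -> bool:
    """True if cost vector fx dominates cost vector fy (minimization)."""
    no_worse = all(a <= b for a, b in zip(fx, fy))
    strictly_better = any(a < b for a, b in zip(fx, fy))
    return no_worse and strictly_better


def pareto_set_and_front(
    candidates: List[float],
    objectives: List[Callable[[float], float]],
) -> Tuple[List[float], List[Tuple[float, ...]]]:
    """Non-dominated candidates and their images in objective space."""
    costs = [tuple(f(x) for f in objectives) for x in candidates]
    keep = [
        i for i, ci in enumerate(costs)
        if not any(dominates(cj, ci) for j, cj in enumerate(costs) if j != i)
    ]
    return [candidates[i] for i in keep], [costs[i] for i in keep]


# Toy problem (assumed for illustration): f1(x) = x^2, f2(x) = (x - 2)^2.
candidates = [-1.0, 0.0, 1.0, 2.0, 3.0]
objectives = [lambda x: x ** 2, lambda x: (x - 2.0) ** 2]
p_set, p_front = pareto_set_and_front(candidates, objectives)
```

In this toy example, the candidates outside the interval between the two individual minima are dominated, so only the candidates in that interval remain in the set, and their cost vectors form the approximated front.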

The MOO process is typically illustrated by an intermediate or current front of NDS discovered by the optimization process, which approximates the true PF, as depicted in Fig. 3.

NSGA-II is a multi-objective genetic algorithm that is frequently used45. In NSGA-II, Pareto sets are identified and labeled, beginning with the first non-dominated front and using a non-dominated sorting strategy.

The parrot optimizer (PO)

PO is a modern optimization algorithm motivated by the key behaviors of Pyrrhura Molinae parrots. This algorithm emulates four distinct behavioral traits observed in trained Pyrrhura Molinae parrots-namely, foraging, resting, communication, and fear of strangers-to perform the core optimization tasks of exploration (diversification) and exploitation (intensification). These behaviors are modeled mathematically to simulate the optimization process. The following outlines the mathematical framework of the PO:

Foraging attitude

In the foraging attitude in PO, parrots mostly use observation to estimate the approximate food position or consider the owner’s position. Then, they fly in the direction of the estimated position. The positional movement, therefore, obeys the following Eq. (6):

where \({Max}_{\text{ cy }}\) indicates the maximum number of cycles and rand(0, 1) represents a random number in [0, 1].

In Eq. (6), \(C_i^t\) denotes the current position, while \(C_i^{t+1}\) represents the position after the next update. \(C_{\text {mean}}^t\) is the average position of the current agents, and L(D) signifies the Levy distribution used to model the flight behavior of the parrots. \(C_{\text {optimal}}\) refers to the best position discovered from initialization to the current iteration and is also known as the host position. The variable t represents the current cycle count. The term \(\left( C_i^t - C_{\text {optimal}}\right) * L(D)\) captures the movement of the parrots relative to the host position, while \(\operatorname {rand}(0,1) * \left( 1 - \frac{t}{\text {Max}_{\text {cy}}}\right) ^{\frac{2t}{\text {Max}_{\text {cy}}}} * C_{\text {mean}}^t\) describes the influence of the overall population position on the direction of movement towards food sources.

The average position of the current swarm, denoted as \(C_{\text {mean}}^t\), is calculated using the formula provided in Eq. (7).

where \(S_{in}\) denotes the swarm size.

The Levy distribution can be derived using the rule specified in Eq. (8), with \(\gamma\) set to 1.5.
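Because Eq. (8) is not reproduced in this text, the following sketch uses Mantegna's method, a widely used way of drawing Levy-distributed steps, with \(\gamma = 1.5\). Treating Mantegna's method as the form of Eq. (8) is an assumption, and the names `mantegna_sigma` and `levy_step` are hypothetical.

```python
import math
import random


def mantegna_sigma(gamma: float = 1.5) -> float:
    """Scale of the numerator Gaussian in Mantegna's method (assumed form of Eq. (8))."""
    num = math.gamma(1 + gamma) * math.sin(math.pi * gamma / 2)
    den = math.gamma((1 + gamma) / 2) * gamma * 2 ** ((gamma - 1) / 2)
    return (num / den) ** (1 / gamma)


def levy_step(dim: int, gamma: float = 1.5, rng=random):
    """Draw one Levy-distributed step per dimension: u / |v|^(1/gamma)."""
    sigma = mantegna_sigma(gamma)
    steps = []
    for _ in range(dim):
        u = rng.gauss(0.0, sigma)
        v = rng.gauss(0.0, 1.0)
        # Guard against a (practically impossible) exact zero in the denominator.
        v = max(abs(v), 1e-12)
        steps.append(u / v ** (1 / gamma))
    return steps
```

The heavy tails of this distribution occasionally produce very large steps, which is what gives the foraging and remaining moves their long-range exploration.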

Remaining attitude

The major behavior of Pyrrhura Molinae, a very gregarious bird, is to take off suddenly and land on any area of its owner’s body, where it stays motionless for a while. This procedure can be expressed mathematically as:

where ones(1, D) indicates the all-ones vector of dimension D, \(C_{\text{ optimal }} * L(D)\) indicates the flight to the host, and \(rand(0,1) * ones(1, \text{D})\) indicates the procedure of randomly stopping at a portion of the host’s body.

Communicating attitude

Parrots of the Pyrrhura Molinae family are naturally sociable and exhibit close group communication. This communication attitude includes both flying to the flock and communicating without flying to the flock. The two behaviors are assumed to be equally likely in the PO, and the current population’s mean position represents the flock’s center. This procedure can be expressed mathematically as:

where \(0.2 \cdot \operatorname {rand}(0, 1) \cdot \left( 1 - \frac{t}{\text {Max}_{\text {cy}}}\right) \cdot \left( C_i^t - C_{\text {mean}}^t\right)\) represents the operation of an individual entering a parrot’s group for communication, and \(0.2 \cdot \operatorname {rand}(0, 1) \cdot \exp \left( -\frac{t}{\operatorname {rand}(0, 1) \cdot \text {Max}_{\text {cy}}}\right)\) represents the operation of an individual flying out directly after communication. Which of the two behaviors is applied is decided by a randomly generated \(Q\) in the range \([0,1]\).

Fear of strangers attitude

Birds, particularly Pyrrhura Molinae parrots, have an innate fear of strangers. Eq. (11) models how they keep away from unfamiliar people and hide with their owners while looking for a safe place:

where \(\operatorname {rand}(0,1) * \cos \left( 0.5 \pi * \frac{t}{\text{ Max}_{cy}}\right) * \left( C_{\text{ optimal }}-C_i^t\right)\) denotes the process of re-orienting to fly towards the owner and \(\cos (\operatorname {rand}(0,1) * \pi ) \ * \left( \frac{t}{\text{ Max}_{cy}}\right) ^{\frac{2}{\text {Max}_{cy}}} * \left( C_i^t-C_{\text{ optimal }}\right)\) indicates the procedure of moving away from the strangers. The steps in the PO’s optimization methodology are as follows:

Step 1: Configure the parameters for the optimizer: swarm size (\(S_{in}\)), dimension of the individuals (D), lower bound (lb), upper bound (ub), maximum number of cycles (\(Max_{cy}\)), and parameters (\(\gamma\) and Q).

Step 2: Initialize the parrots stochastically \((C_1, C_2, \ldots , C_{S_{in}})\).

Step 3: Evaluate the parrots’ fitness (acquire the host position and the best fitness).

Step 4: While the stopping requirement is not met, repeat Steps 5 to 10.

Step 5: Update the swarm individuals using Eqs. (6), (9), (10), and (11), based on a random integer St drawn from the discrete uniform distribution from 1 to 4 (\(St = randi(1, 4)\)).

Step 6: If \(St = 1\), then apply the foraging attitude using Eq. (6).

Step 7: If \(St = 2\), then apply the remaining attitude using Eq. (9).

Step 8: If \(St = 3\), then apply the communicating attitude using Eq. (10).

Step 9: If \(St = 4\), then apply the fear of strangers attitude using Eq. (11).

Step 10: Apply boundary control on the parrots and then update the host position and the best fitness.

Step 11: Return the host position and the best fitness.
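The steps above can be sketched as a compact single-objective loop. Because Eqs. (6) and (9) to (11) are not reproduced in this text, the sketch assembles the update rules from the terms described in the surrounding paragraphs; how those terms are combined (in particular, adding the communicating and fear-of-strangers terms to the current position, and using 0.5 as the threshold for Q) is an assumption, as are the Mantegna-style Levy step and all function and parameter names.

```python
import math
import random


def levy(dim, rng, gamma=1.5):
    """Levy step per dimension via Mantegna's method (assumed form of Eq. (8))."""
    num = math.gamma(1 + gamma) * math.sin(math.pi * gamma / 2)
    den = math.gamma((1 + gamma) / 2) * gamma * 2 ** ((gamma - 1) / 2)
    sigma = (num / den) ** (1 / gamma)
    return [rng.gauss(0, sigma) / max(abs(rng.gauss(0, 1)), 1e-12) ** (1 / gamma)
            for _ in range(dim)]


def parrot_optimizer(fitness, dim, lb, ub, swarm_size=30, max_cy=200, seed=0):
    """Minimize `fitness` over the box [lb, ub]^dim; returns (host position, best fitness)."""
    rng = random.Random(seed)
    # Step 2: random initialization inside the bounds.
    swarm = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(swarm_size)]
    # Step 3: evaluate and record the host (best) position.
    fit = [fitness(c) for c in swarm]
    k = min(range(swarm_size), key=fit.__getitem__)
    best, best_fit = swarm[k][:], fit[k]
    for t in range(1, max_cy + 1):  # Step 4
        frac = t / max_cy
        mean = [sum(c[d] for c in swarm) / swarm_size for d in range(dim)]
        for i, c in enumerate(swarm):
            st = rng.randint(1, 4)  # Step 5: pick one of the four attitudes
            step = levy(dim, rng)
            if st == 1:  # Step 6: foraging (terms described for Eq. (6))
                w = rng.random() * (1 - frac) ** (2 * frac)
                new = [(c[d] - best[d]) * step[d] + w * mean[d] for d in range(dim)]
            elif st == 2:  # Step 7: remaining (terms described for Eq. (9))
                r = rng.random()
                new = [c[d] + best[d] * step[d] + r for d in range(dim)]
            elif st == 3:  # Step 8: communicating (terms described for Eq. (10))
                if rng.random() <= 0.5:  # assumed threshold for Q
                    w = 0.2 * rng.random() * (1 - frac)
                    new = [c[d] + w * (c[d] - mean[d]) for d in range(dim)]
                else:
                    r = max(rng.random(), 1e-12)
                    w = 0.2 * rng.random() * math.exp(-t / (r * max_cy))
                    new = [c[d] + w for d in range(dim)]
            else:  # Step 9: fear of strangers (terms described for Eq. (11))
                a = rng.random() * math.cos(0.5 * math.pi * frac)
                b = math.cos(rng.random() * math.pi) * frac ** (2 / max_cy)
                new = [c[d] + a * (best[d] - c[d]) - b * (c[d] - best[d])
                       for d in range(dim)]
            # Step 10 (first half): boundary control by clamping.
            swarm[i] = [min(max(v, lb), ub) for v in new]
        # Step 10 (second half): update the host position and best fitness.
        for c in swarm:
            f = fitness(c)
            if f < best_fit:
                best, best_fit = c[:], f
    return best, best_fit  # Step 11
```

A call such as `parrot_optimizer(lambda x: sum(v * v for v in x), dim=3, lb=-5, ub=5)` runs the loop on a simple sphere function; clamping is only one of several possible boundary-control choices.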

The proposed multi-objective parrot optimizer (MOPO)

To fully understand the MOPO approach, it’s important to explore the key mechanisms that it incorporates:

The process starts with an elitist non-dominated sorting method. This method identifies the NDS within the search space and assigns a non-dominated ranking (NDR) to the solutions82. Figure 4 illustrates how the NDR is used. None of the solutions on the first front is dominated, while every solution on the second front is dominated by at least one solution from the first front. According to Fig. 4, the NDR of a dominated solution Z in this situation denotes the number of solutions that dominate Z48.

MOPO utilizes a CD operator to preserve solution variation. The CD operator gives an approximation of the density of the surrounding solutions. Figure 5 illustrates the CD for a solution SLN, defined as the size of the largest hexagonal area around SLN that does not contain any other solutions48. This strategy guarantees a balanced distribution of solutions within the search area. As shown in Eq. (12), the variety of solutions obtained by the CD operator is essential for avoiding premature convergence and guaranteeing the exploration of different regions in the search space.

where \(f_{i}^{high}\) signifies the highest value of the i-th objective function, and \(f_{i}^{low}\) denotes its lowest value. The CD for a solution SLN is calculated as the average distance between its neighboring solutions \((SLN-1, SLN+1)\).
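As an illustration of the CD operator, the sketch below follows the normalized neighbor-distance form used in NSGA-II, with boundary solutions assigned an infinite distance. Eq. (12) is not reproduced in this text, so treating this as its form is an assumption; the function name `crowding_distance` is hypothetical.

```python
import math


def crowding_distance(front):
    """Crowding distance of each objective vector in `front` (NSGA-II style)."""
    n = len(front)
    if n == 0:
        return []
    dist = [0.0] * n
    for i in range(len(front[0])):
        # Sort indices by the i-th objective.
        order = sorted(range(n), key=lambda k: front[k][i])
        low, high = front[order[0]][i], front[order[-1]][i]
        # Boundary solutions are always kept: give them infinite distance.
        dist[order[0]] = dist[order[-1]] = math.inf
        if high == low:
            continue  # all values equal: this objective adds nothing
        for pos in range(1, n - 1):
            k = order[pos]
            gap = front[order[pos + 1]][i] - front[order[pos - 1]][i]
            dist[k] += gap / (high - low)  # normalize by f_i^high - f_i^low
    return dist
```

Solutions with a larger value lie in sparser regions of the front and are therefore preferred when the population or archive must be truncated.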

The mechanism of the non-dominated sorting approach is depicted in Fig. 6. In the beginning, the parent and current population solutions use the NDR. After that, the solutions with the lowest rankings are removed, and the remaining solutions are arranged using the CD process. Only solutions with higher CD estimations are kept after those with the lowest CD values are eliminated. This process involves determining the target location dimension of the Pareto solution based on the CD value. The PO is then employed to update the positions of the solutions, with MOPO utilizing the same update process as the original PO. A detailed explanation of the PO’s optimization update process is provided in “The Parrot Optimizer (PO)” Section. Every solution in the population has its objective function assessed at each iteration, and the NDS are archived. This archive serves as the basis for the optimization process in the subsequent iteration.

The solutions stored in the archive are regularly updated to reflect the most recent changes, ensuring that outdated solutions are replaced. MOPO effectively refines solution distribution by utilizing the CD method, enabling it to achieve and archive Pareto optimal solutions. The optimization strategy for the developed MOPO is outlined in Algorithm 1. The MOO approach of the MOPO model can be summarized in the following steps:

Step I: Set the control parameters required to run the optimizer.

Step II: Randomly initialize the parent swarm \(S_a\) within the decision space S using chaotic initialization.

Step III: Within the objective space F, evaluate every objective function.

Step IV: Generate a new swarm \(S_b\) and combine it with \(S_a\) to form the swarm \(S_c\).

Step V: Rank the solutions in \(S_c\) based on NDS, incorporating both CD and NDR.

Step VI: Populate the new candidate solution swarm with the top-performing \(S_{in}\) solutions.

Step VII: Repeat the above steps until the stopping criterion is satisfied.

The number of Pareto optimal solutions that can be stored in the archive used in the MOO process is limited by the archive’s capacity. The rationale is that computational complexity increases with archive size, so bounding the archive reduces the cost of the approximation. However, a smaller archive fills up more quickly. To avoid a full archive, solutions are periodically removed from neighborhoods of the archive with high population density, so that freshly added solutions from areas with lower population density can be kept.

Ultimately, because the developed MOPO employs the same NDS and CD approaches as the NSGA-II algorithm, its computational complexity is \(O(M * S_{in}^{2})\), where M is the number of objective functions and \(S_{in}\) indicates the swarm size. The computational time complexity over the maximum number of cycles is as follows:

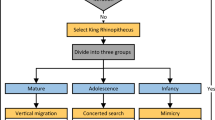

The flowchart for the developed MOPO is depicted in Fig. 7, where t represents the current cycle index and \(Max_{cy}\) represents the maximum number of cycles.

Experimental results analysis and discussion

This section describes how the CEC’2020 test routines were used to test and compare the suggested MOPO’s performance against other top algorithms. The evaluation and comparison of MOPSO44, NSGA-II45, MOGWO46, MOWOA47, MOSMA48, IMOMRFO49, and MOGTO50 reveal the performance of MOPO in the MOO domain.

Experimental configuration

The experiments were performed in a standardized computing environment to ensure consistent and fair comparisons across all algorithms. The hardware setup includes an Intel Core i5-3317U CPU with a 1.70 GHz frequency, 4 GB RAM, and a 500 GB hard drive. The software environment consists of the Windows 8 operating system and MATLAB R2013a (8.1.0.604), which was used for implementing and running the optimization algorithms. These configurations were selected to provide a reliable and reproducible testing platform for evaluating the performance of the proposed MOPO algorithm against other state-of-the-art multi-objective optimization algorithms. All tests and simulations are executed on the same computer system to guarantee a fair comparison.

Parameter configurations

To evaluate the robustness of the proposed MOPO technique on the CEC’2020 benchmark suite, this paper employs six standard performance metrics, comparing MOPO against other prominent methods111. Simulations are conducted using MATLAB R2013a as the integrated development environment (IDE). The results of MOPO are benchmarked against those from seven renowned multi-objective optimization algorithms. All algorithms are tested under standardized simulation settings to ensure a fair comparison. Table 1 details each method’s parameter settings and common configurations. Following best practices outlined in112, each method’s parameters were adjusted to their default configurations to minimize the risk of parameterization bias.

Performance metrics

This paper uses six performance indicators to compare the proposed MOPO’s effectiveness against rival algorithms: PSP, IGDX in decision space, HV, spacing, GD, and maximum spread. The PSP metric compares the obtained PS with the true PS of the test function113. It is computed as in Eq. (14).

where CR in Eq. (14) denotes the cover rate. IGDX is the inverted generational distance in the decision space, computed as the arithmetic mean distance between the true solutions and the solutions obtained by the method114. Lower IGDX scores indicate better diversity and convergence. The IGDX metric is calculated as in Eq. (15)

where a set of acquired solutions is denoted by U, and a set of true/reference solutions is represented by \(U^{*}\). The intersection proportion between the reference PS and the obtained PS is defined by the cover rate measure CR, which is computed as Eq. (16).

where \(\delta _{l}\) is calculated as follows Eq. (17):

Here, n indicates the decision space’s dimensionality; \(v_{l}^{\min }\) and \(v_{l}^{\max }\) represent the minimum and maximum of the obtained PS for the lth variable, whereas \(V_{l}^{\min }\) and \(V_{l}^{\max }\) represent the minimum and maximum of the true PS for the lth variable113.

The HV metric estimates the extent of the objective space dominated by the approximated solutions SLN and bounded by a reference point \(Ref=\left( Ref_{1}, \ldots , Ref_{u}\right) ^{T}\), which is dominated by all points on the PF61. It is calculated by Eq. (18)
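The decision-space metrics can be sketched as follows. Eqs. (14) to (17) are not reproduced in this text, so the code assumes the definitions commonly given for these metrics: IGDX as the mean Euclidean distance from each reference solution to its nearest obtained solution, CR as the \(2n\)-th root of the product of the \(\delta _{l}\) overlap terms, and PSP as CR divided by IGDX. The function names and the handling of a zero IGDX are assumptions.

```python
import math


def igdx(obtained, reference):
    """Mean distance from each reference solution to its nearest obtained solution."""
    return sum(min(math.dist(r, u) for u in obtained) for r in reference) / len(reference)


def cover_rate(obtained, reference):
    """Overlap between the bounding boxes of the obtained PS and the true PS (assumed form)."""
    n = len(reference[0])
    prod = 1.0
    for l in range(n):
        v_min = min(u[l] for u in obtained)
        v_max = max(u[l] for u in obtained)
        V_min = min(r[l] for r in reference)
        V_max = max(r[l] for r in reference)
        if V_max == V_min:
            delta = 1.0  # degenerate true range
        elif v_min >= V_max or v_max <= V_min:
            delta = 0.0  # no overlap in this variable
        else:
            overlap = min(v_max, V_max) - max(v_min, V_min)
            delta = (overlap / (V_max - V_min)) ** 2
        prod *= delta
    return prod ** (1 / (2 * n))


def psp(obtained, reference):
    """Pareto Set Proximity: cover rate divided by IGDX (larger is better)."""
    d = igdx(obtained, reference)
    # A perfect match gives IGDX = 0; returning infinity here is an assumption.
    return math.inf if d == 0 else cover_rate(obtained, reference) / d
```

For example, with a true PS of `[(0, 0), (1, 1)]` and an obtained PS of `[(0, 0), (0.5, 0.5)]`, the obtained set covers half of each variable's range, so the cover rate under these assumed formulas is 0.5.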

where the Lebesgue metric of a set Z is denoted by \(\operatorname {L}(Z)\). For bi-objective test routines, Ref is typically set to \((1.2,1.2)^{T}\), and for three-objective test routines, to \((1.2,1.2,1.2)^{T}\).
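For the bi-objective case, the Lebesgue measure in the HV definition reduces to the area of a union of rectangles between the front and the reference point, which a simple sweep can compute. The sketch below assumes minimization of both objectives and uses the reference point \((1.2,1.2)^{T}\) as the default; the function name `hypervolume_2d` is hypothetical.

```python
def hypervolume_2d(front, ref=(1.2, 1.2)):
    """Area dominated by `front` and bounded by `ref` (two minimized objectives)."""
    # Keep only points that strictly dominate the reference point, sorted by f1.
    pts = sorted(p for p in front if p[0] < ref[0] and p[1] < ref[1])
    hv = 0.0
    best_f2 = ref[1]
    for f1, f2 in pts:
        if f2 < best_f2:
            # New horizontal strip between the previous best f2 and this f2.
            hv += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return hv
```

Dominated points contribute nothing because their second objective never improves on `best_f2` during the sweep.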

The Spacing is calculated by Eq. (19).

The Generational distance (GD) is calculated in Eq. (20):

The Maximum spread is calculated by Eq. (21).

where n denotes the number of Pareto optimal solutions, \(d_i\) represents the Euclidean distance between the ith real Pareto optimal solution and the nearest solution found, m denotes the number of actual Pareto optimal solutions, and \(\bar{c}\) represents the average of all \(d_i\). \(d_i=\min _j\left( \left| f_1^i(\vec {x})-f_1^j(\vec {x})\right| +\left| f_2^i(\vec {x})-f_2^j(\vec {x})\right| \right)\) for \(i, j=1,2, \ldots , n\), where \(f_1^i(\vec {x})\) represents the objective value of the ith real Pareto optimal solution, and \(f_1^j(\vec {x})\) represents the objective value of the closest jth Pareto optimal solution obtained in the reference set.
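Since Eqs. (19) and (20) are not reproduced in this text, the sketch below assumes the commonly used forms of the two metrics: GD as \(\sqrt{\sum d_i^2}/n\) with Euclidean distances to the true front, and spacing as the sample standard deviation of the nearest-neighbor distances \(d_i\), computed with the sum of absolute objective differences described above. The function names are hypothetical.

```python
import math


def generational_distance(obtained, true_front):
    """GD: root of the summed squared nearest distances to the true front, divided by n."""
    d = [min(math.dist(p, q) for q in true_front) for p in obtained]
    return math.sqrt(sum(x * x for x in d)) / len(d)


def spacing(obtained):
    """Spacing: spread of nearest-neighbor (Manhattan) distances within the obtained front."""
    n = len(obtained)
    if n < 2:
        return 0.0
    d = [
        min(
            sum(abs(a - b) for a, b in zip(p, q))
            for j, q in enumerate(obtained)
            if j != i
        )
        for i, p in enumerate(obtained)
    ]
    mean = sum(d) / n
    return math.sqrt(sum((mean - x) ** 2 for x in d) / (n - 1))
```

A perfectly evenly spaced front has a spacing of zero, and a front lying exactly on the true PF has a GD of zero.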

To sum up, greater algorithmic efficacy is indicated by high PSP, low IGDX, high HV, low spacing, low GD, and low spread.

Series 1: analysis of MO CEC’2020 functions

The CEC’2020 benchmarks contain 24 assessment cases with varying multi-objective decision regions (featuring nonlinear, linear, convex, and concave PF topologies), which are used in this paper to evaluate the effectiveness of the proposed MOPO technique. The competing algorithms were tested over 20 independent runs, each consisting of 500 iterations, 50,000 function evaluations, and 100 individuals per test case. The results are presented as average (Mean) and standard deviation (SD) statistical indicators in Tables 2, 3 and 4. Additionally, Fig. 8 illustrates the boxplot of the PSP measure for the 24 test cases from the CEC’2020 benchmark. Because the PSP metric is a maximization metric, as Eq. (14) illustrates, the highest PSP score denotes the best algorithm performance.

Boxplots are an effective way of illustrating the agreement between runs because they summarize the data distribution. Figure 8 makes it evident that, except for MMF14_a, MMF11_I, MMF12_I, MMF13_I, and MMF16_l3, the boxplots of the developed MOPO technique are tight and have the highest scores for the majority of test routines. MOSMA does better than MOPO in MMF11_I and MMF12_I. MOPSO, IMOMRFO, and NSGA-II outperform MOPO in MMF14_a, MMF13_I, and MMF16_l3, respectively. Thus, we can conclude that MOPO performs only moderately in MMF14_a, MMF11_I, MMF12_I, MMF13_I, and MMF16_l3. Generally, in most evaluation routines, the MOPSO, NSGA-II, and MOGWO algorithms yield subpar results with the lowest PSP scores; similarly, MOWOA exhibits poor performance in certain test routines, notably MMF7, MMF11, and MMF12.

Table 2 presents the statistical findings of the PSP metric over 20 runs for each algorithm as Mean and SD. MOPO produces the best results on most test routines. However, MOPSO performs better than MOPO on MMF14_a, MOSMA performs better on MMF11_I and MMF12_I, and IMOMRFO and NSGA-II perform better on MMF13_I and MMF16_l3, respectively. Similarly, Table 3 presents the statistical findings for the IGDX metric over 20 runs of each algorithm as Mean and SD. MOPO again yields the best results on most test routines, with the same exceptions: MOPSO on MMF14_a, MOSMA on MMF11_I and MMF12_I, and IMOMRFO and NSGA-II on MMF13_I and MMF16_l3, respectively. Table 4 shows the statistical findings of the HV metric in terms of Mean and SD over 20 runs for each algorithm. In general, the developed MOPO outperforms its competitors on most test routines, and the exceptions match those of the PSP and IGDX findings: MOPSO beats MOPO on MMF14_a, MOSMA on MMF11_I and MMF12_I, and IMOMRFO and NSGA-II on MMF13_I and MMF16_l3, respectively. Taken together, the tabulated PSP, IGDX, and HV results show that MOPO produces superior results in most evaluation cases and ranks second or third in its poorest performances.

Convergence curves offer a graphical representation essential for assessing the Pareto solutions (PSs) generated by different algorithms. Figures 9 through 11 illustrate the convergence behavior of the evaluated methods across various test routines. Specifically, routines MMF1, MMF14, and MMF15_a have been selected for detailed visualization to validate the PSs produced by the algorithms in question.

Figure 9 reveals that the MOPO algorithm consistently produces superior PSs compared to the other methods under review. In Fig. 10, it is evident that MOPO excels in locating more PSs within the decision space compared to the seven competing approaches. Moreover, compared to NSGA-II, MOWOA, and MOSMA, the PSs generated by MOPO are more evenly distributed throughout the global PSs in the test case space.

Figure 11 presents the PSs for the MMF15_a routine. Here, MOPO, MOPSO, MOSMA, and MOGTO demonstrate their capability to generate additional PSs in the decision space. Notably, MOPO’s PSs are more uniformly spread than those of its competitors.

In contrast, Figures 12 through 14 display the convergence curves of the Pareto fronts (PFs) for the MMF10_I, MMF12, and MMF15_a test routines. Figure 12 shows that the PF sets obtained by MOPSO, IMOMRFO, and MOGTO are significantly larger than those of other methods. However, MOPO achieves a notably superior PF compared to its peers.

Figure 13 illustrates the PF for the MMF12 function, where MOPO stands out by generating a more uniformly distributed PF across the objective space compared to NSGA-II, MOGWO, MOWOA, and MOSMA. Similarly, Fig. 14 demonstrates that MOSMA, MOGTO, and MOPO are particularly effective in identifying larger and more evenly distributed PFs in the objective space relative to their competitors.

The Wilcoxon rank-sum test is also used to conduct statistical analysis and confirm the significance of the observed results. Because metaheuristic algorithms are inherently stochastic, it is important to ensure that the performance outcomes do not result from chance115. Table 5 presents the results of the Wilcoxon test for each pair of algorithms in the PSs comparison. The MOPO’s performance is confirmed to be significant, with all p-values at or below the 5% significance level (\(\le\) 0.05) for the PSP, IGDX, and HV metrics.
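To make the test concrete, the sketch below implements the two-sided Wilcoxon rank-sum test with the large-sample normal approximation, using average ranks for ties and no tie or continuity correction. The function name `rank_sum_test` and the sample values in the usage note are hypothetical and are not taken from the paper's results.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation); returns (z, p)."""
    values = sorted(list(x) + list(y))
    # Assign each distinct value the average of the ranks it occupies.
    rank_of = {}
    i = 0
    while i < len(values):
        j = i
        while j < len(values) and values[j] == values[i]:
            j += 1
        rank_of[values[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j
    n1, n2 = len(x), len(y)
    w = sum(rank_of[v] for v in x)  # rank sum of the first sample
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p
```

For instance, `rank_sum_test([1, 2, 3], [4, 5, 6])` yields a negative statistic because every value in the first sample ranks below the second; with 20 runs per algorithm, as used here, the normal approximation is generally considered reasonable.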

Series 2: analysis of standard MO problems

To validate the developed MOPO, four well-known ZDT test routines from116 were employed for comparison against other competing algorithms. The simulations were conducted over 20 independent runs. As shown in Table 6, the GD, Spacing, and Spread metrics were used to assess convergence and efficacy. The following deductions can be made in light of the results:

- Table 6 shows that, according to the GD measure used to validate the convergence of the simulated methods, the developed MOPO achieves the best results on ZDT1, ZDT2, ZDT3, and ZDT6. Regarding the spacing measure, the developed MOPO achieves the best results on ZDT1, ZDT2, and ZDT3 and is very competitive with MOPSO on ZDT6. For the spread measure, the developed MOPO achieves the best result on ZDT1 compared to its rivals, whereas MOGTO achieves the best results on ZDT2 and ZDT3, and MOPSO achieves the best result on ZDT6. Moreover, Figs. 15, 16, 17 and 18 depict the coverage and convergence obtained by each MO algorithm on all the considered ZDT routines.

- The performance of the compared approaches is validated in Table 7 on four constrained benchmark routines, namely the OSY, BNH, KITA, and CONSTR test routines. The simulation was run 20 times independently. In addition, the GD, spacing, and spread measures are calculated to demonstrate the effectiveness and convergence of the compared approaches. The bolded GD results in Table 7 show that the developed MOPO achieves the best results across all test cases, indicating better convergence on the constrained routines. The third and fourth columns of Table 7 confirm that the developed MOPO is competitive with the rival algorithms regarding the spacing and spread measures. Furthermore, the findings demonstrate better diversity of the developed MOPO across all the test routines.

Series 3: analysis of MO engineering problems

Engineering design challenges are crucial for assessing how well MAs operate. The performance of the proposed MOPO was evaluated in this study using four MO engineering design challenges: the speed reducer, disc brake, I-beam, and cantilever beam. Every MO algorithm is run 20 times independently. Additionally, the spacing metric is applied to the best PFs produced by every MO algorithm; the reported findings show how effective MOPO is at locating near-optimal PSs.

Speed reducer design

The speed reducer design optimization problem117 is the first MO engineering design challenge addressed. Seven design variables are included in this challenge: \(X = [x_1, x_2, x_3, x_4, x_5, x_6, x_7]^{T}\), which stand for the gear face width, teeth module, number of pinion teeth, distance between bearings 1, distance between bearings 2, diameter of shaft 1, and diameter of shaft 2, in that order. As stated in Eq. 22, the speed reducer design problem is a multi-criteria optimization task with two objectives, weight and stress, both of which are to be minimized.

Table 8 presents the best scores of the spacing metric for each MO method, while Fig. 19 shows the premium PFs acquired by the developed MOPO for the MO speed reducer design challenge.

where the upper and lower boundaries of the design variables are represented in the following:

\(\begin{array}{l} 2.6 \le x_{1} \le 3.6, 0.7 \le x_{2} \le 0.8, 17 \le x_{3} \le 28, 7.3 \le x_{4} \le 8.3, \\ 7.3 \le x_{5} \le 8.3, 2.9 \le x_{6} \le 3.9, 5.0 \le x_{7} \le 5.5. \end{array}\)

Disc brake design

Optimizing the disc brake design is the second MOO task, tackled by118. The aim is to find the ideal dimension scores X for the design, where \(X = [x_1, x_2, x_3, x_4]^{T}\) is the vector of the four design variables. The discs’ inner and outer radii are denoted by \(x_1\) and \(x_2\), their engagement force by \(x_3\), and their number of friction surfaces by \(x_4\). As seen in Eq. 24, the disc brake design problem is an MOP with two minimization objectives: minimizing the brake’s mass (f1) and stopping time (f2). Table 9 displays the best spacing scores for every MO approach. Furthermore, the best PFs obtained by the developed MOPO in the disc brake design challenge are visualized in Fig. 20.

where \(55 \le x_{1} \le 80\), \(75 \le x_{2} \le 110\), \(1000 \le x_{3} \le 3000\), and \(2 \le x_{4} \le 20\).

I-beam design challenge

The I-Beam design challenge focuses on determining the beam’s optimal dimension scores X, represented as \(X = [x1, x2, x3, x4]^{T}\). This is a multi-criteria optimization problem with two minimization objectives, as described in Eq. 26: minimizing the cross-sectional area (f1) and minimizing the deflection of the beam (f2)119. Table 10 presents the premium scores of the spacing measure for each MO method, while Fig. 21 illustrates the premium acquired PFs for the developed MOPO in the I-beam design challenge.

where the bounds on the design variables are \(10 \le x_{1} \le 80\), \(10 \le x_{2} \le 50\), and \(0.9 \le x_{3}, x_{4} \le 5\). The MO I-beam design problem is subject to the following constraint:

\(\text {Subject to: }g_{1}(X)=\frac{180000 x_{1}}{x_{3}\left( x_{1}-2 x_{4}\right) ^{3}+2 x_{2} x_{4}\left[ 4 x_{4}^{2}+3 x_{1}\left( x_{1}-2 x_{4}\right) \right] } +\frac{15000 x_{2}}{\left( x_{1}-2 x_{4}\right) x_{3}^{3}+2 x_{4} x_{2}^{3}} \le 16.\)
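The constraint \(g_{1}(X)\) can be evaluated directly from the expression above. The sketch below also checks the variable bounds, reading the stated bounds as \(0.9 \le x_{3} \le 5\) and \(0.9 \le x_{4} \le 5\); that reading and the function names are assumptions, and the objective functions are omitted because their equations are not reproduced in this text.

```python
def ibeam_constraint(x):
    """Left-hand side of g1(X) for the I-beam problem; feasible when the value is <= 16."""
    x1, x2, x3, x4 = x
    web = x1 - 2 * x4  # the recurring (x1 - 2*x4) term
    term1 = 180000 * x1 / (
        x3 * web ** 3 + 2 * x2 * x4 * (4 * x4 ** 2 + 3 * x1 * web)
    )
    term2 = 15000 * x2 / (web * x3 ** 3 + 2 * x4 * x2 ** 3)
    return term1 + term2


def ibeam_feasible(x):
    """True if x respects the variable bounds and the constraint g1(X) <= 16."""
    bounds = [(10, 80), (10, 50), (0.9, 5), (0.9, 5)]  # assumed reading of the bounds
    in_bounds = all(lo <= v <= hi for v, (lo, hi) in zip(x, bounds))
    return in_bounds and ibeam_constraint(x) <= 16
```

For example, the largest admissible section `(80, 50, 5, 5)` satisfies the constraint comfortably, whereas the smallest section `(10, 10, 0.9, 0.9)` violates it by a wide margin.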

Cantilever beam design

The objective of the cantilever beam design problem is to determine the optimal dimension scores Q for the beam, represented as \(Q = [d, l]^{T}\), where d denotes the beam’s diameter and l represents the length of the beam. This is a multi-criteria optimization problem with two minimization objectives, as outlined in Eq. 27: minimizing the weight (f1) and minimizing the deflection of the beam (f2)120. Table 11 presents the best scores of the spacing measure for each MO method, while Fig. 22 illustrates the best PFs obtained by the developed MOPO in the cantilever beam design challenge.

The constraints for the problem dimensions are \(10 \le d \le 50\) and \(200 \le l \le 1000\). The following constraints apply to the cantilever beam design problem:

where \(\text{S}_{\text{y}} = 300\) MPa and \(\delta _{\max } = 5\) mm.

Series 4: analysis of real-world MO helical coil spring problem for automotive application

The helical coil spring is made of IS4454 and carries a constant axial compressive load. The primary goal is to develop a coil spring for use in a commercial three-wheeler automobile utilizing the MOO approach121,122. The design of a helical spring is a multi-criteria optimization problem with two goals: (i) maximize strain energy and (ii) minimize spring volume. Furthermore, the design process is subject to a set of eight constraints in order to meet the required criteria. The spring has three design variables (Eq. (29)): mean coil diameter (D), wire diameter (d), and number of active coils (Na). The parameters used in the helical coil spring design problem are listed in Table 12. Moreover, the optimization findings in Table 13 show that the proposed MOPO algorithm produces more reliable solutions with lower spring volume and higher strain energy than the other competitors; additionally, the design variables of the spring designs generated by the proposed MOPO are near-optimal and could be utilized in automotive applications. Based on their priorities, decision-makers can choose the best spring design from the Pareto solutions offered by the proposed MOPO for the automobile industry.

where d indicates the wire diameter, D indicates the coil diameter, and Na indicates the number of coil turns. The spring volume (minimization goal) is given in Eq. (30), while the strain energy (maximization goal) is given in Eq. (31) as follows:

where \(f_{\max }\) indicates the maximum load, while \(\delta\) denotes the deflection of the spring, given in Eq. (32) as follows:

The design process is subject to eight constraints as follows:

Observations from the experiments

In summary, the experiments yield the following observations:

1. The proposed MOPO, being an optimization method, presents certain advantages:

- The experimental findings showed that the MOPO has great coverage and convergence abilities (see Tables 2, 3, and 4). The MOPO algorithm inherits PO’s high convergence, whilst the archive and non-dominated sorting maintenance methods contribute to its high coverage.

- The main mechanisms that guarantee convergence in PO and MOPO are the diversity of four distinct attitudes (foraging, remaining, communicating, and fear of strangers) and the use of the Levy distribution.

- Furthermore, a comparison is made between the archive solutions and the NDSs. This method improves the coverage rate of the Pareto optimal front solutions (see Fig. 12), since solutions in densely populated regions of the archive are more likely to be discarded.

2. The proposed MOPO demonstrates superior performance and effective convergence towards the true Pareto optimal sets compared to other MO algorithms evaluated. However, the MOPO has some limitations, as outlined below:

- Being based on the PO, MOPO incurs relatively high computational costs. This is due to PO’s requirement to mathematically simulate the behavior of the Pyrrhura Molinae parrot, including foraging, remaining, communicating, and responding to threats, which necessitates complex calculations at each cycle with time complexity of up to \(O(S_{in}^2 \cdot \log (S_{in}))\).

- MOPO may occasionally become trapped in local optima because of the mechanism PO uses to accelerate convergence (see Table 3 for MMF14_a, MMF11_I, and MMF13_I).

- The MOPO method is primarily intended for MOPs with two or three objectives. When the number of objectives rises, it becomes less effective, because a greater percentage of the solutions become non-dominated and quickly fill the archive. As a result, MOPO works well on problems with fewer than four objectives.

- Furthermore, MOPO is specifically tailored for continuous variable optimization problems, as the PO algorithm is designed to address such problems exclusively.

3. The similarities and differences of the proposed MOPO with the contemporary MO algorithms are as follows:

- The proposed MOPO is similar to previous Pareto dominance-based algorithms, such as the NSGA-II, in that it uses the non-dominated sorting mechanism to get the Pareto solution for the population.

- The proposed MOPO uses the archive memory to preserve the NDSs discovered throughout the optimization process, just like other archive-based multi-objective algorithms.

- The proposed MOPO uses the original PO updating method to investigate additional NDSs with high convergence and coverage rates, which improves the MOPO performance over previous similar multi-objective algorithms.

Limitations and advantages of the proposed MOPO

This section specifies some of the advantages and weaknesses of the proposed MOPO method. The advantages listed below demonstrate how effective the MOPO algorithm is at resolving different challenging optimization problems:

- The proposed MOPO demonstrates strong convergence and coverage abilities, leveraging the diverse behavioral strategies (foraging, remaining, communicating, and fear of strangers) inspired by the Parrot Optimizer (PO). This contributes to maintaining a balanced exploration-exploitation trade-off.

- The integration of an outward archive and non-dominated sorting improves the maintenance of Pareto optimal solutions, enhancing performance metrics such as PSP, HV, and IGDX.

- MOPO provides robust performance across a diverse set of constrained and unconstrained engineering design challenges, as shown in Tables 2, 3 and 4.

The proposed MOPO demonstrates strong performance across various benchmarks and real-world problems. However, like all algorithms, it is subject to limitations and areas where future research can contribute to further advancements. Some key limitations and opportunities for improvement include:

- Most current algorithms, including MOPO, are tailored for a specific dimensionality of objectives. Designing a unified framework capable of effectively handling a dynamic range of objectives remains an open research challenge.

- Integrating decision-maker preferences into the evolutionary process enhances practical applicability. Future extensions of MOPO could incorporate interactive mechanisms to adaptively refine solutions based on user-defined trade-offs.

- Like other MOEAs, MOPO requires careful parameter tuning, which can be computationally expensive. Developing adaptive parameter control strategies to improve robustness and reduce computational costs is a critical direction for improvement.

Conclusion and future directions

This paper introduced MOPO, a multi-objective optimization algorithm inspired by the Parrot Optimizer (PO). MOPO extends PO’s capabilities by incorporating an external archive and applying Pareto dominance theory to refine its optimization strategy. We benchmarked MOPO against seven leading multi-objective optimization algorithms: MOPSO, NSGA-II, MOGWO, MOWOA, MOSMA, IMOMRFO, and MOGTO. The evaluation used the CEC’2020 benchmark suite, which contains 24 test functions with diverse PF and PS characteristics. MOPO’s performance was assessed using multiple indicators, namely Pareto Set Proximity (PSP), Inverted Generational Distance in Decision Space (IGDX), and Hypervolume (HV). Additionally, MOPO’s effectiveness was demonstrated through extensive testing on eight popular constrained and unconstrained test problems and four engineering design problems using metrics such as Generational Distance (GD), spacing, and maximum spread. Moreover, the real-world multi-objective optimization of helical coil springs for automotive applications was tackled to demonstrate the reliability of the proposed MOPO in solving practical problems. Our findings show that MOPO generates high-quality solutions and closely approaches the true PF. The algorithm’s ability to retain the best candidates among the Pareto optimal solutions is enhanced by integrating a CD operator and an archive of NDSs. This design supports both effective exploration and exploitation throughout the optimization process.
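
As a reminder of how a CD operator can keep a bounded archive well spread, the sketch below uses the standard crowding-distance formulation from NSGA-II. It is an assumption that MOPO's operator matches this formulation in every detail; the function names `crowding_distance` and `truncate_archive` are hypothetical.

```python
import math
from typing import List, Sequence


def crowding_distance(front: Sequence[Sequence[float]]) -> List[float]:
    """NSGA-II style crowding distance for each member of a non-dominated front."""
    n = len(front)
    if n == 0:
        return []
    distance = [0.0] * n
    for m in range(len(front[0])):  # loop over objectives
        order = sorted(range(n), key=lambda i: front[i][m])
        f_min, f_max = front[order[0]][m], front[order[-1]][m]
        # Boundary solutions are always preserved.
        distance[order[0]] = distance[order[-1]] = math.inf
        if f_max == f_min:
            continue  # objective is constant on this front
        for k in range(1, n - 1):
            gap = front[order[k + 1]][m] - front[order[k - 1]][m]
            distance[order[k]] += gap / (f_max - f_min)
    return distance


def truncate_archive(front: Sequence[Sequence[float]], capacity: int) -> list:
    """Keep the `capacity` least crowded members, preserving input order."""
    cd = crowding_distance(front)
    keep = sorted(range(len(front)), key=lambda i: cd[i], reverse=True)[:capacity]
    return [front[i] for i in sorted(keep)]


# Usage: the two middle points are close together, so one of them is dropped.
front = [(0.0, 4.0), (1.0, 3.0), (1.2, 2.8), (4.0, 0.0)]
print(truncate_archive(front, 3))
```

Removing the most crowded members first preserves the extremes of the front and favors evenly spaced solutions, which is what the spacing and maximum spread metrics reward.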

Looking ahead, MOPO shows significant potential for application in machine learning domains, such as feature selection, parameter optimization, and data preprocessing. Future work will further refine MOPO and explore its applicability to additional complex real-world problems.

Data availability

The datasets used in the current study are available in ref. 116 and at https://www3.ntu.edu.sg/home/epnsugan/index_files/CEC2020/CEC2020-3.htm.

Abbreviations

- PO: Parrot optimizer

- MOPO: Multi-objective parrot optimizer

- IMOMRFO: Improved multi-objective manta-ray foraging optimization

- MOGTO: Multi-objective gorilla troops optimizer

- MOGWO: Multi-objective grey wolf optimizer

- MOWOA: Multi-objective whale optimization algorithm

- MOSMA: Multi-objective slime mold algorithm

- MOPSO: Multi-objective particle swarm optimization

- NSGA-II: Non-dominated sorting genetic algorithm II

- MOEA: Multi-objective evolutionary algorithm

- CEC: Congress on evolutionary computation

- PSP: Pareto set proximity

- IGDX: Inverted generational distance in decision space

- HV: Hypervolume

- GD: Generational distance

- MH: Metaheuristic

- MO: Multi-objective

- MOP: Multi-objective problem

- MOO: Multi-objective optimization

- PF: Pareto front

- PS: Pareto set

- NDS: Non-dominated solution

- NDR: Non-dominated ranking

- CD: Crowding distance

References

Meng, Z., Li, G., Wang, X., Sait, S. M. & Yıldız, A. R. A comparative study of metaheuristic algorithms for reliability-based design optimization problems. Arch. Comput. Methods Eng. 28, 1853–1869 (2021).

Stork, J., Eiben, A. E. & Bartz-Beielstein, T. A new taxonomy of global optimization algorithms. Nat. Comput. 21(2), 219–242 (2022).

Houssein, E. H., Oliva, D., Samee, N. A., Mahmoud, N. F. & Emam, M. M. Liver cancer algorithm: A novel bio-inspired optimizer. Comput. Biol. Med. 165, 107389 (2023).

Charles, A. & Parks, G. Application of differential evolution algorithms to multi-objective optimization problems in mixed-oxide fuel assembly design. Ann. Nucl. Energy 127, 165–177 (2019).

Judge, M. A., Manzoor, A., Maple, C., Rodrigues, J. J. & ulIslam, S. Price-based demand response for household load management with interval uncertainty. Energy Rep. 7, 8493–8504 (2021).

Talbi, E.-G. A taxonomy of hybrid metaheuristics. J. Heuristics 8, 541–564 (2002).

Swan, J. et al. Metaheuristics “in the large”. Eur. J. Oper. Res. 297(2), 393–406 (2022).

Emam, M. M., El-Sattar, H. A., Houssein, E. H. & Kamel, S. Modified orca predation algorithm: developments and perspectives on global optimization and hybrid energy systems. Neural Comput. Appl. 35(20), 15051–15073 (2023).

Mirjalili, S., Jangir, P. & Saremi, S. Multi-objective ant lion optimizer: A multi-objective optimization algorithm for solving engineering problems. Appl. Intell. 46(1), 79–95 (2017).

Emam, M. M., Houssein, E. H., Samee, N. A., Alohali, M. A. & Hosney, M. E. Breast cancer diagnosis using optimized deep convolutional neural network based on transfer learning technique and improved coati optimization algorithm. Expert Syst. Appl. 255, 124581 (2024).

Kalita, K. et al. Multi-objective exponential distribution optimizer (MOEDO): A novel math-inspired multi-objective algorithm for global optimization and real-world engineering design problems. Sci. Rep. 14(1), 1816 (2024).

Cheng, Z. et al. Hybrid firefly algorithm with grouping attraction for constrained optimization problem. Knowl.-Based Syst. 220, 106937 (2021).

Sun, K. et al. Hybrid genetic algorithm with variable neighborhood search for flexible job shop scheduling problem in a machining system. Expert Syst. Appl. 215, 119359 (2023).

Hosney, M. E. et al. Efficient bladder cancer diagnosis using an improved rime algorithm with orthogonal learning. Comput. Biol. Med. 182, 109175 (2024).

Emam, M. M., Houssein, E. H., Samee, N. A., Alkhalifa, A. K. & Hosney, M. E. Optimizing cancer diagnosis: A hybrid approach of genetic operators and sinh cosh optimizer for tumor identification and feature gene selection. Comput. Biol. Med. 180, 108984 (2024).

Li, M., Tang, J., Zeng, J. & Huang, H. A kriging-based optimization method for meeting point locations to enhance flex-route transit services. Transportmet. B: Transp. Dyn. 11(1), 1281–1310 (2023).

Priyadarshi, N., Bhaskar, M. S. & Almakhles, D. A novel hybrid whale optimization algorithm differential evolution algorithm-based maximum power point tracking employed wind energy conversion systems for water pumping applications: Practical realization. IEEE Trans. Industr. Electron. 71(2), 1641–1652 (2023).

Sun, M. et al. Double enhanced solution quality boosted rime algorithm with crisscross operations for breast cancer image segmentation. J. Bionic Eng. https://doi.org/10.1007/s42235-024-00590-8 (2024).

Emam, M. M., Samee, N. A., Jamjoom, M. M. & Houssein, E. H. Optimized deep learning architecture for brain tumor classification using improved hunger games search algorithm. Comput. Biol. Med. 160, 106966 (2023).

Houssein, E. H., Oliva, D., Samee, N. A., Mahmoud, N. F. & Emam, M. M. Liver cancer algorithm: A novel bio-inspired optimizer. Comput. Biol. Med. 165, 107389 (2023).

Lian, J. et al. Parrot optimizer: Algorithm and applications to medical problems. Comput. Biol. Med. 172, 108064 (2024).

Li, S., Chen, H., Wang, M., Heidari, A. A. & Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Futur. Gener. Comput. Syst. 111, 300–323 (2020).

Hamadneh, T. et al. On the application of potter optimization algorithm for solving supply chain management application. Int. J. Intell. Eng. Syst. https://doi.org/10.22266/ijies2024.1031.09 (2024).

Alomari, S. et al. Carpet weaver optimization: A novel simple and effective human-inspired metaheuristic algorithm. Int. J. Intell. Eng. Syst. https://doi.org/10.22266/ijies2024.0831.18 (2024).

Hamadneh, T. et al. Fossa optimization algorithm: A new bio-inspired metaheuristic algorithm for engineering applications. Int. J. Intell. Eng. Syst. 17, 1038–1045 (2024).

Hamadneh, T. et al. Addax optimization algorithm: A novel nature-inspired optimizer for solving engineering applications. Int. J. Intell. Eng. Syst. https://doi.org/10.22266/ijies2024.0630.57 (2024).

Kaabneh, K. et al. Dollmaker optimization algorithm: A novel human-inspired optimizer for solving optimization problems. Int. J. Intell. Eng. Syst. https://doi.org/10.22266/ijies2024.0630.63 (2024).

Hamadneh, T. et al. Sculptor optimization algorithm: A new human-inspired metaheuristic algorithm for solving optimization problems. Int. J. Intell. Eng. Syst. https://doi.org/10.22266/ijies2024.0831.43 (2024).

Hamadneh, T. et al. Sales training based optimization: A new human-inspired metaheuristic approach for supply chain management. Int. J. Intell. Eng. Syst. https://doi.org/10.22266/ijies2024.1231.96 (2024).

Hamadneh, T. et al. Orangutan optimization algorithm: An innovative bio-inspired metaheuristic approach for solving engineering optimization problems. Int. J. Intell. Eng. Syst. 18(1), 45–58. https://doi.org/10.22266/ijies2025.0229.05 (2025).

Hamadneh, T. et al. On the application of tailor optimization algorithm for solving real-world optimization application. Int. J. Intell. Eng. Syst. 1, 10. https://doi.org/10.22266/ijies2025.0229.01 (2025).

Hamadneh, T. et al. Spider-tailed horned viper optimization: An effective bio-inspired metaheuristic algorithm for solving engineering applications. Int. J. Intell. Eng. Syst. 18(1), 29–41 (2025).

Zhou, A. et al. Multiobjective evolutionary algorithms: A survey of the state of the art. Swarm Evol. Comput. 1(1), 32–49 (2011).

Coello, C. A. C. et al. Evolutionary Algorithms for Solving Multi-objective Problems Vol. 5 (Springer, 2007).

Pradhan, P. M. & Panda, G. Solving multiobjective problems using cat swarm optimization. Expert Syst. Appl. 39(3), 2956–2964 (2012).

Sharma, S. & Kumar, V. A comprehensive review on multi-objective optimization techniques: Past, present and future. Arch. Comput. Methods Eng. 29(7), 5605–5633 (2022).

Khalid, A. M., Hamza, H. M., Mirjalili, S. & Hosny, K. M. Mocovidoa: A novel multi-objective coronavirus disease optimization algorithm for solving multi-objective optimization problems. Neural Comput. Appl. 35(23), 17319–17347 (2023).

Huy, T. H. B. et al. Multi-objective search group algorithm for engineering design problems. Appl. Soft Comput. 126, 109287 (2022).

Li, Y.-J. & Li, H.-N. Interactive evolutionary multi-objective optimization and decision-making on life-cycle seismic design of bridge. Adv. Struct. Eng. 21(15), 2227–2240 (2018).

Xu, Z. & Zhang, K. Multiobjective multifactorial immune algorithm for multiobjective multitask optimization problems. Appl. Soft Comput. 107, 107399 (2021).

Yacoubi, S., Manita, G., Chhabra, A., Korbaa, O. & Mirjalili, S. A multi-objective chaos game optimization algorithm based on decomposition and random learning mechanisms for numerical optimization. Appl. Soft Comput. 144, 110525 (2023).

Chopard, B. & Tomassini, M. Performance and limitations of metaheuristics. Introd. Metaheuristics Optim. https://doi.org/10.1007/978-3-319-93073-2_11 (2018).

Lian, J. et al. Parrot optimizer: Algorithm and applications to medical problems. Comput. Biol. Med. 172, 108064 (2024).

Coello, C. A. C., Pulido, G. T. & Lechuga, M. S. Handling multiple objectives with particle swarm optimization. IEEE Trans. Evol. Comput. 8(3), 256–279 (2004).

Deb, K., Pratap, A., Agarwal, S. & Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 6(2), 182–197 (2002).

Mirjalili, S., Saremi, S., Mirjalili, S. M. & Coelho, L. D. S. Multi-objective grey wolf optimizer: A novel algorithm for multi-criterion optimization. Expert Syst. Appl. 47, 106–119 (2016).

Kumawat, I. R., Nanda, S. J. & Maddila, R. K. Multi-objective whale optimization. in TENCON 2017 - 2017 IEEE Region 10 Conference 2747–2752 (IEEE, 2017).

Houssein, E. H. et al. An efficient slime mould algorithm for solving multi-objective optimization problems. Expert Syst. Appl. 187, 115870 (2022).

Kahraman, H. T., Akbel, M. & Duman, S. Optimization of optimal power flow problem using multi-objective manta ray foraging optimizer. Appl. Soft Comput. 116, 108334 (2022).

Houssein, E. H., Saad, M. R., Ali, A. A. & Shaban, H. An efficient multi-objective gorilla troops optimizer for minimizing energy consumption of large-scale wireless sensor networks. Expert Syst. Appl. 212, 118827 (2023).

Fonseca, C. M. & Fleming, P. J. An overview of evolutionary algorithms in multiobjective optimization. Evol. Comput. 3(1), 1–16 (1995).

Nebro, A. J., Durillo, J. J. & Coello, C. A. C. Analysis of leader selection strategies in a multi-objective particle swarm optimizer. in 2013 IEEE Congress on Evolutionary Computation 3153–3160 (IEEE, 2013).

Lechuga, M. MOPSO: A proposal for multiple objective particle swarm optimization. in Proceedings of the 2002 Congress on Evolutionary Computation, Part of the 2002 IEEE World Congress on Computational Intelligence 1051–1056 (2002).

Babu, B. & Anbarasu, B. Multi-objective differential evolution (mode): An evolutionary algorithm for multi-objective optimization problems (moops). in Proceedings of international symposium and 58th annual session of IIChE (Citeseer, 2005).

Gunantara, N. A review of multi-objective optimization: Methods and its applications. Cogent Eng. 5(1), 1502242 (2018).

Bhesdadiya, R. H., Trivedi, I. N., Jangir, P., Jangir, N. & Kumar, A. An NSGA-III algorithm for solving multi-objective economic/environmental dispatch problem. Cogent Eng. 3(1), 1269383 (2016).

Ali, M., Siarry, P. & Pant, M. An efficient differential evolution based algorithm for solving multi-objective optimization problems. Eur. J. Oper. Res. 217(2), 404–416 (2012).

Mirjalili, S. Dragonfly algorithm: a new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 27(4), 1053–1073 (2016).

Manzoor, A. et al. A priori multiobjective self-adaptive multi-population based jaya algorithm to optimize ders operations and electrical tasks. IEEE Access 8, 181163–181175 (2020).