Abstract

Generally, music genres have not new established framework, since they are often determined by the composer’s background by cultural or historical impact and geographical origin. In this work, a new methodology is presented based on deep learning and metaheuristic algorithms to enhance the performance in music style categorization. The model consists of two main parts: a pre-trained model, a ZFNet, through which high level features are extracted from audio signals and a ResNeXt model for classification. A fractional-order-based variant of the Grey Lag Goose Optimization (FGLGO) algorithm is used to optimize the parameters of ResNeXt to boost the performance of the model. A dual-path recurrent network is employed for real-time music generation and evaluate the model on two benchmark datasets, ISMIR2004 and extended Ballroom, compared to the state-of-the-art models included CNN, PRCNN, BiLSTM and BiRNN. Experimental results show that with accuracy rates of 0.918 on the extended Ballroom dataset and 0.954 on the ISMIR2004 dataset, the proposed model improves accuracy and efficiency incrementally over existing models.

Similar content being viewed by others

Introduction

Music may be categorized into many genres using various methods, such as popular music or secular music. The subjective and problematic character of music’s creative essence frequently leads to overlapping genres and subjective categorization1. Conversely, genre pertains to distinct classifications of art2. Presently, there is a lack of agreement or a thorough framework regarding music genres3. Automatically classifying music works as belonging to certain genres is what genre identification is all about4. Music retrieval, music education, analysis, and recommendations are just a few of the many real-world uses for this kind of work5. Genre identification in music, however, is no easy task since music is both nuanced and subjective, changing over time and depending on the listener6.

A kind of hybrid method was suggested by Yang et al.7 called PRCNN (Parallel Recurrent Convolutional Neural Network). The focus of the Bi-RNN blocks and parallel Recurrent CNN is extracting spatial features and temporal directives of the frame. The data was classified by feeding syncretic vectors into the function of Softmax. Regarding the GTZAN the performance of ResNet-18, AlexNet, PRCNN, and VGG-11 were 87.6%, 88.8%, 92.0%, and 88.7%, respectively. Regarding the dataset of Extended Ballroom, the performance of the VGG-11, ResNet-18, PRCNN, and AlexNet were 93.4%, 93.38%, 92.5%, and 92.0, respectively. Eventually, the study revealed the dominance of the suggested model over others.

Prabhakar and Lee8 suggested five techniques for categorizing distinct genres of music. The techniques were classifier of deep learning along with BiLSTM (Bidirectional Long Short-Term Memory) as BAG DLM, TSM-RA (Tangent Space Mapping on the basis of Riemannian Alliance), classification by the use of Transfer SVM (Support Vector Machine), classification employing sequential machine learning examination along with SDA (Stacked Denoising Autoencoder) classifier, and ELNSC-WVG (Elastic Net Sparse Classifier on the basis of Weighted Visibility Graph). Three music datasets were involved, including ISMIR 2004, GTZAN, and MagnaTagATune. It was demonstrated that categorization’s value of accuracy was 93.51%that was gained while employing the model of BAG.

A new model was proposed by Yu et al.9 that attention mechanism is integrated on the basis of Bidirectional RNN (Recurrent Neural Network). This model considers the variations in spectrums. The present study has employed 2 models that are on the basis of attention mechanism: serial and parallelized attention. It was discovered in the current research that superior outcomes could be obtained by PLA (Parallelized Linear Attention). Considering GTZAN, it was revealed that SLA (Serial Linear Attention) and PLA could achieve accuracy value of 75.8% and 76.7%, respectively. In addition, SLA (Serial Linear Attention) and PLA could, in turn, achieve accuracy value of 90.6% and 90.7 considering Extended Ballroom.

Liu et al.10 created a CNN (Convolutional Neural Network) that considered multi-scale regularity of time. This was achieved by incorporating appropriate semantic attributes, which were decision-maker. The aim was to distinguish between various music genres. There are some objective datasets based on which the results were assessed. Those datasets are Extended Ballroom, GTZAN, and Ballroom. Subsequently, the categorization accuracy of Extended Ballroom, GTZAN, and Ballroom were 97.2%, 93.9%, and 96.7%. the dimension of the trained model turned out to be 0.18 M, which was employed in various tests.

Cheng et al.11 desired to enhance the efficacy of the users while looking for various genres of music. To do so, a CNN (Convolutional Neural Network) was integrated with RNN (Recurrent Neural Network) structure for implementing a model of music genre categorization. During the pre-training stage, the MFC (Mel-Frequency Cepstrum) was utilized as sound instances’ vector of feature. Librosa was utilized for converting the original files of audio into MFC type. The purpose was achieving pattern of sensory that was near hearing ability of human beings. The model was trained by CRNN approach and MFCC (Mel-Frequency Cepstral Coefficients) that its value of accuracy was 43%. Since the accuracy value is really little, there should be some more studies in this field and employ some novel algorithms for enhancing the model’s efficacy and efficiency.

In order to overcome the limitations of existing models, a novel approach is proposed that combines deep learning techniques with a metaheuristic algorithm. This innovative strategy aims to achieve superior precision and effectiveness in the identification of music genres. The main objective is to use the strengths of both deep learning and metaheuristic optimization to develop a robust and powerful model for music genre identification.

The contribution can be summarized in three key aspects. Firstly, a pre-trained ZFNet is utilized to extract high-level features from the audio signals. This simplifies the feature extraction process and improves the quality of feature representation. Then, a cutting-edge deep neural network called ResNeXt is employed for the classification task. This network is capable of handling large-scale and fine-grained classification problems with remarkable efficiency and accuracy. Finally, a fractional-order-based version of the Grey lag Goose Optimization (FGLGO) algorithm is incorporated to optimize the parameters of ResNeXt. This technique enhances the optimization process’s exploration and exploitation capacities, preventing the model from sticking in local optima and premature convergence.

The study uses a pre-trained ZFNet and a ResNeXt model for feature extraction and classification respectively, which is perfect for this caliber of audio genre identification. ZFNet which is an improved version of AlexNet as it well serves for the training and validating of high-level features from the spectrograms with improved interpretability and accuracy. Additionally, by utilizing smaller filter sizes and strides in the initial convolutional layers, it enables the capture of finer-grained details from audio signals, a key factor in differentiating subtle distinctions across various music genres. Furthermore, the deconvolutional layers of ZFNet offer insights into the feature extraction process, allowing for insight into how the model interprets audio properties. For ZFNet, it is a particularly well-suited model for extracting spectral features such as spectral crest, spectral entropy, and pitch that are most relevant for the genre.

For the classification task, ResNeXt model that has a better performance for large-scale and fine-grained classifications was chosen. ResNeXt proposes a novel dimension that can be added to existing residual networks called “cardinality”, or the number of parallel paths within a residual block. This architecture increases the ability of the model to learn varying and intricate representations without adding excessive computational overhead ResNeXt outperforms traditional CNNs and RNNs in terms of accuracy and undoubtedly in terms of efficiency, making it a good fit for music genre classification task, wherein the difference between genres can be highly subtle. Moreover, the residual connections in ResNeXt help prevent the vanishing gradient issue from occurring, allowing for deeper networks to be effectively trained, which ensures robustness.

Research data

Our proposed method for music genre detection was evaluated using two datasets, namely extended Ballroom and ISMIR2004, which are explained in details in the following.

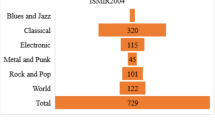

ISMIR2004

The ISMIR is a standard dataset that was collected from the 5th International Conference on Music Info.

rmation Retrieval that was held in 2004 which aimed to evaluate different approaches and methods for music genre classification using a common dataset and evaluation metrics12. The dataset consisted of 1000 audio excerpts of 30 s each, evenly distributed over 6 genres: blues and jazz (26 samples), classical (320 models), world (122 models), metal and punk (45 models), electronic (115 models), and rock and pop (101 models). Table evaluation metrics were accuracy, precision, recall, and F-measure. The dataset can be accessed through the provided link:

https://ismir2004.ismir.net/genre_contest/index.html#genre.

Extended ballroom

The extended Ballroom dataset is a renowned Ballroom dataset’s upgraded iteration. It encompasses a wider range of tracks compared to its predecessor, including a comprehensive list of track repetitions such as exact duplicates and karaoke versions. Consequently, this dataset expands the potential applications in this domain. The creation of the Extended Ballroom dataset was necessitated by several factors: the relatively limited number of tracks, subpar audio quality, and the continued availability of the original website, which still allows users to pay attention to thirty second quotations while providing tempo and genre annotations13. To compile the Extended Ballroom dataset, all audio excerpts from the website were extracted, accompanied by all available meta-data. Furthermore, various types of repetitions among the 4,180 downloaded tracks were semi-automatically annotated. This Extended Ballroom dataset offers numerous advantages, including improved audio quality, six times the number of tracks, the addition of 5 novel rhythm annotations, and classes for diverse kinds of repetitions.

The dataset can be accessed through the provided link:

http://anasynth.ircam.fr/home/media/ExtendedBallroom.

Table 1 tabulates various genres and the number of samples for ISMIR2004 and extended Ballroom.

This table presents a complementarity between the datasets either in its nature (a wider variety of genres) or in its size (the sheer size of extended Ballroom compared to ISMIR2004). This essentially as this gives us an idea about the composition of the dataset and how well can the model generalize over different genres and dataset sizes.

We did some improvements to mitigate risks of overfitting during model development and evaluation. We used data augmentation techniques (e.g., pitch shift, time stretch, noise addition) to artificially increase the number of data and to train for better generalization. Second, we applied regularization techniques, such as dropout and weight decay, to avoid overfitting to particular features by the model.

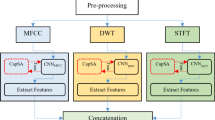

Audio property extracting and normalization

As part of our research into music genre classification, the features that capture the spirit of different genres are extracted by carefully analyzing the audio signal14. The next classification assignment will be well-grounded on the extensive collection of different data that painstakingly retrieved. Figure (1) defines is a block schematic of the feature extraction technology that is suggested.

In this layer, one starts with raw audio input and transforms it through several configurations (pre-processing, spectral analysis, compute features) to extract meaningful patterns. Instead, the entire audio signals are transformed into features like spectral crest, spectral entropy, spectral flux, pitch, and harmonic ratio. These features are then standardized for uniformity and compatibility for further analysis. The resulting set of characteristics is the input of the classification model in the process. Taming the audio data is an essential step; the model needs to prioritize particular characteristics, and this progression is of temporal nature. Using the schematic above, we present, in a visually appealing manner, our feature extraction pipeline, their integration, and optimal results. In the following, the required explanation about the utilized features is explained in details.

Audio features are chosen for their capacity to reflect unique and significant attributes of audio signals pertinent to genre classification15. Spectral crest quantifies the sharpness of peak of a spectrum, making it a good candidate for genre classification for those genres with harmonic structures, such as classical or jazz. The spectral entropy is a measure of chaos in the spectrum of the sound wave which can be used to discriminate between genres defined by their tonal complexity (e.g., electronic versus acoustic music). Spectral flux quantifies the amount of spectral change between consecutive time frames in the audio signal over time; this component captures dynamic aspects of the audio like rhythm and transitions between sound segments, enabling the distinguishability of sound genres, e.g., rock or dance music.

This selection was based on the known usefulness of these features in previous studies and represent important aspects of music (timbre, rhythm, harmony) while being computationally cheap and interpretable by other algorithms16. These characteristics are then utilized by the proposed model to successfully learn the nuances of each genre for increased accurate robust classification. Their relevance and selection criteria are an integral part of the model’s design and help to justify their usage in the feature extraction process.

Spectral crest

Spectral crest quantifies the degree of sharpness or prominence of a spectrum’s peak. This feature refers to the ratio between the highest amount in the spectrum and the average amount of the spectrum. A high spectral crest signifies the presence of a few numbers of prominent components in the spectrum, while a low spectral crest suggests that the spectrum is relatively even or noisy.

The mathematical expression for spectral crest is:

The magnitude spectrum of the signal is represented by \(\:{S}_{k}\), and the frequency range of interest is denoted as \(\:[{b}_{1},{b}_{2}]\).

The spectral crest is a spectral descriptor frequently employed for audio feature extraction. It is capable of capturing some elements of the sound’s timbre, tone, and musical style. For instance, a pure tone has a spectral crest value of 1, but white noise has a spectral crest value that is about 0. Figure (2) illustrates an instance of feature extraction from a sound wave by spectral crest, precisely obtained from a Classical sound.

The spectral crest, defined as the ratio of maximum value of the spectrum to the mean value of the spectrum, gives an idea of the concentrating or dispersing of spectral energy. Extracted spectral crest feature From the above spectral crest example, we note that the Classical music sound wave contains distinguishable features for Classical music as compared to other genres, such as the directly visible harmonic structure in the lower frequencies and complex harmonics throughout. Here, the spectral crest provides an intuitive impression about how much key audio features are extracted, and a visual representation is given to justify the step from the raw signal to the processed feature for the respective genre classification task.

Spectral entropy

Spectral entropy quantifies the level of disorder or instability present in a spectrum. The term refers to the result obtained by multiplying the normalized spectrum by its logarithm and then taking the negative sum of the product. A high spectral entropy signifies a spectrum that is more uniform or random, whereas a low spectral entropy signifies a spectrum that is more concentrated or organized. The mathematical expression for spectral entropy is:

\(\:{p}_{k}\) is the spectrum of the signal that has been normalized, and \(\:\left[{b}_{1},{b}_{2}\right]\) is the specific frequency range that is of interest. The normalization is achieved by performing a division operation on the spectrum, dividing it by the total of its values, i.e., \(\:{\sum\:}_{k\in\:\left[{b}_{1},{b}_{2}\right]}{p}_{k}=1\).

One spectral descriptor that can be utilized for audio feature extraction is spectral entropy. Some of a sound’s depth, variety, and richness can be captured by it. As an illustration, the spectral entropy of a pure tone is zero, whereas that of white noise is around one. In terms of spectral entropy, a flute has a lower value than a piano. Figure (3) illustrates an instance of feature extraction from a sound wave by spectral entropy, precisely obtained from a Classical sound.

Spectral entropy gives us a way of measuring the order of the spectral representation, by taking the negative sum of the spectrum normalized to the total energy and multiplying that by the logarithm of a normalized spectrum. Here is an example of extracted spectral entropy feature for the Classical music sound wave which keeps track of the complexity and richness of sound. This shows the capacity of spectral entropy in differentiating between major music features helping to compare the original signal line with the feature line and the importance of it for any music genre recognition/tasks.

Spectral flux

Spectral flux is a measurement of the way a spectrum varies as time passes. It is computed as the squared difference between the normalized spectra of 2 successive frames. A high spectral flux indicates fast spectrum change, whereas a low spectral flux indicates greater spectrum stability. The mathematical formula for spectral flux is:

where, \(\:{p}_{k}^{\left(n\right)}\) and \(\:{p}_{k}^{\left(n-1\right)}\) represent the normalized spectra of the current and previous frames, respectively.

Spectral flux is a spectral descriptor that has the potential to be utilized in audio feature extraction. It has the ability to capture various elements of a sound’s dynamics, rhythm, and onset. For instance, a sound exhibiting a high spectral flux could suggest an abrupt alteration in pitch, timbre, or volume, like a drum strike, a chord progression, or a note attack. Conversely, a sound with a low spectral flux might indicate a consistent or prolonged sound, such as a drone, a hum, or a note decay.

Figure (4) illustrates an instance of feature extraction from a sound wave by spectral flux, precisely obtained from a Classical sound.

Spectral flux measures the rate of spectral change, computed as the squared difference between two successive frames normalized by the spectra of both frame. The extracted spectral flux feature in this example captures the dynamic fluctuations and transitions, emphasizing small-time features that classify its rhythmic and structural variation in the Classical music sound wave. Here it’s clear to see the outcome and effectiveness of spectral flux in characterizing the temporal components of audio signals and in turn is used widely to determine and describe temporal aspects of sound, in particular to further music genre classification/analysis.

Pitch

The pitch of a sound is determined by its perceived fundamental frequency, which is measured using various techniques such as spectral analysis, autocorrelation, and cepstrum. These methods help extract the pitch from an audio signal by analyzing its harmonic structure. One commonly used algorithm for estimating pitch is the YIN algorithm, which identifies the period of the signal that minimizes the difference function. The pitch is then calculated as the inverse of this period, indicating the highness or lowness of the sound.

where, \(\:{F}_{s}\) specifies the frequency of the sample, and \(\:{R}_{x}\left(\tau\:\right)\) describes the signal autocorrelation function and is achieved as follows:

where, \(\:\tau\:\) describes the delayed samples, and \(\:x\left(n\right)\) and \(\:x(n+\tau\:)\) determine the signal value at the current time and the next time point, respectively. Figure (5) illustrates an instance of feature extraction from a sound wave by pitch, precisely obtained from a Classical sound.

Common methods for calculating pitch include spectral analysis and autocorrelation being one popular method used to approximate the period of the signal to yield pitch as its inverse. Here, the extracted pitch feature from the Classical music sound wave successfully reflects the harmonic structure and musicality, the tonal and melodic characteristics. A useful thing about pitch being visually represented in this way is that the clear contrast between processed feature and raw signal allows us to see the importance of this quality in determining audio traits for both tasks like classifying music, as well as analysis.

Harmonic ratio

The harmonic ratio quantifies the harmonic characteristics of a sound by comparing the energy of the harmonic components to the overall signal energy. A greater harmonic ratio suggests a more melodic or musical sound, whereas a lower harmonic ratio suggests a noisier or less harmonious sound. The mathematical expression for the harmonic ratio is as follows:

where, \(\:{S}_{k}\) describes the signal’s magnitude spectrum, \(\:N\) specifies the entire number of frequency bins, and \(\:{N}_{h}\) represents the bins’ number that are the fundamental frequency’s harmonics. The fundamental frequency refers to the lowest frequency component of a periodic signal, while the harmonics are the integer multiples of the fundamental frequency.

The harmonic ratio serves as a spectral descriptor that enables the extraction of audio features. It encompasses various aspects of sound, including pitch, timbre, and quality. A pure tone possesses a harmonic ratio of 1, whereas white noise exhibits a harmonic ratio that approaches 0. Instruments with a consistent and distinct pitch, like the flute, possess a higher harmonic ratio compared to instruments with intricate and fluctuating pitches, like the guitar. Similarly, musical genres abundant in melody and harmony, such as classical music, tend to have a higher harmonic ratio than genres characterized by noise and distortion, like metal17. Figure (6) illustrates an instance of feature extraction from a sound wave by Harmonic ratio, precisely obtained from a Classical sound.

This ratio provides an overview of how melodic or noisy the sound is. It is calculated by dividing the sum of the magnitudes at harmonic frequencies over the sum of the magnitudes across all frequencies. Analogous to discussed classical music example, extracted harmonic ratio feature captures harmonic richness and tonality through structured and musical nature of classical music sound wave. The audio Harmonic ratio visualization is an example of the Harmonic ratio’s usefulness in identifying informative audio characteristics; it emphasizes the difference between the original incoming audio signal and the transformed feature and it’s clear that’s a useful feature to have on our music genre classification and analysis pipeline.

Signal normalization

Normalization is a method employed to scale the audio features by transforming their values into a range that extends from 0 to 1. This is accomplished by calculating the difference between every amount and the min value of the property, and then dividing it by the span of the property18. Here, we used Min-Max method for normalization. The formula for Min-Max Normalization is as stated below:

where, \(\:S\) specifies the original value, and \(\:\underset{\_}{\text{S}}\) and \(\:\overline{\text{S}}\), in turn, represent the min and the max amounts of the features. This process yields the normalized value, \(\:{S}_{N}\).

The proposed ZFNet/ ResNeXt/FGLGO model

Following the completion of information pre-processing and the acquisition of spectrograms, the information undergoes the ZFNet/ResNeXt model approach to facilitate data modification, feature extraction, and recognition. This study explores a novel method to extract music genre features. Initially, the ZFNet neural network, which has been pretrained, is customized using music genre datasets19. Subsequently, the residual layers of the adapted ZFNet are regulated by replacing an ResNeXt. Finally, the Fractional-order Grey lag Goose Optimization enhances the extension capabilities of the ResNeXt.

ZFNet

In 2013, Zeiler and Fergus unveiled ZFNet as a new design of a convolutional neural network. The model’s performance and interpretability were enhanced by enhancements made to AlexNet, which served as its foundation. In order to extract finer-grained information from input signals, ZFNet employs smaller strides and filter sizes in its first two convolutional layers. Deconvolution is another tool that ZFNet use to help comprehend the network’s learning process and see the intermediate feature maps.

By using ZFNet on the spectrograms of the audio signals, feature extraction in music genre classification tasks may be accomplished. The amplitude and frequency of the sound as it evolves over time may be seen in a spectrogram. We can get high-level features that encapsulate the characteristics of many musical genres-rhythm, tempo, pitch, and timbre by feeding the spectrograms into ZFNet.

One linear procedure that may be used to create a feature map from an input signal is convolution, which involves applying a filter, also known as a kernel, to the input image. At each place, the filter calculates the dot product between itself and the input patch as it slides across the input picture20. Unless padding or striding is used, the input picture and the output feature map have the same spatial dimensions. The convolution formula is as follows:

The given equation represents the relationship between the input image (\(\:x\)), the filter (\(\:w\)), the bias vector (\(\:b\)), and the output feature map (\(\:y\)). Here, \(\:x\) is a signal of dimensions \(\:H\times\:W\times\:C\), \(\:w\) is a filter of dimensions \(\:F\times\:F\times\:C\times\:K\), \(\:b\) is a bias vector of size \(\:K\), and \(\:y\) is the resulting feature map with dimensions \(\:H\times\:W\times\:K\).

The next layer is deconvolution. Deconvolution refers to the process of undoing the convolution operation, which involves generating an input signal from an output feature map. In deconvolution, the same filter and bias are utilized as in convolution, but the forward and backward passes are swapped. The deconvolution formula is as follows:

where, \(\:x\) describes the input image of size \(\:H\times\:W\times\:C\), \(\:w\) represents the filter of size \(\:F\times\:F\times\:C\times\:K\), \(\:y\) specifies the output feature map of size \(\:H\times\:W\times\:K\), and \(\:b\) signifies the bias vector of size \(\:C\).

Afterward, Rectified Linear Unit has been employed. The Rectified Linear Unit (ReLU) is a type of nonlinear activation function that performs element-wise thresholding on the input, effectively converting any negative values to zero. The mathematical expression for ReLU is as follows:

The size of \(\:x\) and \(\:y\) can be either scalars, vectors, or matrices, but they must be of the same size.

The next layer is Max Pooling. Max Pooling is a technique used for downsampling an input by applying a max filter to non-overlapping regions. This operation facilities in diminishing the input’s spatial dimensions. Within each region, the max filter identifies the maximum value, which is then returned. The formula for performing max pooling is as follows:

Given an input of size \(\:H\times\:W\times\:C\), the output \(\:y\) has a size of \(\:[\frac{H-P}{s}]+1\times\:[\frac{W-P}{s}]+1\times\:C\), where \(\:P\) represents the pooling size and \(\:S\) represents the pooling stride.

A fully connected layer is a linear operation that establishes connections between every input unit and every output unit, resulting in a vector of scores. The equation for a fully connected layer is as follows:

Where, \(\:x\) represents the input vector of size \(\:N\), \(\:W\) specifies the weight matrix of size \(\:N\times\:M\), \(\:b\) describes the bias vector of size \(\:M\), and \(\:y\) is the output vector of size \(\:M\).

Finally, classifiers like linear support vector machines (SVMs) or softmax layers may be trained using these characteristics to forecast which musical genres a piece of music belongs to.

ResNeXt

Residual Neural Network (ResNet) is a deep neural network architecture precisely developed to address the issue of vanishing gradients in convolutional neural networks (CNNs) with many layers. The vanishing gradient problem arises when the gradients become extremely small as they pass through multiple layers, hindering effective learning and optimization. ResNet introduces the concept of residual connections or skip connections, which enable information to bypass specific layers within the network.

By directly utilizing the activations from previous layers, ResNet facilitates the flow of gradients and simplifies the learning of deep representations. The fundamental idea behind ResNet lies in the utilization of residual blocks, containing of multiple convolutional layers tracked with skip connections. These skip connections merge the original input of a block with its output, creating a shortcut path for information flow. ResNet’s hyperparameters are reduced by the homogeneous neural network ResNeXt through the incorporation of ‘cardinality’ into the width and depth of ResNet. The transformation’s size is specified by the cardinality. In ResNeXt model, the block is represented in Fig. (7).

The left component diagram represents a conventional ResNet block, whereas the rightmost diagram represents the ResNeXt block with a cardinality of 32. The transformations are iterated 32 times, and the final result is consolidated.

Two rules describe ResNeXt’s essential architecture. To start, the hyper parameters are shared across blocks that generate same-dimensional spatial maps. When the spatial map is reduced by a factor of 2, the block widths are doubled. One element may be expressed in terms of units for both the input and output vectors.

The last layer, characterized by its arbitrary nature, is represented as the \(\:{\left(v\:-\:1\right)}^{th}\) layer. The weight component propagating input from the \(\:{\left(v-1\right)}^{th}\) layer to the \(\:{v}^{th}\) layer is denoted as \(\:y\left(v\right)\in\:{C}_{r\times\:r}\), whereas the recurrent weight factor of the \(\:{v}_{th}\) layer is signified as \(\:Y\left(v\right)\in\:{C}_{r\times\:V}\:\). The weight factor of each layer is denoted by \(\:C\) in this context. The input vector components can be mathematically formulated as follows:

where, \(\:q\) is the arbitrary unit integer for the \(\:{v}^{th}\) layer and \(\:r\) is an arbitrary unit that the total number of units for the \(\:{u}^{th}\) layer, \(\:q{\prime\:}\) stands for an arbitrary elements unit number in layer \(\:v\), \(\:{w}_{qi}^{\left(v\right)}\) specifies the factor of \(\:{Y}^{\left(v\right)}\), \(\:{\epsilon\:}_{qq}^{{\prime\:}\left(v\right)}\) determines the factor of \(\:{y}^{\left(v\right)}\).

The output vector components of layer \(\:v\) could be achieved in this way:

The factor of \(\:{Y}^{\left(v\right)}\) and \(\:{y}^{\left(v\right)}\) is denoted by the term \(\:{w}_{qi}^{\left(v\right)}\) and \(\:{\epsilon\:}_{qq}^{{\prime\:}\left(v\right)}\). The unit number of the arbitrary element in the layer \(\:y\) is represented by the symbol \(\:q{\prime\:}\). The mathematical representation for the components of the output vector in the vth layer could be expressed as:

Furthermore, the proposed recurring feature set improves the accuracy of sentiment analysis classification. The term’s weight value is transformed into \(\:{w}_{qi}^{\left(v\right)}\) to streamline the classification method, and the term’s unit in the function is denoted as \(\:{U}_{q}^{(v-1,w)}\). The subsequent equation solves the offered deep learning construction’s bias term.

where, \(\:{U}^{\left(v,w\right)}\) represents the system’s output layer.

a proper technique to improve the efficacy of the ResNeXt is to optimally selected its arrangement based on weight factor. In order to streamline the organization procedure, the optimal weight factor is currently being handled as a solution vector. This is determined based on the cost function and can be achieved with minimizing the error function, i.e.,

here, n shows samples’ number, and \(\:{U}_{i}^{\left(v,w\right)}\) and \(\:{E}_{i}^{\left(v,w\right)}\) represent the actual output of network and the estimated output, respectively.

in this study, by employing a metaheuristic technique, the suggested deep learning technology’s weight amounts are tailored to achieve the utmost effectiveness. Consequently, this leads to an improvement in the classification performance. The present study uses a fractional-order variant of the Grey lag Goose Optimizer for achieving this purpose.

The following section determines how we designed the proposed fractional-order variant of the Grey lag Goose Optimizer.

Fractional grey lag Goose optimization

This portion explains the recommended Grey lag Goose Optimization’s mathematical and motivation model. The communal manner in addition to the geese’s energetic action assisted as the source for the stimulus aimed at GGO algorithm.

-

A)

Geese: communal manner.

Faithfulness is one of the most famous features of Geese. They spend their lifetime with their partners and are so caring for their offspring. Frequently, they have a tendency to be near the sick or hurt spouse or chicken. They remain to do so when the winter season is forthcoming. The remaining part of the group migrates for heater climates. Once a spouse of a goose passes away, the goose tends to be lonely, and several geese might select to be alone the rest of their lifespans, declining to remarriage forever.

Geese enjoy to pomposity their feathers whilst foraging for nutrition in the lawn and get-together leaves and pushes to enhance their dwellings.

Annually during the spring season, eggs are hatched. The man geese guard the hidden eggs in their nest whilst the women watch them for 30 days. Several geese have a preference to utilizing the identical nest that eggs are lied over of many years.

-

B)

Geese: Energetic manner.

An assortment of geese family gathers together to make a larger collection identified as a gaggle, here the birds watch out for one another. In this collection, whilst the remain members of this group is busy consuming food, there are naturally 1 or 2 protectors, ‘‘sentries’’, that is watching out for predators. The gaggle members substitute the role of the guard, similar to ship sailors watching out for either pirates or enemies.

Healthy geese have a tendency to care for wounded peers and injured geese would gather together to defend one another from predators and to assistance another in discovering nourishment. Ducks are known as energetic and sociable creatures when going in large clusters are at their most relaxed, and when they are on the water, they refer to as ‘‘paddlings’’. During day, they spend their times on seeking for nourishment at night and in the low deep water or the grassland, and, they paddle and rest next to the additional members. Ganders are able to show the wonderful deeds of aeronautical. They migration yearly in big flocks while they fly 1000 km in just one flying.

Whilst soaring, a flock of geese shape themselves in a formation resembling the letter “V”. Accordingly, the front geese could reduce the effect of air struggling on ones who is at the raer. This gives this chance to the geese to soar around 70% further than as a collection than they are able alone. Once the front geese were tired, they changed to the rear, allowing those at the rear to serve as their source of inspiration for the privileged. Geese take pleasure long recollections serving them to influence their goals. They direct through their yearly immigrations by depending on acquainted breakthroughs. Also, celestial navigation aimed at leadership.

-

C)

Grey lag Goose Optimization (GGO) algorithm.

The current work introduces a suggestion optimization approach called the Grey lag Goose Optimization (GGO) algorithm. The GGO algorithm commences by stochastically producing some persons.

Every member designates a potential proposal that could serve as a candidate solution to the problem. \(\:{Y}_{i}(i\:=\:1,\:2,\dots\:,\:n)\) is defined as the population of GGO that has size \(\:m\) representing a gaggle. \(\:{F}_{n}\:\)is fitness function, that is designated to assess the members in the collection. Having computing the fitness function for every member as agent \(\:{Y}_{i}\:\), \(\:P\) as the finest solution as the leader is designated.

The GGO algorithm’s Dynamic Groups manner splits total members into \(\:{m}_{1}\:\)that is an exploration set and \(\:{m}_{2}\:\)as an exploitation set. The solutions’ number in every set is accomplished energetically

using every iteration along with the finest solution. The exploration group consists of \(\:{m}_{1}\:\) agents, while the exploitation set consists of \(\:{m}_{2}\:\)agents. GGO commences the groups with a 50% allocation for exploration and a 50% allocation for exploitation. Therefore, the agents’ number in \(\:{n}_{1}\:\)is diminished, and the agents’ number within the \(\:{m}_{2}\:\)is enlarged. Though, If the fitness amount of the finest solution remains same for 3 consecutive iterations, the algorithm increases the number of agents in\(\:\:{m}_{1}\:\) to obtain a improved solution and avoid local optima with hopefulness.

-

D)

Exploration operation.

Exploration involves the captivating process of identifying specific areas inside the search region and avoiding the problem of being stuck in a suboptimal solution by actively progressing toward the ideal outcome.

Moving towards the finest solution

with commissioning this method, the geese pioneer would actively seek out novel and suitable places to discover adjacent its existing position. This is proficient by frequently relating the several prospective options to discover the finest one on the basis of fitness.

The GGO algorithm utilizes the subsequent formulations to attain the renewal of the Z and A vectors: \(\:Z\:=\:2a.{r}_{1}\:-a\) and \(\:A=\:2.{r}_{2}\:\)throughout iterations using the \(\:\varvec{a}\) parameter altered linearly from 2 to zero:

\(\:Y(t\:+\:1)\:=\:{Y}^{*}\left(t\right)\:-\:Z.|A.Y(t)-\:Y(t\left)\right|\:(\)19

In which \(\:Y\left(t\right)\) is defined as an agent at an iteration\(\:\:t\). \(\:{Y}^{*}\left(t\right)\:\)signifies the finest solution situation. \(\:Y(t\:+\:1)\) is the agent’s renewal situation. The \(\:{r}_{1}\)and \(\:{r}_{2}\:\)amounts are altering from 0 to 1, stochastically.

The subsequent equation would be utilized on the basis of selecting 3 stochastic search agents that are paddlings, termed \(\:{Y}_{P1}\),\(\:\:{Y}_{P2}\), and,\(\:\:{Y}_{P3}\)to make agents under pressure not to be influenced by 1 leader location to become superior exploration. The location of the existing search agent would be renewed by the next formula for |\(\:Z|\:\ge\:\:1\).

here the amount of \(\:{\:s}_{1}\), \(\:{\:s}_{2}\), and \(\:{\:s}_{3}\:\)are renewing from 0 to 2. The \(\:x\) is lessening exponentially and is computed as in the subsequent formula.

here\(\:\:t\) is iteration number, and the maximum iterations number is demonstrated by \(\:{t}_{max}\).

The 2nd renewing procedure, in which the \(\:a\) and \(\:Z\) vector amounts are reduced, is calculated by next formula for \(\:{\:r}_{3}\) is more and equal to 0.5.

here \(\:c\) has a fixed amount, \(\:f\) is a stochastic amount between − 1 and 1. The \(\:{\:s}_{4}\:\)is renewing from 0 to 2, whilst \(\:{\:r}_{4}\:\)and \(\:{\:r}_{5}\:\)are renewing from 0 to 1.

-

E)

Operation of exploitation.

The exploitation team is tasked with enhancing the existing solutions. The GGO determines the individual with the highest level of physical condition at the end of each cycle and grants them corresponding recognition. The GGO applies 2 diverse strategies, that are described in below, for attaining its exploitation objective. The subsequent formula is utilized to progress in the most optimal manner.

The 3 solutions (sentries), \(\:{Y}_{\text{s}1}\),\(\:\:{Z}_{\text{s}2}\), and, \(\:{Z}_{\text{s}3}\) lead additional members (\(\:{Z}_{\text{N}\text{o}\text{n}\text{s}\text{e}\text{n}\text{t}\text{r}\text{y}}\)) to alter their locations towards the assessed prey’s location. The next formulas indicate the procedure of location renewing.

here \(\:{Z}_{1}\), \(\:{Z}_{2}\:\), \(\:{Z}_{3}\) are computed as\(\:\:Z\:=\:2a.{r}_{1}-\:a\) and \(\:{A}_{1},\:{A}_{2},\:{A}_{3}\:\)are computed as\(\:\:A=\:2{r}_{2}\). The renewal locations for the members, \(\:Y\left(t+1\right),\:\)could be stated as an average of the 3 solutions \(\:{Y}_{1}\), \(\:{Y}_{2}\), and \(\:{Y}_{3}\)

as follows

-

F)

Searching the part round the finest solution.

The greatest gifted selection is placed near to the finest answer (leader) whilst in the air. These stimuli some members to discover for improvements by considering areas near to the perfect answer, called \(\:{Y}_{\text{F}1}\). The GGO accomplishes the above-mentioned procedure with the next formula.

-

G)

Determination of the optimal solution.

The exploration set’s alteration method and scanning individuals are utilized that based on them the GGO suggests extraordinary exploration abilities. The GGO could delay meeting because of its robust exploring abilities. It will be commenced with gaining the GGO with information, counting with population size, alteration ratio, and iterations.

The members are then divided into 2 sets with GGO: individual who accomplish investigative effort and individual who accomplish exploitative work. The GGO process animatedly adjusts every set’s size through the iterative procedure of determining the finest response. Every set applies 2 methods to organize its effort. Then, the GGO stochastically reorganizes the response among iterations. In 1 iteration, a solution constituent from the exploration set can journey to the exploitation set in the subsequent. The GGO’s selective approach assure that the leader is saved in location through the process.

The GGO algorithm’s stages, are employed aimed at renewing locations of the exploration set (\(\:{m}_{1}\)) and the exploitation set (\(\:{m}_{2}\)). \(\:{r}_{1}\) is renewed throughout iterations as \(\:{r}_{1}=c\left(1-(t/{t}_{max}\right)\), here \(\:t\) is existing iteration, \(\:c\) has a fixed amount, and \(\:{t}_{max}\:\)is the iterations’ number. In the final part of every iteration, GGO renews the agents inside the search area, and stochastically adjusts the agents’ trajectory to swap their characteristics between the exploration and exploitation sets. During the last stage, GGO implements the optimal solution.

Fractional grey lag Goose optimization (FGLGO)

The utilization of fractional order can also serve as a means to alter the metaheuristics, which are optimization algorithms that employ a random-search strategy in order to discover the most optimal solution for a given problem. One rationale behind employing fractional order to modify the metaheuristics is to enhance their overall performance and robustness21. By incorporating fractional order, the metaheuristics gain increased flexibility and diversity within their search process, enabling them to explore various regions of the solution space with varying scales and resolutions. Additionally, fractional order can aid the metaheuristics in evading local optima and preventing premature convergence, as it introduces a greater degree of randomness and chaos into their dynamics.

A brief introduction to fractional calculus (FC) and its uses in fractional methods is given in this work. A powerful method for meta-heuristic algorithm speed improvement has recently been suggested: fractional-order calculus (FC). When it comes to studying process, memory, and genetic traits, the FC method suggests a methodical and successful approach. Because it takes memory into account while renewing solutions, the fitness criterion (FC) is a useful tool for making meta-heuristic algorithms work better. One of the most popular fractional calculus (FC) models is the Grunwald-Letnikov (GL) model. Here is one way to explain the mechanism:

where,

The computation of the GL fractional derivative of order σ makes use of the gamma function, which is represented as \(\:\varGamma\:\left(t\right)\). This derivative, which is calculated using the subsequent formula, is represented as \(\:{D}^{\alpha\:}\left(Y\left(t\right)\right)\).

The memory window length is represented by \(\:N\). \(\:T\) controls the sampling time, while \(\:\alpha\:\) represents the derivative order operator. The above equation may be rewritten using the following equation if we assume that \(\:\alpha\:\) is equal to 1:

where, \(\:{S}^{1}\left[Y\left(t\right)\right]\) specifies the variance measure observed by two stated actions.

For enhancing the algorithm’s behaviour, this research uses FC memory in the following ways:

The common equation is derived through the following formulation.

It is possible to reorganize the algorithm’s equation in accordance with the given equation:

The algorithm’s condition may be recalculated using the subsequent formula, which is based on the previous equation and uses \(\:m\) as 4, denoting the first four terms of memory data:

-

H)

Algorithm validation.

To prove its dependability, the suggested modified FGLGO algorithm needed to be objectively validated. The first 23 traditional benchmark functions that were solved using this method were those that have been around for a long time. The results of EEFO were compared to those of five more sophisticated algorithms: Golden sine algorithm (GSA)22, Gaining-Sharing Knowledge-based algorithm (GSK)23, Atom Search Optimization (ASO)24, Equilibrium Optimizer (EO)25, and Dwarf Mongoose Optimization Algorithm (DMOA)26. In Table 2, we can see how the parameter amounts of the competing algorithms are compared.

The research was carried out by using fixed-dimensional functions from F14-F23, multimodal functions from F8-F13, and unimodal functions from F1-F7. A dimension of thirty was associated with each function. The major objective of the study was to determine the minimum feasible value for each of the twenty-three functions stated earlier. An algorithm would be considered very efficient if it could minimize its output value.

A trustworthy study was conducted by examining the optimization solutions of the algorithms in the solution with the help of the mean value and standard deviation (StD). Testing every algorithm under the same circumstances, including a set population size and an upper limit on the number of iterations, allowed for a strong and fair comparison. The maximum number of iterations and nodes in this example’s population is 200. We can ensure more precise and reliable outcomes if we repeat this technique 30 times. Table 3 shows how the FGLGO algorithm fares in comparison to the other methods.

Instead of employing the proposed modified FGLGO algorithm, mean value and standard deviation (StD) are used to measure the performance of competing algorithms. The standard deviation shows the dispersion or variety of results, whereas the mean shows the average performance of each method throughout many runs. After looking over the data, a few things stand out.

First, when compared to other methods, FGLGO always produces better mean values for fixed-dimensional functions (F14–F23). In comparison to GSA, GSK, ASO, EO, and DMOA, it finds the minimal possible value for these benchmark functions more effectively, since it obtains lower output values. Second, when it comes to multimodal functions (F8-F13), FGLGO shows that it can hold its own against other algorithms. Based on the standard deviation figures, FGLGO shows very steady and consistent results, albeit it may not always obtain the lowest mean value.

In a similar vein, FGLGO outperforms competing algorithms for unimodal functions (F1-F7) by producing mean values that are on par with them. Furthermore, the standard deviation data suggest that FGLGO maintains a constant performance level throughout all runs.

FGLGO provides a completely new method to optimize ResNeXt model parameters from fractional aspect which is better than rest of the optimization methods like stochastic gradient descent (SGD) or genetic algorithms; some of the examples in the literature used standard type of optimization methods while here we define the new optimization technique. FGLGO focuses more on the concept of memory and hereditary of fractional calculus to update its search mechanism dynamically, thus avoiding possible local optima problems associated with SGD.

Its fractional-order update incorporates past states and gradients, allowing the algorithm to escape local optima and end up in more resistant solutions. Moreover, the dynamic grouping strategy adopted by FGLGO, which splits the population into an exploration set and an exploitation set, prevents the population from falling into a local optimum from the search process, thus improving its efficiency. However, the algorithm has its drawbacks; the fractional-order calculations upon which it relies can introduce computation overhead, causing it to be slower than some more basic techniques (e.g., SGD) for large-scale problems.

Additionally, the hyperparameters like fractional order and memory window length are critical for the performance of the model as require fine tuning. Given these challenges, FGLGO’s ability to improve the performance of deep learning models, such as music genre classification, demonstrates its potential as a powerful optimization tool for tasks that have a high demand for accuracy and robustness. By discussing both its benefits and shortcomings, one can better understand when and in what circumstances it can be applied and where it fails and needs to evolve.

Experimental analysis

The current study utilized a Hybrid ZFNet/ResNeXt/FGLGO approach to extract features and identify sound spectrums. The proposed model was enhanced using a Fractional variant of the grey lag goose optimizer. The Hybrid ZFNet/ResNeXt/FGLGO successfully extracted features such as Spectral crest, spectral entropy, spectral flux, pitch, and harmonic ratio based on ZFNet. These features were then identified using ResNeXt as a classifier to determine the likelihood of different genres.

To evaluate the model’s performance, it was applied to two widely used datasets, namely ISMIR2004 and extended Ballroom, and a comparative analysis was conducted with some state-of-the-art methods. The model precision assessment has been performed by evaluating the results, which entail classifying the music into their corresponding genres. The precision assessment of the Hybrid ZFNet/ResNeXt/FGLGO model utilizing the extended Ballroom dataset is displayed in Table 4.

The model unveiled impressive accuracy rates across various genres, with the lowest rate being 0.885 for Samba. This signifies that the model effectively extracted features like spectral crest, spectral entropy, spectral flux, pitch, and harmonic ratio using ZFNet and utilized ResNeXt to classify them, enabling the distinction between different musical genres. To evaluate the model’s precision, the extended Ballroom dataset, widely recognized for genre classification tasks, was employed.

The high accuracy rates achieved by the proposed model demonstrate its proficiency in real-world datasets. When compared to up-to-dated methods, the Hybrid ZFNet/ResNeXt/FGLGO approach surpassed many, highlighting its superiority in genre classification tasks. Notably, the precision assessment results consistently yielded accuracy rates above 0.9, indicating the proposed model’s ability to classify genres with a high level of precision27. This precision could prove valuable for applications such as music recommendation systems or music streaming platforms.

Similarly, the accuracy of the Hybrid ZFNet/ResNeXt/FGLGO model was evaluated using the ISMIR2004 dataset, as presented in Table 5.

The accuracy rate for classical music in the dataset was 0.947, which is the highest among all genres. This implies that the proposed model effectively extracted features like spectral crest, spectral entropy, spectral flux, pitch, and harmonic ratio using ZFNet and classified them using ResNeXt to differentiate between classical music genres. Furthermore, the model achieved high accuracy rates for other genres, most of which were above 0.9.

This indicates that the offered model has a precise classification capability for various music genres, making it promising for applications like music recommendation systems or music streaming platforms. It is important to note that the accuracy rates achieved by the proposed model are comparable to or even better than those attained by up-to-date methods for music genre arrangement28. This showcases the effectiveness of the Hybrid ZFNet/ResNeXt/FGLGO model in accurately classifying music into different genres.

In order to further elucidate the effectiveness of the Hybrid ZFNet/ResNeXt/FGLGO model, its behaviour in terms of Accuracy, Precision, and Recall was compared to five cutting-edge models, namely Convolutional Neural Network (CNN)10, Parallel Recurrent Convolutional Neural Network (PRCNN)7, Bidirectional Long Short-Term Memory (BiLSTM)8, and Bidirectional Recurrent Neural Network (BiRNN)9. A comparative analysis of the Hybrid ZFNet/ResNeXt/FGLGO model and the aforementioned State-of-the-Art models, which have been thoroughly examined, is presented in Table 6.

The findings designate that the offered model surpasses all additional approaches in terms of performance. It achieves an accuracy of 0.918, precision of 0.954, and recall of 0.896 in the extended ballroom dataset. In the ISMIR2004 dataset, the proposed model attains an accuracy of 0.927, precision of 0.957, and recall of 0.967. On the other hand, the CNN model exhibits a lower accuracy of 0.836 compared to all other evaluated methods.

The PRCNN model, although better than the CNN model, still falls short of the other methods with an accuracy of 0.874. The BiLSTM model outperforms both the CNN and PRCNN models with an accuracy of 0.907, but it is still inferior to the proposed model. Lastly, the BiRNN model demonstrates a lower accuracy of 0.863 when compared to all other evaluated methods.

Discussions

This study holds significant implications for future work as well as MIR applications. This research covers the way for developing MIR technologies in various directions as it demonstrated how the hybrid ZFNet/ResNeXt/FGLGO model achieves better values in accuracy, precision, and recall in music genre classification. The promising results of introducing ZFNet for feature extraction and ResNeXt for classification could also lead to future research into hybrid deep learning architectures for other MIR tasks, including mood detection, artist identification or music recommendation systems.

This paper demonstrates the efficacy of the implemented FGLGO approach in optimizing a CNN-based model, validating the strengths of metaheuristic functions with respect to parameter optimization for deep learning structures and inspiring further studies on potential areas to apply similar techniques in improving parameters used in modeling deep learning structures. Moreover, the design of the carefully curated set of audio features (spec. crest, entropy, and flux) also highlights the significance of feature engineering in MIR, encouraging the exploration of new or complementary features that account for cultural, rhythmic, or timbral details.

This model can greatly enhance music recommender systems, as it allows platforms to provide more precise and customized recommendations according to the musical genres. While one potential application is to create precise genre-based web organization tools for music education, it can also help musicologists study evolving genres and cultural effects on genre. Another strength of the model is that it can generalize well across various domains. In the larger context, these developments do not only uplift the current benchmark in MIR, but spark new directions for creativity in academics and real-world scenarios.

Conclusions

There are different genres of music in the world, from classical music to pop, jazz, rock and national music of each country. Music genres may be delineated by many attributes including rhythm, melody, instrumentation, cultural setting, historical era, and listener interpretation. The proliferation and development of music throughout history have given rise to a multitude of genres and sub-genres, making it difficult to build a comprehensive and universally applicable categorization system. This research introduced a new methodology aimed at music genre arrangement by integrating deep learning methods with a metaheuristic algorithm. The methodology used in the present study involves using a pre-trained ZFNet to extract sophisticated characteristics from the audio signals, and then employing a ResNeXt model to carry out the classification task. In addition, a fractional-order-based version of the FGLGO algorithm was included to optimize the parameters of ResNeXt and improve the overall performance. The evaluations were conducted on two well recognized benchmark datasets, ISMIR2004 and extended Ballroom, and conducted a comparative analysis against many cutting-edge models, like CNN, PRCNN, BiLSTM, and BiRNN. The experimental findings indicated that the proposed model attains superior accuracy and efficiency in music genre recognition, surpassing the performance of current models. The versatility of the model allows it to be used in a range of applications that need the identification of music genres, including music recommendation, music analysis, and music retrieval. The future plan can be include expanding the proposed model’s capabilities to encompass a wider range of music genres and more intricate audio signals. Additionally, other deep learning architectures and metaheuristic algorithms may be investigated to enhance the efficiency of the suggested model. Finally, discussing potential enhancements to the model and exploring other datasets can be beneficial to provide a more extended system for this purpose.

Data availability

All data generated or analysed during this study are included in this published article.

References

Ndou, N., Ajoodha, R. & Jadhav, A. Music genre classification: A review of deep-learning and traditional machine-learning approaches, in IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), 2021, pp. 1–6: IEEE. (2021).

Farajzadeh, N., Sadeghzadeh, N. & Hashemzadeh, M. PMG-Net: Persian music genre classification using deep neural networks. Entertainment Comput. 44, 100518 (2023).

Mikhaylova, A. A. & Mikhaylov, A. S. Re-distribution of knowledge for innovation around Russia. Int. J. Technological Learn. Innov. Dev. 8 (1), 37–56 (2016).

Jaishankar, B., Anitha, R., Shadrach, F. D., Sivarathinabala, M. & Balamurugan, V. Music genre classification using African Buffalo optimization. Comput. Syst. Sci. Eng., 44, 2, (2023).

Daneshfar, F. & Kabudian, S. J. Speech Emotion Recognition Using Multi-Layer Sparse Auto-Encoder Extreme Learning Machine and Spectral/Spectro-Temporal Features with New Weighting Method for Data Imbalance, in 11th International Conference on Computer Engineering and Knowledge (ICCKE), 2021, pp. 419–423: IEEE. (2021).

Li, Y., Zhang, Z., Ding, H. & Chang, L. Music genre classification based on fusing audio and lyric information. Multimedia Tools Appl. 82 (13), 20157–20176 (2023).

Yang, R., Feng, L., Wang, H., Yao, J. & Luo, S. Parallel recurrent convolutional neural networks-based music genre classification method for mobile devices. IEEE Access. 8, 19629–19637 (2020).

Prabhakar, S. K. & Lee, S. W. Holistic approaches to music genre classification using efficient transfer and deep learning techniques. Expert Syst. Appl. 211, 118636 (2023).

Yu, Y. et al. Deep attention based music genre classification, Neurocomputing, vol. 372, pp. 84–91, (2020).

Liu, C., Feng, L., Liu, G., Wang, H. & Liu, S. Bottom-up broadcast neural network for music genre classification. Multimedia Tools Appl. 80, 7313–7331 (2021).

Cheng, Y. H., Chang, P. C., Nguyen, D. M. & Kuo, C. N. Automatic Music Genre Classif. Based CRNN Eng. Lett., 29, 1, (2020).

Cano, P. et al. ISMIR 2004 audio description contest. Music Technol. Group. Universitat Pompeu Fabra Tech. Rep., (2006).

Marchand, U. & Peeters, G. (2016). The extended ballroom dataset,.

Mikhaylova, A. A., Mikhaylov, A. S. & Hvaley, D. V. Receptiveness to innovation during the COVID-19 pandemic: asymmetries in the adoption of digital routines. Reg. Stud. Reg. Sci. 8 (1), 311–327 (2021).

Zehao, W. et al. Optimal economic model of a combined renewable energy system utilizing modified. Sustain. Energy Technol. Assess. 74, 104186 (2025).

Shayan, M. E. et al. An innovative two-stage machine learning-based adaptive robust unit commitment strategy for addressing uncertainty in renewable energy systems. Int. J. Electr. Power Energy Syst. 160, 110087 (2024).

Mir, M., Shafieezadeh, M., Heidari, M. A. & Ghadimi, N. Application of hybrid forecast engine based intelligent algorithm and feature selection for wind signal prediction. Evol. Syst. 11 (4), 559–573 (2020).

Yuan, Z., Wang, W., Wang, H. & Ghadimi, N. Probabilistic decomposition-based security constrained transmission expansion planning incorporating distributed series reactor. IET Generation Transmission Distribution. 14 (17), 3478–3487 (2020).

Duan, F., Song, F., Chen, S., Khayatnezhad, M. & Ghadimi, N. Model parameters identification of the PEMFCs using an improved design of crow search algorithm. Int. J. Hydrog. Energy. 47 (79), 33839–33849 (2022).

Ghiasi, M. et al. Enhancing power grid stability: design and integration of a fast bus tripping system in protection relays. IEEE Trans. Consum. Electron. (2024).

Li, S. et al. Evaluating the efficiency of CCHP systems in Xinjiang Uygur autonomous region: an optimal strategy based on improved mother optimization algorithm. Case Stud. Therm. Eng. 54, 104005 (2024).

Tanyildizi, E. & Demir, G. Golden sine algorithm: A novel Math-Inspired algorithm. Adv. Electr. Comput. Eng., 17, 2, (2017).

Mohamed, A. W., Hadi, A. A. & Mohamed, A. K. Gaining-sharing knowledge based algorithm for solving optimization problems: a novel nature-inspired algorithm. Int. J. Mach. Learn. Cybernet. 11 (7), 1501–1529 (2020).

Zhao, W., Wang, L. & Zhang, Z. Atom search optimization and its application to solve a hydrogeologic parameter Estimation problem. Knowl. Based Syst. 163, 283–304 (2019).

Faramarzi, A., Heidarinejad, M., Stephens, B. & Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl. Based Syst. 191, 105190 (2020).

Agushaka, J. O., Ezugwu, A. E. & Abualigah, L. Dwarf mongoose optimization algorithm. Comput. Methods Appl. Mech. Eng. 391, 114570 (2022).

Xu, H. & Razmjooy, N. Self-adaptive Henry gas solubility optimizer for identification of solid oxide fuel cell. Evol. Syst. pp. 1–19, (2023).

Sun, J., Wang, L. & Razmjooy, N. Anterior cruciate ligament tear detection based on deep belief networks and improved honey Badger algorithm. Biomed. Signal Process. Control. 84, 105019 (2023).

Author information

Authors and Affiliations

Contributions

yuanyetian wrote the main manuscript text and prepared figures. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tian, Y. Deep neural networks and fractional grey lag Goose optimization for music genre identification. Sci Rep 15, 6702 (2025). https://doi.org/10.1038/s41598-025-91203-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-91203-9