Abstract

This paper presents a deep learning framework for predicting and classifying surface roughness in milled parts. The acoustic emission (AE) signals captured during milling experiments were converted into 2D images using four signal-encoding techniques: Segmented Stacked Permuted Channels (SSPC), Segmented Sampled Stacked Channels (SSSC), Segmented Sampled Stacked Channels with linear downsampling (SSSC*), and Recurrence Plots (RP). These images were fed into deep neural networks, namely VGG16, ResNet18, ShuffleNet, and CNN-LSTM, to predict the category of surface roughness values. The average surface roughness (Ra) was used as the main roughness attribute. Among the encoding techniques, SSPC achieved the highest accuracy, above 98% across most models, owing to minimal preprocessing of the signals. ShuffleNet demonstrated a strong combination of accuracy (96–98%) and low computational cost. The robustness of the networks was evaluated by introducing Gaussian noise at two levels. SSPC and SSSC were the most noise-resistant approaches, maintaining testing accuracy above 90% at high noise. Augmenting the acoustic data with machining parameters (cutting speed, depth of cut, feed rate, tool type) as additional inputs improved the model’s accuracy and convergence rate, especially for noisy data. Finally, ShuffleNet was identified as an optimal architecture for real-time monitoring due to its accuracy, noise resilience, and low computational cost. In summary, this study demonstrates the capability of deep convolutional networks combined with innovative signal encoding techniques to accurately predict surface roughness values and categories under various cutting conditions. Based on process signatures, the framework provides a data-driven approach to monitoring and optimizing machining processes in real time.

Introduction

Machining, recognized as an essential and complex manufacturing method, is vital in major production sectors. The complexity of machining arises from the significant influence of numerous input variables on the outcome, which challenges the creation of relationships and models between input and output. The appropriate selection of input variables is a critical but complicated step to achieve the desired products, as the process is very complex, and there is no suitable formula for the relationship between input and output. Above all, the correct selection of input variables, including cutting parameters and machine tool characteristics, is crucial as they strongly influence machining efficiency, part quality, and production time. Therefore, predicting the output of the machining process is one of the critical phases for control and planning1.

Two primary groups of approaches were commonly employed for forecasting machining results: model-based and data-driven approaches. Model-based methods are based on establishing relationships between variables and outputs, which also follow physical and mechanical laws between input and output in the machining process. This requires a thorough understanding of the physical model. However, the challenges arise from the high sensitivity of the machining process results, its complexity, the numerous influencing variables, and the uncertainties caused by various factors, making model-based approaches complicated2. Data-driven models utilize process-related data, but collecting such data can be costly and time-consuming. Certain methods utilize historical and real-time sensor data for decision-making but still require significant data2,3. The development of concepts related to Industry 4.0 and advances in sensor technology have led to the emergence of industrial big data suitable for use in data-driven methods4,5. The application of artificial intelligence tools, such as machine learning and deep learning, which constitute integral components of smart manufacturing, also makes it possible to use and analyze this big data to achieve the desired result6.

Surface roughness is an important quality indicator for machined surfaces and is closely related to the accuracy of fit, wear resistance, fatigue strength, contact stiffness, vibration, and noise of a mechanical part7. In addition, it directly affects the mechanical properties of the workpiece, such as fatigue, surface friction, and fracture resistance, and thus significantly influences a product’s service life and reliability8. The main categories of machining parameters that impact surface roughness are cutting characteristics, dynamic properties, workpiece properties, machine tool properties, and tool properties7. Therefore, the precise measurement of this surface quality criterion is both crucial and challenging. Traditionally, contact-based stylus instruments, which must touch the surface of the workpiece, have been used because of their high reliability. However, this method has limitations, such as the difficult access to components and parts in industrial environments8. To take a measurement, the machine tool must stop, the part must be removed from the cutting zone, and the surface roughness must be measured offline. This is time-consuming and impractical in many cases. Therefore, alternative real-time monitoring methods are needed.

In recent years, there has been an increasing demand for data-driven methods to predict surface roughness values in machined parts. Surface roughness can be estimated using both model-based and data-driven methods. In the model-based approach, the surface roughness value is obtained by building a statistical regression or classification model through response surface analysis. In the data-driven approach, the two most influential factors for performance are the selection of an appropriate prediction model and the choice of extracted features used as inputs7.

Review of related works

Many machine learning methods can predict and estimate surface roughness values, such as support vector machine (SVM)9,10,11, random forest (RF)12, Gaussian process regression (GPR)12, ensemble learning13, artificial neural network (ANN)14,15,16, least squares SVM (LS-SVM)17. In their study, Abu-Mahfouz et al.9 showed that vibration signals and SVM can accurately predict surface roughness in end milling processes. They used statistical parameters, Fast Fourier Transforms (FFT), and wavelet packets to analyze the vibration signals. They introduced a Support Vector Machine (SVM) classifier to make predictions about surface roughness.

Yeganefar et al.10 conducted a study on predicting and optimizing surface roughness and cutting forces in aluminum slot milling. The analysis involves using regression analysis, neural networks, and support vector machines. The study investigates the effect of cutting parameters such as cutting speed, depth of cut, feed per tooth, and tool type on the results. Considering the significant effect of heat on the machined surface and the use of cutting fluid, Dubey et al.11 have considered the parameter of cutting fluid concentration with different particle sizes along with cutting speed, cutting depth, and feeding rate as inputs. They used linear regression (LR), random forest (RF), and support vector machine (SVM) methods to predict surface roughness values. The results show that the random forest method performs better than the other two models. Wang et al.17 utilized a least squares support vector machine (LS-SVM) model to predict surface roughness in slow tool servo (STS) turning of lenses. The input parameters were tool nose radius, feed rate, depth of cut, C-axis speed, and discretization angle. This demonstrated that the LS-SVM approach can effectively predict surface roughness in STS machining based on key cutting parameters. The study by Khorasani and Yazdi15 developed a dynamic simulation system using an artificial neural network to predict surface roughness in milling. Inputs included cutting parameters, material, coolant, vibrations, and noise.

The system achieved 99.8% and 99.7% accuracy on testing and recall data sets, outperforming other published models. Gupta et al.16 used support vector regression (SVR) and artificial neural networks (ANN) to predict surface roughness in turning. Inputs were turning parameters such as speed and feed rate. Integrating the models with genetic algorithms enabled the optimization of the parameters to minimize surface roughness. Motta et al.12 developed machine learning models using Random Forest (RF) and Gaussian Process Regression (GPR) to predict surface roughness during cylinder turning. Inputs were cutting forces, temperature, vibration data, and cutting parameters. Zhou et al.13 used a genetic algorithm (GA) to optimize the hyperparameters of an ensemble gradient boosting model for surface roughness prediction and optimization in machining applications. Kant and Sangwan14 utilized ANN and GA to predict and optimize surface roughness.

ANN models demonstrate high predictive accuracy under conditions similar to regression and fuzzy logic models. A literature review in machine learning highlights the limitation of shallow learning methods, particularly their low processing power when dealing with raw data (unstructured or unformatted). This limitation adversely affects their generalization capabilities18. The review also emphasizes that shallow learning methods necessitate manual feature extraction, a process highly dependent on prior knowledge of the system, leading to the challenge of low generalization19. Moreover, as datasets grow and exhibit complex nonlinear relationships, manual feature extraction becomes computationally expensive and time-consuming. Consequently, researchers have turned their attention to exploring deep and complex structures to address these challenges and enhance the capabilities of machine learning methods.

Deep learning has recently gained attention due to its ability to overcome feature extraction challenges, its feature generalization properties, and its capacity to process large industrial datasets20. The focus is on network structures with multiple layers of nonlinear processing units. Notable networks in this area include Convolutional Neural Networks (CNNs)7,21,22 and Recurrent Neural Networks (RNNs)23. CNNs are suitable for tasks with grid-like data, such as images, and exploit hierarchical feature detection. RNNs process sequential data effectively and are suitable for natural language processing (NLP) and time series analysis tasks. Huang and Lee21 utilized a 1D convolutional neural network model that fuses vibration and sound sensor data to predict surface roughness. The inputs were features extracted from X and Y vibration data and sound data collected during machining experiments.

The model performed well, achieving an RMSE of 0.0368 μm for surface roughness on the test data. Lin et al.7 utilized deep learning models such as 1D-CNN, FFT-DNN, and FFT-LSTM to predict surface roughness during milling using vibration signal data. The input features used in these models were extracted from the vibration signals using FFT and 1D-CNN. Grzenda and Bustillo24 proposed a semi-supervised learning method that uses unlabeled vibration data from new tool setups to improve the accuracy of surface roughness prediction in milling. The approach blends labeled and handpicked unlabeled vibration data to train kNN and random forest models. The experiments showed statistically significant accuracy improvements, especially in discretized roughness prediction. Pan et al.22 utilized a 1D convolutional neural network model that classifies vibration signals into discrete surface roughness values. The inputs for this model were vibration signals collected during the machining tests. The results showed that this model achieved over 10% higher prediction accuracy than direct regression of surface roughness values. Gue et al.23 likewise used a 1D convolutional neural network model to classify vibration signals, collected during machining tests, into discrete surface roughness values. Their results showed that the classification-based prediction approach achieved 10% higher accuracy than directly regressing surface roughness values. Anagün et al.25 utilized a deep learning technique that incorporated ensemble models and convolutional neural networks (CNNs) to classify the surface roughness of EDM (Electric Discharge Machining) surfaces. The input images were captured at magnifications of 50X, 100X, and 200X and featured surfaces with varying roughness levels. Using an ensemble approach, the team achieved an accuracy of 99.42% in classifying roughness at 50X.

The use of deep learning technology in predicting machining processes has great potential for improvement. It helps to understand the relationship between process and features, leading to higher prediction accuracy. With deep learning, large amounts of industrial data can be modeled without manual extraction of features, resulting in a more straightforward evaluation of modeling accuracy. Complex models can be created by applying deep learning, and by incorporating more data, a significant impact on the output of the process can be achieved. This approach makes it possible to close the gap between the relationship between process parameters and process output. Applying this method can improve the quality control of machined parts and process optimization.

In this paper, a deep learning-based framework was implemented that utilizes the environmental AE signal for surface roughness value prediction and classification. The primary goal is to develop a highly accurate prediction model for evaluating the surface roughness of machined parts. In this context, two-dimensional images were extracted from time series signals using various methods and used as input to deep networks, including ResNet18, VGG16, ShuffleNet, and CNN-LSTM. Incorporating process parameters such as cutting speed, cutting depth, feed rate, and tool type into the network increased accuracy under different conditions. This approach enabled offline prediction and classification of surface roughness values and facilitated real-time predictions. In addition, a deep learning-based relationship model was created between the machined parts’ AE signal and surface roughness. Continuous real-time monitoring of the AE signals could thus make the prediction of the surface and edge quality of machined parts more practical. This is considered the main originality of the research work. No similar work was found in the literature in the same context.

This paper develops a novel method with low computational complexity for signal processing and image generation. The proposed approach achieves approximately 96–98% accuracy in predicting surface roughness classes while maintaining system simplicity and utilizing lightweight neural networks. Additionally, the method demonstrates strong resilience to noisy signals, with accuracy declining by only 5–8% under high noise conditions. This is a reasonable result, given the noise levels in the input. It is also shown that incorporating additional parameters as inputs to the network can further improve its accuracy and classification capability.

Materials and methods

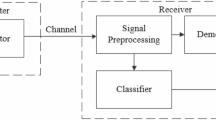

The process of estimating and categorizing surface roughness values is illustrated in Fig. 1 and involves multiple steps. First, the data captured by the sensors and the process parameters are fed into the network. Various techniques are employed to convert the time series into two-dimensional images, and the accuracy of each technique is evaluated using four deep networks. The robustness of the networks is tested by introducing varying degrees of noise to the primary signals in different states. Lastly, the accuracy and efficiency of the networks are evaluated with the process parameters added as additional inputs. Each step is explained in detail below.

Experimental procedure

The effects of cutting speed (Vc), depth of cut (ap), feed per tooth (fz), and tool on average surface roughness (Ra) during slot milling of AA 2024-T351, AA 6061-T6, and AA 7075-T6 were examined using a multi-level full factorial experimental design comprising 54 trials and two replications. The averages of the recorded values were used for further analysis. Table 1 lists the experimental factors along with their respective values. End milling tools with three teeth (Z = 3), a tool diameter (D) of 19.05 mm, and a helix angle of 30° were utilized, along with several carbide inserts with varying nose radii and coatings (Table 1). The coatings were chosen according to industrial recommendations by the tool manufacturer.

Slot milling tests were performed on a three-axis CNC machine tool (HURON-K2 × 10, power 50 kW, speed 28,000 rpm, torque 50 Nm). The first AE sensor was located 0.2 m away from the cutting zone. The data acquisition was conducted using these AE sensors with a sampling frequency (fs) of 100 kHz. Rigorous controls were implemented during the initial tests to ensure stability in the cutting process and monitor the machine and tool vibrations and dynamic behavior. Employing rigid tools and a fixed workpiece fixture revealed a slight deviation in the positions of the cutting tool and the workpiece. After each cutting test, a new insert was introduced to counter potential variations in AE signals arising from tool wear. Furthermore, an AE sensor was strategically located 2 m from the chip formation area to assess background noise. The substantial signal-to-noise ratio (SNR) observed between the first and second sensors affirms the limited impact of background noise on the accuracy of the signal recorded by the first sensor, positioned near the chip-forming region. Detailed specifications of the workpiece and a summary of the response value utilized are outlined in Tables 2 and 3, respectively.

After the cutting operations, the most widely used roughness parameter, Ra, was measured using a Mitutoyo SJ 400 surface profilometer. In this article, the surface roughness values are divided into five categories according to Table 4. The study utilized an AE data collection system, illustrated in Fig. 2a, which includes two TEDS AE microphones. As noted earlier, the first microphone was placed near the machining area (Fig. 2b), while the second was situated two meters from the chip formation region (Fig. 2c). Both microphones are integrated with a data preprocessing unit, as shown in Fig. 3. The second sensor is specifically used to monitor background noise. The arrangement of the workpiece during the machining tests is depicted in Fig. 2d.

Methodological procedure

Modeling approach

In various applications, transforming time series signals into two-dimensional images proves beneficial for training convolutional networks. This restructuring of time series data enables the effective learning of temporal dependencies26. The choice of method for converting time signals into two-dimensional images can affect the network’s performance. Image processing techniques applied during data preprocessing are another way to enhance network efficiency. The four signal-to-image conversion methods used in this work are explained in the following sections.

Segmented stacked permuted channels (SSPC)

This method of encoding AE signals combines the data from channel 1, A(t), and channel 2, B(t), into a unified vector representation.

This combined vector, denoted V(t), undergoes a transformation step and is converted into a two-dimensional matrix M of size T × F. The resulting image therefore differs from the conventional representation, in which temporal changes run along the horizontal axis.

Here, T is the temporal dimension and F is the reshaped feature dimension, that is, the number of segments created during the stacking process. This transformation aims to restructure the time-series data into an image format, revealing hidden patterns and correlations that CNNs can efficiently detect.

T represents time, marking the duration of each time segment in the sliding window approach. A larger value of T captures more of the signal’s overall changes over time, offering a broader perspective of its dynamics. In contrast, a smaller T focuses on shorter time intervals, providing a finer resolution for detecting quick changes.

Meanwhile, F refers to the feature axis, which divides the signal into bins based on its spatial or frequency components. This division helps to highlight specific patterns or structures that may appear across different segments of the signal. Transforming the signal into a 2D image format allows convolutional neural networks (CNNs) to effectively capture and analyze these intricate time and frequency-based features, enhancing their ability to identify complex relationships in the data.

The matrix \(\mathbf{M}\) is first normalized to a 0–255 scale to ensure consistent scaling across all segments, removing variations in amplitude caused by factors like environmental noise or signal acquisition differences. This step makes the data more suitable for image-based analysis, especially for convolutional neural networks (CNNs), which work best with inputs in this range. Normalization also allows the data to be easily visualized as grayscale images, where pixel intensities directly reflect the signal’s normalized values. After normalization, the 2D Fast Fourier Transform (FFT) is applied, converting the spatial representation of the signal into the frequency domain to reveal periodic patterns and frequency characteristics. A logarithmic transformation is then applied to the FFT magnitude to enhance this representation further. This compresses the dynamic range, amplifying subtle frequency details that dominant components might otherwise overshadow. Together, these steps (normalization, FFT, and logarithmic scaling) ensure that the AE signal’s temporal and frequency features are preserved and enhanced, allowing the CNN to uncover complex patterns and relationships within the data. This approach provides a distinctive perspective on the AE signal that departs from traditional time-centered representations and potentially reveals latent patterns within the merged signal channels. A few images created using this method are illustrated in Fig. 4.
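The SSPC steps described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `sspc_image` is hypothetical, the two channels are assumed to be joined end to end (the text does not specify the exact stacking or permutation order), and the 64 × 64 shape is assumed from the image size reported later in the paper (two windows of 2048 samples give 4096 = 64 × 64 values).

```python
import numpy as np

def sspc_image(a, b, size=64):
    """Hypothetical SSPC-style encoding of one two-channel AE window."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # Assumption: the unified vector V is channel A followed by channel B.
    v = np.concatenate([a, b])
    if v.size < size * size:
        raise ValueError("not enough samples to fill a size x size matrix")
    # Assumption: V is reshaped row by row into the T x F matrix M.
    m = v[: size * size].reshape(size, size)
    # Min-max normalization of M to the 0-255 grayscale range.
    span = m.max() - m.min()
    m = (m - m.min()) / span * 255.0 if span > 0 else np.zeros_like(m)
    # 2D FFT, centered, followed by log scaling of the magnitude.
    spectrum = np.fft.fftshift(np.fft.fft2(m))
    return np.log1p(np.abs(spectrum))

# Example with synthetic data standing in for two AE channels.
rng = np.random.default_rng(0)
t = np.arange(2048) / 100_000.0          # 100 kHz sampling, as in the experiments
channel_a = np.sin(2 * np.pi * 5_000 * t) + 0.1 * rng.standard_normal(2048)
channel_b = 0.1 * rng.standard_normal(2048)
image = sspc_image(channel_a, channel_b)
```

`np.log1p` is used instead of a plain logarithm so that zero-magnitude bins stay finite; whether the original pipeline shifts the spectrum or adds an offset before the logarithm is not stated in the text.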

Segmented sampled stacked channels (SSSC/SSSC*)

In this method, the first channel, \(X_{1}(t)\), represents the primary signal, such as an audio signal, containing both the desired information and noise. The second channel, \(X_{2}(t)\), serves as the ambient noise signal, capturing the noise components present in the environment. By subtracting the second channel from the first, the third channel, \(X_{3}(t)\), is generated, which represents the partially noise-free version of the signal. This process can be mathematically expressed as:

\[ X_{3}(t) = X_{1}(t) - X_{2}(t) \]

Here, \(X_{3}(t)\) emphasizes the desired information in the signal while significantly reducing the influence of noise. This transformation forms the basis for constructing a more refined signal representation, facilitating the analysis of its temporal and spatial features.

In the next step, the signals \(\:{X}_{1}\left(t\right)\), \(\:{X}_{2}\:\left(t\right)\), and \(\:{X}_{3}\left(t\right)\) are split into smaller time windows of size \(\:T\). Each window captures a specific portion of the signals, reflecting their behavior over time. Let \(\:{N}_{T}\) represent the total number of these time windows. For each window \(\:k\), the corresponding segment of the signals is denoted as:

The choice of time window applied to the signal significantly affects the image size. In this approach, two methods are used to reduce the size of the generated images. The first method uses linear sampling. It consists of selecting representative points at evenly spaced positions between the first and last points of the segment, so that the vector reaches the desired length. The representative points \(P_{j}\) are selected linearly across the window. Let \(M\) be the desired vector length after sampling, and \(L\) be the original length of \(W_{k}\). The linear sampling indices are given by:

The second method uses average downsampling. Here, an average is calculated over each group of points and used as the representative value. This reduces the length of the vectors from all three channels. This sequence was used to assess the impact of channel correlation on the overall result. The average downsampling method simplifies the signal representation by calculating the average value for each time window, effectively compressing the data while retaining its essential characteristics. Equation (7) computes the average value \(\overline{W}_{k}\) for a given window \(W_{k}\), while Eq. (8) forms the resulting vector \(W_{k}^{avg}\), which consists of the averages from all time windows. This approach reduces the vector length and helps analyze the correlation between channels.

If the downsampling method is linear, the encoding is called SSSC*. Otherwise, it is abbreviated as SSSC in this article. Figure 5 displays the images generated using the proposed method with average downsampling. Figure 6 shows the images created via the linear downsampling method.
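The two downsampling options can be contrasted with a short NumPy sketch. It is an illustration under assumptions rather than the published procedure: the function names are hypothetical, linear sampling is assumed to pick evenly spaced, rounded indices between the first and last sample, and average downsampling is assumed to use equal-sized, non-overlapping blocks.

```python
import numpy as np

def linear_downsample(window, m):
    """SSSC*-style reduction: keep m evenly spaced samples (assumed index rule)."""
    window = np.asarray(window)
    idx = np.round(np.linspace(0, len(window) - 1, m)).astype(int)
    return window[idx]

def average_downsample(window, m):
    """SSSC-style reduction: mean of m equal, non-overlapping blocks (assumed)."""
    window = np.asarray(window, dtype=float)
    block = len(window) // m
    if block == 0:
        raise ValueError("window is shorter than the requested length")
    # Trailing samples that do not fill a whole block are dropped.
    return window[: block * m].reshape(m, block).mean(axis=1)

# Three-channel example: primary signal, ambient noise, and their difference.
rng = np.random.default_rng(1)
x1 = rng.standard_normal(2048)
x2 = 0.2 * rng.standard_normal(2048)
x3 = x1 - x2
sssc_star = np.stack([linear_downsample(c, 64) for c in (x1, x2, x3)])
sssc = np.stack([average_downsample(c, 64) for c in (x1, x2, x3)])
```

Linear sampling keeps original sample values but discards everything between the selected points, whereas averaging uses every sample at the cost of smoothing short transients.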

Recurrence plot (RP)

Time series exhibit recurring behavior, including periodicities and irregular cyclicities, often observed in dynamic nonlinear systems or stochastic processes27. The Recurrence Plot is a visualization tool for exploring the m-dimensional phase space trajectory and provides a 2D representation of recurrences. By showing points at which trajectories return to previous states, RP is a widely used tool for analyzing recurring behaviors in time series generated by dynamic systems28. It can be formulated as:

\[ R_{i,j} = H\left(\epsilon - \left\| \overrightarrow{s}_{i} - \overrightarrow{s}_{j} \right\|\right), \quad \overrightarrow{s}_{i} \in \mathbb{R}^{m}, \quad i, j = 1, \ldots, N \]

where \(\left\| \cdot \right\|\) is a norm operation, \(\epsilon\) is a threshold value, \(H\) is the Heaviside function used to binarize the distance matrices, \(N\) is the number of states, \(m\) is the dimension of the phase space, and \(\overrightarrow{s}_{i}\) is the \(i\)-th state in the phase space. The resulting RP image has a matrix size of n × n, where n is the product of the number of sensor sequences and the sliding window length on the time series data. Figure 7 shows several images created using the RP method.
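A recurrence plot of this kind, obtained by thresholding the pairwise distances between phase-space states, can be sketched as follows. The function name `recurrence_plot`, the time-delay embedding used to build the states, the Euclidean norm, and the convention H(0) = 1 are assumptions made for illustration; the text does not specify how the states are constructed.

```python
import numpy as np

def recurrence_plot(series, eps, m=2, delay=1):
    """Binary recurrence matrix from a 1D series (illustrative sketch)."""
    x = np.asarray(series, dtype=float)
    n = len(x) - (m - 1) * delay
    if n <= 0:
        raise ValueError("series too short for this embedding")
    # Assumption: states are built by time-delay embedding, shape (n, m).
    states = np.stack([x[i * delay : i * delay + n] for i in range(m)], axis=1)
    # Pairwise Euclidean distances between all states.
    diff = states[:, None, :] - states[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    # Heaviside step of (eps - distance), taking H(0) = 1.
    return (dist <= eps).astype(np.uint8)

signal = np.sin(np.linspace(0.0, 4.0 * np.pi, 50))
rp = recurrence_plot(signal, eps=0.1)
```

The distance matrix grows quadratically with the number of states, which is one reason windows are usually shortened or downsampled before an RP image is formed.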

Data preprocessing for image formation

In this article, a time window of 2048 timestamps is used in both channels, with an overlap of 75% between consecutive windows. Each of these windows contains two main channels in all three methods. In the SSSC/SSSC* method, in addition to these two main channels, a third channel is obtained by subtracting the second channel from the first, and channels are repeated to capture their correlation. In addition, operations such as normalization of each window and other processing steps were performed on these windows. This is shown in Fig. 8. Finally, images with a size of 64 × 64 are created and used as input for the convolutional network.
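With a window of 2048 samples and 75% overlap, consecutive windows start 512 samples apart. The sketch below shows one way to cut a signal this way; the function name `sliding_windows` is hypothetical, and dropping the incomplete final window is an assumption, since the text does not say how the signal tail is handled.

```python
import numpy as np

def sliding_windows(signal, length=2048, overlap=0.75):
    """Split a 1D signal into overlapping windows (incomplete tail is dropped)."""
    signal = np.asarray(signal)
    hop = int(round(length * (1.0 - overlap)))   # 512 samples for 2048 and 75%
    if len(signal) < length:
        return np.empty((0, length), dtype=signal.dtype)
    count = 1 + (len(signal) - length) // hop
    return np.stack([signal[k * hop : k * hop + length] for k in range(count)])

windows = sliding_windows(np.arange(4096))
```

At the 100 kHz sampling frequency used in the experiments, each window spans about 20.5 ms, and a new window starts roughly every 5.1 ms.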

Model architecture

In this study, four deep neural networks were utilized. The first three networks, VGG16, ResNet18, and ShuffleNet, are based on convolutional architectures. The fourth model, CNN-LSTM, incorporates a long short-term memory (LSTM) network, which is effective for analyzing sequential data and time series. Transfer learning facilitated the rapid development of efficient models, with pre-trained models employed to extract features from input images. However, given the differences between the images in this study and those on which the pre-trained networks were trained, direct classification was not feasible. As a solution, the networks were used solely as feature extractors. After the flattening layer, all subsequent fully connected layers were replaced with custom layers, and the original models were no longer utilized for classification. This approach allowed for a tailored fit to the specific dataset and classification task.

Performance metrics

For classification purposes, accuracy is one of the measurement criteria of neural networks. However, this measure cannot always reflect the true performance of the network. For example, if the data are unbalanced, measures other than accuracy should be monitored and reported29. Therefore, different criteria are used to evaluate the networks in addition to accuracy, since adopting the correct evaluation criteria in deep learning tasks is crucial for optimizing the performance of classifiers30.

a. Accuracy: The ratio of correct class predictions to all evaluation samples.

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \]

b. Sensitivity or Recall: The proportion of positive patterns that are correctly classified.

\[ \text{Recall} = \frac{TP}{TP + FN} \]

c. Precision: The proportion of patterns predicted as positive that are truly positive.

\[ \text{Precision} = \frac{TP}{TP + FP} \]

d. F1-Score: The harmonic mean of recall and precision.

\[ \text{F1} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \]

e. Computational cost: The mean duration needed for the model to complete a single inference.

In the above equations, \(TP\), \(TN\), \(FP\), and \(FN\) are the total true-positive, true-negative, false-positive, and false-negative instances for a classifier over all classes. Table 5 shows the distribution of the data in each class across the training, validation, and test sets. It should be noted that this dataset is unbalanced, so accuracy alone is insufficient to evaluate the network. With imbalanced classes, a network can perform unsatisfactorily even when its accuracy is high. For example, it may detect a class with a large number of samples well, which raises the overall accuracy because of the size and influence of that class, while failing to detect the other classes correctly. In such cases, it is recommended that the F1 score be used to assess the network. This score considers both precision and recall, making it a more reliable measure of network performance.
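The effect of class imbalance on these metrics can be seen in a small multi-class example. The sketch below computes per-class precision, recall, and F1 from a confusion matrix and averages them with equal class weight (macro averaging); the function name `macro_metrics` and the choice of macro averaging are assumptions, since the text does not state which averaging the reported scores use.

```python
import numpy as np

def macro_metrics(y_true, y_pred, n_classes):
    """Accuracy plus per-class and macro-averaged precision, recall, and F1."""
    y_true = np.asarray(y_true, dtype=int)
    y_pred = np.asarray(y_pred, dtype=int)
    # Confusion matrix: rows are true classes, columns are predicted classes.
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp

    def safe_div(num, den):
        # Returns 0 where the denominator is 0 (class never predicted or absent).
        return np.divide(num, den, out=np.zeros_like(num), where=den > 0)

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2.0 * precision * recall, precision + recall)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "macro_f1": f1.mean(),
    }

# Imbalanced toy labels: class 0 dominates, and half of class 1 is missed.
report = macro_metrics([0, 0, 0, 0, 1, 1], [0, 0, 0, 0, 0, 1], n_classes=2)
```

In this toy case the accuracy is 5/6 while the recall of the minority class is only 0.5, which the macro F1 of 7/9 reflects more clearly than the accuracy does.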

Results and discussion

This section examines the model’s classification accuracy when generating 2D images from time series signals using different neural network architectures. The network’s performance is evaluated by adding noise at different levels and modes to determine how it affects the accuracy. In addition, the effect of adding process parameters as additional inputs to the network is investigated in both the original (noise-free) and noisy modes. The correlation matrix between the process parameters and the surface roughness classes in Fig. 9 shows no significant relationship between important process parameters, such as cutting speed, cutting depth, and feed rate, and the resulting surface roughness class. These observations highlight the complex and nonlinear nature of the underlying dynamics that govern these production processes. The lack of a clear correlation indicates that the interaction between process parameters and surface roughness is difficult to detect through traditional analysis methods. The ability of deep networks to capture complex dependencies and hierarchical representations makes them valuable tools for detecting subtle relationships that may elude conventional analytical approaches.

The convolutional neural networks were trained on single-channel input images of 64 × 64 pixels with a batch size of 16. The class distribution was based on Table 6. For training, 80% of the entire dataset was used; the remaining 20% was divided equally into validation and test data, giving an 80/10/10 split. A stochastic gradient descent (SGD) optimizer with a learning rate of 0.001 and a momentum of 0.9 was used to train the networks. These parameters were chosen to optimize the convergence of the network during the training iterations.
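For readers unfamiliar with the optimizer settings, the following is a minimal sketch of one common formulation of SGD with momentum, using the learning rate (0.001) and momentum (0.9) reported above. The update form (velocity accumulates gradients, parameters move against the velocity) matches the one documented for PyTorch's `torch.optim.SGD`; whether the study used this framework or this exact variant is an assumption. The quadratic loss is a toy stand-in, not the network's loss.

```python
def sgd_momentum_step(params, grads, velocity, lr=0.001, momentum=0.9):
    """One SGD-with-momentum update: v <- momentum * v + g, then p <- p - lr * v."""
    new_velocity = [momentum * v + g for v, g in zip(velocity, grads)]
    new_params = [p - lr * v for p, v in zip(params, new_velocity)]
    return new_params, new_velocity


# Toy problem (hypothetical): minimize f(w) = sum(w_i ** 2), whose gradient is 2 * w.
params = [1.0, -2.0]
velocity = [0.0, 0.0]
for _ in range(2000):
    grads = [2.0 * p for p in params]
    params, velocity = sgd_momentum_step(params, grads, velocity)

final_loss = sum(p * p for p in params)  # approaches zero as the iterates converge
```

With a momentum of 0.9, the accumulated velocity makes the effective step roughly ten times the nominal learning rate once gradients point in a consistent direction, which is one reason a small learning rate such as 0.001 can still converge in a reasonable number of iterations.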

Model accuracy and performance for different methods

Table 7 shows the training, validation, and test accuracy for the four image generation methods, each tested with four different network architectures. For the first three networks, which are different convolutional architectures, the highest accuracy was achieved with the SSPC method. The fourth network (CNN-LSTM) performs very well on time series data; however, in this method, unlike the other three, the horizontal axis of the images does not represent time, so its accuracy is lower than that of the other three networks. In the other three methods, downsampling was performed to reduce the size of the input images to 64 × 64, and a general decrease in accuracy is observed. However, when downsampling is implemented as an average (SSSC), less accuracy is observed than in the linear mode.

The comparison of mean accuracy, precision, recall, and F1 score in Table 8 shows that Method 1 (SSPC encoding) achieved the highest performance, with accuracy above 98% for most models. However, the CNN-LSTM architecture dropped to 94.64% due to its time dependency. Method 2 (SSSC encoding) also performed well, though slightly less accurately than Method 1. In particular, ShuffleNet experienced a drop to 96.73% accuracy with Method 2. Method 3 (SSSC* encoding) followed a similar trend with lower accuracy, particularly for ResNet18 and ShuffleNet. Conversely, Method 4 (RP encoding) showed significantly lower performance, with accuracy not exceeding 89.49% for any model. In summary, preserving more original data (Methods 1 and 2) resulted in higher accuracy than methods involving data manipulation or downsampling (Methods 3 and 4). These results emphasize the importance of selecting encoding methods tailored to the model characteristics.

The evaluation of image encoding methods goes beyond the quality of the images and includes the computational cost, i.e., the average time required for image generation and data inference, which is a crucial criterion for evaluating the efficiency of these methods. Lower computational costs enable faster data processing and more resource-efficient use, while higher costs can lead to longer processing times and higher resource consumption. Fig. 10 compares the computational costs of the different encoding methods across network architectures. The VGG16 architecture had the longest processing times across all encoding methods.

In contrast, the ShuffleNet architecture demonstrated excellent performance, with inference times of around 10 ms for all methods. When paired with SSSC encoding, ShuffleNet showed high accuracy (Table 8) and fast processing capability. The strong predictive performance and low computational cost make ShuffleNet with SSSC encoding an ideal candidate for real-time online forecasting applications. With processing times on the order of milliseconds, ShuffleNet could rapidly assess AE signals and provide actionable feedback on surface roughness under tight time constraints. The efficiency of compact network architectures like ShuffleNet enables the deployment of these data-driven models directly on machining shop floors.

This study used a handcrafted feature extraction approach to evaluate how well the proposed signal imaging method performs. Instead of converting the data into 2D images, statistical and frequency-based features were extracted from each segment of the AE signals using the same time window as the image-based approach. The extracted features include the mean, standard deviation, minimum and maximum values, median, mean absolute deviation, root mean square (RMS), and frequency-domain features like the mean and standard deviation of the FFT magnitude. These features were then directly used for classification with traditional machine learning models. This comparison helps determine how the proposed method stacks up against conventional feature-based approaches in predicting surface roughness.
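The handcrafted baseline features listed above can be sketched as follows. This is an illustrative implementation only: the function name `extract_features`, the use of the one-sided FFT (`numpy.fft.rfft`), the population standard deviation, and the mean absolute deviation taken about the mean are assumptions, since the text does not specify these details. The example segment is hypothetical.

```python
import numpy as np


def extract_features(segment):
    """Statistical and frequency-domain features of one AE signal segment."""
    x = np.asarray(segment, dtype=float)
    spectrum = np.abs(np.fft.rfft(x))  # one-sided FFT magnitude (assumed)
    return {
        "mean": x.mean(),
        "std": x.std(),                        # population standard deviation
        "min": x.min(),
        "max": x.max(),
        "median": np.median(x),
        "mad": np.mean(np.abs(x - x.mean())),  # mean absolute deviation about the mean
        "rms": np.sqrt(np.mean(x ** 2)),
        "fft_mean": spectrum.mean(),
        "fft_std": spectrum.std(),
    }


# Hypothetical four-sample segment, used only to exercise the function.
segment = np.array([1.0, -1.0, 1.0, -1.0])
features = extract_features(segment)
```

Each segment thus yields a fixed-length feature vector that can be passed directly to a conventional classifier, in contrast to the image-based pipeline where the network learns its own features.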

To classify the extracted features, three widely used machine learning algorithms were tested: Support Vector Machine (SVM), Random Forest (RF), and XGBoost (XGB). The SVM model was configured with an RBF kernel, C = 1, and gamma set to “scale”, striking a balance between model complexity and generalization. The Random Forest classifier used 100 decision trees with no maximum depth restriction, allowing it to capture intricate patterns in the data. The XGBoost model used 100 estimators, a learning rate of 0.1, and a maximum tree depth of 6. The dataset was split into 80% training and 20% testing data using stratified sampling, so that the class proportions were preserved in both subsets and each model was tested fairly on unseen data. The results of this comparison, shown in Table 9, indicate how well traditional machine learning methods perform against the proposed approach.
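The stratified 80/20 split mentioned above can be sketched in plain Python as follows. The function name, the class names, and the class sizes are hypothetical, and the study may well have used a library routine instead; the sketch only shows the idea of splitting each class separately so that both subsets keep the original class proportions.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split sample indices so each class keeps its share in train and test."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Hypothetical imbalanced label set with three roughness classes.
labels = ["low"] * 50 + ["medium"] * 30 + ["high"] * 20
train_idx, test_idx = stratified_split(labels)
test_counts = Counter(labels[i] for i in test_idx)
```

Here the test subset contains 10, 6, and 4 samples of the three classes, mirroring the 50/30/20 proportions of the full set.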

As seen in Table 9, the proposed method performs significantly better than traditional machine learning models that rely on handcrafted features. While approaches like Random Forest and XGBoost show decent accuracy, they still lag behind the deep learning model, which automatically extracts features and achieves an impressive 99% accuracy. This result demonstrates the strength of the proposed method in identifying complex patterns, making it a much more effective solution for classification.

Model performance comparison under varying noise levels

In industry, noisy signals from factors such as electromagnetic interference or low-quality sensors can significantly affect the accuracy of monitoring systems, making it difficult to distinguish between normal and abnormal behavior. Tests were conducted under various interference levels to evaluate the networks’ robustness, simulating different noise conditions. Gaussian noise was applied to the input signals before converting them into images. Table 10 presents the evaluation results, focusing on model accuracy across different noise levels and categorized by encoding method.

The impact of Gaussian noise on model accuracy was tested at two levels, with standard deviations of 0.4 (Level 1) and 0.8 (Level 2) relative to the input signal range (Table 4). Both zero-mean and non-zero-mean noise distributions were introduced. Method 1 (SSPC encoding) showed the most robustness at all noise levels, with training accuracy above 95% and testing accuracy over 90% even at the highest noise levels. Method 2 (SSSC encoding) also showed good resilience to noise but was slightly lower than Method 1. Methods 3 and 4 performed worse, with testing accuracy dropping to 50–75% at Level 1, and their performance gap with Methods 1 and 2 grew wider at Level 2, indicating less robustness under moderate noise conditions. Non-zero mean noise caused greater accuracy drops than zero mean noise for all methods. Methods 1 and 2 excelled at detecting abnormal behavior in noisy environments.
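The noise injection described above can be sketched as follows. The interpretation that the standard deviation is the noise level multiplied by the signal's peak-to-peak range is an assumption, as are the function name, the sinusoidal test signal, and the non-zero mean offset of 0.1; the actual values used in the study are those given in Table 4.

```python
import numpy as np


def add_gaussian_noise(signal, relative_std, relative_mean=0.0, seed=None):
    """Add Gaussian noise whose mean and std are scaled by the signal range."""
    x = np.asarray(signal, dtype=float)
    signal_range = x.max() - x.min()
    rng = np.random.default_rng(seed)
    noise = rng.normal(
        loc=relative_mean * signal_range,
        scale=relative_std * signal_range,
        size=x.shape,
    )
    return x + noise


# Hypothetical clean signal standing in for an AE recording.
t = np.linspace(0.0, 1.0, 10_000)
clean = np.sin(2 * np.pi * 5 * t)

level1_zero_mean = add_gaussian_noise(clean, 0.4, seed=0)  # Level 1
level2_zero_mean = add_gaussian_noise(clean, 0.8, seed=0)  # Level 2
# Non-zero-mean variant; the offset 0.1 is an illustrative placeholder.
level1_shifted = add_gaussian_noise(clean, 0.4, relative_mean=0.1, seed=0)
```

The noisy signals would then be passed through the same encoding step (SSPC, SSSC, SSSC*, or RP) as the clean signals before being fed to the networks.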

Additionally, it is important to note that the second sensor used for data collection was outside the core process, primarily capturing environmental noise. This external noise introduced into the dataset required high noise levels to impact network performance significantly. Acknowledging this factor provides a clearer understanding of the network’s robustness and predictive accuracy under noisy conditions caused by external factors.

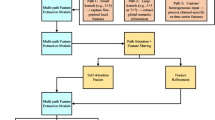

Evaluating networks with augmented feature space

In the previous sections, surface roughness was evaluated and predicted exclusively based on time series data. This section investigates the network performance by incorporating the process parameters as additional inputs. This relationship was established, as shown in Fig. 11. During each machining process, the four parameters specified in Table 2 served as input, and after the machining process, the AE signals were recorded, and corresponding features were calculated.

To integrate the process parameters into the network, each parameter was first normalized, scaling each value relative to the other values of the same parameter. These normalized values were then concatenated with the flattened layer of the network. The efficacy of this approach was assessed for both noise-free and noisy data to gauge the impact of incorporating process parameters on the network’s performance under different conditions.
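The augmentation step can be sketched as follows. Min-max scaling per parameter is an assumption (the text only states that values are normalized relative to other values of the same parameter), and the parameter values, the integer tool-type encoding, and the flattened feature width of 8 are hypothetical placeholders.

```python
import numpy as np


def min_max_normalize(params):
    """Scale each column (one process parameter) to the interval [0, 1]."""
    p = np.asarray(params, dtype=float)
    col_min = p.min(axis=0)
    col_range = p.max(axis=0) - col_min
    col_range[col_range == 0] = 1.0  # avoid division by zero for constant columns
    return (p - col_min) / col_range


def augment_features(flat_features, normalized_params):
    """Append the normalized process parameters to the flattened CNN features."""
    return np.concatenate([flat_features, normalized_params], axis=1)


# Hypothetical rows: cutting speed, depth of cut, feed rate, tool type id.
process_params = np.array([
    [100.0, 0.5, 0.05, 0],
    [200.0, 1.0, 0.10, 1],
    [300.0, 1.5, 0.15, 0],
])
# Placeholder for the flattened output of the convolutional layers.
flat_features = np.zeros((3, 8))

augmented = augment_features(flat_features, min_max_normalize(process_params))
```

The augmented vector, here of width 12, would then feed the fully connected classification layers, so the network sees both the learned AE features and the cutting conditions.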

In this section, evaluations were performed solely using the ShuffleNet architecture. This network was selected due to its previously demonstrated high accuracy and fast processing capability. This efficiency makes it well-suited for integration and testing in real-time machining monitoring applications. By focusing the analysis on a single high-performing network architecture, more in-depth insights can be gained into the impact of augmenting time series data with process parameters on model robustness and generalization performance. Table 11 comprehensively compares network accuracy on the ShuffleNet architecture before and after adding process parameters. The assessment includes different encoding methods: SSPC, SSSC, SSSC*, and RP.

As shown in Fig. 12, the accuracy improves significantly after the process parameters are added. The RP method shows the highest improvement rate, which is notable given its low accuracy before adding process parameters compared with the other methods. The growth rate of accuracy after adding process parameters is inversely related to the initial accuracy: methods with lower initial accuracy experience a higher growth rate, emphasizing the positive impact of integrating process parameters on the overall accuracy of the ShuffleNet architecture.

In addition to improving prediction accuracy, adding process parameters accelerates model convergence during the training of the ShuffleNet architecture. As can be seen in Fig. 13, the learning curve showing the accuracy of the model across epochs converges faster when process data are included along with the AE time series. In particular, when the model is trained on multivariate input data, it reaches its maximum accuracy in fewer epochs. In addition, the loss curve decreases faster when the process parameters are included, indicating that the model can minimize errors faster when training with the additional variables. Augmenting the time series data with process parameters therefore improves the final model’s accuracy and speeds up training by enabling faster optimization. This suggests that the process data provide valuable information to the model beyond AE alone, enabling more efficient learning. Accelerated learning through multimodal inputs is thus an additional benefit of integrating process measurements into the model.

Furthermore, the benefit of adding process parameters extends to noisy data scenarios, as detailed in Table 12. The corresponding Fig. 14 shows that adding process parameters enhances accuracy even in the presence of noise. After incorporating process parameters, the highest accuracy is achieved at noise level 1, followed by the same scenario at noise level 2. Adding these parameters mitigates the accuracy loss induced by elevated noise levels. Consequently, the network with added process parameters outperforms the one relying solely on AE signals, even at the higher noise level 2.

Finally, Table 13 summarizes the overall classification of the methods.

Conclusion

This article explores the potential of various methods of encoding time series signals for predicting and classifying surface roughness values using deep convolutional networks. The framework creates two-dimensional images using four different methods, including a unique approach that transforms the time series signals recorded during the machining process into images. Deep networks such as VGG16, ResNet18, ShuffleNet, and CNN-LSTM were used, and the accuracy, efficiency, and computational cost of each network were evaluated. Different noise levels were added to the input data to assess the robustness of the networks more accurately. Additionally, process parameters were used as additional input, and it was observed that the accuracy of the network improved. The following general conclusions can be drawn from the experimental results. Cutting speed, feed per tooth, depth of cut, and tool type strongly affected the responses. However, the effect of feed rate was more profound, followed by tool type and depth of cut.

(1) The SSPC method requires less preprocessing than existing methods and makes image creation more accessible. The results indicate that this method achieves the highest accuracy of approximately 98% under noise-free conditions and around 91% when noise is introduced. In contrast, the CNN-LSTM network, which has a temporal nature, demonstrates an accuracy of about 94% under noise-free conditions.

(2) Of the two downsampling methods, SSSC and SSSC*, the averaging method provides better results. This method achieves an accuracy of approximately 96–98% and demonstrates strong resilience to noise, with a reduction in accuracy of only about 2–5% under noisy conditions. For the RP method, downsampling the data is essential, as the computational volume would otherwise have increased significantly. Overall, downsampling effectively reduces data size while maintaining accuracy. Due to the low computational cost of the ShuffleNet network, approximately 8 to 10 milliseconds, this network is ideally suited for real-time and online forecasting applications.

(3) Because the SSPC method involves less preprocessing, it may perform less well when noise is added to the original data values. For example, with non-zero-mean noise at Level 1, the accuracy drop on the test data for the SSSC method is only about 2%, resulting in an accuracy of 94.64%. In comparison, the SSPC method experiences a drop of about 1%, resulting in an accuracy of 92.29%.

(4) Using process parameters as an additional input improved the performance of the network; the lower the initial accuracy of the network, the higher the improvement rate. Adding these parameters also led to faster convergence, and the network loss values decreased faster.

Innovative approaches for encoding time series signals and converting one-dimensional signals into two-dimensional images are suggested as directions for future work. Furthermore, the integration of various signals recorded during data acquisition, such as force and AE signals, should be explored using techniques such as data-level, feature-level, and decision-level fusion. Additionally, regression analysis is recommended for accurately estimating surface roughness coefficients based on AE signals, particularly in tea classification studies. Discrete surface roughness values can be employed instead of regression in certain analyses.

Data availability

The datasets generated during the current study are available from the corresponding author upon reasonable request.

Change history

17 June 2025

A Correction to this paper has been published: https://doi.org/10.1038/s41598-025-07096-1

Abbreviations

- ANN: Artificial neural network
- AA: Aluminium alloy
- Ra: Average surface roughness (µm)
- Vc: Cutting speed (m/min)
- fz: Feed per tooth (mm/tooth)
- ap: Depth of cut (mm)
- SVM: Support vector machine
- GPR: Gaussian process regression
- RF: Random forest
- FFT: Fast Fourier transform
- GA: Genetic algorithm
- CNN: Convolutional neural network
- AE: Acoustic emission
- SNR: Signal-to-noise ratio
- SSPC: Segmented stacked permuted channels
- SSSC/SSSC*: Segmented sampled stacked channels
- RP: Recurrence plot

Author information

Contributions

Validation, M.Z.; formal analysis, M.Z.; investigation, M.Z., M.SH., S.A.N; writing—original draft preparation, M.Z., M.SH., S.A.N., supervision and writing—review and editing, M.SH., S.A.N. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: The original version of this Article contained errors in the names of the authors Mohammad Zangane and Mohammad Shahbazi, which were incorrectly given as M Zangane and M Shahbazi, respectively.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zangane, M., Shahbazi, M. & Niknam, S. Using deep convolutional networks combined with signal processing techniques for accurate prediction of surface quality. Sci Rep 15, 7134 (2025). https://doi.org/10.1038/s41598-025-92114-5

DOI: https://doi.org/10.1038/s41598-025-92114-5