Abstract

Using social robots is a promising approach for supporting senior citizens in super-aging societies. Essential design factors for socially acceptable robots include effective emotional expression and cuteness. Past studies have reported that robot-initiated touch toward interaction partners is effective for both factors in interactions with adults, but its effects on seniors remain unknown. Therefore, in this study, we investigated the effects of robot-initiated touch behaviors on perceived emotions (valence and arousal) and the feeling of kawaii, a common Japanese adjective expressing cute, lovely, or adorable. In experiments with Japanese participants (adults: 21–49 years, seniors: 65–79 years) using a baby-type robot, the robot’s touch significantly increased perceived valence regardless of the expressed emotion and the participants’ age. The robot’s touch also increased arousal and the feeling of kawaii in adults, but not in seniors. We discuss the differential effects of robot-initiated touch between adults and seniors, focusing on emotional processing in seniors. These findings have implications for designing social robots capable of physical interaction with seniors.


Introduction

Due to the increasing number of senior citizens worldwide, achieving high-quality nursing care with technology is an essential challenge for researchers. One possible approach is to use care-receiving robots that interact with seniors, encouraging them to take care of the robots and increasing their daily activity. In this context, robotics researchers have developed various social robots, including a pet type1 and a baby type2. Such robots have shown positive effects not only on seniors at senior facilities but also on nursing staff, whose mental load was reduced3. Social acceptance is required to accelerate the adoption of such robots. Recent studies have focused on two essential robot factors for creating social robots that ordinary people find acceptable: emotional expressions and cuteness.

Concerning the former, several past studies reported that emotional cues increase social acceptance, not only for social robots but also for other artificial agents, such as non-humanoid urban robots4 and virtual humans5. Recent human–robot interaction studies have focused on emotional expressions6 conveyed through facial expressions7, body movements8, and voice characteristics9. Touch behaviors from robots to people have also been used as emotional cues to enhance the expressed emotions of social robots10,11,12.

Concerning cuteness, many recent commercial robots, including LOVOT13, AIBO14, and Paro15, have been designed with cute appearances. These robots, all developed in Japan, share a common design policy: kawaii, a common Japanese adjective that conveys cute, lovely, or adorable16,17. Konrad Lorenz’s baby schema, a well-known set of features that induce perceptions of cuteness18,19, has also been adopted in designing such robots. Besides appearance, robotics researchers have investigated other elicitors of the feeling of kawaii, including the number of robots20 and their locomotion behaviors21. Recent human–robot interaction studies have also focused on robots’ touch behaviors toward an object as another elicitor related to the feeling of kawaii22.

Interestingly, recent studies suggest that touch behaviors from robots have positive effects from both perspectives, i.e., emotional expression and cuteness. In the care of seniors, past studies reported positive aspects of touch, such as fostering bonding between nurses and seniors23 and enhancing long-term mental health care of older people24 and psychiatric care25. Past studies have also investigated the positive effects of touch interaction between robots and seniors, such as the therapeutic effects of interacting with a seal robot26 and the benefits of an animal-like robot for dementia care27. These studies, which focused on social acceptance and nursing care, reported positive outcomes of touch interaction with robots from various viewpoints.

However, the effects of robot-initiated touch on perceived emotions and the feeling of kawaii have not been investigated in the context of social robots for seniors; the previous studies on robot-initiated touch described above mainly conducted their experiments with adults. Some previous studies suggested that the perception of emotional expressions and the feeling of kawaii differ between seniors and younger adults28,29,30,31; visual and auditory features appear to become less effective in seniors’ emotional perception. On the other hand, although aging decreases touch sensitivity and acuity, past research reported that seniors rated touch stimuli as more pleasant than younger adults did32,33,34, suggesting that touch might be a useful tool for enhancing a robot’s expressed emotions.

Therefore, investigating how robot-initiated touch affects seniors’ perception of emotional expressions and their feeling of kawaii might help build knowledge for designing robots that are socially acceptable to this age group. Based on the positive effects of touch stimuli for seniors32,33,34 and of touch interaction with robots26,27, we hypothesized that robot-initiated touch would have positive effects on perceived emotions and the feeling of kawaii across age groups. Based on this hypothesis, we made the following predictions:

- Prediction 1: A baby robot’s touches will increase the valence of the perceived emotion regardless of the user’s age.
- Prediction 2: A baby robot’s touches will increase the arousal of the perceived emotion regardless of the user’s age.
- Prediction 3: A baby robot’s touches will increase the feeling of kawaii regardless of the user’s age.

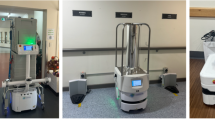

Based on these considerations, we developed a robot that can actively touch users by modifying an existing baby robot (Fig. 1) that was specially designed for people with dementia. We experimentally investigated the effects of its touch behaviors on the perception of its emotional expressions and on the feeling of kawaii with 48 participants (24 adults aged 21 to 49 and 24 seniors aged 65 to 79, with identical gender ratios in both age groups). The experiment followed a 2 × 2 × 2 mixed design (age: adults vs. seniors, between subjects; touch: with vs. without touch, and emotion: happy vs. angry vocal expressions, both within subjects), so each participant experienced four interactions. We measured the perceived valence and arousal of the expressed emotions with two items and the feeling of kawaii with a third item.

Results

Perceived feelings toward expressed emotions

We used the affective slider35 to measure the valence and arousal that participants perceived in the emotions expressed by the robot. We conducted a three-way mixed-design analysis of variance (ANOVA) with age, touch, and emotion as factors and report the F-values (F), p-values (p), and effect sizes (partial eta squared, ηp²).
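Partial eta squared can be recovered from each reported F statistic and its degrees of freedom through the standard identity ηp² = (F · df_effect) / (F · df_effect + df_error). The Python sketch below applies this identity to the valence results as a quick consistency check on the reported effect sizes. It is not the authors’ analysis pipeline (the statistical software used is not stated), and the helper name `partial_eta_squared` is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom.

    eta_p^2 = (F * df_effect) / (F * df_effect + df_error)
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and degrees of freedom positive")
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Reported valence effects, all with F(1, 46); F values copied from the Results.
valence_effects = {
    "touch": 12.144,
    "emotion": 73.742,
    "age": 33.753,
    "emotion x age": 23.155,
}

for name, f_value in valence_effects.items():
    print(f"{name}: eta_p^2 = {partial_eta_squared(f_value, 1, 46):.3f}")
```

The printed values (0.209, 0.616, 0.423, 0.335) match the effect sizes reported for valence; the same call can be applied to the arousal and kawaii statistics.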

For valence (Fig. 2, left), prediction 1 was supported: the robot’s touch behaviors significantly increased perceived valence regardless of the expressed emotion (happy or angry) and the participants’ age group (adults or seniors). The analysis found significant main effects of touch (F(1, 46) = 12.144, p < 0.001, ηp² = 0.209), emotion (F(1, 46) = 73.742, p < 0.001, ηp² = 0.616), and age (F(1, 46) = 33.753, p < 0.001, ηp² = 0.423), as well as a significant two-way interaction between emotion and age (F(1, 46) = 23.155, p < 0.001, ηp² = 0.335). The analysis found no significant two-way interaction between touch and age (F(1, 46) = 2.961, p = 0.092, ηp² = 0.060) or between touch and emotion (F(1, 46) = 0.015, p = 0.902, ηp² = 0.001), and no significant three-way interaction (F(1, 46) = 0.070, p = 0.793, ηp² = 0.002).

Simple main effect analyses showed significant differences: adults < seniors in the angry condition (p < 0.001), and angry < happy in both the senior group (p < 0.001) and the adult group (p < 0.001).

For arousal (Fig. 2, right), prediction 2 was partially supported: touch behaviors increased arousal only in adults, whereas seniors gave high arousal ratings regardless of touch. The analysis found significant main effects of touch (F(1, 46) = 7.206, p = 0.010, ηp² = 0.135), emotion (F(1, 46) = 32.897, p < 0.001, ηp² = 0.417), and age (F(1, 46) = 9.780, p = 0.003, ηp² = 0.175), as well as significant two-way interactions between touch and age (F(1, 46) = 7.139, p = 0.010, ηp² = 0.134) and between emotion and age (F(1, 46) = 8.894, p = 0.005, ηp² = 0.162). There was no significant two-way interaction between touch and emotion (F(1, 46) = 1.347, p = 0.252, ηp² = 0.028) and no significant three-way interaction (F(1, 46) = 1.424, p = 0.293, ηp² = 0.030).

Simple main effect analyses showed significant differences: adults < seniors in the without-touch condition (p < 0.001), without-touch < with-touch in the adult group (p < 0.001), adults < seniors in the angry condition (p < 0.001), and angry < happy in the adult group (p < 0.001).

The feeling of kawaii

Following past studies, we measured the feeling of kawaii with a single questionnaire item (“the degree of the feeling of kawaii”) on a 0-to-10 response format (0: “not kawaii at all,” 10: “extremely kawaii”)20. We employed an 11-point format because a past study argued that 11-point scales closely approximate interval data36. We conducted a three-way mixed-design ANOVA with age, touch, and emotion as factors.

For the feeling of kawaii (Fig. 3), prediction 3 was partially supported: touch behaviors increased the feeling of kawaii only in adults, whereas seniors gave high ratings regardless of touch. The analysis found significant main effects of touch (F(1, 46) = 14.496, p < 0.001, ηp² = 0.240) and emotion (F(1, 46) = 42.894, p < 0.001, ηp² = 0.483), and a significant two-way interaction between touch and age (F(1, 46) = 8.250, p = 0.006, ηp² = 0.152). The analysis found no significant main effect of age (F(1, 46) = 2.764, p = 0.103, ηp² = 0.057), no significant two-way interaction between emotion and age (F(1, 46) = 0.312, p = 0.579, ηp² = 0.007) or between touch and emotion (F(1, 46) = 0.142, p = 0.708, ηp² = 0.003), and no significant three-way interaction (F(1, 46) = 0.959, p = 0.333, ηp² = 0.020). Simple main effect analyses showed significant differences: adults < seniors in the without-touch condition (p = 0.032) and without-touch < with-touch in the adult group (p < 0.001).

Discussion

This study provides several implications regarding a robot’s touch effects on perceived emotions and the feeling of kawaii. One interesting phenomenon is the difference in touch effects between adults and seniors. Consistent with past studies, adults reacted positively to the robot’s touch. In contrast, seniors showed a significant touch effect only on perceived valence, although past studies reported that age did not play a significant role in human–human touch interaction37,38. One possible reason is a ceiling effect. The seniors’ questionnaire scores were generally higher than those of the adults, and a past study reported that seniors rated affective stimuli higher in overall valence and arousal than young adults did39. In other words, our experimental setting may have had limited sensitivity for detecting the effects of robot-initiated touch on seniors’ perceived emotions and feeling of kawaii. If so, why did the touch effect appear in valence (prediction 1) but not in arousal or the feeling of kawaii (predictions 2 and 3)?

One possible explanation is the dedifferentiation of emotional processing in seniors. A past study40 reported that seniors rate visual stimuli as more positive in valence and lower in arousal than younger adults do. Assuming that robot-initiated touch is a positive stimulus, seniors may have perceived it as high in valence but relatively low in arousal compared with younger participants. This would enhance the touch effect on perceived valence while suppressing the touch effect on perceived arousal.

Another past study reported that the feeling of kawaii toward infants clearly increased with the observer’s age41. Given this characteristic of seniors, the touch effect on the feeling of kawaii might not have appeared in the senior group because our robot resembles an infant, so seniors may have found it kawaii simply by looking at it. Note that even though the positive effects for seniors are limited, increasing perceived valence remains useful for achieving socially acceptable robots with effective emotional expressions.

In our study, the seniors’ ratings in the angry condition were generally higher than the middle score (4), which might indicate that, unlike the adult participants, they evaluated the baby robot’s angry expression positively. However, this result might have been influenced by the short interaction period in our study. A past study3 that deployed the original version of the baby robot at an elderly nursing home for 2 weeks reported that some participants refused to interact with the robots because of their angry (crying) behavior. A long-term experiment is needed to investigate whether a baby robot’s touch can mitigate negative impressions of its angry behaviors.

This study has several limitations regarding the types of robots and touch behaviors. We used only a single baby robot and a simple touch behavior during its emotional expressions, and we conducted the experiments in a single country. Testing different baby robots and touch behaviors (e.g., rubbing or squeezing) in different cultural contexts would clarify the combined effects of these factors. Moreover, adjusting the timing and frequency of touches during speech is important for more natural emotional expressions, as in other forms of touch interaction during conversation42. Despite these limitations, our results provide knowledge about the relationship between robot-initiated touch and perceived emotional feelings.

Methods

This study and all its procedures were approved by the Advanced Telecommunication Research Review Boards (501-4). All the participants provided written informed consent before joining this study. All the methods were performed in accordance with relevant guidelines and regulations.

Device

Figure 4 shows the robot used in this study, Sawatte Hiro-chan, a modified version of HIRO3. We added a motor device (RS314MDVF) and a battery to drive both of its arms for touch behaviors during the interactions. We used an M5Stack to control its voices and the motor device. We prepared ten-second voice clips for the happy and angry emotions.

Conditions

This study considered three factors: (1) touch, (2) emotion, and (3) age. Touch and emotion were within-subject factors, and age was a between-subject factor. Each participant experienced four conditions combining the two levels of the touch and emotion factors, and the order of the conditions was counterbalanced.
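The text states that condition orders were counterbalanced but not how. One common scheme, assumed here purely for illustration, is a balanced (Williams) Latin square: each of the four touch × emotion conditions appears once in every serial position, and each condition immediately precedes every other condition exactly once. The Python sketch below builds such a square and assigns its rows to participants in turn; with 24 participants per age group, each row would be used six times. The condition labels and helper names are hypothetical.

```python
from itertools import product

# Hypothetical labels for the four within-subject conditions (touch x emotion).
CONDITIONS = [
    f"{touch}/{emotion}"
    for touch, emotion in product(["with-touch", "without-touch"], ["happy", "angry"])
]


def balanced_latin_square(n: int) -> list[list[int]]:
    """Williams-style balanced Latin square for an even number of conditions.

    Every condition appears once in each row and each column, and every
    ordered pair of conditions occurs as immediate neighbours exactly once.
    """
    if n < 2 or n % 2 != 0:
        raise ValueError("this construction needs an even number of conditions")
    # First row alternates from both ends: 0, 1, n-1, 2, n-2, ...
    first = [0]
    low, high = 1, n - 1
    while len(first) < n:
        first.append(low)
        low += 1
        if len(first) < n:
            first.append(high)
            high -= 1
    # Row r adds r (mod n) to every entry of the first row.
    return [[(c + r) % n for c in first] for r in range(n)]


def order_for_participant(index: int) -> list[str]:
    """Assign square rows to participants in turn (index 0, 1, 2, ...)."""
    square = balanced_latin_square(len(CONDITIONS))
    return [CONDITIONS[c] for c in square[index % len(square)]]


for participant in range(4):
    print(participant, order_for_participant(participant))
```

Because 4! = 24, full counterbalancing (every possible order used once per age group) would also have been feasible with 24 participants per group; the Latin square is simply the more compact option.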

For the touch factor, we prepared two levels: with-touch and without-touch. In the with-touch condition, the robot touched the participant’s hands by moving both of its arms three times while expressing an emotion; in the without-touch condition, the robot did not move its arms. For the emotion factor, we prepared two levels, happy and angry, using actual recordings of a baby laughing and crying, respectively. For the age factor, we prepared two levels: adults (21–49) and seniors (65–79).
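The with-touch condition can be viewed as a timed event list layered on top of the voice clip. The Python sketch below is purely illustrative: the text says only that both arms moved three times while the ten-second voice played, so the even spacing of the strokes, the event labels, and the function names are assumptions, and the code does not communicate with the M5Stack or the RS314MDVF motor.

```python
def touch_onsets(clip_seconds: float, n_touches: int) -> list[float]:
    """Evenly spaced onset times (in seconds) for arm strokes within one voice clip.

    Assumption: strokes are spread evenly; the paper gives only their count.
    """
    if clip_seconds <= 0 or n_touches <= 0:
        raise ValueError("clip length and touch count must be positive")
    slot = clip_seconds / n_touches
    # Start each stroke at the centre of its slot, leaving a margin at both ends.
    return [round(slot * (i + 0.5), 2) for i in range(n_touches)]


def trial_schedule(with_touch: bool, clip_seconds: float = 10.0,
                   n_touches: int = 3) -> list[tuple[float, str]]:
    """Hypothetical event list for one trial: voice at 0 s, plus arm strokes if with_touch."""
    events = [(0.0, "play_voice")]
    if with_touch:
        events += [(t, "move_both_arms") for t in touch_onsets(clip_seconds, n_touches)]
    return events


print(trial_schedule(with_touch=True))
# [(0.0, 'play_voice'), (1.67, 'move_both_arms'), (5.0, 'move_both_arms'), (8.33, 'move_both_arms')]
print(trial_schedule(with_touch=False))
# [(0.0, 'play_voice')]
```

Keeping the schedule separate from the hardware calls makes it easy to vary touch timing and frequency, which the Discussion identifies as a direction for future work.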

Participants

Forty-eight healthy participants joined the experiment: 12 females and 12 males aged 21–49 (mean 34.2) and 12 females and 12 males aged 65–79 (mean 71.6). An a priori power analysis with G*Power43 (medium effect size f = 0.25, power = 0.95, α = 0.05) indicated a required sample size of 36, so the number of participants in this study was sufficient. All participants were native Japanese speakers recruited through an agency.
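G*Power specifies ANOVA effect sizes as Cohen’s f, whereas the Results report partial eta squared. The standard conversion f² = η² / (1 − η²) puts the assumed medium effect (f = 0.25) on the same scale as the reported effects, at roughly η² ≈ 0.059. The Python sketch below performs only this conversion; it does not reproduce the required sample size of 36, which also depends on G*Power settings the text does not report (e.g., the assumed correlation among repeated measures). Function names are illustrative.

```python
import math


def f_to_eta_squared(cohens_f: float) -> float:
    """Convert Cohen's f to (partial) eta squared: eta^2 = f^2 / (1 + f^2)."""
    return cohens_f ** 2 / (1 + cohens_f ** 2)


def eta_squared_to_f(eta_squared: float) -> float:
    """Inverse conversion: f = sqrt(eta^2 / (1 - eta^2))."""
    if not 0 <= eta_squared < 1:
        raise ValueError("eta squared must lie in [0, 1)")
    return math.sqrt(eta_squared / (1 - eta_squared))


print(f"assumed f = 0.25 -> eta^2 = {f_to_eta_squared(0.25):.3f}")      # 0.059
print(f"observed eta_p^2 = 0.134 -> f = {eta_squared_to_f(0.134):.2f}")  # 0.39
```

For comparison, the smallest significant effect in the Results (ηp² = 0.134, the touch × age interaction on arousal) corresponds to f ≈ 0.39, above the medium effect assumed in the power analysis.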

Measurements

We used the affective slider35 to measure the valence and arousal that participants perceived in the emotions expressed by the robot. This tool lets participants enter valence and arousal values using sliders in a graphical user interface; the values range from 0.0 to 1.0.

To evaluate the feeling of kawaii, we used a single questionnaire item (“the degree of the feeling of kawaii”) with a 0-to-10 response format (0: “not kawaii at all,” 10: “extremely kawaii”)20. We employed an 11-point format because a past study argued that 11-point scales closely approximate interval data36.

Data availability

Data supporting this study’s findings are available upon reasonable request from the corresponding authors.

References

Nakashima, T., Fukutome, G. & Ishii, N. Healing effects of pet robots at an elderly-care facility. In 2010 IEEE/ACIS 9th International Conference on Computer and Information Science, 407–412 (2010).

Kanoh, M. Babyloid. J. Robot. Mechatron. 26(4), 513–514 (2014).

Sumioka, H., Yamato, N., Shiomi, M. & Ishiguro, H. A minimal design of a human infant presence: A case study toward interactive doll therapy for older adults with dementia. Front. Robot. AI 8, 633378 (2021).

Hoggenmueller, M., Chen, J. & Hespanhol, L. Emotional expressions of non-humanoid urban robots: The role of contextual aspects on interpretations. In Proceedings of the 9th ACM International Symposium on Pervasive Displays, 87–95 (2020).

Ham, J., Li, S., Looi, J. & Eastin, M. S. Virtual humans as social actors: Investigating user perceptions of virtual humans’ emotional expression on social media. Comput. Hum. Behav. 155, 108161 (2024).

Stock-Homburg, R. Survey of emotions in human–robot interactions: Perspectives from robotic psychology on 20 years of research. Int. J. Soc. Robot. 14(2), 389–411 (2022).

Cameron, D. et al. The effects of robot facial emotional expressions and gender on child–robot interaction in a field study. Connect. Sci. 30(4), 343–361 (2018).

Tuyen, N. T. V., Elibol, A. & Chong, N. Y. Learning bodily expression of emotion for social robots through human interaction. IEEE Trans. Cogn. Dev. Syst. 13(1), 16–30 (2020).

Striepe, H., Donnermann, M., Lein, M. & Lugrin, B. Modeling and evaluating emotion, contextual head movement and voices for a social robot storyteller. Int. J. Soc. Robot. 13, 441–457 (2021).

Zheng, X., Shiomi, M., Minato, T. & Ishiguro, H. What kinds of robot’s touch will match expressed emotions?. IEEE Robot. Autom. Lett. 5(1), 127–134 (2019).

Teyssier, M., Bailly, G., Pelachaud, C. & Lecolinet, E. Conveying emotions through device-initiated touch. IEEE Trans. Affect. Comput. 13, 1477–1488 (2020).

Zheng, X., Shiomi, M., Minato, T. & Ishiguro, H. Modeling the timing and duration of grip behavior to express emotions for a social robot. IEEE Robot. Autom. Lett. 6(1), 159–166 (2020).

Yoshida, N. et al. Production of character animation in a home robot: A case study of LOVOT. Int. J. Soc. Robot. 14(1), 39–54 (2022).

Fujita, M. AIBO: Toward the era of digital creatures. Int. J. Robot. Res. 20(10), 781–794 (2001).

Shibata, T. An overview of human interactive robots for psychological enrichment. Proc. IEEE 92(11), 1749–1758 (2004).

Kinsella, S. Cuties in Japan. In Women, media and consumption in Japan, 230–264 (Routledge, 2013).

Nittono, H., Fukushima, M., Yano, A. & Moriya, H. The power of kawaii: Viewing cute images promotes a careful behavior and narrows attentional focus. PLoS ONE 7(9), e46362 (2012).

Lorenz, K. The innate forms of potential experience. Z. Tierpsychol. 5, 235–409 (1943).

Brooks, V. & Hochberg, J. A psychophysical study of “cuteness”. Percept. Mot. Skills 11, 205 (1960).

Shiomi, M., Hayashi, R. & Nittono, H. Is two cuter than one? Number and relationship effects on the feeling of kawaii toward social robots. PLoS ONE 18(10), e0290433 (2023).

Ohkura, M. Kawaii engineering. In Kawaii engineering, 3–12 (Springer, 2019).

Okada, Y. et al. Kawaii emotions in presentations: Viewing a physical touch affects perception of affiliative feelings of others toward an object. PLoS ONE 17(3), e0264736 (2022).

Routasalo, P. Non-necessary touch in the nursing care of elderly people. J. Adv. Nurs. 23(5), 904–911 (1996).

Gleeson, M. & Timmins, F. The use of touch to enhance nursing care of older person in long-term mental health care facilities. J. Psychiatr. Ment. Health Nurs. 11(5), 541–545 (2004).

Salzmann-Erikson, M. & Eriksson, H. Encountering touch: A path to affinity in psychiatric care. Issues Ment. Health Nurs. 26(8), 843–852 (2005).

Shibata, T. & Wada, K. Robot therapy: A new approach for mental healthcare of the elderly—A mini-review. Gerontology 57(4), 378–386 (2011).

Jung, M. M., Van der Leij, L. & Kelders, S. M. An exploration of the benefits of an animallike robot companion with more advanced touch interaction capabilities for dementia care. Front. ICT 4, 16 (2017).

Beer, J. M., Smarr, C.-A., Fisk, A. D. & Rogers, W. A. Younger and older users’ recognition of virtual agent facial expressions. Int. J. Hum. Comput. Stud. 75, 1–20 (2015).

Hayes, G. S. et al. Task characteristics influence facial emotion recognition age-effects: A meta-analytic review. Psychol. Aging 35(2), 295 (2020).

Cortes, D. S. et al. Effects of aging on emotion recognition from dynamic multimodal expressions and vocalizations. Sci. Rep. 11(1), 2647 (2021).

Toyoshima, A. & Nittono, H. The concept and components of “Kawaii” for older adults in Japan. Jpn. Socio-Gerontol. Soc. 41(4), 409–419 (2020).

Sehlstedt, I. et al. Gentle touch perception across the lifespan. Psychol. Aging 31(2), 176 (2016).

McIntyre, S., Nagi, S. S., McGlone, F. & Olausson, H. The effects of ageing on tactile function in humans. Neuroscience 464, 53–58 (2021).

Schlintl, C. & Schienle, A. Evaluation of affective touch: A comparison between two groups of younger and older females. Exp. Aging Res. 50, 568–576 (2023).

Betella, A. & Verschure, P. F. The affective slider: A digital self-assessment scale for the measurement of human emotions. PLoS ONE 11(2), e0148037 (2016).

Wu, H. & Leung, S.-O. Can Likert scales be treated as interval scales?—A Simulation study. J. Soc. Serv. Res. 43(4), 527–532 (2017).

Russo, V., Ottaviani, C. & Spitoni, G. F. Affective touch: A meta-analysis on sex differences. Neurosci. Biobehav. Rev. 108, 445–452 (2020).

Saarinen, A., Harjunen, V., Jasinskaja-Lahti, I., Jääskeläinen, I. P. & Ravaja, N. Social touch experience in different contexts: A review. Neurosci. Biobehav. Rev. 131, 360–372 (2021).

Smith, D. P., Hillman, C. H. & Duley, A. R. Influences of age on emotional reactivity during picture processing. J. Gerontol. Ser. B 60(1), P49–P56 (2005).

Grühn, D. & Scheibe, S. Age-related differences in valence and arousal ratings of pictures from the International Affective Picture System (IAPS): Do ratings become more extreme with age?. Behav. Res. Methods 40(2), 512–521 (2008).

Nittono, H., Lieber-Milo, S. & Dale, J. P. Cross-cultural comparisons of the cute and related concepts in Japan, the United States, and Israel. SAGE Open 11(1), 2158244020988730 (2021).

Akiyoshi, T., Sumioka, H., Nakanishi, J., Kato, H. & Shiomi, M. Modeling and implementation of intra-hug gestures during dialogue for a huggable robot. IEEE Robot. Autom. Lett. 10(1), 136–143 (2025).

Faul, F., Erdfelder, E., Lang, A.-G. & Buchner, A. G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39(2), 175–191 (2007).

Acknowledgements

This work was partially supported by JST Moonshot R&D Grant Number JPMJMS2011 (experiment), JST CREST Grant Number JPMJCR18A1 (hardware development), and JSPS KAKENHI Grant Numbers JP21H04897 (evaluation) and JP24K21327 (writing).

Author information


Contributions

Masahiro Shiomi contributed to the study’s conception and design. Material preparation, data collection, and analysis were done by Masahiro Shiomi. The robot’s concept and development were done by Hidenobu Sumioka. The first draft of the manuscript was written by Masahiro Shiomi, and both authors commented on its previous versions. Both read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Shiomi, M., Sumioka, H. Differential effects of robot’s touch on perceived emotions and the feeling of Kawaii in adults and seniors. Sci Rep 15, 7590 (2025). https://doi.org/10.1038/s41598-025-92172-9


DOI: https://doi.org/10.1038/s41598-025-92172-9