Abstract

Autism spectrum disorder (ASD) comprises a varied set of neuropsychiatric conditions. It is characterized by a definite degree of impairment in social communication, academic functioning, and personal interaction, together with restricted and repetitive behaviours. Individuals with ASD may behave, communicate, and learn differently from others. ASDs typically become apparent before age 3 years, and the associated impairments affect multiple areas of a person's life. Deep learning (DL) and machine learning (ML) techniques are used in medical research to detect and diagnose ASD promptly. This study presents a Two-Tier Metaheuristic-Driven Ensemble Deep Learning for Effective Autism Spectrum Disorder Diagnosis in Disabled Persons (T2MEDL-EASDDP) model. The main aim of the T2MEDL-EASDDP model is to analyze and diagnose the different stages of ASD in disabled individuals. To accomplish this, the model applies min-max normalization during data pre-processing so that the input data are scaled to a uniform range. Furthermore, improved butterfly optimization algorithm (IBOA)-based feature selection (FS) is used to identify the most relevant features and reduce dimensionality efficiently. Additionally, an ensemble of three DL approaches, namely autoencoder (AE), long short-term memory (LSTM), and deep belief network (DBN), is employed to analyze and detect ASD. Finally, the model employs a Brownian motion (BM) and directional mutation scheme-based coati optimization algorithm (BDCOA) to fine-tune the hyperparameters of the three ensemble models. Extensive simulation analyses of the T2MEDL-EASDDP technique are conducted on the ASD-Toddler and ASD-Adult datasets. The performance validation showed that the T2MEDL-EASDDP method achieves a superior accuracy of 97.79% over existing techniques.


Introduction

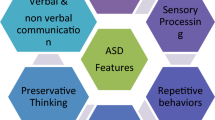

ASDs are developmental disabilities defined by challenges in communication and social interaction, together with repetitive, restricted, and stereotyped behaviour patterns1. Symptoms usually become apparent before age 3 years. The nature of these difficulties, the absence of biological markers for diagnosis, and changes in clinical descriptions over time make it difficult to monitor ASD prevalence. Accurate data reporting is crucial for estimating ASD prevalence in the population and can support primary research2. ASD is a serious neurodevelopmental disorder with very high medical care costs. It is a lifelong condition characterized by stereotyped behaviour and impaired social interaction, frequently accompanied by substantial deficits in communication skills. ASD is associated with neurological and genetic factors3. ASD-related behaviours manifest in social interaction, imagination and thinking, repetitive activities, and communication with other individuals. Earlier diagnosis allows earlier treatment, so early ASD detection plays a significant role in medical analysis4. Such analysis can then inform personalized treatment regimens that improve the quality of life of children with ASD and their families. Unfortunately, diagnosing ASD can take considerable time and requires costly testing procedures5. Recently, the worldwide increase in ASD cases has motivated scientists and doctors to develop more efficient screening approaches. ASD symptoms also closely resemble those of other mental disorders, such as depression, which require distinctly different treatments, leading to potential confusion6.

To simplify the diagnostic process and reduce the waiting period, a computerized approach for recognizing ASD that requires little or only non-expert supervision would be a significant advance over current practice. Although ASD can often be recognized early, the major bottleneck lies in the tedious and subjective nature of current diagnostic procedures7. Consequently, there is a waiting period of at least 13 months from initial suspicion to formal diagnosis. Detecting and treating ASD early is highly important, because it helps alleviate the symptoms to some extent and thereby improves the individual's overall quality of life. Nevertheless, valuable time is lost in the gap between initial concern and diagnosis, while the disorder remains undetected8. Various studies have identified significant autism features through multiple modalities, such as voice recognition, eye tracking, feature extraction, medical image analysis, and facial recognition. Advanced information technology based on Artificial Intelligence (AI) has helped diagnose ASD earlier through facial pattern detection9. Recently, DL and ML have achieved many successes and have gained importance in fields such as biomedicine and medicine. ML approaches have been proposed and used in medical diagnostics and decision-making to aid data interpretation, so ML-based disease screening approaches are widely studied10. ML approaches could not only assess ASD risk accurately and rapidly but also streamline the entire diagnostic process and help families access much-needed therapies quickly.

This study presents a Two-Tier Metaheuristic-Driven Ensemble Deep Learning for Effective Autism Spectrum Disorder Diagnosis in Disabled Persons (T2MEDL-EASDDP) model. The main aim of the T2MEDL-EASDDP model is to analyze and diagnose the different stages of ASD in disabled individuals. To accomplish this, the model applies min-max normalization during data pre-processing so that the input data are scaled to a uniform range. Furthermore, improved butterfly optimization algorithm (IBOA)-based feature selection (FS) is used to identify the most relevant features and reduce dimensionality efficiently. Additionally, an ensemble of three DL approaches, namely autoencoder (AE), long short-term memory (LSTM), and deep belief network (DBN), is employed to analyze and detect ASD. Finally, the model employs a Brownian motion (BM) and directional mutation scheme-based coati optimization algorithm (BDCOA) to fine-tune the hyperparameters of the three ensemble models. Extensive simulation analyses of the T2MEDL-EASDDP technique are conducted on the ASD-Toddler and ASD-Adult datasets. The key contributions of the T2MEDL-EASDDP technique are listed below.

- The T2MEDL-EASDDP technique uses min-max normalization to scale features to a specified range, ensuring effective data pre-processing. Standardizing the input data in this way improves model stability and convergence, which helps optimize the analysis and detection of ASD.

- The T2MEDL-EASDDP model employs the IBOA technique for feature selection so that only the most relevant features are retained. This reduces model complexity and improves accuracy; by concentrating on key features, the model becomes more efficient and better able to detect ASD.

- The T2MEDL-EASDDP approach uses an ensemble of DL methods, comprising AE, LSTM, and DBN, to analyze and detect ASD by combining the strengths of each technique. This integration enhances the model's ability to capture intrinsic patterns and variations in the data, improving detection accuracy and robustness.

- The T2MEDL-EASDDP method uses BDCOA to fine-tune the model hyperparameters. This optimization technique allows more precise adjustments, helping the model achieve better accuracy, generalization, and stability in ASD detection.

- Integrating IBOA for feature selection and BDCOA for hyperparameter tuning with a hybrid DL ensemble yields an optimized solution for ASD detection, improving the model's ability to identify relevant features and tune its parameters. The novelty lies in the seamless fusion of advanced optimization algorithms with a multi-model DL framework, which enhances the accuracy and efficiency of ASD diagnosis.

Literature survey

In11, an explainable DL-based method for recognizing autism in children and toddlers is proposed. The method has two modules: the first applies a DL model for autism recognition, and the second uses an XAI method called SHAP to highlight the main features and explain the model's results. In12, an ML-based method is developed to identify early indicators of ASD in children with high performance; it uses ML approaches such as RF, SVM, linear discriminant analysis, and k-nearest neighbours to separate individuals with and without ASD. Al-Muhanna et al.13 present an ultra-light, computer-aided, Attention-based Hybrid Optimized Residual Memory Network (AHRML) for efficient and precise ASD recognition, in which an innovative hybrid Arithmetic HHO is applied to reduce the feature dimensions and streamline the identification process. Lakhan et al.14 propose an efficient ASD platform with complete services to improve ASD outcomes. The presented method is a federated learning-enabled convolutional neural network with LSTM (FCNN-LSTM) that uses multimodal databases, and an AES-based security scheme is applied within the FL framework to guarantee patient data security. Nogay and Adeli15 developed an optimal CNN method using Grid Search Optimization (GSO). Three CNN models were designed: (1) a four-class classification model based on gender, (2) a four-class classification model based on age, and (3) an eight-class classification model based on both age and gender. Pokhrel et al.16 present a novel method for ASD classification that combines the capability of ANNs with facial landmark recognition.
This method exploits the subtle cues embedded in these complex facial details. The study addresses binary classification, an ML task that assigns data to one of two categories. Furthermore, PCA, a dimensionality reduction technique, is used to transform the data into lower-dimensional representations while retaining crucial variation. Prasad et al.17 focus on ASD classification from MRI images using an Artificial Gannet Optimization-enabled DCNN (AGO-DCNN). A bilateral filter is applied to remove noise from the input MRI image, after which ROI extraction is performed, with AGO used to extract the essential region. AGO is a recently proposed method that integrates two optimizers: the Gannet Optimizer Algorithm (GOA) and Artificial Ecosystem-based Optimization (AEO).

Ullah et al.18 propose a body-worn, multi-sensor IoT framework that uses ML to recognize the complex sign language of speech-impaired children. Optimal sensor placement is crucial for feature extraction, because differences in sensor positioning affect detection accuracy. Hryntsiv et al.19 propose a methodology for evaluating efficiency based on criteria such as speech development, communication, and emotional regulation. Nadimi-Shahraki, Zamani, and Mirjalili20 propose an enhanced whale optimization algorithm (E-WOA) that uses a pooling mechanism and three effective search strategies: migrating, preferential selecting, and enriched encircling prey. Prakash et al.21 propose a methodology that analyzes behavioural videos to diagnose ASD, using a pipeline for child detection, action localization, and classification. Umrani and Harshavardhanan22 propose an automatic anxiety detection module for ASD using a Deep CNN whose classifier parameters are tuned by intelligent search techniques. Polavarapu et al.23 present INN-ASDNet, a novel approach that uses involutional neural networks and ML techniques to predict autism. It uses location-specific involution kernels and a random forest (RF) classifier, requiring fewer parameters, reducing memory usage, and improving computation speed. Simon et al.24 introduce an approach for diagnosing ASD using eye-tracking data and ML: gaze data are analyzed with Principal Component Analysis (PCA) and fed into CNNs for feature extraction and classification, with transfer learning (TL) applied to enhance performance. Kavitha and Siva25 introduce the 3T Dilated Inception Network (3T-DINet) framework for ASD diagnosis using rs-fMRI images. The model integrates a 3T dilated inception module with three dilation rates to extract multi-scale brain features and employs residual networks for better feature extraction.
To improve performance, the model is optimized with a Crossover-based Black Widow Optimization (CBWO) approach. Vengidusamy et al.26 explore the complexities of ASD, emphasizing early diagnosis, neuroimaging, genetic markers, and behavioural assessments for precise diagnosis. Lu et al.27 propose a DL technique for quantifying hand motion using the Sequential Bag of Convolutional Features (SBoCF) framework, which incorporates Bag of Words (BoW) with a skeleton-based CNN for gesture classification, enabling automatic hand motor assessment. Sravani and Kuppusamy28 developed an automatic model for distinguishing individuals with ASD from Typically Developing (TD) individuals. It comprises pre-processing with edge detection and data augmentation, followed by DCNNs fine-tuned with Dipper-Throated Particle Swarm Optimization (DTPSO). Grad-CAM is used for model interpretation.

Despite improvements in ASD detection methods, it remains difficult to obtain highly accurate models that generalize across diverse populations. Many existing techniques depend on large, complex datasets that require substantial computational resources and are prone to overfitting. Furthermore, most approaches concentrate on a single modality (e.g., MRI, eye-tracking, or behavioural data), which limits their ability to capture the full complexity of ASD. There is also a lack of standardized, universally accepted metrics for evaluating model performance. Moreover, few methods address real-time, real-world applications, particularly in resource-constrained settings. Further research is required to improve model robustness, reduce computational complexity, and incorporate multimodal data for a more comprehensive understanding of ASD.

The proposed model

In this paper, a novel T2MEDL-EASDDP model is developed. The main aim of the model is to analyze and diagnose the different stages of ASD in disabled individuals. To accomplish this, the T2MEDL-EASDDP model comprises data normalization, dimensionality reduction using IBOA, three ensemble classification models, and a BDCOA-based parameter selection process. Figure 1 represents the overall workflow of the T2MEDL-EASDDP method.

Data normalization

Initially, the T2MEDL-EASDDP model applies min-max normalization during data pre-processing so that the input data are scaled to a uniform range29. This technique is chosen because it effectively scales data within a specified range, usually between 0 and 1, so that all features contribute on a comparable scale. This is crucial when the dataset comprises features with different units or magnitudes, as it prevents features with large values from dominating the outputs. Compared with other techniques such as Z-score normalization, min-max normalization is more appropriate when the model requires a fixed input range, as with neural networks, which often perform better with normalized data. The method improves convergence speed and model stability, making it effective for DL tasks. Moreover, it helps avoid the numerical instability that can arise when features have very different scales, giving a more reliable training process.

Min-max normalization is a straightforward technique that rescales data to a specified range, usually \([0,1]\). The procedure subtracts the minimum value from each data point and divides the result by the range (the maximum minus the minimum). Equation (5) gives this normalization, where \(x_{\text{norm}}\) is the scaled value:

\(x_{\text{norm}}=\dfrac{x-x_{\min}}{x_{\max}-x_{\min}}\) (5)

Here, \(x\) denotes the original value of a variable, and \(x_{\min}\) and \(x_{\max}\) denote its minimum and maximum values, respectively. This method is simple to understand and implement, making it suitable when feature scale matters, for example for models that are sensitive to the magnitude of their inputs. However, its sensitivity to outliers can distort the scaling and potentially affect the results, particularly in datasets that contain extreme values.
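The normalization step can be illustrated with a minimal pure-Python sketch. It rescales each feature (column) independently with the min-max formula described above; the function names and the choice to map a constant feature to 0.0 are assumptions for illustration, not details taken from the paper.

```python
def min_max_normalize(column):
    """Rescale a list of numbers to [0, 1] using (x - x_min) / (x_max - x_min)."""
    x_min, x_max = min(column), max(column)
    span = x_max - x_min
    if span == 0:
        # Constant feature: map every value to 0.0 to avoid division by zero (assumption)
        return [0.0 for _ in column]
    return [(x - x_min) / span for x in column]


def normalize_features(rows):
    """Apply min-max scaling independently to each feature (column) of a row-major table."""
    columns = list(zip(*rows))
    scaled = [min_max_normalize(list(col)) for col in columns]
    return [list(r) for r in zip(*scaled)]


data = [[1.0, 200.0], [3.0, 400.0], [5.0, 300.0]]
print(normalize_features(data))
```

In a real pipeline, x_min and x_max would normally be computed on the training split only and reused for the test split, so that no test information leaks into pre-processing.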

Dimensionality reduction: IBOA

Next, IBOA-based FS is used to identify the most relevant features efficiently and reduce dimensionality30. This technique is chosen because it can effectively detect and retain the most relevant features of a high-dimensional dataset. Unlike conventional methods such as Recursive Feature Elimination (RFE), IBOA uses an optimization technique inspired by butterfly behaviour, which improves its capacity to explore the feature space and avoid local optima. This results in more accurate feature selection and better model performance. IBOA is particularly efficient for complex datasets, as it can handle large feature sets and reduce computational costs by eliminating irrelevant or redundant features. Compared with other optimization techniques, IBOA balances exploration and exploitation, providing robust feature selection with minimal computational overhead. Its adaptability to various datasets makes it an effective choice for improving the performance of ML models. Figure 2 illustrates the steps involved in the IBOA model.

Chaotic maps are characterized by non-periodicity, unpredictability, non-repeatability, and good ergodicity, properties that can be leveraged to increase population diversity and improve the algorithm's performance. In the original BOA, the population is initialized at random, so the butterflies may cover the search space unevenly. Therefore, this paper uses a Tent chaotic map to distribute the butterfly population evenly and extend its search range. Its mathematical formulation is given below:

Where \(\:\in\:\left(\text{0,1}\right)\).
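Because the exact Tent map used in the paper is not reproduced in this section, the sketch below assumes the common piecewise-linear form x_{k+1} = x_k / alpha for x_k < alpha and (1 - x_k) / (1 - alpha) otherwise, with an illustrative breakpoint alpha = 0.7 (a breakpoint of exactly 0.5 tends to collapse to 0 after a few dozen iterations in binary floating point). It generates a chaotic sequence and maps it onto each variable's bounds to initialize the butterfly population; all function names are hypothetical.

```python
import random


def tent_map_sequence(length, x0=None, alpha=0.7):
    """Generate `length` chaotic values in [0, 1] with a piecewise-linear tent map.

    alpha is an assumed breakpoint; the section does not state the paper's exact map.
    """
    x = x0 if x0 is not None else random.uniform(0.01, 0.99)
    values = []
    for _ in range(length):
        x = x / alpha if x < alpha else (1.0 - x) / (1.0 - alpha)
        values.append(x)
    return values


def tent_init_population(pop_size, lower, upper, x0=None):
    """Map chaotic values onto the box [lower_j, upper_j] for every dimension j."""
    dim = len(lower)
    chaos = tent_map_sequence(pop_size * dim, x0=x0)
    population = []
    for i in range(pop_size):
        row = chaos[i * dim:(i + 1) * dim]
        population.append([lo + c * (hi - lo) for c, lo, hi in zip(row, lower, upper)])
    return population
```

Compared with plain uniform sampling, the chaotic sequence is deterministic given x0, which makes initial populations reproducible without seeding a global random generator.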

The standard BOA does not constrain the search-step length of an individual butterfly: because individuals have a high degree of freedom, the step length is unrestricted throughout the search. To overcome this limitation, this paper introduces a weight coefficient that changes dynamically according to each individual's fitness value. Its mathematical formulation is expressed below:

Here, \(F_i\) denotes the current individual's fitness value, \(F_b\) the current global best fitness, and \(F_w\) the worst fitness value.

When an individual's fitness value is close to the global worst, a larger weight coefficient is assigned to it, increasing its step size so that it can escape from local optima. When its fitness value is far from the global worst, and therefore close to the global optimum, the weight coefficient is smaller, and the reduced step size ensures high-precision exploitation in the later stage. This prevents the individual from overshooting the global optimum, which would otherwise degrade the model's performance.
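The behaviour described above (large weight near the worst fitness, small weight near the best) can be sketched as a linear interpolation. This is one plausible form consistent with the description, not the paper's equation, which is not reproduced in this section; it assumes minimisation, and the bounds w_min and w_max are hypothetical parameters.

```python
def adaptive_weight(f_i, f_best, f_worst, w_min=0.1, w_max=0.9):
    """Return a step-size weight that grows as f_i approaches the worst fitness.

    Assumes minimisation (lower fitness is better). The linear form and the
    bounds w_min/w_max are illustrative assumptions, not the paper's equation.
    """
    spread = f_worst - f_best
    if spread == 0:
        # All individuals equally fit: fall back to the midpoint weight
        return (w_min + w_max) / 2
    ratio = (f_i - f_best) / spread  # 0 at the global best, 1 at the global worst
    return w_min + (w_max - w_min) * ratio


# A butterfly near the worst fitness gets a larger step than one near the best
print(adaptive_weight(0.45, 0.1, 0.5), adaptive_weight(0.12, 0.1, 0.5))
```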

Moreover, according to the No Free Lunch theorem, no single technique is best suited to every problem, so this paper incorporates the sine-cosine algorithm to enhance the search stage of BOA. Combined with the adaptive weight coefficient, the global and local search stages of BOA are updated as follows:

Where \(r_1=a\times(1-t/t_{\max})\); \(a\) is a constant set to 2; \(t\) is the current iteration; \(t_{\max}\) is the maximum number of iterations; \(r_2\) is a random number between 0 and \(2\pi\); \(r_3\) is a random number between 0 and 2; and \(r_4\) is a random number between 0 and 1.

In IBOA, the two FS objectives are combined into a single objective in which a preset weight determines the importance of each objective. The resulting fitness function (FF) is expressed in Eq. (6):

\(Fitness(X)=\alpha\,E(X)+\beta\,\dfrac{|R|}{|N|}\) (6)

Here, \(Fitness(X)\) denotes the fitness value of a subset \(X\); \(E(X)\) denotes the classifier error rate obtained with the features selected in subset \(X\); \(|R|\) and \(|N|\) denote the number of selected features and the number of original features in the dataset, respectively; and \(\beta\) and \(\alpha\) are the weights of the reduction ratio and the classification error, with \(\beta=1-\alpha\) and \(\alpha\in[0,1]\).
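Following the definitions of E(X), |R|, |N|, alpha, and beta above, a feature subset's fitness can be computed as a weighted sum of the error rate and the fraction of features kept (lower is better). The value alpha = 0.99 and the helper names are illustrative assumptions; the section only requires alpha in [0, 1].

```python
def fs_fitness(error_rate, n_selected, n_total, alpha=0.99):
    """Fitness(X) = alpha * E(X) + beta * |R| / |N|, with beta = 1 - alpha (lower is better)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    beta = 1.0 - alpha
    return alpha * error_rate + beta * n_selected / n_total


def subset_fitness(mask, error_rate, alpha=0.99):
    """Convenience wrapper: `mask` is a 0/1 list marking which features are kept."""
    return fs_fitness(error_rate, sum(mask), len(mask), alpha)
```

With alpha close to 1, classification error dominates and the reduction ratio mainly breaks ties between subsets with similar error.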

ASD detection process: three ensemble models

In addition, an ensemble of three DL models, AE, LSTM, and DBN, is employed to analyze and detect ASD. This ensemble is chosen because of the complementary merits of each model. AE excels at unsupervised feature learning and captures complex data representations, while LSTM effectively handles sequential data, which is significant for time series or behavioural patterns in ASD. DBN, in turn, captures hierarchical feature representations, making it efficient for structured data such as brain scans or behavioural features. Integrating these three techniques allows the model to exploit both spatial and temporal patterns, improving its ability to detect ASD. This hybrid approach provides more comprehensive learning and higher accuracy than the individual models, as each model compensates for the limitations of the others, giving a robust solution for ASD detection.
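The section does not state how the three models' outputs are combined. A minimal sketch, assuming soft voting (averaging per-class probabilities) and that each model already outputs class probabilities, could look like this; the optional per-model weights are also an assumption.

```python
def soft_vote(prob_lists, weights=None):
    """Average per-class probabilities from several models; return (label, averaged probs).

    prob_lists: one probability vector per model, e.g. [p_AE, p_LSTM, p_DBN].
    weights: optional per-model weights (assumption); equal weights by default.
    """
    n_models = len(prob_lists)
    if weights is None:
        weights = [1.0] * n_models
    total = sum(weights)
    n_classes = len(prob_lists[0])
    avg = [
        sum(w * probs[c] for w, probs in zip(weights, prob_lists)) / total
        for c in range(n_classes)
    ]
    label = max(range(n_classes), key=lambda c: avg[c])
    return label, avg


# Hypothetical outputs of the three models for one sample (classes: 0 = no ASD, 1 = ASD)
p_ae, p_lstm, p_dbn = [0.30, 0.70], [0.55, 0.45], [0.20, 0.80]
print(soft_vote([p_ae, p_lstm, p_dbn]))
```

Soft voting lets a confident model outweigh a hesitant one, which fits the idea that each model compensates for the others' weaknesses; majority (hard) voting would be an equally simple alternative.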

AE classifier

An AE is a feedforward neural network (NN) whose output layer has the same size as its input layer31. Such NNs are trained in an unsupervised manner to reconstruct their input vectors at the output. An AE consists of two main modules: an encoder and a decoder.

The encoder converts the input vector \(X\) into a hidden representation \(H\), as given in Eq. (7):

\(H=\partial(WX+\beta)\) (7)

Here, \(W\) denotes a weight matrix, \(\partial\) denotes an activation function such as the rectified linear unit (ReLU) or the sigmoid function, and \(\beta\) denotes a bias vector.

The decoder then reconstructs the original input space from the hidden representation \(H\), as shown in Eq. (8).

The reconstruction error \(\epsilon\) is then computed as the difference between the reconstructed vector \(\widehat{X}\) and the original input vector, as shown in Eq. (9).

The AE is trained in an unsupervised manner to minimize the reconstruction error \(\epsilon\) by learning the relationships among the input features. After training, inputs that resemble the training data are reconstructed with low error, whereas an input that produces a high reconstruction error (RE) is flagged as anomalous.
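The encode, decode, and reconstruction-error steps can be sketched in pure Python. This is a forward pass only (training by gradient descent is omitted); it assumes sigmoid activations in both encoder and decoder, mean squared error as the reconstruction error, and a hand-chosen threshold, none of which are confirmed by the section.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(weights, vector, bias):
    """Compute weights @ vector + bias for a list-of-rows weight matrix."""
    return [sum(w * v for w, v in zip(row, vector)) + b for row, b in zip(weights, bias)]


def autoencode(x, w_enc, b_enc, w_dec, b_dec):
    """Encoder H = sigmoid(W x + b); decoder X_hat = sigmoid(W' H + b')."""
    h = [sigmoid(z) for z in affine(w_enc, x, b_enc)]
    x_hat = [sigmoid(z) for z in affine(w_dec, h, b_dec)]
    return h, x_hat


def reconstruction_error(x, x_hat):
    """Mean squared difference between the input and its reconstruction (assumed metric)."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def is_anomalous(x, params, threshold):
    """Flag an input whose reconstruction error exceeds a chosen threshold."""
    _, x_hat = autoencode(x, *params)
    return reconstruction_error(x, x_hat) > threshold
```

In practice the threshold is often set from the distribution of reconstruction errors on training data, for example a high percentile.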

LSTM classifier

LSTM32 addresses the vanishing gradient problem of recurrent neural networks (RNNs), which limits their ability to capture information across many time steps. Furthermore, the activation functions used in LSTM, such as tanh and sigmoid, have saturation regions in which the output changes very little even when the input changes substantially. This paper proposes an LSTM with a transformation gating mechanism to further enhance the network's ability to learn long-term dependencies. Each LSTM block has five main components: the forget gate \(f_t\), input gate \(i_t\), cell state \(c_t\), hidden layer (HL) \(h_t\), and output gate \(o_t\). The key feature of LSTM is the cell state, which carries memory from one cell to the next and which a standard RNN lacks. The cell state is continually updated: the three gates discard information that is no longer needed and insert new information. The LSTM process is formulated below.

In the formulations above, \(x_t\) denotes the input at the current time step; \(h_{t-1}\) is the HL output from the preceding time step; \(b\) denotes the bias terms associated with the input gate and the HL; \(c_{t-1}\) is the memory cell from the previous time step; \(c_t\) is the memory cell state at the current time step; and \(\sigma\) and \(\tanh\) denote the sigmoid and hyperbolic tangent activation functions.
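One time step of a standard LSTM cell, using the gates listed above, can be sketched in pure Python. The paper's additional transformation gating mechanism is not reproduced here, and the weight matrices Wf, Wi, Wc, Wo (applied to the concatenation [h_{t-1}, x_t]) are assumed names, since the text defines only the biases.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gate(W, b, concat, act):
    """act(W @ [h_{t-1}, x_t] + b) for one gate; W is a list of rows."""
    return [act(sum(w * v for w, v in zip(row, concat)) + bias) for row, bias in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, params):
    """One step of a standard LSTM cell (without the paper's extra transformation gate)."""
    concat = h_prev + x_t                                        # [h_{t-1}, x_t]
    f_t = gate(params["Wf"], params["bf"], concat, sigmoid)      # forget gate
    i_t = gate(params["Wi"], params["bi"], concat, sigmoid)      # input gate
    g_t = gate(params["Wc"], params["bc"], concat, math.tanh)    # candidate cell state
    o_t = gate(params["Wo"], params["bo"], concat, sigmoid)      # output gate
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, g_t)]  # keep old + add new
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]                   # exposed hidden state
    return h_t, c_t
```

The forget gate scales the old cell state and the input gate scales the new candidate, which is how the cell discards unneeded information and inserts new information.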

DBN classifier

A DBN-based model is adopted to tackle this problem33. A DBN is a deep probabilistic generative model (a belief network), which gives it valuable fault tolerance in the decision-making process. It comprises several stacked restricted Boltzmann machines (RBMs) and a top-layer classifier. Here, \(h\) and \(v\) denote the hidden and visible units of the network, with \(v_i\in\{0,1\}\) and \(h_j\in\{0,1\}\), and \(a\) and \(b\) denote the bias values of the network. Figure 3 portrays the structure of DBN.

The RBM assumes that the joint distribution of the hidden and visible units follows the canonical (Boltzmann) distribution, defined as:

\(P(v,h)=\dfrac{1}{Z}e^{-E(v,h)}\)

Here, \(Z\) denotes the partition function, which normalizes the probabilities so that they sum to 1, and \(E\) denotes the energy of the network. Because the system exhibits the stochastic characteristics defined by the Ising model, the network energy is given by:

\(E(v,h)=-\sum_{i}a_i v_i-\sum_{j}b_j h_j-\sum_{i}\sum_{j}v_i w_{ij} h_j\)

A general principle for any physical system is that lower energy corresponds to greater stability. Therefore, the RBM is trained in an unsupervised manner by reducing the energy \(E\); based on Eq. (15), this is equivalent to increasing the probability \(P\). The optimization objective of the RBM is:

\(\max_{\theta}\ \sum_{k=1}^{n_s}\ln P(v^{k}\mid\theta)\)

Here, \(\theta=\{w,a,b\}\) denotes the weights and biases of the RBM; \(S=\{v^{1},v^{2},\ldots,v^{n_s}\}\) denotes the input dataset; and \(n_s\) denotes the number of input samples. To update the weights and biases, a fast approximation, contrastive divergence (CD), based on Markov chain Monte Carlo (MCMC) theory, is applied. In this study, the top-layer classifier of the DBN is a standard back-propagation (BP) network.
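The RBM energy and a single contrastive-divergence step (CD-1) for binary units can be sketched in pure Python. This follows the textbook binary-RBM formulation, taking a as the visible biases and b as the hidden biases; the learning rate and function names are illustrative assumptions, and stacking RBMs into a DBN with a BP classifier on top is not shown.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def energy(v, h, W, a, b):
    """E(v, h) = -sum_i a_i v_i - sum_j b_j h_j - sum_ij v_i W_ij h_j."""
    e = -sum(ai * vi for ai, vi in zip(a, v)) - sum(bj * hj for bj, hj in zip(b, h))
    e -= sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return e


def p_hidden(v, W, b):
    """P(h_j = 1 | v) = sigmoid(b_j + sum_i v_i W_ij)."""
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v)))) for j in range(len(b))]


def p_visible(h, W, a):
    """P(v_i = 1 | h) = sigmoid(a_i + sum_j W_ij h_j)."""
    return [sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(len(h)))) for i in range(len(a))]


def sample(probs, rng):
    """Draw binary states from Bernoulli probabilities."""
    return [1 if rng.random() < p else 0 for p in probs]


def cd1_update(v0, W, a, b, lr=0.1, rng=random):
    """One contrastive-divergence (CD-1) step; updates W, a, b in place."""
    ph0 = p_hidden(v0, W, b)                  # positive phase
    h0 = sample(ph0, rng)
    v1 = sample(p_visible(h0, W, a), rng)     # one Gibbs step back to the visible layer
    ph1 = p_hidden(v1, W, b)                  # negative phase
    for i in range(len(a)):
        for j in range(len(b)):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        a[i] += lr * (v0[i] - v1[i])
    for j in range(len(b)):
        b[j] += lr * (ph0[j] - ph1[j])
```

CD-1 replaces the intractable model expectation in the log-likelihood gradient with a single Gibbs-sampling step, which is what makes RBM training fast in practice.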

Parameter selection: BDCOA

Finally, the T2MEDL-EASDDP model uses BDCOA to fine-tune the hyperparameters of the three ensemble models34. This technique is chosen because it can effectively balance exploration and exploitation during optimization. Unlike conventional techniques such as grid or random search, BDCOA integrates Brownian motion and directional mutation to avoid getting stuck in local optima, improving solution quality. Its robustness allows it to fine-tune complex model parameters with higher precision, resulting in better model performance and faster convergence. BDCOA suits high-dimensional, computationally intensive tasks, where conventional methods often struggle to find optimal configurations. By optimizing parameters dynamically while limiting computational cost, BDCOA improves model accuracy and stability, making it preferable to conventional parameter selection techniques. Figure 4 demonstrates the steps involved in the BDCOA model.

BM and the Directional Mutation Scheme (DMS) are integrated into BDCOA to avoid sub-optimal solutions and improve search-space efficiency. The standard COA is a metaheuristic that imitates the natural behaviour of coatis, modelling the cooperation among agents observed when the animals hunt. Combining BM and DMS aims to strike an effective balance between exploitation and exploration throughout the search, which improves global search efficiency when seeking the optimal solution. The search procedure is described below:

Initialization procedure

The matrix \(X\) of randomly generated initial population positions is given in Eq. (18), where \(N\) is the number of coatis and \(m\) is the problem dimension. The position of each \(i\)th coati is given in Eq. (19). The objective value of each population member is then calculated, forming the \(N\times1\) vector \(F\) in Eq. (20). The initial solutions are generated randomly.

Here, \(X_i\) denotes the position of the \(i\)th coati, and \(x_{i,j}\) denotes the value of its \(j\)th decision variable.
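The initialization described by Eqs. (18) to (20) can be sketched as follows, assuming the uniform rule x_ij = lb_j + r * (ub_j - lb_j) with r drawn from U(0, 1), which is the usual COA initialization; the exact equations are not reproduced in this section, and the names are hypothetical.

```python
import random


def init_coatis(n, lb, ub, objective, rng=random):
    """Build the N x m position matrix X and the N x 1 objective vector F.

    x_ij = lb_j + r * (ub_j - lb_j) with r ~ U(0, 1) (assumed initialisation rule).
    """
    X = [[lo + rng.random() * (hi - lo) for lo, hi in zip(lb, ub)] for _ in range(n)]
    F = [objective(x) for x in X]
    return X, F


# Example: 5 coatis in a 2-D box, scored with a toy sphere objective
X, F = init_coatis(5, [0.0, -2.0], [1.0, 2.0], lambda x: sum(v * v for v in x))
```

For hyperparameter tuning, each coordinate would correspond to one hyperparameter (for example, a learning rate) with bounds set by the practitioner.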

Directional mutation

Here, the movement direction of the coatis is altered to improve search-space efficiency. The DMS is obtained using the directed value \(d\) for this purpose.

Here, \(X_{best}\) denotes a solution selected from the top \(S\times N\) solutions; \(X_{r2}\) and \(X_{r3}\) are two distinct solutions selected at random from the population; \(\rho\) is a real-valued constant factor in \([0,1]\); and \(d\) is the directed value, given in Eq. (22).
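Because the DMS equations are not reproduced in this section, the sketch below uses a hypothetical differential-evolution-style form built from the symbols defined above: X_new = X_best + d * rho * (X_r2 - X_r3), where X_best is drawn from the top s*N solutions and d = +1 if X_r2 is at least as fit as X_r3, otherwise -1, so the step always points toward the fitter of the two. It assumes minimisation; the defaults s = 0.2 and rho = 0.5 are illustrative.

```python
import random


def directional_mutation(X, F, s=0.2, rho=0.5, rng=random):
    """Return one mutant vector built from an elite and two random solutions.

    Hypothetical form: X_new = X_best + d * rho * (X_r2 - X_r3), where X_best is
    drawn from the top s*N solutions (minimisation) and d = +1 if F(X_r2) <= F(X_r3)
    else -1, so the step points from the worse toward the better random solution.
    """
    n = len(X)
    order = sorted(range(n), key=lambda k: F[k])
    top = order[:max(1, int(s * n))]          # indices of the elite S*N solutions
    best = X[rng.choice(top)]
    r2, r3 = rng.sample(range(n), 2)          # two distinct random solutions
    d = 1.0 if F[r2] <= F[r3] else -1.0       # directed value
    return [xb + d * rho * (x2 - x3) for xb, x2, x3 in zip(best, X[r2], X[r3])]
```

Orienting the difference vector by fitness is what distinguishes a directional mutation from a plain random difference, which can just as easily point away from good regions.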

Exploration stage (hunt and attack)

Here, the coatis are divided into two groups. The first group climbs the tree to frighten the prey, while the second group waits beneath the tree for the frightened prey to fall. The prey is denoted as \(Iguana\). Equation (23) describes the first group of coatis, which climbs the tree. When the iguana falls, its position is changed randomly according to Eq. (24), and Eq. (25) describes the second group of coatis, which stays beneath the tree. BM is incorporated in this stage, allowing the exploration to escape sub-optimal regions and search other areas, which improves the ability to find the global optimum. This dynamic, adaptive procedure improves the BDCOA's ability to explore and exploit the search space effectively. Each BM step is drawn from a standard Gaussian distribution with zero mean \((\delta=0)\) and unit variance \((\sigma^{2}=1)\). The probability density function of BM, \(BM(y,\delta,\sigma)\), at a given point \(y\) is given in Eq. (26):

\(BM(y,\delta,\sigma)=\dfrac{1}{\sigma\sqrt{2\pi}}\exp\!\left(-\dfrac{(y-\delta)^{2}}{2\sigma^{2}}\right)\) (26)

Here, \(X_i^{P1}\) denotes the new position calculated for the \(i\)th coati, \(x_{i,j}^{P1}\) is its \(j\)th dimension, and \(F_i^{P1}\) is its fitness value; \(r\) is a random number in \([0,1]\); \(lb_j\) and \(ub_j\) are the lower and upper bounds of the \(j\)th variable, respectively; \(Iguana\) denotes the prey's position and \(Iguana_j\) its \(j\)th dimension; \(I\) is an integer randomly selected from \(\{1,2\}\); \(Iguana^{G}\) denotes the prey's new position on the ground, \(Iguana_j^{G}\) its \(j\)th dimension, and \(F_{Iguana^{G}}\) its fitness value. If the new position \(X_i^{P1}\) of the \(i\)th coati has better fitness than the old position \(X_i\), it replaces \(X_i\); otherwise, the old position is retained, as shown in Eq. (27).
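The exploration stage can be sketched with the phase-1 update rules of the original coati optimization algorithm, as commonly published: the first half of the population moves toward the best member (the iguana), and the second half moves toward or away from a random ground position depending on its fitness, followed by greedy acceptance. Adding a bm_scale-weighted standard-normal step per dimension is an assumption about how Brownian motion is combined, since Eqs. (23) to (27) are not reproduced here; the code assumes minimisation, and clipping to the bounds is an added safeguard.

```python
import random


def clip(value, low, high):
    return max(low, min(high, value))


def coa_exploration(X, F, lb, ub, objective, bm_scale=0.1, rng=random):
    """Phase 1 (hunting the iguana) of the standard COA, with an assumed Brownian term."""
    n = len(X)
    iguana = X[min(range(n), key=lambda k: F[k])][:]  # best member acts as the iguana
    for i in range(n):
        I = rng.choice((1, 2))
        if i < n // 2:
            # First half climbs the tree and moves toward the iguana
            new = [x + rng.random() * (g - I * x) for x, g in zip(X[i], iguana)]
        else:
            # The iguana falls to a random spot on the ground
            ground = [lo + rng.random() * (hi - lo) for lo, hi in zip(lb, ub)]
            if objective(ground) < F[i]:
                new = [x + rng.random() * (g - I * x) for x, g in zip(X[i], ground)]
            else:
                new = [x + rng.random() * (x - g) for x, g in zip(X[i], ground)]
        # Assumed Brownian term: N(0, 1) step scaled by bm_scale, then clipped to bounds
        new = [clip(v + bm_scale * rng.gauss(0.0, 1.0), lo, hi) for v, lo, hi in zip(new, lb, ub)]
        f_new = objective(new)
        if f_new < F[i]:  # greedy acceptance: keep the move only if fitness improves
            X[i], F[i] = new, f_new
    return X, F
```

Because of the greedy acceptance, no coati's fitness can get worse during this phase; the Brownian term only widens the set of candidate moves.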

The fitness of every solution (coati) is evaluated using the root mean square (RMS) criterion, computed with Eqs. (28) and (29).

Here, \(R(r)=A(r)-C(r)\) denotes the residual phase, and \(S\) denotes the normalized circle over which it is evaluated.
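Eqs. (28) and (29) are not reproduced in this section. As a rough illustration only, the sketch below assumes the usual discrete form of an RMS criterion: sample points inside the unit circle \(S\), form the residual \(R = A - C\) at those points, and take the square root of the mean squared residual (lower is better). The helper names and the sampled \(A\) and \(C\) values are hypothetical.

```python
import numpy as np

def unit_disk_points(n: int = 65):
    """Hypothetical helper: grid points of [-1, 1]^2 that lie inside the unit circle S."""
    xs = np.linspace(-1.0, 1.0, n)
    xx, yy = np.meshgrid(xs, xs)
    inside = xx ** 2 + yy ** 2 <= 1.0
    return xx[inside], yy[inside]

def rms_residual(a_vals, c_vals) -> float:
    """Discrete RMS of the residual R = A - C over the sampled points."""
    residual = np.asarray(a_vals, dtype=float) - np.asarray(c_vals, dtype=float)
    return float(np.sqrt(np.mean(residual ** 2)))

# Usage with made-up A and C sampled on S.
x, y = unit_disk_points()
a = x ** 2 + y ** 2        # hypothetical A(r)
c = 0.9 * a                # hypothetical C(r) that removes 90% of A
print(rms_residual(a, c))  # small positive value; 0.0 would mean C matches A exactly
```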

Exploitation phase (escaping from predators)

When a predator attacks a coati, the coati moves to a randomly generated position near its current location, according to Eqs. (30) and (31). The new position is accepted only if it improves the fitness, as per Eq. (32).

Here, the superscript \(P2\) denotes the new position and fitness of a coati computed in this phase, and \(lb_{j}^{local}\) and \(ub_{j}^{local}\) are the local lower and upper bounds of the \(j\)th decision variable, respectively.
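The escape (exploitation) step can be sketched as follows. It follows the original COA rather than BDCOA's exact Eqs. (30)–(32), which are not reproduced here: the local bounds are assumed to be \(lb_{j}^{local} = lb_{j}/t\) and \(ub_{j}^{local} = ub_{j}/t\) at iteration \(t\), as in standard COA, so the search range tightens over time, and a candidate is accepted only if it improves the (minimized) fitness. The function name and toy objective are hypothetical.

```python
import numpy as np

def coa_escape_phase(X, fit, fobj, lb, ub, t, rng):
    """One escape (exploitation) step of the standard COA at iteration t >= 1; fobj is minimized."""
    X, fit = X.copy(), fit.astype(float)
    N, D = X.shape
    lb_local, ub_local = lb / t, ub / t        # local range tightens as t grows
    for i in range(N):
        r1, r2 = rng.random(D), rng.random(D)
        cand = X[i] + (1 - 2 * r1) * (lb_local + r2 * (ub_local - lb_local))
        cand = np.clip(cand, lb, ub)          # stay inside the global bounds
        f_cand = fobj(cand)
        if f_cand < fit[i]:                   # accept only if the fitness improves
            X[i], fit[i] = cand, f_cand
    return X, fit

# Usage on a toy sphere function at iteration t = 5.
rng = np.random.default_rng(1)
sphere = lambda x: float(np.sum(x ** 2))
lb, ub = np.full(4, -10.0), np.full(4, 10.0)
X0 = lb + rng.random((8, 4)) * (ub - lb)
f0 = np.array([sphere(x) for x in X0])
X1, f1 = coa_escape_phase(X0, f0, sphere, lb, ub, t=5, rng=rng)
```

Dividing the bounds by \(t\) means early iterations take large escape moves while later ones make fine local adjustments around each coati.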

Fitness selection is a key factor influencing the performance of BDCOA. The hyperparameter selection process encodes each candidate solution and evaluates its effectiveness. Here, BDCOA uses accuracy as the primary criterion for designing the fitness function (FF), which is formulated mathematically below.

Here, \(TP\) denotes the number of true positives and \(FP\) the number of false positives.
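The fitness equation itself is missing from this section. Because only \(TP\) and \(FP\) are defined, a plausible reading is the ratio \(TP/(TP+FP)\), which in standard terminology is precision rather than accuracy; the sketch below assumes that form. In a BDCOA loop, each coati's hyperparameters would be decoded, the ensemble trained, and this score computed on held-out predictions; an optimizer written for minimization would use its negative. The function names are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives (TP) and false positives (FP) for the given positive label."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t != positive)
    return tp, fp

def fitness(y_true, y_pred, positive=1):
    """Hypothetical fitness TP / (TP + FP); higher is better, 0.0 if nothing is predicted positive."""
    tp, fp = confusion_counts(y_true, y_pred, positive)
    return tp / (tp + fp) if tp + fp else 0.0

# Usage: score a candidate's validation predictions.
y_true = [1, 1, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1]
print(fitness(y_true, y_pred))  # 0.6666666666666666
```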

Result analysis and discussion

In this section, the T2MEDL-EASDDP technique is experimentally validated on two datasets, namely the ASD-Toddler dataset35 and the ASD-Adult dataset36. The ASD-Toddler dataset consists of 1000 samples in two classes, as shown in Table 1. Of its 17 attributes, 14 features were selected.
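The 70%/30% training/testing protocol and the min-max normalization described in the abstract can be sketched as below. Assumptions not stated in the paper: a single random, non-stratified split with a fixed seed, and min-max bounds fitted on the training portion only and then reused for the test portion, a common choice that avoids information leakage. The synthetic array only mimics the Toddler dataset's shape (1000 samples, 14 selected features); the function names are hypothetical.

```python
import numpy as np

def split_70_30(X, y, seed=0):
    """Random 70% training / 30% testing split (non-stratified)."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_train = int(round(0.7 * len(X)))
    train, test = idx[:n_train], idx[n_train:]
    return X[train], X[test], y[train], y[test]

def min_max_normalize(X_train, X_test):
    """Scale each feature to [0, 1] using the training min/max; constant features map to 0."""
    lo = X_train.min(axis=0)
    hi = X_train.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid division by zero
    return (X_train - lo) / span, (X_test - lo) / span

# Usage with synthetic data shaped like the Toddler dataset (1000 x 14).
rng = np.random.default_rng(1)
X = rng.random((1000, 14)) * 10
y = rng.integers(0, 2, size=1000)
X_tr, X_te, y_tr, y_te = split_70_30(X, y)
X_tr_n, X_te_n = min_max_normalize(X_tr, X_te)
print(X_tr_n.shape, X_te_n.shape)  # (700, 14) (300, 14)
```

Because the bounds come from the training portion, a few test values may fall slightly outside \([0, 1]\); that is expected and usually harmless.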

Figure 5 presents the classification results of the T2MEDL-EASDDP approach on the Toddler dataset. Figures 5a and 5b show the confusion matrices for the 70% training phase (TRPH) and 30% testing phase (TSPH), indicating accurate identification and classification of all class labels. Figure 5c shows the precision-recall (PR) analysis, indicating high performance across all class labels. Finally, Fig. 5d presents the ROC analysis, showing high ROC values for each class.

Table 2 and Fig. 6 present the ASD detection results of the T2MEDL-EASDDP technique on the Toddler dataset. The results indicate that the T2MEDL-EASDDP technique recognizes the samples effectively. On 70% TRPH, the T2MEDL-EASDDP model achieves average \(accu_{y}\), \(sens_{y}\), \(spec_{y}\), \(F_{measure}\), and \(AUC_{score}\) of 94.89%, 94.89%, 94.89%, 96.03%, and 94.89%, respectively. On 30% TSPH, it achieves average \(accu_{y}\), \(sens_{y}\), \(spec_{y}\), \(F_{measure}\), and \(AUC_{score}\) of 97.79%, 97.79%, 97.79%, 97.96%, and 97.79%, respectively.
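The identical average sensitivity and specificity values are consistent with macro-averaging over two classes: in a binary problem, class 1's sensitivity is class 0's specificity and vice versa, so their class averages coincide. The paper does not state how the averages are computed, so the sketch below is an illustration with made-up labels, not the authors' evaluation code.

```python
def one_vs_rest_rates(y_true, y_pred, classes=(0, 1)):
    """Per-class sensitivity and specificity, treating each class in turn as positive."""
    rates = {}
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        tn = sum(1 for t, p in zip(y_true, y_pred) if t != c and p != c)
        rates[c] = {"sens": tp / (tp + fn), "spec": tn / (tn + fp)}
    return rates

def macro_average(rates, key):
    """Unweighted mean of one metric over all classes."""
    return sum(r[key] for r in rates.values()) / len(rates)

# Hypothetical toy labels: 100 positives, 100 negatives.
y_true = [1] * 100 + [0] * 100
y_pred = [1] * 90 + [0] * 10 + [1] * 5 + [0] * 95
rates = one_vs_rest_rates(y_true, y_pred)
print(rates)  # {0: {'sens': 0.95, 'spec': 0.9}, 1: {'sens': 0.9, 'spec': 0.95}}
print(round(macro_average(rates, "sens"), 3))  # 0.925
print(round(macro_average(rates, "spec"), 3))  # 0.925
```

Relatedly, a ROC curve built from hard labels has a single operating point, and its AUC equals \((sens_{y} + spec_{y})/2\), which would also explain an \(AUC_{score}\) matching the averaged sensitivity.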

Figure 7 depicts the training (TRA) and validation (VAL) \(accu_{y}\) of the T2MEDL-EASDDP approach on the Toddler dataset over 0–50 epochs. Both TRA and VAL \(accu_{y}\) increase over the epochs, indicating that the approach improves steadily with training. In addition, the TRA and VAL \(accu_{y}\) curves remain close throughout, indicating limited overfitting and suggesting stable prediction on unseen samples.

Figure 8 shows the TRA loss (TRALOS) and VAL loss (VALLOS) curves of the T2MEDL-EASDDP technique on the Toddler dataset over 0–50 epochs. Both TRALOS and VALLOS decrease over the epochs, indicating that the approach balances data fitting and generalization. The steady decrease in loss further indicates that the model progressively refines its predictions during training.

The ASD-Adult dataset consists of 680 samples in two classes, as shown in Table 3. Of its 20 attributes, 15 were selected.

Figure 9 presents the classification results of the T2MEDL-EASDDP methodology on the Adult dataset. Figures 9a and 9b show the confusion matrices under 70% TRPH and 30% TSPH, indicating accurate classification and identification of all classes. Figure 9c shows the PR analysis, indicating strong performance across all classes. Finally, Fig. 9d presents the ROC analysis, showing high ROC values for each class label.

Table 4 and Fig. 10 present the ASD detection results of the T2MEDL-EASDDP approach on the Adult dataset. The results indicate that the T2MEDL-EASDDP approach recognizes the samples effectively. On 70% TRPH, the T2MEDL-EASDDP model achieves average \(accu_{y}\), \(sens_{y}\), \(spec_{y}\), \(F_{measure}\), and \(AUC_{score}\) of 94.52%, 94.52%, 94.52%, 95.33%, and 94.52%, respectively. On 30% TSPH, it achieves average \(accu_{y}\), \(sens_{y}\), \(spec_{y}\), \(F_{measure}\), and \(AUC_{score}\) of 93.19%, 93.19%, 93.19%, 94.77%, and 93.19%, respectively.

Figure 11 shows the TRA and VAL \(accu_{y}\) of the T2MEDL-EASDDP technique on the Adult dataset over 0–50 epochs. Both TRA and VAL \(accu_{y}\) increase over the epochs, indicating that the method improves steadily with training. The TRA and VAL \(accu_{y}\) curves also remain close throughout, indicating limited overfitting and suggesting reliable prediction on unseen samples.

Figure 12 shows the TRALOS and VALLOS curves of the T2MEDL-EASDDP approach on the Adult dataset over 0–50 epochs. Both TRALOS and VALLOS decrease over the epochs, indicating that the approach balances data fitting and generalization. The steady decrease in loss further indicates that the model progressively refines its predictions during training.

Table 5 presents a comparative analysis of the T2MEDL-EASDDP model against existing methods37,38,39.

Figure 13 compares the \(sens_{y}\) and \(spec_{y}\) of the T2MEDL-EASDDP model with those of existing techniques. The results indicate that the T2MEDL-EASDDP model outperforms the compared methods. In terms of \(sens_{y}\), the T2MEDL-EASDDP technique achieves the highest value of 97.79%, whereas the 3D Grad-CAM, CNN, ASD-DiagNet, MVS-GCN, HOFC, CONCAT-SDAE, and MMSDAE models achieve lower values of 84.14%, 86.87%, 70.77%, 65.54%, 71.23%, 94.27%, and 92.66%, respectively. Likewise, in terms of \(spec_{y}\), the T2MEDL-EASDDP technique achieves 97.79%, whereas the same models achieve lower values of 83.36%, 84.09%, 72.50%, 71.86%, 63.40%, 95.52%, and 97.17%, respectively.

Figure 14 compares the T2MEDL-EASDDP method with existing techniques in terms of \(accu_{y}\) and \(F_{measure}\). The results indicate that the T2MEDL-EASDDP technique outperforms the compared methods. In terms of \(accu_{y}\), the T2MEDL-EASDDP model achieves 97.79%, whereas the 3D Grad-CAM, CNN, ASD-DiagNet, MVS-GCN, HOFC, CONCAT-SDAE, and MMSDAE techniques achieve lower values of 83.72%, 85.40%, 71.73%, 71.66%, 73.36%, 94.85%, and 95.73%, respectively. In terms of \(F_{measure}\), the T2MEDL-EASDDP technique achieves the highest value of 97.96%, whereas the same techniques achieve 86.46%, 85.77%, 84.68%, 92.13%, 90.96%, 93.74%, and 95.34%, respectively.
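The size of each improvement is easier to judge as a percentage-point gap. The short sketch below tabulates the gaps implied by the accuracy and F-measure values quoted above; the numbers are copied from the text, and the helper names are illustrative.

```python
MODELS = ["3D Grad-CAM", "CNN", "ASD-DiagNet", "MVS-GCN", "HOFC", "CONCAT-SDAE", "MMSDAE"]
ACCURACY = [83.72, 85.40, 71.73, 71.66, 73.36, 94.85, 95.73]
F_MEASURE = [86.46, 85.77, 84.68, 92.13, 90.96, 93.74, 95.34]
PROPOSED = {"accuracy": 97.79, "f_measure": 97.96}

def margins(proposed, baseline_scores):
    """Percentage-point gap between the proposed model and each baseline."""
    return {m: round(proposed - s, 2) for m, s in zip(MODELS, baseline_scores)}

def closest_competitor(baseline_scores):
    """Baseline with the highest score and its value."""
    k = max(range(len(MODELS)), key=lambda j: baseline_scores[j])
    return MODELS[k], baseline_scores[k]

acc_gaps = margins(PROPOSED["accuracy"], ACCURACY)
f_gaps = margins(PROPOSED["f_measure"], F_MEASURE)
print(closest_competitor(ACCURACY), acc_gaps["MMSDAE"])  # ('MMSDAE', 95.73) 2.06
print(closest_competitor(F_MEASURE), f_gaps["MMSDAE"])   # ('MMSDAE', 95.34) 2.62
```

On these figures, the lead over the strongest baseline (MMSDAE) is about 2.06 points in accuracy and 2.62 points in F-measure, much narrower than the gaps of over 20 points against ASD-DiagNet and MVS-GCN.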

Conclusion

In this paper, a novel T2MEDL-EASDDP model is developed. The main aim of the presented T2MEDL-EASDDP model is to analyze and diagnose the different stages of ASD in disabled individuals. To accomplish this, the model comprises data normalization, IBOA-based dimensionality reduction, an ensemble of three classification models, and a BDCOA-based parameter selection process. Initially, the T2MEDL-EASDDP model applies min-max normalization during data pre-processing to scale the input data to a uniform range. Next, IBOA-based FS identifies the most relevant features and reduces dimensionality. An ensemble of three DL approaches, namely AE, LSTM, and DBN, is then employed to analyze and detect ASD. Finally, BDCOA fine-tunes the hyperparameters of the three ensemble models. Extensive simulations of the T2MEDL-EASDDP technique were conducted on the ASD-Toddler and ASD-Adult datasets, and the method achieved a superior accuracy of 97.79% compared with existing techniques. The limitations of the T2MEDL-EASDDP method include its reliance on two public screening datasets of limited size (1000 and 680 samples), which may affect the model's generalizability to diverse populations with varying characteristics. Moreover, the computational complexity of the proposed approach may demand significant resources, limiting its accessibility in resource-constrained environments. The study also does not address real-time, on-the-ground application, which is important for practical implementation. Furthermore, the models may not fully capture the complexity and heterogeneity of ASD, as they concentrate on a limited set of features. Future work should explore integrating multimodal data, such as genetic, neuroimaging, and behavioural information, to improve model robustness.
Additionally, investigating methods for mitigating computational overhead while maintaining performance would make the approach more scalable. Lastly, more attention could be given to validating the model with real-world data to assess its practical utility in clinical settings.

Data availability

The data that support the findings of this study are openly available in the Kaggle repository at https://www.kaggle.com/datasets/fabdelja/autism-screening-for-toddlers and https://www.kaggle.com/datasets/andrewmvd/autism-screening-on-adults.

References

Erkan, U. & Thanh, D. N. Autism spectrum disorder detection with machine learning methods. Curr. Psychiatry Res. Reviews Formerly: Curr. Psychiatry Reviews. 15 (4), 297–308 (2019).

Daniels, A. M., Halladay, A. K., Shih, A., Elder, L. M. & Dawson, G. Approaches to enhancing the early detection of autism spectrum disorders: A systematic review of the literature. J. Am. Acad. Child. Adolesc. Psychiatry. 53 (2), 141–152 (2014).

Vakadkar, K., Purkayastha, D. & Krishnan, D. Detection of autism spectrum disorder in children using machine learning techniques. SN Comput. Sci. 2, 1–9 (2021).

Yuan, J., Holtz, C., Smith, T. & Luo, J. Autism spectrum disorder detection from semi-structured and unstructured medical data. EURASIP J. Bioinf. Syst. Biol. 2017, 1–9 (2016).

Oh, S. L. et al. A novel automated autism spectrum disorder detection system. Complex. Intell. Syst. 7 (5), 2399–2413 (2021).

Sharma, A. & Tanwar, P. Deep analysis of autism spectrum disorder detection techniques. In 2020 International Conference on Intelligent Engineering and Management (ICIEM) 455–459 (IEEE, 2020).

Moridian, P. et al. Automatic autism spectrum disorder detection using artificial intelligence methods with MRI neuroimaging: A review. Front. Mol. Neurosci. 15, 999605 (2022).

Zunino, A. et al. Video gesture analysis for autism spectrum disorder detection. In 2018 24th International Conference on Pattern Recognition (ICPR) 3421–3426 (IEEE, 2018).

Zwaigenbaum, L., Brian, J. A. & Ip, A. Early detection for autism spectrum disorder in young children. Paediatr. Child Health. 24 (7), 424–432 (2019).

Pal, M. & Rubini, P. Fusion of brain imaging data with artificial intelligence to detect autism spectrum disorder. Fusion: Pract. Appl. 2, 89–99 (2024).

Atlam, E. S. et al. EASDM: Explainable autism spectrum disorder model based on deep learning. J. Disabil. Res. 3 (1), 20240003 (2024).

Alkahtani, H., Aldhyani, T. H. & Alzahrani, M. Y. Early screening of autism spectrum disorder diagnoses of children using artificial intelligence. J. Disabil. Res. 2 (1), 14–25 (2023).

Al-Muhanna, M. K. et al. An attention-based hybrid optimized residual memory network (AHRML) method for autism spectrum disorder (ASD) detection. J. Disabil. Res. 3 (3), 20240030 (2024).

Lakhan, A., Mohammed, M. A., Abdulkareem, K. H., Hamouda, H. & Alyahya, S. Autism Spectrum Disorder detection framework for children based on federated learning integrated CNN-LSTM. Comput. Biol. Med. 166, 107539 (2023).

Nogay, H. S. & Adeli, H. Multiple classifications of brain MRI autism spectrum disorder by age and gender using deep learning. J. Med. Syst. 48 (1), 15 (2024).

Pokhrel, H. P., Nepal, R., Poudel, S. & Timsina, P. Autism Spectrum Disorder Detection using Facial Landmark Detection and Artificial Neural Network. (2023).

Prasad, V., Ganeshan, R. & Rajeswari, R. Artificial gannet optimization enabled deep convolutional neural network for autism spectrum disorders classification using MRI image. Multimedia Tools Appl. 1–27 (2024).

Ullah, F. et al. Fusion-based body-worn IoT sensor platform for gesture recognition of autism spectrum disorder children. Sensors 23 (3), 1672 (2023).

Hryntsiv, M., Zamishchak, M., Bondarenko, Y., Suprun, H. & Dushka, A. Approaches to speech therapy for children with autism spectrum disorders (ASD). Int. J. 14 (1), 33 (2025).

Nadimi-Shahraki, M. H., Zamani, H. & Mirjalili, S. Enhanced whale optimization algorithm for medical feature selection: A COVID-19 case study. Comput. Biol. Med. 148, 105858 (2022).

Prakash, V. G. et al. Video-based real‐time assessment and diagnosis of autism spectrum disorder using deep neural networks. Expert Syst. 42 (1), e13253 (2025).

Umrani, A. T. & Harshavardhanan, P. Efficient classification model for anxiety detection in autism using intelligent search optimization based on deep CNN. Multimedia Tools Appl. 83 (23), 62607–62636 (2024).

Polavarapu, B. L. et al. INN-ASDNet: embracing involutional neural networks and random forest for prediction of autism spectrum disorder. Arab. J. Sci. Eng. 1–19 (2025).

Simon, J., Kapileswar, N., Datchinamoorthi, M. & Muthukumar, S. Gaze-Assisted Autism Spectrum Disorder Identification: A Fusion of Machine Learning and Deep Learning Approaches for Preemptive Identification. In 2024 5th International Conference on Mobile Computing and Sustainable Informatics (ICMCSI) 525–529 (IEEE, 2024).

Kavitha, V. & Siva, R. 3T dilated inception network for enhanced autism spectrum disorder diagnosis using resting-state fMRI data. Cognit. Neurodyn. 19 (1), 22 (2025).

Vengidusamy, S., Kottaimalai, R., Arunprasath, T. & Govindaraj, V. Deep Learning Methods on Recognizing Autism Spectrum Disorder. In 2024 International Conference on Sustainable Communication Networks and Application (ICSCNA) 780–788 (IEEE, 2024).

Lu, B. et al. SBoCF: A deep learning-based sequential bag of convolutional features for human behavior quantification. Comput. Human Behav. 165, 108534 (2025).

Sravani, K. & Kuppusamy, P. Optimized deep convolutional neural network for autism spectrum disorder detection using structural MRI and DTPSO. IEEE Access. (2024).

Taghipour-Gorjikolaie, M. et al. An AI-based hierarchical approach for optimizing breast cancer detection using MammoWave device. Biomed. Signal Process. Control 100, 107143 (2025).

Yuan, Y. et al. Short-term wind power prediction based on IBOA-AdaBoost-RVM. J. King Saud Univ. Sci. 36 (11), 103550 (2024).

Rassam, M. A. Autoencoder-Based neural network model for anomaly detection in wireless body area networks. IoT 5 (4), 852–870 (2024).

Jayanth, T., Manimaran, A. & Siva, G. Enhancing Stock Price Forecasting with a Hybrid SES-DA-BiLSTM-BO Model: Superior Accuracy in High-Frequency Financial Data Analysis. IEEE Access. (2024).

Sun, Q., Zhu, J. & Chen, C. A Novel Detection Scheme for Motor Bearing Structure Defects in a High-Speed Train Using Stator Current. Sensors 24 (23), 7675 (2024).

Shankarnahalli Krishnegowda, S., Ganesh, A. K., Bidare Divakarachari, P., Shankarappa, V. Y. & Gollara Siddappa, N. BDCOA: wavefront aberration compensation using improved swarm intelligence for FSO communication. Photonics 11 (11), 1045 (2024).

https://www.kaggle.com/datasets/fabdelja/autism-screening-for-toddlers

https://www.kaggle.com/datasets/andrewmvd/autism-screening-on-adults

Han, J., Jiang, G., Ouyang, G. & Li, X. A multimodal approach for identifying autism spectrum disorders in children. IEEE Trans. Neural Syst. Rehabil. Eng. 30, 2003–2011 (2022).

Yang, R., Ke, F., Liu, H., Zhou, M. & Cao, H. M. Exploring sMRI biomarkers for diagnosis of autism spectrum disorders based on multi-class activation mapping models. IEEE Access. 9, 124122–124131 (2021).

Wang, M., Guo, J., Wang, Y., Yu, M. & Guo, J. Multimodal autism spectrum disorder diagnosis method based on DeepGCN. IEEE Trans. Neural Syst. Rehabil. Eng. (2023).

Acknowledgements

The authors extend their appreciation to the King Salman Center for Disability Research for funding this work through Research Group no. KSRG-2024-093.

Funding

The authors thank the King Salman Center for Disability Research for funding this work through Research Group no. KSRG-2024-093.

Author information

Contributions

T.A. and S.S. established and proposed the main idea. S.S. and R.A. worked on the implementation. S.S. and T.A. wrote the main manuscript, and R.A. made the figures and tables and revised the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Alotaibi, S.S., Alghamdi, T.A. & Alharthi, R. Two-tier nature inspired optimization-driven ensemble of deep learning models for effective autism spectrum disorder diagnosis in disabled persons. Sci Rep 15, 10059 (2025). https://doi.org/10.1038/s41598-025-93802-y

DOI: https://doi.org/10.1038/s41598-025-93802-y