Abstract

Fractal interpolation has gained significant attention due to its ability to model complex, self-similar structures with high precision. However, optimizing the parameters of Iterated Function System (IFS)-based fractal interpolants remains a challenging task, particularly for Rational Fractal Cubic (RFC) splines, which offer greater flexibility in shape control. In this study, we propose an evolutionary optimization strategy to enhance the accuracy and adaptability of RFC splines by optimizing their scaling factor and shape parameters using our novel Fractal Differential Evolution (FDE) algorithm. The FDE method iteratively refines the parameter space to achieve an optimal fit to the target data, demonstrating improved convergence and computational efficiency compared to traditional approaches. To validate the effectiveness of our method, we present a detailed numerical example showcasing the impact of optimized parameters on RFC spline interpolation. Furthermore, as a practical application, we develop a predictive model by approximating the FDE-optimized RFC spline using an Artificial Neural Network (ANN). The ANN is fine-tuned through the FDE algorithm to minimize the Euclidean distance between the RFC spline and the network’s predictions, ensuring high accuracy. This neural network model is subsequently used for extrapolation, enabling robust predictions beyond the observed data. Our results highlight the potential of integrating fractal-based interpolation with machine learning techniques, paving the way for applications in computational geometry, image processing, and time series forecasting.

Similar content being viewed by others

Introduction

Fractals, with their self-replicating patterns and intricate structures, have long intrigued researchers across multiple disciplines. Introduced by Benoit Mandelbrot, who is often regarded as the father of fractal geometry, these unique geometric characteristics make fractals ideal for modeling a wide range of natural phenomena and complex systems. Applications of fractals span diverse fields, including the visualization of natural landscapes and the analysis of financial markets1. The development of fractal interpolation functions (FIFs) has significantly advanced the field of spline interpolation, offering enhanced shape-preserving properties. Early foundations on fractal functions and interpolation provided critical insights into their mathematical properties2,3. Building on these foundations, research has expanded to include constrained interpolation with fractal splines4,5,6,7 and the development of \(\:\alpha\:\)-fractal rational splines for various numerical applications8,9,10. Recent advancements have introduced new classes of rational cubic spline fractal interpolation functions, which incorporate shape parameters to preserve monotonicity and convexity in univariate interpolation11,12,13. These innovations allow for greater flexibility and control over the interpolation process, resulting in more precise and visually appealing curves. Rational cubic splines with tension control parameters can manipulate the shape of a curve, yielding both interpolatory and rational B-spline forms14. Moreover, advancements in rational cubic spline interpolation schemes with shape control parameters have enabled interactive alteration of curve shapes in computer graphics, preserving smoothness and continuity15,16,17. Machine learning interpolation techniques offer powerful tools for accurately predicting physical properties in complex datasets, an approach that has proven particularly valuable in fields such as materials science18. Fractal interpolation has been increasingly explored in combination with machine learning techniques to enhance predictive accuracy and data modeling. Various optimization and learning approaches have been integrated to improve interpolation efficiency and parameter estimation. For instance, sparse multiple empirical kernel learning has been employed for brain disease diagnosis, demonstrating improved generalization in.

cross-domain transformations19. Additionally, multi-objective model selection algorithms have been applied to optimize online sequential learning, enhancing classification accuracy and adaptive equalization20. Furthermore, evolutionary machine learning techniques with adaptive optimization algorithms have been utilized to predict recurrent spontaneous abortion, highlighting the effectiveness of automated parameter tuning in medical diagnostics21.

Fractal interpolation has been widely explored for its ability to reconstruct complex data structures with self-similarity. However, determining the IFS parameters is a challenging task. Traditional approaches often involve manual or trial-and-error adjustments, which can be time-consuming, subjective, and may not consistently yield satisfactory results. An explicit fractal interpolation method based on affine transforms was proposed by the authors22, where the vertical scaling factor\(\:\left(\alpha\:\right)\) is approximated using a locally explicit expression. While this approach simplifies computations, it risks oversimplification and may fail to capture intricate fractal details, potentially leading to inaccuracies in interpolated curves. A fractal interpolation approach was also applied by the authors23 to improve neural network predictions for time series data, incorporating the Hurst parameter in the analysis. However, estimating \(\:\alpha\:\) using the Hurst parameter can be unreliable due to its sensitivity to noise and data variability, which may compromise interpolation accuracy. To address these challenges, a rational cubic spline fractal interpolation function was introduced by the authors7, employing a rational iterated function system. By adjusting \(\:\alpha\:\) and shape parameters within each sub-interval, the method ensured boundary adherence and improved interpolation flexibility. However, this refinement introduces additional computational complexity, requiring meticulous fine-tuning and increasing processing time. In the context of time series forecasting, fractal interpretation and wavelet analysis were utilized by the authors24 to estimate \(\:\alpha\:\) in Iterative Function Systems (IFS), further demonstrating the applicability of fractal methods in real-world scenarios. Additionally, a fractal interpolation algorithm for self-affine signal reconstruction was developed by the authors25, optimizing \(\:\alpha\:\) using Genetic Algorithms (GA) to enhance signal quality. Despite its effectiveness, this approach involves a complex fitness function with multiple variables, posing challenges in achieving optimal solutions. Furthermore, the behavior of fractal interpolation curves can be highly sensitive to small changes in the IFS parameters, making it challenging to achieve robust and reproducible results across different datasets or applications. To address these challenges, optimization techniques are increasingly being employed to automate the process of identifying suitable IFS for fractal interpolation models. This sensitivity can hinder the generalization of fractal interpolation techniques and limit their applicability in diverse contexts.

This paper aims to further this body of work by optimizing IFS parameters for Rational Fractal Cubic (RFC) spline using advanced optimization techniques. Specifically, we employ a Fractional Differential Evolution (FDE) algorithm to fine-tune the shape and scale parameters, enhancing the accuracy and computational efficiency of the interpolation process. This approach addresses the limitations of traditional methods and provides a robust framework for shape-preserving interpolation in various applications. Neural network-based adaptation and optimization techniques have been explored in various applications, including EEG recognition26 and retinal vessel segmentation using generative adversarial networks27, which provide insights for our ANN approximation of the FDE-optimized RFC spline. This optimization ensures that the approximated ANN minimizes the Euclidean distance to the RFC. Once optimized, the ANN is used to predict the series. In this paper, the entire implementation is carried out in R programming, which is a novel approach since fractal-based analyses have primarily been conducted in MATLAB. This work paves the way for future researchers by demonstrating that R is also a powerful tool for such studies.

The remaining contents of the paper are as follows: "Preliminaries" covers fundamental concepts and the mathematical background. Section “Methodology” delineates the methodology and is divided into multiple subsections. "Results and discussion" presents the results and discussion, also divided into multiple subsections. Finally, "Conclusion" summarizes the findings. This is followed by the bibliography and an appendix, where the R codes are provided.

Preliminaries

Let \(\:\left\{\left({x}_{i},{y}_{i}\right):i\in\:{\mathbb{N}}_{N}\right\}\) be a prescribed set of interpolation data with strictly increasing abscissae, where \(\:{\mathbb{N}}_{N}\) denotes the set of the first \(\:N\) natural numbers. Let \(\:{d}_{i}\) be the derivative value at the knot point \(\:{x}_{i}\), which is given or estimated with some standard procedures. For \(\:i\in\:{\mathbb{N}}_{N-1}\), denote by \(\:{h}_{i}\) the local mesh spacing \(\:{x}_{i+1}-{x}_{i}\), and let \(\:{r}_{i}\) and \(\:{t}_{i}\) be positive parameters referred to as shape parameters for obvious reasons. The \(\:{\mathcal{C}}^{1}\) - rational fractal cubic interpolation function \(\:{\:}^{4}\) with a numerator as a cubic polynomial and a denominator as a linear function is defined by: Suppose the function \(\:f\in\:{\mathcal{C}}^{1}\left[{x}_{1},{x}_{N}\right]\) and whose graph is the fixed point of the iterated function system \(\:\left\{{\left({L}_{i}\left(x\right),f\left({L}_{i}\left(x\right)\right)\right\}}_{i=1}^{N-1}\right.\) satisfying \(\:f\left({x}_{i}\right)={y}_{i},i\in\:{\mathbb{N}}_{N}\), where \(\:{L}_{i}\left(x\right)={a}_{i}x+{b}_{i}\)

Also \(\:{p}_{i}\left(\theta\:\right)\) is given by:

\(\begin{array}{*{20}{c}} {{p_i}\left( \theta \right)={r_i}\left( {{y_i} - {\alpha _i}{y_1}} \right){{(1 - \theta )}^3}+{t_i}\left( {{y_{i+1}} - {\alpha _i}{y_N}} \right){\theta ^3}} \\ {+\left[ {\left( {2{r_i}+{t_i}} \right){y_i}+{r_i}{h_i}{d_i} - {\alpha _i}\left[ {\left( {2{r_i}+{t_i}} \right){y_1}+\left( {{r_i}\left( {{x_N} - {x_1}} \right){d_1}} \right)} \right]} \right]{{(1 - \theta )}^2}\theta } \\ {+\left[ {\left( {{r_i}+2{t_i}} \right){y_{i+1}} - {t_i}{h_i}{d_{i+1}} - {\alpha _i}\left[ {\left( {{r_i}+{t_i}} \right){y_N} - \left( {{t_i}\left( {{x_N} - {x_1}} \right){d_N}} \right)} \right]} \right]\left( {1 - \theta } \right){\theta ^2}} \end{array}\)

and \(\:{q}_{i}\left(\theta\:\right)\) is given by:

Consider the prescribed data set \(\:\left\{\left({x}_{i},{y}_{i}\right):i\in\:{\mathbb{N}}_{N}\right\}\). The derivatives at the knots \(\:{x}_{i}\) are denoted by \(\:{d}_{i}\). For simplicity, take \(\:{h}_{i}={x}_{i+1}-{x}_{i}\), for \(\:i\in\:{\mathbb{N}}_{N-1}\). Let \(\:{r}_{i}\) and \(\:{t}_{i}\) be the free shape parameters. In order to maintain the positiveness of the denominator in rational fractal splines, the parameters are restricted to be \(\:{r}_{i}>0\) and \(\:{t}_{i}>0\). Readers are recommended to see the articles5,8 for the construction of rational cubic spline fractal functions.

Methodology

This section encompasses the methodology employed in this study. Our objective is to develop a modified evolutionary strategy for optimizing the IFS parameters of the RFC spline. Therefore, it is essential to begin with the construction of an RFC target spline, which is detailed in "Construction of the RFC Target Spline". This represents the target fractal curve that we aim to approximate. Next, it is crucial to formulate the optimization problem, where we discuss the objective function and constraints in "Posing the Optimization Problem for the Fine-tuning of Scale and Shape Parameters". The FDE algorithm is presented in "FDE Algorithm and Methodology for Optimization" as Algorithm 1. Subsequently, the RFC spline is approximated using an ANN in "FDE optimized Feedforward Neural Network for Fractal Curve Approximation and Prediction" to predict the outer boundary points. This approximation utilizes the FDE algorithm to optimize the ANN parameters, as explained in Algorithm 2.

Construction of the RFC target spline

The below example clearly delineates how to construct the target curve using rational cubic spline interpolation.

Step 1 Fix the data points \(\:{\left\{\left({x}_{i},{y}_{i}\right)\right\}}_{i=1}^{5}=\left\{\right(\text{0,8}),(\text{5,7}),(\text{8,10}),(\text{10,8}),(\text{11,6}\left)\right\}\). Calculate the step sizes \(\:{h}_{i}\) and the divided differences \(\:{{\Delta\:}}_{i}\) using

Using the given data, we find: \(\:{h}_{1}=5.0,{{\Delta\:}}_{1}=-0.2,{h}_{2}=3.0,{{\Delta\:}}_{2}=1.0,{h}_{3}=2.0,{{\Delta\:}}_{3}=-1.0,{h}_{4}=1.0\), and \(\:{{\Delta\:}}_{4}=-2.0\). In addition, the values \(\:{r}_{c}=\{\text{7,8},\text{9,10}\},{t}_{c}=\{\text{3,4},\text{3,3}\}\), and \(\:{\alpha\:}_{c}=\left\{\text{0.4543,0.2727,0.1818,0.909}\right\}\) are chosen. Here \(\:{r}_{c}\), and \(\:{t}_{c}\) are the target shape parameters and also \(\:{\alpha\:}_{c}\) is the target scaling factor. These are the IFS parameters for the target fractal curve.

Step 2 For each segment \(\:i,{a}_{i}\) and \(\:{b}_{i}\) are calculated using Eq. (1). The parameters \(\:{a}_{i}\) and \(\:{b}_{i}\) for each segment are as follows

Step 3: Arithmetic mean method: The three-point difference approximation at internal grids is given as8.

and at the endpoints, the derivatives are approximated as

Using this, we find \(\:{d}_{1}=-0.9500,{d}_{2}=0.5500,{d}_{3}=-0.2000,{d}_{4}=-1.6667\), and \(\:{d}_{5}=-2.3333\). For each segment \(\:i\in\:{\mathbb{N}}_{4},{T}_{{1}_{i}},{T}_{{2}_{i}},{T}_{{3}_{i}}\), and \(\:{T}_{{4}_{i}}\) are computed as follows:

The \(\:{T}_{{j}_{i}}\) for \(\:j,i=\text{1,2},\text{3,4}\) are given in the Table 1.

Step 4 In the first iteration \(\:(k=1)\), for each segment \(\:i\in\:{\mathbb{N}}_{4}\) and each point \(\:s\in\:{\mathbb{N}}_{5},{\theta\:}_{{i}_{s}}\) is calculated as

\(\:{V}_{i{1}_{s}}={T}_{{1}_{i}}{\left(1-{\theta\:}_{{i}_{s}}\right)}^{3},{V}_{i{2}_{s}}={T}_{{2}_{i}}{\theta\:}_{{i}_{s}}^{3},{V}_{i{3}_{s}}={T}_{{3}_{i}}{\theta\:}_{{i}_{s}}{\left(1-{\theta\:}_{{i}_{s}}\right)}^{2},{V}_{i{4}_{s}}={T}_{{4}_{i}}{\theta\:}_{{i}_{s}}^{2}\left(1-{\theta\:}_{{i}_{s}}\right),{p}_{{i}_{s}}={V}_{i{1}_{s}}+{V}_{i{2}_{s}}+{V}_{i{3}_{s}}+{V}_{i{4}_{s}}\), and \(\:{q}_{{i}_{s}}=\left(1-{\theta\:}_{{i}_{s}}\right){r}_{i}+{\theta\:}_{{i}_{s}}{t}_{i}\). Table 2 showcases the results of the abovementioned constants for all the segments.

Step 5: Now we have to calculate \(\:{L}_{{i}_{s}}\left(x\right)\) and \(\:f\left({L}_{{i}_{s}}\left(x\right)\right)\) for each segment. The equations are given by: \(\:{L}_{i}\left({x}_{s}\right)={a}_{i}{x}_{s}+{b}_{i},\:f\left({L}_{i}\left({x}_{s}\right)\right)={\alpha\:}_{i}y+\frac{{p}_{is}}{{q}_{is}},\:i\in\:{\mathbb{N}}_{N-1}\). For \(\:i=1\) and \(\:s=1\), the equations are: \(\:{L}_{1}\left({x}_{1}\right)={a}_{1}{x}_{1}+{b}_{1}\), \(\:f\left({L}_{1}\left({x}_{1}\right)\right)={\alpha\:}_{1}{y}_{1}+\frac{{p}_{{1}_{1}}}{{q}_{1}}\) and so on. Table 3 gives the new generation points by applying the initial \(\:{x}_{i}\) values.

We got 20 points from which 8 points are the old generation points, these are the interpolated points of the data \(\:\left({x}_{i},{y}_{i}\right)\) and we name them as \(\:{X}_{1},{Y}_{1}\).

Step 6: We have to continue the iterations until the termination criteria are satisfied. For the second iteration, the new generation points \(\:{X}_{1},{Y}_{1}\) serve as the initial points. For the third iteration, the new generation points obtained from the second iteration serve as the initial points, and so on, until we get the fractal interpolated curve denoted as \(\:\mathcal{G}\). We continued up to 4 iterations to get our target curve, which is shown in Figure 3.

Posing the optimization problem for the Fine-tuning of scale and shape parameters

We see that \(\:\mathcal{G}\) is the attractor of the IFS, specifically called the fractal interpolated curve. Because the IFS uses the whole interpolation points to approach the smaller parts of the curve, the Vertical Scaling Factor (VSF) must satisfy \(\:\left|{\alpha\:}_{i}\right|<{a}_{i}\),

\(\:i\in\:{\mathbb{N}}_{N-1}\). Otherwise, the fractals can’t describe the self-similarity curves at any scales, which is the exclusive ability of the fractals. The parameters \(\:{r}_{i}\) and \(\:{t}_{i}\) are shape parameters essential for preserving the shape characteristics in fractal interpolation. Thus we are able to specify the vertical scaling and shape produced by the transformation. Therefore, \(\:{\alpha\:}_{i},{r}_{i}\), and \(\:{t}_{i}\) play a critical role in implementing a fractal interpolation. In our paper, we are trying to consider the VSF and shape parameters as variables of a certain kind of objective function. The problem of getting the VSF and shape parameters, therefore, becomes a problem of optimization with multiple variables and objectives. The optimization problem is posed as:

Minimize:

s.t. \(\:\left[ {\alpha \:_{{ji}} } \right]< a_{i} ,\:i \in \:{\mathbb N}_{{N - 1}} ,\:j \in \:{\mathbb N}_{N} ,\)

where, \(\:\mathcal{G}\left({\overrightarrow{\alpha\:}}_{c},{\overrightarrow{r}}_{c},{\overrightarrow{t}}_{c}\right)\) is the target fractal curve and \(\:{\mathcal{G}}^{\text{*}}\left({\overrightarrow{\alpha\:}}_{j},{\overrightarrow{r}}_{j},{\overrightarrow{t}}_{j}\right)\) is the approximated fractal curve,

\(\:{\overrightarrow{\alpha\:}}_{c},{\overrightarrow{r}}_{c},{\overrightarrow{t}}_{c}\) are fixed.

This problem seeks to find the scaling factors \(\:{\alpha\:}_{ji}\), shape parameters \(\:{r}_{ji}\), and \(\:{t}_{ji}\) that minimize the Euclidean distance between the target fractal curve at \(\:{\overrightarrow{\alpha\:}}_{c},{\overrightarrow{r}}_{c},{\overrightarrow{t}}_{c}\) and the approximated fractal curve at \(\:{\overrightarrow{\alpha\:}}_{j},{\overrightarrow{r}}_{j},{\overrightarrow{t}}_{j}\), while satisfying the constraints \(\:\left|{\alpha\:}_{ji}\right|<{a}_{i},{r}_{ji}>0\), and \(\:{t}_{ji}>0,\:\forall\:i,j\). In this context, this study delves into the application of a prominent optimization technique: Differential Evolution28. This algorithm serves as a valuable tool for the refinement and optimization of the \(\:{\alpha\:}_{ji},{r}_{ji}\), and \(\:{t}_{ji}\:\forall\:i,j\) involved in fractal interpolation curve generation. By employing this optimization method, we seek to find the ideal combination of \(\:{\alpha\:}_{ji},{r}_{ji}\), and \(\:{t}_{ji}\:\forall\:i,j\) that best replicates the intricate patterns we aim to model. The following sections will provide a comprehensive exploration of the Differential Evolution technique. Through this exploration, we aim to underscore the significance of this optimization technique as a fundamental component of our study, laying the groundwork for a deeper understanding of its practical applications in the context of fractal-based modeling.

FDE algorithm and methodology for optimization

Differential Evolution (DE), introduced by Storn and Price in 1997, is a robust and simple evolutionary optimization algorithm that operates on a population of candidate solutions through mutation, crossover, and selection, effectively exploring complex optimization landscapes. In this paper, we modified the Differential Evolution (DE) algorithm to optimize three parameters: the scaling factor \(\:\alpha\:\) and the shape parameters \(\:r\) and \(\:t\). We named this modified algorithm the Fractal Differential Evolution (FDE) algorithm. These parameters play a critical role in shaping the resulting fractal curves, and DE offers a powerful approach to fine-tune them, aiming to enhance the accuracy and fidelity of the approximated curves to the target data. The flowchart for the FDE algorithm is provided in Fig. 1, and the detailed algorithm, explaining each step along with its formulas, is given in Algorithm 1.

FDE optimized feedforward neural network for fractal curve approximation and prediction

In this section, we present the algorithm and mathematical formulation of the neural network (NN) prediction process used to approximate the fractal interpolation function (FIF) (see Algorithm 2), which is generated by the RFC algorithm. For a simplified understanding, the corresponding flowchart (see Fig. 2) is provided, while the algorithm should be referred to for a detailed formulation. The RFC points denoted by \(\:\left({X}_{k,i},{Y}_{k,i}\right),i=\text{1,2},\dots\:,n\) are constructed iteratively over several iterations using the parameters \(\:r,t\), and \(\:\alpha\:\) which are optimized for the desired fractal characteristics. Now a Neural Network \(\:NN\left({X}_{k}\right)\) is approximated to the attractor \(\:{\mathcal{G}}^{\text{*}}\left({\mathbf{x}}_{i}^{{\prime\:}}\right)\) which plots points \(\:\left({X}_{k,i},{Y}_{k,i}\right),i=\text{1,2},\dots\:,n\). The Fractal Differential Evolution (FDE) algorithm can be adapted to optimize the neural network parameters \(\:\eta\:\) (learning rate) and \(\:h\) (number of hidden neurons). The process begins by initializing a population of size \(\:N\) with random individuals in the solution space for the parameters \(\:\eta\:\) and \(\:h\). For each individual, the mutation scaling factor \(\:{F}_{i,k}\) and crossover probability \(\:C{R}_{i,k}\) are updated at each iteration using predefined rules, as defined in Eqs. (3) and (4), which involve random variables. Specifically, \(\:{F}_{i,k}\) is updated within the range \(\:\left[\text{0.1,0.9}\right]\), and \(\:C{R}_{i,k}\) is also bounded within [0.1, 0.9]. In the Mutation (as described in Eq. (5)), the algorithm combines the vectors of three randomly chosen individuals \(\:{\mathbf{x}}_{{\text{r}}_{1}},{\mathbf{x}}_{{\text{r}}_{2}}\), and \(\:{\mathbf{x}}_{{\text{r}}_{3}}\) to generate a mutant vector \(\:{\mathbf{u}}_{ij}\) for each individual. The mutation is applied according to the rule in Eq. (5). In the Crossover (as described in Eq. 6), the target vector \(\:{\mathbf{x}}_{i}=\left({\eta\:}_{i},{h}_{i}\right)\) is combined with the mutant vector \(\:{\mathbf{u}}_{ij}\) to generate a trial vector \(\:{\mathbf{x}}_{i}^{{\prime\:}}\), with a crossover probability \(\:C{R}_{i,k}\). The crossover is performed as per the rule in Eq. (6). After the crossover step, the Selection is performed by training a neural network using the new values \(\:{\eta\:}_{i}^{{\prime\:}}\) and \(\:{h}_{i}^{{\prime\:}}\) as the learning rate and the number of hidden neurons, respectively. The fitness of the trial vector is determined by calculating the Euclidean distance between the approximated neural network and the FDE optimized RFC spline as shown in the Eq. (9) where \(\:{\mathcal{G}}^{\text{*}}\left({\mathbf{x}}_{i}^{{\prime\:}}\right)\) represents the Rational Fractal Cubic (RFC) spline generated and \(\:NN\left({X}_{k,i}\right),i=\text{1,2},\dots\:,n\) represents the approximated neural network prediction for new data point \(\:{X}_{k,i}\), \(\:i=\text{1,2},\dots\:,n\). The algorithm then continues iterating through the mutation, crossover, evaluation, and selection steps until a stopping criterion is met. The stopping criterion can be based on the fitness value approaching a desired threshold \(\:\epsilon\:={10}^{-3}\), or a predefined maximum number of generations. The weights and biases of the neural network are updated using the gradient descent algorithm, which minimizes the loss function by adjusting the parameters in the direction of the negative gradient. The objective is to find the values of the weights and biases that reduce the error between the predicted and actual outputs. In each iteration of gradient descent, the weight updates are computed using Eq. (8), where \(\:{w}^{\left(l\right)}\) represents the weight matrix of the \(\:{l}^{\text{th\:}}\) layer, \(\:\eta\:\) is the learning rate, and \(\:\nabla\:L\left({w}^{\left(l\right)}\right)\) is the gradient of the loss function \(\:L\) with respect to the weights. Similarly, the biases are updated using Eq. (8), where \(\:{b}^{\left(l\right)}\) is the bias vector of the \(\:{l}^{\text{th}}\) layer, and \(\:\nabla\:L\left({b}^{\left(l\right)}\right)\) is the gradient of the loss function \(\:L\) with respect to the biases. The gradients are computed using backpropagation, which involves calculating the partial derivatives of the loss function with respect to each parameter. The learning rate \(\:\eta\:\) determines the step size of each update, and through iterative updates, the weights and biases are optimized to minimize the error between the network’s predictions and the true outputs. The loss function, which quantifies the difference between the predicted output and the actual target output, is typically the Mean Squared Error (MSE) in regression tasks. It is defined in Eq. (7) where \(\:L\) is the loss, \(\:n\) is the number of data points, \(\:{Y}_{k,i}\) is the actual target value for the \(\:{i}^{\text{th}}\) data point, and \(\:{\stackrel{\prime }{Y}}_{k,i}\) is the predicted output of the neural network. The difference between \(\:{Y}_{k,i}\) and \(\:{\stackrel{\prime }{Y}}_{k,i}\) represents the error term, and squaring this term assigns greater penalties to larger errors. The objective of the neural network training is to minimize this loss by adjusting the weights and biases. A smaller value of \(\:L\) indicates that the network’s predictions are closer to the actual values, while a larger value implies a greater discrepancy between the predicted and actual outputs.

Results and discussion

Here, this section delineates the results acquired from the study. It is divided into six subsections. The numerical example and R code illustration of the FDE algorithm for optimizing IFS parameters are presented in "FDE optimization for the fine tuning of the IFS parameters: Numerical example and results" and "FDE optimization of the IFS parameters: R code illustration and results", respectively. A comparative analysis of the proposed method with existing methods is showcased in "Comparative analysis". Furthermore, a sensitivity analysis is conducted to evaluate the impact of parameter variations on the model’s output. This analysis helps identify the most influential parameters, providing insights into their significance in the optimization process. The details of this analysis are presented in "Sensitivity Analysis". Additionally, a numerical example with an R code illustration is provided for the ANN approximation of the RFC spline with FDE-optimized ANN parameters. These are detailed in Sect. "FDE optimized Neural Network approximation: Numerical example and results" and "FDE optimized Neural Network approximation: R code illustrations and results", respectively.

FDE optimization for the fine tuning of the IFS parameters: numerical example and results

The following steps delineate the Differential Evolution optimization strategy to find the optimal \(\:\alpha\:\) numerically.

Step 1 (Initialization): Population \(\:\text{s}\text{i}\text{z}\text{e}\left(N\right)=4\), mutation scaling factor \(\:\left({F}_{i,1}\right)\) is 0.5 and crossover probability \(\:\left(C{R}_{i,1}\right)\) is 0.7 for \(\:i=1\) to 4. We relied on the control parameters as defined in Eqs. (3)- (4) \(\:{\:}^{11}\). Now the population points are taken \(\:\left({\alpha\:}_{i},{r}_{i},{t}_{i}\right)\) for \(\:i=1\) to 4 such that \(\:\left|{\alpha\:}_{i}\right|<{a}_{i}\) and \(\:{r}_{i},{t}_{i}>0\). They are:

For set 1: \(\:\:\left\{ {\alpha \:_{1} ,r_{1} ,t_{1} } \right\} = \underbrace {{\left\{ {[0.1306,0.2149,0.0743,0.8026]} \right.}}_{{\alpha \:_{1} }},\,\,\underbrace {{[3,4,5,6]}}_{{r_{1} }},\,\,\underbrace {{[2,3,4,5]\} }}_{{t_{1} }}.\)

For set 2:

For set 3:

For set 4: \(\:\left\{ {\alpha \:_{4} ,r_{4} ,t_{4} } \right\} = \underbrace {{\{ [0.3078,0.1561,0.0187,0.8179]}}_{{\alpha \:_{4} }},{\mkern 1mu} {\mkern 1mu} \underbrace {{[8.5,6.3,11,2]\} }}_{{r_{4} }}.\)

The initial configurations of the rational splines before applying the FDE optimization are depicted in Figure 3. Figure 3a displays the rational spline using the first set of IFS parameters, while Fig. 3b shows the spline with the second set of parameters. Similarly, Figs. 3c,d present the rational splines generated with the third and fourth sets of IFS parameters, respectively. These initial splines provide a baseline for evaluating the improvements achieved through the optimization process. The fitness function is defined as:

where \(\:{\alpha\:}_{c},{r}_{c},{t}_{c}\) represent the parameters of the target curve. As shown in Table 4, the parameter sets and their corresponding Euclidean distances are listed.

Step 2 (Mutation): The formula for calculating the mutant vector \(\:{u}_{ij}^{\alpha\:}\) is given by:

Similarly, the formula for calculating the mutant vector \(\:{u}_{ij}^{r}\) is given by:

And, the formula for calculating the mutant vector \(\:{u}_{ij}^{t}\) is given by:

Here, \(\:{\alpha\:}_{{\text{r}}_{1}},{r}_{{\text{r}}_{1}},{t}_{{\text{r}}_{1}},{\alpha\:}_{{\text{r}}_{2}},{r}_{{\text{r}}_{2}},{t}_{{\text{r}}_{2}}\), and \(\:{\alpha\:}_{{\text{r}}_{3}},{r}_{{\text{r}}_{3}},{t}_{{\text{r}}_{3}}\) defined in Eq. (5) as \(\:{x}_{{\text{r}}_{1}},{x}_{{\text{r}}_{2}},{x}_{{\text{r}}_{3}}\) are taken as \(\:{\alpha\:}_{4j},{r}_{4j},{t}_{4j},{\alpha\:}_{3j},{r}_{3j},{t}_{3j}\), and \(\:{\alpha\:}_{1j},{r}_{1j},{t}_{1j}\) for \(\:{u}_{1j}^{\alpha\:},{u}_{1j}^{r},{u}_{1j}^{t}\) respectively. Similarly for \(\:{u}_{2j}^{\alpha\:},{u}_{2j}^{r},{u}_{2j}^{t},{u}_{3j}^{\alpha\:},{u}_{3j}^{r},{u}_{3j}^{t}\), and \(\:{u}_{4j}^{\alpha\:},{u}_{4j}^{r},{u}_{4j}^{t}\) as in the Eq. (10). The same applies to \(\:r\) and \(\:t\) parameters in Eqs. (11,12). The values of \(\:{u}_{ij}^{\alpha\:}\) on substituting \(\:j=1\) to 4 in equations (10) are as follows:

The values of \(\:{u}_{ij}^{r}\) on substituting for \(\:j=1\) to 4 in equations (11) are as follows:

The values of \(\:{u}_{ij}^{t}\) on substituting for \(\:j=1\) to 4 in equations (12) are as follows:

For the mutation step, any negative values in \(\:{u}_{ij}^{r}\) or \(\:{u}_{ij}^{t}\) are corrected as follows:

Here, \(\:\epsilon\:\) is a small positive value say \(\:{10}^{-3}\) ensuring that \(\:r\) and \(\:t\) remain positive. Now the corrected \(\:{U}^{r}\) and \(\:{U}^{t}\) are as follows:

Step 3 (Crossover): Let \(\:{\alpha\:}_{ij}^{{\prime\:}}\) be defined as follows:

where \(\:C{R}_{i,1}\) is the crossover probability for the first iteration, \(\:{\mathbbm{r}}\sim\:\mathcal{U}\left(\text{0,1}\right)\) is a uniformly distributed random number generated for each \(\:j\) and \(\:\delta\:\). \(\:\delta\:\) is a randomly selected variable location, \(\:\delta\:\in\:1\) to \(\:N\). Here \(\:\delta\:=3,N\) is the population size i.e. \(\:N=4\) and \(\:m\) is the size of each vector \(\:\alpha\:,r\) and \(\:t\) i.e. \(\:m=4\). Table 5 illustrates the crossover for iteration 1. The parent vectors and their corresponding new vectors after crossover are defined as follows:

And the original parent vectors are represented as follows:

Step 4 (Selection): Recall the vector after crossover in iteration 1 as: For the parameter set \(\:\left\{\alpha\:\right\}\) :

For the parameter set \(\:\left\{r\right\}\) :

For the parameter set \(\:\left\{t\right\}\) :

The new vector \(\:\left({\alpha\:}_{1}^{{\prime\:}},{r}_{1}^{{\prime\:}},{t}_{1}^{{\prime\:}}\right)\) is derived from the first parent vector \(\:\left({\alpha\:}_{1},{r}_{1},{t}_{1}\right)\). Similarly, the new vector \(\:\left({\alpha\:}_{2}^{{\prime\:}},{r}_{2}^{{\prime\:}},{t}_{2}^{{\prime\:}}\right)\) is derived from the second parent vector \(\:\left({\alpha\:}_{2},{r}_{2},{t}_{2}\right)\). The new vector \(\:\left({\alpha\:}_{3}^{{\prime\:}},{r}_{3}^{{\prime\:}},{t}_{3}^{{\prime\:}}\right)\) is formed from the third parent vector \(\:\left({\alpha\:}_{3},{r}_{3},{t}_{3}\right)\). Finally, the new vector \(\:\left({\alpha\:}_{4}^{{\prime\:}},{r}_{4}^{{\prime\:}},{t}_{4}^{{\prime\:}}\right)\) is derived from the fourth parent vector \(\:\left({\alpha\:}_{4},{r}_{4},{t}_{4}\right)\). Now, we will decide whether the parent vector or the new vector \(\:\left({\alpha\:}_{i}^{{\prime\:}},{r}_{i}^{{\prime\:}},{t}_{i}^{{\prime\:}}\right)\) will survive. For that, we will compute the fitness values

We have,

\(\:E\left({\alpha\:}_{3},{r}_{3},{t}_{3}\right)=34.98788\), and \(\:E\left({\alpha\:}_{4},{r}_{4},{t}_{4}\right)=53.70365\). Similarly, for the new vectors, we have \(\:E\left({\alpha\:}_{1}^{{\prime\:}},{r}_{1}^{{\prime\:}},{t}_{1}^{{\prime\:}}\right)=49.31879\), \(\:E\left({\alpha\:}_{2}^{{\prime\:}},{r}_{2}^{{\prime\:}},{t}_{2}^{{\prime\:}}\right)=69.08627,E\left({\alpha\:}_{3}^{{\prime\:}},{r}_{3}^{{\prime\:}},{t}_{3}^{{\prime\:}}\right)=40.27481\), and \(\:E\left({\alpha\:}_{4}^{{\prime\:}},{r}_{4}^{{\prime\:}},{t}_{4}^{{\prime\:}}\right)=41.02909\). We see that only \(\:\left({\alpha\:}_{4}^{{\prime\:}},{r}_{4}^{{\prime\:}},{t}_{4}^{{\prime\:}}\right)\) had produced better fitness when compared to their corresponding parent vectors.

Step 5 (Termination): If the termination criteria, such as achieving the minimum fitness function value or reaching the maximum number of iterations, are not met in the first iteration, proceed to Step 1 and continue to the second iteration.

Step 1 (Initialization): The new population points are: \(\:\left({\alpha\:}_{1},{r}_{1},{t}_{1}\right),\left({\alpha\:}_{2},{r}_{2},{t}_{2}\right),\left({\alpha\:}_{3},{r}_{3},{t}_{3}\right)\) and \(\:\left({\alpha\:}_{4}^{{\prime\:}},{r}_{4}^{{\prime\:}},{t}_{4}^{{\prime\:}}\right)\).

Step 2 (Mutation): The formula for calculating the updated \(\:{u}_{ij}^{\text{*}}\), the mutant vector for the second iteration, using Eq. (5) is given by:

Here the mutation scaling factor for the second iteration \(\:{F}_{i,2}\) is taken as 0.5 using Eq. (3). The values of \(\:{u}_{ij}^{\alpha\:\text{*}}\) on substituting for \(\:j=1\) to 4 in equations (13) are as follows:

The values of \(\:{u}_{ij}^{r\text{*}}\) on substituting \(\:j=1\) to 4 in equations (14) are as follows:

The values of \(\:{u}_{ij}^{t\text{*}}\) on substituting \(\:j=1\) to 4 in equations (15) are as follows:

For the mutation step, any negative values in \(\:{u}_{ij}^{r\text{*}}\) or \(\:{u}_{ij}^{t\text{*}}\) are corrected as follows:

Correcting this by taking \(\:\epsilon\:=0.001\) we get

Step 3 (Crossover): Let the crossover vector \(\:{\alpha\:}_{i}^{{\prime\:}{\prime\:}}\) be defined as follows:

Similarly, we have

where \(\:C{R}_{i,2}\) is the crossover probability as per the second iteration and it is equal to 0.7 from equation \(\:4,\delta\:\) is a randomly selected variable location, \(\:N=4\) and \(\:m=4\). Table 6 explains the crossover for iteration 2.

Step 4 (Selection): We name the new vectors after crossover as \(\:{\alpha\:}_{i}^{{\prime\:}{\prime\:}}\) :

The new vector \(\:\left({\alpha\:}_{1}^{{\prime\:}{\prime\:}},{r}_{1}^{{\prime\:}{\prime\:}},{t}_{1}^{{\prime\:}{\prime\:}}\right)\) is derived from the first parent vector \(\:\left({\alpha\:}_{1},{r}_{1},{t}_{1}\right)\). Similarly, the new vector \(\:\left({\alpha\:}_{2}^{{\prime\:}{\prime\:}},{r}_{2}^{{\prime\:}{\prime\:}},{t}_{2}^{{\prime\:}{\prime\:}}\right)\) is derived from the second parent vector \(\:\left({\alpha\:}_{2},{r}_{2},{t}_{2}\right)\) . The new vector \(\:\left({\alpha\:}_{3}^{{\prime\:}{\prime\:}},{r}_{3}^{{\prime\:}{\prime\:}},{t}_{3}^{{\prime\:}{\prime\:}}\right)\) is formed from the third parent vector \(\:\left({\alpha\:}_{3},{r}_{3},{t}_{3}\right)\). Finally, the new vector \(\:\left({\alpha\:}_{4}^{{\prime\:}{\prime\:}},{r}_{4}^{{\prime\:}{\prime\:}},{t}_{4}^{{\prime\:}{\prime\:}}\right)\) is derived from the fourth parent vector \(\:\left({\alpha\:}_{4}^{{\prime\:}},{r}_{4}^{{\prime\:}},{t}_{4}^{{\prime\:}}\right)\). Now, we will decide whether the parent vector or the new vector will survive. For that, we will compute the fitness values,

We have, \(\:E\left({\alpha\:}_{1},{r}_{1},{t}_{1}\right)=24.79196,E\left({\alpha\:}_{2},{r}_{2},{t}_{2}\right)=68.66706,E\left({\alpha\:}_{3},{r}_{3},{t}_{3}\right)=34.98788\), and \(\:E\left({\alpha\:}_{4}^{{\prime\:}},{r}_{4}^{{\prime\:}},{t}_{4}^{{\prime\:}}\right)=41.02909\). Similarly, for the new vectors, we have \(\:E\left({\alpha\:}_{1}^{{\prime\:}{\prime\:}},{r}_{1}^{{\prime\:}{\prime\:}},{t}_{1}^{{\prime\:}{\prime\:}}\right)=44.88699,E\left({\alpha\:}_{2}^{{\prime\:}{\prime\:}},{r}_{2}^{{\prime\:}{\prime\:}},{t}_{2}^{{\prime\:}{\prime\:}}\right)=71.83928,E\left({\alpha\:}_{3}^{{\prime\:}{\prime\:}},{r}_{3}^{{\prime\:}{\prime\:}},{t}_{3}^{{\prime\:}{\prime\:}}\right)=38.71355\), and \(\:E\left({\alpha\:}_{4}^{{\prime\:}{\prime\:}},{r}_{4}^{{\prime\:}{\prime\:}},{t}_{4}^{{\prime\:}{\prime\:}}\right)=41.02909\). We see that \(\:\left({\alpha\:}_{4}^{{\prime\:}},{r}_{4}^{{\prime\:}},{t}_{4}^{{\prime\:}}\right)\) produces equal fitness when compared to their corresponding parent vector. All other new vectors fail to produce better fitness.

Step 5 (Termination): As the termination criteria, such as achieving the minimum fitness function value or reaching the maximum number of iterations, are not met in the second iteration also, proceed to Step 1 and continue to the third iteration.

FDE optimization of the IFS parameters: R code illustration and results

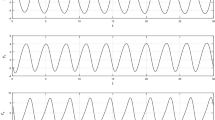

More attention is needed on the selection of the DE parameters crossover probability \(\:\left(C{R}_{i,k}\right)\) and mutation scaling factor \(\:\left({F}_{i,k}\right)\), as the significance of their values is not thoroughly investigated so far. To attain the termination criteria, a similar implementation to optimize the \(\:\alpha\:,r\), and \(\:t\) values for fractal curve approximation using FDE is constructed in R Programming. In the optimization process described herein, the FDE algorithm is employed. The primary goal is to minimize the Euclidean distance between the target fractal curve and approximated fractal curve computed based on a set of parameters, denoted as \(\:\alpha\:,r,t\) values. The “DEoptim” package is employed for DE optimization in this study. The optimization of parameters \(\:{F}_{i,k}\), denoted as \(\:F\), and \(\:C{R}_{i,k}\), denoted as \(\:CR\), in the R code for the FDE algorithm is conducted using a grid search methodology. Instead of relying on predefined equations for \(\:F\) and \(\:CR\) in Eqs. (4) and (3), grid search involves systematically exploring a range of values for \(\:F\) and \(\:CR\). This approach aims to identify the combination of \(\:F\) and \(\:CR\) that minimizes the fitness function, thereby improving the accuracy of the fractal curve approximation. The selection of optimal \(\:F\) and \(\:CR\) values is based on their performance in achieving convergence towards the target fractal curve, enhancing the overall effectiveness of the FDE algorithm in this fractal curve approximation task. The initial \(\:\alpha\:,r\), and \(\:t\) values are predefined, initiating the optimization process. The results include the optimized \(\:\alpha\:,r\), and \(\:t\) values, the corresponding curve, and the Euclidean distance between the approximated curve and the target fractal curve. These outcomes offer valuable insights into parameter configurations that effectively minimize the objective function, ensuring a close alignment between the calculated curve and the desired target fractal curve. The optimization process of the scaling and shape parameters for the RFC splines using the FDE algorithm is illustrated in Figure 4. Each subfigure represents a different iteration of the algorithm. Figure 4a shows the initial iteration (Iteration 1), where the parameters are at their starting values. In Fig. 4b, the parameters have been adjusted through the algorithm in Iteration 2. By Iteration 3, as seen in Fig. 4c, further optimization has been achieved. Finally, Fig. 4d displays Iteration 4, demonstrating the refined shape and scale parameters. Overall, these figures 4 provide a visual representation of the optimization process, highlighting the progressive improvement of the parameters at each iteration. As shown in Table 7, the Euclidean distance between the target fractal curve and the approximated fractal curve decreases progressively across iterations, indicating improved optimization. In the sixth iteration, the Euclidean distance is minimized to 3.108554, demonstrating a better approximation of the target fractal curve. This reduction is achieved through optimized shape parameters \(\:(r,t)\) and scaling factors \(\:\left\{\alpha\:\right\}\), along with the best combination of crossover probability (CR) and mutation scaling factor (F). To perform grid search for \(\:CR\) and \(\:F\) values using the FDE algorithm in R programming, the code snippet in the appendix A can be utilized. The code snippet demonstrates a grid search approach where \(\:CR\) and \(\:F\) values for the DE algorithm are iteratively tested across predefined ranges. The parameters \(\:r\) and \(\:t\) are chosen within the range of [0,5], while \(\:\alpha\:\) are constrained by \(\:\left[\text{0,0.4543,0.2727,0.1818,0.909}\right]\), which are the \(\:{a}_{i}\) values obtained from the construction of RFC for our data points (see Eq. (2)). After evaluating all combinations, the code outputs the best \(\:CR\) and \(\:F\) values along with their corresponding parameters and results. Here NA in the code denotes the null set and NP is the population size.

Comparative analysis

This section presents a comparative analysis of the proposed method, FDE, with existing optimization strategies and a newly applied method in the context of fine-tuning the IFS parameters. In particular, the Genetic Algorithm (GA), which has been incorporated in many studies, as discussed in "Introduction". Additionally, Particle Swarm Optimization (PSO) is an existing method that has been used to estimate the parameters of the Lorenz system (a chaotic system)29, but it has not been applied to IFS parameter optimization. Similarly, Simulated Annealing (SA) is a newly explored method in the context of IFS parameter optimization. Table 8 presents the Euclidean distances obtained by different optimization techniques. Among the methods compared, the Fractal Differential Evolution (FDE) algorithm achieved the lowest Euclidean distance, demonstrating its superior performance in optimizing both the vertical scaling factor (VSF) and shape parameters of the IFS model. The results indicate that the Fractal Differential Evolution (FDE) algorithm outperforms GA, SA, and PSO in minimizing the Euclidean distance. Unlike conventional optimization approaches, FDE effectively tunes the IFS parameters ensuring a more precise approximation of the fractal curve. Its ability to efficiently explore the parameter space while maintaining a balance between exploration and exploitation makes it particularly effective for this problem. Although the Differential Evolution(DE) algorithm demonstrates robust optimization capabilities, it does not always guarantee convergence to the global minimum. Like other evolutionary algorithms, DE is susceptible to premature convergence in complex, multimodal landscapes where multiple local minima exist. However, by maintaining a diverse population and incorporating adaptive mutation strategies, FDE reduces the likelihood of getting trapped in local minima, making it more reliable than traditional techniques such as GA and SA. The convergence plot is delineated in Fig. 5 .

The convergence plot illustrates the performance of four optimization algorithms in optimizing the IFS parameters(scaling factor \(\:\alpha\:\) and shape parameters \(\:r\) and \(\:t\) ). Here we applied all the 3 methods together with FDE and got the optimized parameter results, and using the optimized parameters, the RFC spline has been reconstructed and the term Euclidean distance refers to the Euclidean distance between the target fractal curve whose formulation has been explained in 3.1 and the approximated fractal curve : FDE (orange), GA (red), PSO (blue), and SA (green) in terms of Euclidean distance over iterations. Among them, FDE (orange) demonstrates the fastest and most stable convergence, reaching the lowest Euclidean distance early in the process. GA (red) also converges but exhibits noticeable fluctuations, indicating an unstable search pattern. PSO (blue) and SA (green) show rapid initial drops in Euclidean distance, but they do not reach the same low values as FDE. Notably, SA (green) experiences a sharp decline at the beginning but fails to maintain better long-term convergence. Overall, FDE outperforms the other algorithms by achieving the best convergence behavior with minimal fluctuations, while GA struggles with stability, and SA, despite its fast initial drop, does not sustain optimal convergence.

Sensitivity analysis

Sensitivity analysis was conducted to evaluate how small perturbations in the model parameters influence the overall system output. The objective is to determine which parameters have the most significant impact on the model’s behavior. This is achieved by systematically modifying each parameter and analyzing the corresponding change in the output. The sensitivity analysis follows a systematic approach. First, the key model parameters are identified, including \(\:\left\{{r}_{1},{r}_{2},{r}_{3},{r}_{4},{t}_{1},{t}_{2},{t}_{3},{t}_{4},{\alpha\:}_{1},{\alpha\:}_{2},{\alpha\:}_{3},{\alpha\:}_{4}\right\}\). Baseline values for these parameters are then generated using a uniform distribution. The model output is computed at these baseline values. To assess the sensitivity of each parameter, an individual perturbation is applied by increasing its value by 5% while keeping all other parameters constant. The resulting change in the model output is evaluated, and the absolute difference between the perturbed and baseline outputs is recorded as a measure of the parameter’s sensitivity. The computed sensitivity values for each parameter are summarized in Table 9. The values represent the absolute change in model output when each

Convergence Plot parameter is perturbed by 5%. The results indicate that parameters such as \(\:{r}_{1},{r}_{4}\), and \(\:{t}_{3}\) have the highest sensitivity values, meaning that even small changes in these parameters significantly impact the model output. Conversely, parameters like \(\:{\alpha\:}_{3}\) and \(\:{\alpha\:}_{4}\) exhibit the lowest sensitivity values, indicating that minor variations in these parameters have a relatively small effect on the system’s behavior. This analysis helps in identifying the most influential parameters, which can be prioritized for fine-tuning in further optimizations.

FDE optimized neural network approximation: numerical example and results

Require The fractal interpolation data is represented as \(\:\left\{{X}_{k,i},{Y}_{k,i}\right\}\), represents the \(\:{k}^{th}\) generation fractal interpolation data. For this example, we take \(\:k=1\) (first generation points). The training data points for \(\:{\left\{{X}_{k,i}\right\}}_{i=1}^{18}\) are:

\(\:[\text{0,2.272727,3.636364}\),\(\:\text{4.545455,5},\text{6.363636,7.181818,7.727273,8},\text{8.909091,9.454545,9.818182},\)

\(\:{\text{10,10,10}}{\text{.454545,10}}{\text{.727273,10}}{\text{.909091}},11]\).

The corresponding values of \(\:{\left\{{Y}_{k,i}\right\}}_{i=1}^{18}\) are:

\(\:[\text{8,6.624721,7.301572,6.946021,7},\text{8.325309,9.776919,10.018408,10}\), \(\:\text{9.675455,9.374684,8.468881,8},\text{8,7.551042,8.669955,6.681363,6}]\).

Step 1: Neural Network Initialization Initialize the weights by random generation. Here, \(\:{w}^{1,h}\) represents the weight connecting the input unit to the \(\:h\)-th hidden unit, \(\:{w}^{2,h}\) represents the weight connecting the \(\:h\)-th hidden unit to the output unit, \(\:{b}^{h}\) represents the bias of the \(\:h\)-th hidden unit, and \(\:{b}^{o}\) represents the bias of the output unit. After initializing the weights,

compute \(\:{Z}_{i}^{\left(1\right)},{a}_{i}^{\left(1\right)},{z}_{i}^{\left(2\right)},{\stackrel{\prime }{Y}}_{k,i}\), and \(\:L\). Update weights and biases using gradient descent; the updated weights and biases are given below:

The output layer bias, \(\:{b}^{o}\), is -1.79. Here, \(\:{w}^{1,h}\) represents the weight connecting the input unit to the \(\:{h}^{\text{th}}\) hidden unit, \(\:{w}^{2,h}\) represents the weight connecting the \(\:h\)-th hidden unit to the output unit, \(\:{b}^{h}\) represents the bias of the \(\:{h}^{\text{th}}\) hidden unit, and \(\:{b}^{o}\) represents the bias of the output unit. These values are initialized randomly to break symmetry and allow the network to learn effectively. The sigmoid activation function \(\:\sigma\:\left(z\right)=\frac{1}{1+{e}^{-z}}\) is used for both the hidden and output layers.

Step 2: Training the Neural Network The Table 10 illustrates the calculations for all input values for hidden layer 1 to 11. Here we take \(\:{h}_{\text{m}\text{a}\text{x}}\) as \(\:{h}_{\text{opt}}\) which is obtained from the FDE Algorithm and is explained in Step 3.

Step 3: Fractal Differential Evolution (FDE) Optimization Here we apply the FDE Algorithm 1 to optimize \(\:\eta\:\) and \(\:h\). The Fractal Differential Evolution (FDE) algorithm can be adapted to optimize the neural network parameters \(\:\eta\:\) (learning rate) and \(\:h\) (number of hidden neurons). The process begins by initializing a population of size \(\:N\) with random individuals in the solution space for the parameters \(\:\eta\:\) and \(\:h\). For each individual, the mutation scaling factor \(\:{F}_{i,k}\) and crossover probability \(\:C{R}_{i,k}\) are updated at each iteration using predefined rules, as defined in Eqs. (3) and (4), which involve random variables. The range \(\:\left[\text{0.1,0.9}\right]\) for \(\:{F}_{i,k}\) and \(\:C{R}_{i,k}\) is chosen to ensure a balance between exploration and exploitation during the optimization process, avoiding excessively small values that could hinder diversity and excessively large values that might lead to instability or premature convergence. In the Mutation step (as described in Eq. (6)), the algorithm combines the vectors of three randomly chosen individuals \(\:{\mathbf{x}}_{{\text{r}}_{1}},{\mathbf{x}}_{{\text{r}}_{2}}\), and \(\:{\mathbf{x}}_{{\text{r}}_{3}}\) to generate a mutant vector \(\:{\mathbf{u}}_{j}\) for each individual. The mutation is applied according to the rule in Eq. (5). In the Crossover step (as described in Eq. (6)), the target vector \(\:\mathbf{x}=(\eta\:,h)\) is combined with the mutant vector \(\:{\mathbf{u}}_{j}\) to generate a trial vector \(\:{\mathbf{x}}^{{\prime\:}}\), with a crossover probability \(\:C{R}_{k}\). The crossover is performed as per the rule in Eq. (6). After the crossover step, the Selection step is performed by training a neural network using the optimized values \(\:{\eta\:}_{\text{opt}}\) and \(\:{h}_{\text{opt}}\) as the learning rate and the number of hidden neurons, respectively. The fitness of the trial vector is determined by calculating the Euclidean distance between the approximated neural network and the FDE-optimized RFC spline, as shown in Eq. (9):

Here, \(\:{\mathcal{G}}^{\text{*}}\left({\mathbf{x}}^{{\prime\:}}\right)\) represents the Rational Fractal Cubic (RFC) spline, and \(\:NN\left({X}_{k,i}\right)\) represents the approximated neural network prediction for new data points \(\:{X}_{k,i}\). The algorithm then continues iterating through the mutation, crossover, evaluation, and selection steps until a stopping criterion is met. The stopping criterion can be based on the fitness value approaching a desired threshold \(\:\epsilon\:={10}^{-3}\), or a predefined maximum number of generations.

\(\:h\) | \(\:{x}_{k,i}\) | \(\:{z}_{i}\) | \(\:{a}_{i}\) | \(\:{\stackrel{\prime }{y}}_{k,i}\) | \(\:{y}_{k,i}\) | L |

|---|---|---|---|---|---|---|

1 | 0 | 0.57 | 0.6387632 | 8.015258 | 8 | 0.000232 |

1 | 2.272727 | 0.02454552 | 0.5061361 | 6.661537 | 6.6 | 0.000009 |

1 | 3.636364 | -0.3027274 | 0.4248909 | 7.258812 | 7.2 | 0.000003 |

1 | 4.545455 | -0.5209092 | 0.3726397 | 7.010018 | 7 | 0.000001 |

1 | 5 | -0.63 | 0.3475105 | 7.000121 | 7 | 0.000000 |

1 | 6.363636 | -0.9572726 | 0.2774246 | 8.383408 | 8.4 | 0.000003 |

1 | 7.181818 | -1.153636 | 0.2398255 | 9.651243 | 9.6 | 0.000003 |

1 | 7.727273 | -1.284546 | 0.2167775 | 10.04376 | 10 | 0.000002 |

1 | 8 | -1.35 | 0.2058704 | 10.07839 | 10 | 0.000001 |

1 | 8.909091 | − 1.568182 | 0.1724757 | 9.61386 | 9.6 | 0.000002 |

1 | 9.454545 | − 1.699091 | 0.154584 | 9.035881 | 9 | 0.000001 |

1 | 9.818182 | − 1.786364 | 0.1435191 | 8.562194 | 8.6 | 0.000002 |

1 | 10 | − 1.83 | 0.1382383 | 8.303718 | 8.3 | 0.000001 |

1 | 10 | − 1.83 | 0.1382383 | 8.303718 | 8.3 | 0.000001 |

1 | 10.45454 | − 1.939091 | 0.1257478 | 7.608529 | 7.6 | 0.000002 |

1 | 10.72727 | − 2.004546 | 0.1187265 | 7.167997 | 7.2 | 0.000001 |

1 | 10.90909 | − 2.048182 | 0.1142362 | 6.869298 | 6.9 | 0.000001 |

1 | 11 | − 2.07 | 0.112047 | 6.71936 | 6.7 | 0.000001 |

2 | 0 | 0.66 | 0.6592604 | 8.015258 | 8 | 0.000232 |

2 | 2.272727 | 0.1600001 | 0.5399149 | 6.661537 | 6.6 | 0.000009 |

\(\:\cdot\:\) | \(\:\cdot\:\) | \(\:\cdot\:\) | \(\:\cdot\:\) | . | . | |

\(\:\cdot\:\) | \(\:\cdot\:\) | . | . | . | . | |

. | . | . | . | . | . | |

11 | 0 | − 9.73 | \(\:5.946876\text{e}-05\) | 8.015258 | 8 | 0.000232 |

11 | 2.272727 | − 6.320909 | 0.001795079 | 6.661537 | 6.6 | 0.000009 |

Step 4: Retraining with Optimized Parameters Using the optimized parameters \(\:{\eta\:}_{\text{o}\text{p}\text{t}}=0.008\) and \(\:{h}_{\text{o}\text{p}\text{t}}=11\), the network is retrained on the data. The new predictions \(\:{\stackrel{\prime }{y}}_{k,\text{new}}\) are generated for extrapolated input values \(\:{x}_{k,\text{new}}\).

Step 5: Visualization and Comparison Finally, the fractal interpolation curve and the neural network predictions are plotted together to visually compare the results. The extrapolated predictions are also included in the plot to demonstrate the network’s capability for generalization beyond the training data.

FDE optimized neural network approximation: R code illustrations and results

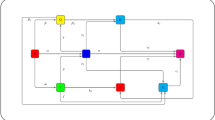

The Fractal Differential Evolution (FDE) algorithm for optimizing the learning rate \(\:\eta\:\) and size \(\:h\) parameters in a neural network follows the same steps as described in Algorithm 1 for optimizing IFS parameters ( \(\:\alpha\:,r\), and \(\:t\) ). The primary differences are in the parameter bounds and interpretation. For the learning rate \(\:\eta\:\), values are bounded within \(\:\left[\text{0.0001,0.1}\right]\), while the network size \(\:h\) (e.g., the number of neurons in a hidden layer) is bounded within an integer range [10, 100]. All steps, including the initialization of \(\:{F}_{i,k}\) and \(\:C{R}_{i,k}\), mutation, crossover, and selection, are applied similarly, with modifications to the fitness function to assess neural network performance. The termination criteria remain the same, based on a predefined fitness threshold or maximum generation count. The R code snippet in the appendix A delineates the FDE optimization and Feedforward Neural Network (NN) used to produce the Figures in 6. The code begins by setting the control parameters for the Differential Evolution (DE) optimization using the DEoptim package. The list () function is used to create a list of control parameters, where itermax \(\:=100\) specifies the maximum number of iterations for the optimization process, NP \(\:=20\) sets the population size, and trace \(\:=\) TRUE enables the output trace to display the optimization progress. The \(\:c\left(\right)\) function is then used to define the lower and upper bounds for the parameters to be optimized, specifically the learning rate and the number of hidden nodes in the neural network. These bounds are stored in the lower_bounds and upper_bounds vectors. The DEoptim () function is called to perform the optimization, where the objective function to be minimized, along with the parameter bounds and control parameters, is passed as arguments. The result of the optimization is stored in de_result, and the optimized parameters are accessed through de_result$optim$bestmem, which are printed to display the best solution found. The optimized parameters for the learning rate and the number of hidden nodes are then extracted from bestmem, with the number of hidden nodes rounded to the nearest integer. These optimized values are used to train the final neural network model using the nnet () function. The nnet () function takes the training data (x_train and y_train), specifies the number of hidden nodes (size \(\:=\) optimized_size), and sets the learning rate decay (decay = optimized_learning_rate). It also specifies that the output should be linear (linout = TRUE) and sets the maximum number of iterations for training (maxit \(\:=1000\) ). The hidden layer uses the sigmoid activation function by default. It provides smooth, nonlinear transformations, ensuring the network can effectively capture the fractal characteristics of the RFC spline while maintaining stability in training. Additionally, its bounded nature ( 0 to 1 ) helps in approximating the constrained behavior of fractal-based interpolations. Finally, the predict () function is used to generate predictions from the trained neural network model, and these predictions are stored in the final_predictions object for further analysis or evaluation. Figures 6 delineates the NN approximated curves for the FDE optimized RFC. Figure 6a refers to the third iteration of the FDE algorithm for optimizing the NN parameters. Similarly, Figs. 6b–d represent the fourth, tenth, and fifteenth iterations, respectively. Also, Table 11 demonstrates the values of the NN parameters across iterations and their corresponding fitness functions obtained through the FDE optimization. Figure 7a represents the Neural Network diagram for the approximated NN predictions of the RFC spline at iteration 3. As shown in Table 11, at iteration 3, the FDE-optimized size \(\:h=11.090035\) is approximated to \(\:h\approx\:11\), and the learning rate \(\:\eta\:=0.004130\). Similarly, Fig. 7b represents the Neural Network diagram for the approximated NN predictions of the RFC spline at iteration 4. As shown in Table 11, at iteration 4, the FDE-optimized size \(\:h=6.938319\) is approximated to \(\:h\approx\:7\), and the learning rate \(\:\eta\:=0.005709\). Similarly, Fig. 7c represents the Neural Network diagram for the approximated NN predictions of the RFC spline at iteration 10. As shown in Table 11, at iteration 10, the FDE-optimized size \(\:h=13.995797\) is approximated to \(\:h\approx\:14\), and the learning rate \(\:\eta\:=0.001036\). Finally, Figure 7d represents the Neural Network diagram for the approximated NN predictions of the RFC spline at iteration 15. As shown in Table 11, at iteration 15, the FDE-optimized size \(\:h=17.687814\) is approximated to \(\:h\approx\:18\), and the learning rate \(\:\eta\:=0.001961\).

NN Diagrams for the RFC approximated NN predictions. The image was generated using R programming (R version 4.3.2, https://cran.r-project.org/). (a) FDE optimized NN predictions for Iteration 3 (b) FDE optimized NN predictions for Iteration 4, (c) FDE optimized NN predictions for Iteration 10, (d) FDE optimized NN predictions for Iteration 15.

Conclusion

The results of this study highlight the effectiveness of the Fractal Differential Evolution (FDE) algorithm in optimizing IFS parameters for Rational Fractal Cubic (RFC) splines. By targeting the optimization of both scaling and shape parameters, the FDE algorithm considerably boosts spline accuracy and computational efficiency. The numerical example provided demonstrates the practical utility of our method, showcasing its success in optimizing the IFS parameters ( \(\:\alpha\:,r\) and \(\:t\) ) of the RFC. We successfully predicted data points by approximating the RFC spline with a feedforward neural network (NN). Using our FDE method, we optimized the NN key parameters, such as the learning rate \(\:\left(\eta\:\right)\) and network size \(\:\left(h\right)\), to enhance the performance of the neural network for extrapolation tasks. As the iteration of the FDE algorithm progresses, the Euclidean distance between the optimized RFC and the optimized NN is reducing, indicating a reasonable approximation of the RFC spline. Future work includes extending the FDE algorithm to optimize parameters for fractal surface interpolation, broadening its applicability beyond univariate fractal models. Additionally, exploring hybrid optimization approaches could further enhance convergence speed and accuracy, particularly in high-dimensional fractal interpolation. Furthermore, future studies may investigate the potential of SA and PSO for IFS parameter optimization, as these methods remain largely unexplored in this domain.

Data availability

All data generated or analysed during this study are included in this published article.

References

Mandelbrot, B. B. & Wheeler, J. A. The fractal geometry of nature. Am. J. Phys. 51 (3), 286–287. https://doi.org/10.1119/1.13295 (1983).

Barnsley, M. F. Fractal functions and interpolation. Constructive Approximation. 2 (1), 303–329. https://doi.org/10.1007/BF01893434 (1986).

Barnsley, M. F. & Harrington, A. N. The calculus of fractal interpolation functions. J. Approximation Theory. 57, 14–34. https://doi.org/10.1016/0021-9045(89)90080-4 (1989).

Chand, A. K. B., Viswanathan, P. & Reddy, K. M. Towards a more general type of univariate constrained interpolation with fractal splines. Fractals 23 (4). https://doi.org/10.1142/S0218348X15500401 (2015).

Chand, A. K. B. & Reddy, K. M. Constrained fractal interpolation functions with variable scaling. Siberian Electron. Math. Rep. 15, 60–73. https://doi.org/10.17377/semi.2018.15.008 (2018).

Katiyar, S. K., Reddy, K. M. & Chand, A. K. B. Constrained Data Visualization Using Rational Bi-cubic Fractal Functions. In: Mathematics and Computing. ICMC 2017. Communications in Computer and Information Science 655, 295–305, Springer, Singapore, (2017). https://doi.org/10.1007/978-981-10-4642-1_23

Reddy, K. M. & Vijender, N. A fractal model for constrained curve and surface interpolation. Eur. Phys. J. Special Top. 232 (7), 1015–1025. https://doi.org/10.1140/epjs/s11734-023-00862-0 (2023).

Chand, A. K. B., Vijender, N. & Navascués, M. A. Shape preservation of scientific data through rational fractal splines. Calcolo 51 (2), 329–362. https://doi.org/10.1007/s10092-013-0088-2 (2014).

Gautam, S. & Katiyar, K. Alpha fractal rational Quintic spline with shape preserving properties. Int. J. Comput. Appl. Math. Comput. Sci. https://doi.org/10.37394/232028.2023.3.13 (2023).

Viswanathan, P. V. & Chand, A. K. B. \(\:\alpha\:\)-fractal rational splines for constrained interpolation. Electron. Trans. Numer. Anal. 41, 420–442 (2014). http://etna.math.kent.edu

Katiyar, S. & Chand, A. A new class of monotone/convex rational fractal function. ArXiv: Dyn. Syst. https://arxiv.org/abs/1802.00315 (2018).

Katiyar, S., Chand, A. K. B. & Kumar, G. A new class of rational cubic spline fractal interpolation function and its constrained aspects. Appl. Math. Comput. 346, 319–335. https://doi.org/10.1016/j.amc.2018.10.036 (2019).

Viswanathan, P., Chand, A. K. B. & Agarwal, R. Preserving convexity through rational cubic spline fractal interpolation function. J. Comput. Appl. Math. 263, 262–276. https://doi.org/10.1016/j.cam.2013.11 (2014).

Gregory, J. & Sarfraz, M. A rational cubic spline with tension. Comput. Aided. Geom. Des. 7, 1–13. https://doi.org/10.1016/0167-8396(90)90017-L (1990).

Feng-Bin, P., Shan-shan, L., Yanjie, W. & Qiang, W. Based on Parameter Equation Function Rational Spline Interpolation with the Shape Preserved. In: 2013 International Conference on Virtual Reality and Visualization, 301–304, (2013). https://doi.org/10.1109/ICVRV. 58 (2013).

Habib, Z., Sarfraz, M. & Sakai, M. Rational cubic spline interpolation with shape control. Computers Graphics. 29, 594–605. https://doi.org/10.1016/J.CAG.2005.05.010 (2005).

Tyada, K., Chand, A. K. B. & Sajid, M. Shape preserving rational cubic trigonometric fractal interpolation functions. Math. Comput. Simul. 190, 866–891. https://doi.org/10.1016/J.MATCOM.2021.06.015 (2021).

Bélisle, E., Huang, Z., Le Digabel, S. & Gheribi, A. E. Evaluation of machine learning interpolation techniques for prediction of physical properties. Comput. Mater. Sci. 98, 170–177. https://doi.org/10.1016/j.commatsci.2014.10.032 (2015).

Fei, X., Wang, J., Ying, S., Hu, Z. & Shi, J. Projective parameter transfer based sparse multiple empirical kernel learning machine for diagnosis of brain disease. Neurocomputing 413, 271–283. (2020).

Jin, X., He, T. & Lin, Y. Multi-objective model selection algorithm for online sequential ultimate learning machine. EURASIP J. Wirel. Commun. Netw. 2019(1), 156. https://doi.org/10.1186/s13638-019-1477-2 (2019).

Shi, B. et al. Prediction of recurrent spontaneous abortion using evolutionary machine learning with joint self-adaptive Sime mould algorithm. Comput. Biol. Med. 148, 105885. https://doi.org/10.1016/j.compbiomed.2022.105885 (2022).

Li, X. F. & Li, X. F. An explicit fractal interpolation algorithm for reconstruction of seismic data. Chin. Phys. Lett. 25 (3), 1157–1159 (2008). http://cpl.iphy.ac.cn/en/article/id/43734

Raubitzek, S. & Neubauer, T. A fractal interpolation approach to improve neural network predictions for difficult time series data. Expert Syst. Appl. 164, 114095. https://doi.org/10.1016/j.eswa.2020.114095 (2021).

Zhai, M. Y. A new method for short-term load forecasting based on fractal interpretation and wavelet analysis. Int. J. Electr. Power Energy Syst. 69, 241–245. https://doi.org/10.1016/j.ijepes.2014.12.087 (2015).

Zhai, M. Y., Fernández-Martínez, J. L. & Rector, J. W. A new fractal interpolation algorithm and its applications to self-affine signal reconstruction. Fractals 19 (3), 287–303. https://doi.org/10.1142/S0218348X11005427 (2011).

Cui, W. et al. Deep multiview module adaption transfer network for Subject-Specific EEG recognition. IEEE Trans. Neural Networks Learn. Syst. 36 (2), 2917–2930. https://doi.org/10.1109/TNNLS.2024.3350085 (2025).

Zhao, H., Qiu, X., Lu, W., Huang, H. & Jin, X. High-quality retinal vessel segmentation using generative adversarial network with a large receptive field. Int. J. Imaging Syst. Technol. 30, 828–842. https://doi.org/10.1002/ima (2020).

Georgioudakis, M. & Plevris, V. A. Comparative Study of Differential Evolution Variants in Constrained Structural Optimization. In: Frontiers in Built Environment, 6, Frontiers Media S.A., (2020). https://doi.org/10.3389/fbuil.2020.00102

He, Q., Wang, L. & Liu, B. Parameter Estimation for chaotic systems by particle swarm optimization. Chaos Solitons Fractals. 34 (2), 654–661. https://doi.org/10.1016/j.chaos.2006.03.079 (2007).

Author information

Authors and Affiliations

Contributions

S.A. performed all experimentation, programming, and prepared the initial draft of the manuscript. M.R.K. supervised the research throughout, reviewed the manuscript, and proofread the final version.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Abdulla, S., Mahipal Reddy, K. Optimizing the neural network and iterated function system parameters for fractal approximation using a modified evolutionary algorithm. Sci Rep 15, 13720 (2025). https://doi.org/10.1038/s41598-025-94821-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-94821-5