Abstract

Contemporary algorithms for enhancing images in low-light conditions prioritize improving brightness and contrast but often neglect improving image details. This study introduces the Swin Transformer-based Light-enhancing Generative Adversarial Network (SwinLightGAN), a novel generative adversarial network (GAN) that effectively enhances image details under low-light conditions. The network integrates a generator model based on a Residual Jumping U-shaped Network (U-Net) architecture for precise local detail extraction with an illumination network enhanced by Shifted Window Transformer (Swin Transformer) technology that captures multi-scale spatial features and global contexts. This combination produces high-quality images that resemble those taken in normal lighting conditions, retaining intricate details. Through adversarial training that employs discriminators operating at multiple scales and a blend of loss functions, SwinLightGAN ensures a seamless distinction between generated and authentic images, ensuring superior enhancement quality. Extensive experimental analysis on multiple unpaired datasets demonstrates SwinLightGAN’s outstanding performance. The system achieves Naturalness Image Quality Evaluator (NIQE) scores ranging from 5.193 to 5.397, Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) scores from 28.879 to 32.040, and Patch-based Image Quality Evaluator (PIQE) scores from 38.280 to 44.479, highlighting its efficacy in delivering high-quality enhancements across diverse metrics.

Similar content being viewed by others

Introduction

Under suboptimal lighting conditions, images frequently exhibit decreased brightness, colour bias, and noise, constraining their utility in critical applications such as mining and aerial photography1,2,3. To address these limitations, the field of low-light image enhancement (LLIE) has emerged as a focal area of research aiming to expand the practical applicability of such images. Initial efforts in LLIE predominantly relied on non-linear pixel transformations to selectively amplify pixel values across different image regions, thus enhancing contrast and illumination distributions4,5,6,7,8. However, these early methods often failed to model the imaging process accurately, leading to colour distortions and structural anomalies. Although they could marginally improve microlight images, they tended to overlook finer details, compromising image clarity.

To avoid these issues, subsequent research introduced enhancement techniques grounded in retinex theory, which decomposes images into illumination and reflection maps. By processing these maps separately, this approach leverages the light-invariant characteristics of scene content to enhance image quality9,10,11,12. Despite notable advancements in enhancing structural visibility in dimly lit areas using retinex-based methods, challenges like colour oversaturation and noise amplification persist.

Deep learning ushered in a new era for LLIE, with methodologies based on algorithms such as RetinexNet13 and KinD14 showing promise in mitigating colour saturation and noise issues. However, the inherent difficulty of acquiring paired data for training in real-world settings has led researchers to explore unsupervised approaches. Generative Adversarial Networks (GANs)15,16,17,18,19,20 have been pivotal in this regard, with models such as EnlightenGAN15 and RetinexGAN20, which employ unsupervised GAN frameworks to effectively address the lack of paired training data and achieve significant improvements in visual quality across various application scenarios. Despite these advancements, deep learning approaches often struggle with detail loss, noise increase, and colour distortion, particularly in scenarios with a limited dynamic range.

In this study, we propose a novel hybrid network structure, SwinLightGAN, which integrates the Residual Jump U-Net Generator (RSUnet) and Swin Transformer Enhanced Illumination Network (SwinTE-HIEN) to achieve simultaneous enhancement of image brightness and sharpness. It is worth noting that although our method is not directly based on a traditional physical model such as the retinex theory, we demonstrated that our method successfully maintains the consistency of the image’s colour distribution when enhancing a low-light image through preliminary comparative analyses of colour histograms. As shown in Fig. 1, The color histograms of the low-light image LOW, normal-light image HIGH, and our enhanced image are compared specific contributions of this study are enumerated as follows:

Comparison of colour histograms of low light image LOW, standard light image High, and Ours enhanced image. In contrast, although SwinLightGAN is not based on a traditional physical enhancement model, such as Retinex theory, its processed images do not deviate significantly from the original regarding colour distribution. Image obtained from the LOL public dataset (Reference13).

-

We propose a novel hybrid network architecture that merges the U-Net residual network (referred to here as Light) with SwinTE-HIEN to refine the low-light image enhancement processes. The Light segment diligently extracts local details, whereas SwinTE-HIEN, which employs transformer technology, captures comprehensive global information and high-level semantics. This synergistic approach elevates image brightness and clarity. It marks a pivotal innovation in the effective amalgamation of multi-scale spatial features globally, thereby markedly enhancing the adaptability and efficacy of low-light image processing.

-

RSUnet introduced an advanced approach for feature integration and image detail enhancement. RSUnet significantly enhances structural integrity and detail realism in the processed images by implementing residual connections and multi-scale feature amalgamation strategies. This component is crucial for maintaining the natural aesthetics of enhanced images while ensuring that the details are vivid and clear.

-

SwinTE-HIEN utilizes Swin Transformer Blocks as its principal feature extraction module. By leveraging the self-attention mechanism, it meticulously mines hidden details and texture information in low-light images, thereby laying a rich semantic groundwork for image reconstruction. Additionally, by integrating multi-scale features via an innovative hierarchical decoding process and employing a hierarchical decomposition strategy, SwinTE-HIEN substantially boosts network flexibility and efficiency in recovering low-light imagery.

-

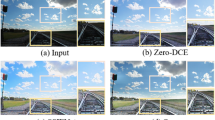

Extensive experimental analyses confirmed that SwinLightGAN outperforms existing methods across a broad spectrum of subjective and objective evaluation metrics. The findings substantiate the effectiveness and innovation of the proposed approach. Specific performance comparisons are presented in Fig. 2.

Related work

This section summarises the existing work relevant to SwinLightGAN, encompassing both traditional and deep learning methodologies for low-light image enhancement, application of Generative Adversarial Networks (GANs), and use of transformer models in natural language processing.

Low light image enhancement

Low-light image enhancement endeavours focus on augmenting the quality of images obtained under suboptimal lighting conditions. This section categorizes low-light image enhancement techniques into three primary groups: distribution-mapping-based methods, retinex-based approaches, and methodologies leveraging deep learning.

-

(1)

Distribution-mapping-based approaches emphasize enhancing low-light images by modifying pixel distribution to improve brightness and sharpness, utilizing techniques such as curve transformation and histogram equalization. Historically prevalent in early image enhancement efforts, these strategies are now increasingly integrated with deep learning models for data augmentation, thereby enhancing the efficacy of deep learning in low-light image enhancement tasks. Arici et al.6 introduced a histogram modification framework that conceptualizes contrast enhancement as an optimization problem centred on minimizing a cost function. This model facilitates flexible adjustment by incorporating specialized penalty terms to regulate the extent of contrast enhancement. In non-uniform illumination scenarios, Veluchamy et al.7 proposed an optimized Bézier curve-based intensity mapping scheme for low-light image enhancement, which achieves significant improvements in brightness and sharpness while preserving image details. Similarly, Bhandari et al.8 introduced a multi-exposure-based optimized contrast and brightness balance method, which effectively enhances image quality in terms of both contrast and luminance consistency. Despite their efficacy in certain contexts, histogram equalization-based low-light enhancement methods face theoretical constraints that limit their performance and prominence compared to the more comprehensive and versatile Retinex theory, especially in processing complex low-light environments.

-

(2)

Approaches based on the retinex model diverge from those founded on distribution mapping, as the construction of the retinex algorithm is predicated on the physical principles of visible light imaging. Retinex theory suggests that an image comprises a reflectance map the stabilizes colours and an illuminance map that signifies the ambient light of the imaging environment. Typically, a low-light image is decomposed, and the illuminance and reflectance images are processed separately and then reassembled to enhance the quality of the low-light image effectively. Fu et al.9 developed a MAP (Maximum A Posteriori Probability)–based retinex model that delineates distinct a priori constraints for different layers. They also proposed a weighted variational model in the logarithmic domain for simultaneous estimation of illumination and reflectance. Guo et al.10 introduced a solution for estimating illumination alone, termed the augmented Lagrange multiplier method (LIME), which constructs illumination maps from the maximum value of each pixel across image channels and refines these maps to accentuate the brightness and clarify the structure. Additionally, to counteract the noise associated with low-light enhancement, Ren et al.11 proposed a retinex model based on low-rank regularization (LR3M), marking the first use of a low-rank prior for retinex decomposition to estimate segmented-smoothed illumination and denoised reflectance, applying it to the enhancement of low-light videos through temporal low-rank constructions. Overall, the Retinex model boasts advantages in colour preservation and dynamic range compression within low-light image enhancement yet grapples with the challenges of noise amplification, high computational complexity, and limitations inherent to model assumptions.

-

(3)

With the rapid advancement of deep learning techniques, low-light image enhancement has witnessed substantial progress. Image enhancement methods that employ deep learning can be categorized into three primary types based on the application of training data: supervised, semi-supervised, and unsupervised. For instance, Chen et al.13 introduced a method based on the retinex model that effectively enhances and decomposes low-light images by training with paired low/normal light images. To mitigate noise arising from low-light enhancement, Zhang et al.14 proposed KinD, rooted in Retinex theory, which integrates denoising in the reflectance decomposition process not only to improve image brightness but also to reduce noise and enhance the overall image quality significantly. Nonetheless, these fully supervised approaches exhibit limited generalisation capabilities, constrained by the need for paired data. In response to these limitations, Yang et al.21 developed a semi-supervised Deep Recurrent Band Network (DRBN), leveraging paired datasets for band representation learning in its initial phase and unpaired datasets for perceptual quality-guided adversarial learning in its subsequent phase, thus effectively elevating image quality. Although semi-supervised methods offer considerable benefits under certain conditions, unsupervised learning remains indispensable, especially given its data availability and cost advantages. To this end, Jiang et al.15 introduced EnlightenGAN. This efficient unsupervised generative adversarial network enhances real-world low-light images across different domains through a global-local discriminator, self-regulated perceptual loss, and an attention mechanism. Guo et al.22 crafted a pixel-by-pixel high-order curve capable of efficiently performing luminance mapping across a wide dynamic range through iterative refinement. Despite its potential in practical applications, unsupervised learning seeks further enhancements in the generalization and adaptability to diverse lighting conditions.

GAN

Generative Adversarial Networks (GANs), initially proposed by Goodfellow et al.23, are currently applied in various domains, including sample data generation, image creation, image restoration, image translation, and text generation. A GAN comprises two critical components: generator (G) and discriminator (D). The generator is responsible for creating fake data, and the discriminator assesses the authenticity of the data. They influence and learn from one another through adversarial learning. Owing to the ability of GANs to generate realistic images, their capacity for unsupervised learning, and their powerful feature learning and adaptability, they excel in processing low-quality or complexly conditioned images, particulary in the absence of paired training data. For example, Ying et al.16 introduced a novel unsupervised low-light image enhancement network called LE-GAN, employing an illumination-aware attention module and identity-invariant loss to enhance visual quality significantly. Cui et al.17proposed a denoising network that combines Generative Adversarial Networks (GANs) with autoencoders (AEs), utilizing GANs to estimate and generate samples of noise distribution features, effectively reducing non-Gaussian noise in guided waves, particulary under low signal-to-noise ratio conditions. Yang et al.18 introduced an integrated learning method for low-light image enhancement called LightingNet, which consists of two core parts: a complementary learning subnet and a Vision Transformer (VIT) low-light enhancement subnet. The VIT subnet aims to learn current data through a full-scale architecture, providing localized high-level features. In contrast, the complementary learning subnet offers global fine-tuning features through transfer learning. These studies underscore the evolution of GAN networks in terms of technology and structure and in addressing practical issues such as image restoration and enhancement, demonstrating their real-world application value. The flexibility and adaptability of GANs position them as key technologies in image processing and generation, both now and in the future19,20.

Transformer

Initially popularized in Natural Language Processing (NLP), transformers have achieved remarkable success in various NLP tasks. Inspired by their significant accomplishments in NLP, researchers have begun to explore the application of Transformers in visual tasks to unlock their potential in image processing. The application of transformers in computer vision has been growing, demonstrating their broad applicability and formidable potential. The Vision Transformer (ViTransformer)24 is a pioneering example of this trend by applying transformers directly to image classification tasks, thereby reducing reliance on traditional CNN structures and demonstrating superior performance in processing large-scale datasets. Following this, Liu et al.25 designed a Swin Transformer, which introduced a hierarchical transformer structure with shifted windows, effectively constraining the computational scope of the self-attention mechanism and making it a versatile backbone for various visual tasks. Additionally, Zamir et al.26 proposed the conformer model, ingeniously blending the local feature extraction capabilities of CNNs with the global information processing advantages of transformers, particularly in image restoration tasks. However, effectively integrating the strengths of visual Transformers and CNNs remains a significant challenge for low-light image enhancement. These advancements not only illustrate the broad applicability of transformers in the field of image processing but also pave the way for future research directions.

Proposed model

In this section, the constituent elements of SwinLightGAN utilised for enhancing images under low-light conditions are delineated. These elements encompass the generator, the discriminator, and a novel hybrid loss function, which facilitates the integration of these components. The architecture of the proposed SwinLightGAN is depicted in Fig. 3.

SwinLightGAN architecture diagram. a Mouse delineates the process where the SwinLight generator network ingests images captured under low-light conditions and produces counterfeit maps that mimic high-quality, normal-light images. Concurrently, the discriminator endeavours to distinguish between authentic normal-light images and the counterfeit maps fabricated by the generator. This differentiation is facilitated by employing hybrid loss functions, including utilising the Vgg16 prediction network for extracting image features, among other techniques, to discern the disparities between genuine images and counterfeit maps. Through this adversarial training regimen, the generator and discriminator engage in a competitive interaction, culminating in the generator’s capability to synthesize images virtually indistinguishable from authentic high-resolution images. Cat presents the structure of the multi-scale discriminator network, highlighting its complexity and sophistication. Image obtained from the ExDark public dataset (Reference27).

Generator SwinLight

This study presents a hybrid network configuration designed to address the challenges of enhancing images captured under low-light conditions. The architecture combines a Residual Jump U-Net Generator (RSUnet) with a Swin-transformer-enhanced illumination network (SwinTE-HIEN). RSUnet specializes in extracting fine-grained, multi-scale spatial features, effectively preserving local detail integrity, while SwinTE-HIEN excels in capturing global contextual information and high-level semantic features through its advanced transformer framework. The outputs from RSUnet and SwinTE-HIEN are combined through a simple feature concatenation strategy, which retains the complementary strengths of both modules.

This fusion ensures that RSUnet’s detailed spatial attributes enhance image textures and structures, while SwinTE-HIEN’s global information refines coherence and semantic consistency across the image. The proposed methodology significantly amplifies image luminance and visual acuity while effectively balancing local and global information. Unlike traditional fusion strategies, which may suffer from limitations such as overly smooth textures or insufficient structural coherence, this approach leverages the strong synergy between the two modules to achieve robust and adaptable image enhancement. A detailed structure of the architecture is illustrated in Fig. 4.

Structure of the generator SwinLight. RSUnet primarily focuses on local detail restoration and residual learning for low-light images, aiming to enhance the image details. On the other hand, SwinTE-HIEN leverages the advantages of the Swin Transformer to provide global contextual information, generating more natural and refined images. The two modules are tightly integrated within the framework, forming a complementary structure, where RSUnet emphasizes local detail enhancement and SwinTE-HIEN focuses on global feature modeling. Together, they collaborate to ultimately optimize low-light images. Image obtained from the ExDark public dataset (Reference27).

RSUnet

To address the task of low-light image enhancement, this study proposes the RSUnet network, which combines the advantages of deep residual networks and focuses on enhancing image textures and structures obscured by low lighting. The essence of RSUnet lies in implementing multi-level and multi-scale feature extraction capabilities. This approach strengthens the flow of information through a series of down-sampling and up-sampling processes, along with residual connectivity, thereby improving the network’s learning capacity and its ability to enhance detail, as illustrated in Fig. 4.

Based on the U-Net architecture, the network incorporates deep residual learning techniques to improve the brightness, clarity, and detail fidelity of the images. Initially, feature extraction was performed on the image using a standard convolutional layer. Subsequently, downsampling operations (including convolution, normalization, activation, and pooling) enable the network to capture key features at multiple scales, progressively decreasing the feature map size and increasing the number of channels to retain the necessary spatial context information.

We introduce residual blocks in the deep feature encoding phase to further strengthen the network’s feature learning capability. This effectively prevents the gradient vanishing problem and ensures the effective preservation and enhancement of the original image details through the jump-joining mechanism. Additionally, during the up-sampling process, the network uses a transposed convolutional layer to recover the image resolution and optimize feature combinations by integrating features from different scales through jump joins, thus enhancing detail capture. Finally, the image is reconstructed through the network’s final convolutional layer, achieving significant enhancement in low-light environments.The multi-scale feature extraction capability of RSUnet ensures that fine-grained details from different spatial resolutions are preserved and enhanced. When integrated with SwinTE-HIEN, the local detail features extracted by RSUnet provide a strong foundation for the global contextual refinement performed by SwinTE-HIEN, creating a seamless fusion of local and global attributes.

SwinTE-HIEN

This study proposes a Swin Transformer-based image enhancement framework called the Swin Transformer Enhanced Illumination Network (SwinTE-HIEN) to recover lost details in dark environments. The SwinTE-HIEN network leverages the Swin Transformer’s high-level feature extraction capabilities through fine-grained hierarchical decomposition and an efficient self-attention mechanism to achieve a deep understanding and significant enhancement of details in low-light images.

SwinTE-HIEN adopts Swin Transformer Blocks as the core feature extraction module and utilizes Window Self-Attention (W-MSA) and Shift Window Self-Attention (SW-MSA) mechanisms to capture the global dependencies and complex spatial structure of the image effectively. Unlike the traditional Multi-head self-attention mechanism, which processes each pixel individually, W-MSA divides the feature map into multiple windows, computing within these smaller windows to reduce computation. SW-MSA was employed to address the lack of information interaction between windows. Starting from Layer1, it shifts the windows such that the window in Layer1+1 contains information from different blocks of the feature map, thereby overcoming the interaction issue between separate windows. The detailed structure is shown in Fig. 5. Each Residual Swin Transformer Block(RSTB) incorporates a residual connection strategy to mitigate information loss during deep network training, enhancing feature representation through layer normalization and a multi-layer perceptron (MLP). To integrate features from multiple RSTB blocks, SwinTE-HIEN integrates multi-scale features from shallow to deep layers through a hierarchical decoding process, as depicted in Fig. 6b Hierarchical decoding approach. Diverging from traditional layer-by-layer up-sampling methods, SwinTE-HIEN employs a hierarchical decomposition that decodes and fuses information from each RSTB layer, finely adjusting the contribution of features at each level to allow flexible feature flow and enhanced capture of fine details and structural information in the images. In the image reconstruction stage, SwinTE-HIEN combines advanced features extracted by a Swin Transformer with layer-by-layer decoding to restore the image brightness, contrast, and sharpness accurately. The SwinTE-HIEN module’s hierarchical decoding structure further enhances the fusion process by progressively integrating features from different layers of the Swin Transformer. This enables a more adaptive balance between global and local information, which is often unattainable using traditional methods. The innovative use of Window Self-Attention (W-MSA) and Shifted Window Self-Attention (SW-MSA) mechanisms provides an additional edge over traditional pixel-based attention mechanisms, ensuring that even subtle structural details are preserved during the fusion process.

SwinTransformer Block. Image obtained from the DICM public dataset (Reference28).

SwinTE-HIEN can be divided into three parts: shallow feature extraction, deep feature extraction, decoding and reconstruction. The detailed structure is shown in Fig. 4. First, the image’s base texture and contour information are obtained by a 3 × 3 Conv, and then the image is segmented into small chunks using patch embedding. Operations such as normalization (LayerNorm), window segmentation, Multi-head Attention Mechanism (MSA), Shifted Window Self-Attention (SW-MSA), and Multi-Layer perceptron (MLP) are carried out by a series of residual RSTB chunks. These processes strengthen the ability of the model to capture the deep features of an image. Finally, the results from each layer of the RSTB are decoded, starting from the deepest level, integrating features from different layers, and reconstructing the image using the Conv and Pixel Shuffle techniques.

Multi-scale discriminator

In image enhancement using Generative Adversarial Networks (GANs), discriminators are crucial in generating low-light images. To improve the quality of the generated images to suppress noise in low-light conditions and reveal more details and textures in dark regions, using a multi-scale discriminator provides comprehensive feedback to the generator, SwinLight. It evaluates the quality of generated images at multiple scales (frequencies), resulting in more realistic, detailed, and less noisy images. The multi-scale discriminator employs average pooled downsampling to create images at different scales, featuring a specific network structure of six 3 × 3 convolutional and sigmoid layers. The detailed structure is shown in Fig. 3.

Loss functions

To achieve end-to-end training of SwinLightGAN and generate normal light images with higher objective evaluation metrics, we employed a hybrid loss function. This function encompasses content loss, multi-scale structural similarity (MS-SSIM) loss, adversarial loss, and perceptual loss. The hybrid loss can be defined as

where \({{w}_{1}}\), \({{w}_{2}}\), \({{w}_{3}}\) and \({{w}_{4}}\) are the weights used to balance the hybrid loss functions, which were 1, 1, 0.002 and 0.1 respectively. content loss is used to extract high-level features through the pre-trained Vgg16 neural network to compute the difference between two images; compared with the traditional loss function, the content loss function pays more attention to the perceived quality of the image, which is more in line with the human eye’s perception of the quality of the image. The content loss function is defined as follows.

where x, y and N are the number of low-light images, normal-light images and advanced features extracted using Vgg16, respectively. \(G(\cdot )\) is the generator and G(x) is the generated normal light image. The Multi-scale Structural Similarity Loss function (MS-SSIM) extends the traditional structural similarity (SSIM) metric to more comprehensively assess the image quality by considering the structural information of the image at multiple scales. The MS-SSIM function is defined as

where M is the number of dimensions; \({{\alpha }_{M}}\),\({{\beta }_{j}}\)and\({{\gamma }_{j}}\) are the parameters used to regulate the importance of different likenesses’s \({{l}_{M}}(G(x),y)\)is the luminance similarity computed at the coarsest scale; and \({{c}_{j}}(G(x),y)\)and\({{s}_{j}}(G(x),y)\)are the contrast and structural similarity computed on the jth scale, respectively. The adversarial loss function extends beyond traditional pixel matching by prompting the neural network to learn the overall distribution and high-level features of the data to generate more visually realistic results. The adversarial loss function is

The discriminator loss function against it is:

Model testing

Datasets

To thoroughly compare and demonstrate the performance of the proposed SwinLightGAN algorithm in low-light enhancement tasks, paired datasets were used to train the network. Additionally, comparisons of the subjective effects were made on both reference and non-reference datasets. One of the paired training datasets used was LOL13. For the test set, we employed the reference datasets LOL13, and SICE29, whereas the no-reference dataset comprises ExDark27, LIME10, MEF30, NPE31, DICM28, DarkFace32, and CVC-ClinicDB33.

Model running environment

In this experiment, we implemented a series of optimization measures to address the Swin transformer model’s training requirements and overfitting problems on small datasets. To enhance the data’s diversity and the model’s generalisation ability, we randomly cropped the image size to 128 × 128 pixels. We introduced random flipping as a data preprocessing technique.

The batch size of the model was set to two during training to accommodate hardware resource constraints. Using the ADAM optimizer, the generator’s and discriminator’s initial learning rate was set to 1e-4. The generator training was scheduled using a progressive warm-up with a cosine annealing learning rate strategy, whereas the discriminator used only the cosine annealing strategy. The initial number of iterations was set to 50, and the model’s performance was evaluated after every five iterations, with the number of iterations adjusted as needed. These measures aim to optimize the training of the Swin transformer model on small datasets to realize its potential in image-processing tasks. The Swin-Light low-light enhancement sub-network model configurations include the embedding diameter set to 60, the depth of each stage set to 1, 6, 8, and 12, and the number of attention heads set to 12. All experiments were conducted on an NVIDIA 3090Ti GPU.These hyperparameters were chosen based on the default settings of the original Swin transformer architecture, ensuring robustness and adaptability across tasks and datasets. Given the widespread application of the Swin transformer in various domains, these settings are expected to generalise well without requiring extensive dataset-specific tuning.

Evaluation metrics

To objectively evaluate the performance of SwinLightGAN from multiple perspectives, we employed various evaluation metrics. For the reference datasets, we used Peak Signal-to-Noise Ratio (PSNR)34, Structural Similarity (SSIM)34, Natural Image Quality Evaluator (NIQE)35, Luminance Order Error (LOE)36, Learning Perceptual Image Block Similarity (LPIPS)37, and Visual Information Fidelity (VIF)38. Additionally, to assess the generalizability of the SwinLightGAN algorithm across different datasets and demonstrate its performance advantages over other low-light enhancement algorithms, we used reference-free datasets, including ExDark, DICM, LIME10, NPE, and MEF, and evaluated them using reference-free metrics such as Natural Image Quality Evaluator (NIQE), Blind/Reference-free Image Spatial Quality Evaluator (BRISQUE)39, and Perception-based Image Quality Evaluator (PIQE)40.

Process of model test

To quantitatively evaluate the performance of the proposed SwinLightGAN in low-light image enhancement tasks, we conducted tests on the LOL dataset. We performed ablation experiments to assess the importance of the generator (G), multi-scale discriminator (Multi-D), and hybrid loss function (loss) in the model. We gained insights into their impact on model performance. Additionally, we compared our proposed model with other state-of-the-art low-light enhancement algorithms, providing evidence that SwinLightGAN outperforms other popular frameworks regarding image quality, such as PSNR, SSIM, and LPIPS. Through these experiments, we validated the effectiveness of SwinLightGAN in preserving image structure and optimizing perceptual quality, further demonstrating its superiority in practical applications.

Results

Ablation experiments

On the LOL dataset, we conducted a series of incremental modification experiments to quantitatively evaluate the impact of individual building blocks on the performance of low-light image enhancement algorithms. The experimental results are presented in Table 1.First, we used SingleGAN as the baseline model and progressively replaced its generator with RSUnet, SwinTE-HIEN, or the combination of both, SwinLight, to explore the contribution of different generator architectures to enhancement performance. Subsequently, we introduced Multi-D as the discriminator module and combined it with the aforementioned generators to evaluate its impact on the quality of image enhancement. In addition, we performed ablation experiments on the loss function, specifically analyzing the effect of adjusting the weight \(w_3\) for \(loss_3\), as well as the addition of \(loss_4\) and its corresponding weight \(w_4\). These experiments aim to comprehensively quantify the contributions of the generator, discriminator, and loss function weight configurations to the overall performance, thereby validating the effectiveness of the proposed method and the necessity of its building blocks.

Table 1 presents the ablation study results of SwinLightGAN on the LOL dataset, quantitatively evaluating the contributions of key components such as the generator architecture, Multi-D discriminator, and loss function configurations. Using SingleGAN as the baseline, replacing its generator with RSUnet or SwinTE-HIEN significantly improved performance, and further combining them into the SwinLight generator yielded even better results. The introduction of the Multi-D discriminator demonstrated a clear improvement in image quality across experiments. Finally, in the loss function configuration experiments, adjusting the weight of \(loss_3\) (SwinLightGAN+\(w_3\hbox {loss}_3\)) significantly enhanced PSNR, SSIM, and LPIPS metrics, while the addition of \(loss_4\) (SwinLightGAN+\(w_3\hbox {loss}_3\)+\(w_4\hbox {loss}_4\)) further improved SSIM and LPIPS, albeit with a slight decrease in PSNR. By integrating the SwinLight generator, Multi-D discriminator, and optimized loss functions, the model achieved its best performance, validating the effectiveness of the proposed method in low-light image enhancement.

To demonstrate the effectiveness of hierarchical decoding, we compared its performance with that of traditional decoding methods using the same test set. As depicted in Fig. 6, the hierarchical decoding method distinguishes itself by incorporating a multi-level feature fusion mechanism, setting it apart from the structure of the traditional method. The experimental results, presented in Table 2, show that the original method achieved an average PSNR of 20.095, SSIM of 0.787, and an LPIPS of 0.124 on the LOL dataset. In stark contrast, hierarchical decoding substantially increased the PSNR to 22.206, enhanced the SSIM to 0.846, and decreased the LPIPS to 0.084, indicating significant improvements in image quality.Similarly, the experimental results on the SICE dataset also demonstrate the advantages of the hierarchical decoding method. Specifically, the original method achieved an average PSNR of 16.507, SSIM of 0.702, and LPIPS of 0.193 on the SICE dataset. In comparison, the hierarchical decoding method improved the PSNR to 17.695, increased the SSIM to 0.748, and reduced the LPIPS to 0.140. These advancements are evidenced not only by quantitative evaluation metrics but also visually through our structural design, further affirming the superiority of hierarchical decoding in feature preservation and image reconstruction quality.

Comparative experiments

To objectively evaluate SwinLightGAN from multiple perspectives, we used several reference evaluation metrics: peak signal-to-noise ratio (PSNR)34, structural similarity (SSIM)34, natural image quality evaluator (NIQE)35, luminance order error (LOE)36, learning perceptual image block similarity (LPIPS)37, and visual information fidelity (VIF)38.

To comprehensively evaluate the performance of existing low-light image enhancement algorithms, we conducted a quantitative comparison of 14 state-of-the-art methods (SRIE12, LIME10, BIMEF5, EnlightenGAN15, KinD14, TBEFN41, MBLLEN42, Zero-DCE22, DRLIE43, RUAS44, StableLLVE45, SCI46, CDEG47, LightenDiffusion48) on the LOLSICE dataset. To ensure fairness, all methods were retrained using the publicly available source codes on the same training and testing datasets. We adopted multiple evaluation metrics, including PSNR, SSIM, NIQE, LOE, LPIPS, MS-SSIM, and VIF, to perform a comprehensive analysis. The quantitative results are summarized in Table 3. As shown, our method (Proposed) achieves outstanding performance on full-reference metrics, with PSNR and SSIM values of 22.206 and 0.846, respectively, outperforming all other methods. Notably, our method achieves the lowest LOE score of 1733.485, demonstrating its capability to preserve natural light distribution in low-light conditions. For no-reference metrics, although the NIQE score of our method is slightly lower than RUAS44 (5.101 vs. 5.073), it achieves the best results in LPIPS (0.084), MS-SSIM (0.899), and VIF (1.023), reflecting superior image quality and perceptual consistency. Compared to Zero-DCE22, our method improves PSNR and SSIM by 24.3% and 10.6%, respectively, and surpasses EnlightenGAN15 and RUAS44 in LOE and LPIPS scores. Overall, as illustrated in Table 3, our method demonstrates exceptional performance among the 14 evaluated algorithms, providing a more effective solution for low-light image enhancement.

Low-light image restoration and detail comparison of different networks on the paired LOL dataset. image obtained from the LOL public dataset (Reference13).

Figure 7 provides a comprehensive visual comparison of 14 state-of-the-art low-light image enhancement algorithms on the LOL dataset. The “LOW” column shows the original low-light input, while the “GT” column represents the ground-truth images.SRIE12 and BIMEF5 fail to recover dark details effectively, leaving significant visual information lost. LIME10, EnlightenGAN15, and KinD14 improve brightness but introduce noise and colour artifacts, with EnlightenGAN15 producing oversaturation and unnatural tones. TBEFN41 and MBLLEN42 offer enhanced brightness but suffer from over-smoothing and detail distortion. Zero-DCE22 brightens the images but amplifies noise and struggles with shadow recovery. DRLIE43, SCI46, and StableLLVE45 exhibit overexposure and tonal inconsistencies, reducing visual naturalness. RUAS44 and CDEG47 enhance global contrast but are prone to overexposure, affecting overall coherence. LightenDiffusion48 improves brightness and sharpness but introduces slight colour distortions in certain areas. In contrast, the Proposed method achieves superior results by effectively recovering dark details, controlling noise, and preserving natural tones. It delivers enhanced brightness and sharpness while maintaining a balance between detail recovery and artifact suppression, producing results visually closer to the ground truth than other algorithms.

Low-light image restoration and detail comparison of different networks on the paired SICE dataset. image obtained from the SICE public dataset (Reference29).

A global and local visual comparison of the algorithms on the SICE dataset is presented in Fig. 8. Both SRIE12 and BIMEF5 algorithms demonstrate limitations in luminance enhancement, particularly in failing to recover dark details, which results in the loss of important visual information. Methods such as LIME10, EnlightenGAN15, KinD14, and TBEFN41 have made progress in color saturation enhancement. However, this is generally accompanied by increased noise levels and inadequate restoration of dark details. MBLLEN42 and StableLLVE45 smooth image details, which can reduce noise in some contexts but may also lead to over-smoothing and a loss of detail, reflecting insufficient image sharpening. Zero-DCE22 and SCI46 fail to strike a good balance between brightness and noise control, resulting in compromised image quality and detailed recovery. DRLIE43 and RUAS44 tend to overexpose, showing a tonal deviation from the natural state, and despite some improvements in brightness, the overall natural feel of the image is adversely affected. Comparatively, SwinLightGAN showcases its unique advantages in several aspects. It excels in recovering dark details, controlling noise, and enhancing the overall image quality compared to the methods mentioned above. Despite slight colour deviations, the SwinLightGAN-processed image visually approached a real scene with a high dynamic range, thereby demonstrating excellent brightness recovery and detail sharpness. In summary, the SwinLightGAN algorithm proved its superiority in enhancing the quality of low-light images, especially in recovering fine shadows and details typical of real-world scenes, while also preserving overall image naturalness and visual coherence. Despite enhancing brightness and colour saturation, the other algorithms fall short in terms of noise control and detail retention.

To further demonstrate the generalizability of the SwinLightGAN algorithm across various datasets and its performance advantages over other low-light image enhancement algorithms, this study employed the reference-free datasets ExDark, DICM, LIME10, NPE, and MEF. We conducted experiments using reference-free image evaluation metrics: Natural Image Quality Evaluator(NIQE), Blind/Reference-free Image Spatial Quality Evaluator(BRISQUE)39, and Perception Based Image Quality Evaluator(PIQE)40. The results presented in Table 4 provide a comprehensive comparison with other algorithms.

When analyzed across multiple reference-free image quality assessment metrics, the SwinLightGAN algorithm demonstrated superior performance across datasets. This was particularly notable in terms of the NIQE scores on the ExDark dataset, where it significantly outperformed the other methods. This indicates that the enhanced images are closer to real-world visual perception in terms of naturalness. Moreover, the relatively low BRISQUE and PIQE scores further highlight the advantages of SwinLightGAN in terms of spatial and perceptual image quality. These results from the quantitative metrics reinforce SwinLightGAN’s effectiveness in low-light image enhancement tasks, revealing its superior performance in enhancing image naturalness, clarity, and overall visual quality.

Global and local visual comparisons of the algorithms on the no-reference datasets ExDark, DICM, LIME10, NPE, and MEF are shown in Fig. 9. The DRLIE43 and StableLLVE45 algorithms significantly over-adjust the luminance, leading to a general loss of detail. This is particularly pronounced in the perception of highlights and mid-tone regions, which are crucial for maintaining the image quality. The RUAS44 and SCI46 algorithms tend to overexpose localized areas, indicating room for improvement in the overall processing. The KinD14, RUAS44, and SCI46 algorithms fall short in restoring detail in darker areas, underscoring the importance of balancing detail retention with luminance enhancement. The ghosting issue observed in the TBEFN41 and MBLLEN42 algorithm results may reflect their limitations in processing high-dynamic-range scenes. Although the EnlightenGAN15 and Zero-DCE22 algorithms provide reasonable luminance enhancement, they underperform noise suppression, which is a critical aspect when evaluating the utility of these algorithms. MBLLEN42 showed better performance in restoring details in dark areas, highlighting the potential of these algorithms in this regard. SwinLightGAN demonstrated superior performance across all evaluation aspects, particularly in fully recovering details in dark areas. Despite the slight ambiguities, it stood out regarding the combined enhancement effect. The accurate recovery of details, especially in dark places, is crucial for the integrity of visual information and the maintenance of perceptual quality, confirming its advancement in maintaining structural integrity and enhancing visual perceptual quality. This provides an essential technical reference for developing low-light image enhancement algorithms.

To comprehensively evaluate the performance of low-light image enhancement methods, we conducted a comparative analysis from both an overall performance perspective and a transformer-based approach perspective. As shown in Table 5, different methods exhibit significant variations in FLOPs, parameter size, and inference speed. Among them, EnlightenGAN15 and Retinexformer51 have higher computational costs and parameter sizes but demonstrate superior performance in image enhancement quality and handling complex scenarios. In contrast, SCI46 and RUAS44 achieve extremely low FLOPs and parameter sizes, making them suitable for resource-constrained environments, although their inference speed does not show a significant advantage. The proposed method strikes a good balance between parameter size and inference speed while achieving competitive overall performance. For transformer-based methods (as shown in Table 6),the FLOPs of the proposed method increase linearly with the resolution, showcasing strong computational robustness for high-resolution image processing. While LYT-Net52 and Retinexformer51 achieve lower FLOPs, the proposed method leverages stronger global modeling capabilities and detail enhancement effects, leading to significant improvements in image quality for high-resolution tasks. Therefore, the proposed method achieves a favorable balance between computational efficiency and enhancement quality, making it suitable for conventional low-light image enhancement scenarios and demonstrating outstanding performance among transformer-based methods.

Visual comparison of SwinLightGAN combined with different image enhancement techniques. Image obtained from the ExDark public dataset (Reference27).

To validate the effectiveness of SwinLightGAN combined with other image enhancement techniques under extreme low-light conditions, we compared the visual results of various combinations, as shown in Fig. 10. These techniques include BM3D denoising, gamma correction, Gaussian blur, histogram equalization (HE), and Deep White-Balance (DWB54. The experimental results show that BM3D performs well in noise reduction but significantly blurs image details; gamma correction enhances brightness and contrast but causes overexposure in some areas; Gaussian blur reduces noise but makes the overall image excessively blurry; histogram equalization significantly improves image clarity and contrast, resulting in a much sharper visual effect; and DWB54 effectively corrects coloUr distortion, making the overall coloUr appear more natural. In conclusion, the combination of SwinLightGAN with histogram equalization (HE) achieves the best results in terms of image clarity and contrast, while DWB54 offers a slight advantage in coloUr correction, making them effective combinations for improving image quality under extreme low-light conditions.

Additional experiments: performance of low-light enhancement in different tasks

In this section, the applicability and effectiveness of the proposed image enhancement algorithm are further validated in two distinct tasks: nighttime object detection and medical imaging enhancement. The performance of low-light enhancement is evaluated in these practical scenarios to assess its potential utility and applicability in real-world applications.

Results of object detection on images enhanced by different algorithms. Image obtained from the DarkFace public dataset (Reference32).

Enhanced colonic polyp imaging with various algorithms. Image obtained from the CVC-ClinicDB public dataset under a CC BY license (Reference33).

As illustrated in Fig. 11, state-of-the-art low-light enhancement algorithms were applied to the task of nighttime object detection. The YOLOv555 detection framework was utilized to comprehensively evaluate the performance of these enhancement methods. The results indicate that the proposed method significantly enhances the visibility of images captured under low-light conditions by improving detail sharpness and suppressing noise. In comparison to other algorithms, natural colour consistency was more effectively preserved, and a substantial improvement in detection accuracy was observed. These findings underscore the advantages of the proposed method in low-light object detection, where accurate identification of targets is critical.

In addition, the proposed enhancement algorithm was validated in the context of medical imaging, as shown in Fig. 12, using the CVC-ClinicDB33 dataset. Unlike the object detection task, the primary objective in this scenario is to improve the visual quality of images to better support clinical diagnosis and analysis. The proposed method demonstrated exceptional performance by enhancing detail sharpness, improving contrast, and reducing noise in medical images. Furthermore, the enhanced images maintained natural colour fidelity and minimized distortions, thereby enabling high-quality visualization of colonic polyps. These results suggest that the proposed algorithm improves not only the visual clarity of medical images but also the reliability of imaging for clinical diagnosis.

Conclusions and future work

In response to the problem that existing low-light image enhancement (LLIE) algorithms primarily focus on brightness and contrast, often at the expense of image detail, this study introduces SwinLightGAN. This algorithm integrates a deep learning framework with sophisticated image processing techniques. SwinLightGAN combines the robust feature extraction capabilities of the Residual Jump U-Net network with the Swin Transformer Enhanced Illumination Network (SwinTE-HIEN) to accurately extract multi-scale spatial features and capture comprehensive global contextual information. Utilizing a multi-scale discriminator and a hybrid loss function, the algorithm optimizes the adversarial training process, ensuring high consistency in visual quality between the enhanced images and their ground truth counterparts. Extensive experimental evaluations across multiple datasets showcase SwinLightGAN’s industry-leading performance, particularly in reference-free image quality assessment metrics such as NIQE, BRISQUE, and PIQE, which set a new standard for image enhancement quality and structural fidelity. Future work will focus on the lightweight design of SwinLightGAN to improve computational efficiency and resource utilization, making it more practical for real-world applications. Additionally, we aim to extend the applicability of SwinLightGAN to more diverse low-light scenarios, such as nighttime video enhancement and challenging medical imaging tasks, ensuring its versatility across a wider range of use cases.

Data availability

The data used to support the findings of this study are available from the corresponding author upon request.

References

Li, C. et al. Low-light image and video enhancement using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 44, 9396–9416 (2021).

Wang, M., Zhang, H., Li, J. & Zhang, C. Deep neural network-based image enhancement algorithm for low-illumination images underground coal mines. Coal Sci. Technol. 51, 231–241 (2023).

Zong, S., Wang, C. & Zhou, Y. ‘low-light aerial image enhancement method based on retinex and multi-attention mechanism. Electron. Opt. Control. 30, 23–28 (2023).

Singh, K., Kapoor, R. & Sinha, S. K. Enhancement of low exposure images via recursive histogram equalization algorithms. Optik 126, 2619–2625 (2015).

Ying, Z., Li, G. & Gao, W. A bio-inspired multi-exposure fusion framework for low-light image enhancement. The authors declare no competing interests. (2017).

Arici, T., Dikbas, S. & Altunbasak, Y. A histogram modification framework and its application for image contrast enhancement. IEEE Trans. Image Process. 18, 1921–1935 (2009).

Veluchamy, M., Bhandari, A. K. & Subramani, B. Optimized bezier curve based intensity mapping scheme for low light image enhancement. IEEE Trans. Emerg. Top. Comput. Intell. 6, 602–612 (2021).

Bhandari, A. K., Subramani, B. & Veluchamy, M. Multi-exposure optimized contrast and brightness balance color image enhancement. Dig. Signal Process. 123, 103406 (2022).

Fu, X. et al. A probabilistic method for image enhancement with simultaneous illumination and reflectance estimation. IEEE Trans. Image Process. 24, 4965–4977 (2015).

Guo, X., Li, Y. & Ling, H. Lime: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 26, 982–993 (2016).

Ren, X., Yang, W., Cheng, W.-H. & Liu, J. Lr3m: Robust low-light enhancement via low-rank regularized retinex model. IEEE Trans. Image Process. 29, 5862–5876 (2020).

Fu, X., Zeng, D., Huang, Y., Zhang, X.-P. & Ding, X. A weighted variational model for simultaneous reflectance and illumination estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2782–2790 (2016).

Wei, C., Wang, W., Yang, W. & Liu, J. Deep retinex decomposition for low-light enhancement. http://arxiv.org/abs/1808.04560 (2018).

Zhang, Y., Zhang, J. & Guo, X. Kindling the darkness: A practical low-light image enhancer. In Proceedings of the 27th ACM International Conference on Multimedia, 1632–1640 (2019).

Jiang, Y. et al. Enlightengan: Deep light enhancement without paired supervision. IEEE Trans. Image Process. 30, 2340–2349 (2021).

Fu, Y., Hong, Y., Chen, L. & You, S. Le-gan: Unsupervised low-light image enhancement network using attention module and identity invariant loss. Knowl.-Based Syst. 240, 108010 (2022).

Cui, X., Li, D., Li, Z. & Ou, J. A gan noise modeling based blind denoising method for guided waves. Measurement 188, 110596 (2022).

Yang, S., Zhou, D., Cao, J. & Guo, Y. Lightingnet: An integrated learning method for low-light image enhancement. IEEE Trans. Comput. Imaging 9, 29–42 (2023).

Ma, T. et al. Retinexgan: Unsupervised low-light enhancement with two-layer convolutional decomposition networks. IEEE Access 9, 56539–56550 (2021).

Wang, T.-C. et al. High-resolution image synthesis and semantic manipulation with conditional gans. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 8798–8807 (2018).

Yang, W., Wang, S., Fang, Y., Wang, Y. & Liu, J. From fidelity to perceptual quality: A semi-supervised approach for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 3063–3072 (2020).

Guo, C. et al. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1780–1789 (2020).

Goodfellow, I. et al. Generative adversarial nets. Adv. Neural Inf. Process. Syst.27 (2014).

Dosovitskiy, A. et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv:2010.11929 (2020).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 10012–10022 (2021).

Peng, Z. et al. Conformer: Local features coupling global representations for visual recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 367–376 (2021).

Loh, Y. P. & Chan, C. S. Getting to know low-light images with the exclusively dark dataset. Comput. Vis. Image Underst. 178, 30–42 (2019).

Lee, C., Lee, C. & Kim, C.-S. Contrast enhancement based on layered difference representation of 2d histograms. IEEE Trans. Image Process. 22, 5372–5384 (2013).

Cai, J., Gu, S. & Zhang, L. Learning a deep single image contrast enhancer from multi-exposure images. IEEE Trans. Image Process. 27, 2049–2062 (2018).

Ma, K., Zeng, K. & Wang, Z. Perceptual quality assessment for multi-exposure image fusion. IEEE Trans. Image Process. 24, 3345–3356 (2015).

Wang, S., Zheng, J., Hu, H.-M. & Li, B. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans. Image Process. 22, 3538–3548 (2013).

Yang, W. et al. Advancing image understanding in poor visibility environments: A collective benchmark study. IEEE Trans. Image Process. 29, 5737–5752. https://doi.org/10.1109/TIP.2020.2981922 (2020).

Bernal, J. et al. Wm-dova maps for accurate polyp highlighting in colonoscopy: Validation vs saliency maps from physicians. Comput. Med. Imaging Graph. 43, 99–111 (2015).

Wang, Z., Bovik, A. C., Sheikh, H. R. & Simoncelli, E. P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612 (2004).

Mittal, A., Soundararajan, R. & Bovik, A. C. Making a “completely blind’’ image quality analyzer. IEEE Signal Process. Lett. 20, 209–212 (2012).

Li, S., Jin, W., Li, L. & Li, Y. An improved contrast enhancement algorithm for infrared images based on adaptive double plateaus histogram equalization. Infrared Phys. Technol. 90, 164–174 (2018).

Zhang, R., Isola, P., Efros, A. A., Shechtman, E. & Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 586–595 (2018).

Sheikh, H. R. & Bovik, A. C. Image information and visual quality. IEEE Trans. Image Process. 15, 430–444 (2006).

Venkatanath, N., Praneeth, D., Bh, M. C., Channappayya, S. S. & Medasani, S. S. Blind image quality evaluation using perception based features. In 2015 Twenty First National Conference on Communications (NCC), 1–6 (IEEE, 2015).

Mittal, A., Moorthy, A. K. & Bovik, A. C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 21, 4695–4708 (2012).

Lu, K. & Zhang, L. Tbefn: A two-branch exposure-fusion network for low-light image enhancement. IEEE Trans. Multimedia 23, 4093–4105 (2020).

Lv, F., Lu, F., Wu, J. & Lim, C. Mbllen: Low-light image/video enhancement using cnns. In BMVC, vol. 220 (Northumbria University, 2018).

Tang, L., Ma, J., Zhang, H. & Guo, X. Drlie: Flexible low-light image enhancement via disentangled representations. IEEE Trans. Neural Netw. Learn. Syst. 35, 2694–2707 (2022).

Liu, R., Ma, L., Zhang, J., Fan, X. & Luo, Z. Retinex-inspired unrolling with cooperative prior architecture search for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 10561–10570 (2021).

Zhang, F., Li, Y., You, S. & Fu, Y. Learning temporal consistency for low light video enhancement from single images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 4967–4976 (2021).

Ma, L., Ma, T., Liu, R., Fan, X. & Luo, Z. Toward fast, flexible, and robust low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5637–5646 (2022).

Lei, X., Fei, Z., Zhou, W., Zhou, H. & Fei, M. Low-light image enhancement based on cell vibration energy model and lightness difference. Comput. Vis. Image Underst. 247, 104079 (2024).

Jiang, H., Luo, A., Liu, X., Han, S. & Liu, S. Lightendiffusion: Unsupervised low-light image enhancement with latent-retinex diffusion models. In European Conference on Computer Vision, 161–179 (Springer, 2025).

Zhang, Y., Di, X., Zhang, B. & Wang, C. Self-supervised image enhancement network: Training with low light images only. http://arxiv.org/abs/2002.11300 (2020).

Wu, W. et al. Uretinex-net: Retinex-based deep unfolding network for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5901–5910 (2022).

Cai, Y. et al. Retinexformer: One-stage retinex-based transformer for low-light image enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 12504–12513 (2023).

Brateanu, A., Balmez, R., Avram, A. & Orhei, C. Lyt-net: Lightweight yuv transformer-based network for low-light image enhancement. http://arxiv.org/abs/2401.15204 (2024).

Yi, X., Wang, Y., Zhao, Y., Yan, J. & Zhang, W. Llieformer: A low-light image enhancement transformer network with a degraded restoration model. In 2023 IEEE International Conference on Image Processing (ICIP), 1195–1199 (IEEE, 2023).

Afifi, M. & Brown, M. S. Deep white-balance editing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2020).

Wu, T.-H., Wang, T.-W. & Liu, Y.-Q. Real-time vehicle and distance detection based on improved yolo v5 network. In 2021 3rd World Symposium on Artificial Intelligence (WSAI), 24–28 (IEEE, 2021).

Acknowledgements

This work was supported in part by the Jiangsu Graduate Practical Innovation Project under Grant SJCX24_2152, in part by the Natural Science Foundation of China under Grant 62301473, in part by the Top-notch Academic Programs Project of Jiangsu Higher Education Institutions under Grant 19KJA110002, in part by the Yancheng Institute of Technology Postgraduate Research and Practice Innovation Programme Project under Grant SJCX23_XZ030.

Author information

Authors and Affiliations

Contributions

M.H. conceived the experiments, managed the data, conducted formal analysis, investigation, methodology design, software development, validation, and visualization, wrote the original draft and reviewed and edited the manuscript, and managed the project. R.W. provided funding, resources, and supervision. Y.W. conducted formal analysis. M.Z. performed visualization. F.Z. handled software development and supervision. X.B. contributed to conceptualization. F.L. carried out formal analysis. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

He, M., Wang, R., Zhang, M. et al. SwinLightGAN a study of low-light image enhancement algorithms using depth residuals and transformer techniques. Sci Rep 15, 12151 (2025). https://doi.org/10.1038/s41598-025-95329-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-95329-8