Abstract

Monitoring and tracking of cell motion is a key component for understanding disease mechanisms and evaluating the effects of treatments. Time-lapse optical microscopy has been commonly employed for studying cell cycle phases. However, usual manual cell tracking is very time consuming and has poor reproducibility. Automated cell tracking techniques are challenged by variability of cell region intensity distributions and resolution limitations. In this work, we introduce a comprehensive cell segmentation and tracking methodology. A key contribution of this work is that it employs multi-scale space-time interest point detection and characterization for automatic scale selection and cell segmentation. Another contribution is the use of a neural network with class prototype balancing for detection of cell regions. This work also offers a structured mathematical framework that uses graphs for track generation and cell event detection. We evaluated cell segmentation, detection, and tracking performance of our method on time-lapse sequences of the Cell Tracking Challenge (CTC). We also compared our technique to top performing techniques from CTC. Performance evaluation results indicate that the proposed methodology is competitive with these techniques, and that it generalizes very well to diverse cell types and sizes, and multiple imaging techniques. The code of our method is publicly available on https://github.com/smakrogi/CSTQ_Pub/, (release v.3.2).

Similar content being viewed by others

Introduction

Monitoring and tracking of cell motion is a key component for understanding disease mechanisms and evaluating treatments1,2. Cell migration, i.e., cell motion that is accompanied by morphological changes, and mitosis, i.e., cell division into two daughters, occur during development, wound healing, and immune responses. In cancer, for example, cell migration is critical in almost all stages of metastasis. Quantifying the progression of cell cycles enables us to understand the effect of drug treatments. In addition, tissue engineering and stem cell manufacturing require quantification of stem cell proliferation in vitro3,4,5.

Time-lapse optical microscopy techniques have been widely used for studying cell migration. The most common techniques toward this end are fluorescence microscopy, phase contrast (PhC), and differential interference contrast (DIC). These technologies produce image sequences frequently in the order of terabytes with a large number of frames, and dense or sparse cell environments depending on the assay. Many labs use manual cell tracking techniques. Such techniques require a significant amount of time and are characterized by poor reproducibility. Hence, there is a clear need for the development of automated, reproducible cell segmentation and tracking methods.

Automated cell tracking mainly entails finding and segmenting all cell occurrences in a time-lapse sequence and defining temporal relationships. This task is complicated by the following factors: (1) variability of cell shapes, sizes, and intensity distributions, (2) variability of cell contrast produced by different microscopy techniques, (3) temporal and spatial resolution limitations, and varying contrast to noise ratio even within a frame of the sequence. Therefore the development of generalizable computer-based tools for cell tracking is a popular open problem in the research community5,6,7,8. Recent software tools implement segmentation and tracking stages9,10,11 but were mostly developed for specific microscopy techniques such as fluorescence10, or phase contrast microscopy11. Due to the significance and popularity of the cell tracking problem, computer-based cell segmentation and tracking competitions, such as the Cell Tracking Challenge (CTC)6,12, have emerged to provide a common basis for performance comparisons. Furthermore, numerous techniques have been published to tackle cell segmentation, detection and tracking9,13,14,15,16,17,18,19,20,21.

In this work, we propose a methodology for generalizable cell segmentation and tracking that can be applied to multiple types of cells and microscopy imaging techniques, that we denote as CSTQ (Cell Segmentation Tracking and Quantification). A frequent pitfall in segmentation of microscopy sequences is the unpredictable change of levels of contrast and noise between different sequences and even from frame-to-frame within a sequence. To address this key issue, we aim to identify the optimal scale for cell detection and segmentation using multi-scale space-time interest point detectors. Analysis of space, time, and space-time interest points in a multi-scale hierarchical strategy has yielded promising results for multiple computer vision tasks, such as segmentation and object detection22,23,24,25,26,27,28. Here we propose to use these detectors (i) to select an optimal scale for each frame, (ii) to identify interest points on the cells, and (iii) to guide segmentation of cell boundaries. We also introduce a multi-layer neural network to detect the cell regions and complete the cell segmentation stage. Cell detection classes are frequently imbalanced, as there are a lot more noisy background regions than cell regions. This imbalance introduces classification bias. To address this issue, we propose to use the method of Density-Based Spatial Clustering of Applications with Noise (DBSCAN)29,30,31 before training, to identify the main cluster prototypes in the feature space and resample the training samples. DBSCAN is an unsupervised nonparametric technique that has also been previously proposed for 3D cell separation9, and in a U-Net boosting training strategy for brain tumor segmentation in MRI32. In the cell tracking stage, we use a graph-based technique to identify cell migration and cell mitosis events. First, we employ optical flow-based motion compensation and cell matching. Next, we form cell tracklets by linking cell instances across frames, and we identify cell migration and mitosis events to complete the tracking stage.

A key contribution of the proposed technique is that it incorporates the concept of space-time iterest point detection and characterization into automatic scale selection, segmentation, and scale detection. An additional strength is the use of a machine learning technique with class prototype balancing for cell detection. Furthermore, our method offers a well-defined and structured mathematical framework that uses graphs for track generation and cell event detection. We evaluated the segmentation, detection and tracking performance of our method on four fluorescence and two phase contrast time-lapse sequences of the Cell Tracking Challenge. In addition, we compared our technique to top performing techniques from CTC. The performance evaluation results indicate that the proposed methodology is competitive with these techniques, and it generalizes very well to diverse cell types and sizes, and diverse spatial and temporal resolutions.

Methods

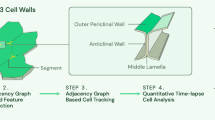

In this section we detail the two main components of our framework: cell segmentation and detection, and cell tracking.

Cell segmentation and detection

We display the main stages for cell segmentation and detection in Fig. 1. We describe each of these stages next, with emphasis on multi-scale interest point detection for automated scale selection and segmentation, and neural network-based foreground/background separation for cell detection.

Multi-scale cell interest point detection and segmentation

Spatio-temporal anisotropic diffusion This filter is applied by solving a system of three coupled partial differential equations (PDEs), each resembling the diffusion equation with anisotropic diffusion33,34. This system is solved for each frame at time t and its direct temporal neighbors \(\tau = \{ t - 1, t, t + 1 \}\):

Here, x and y are the spatial indices of the pixels, and s is the scale index. This stage generates multi-scale diffused frame maps. The diffused frame maps are utilized for spatio-temporal feature detection, segmentation, and foreground/background separation.

Spatio-temporal Interest Point (Feature) Detectors The goal is to detect corners, blob structures and other feature types in the spatio-temporal domain22,27. Earlier techniques have used Laplacian-of-Gaussian blob detectors in a single scale to detect and count cell nuclei.35,36. We expect that the use of non-linear diffusion models will enable the detection of non-spherical features that may correspond to non-circular cell shapes and how they evolve in multiple scales. We also estimate motion displacement vector fields of the moving regions37,38,39,40 and add them to the feature set for machine learning-based cell detection.

Spatio-temporal Moments: These are the spatio-temporal second moments22 computed by pairwise products of the first order derivatives of the pixel intensities as an extension of Harris corner detector. This approach computes the structure tensor

where \(w(\cdot ,\sigma _s^2,\sigma _t^2)\) denotes a smoothing kernel with integrating factors \(\sigma _s^2,\sigma _t^2\) for the spatial and time coordinates, respectively. The points of interest are then identified by the Harris corner detection criterion

Spatio-temporal Hessian: This approach computes the Hessian matrix H composed by the second order derivatives of the pixel intensities in the space-time domain

It measures the strength of the interest points using the determinant magnitude, \(S(x,y,t) = \vert det(H(x,y,t)) \vert\).

Spatial Hessian on Temporal Derivative: This approach computes the spatial Hessian matrix on first-order temporal derivatives \(L_t\) that we denote by \(H_t\). It measures the strength the interest points using the determinant \(S(x,y,t) = \vert det(H_t(x,y,t)) \vert .\)

Motion map computation and cell deformation We estimate the motion of each cell in the prior frame by the Combined Local/Global Optical Flow (CLGOF) technique37,38,39,40. We use the computed motion vector field as a region descriptor for cell detection, and to warp the cells of each prior frame onto the cells of each processed frame.

Automatic scale selection in spatio-temporal domain In this stage, we aim to identify the image scale that preserves meaningful cell visual content while suppressing the noise. For each frame, we develop a stack of multi-scale feature descriptors in the spatio-temporal domain. The feature loci are defined by regional maxima on each scale. We identify the optimal scale as the one that minimizes the number of noisy features that should diminish rapidly in the initial scales, while preserving the features of cells that may sustain longer along the scale space continuum. To this end, we analyze the second order derivatives of features across multiple scales similarly to approaches applied to natural video sequences27. First, we normalize the feature vectors to be able to automatically choose the diffused map with best feature descriptors. Next, we compute the second-order derivative of the spatio-temporal feature vectors to determine a decision criterion for scale selection. Then, the selected scale diffused map along with the regional maxima drive the subsequent cell segmentation and detection stages. Figure 2 illustrates an example of scale generation, keypoint computation, and scale selection.

Watershed and interest point-based segmentation This stage generates markers to drive the watershed segmentation by fusing the regional interest point feature maxima with the regional minima of the edge map computed on the selected diffused map. First, we invert the stochastic map produced by Parzen density estimation to form regions separated by spatio-temporal discontinuities. Then, we fuse the feature maxima with the density map and produce watershed superpixels.

Machine learning-based cell detection

Here we introduce a model that can classify the watershed superpixels into cells (foreground) and non-cell (background) classes. We employed a fully connected neural network to perform this classification. We trained the network on static and dynamic descriptors formed by the regional averages and variances of diffused map intensities, spatio-temporal descriptors, motion displacement vector magnitudes, spatio-temporal intensity gradient magnitudes, and regional areas. The training vectors are designed to enable the model to learn foreground pixels for cells with irregular shapes, variable feature magnitudes and rates of motion, and varying contrast to noise ratios.

One difficulty in effective training of this machine learning model is the class imbalance between cell and non-cell regions as the class of non-cells typically has many more samples than the cell class. This imbalance frequently leads to classification bias. In this work, we propose to use DBSCAN (Density-Based Spatial Clustering of Applications with Noise)29,30,31 to identify the main cluster prototypes formed in the feature space by the training samples. We measure the cluster sizes (or cardinalities) and perform balancing of the cluster sizes by undersampling the cluster with the most elements. This unsupervised learning stage balances class prototypes to improve neural network training.

The main contributions of DBSCAN are cluster modeling and a clustering algorithm. It is based on the assumption that clusters correspond to areas of high density that are separated by areas of lower density. The data points are classified into core points, border points, and noise. It performs nonparametric density estimation by computing the number of points within the \(\epsilon\)-neighborhood of each query point. DBSCAN effectively computes the transitive closure of an equivalence relation. It can identify clusters of arbitrary shapes and sizes, while being less sensitive to noise and outliers.

To balance the cluster cardinalities in the feature space for effective training of the cell detection network without overfitting, we under-sample the most populous cluster to equalize its size with the size of the second most populous cluster. This operation reduces class imbalance in the training data without changing the structure of the data in the feature space. In DBSCAN we used Mahalanobis distances for improved modeling of arbitrary shapes of clusters.

Next, we train the neural network model on the balanced dataset produced by the previous step. Then, employed a network with layer structure of \(9 \times 12 \times 6 \times 2\) nodes. The fully connected layers are followed by RELU activation. The first layer takes as input the regional feature vectors. The network predicts if the input region descriptor corresponds to a cell, or a non-cell region. We employ the cross entropy loss function for training.

Cell boundary refinement and cell cluster separation

In this stage, we aim to refine the cell boundaries detected from the previous stage of foreground/background separation. We employ a region-based energy minimizing level-set model with a local clustering criterion to delineate the cell region. Next, we identify and separate adherent (or clustered) cells. We compute the solidity to identify the clustered cells, and then we compute the signed distance map of the identified regions. The watershed lines of the distance map determine the boundaries that divide the cells.

Cell tracking

A cell tracking technique should effectively manage key cell events in a sequence, such as cell migration (cell motion that is accompanied by morphological changes), cell mitosis (division into two daughters), cell disappearance (leaving the field of view or collision), cell apoptosis/necrosis (cell death), new cells entering the field of view, and cell reappearance (re-entering the field of view). This is a challenging task mainly because of low temporal resolution and variable types of cell motion. For example, the biological cells may follow a Brownian movement, which makes the motion estimation very hard. We developed a graph optimization technique that can be divided into the following stages: bi-frame cell matching, construction of cell tracks and cell event detection41 as shown in Fig. 3.

Cell tracking method overview. Bi-frame cell matching is applied first by motion compensation and max likelihood matching. Cell tracklets are formed by linking cell correspondences over the complete time sequence. A graph structure is used to store the cell relationships and detect migration and mitosis events.

Bi-frame cell matching

In this stage we find cell correspondences between two consecutive frames that we denote by current frame and prior frame. The problem of finding the association with the highest probability is performed by determining the max-likelihood matching for each cell of the current frame among all the warped cells of the prior frame by the motion field that was found by CLGOF as described before.

Let \(X_t = \{x_1^t, x_2^t, \ldots , x_n^t\}\) and \(\Xi _t = \{ \xi _1^t, \xi _2^t, \ldots , \xi _n^t\}\) be the set of observations and the set of states, respectively, for \(n_t\) cells at time t. We assume a Markov process such that the transition probability depends only on the state of the mother cell or the same cell in previous frame \(P(S_{t+1} | S_{t})\). We compute the likelihood \(\pi _{ij}^{t+1}\) that the cell i in frame \(t+1\) is connected with cell j in frame t based on the spatial proximity between cells of warped indicator function \(\hat{L}(\omega + \hat{\delta },t+1)\) and cells of the current indicator function \(L(\omega , t)\):

We also use a reject option that signifies cell appearance when the maximum likelihood is still very low. After making a decision we assign the predicted cell class \(\hat{j}\) to the cell indicator map.

Construction of cell tracks

The cell associations are stored in a structure of linked lists. To form the cell tracks, we traverse the linked lists from the last frame to the first. The identified maximum likelihood tracks form a forest of minimal cost chains.

We use the created linked lists to construct the cell tracks. We traverse the set of cell states in reverse chronological order and we store the cell track information in a structure with elements \(Q = \left[ t, \xi _{m}^{t}, t_p, \xi _{n}^{t_p} \right]\), that contain the frame id t, cell label (indicator) id \(\xi _{m}^{t}\), previous frame id \(t_p\), and previous label id \(\xi _{n}^{t_p}\).

This stage produces a cell track structure that holds the tracks \(\phi _i\) and addresses cell appearance and disappearance. However, some tracks may be partially overlapping in cell division occurrences, where different cells have a common ancestor. We address these cases by identifying the overlapping parts of two tracks \(\phi _j\) and \(\phi _k\), and creating three new tracks, one for the mother \(\phi _p = \phi _j \cap \phi _k\), and the 2 daughters \(\phi _q = \phi _j - \left( \phi _j \cap \phi _k \right)\) and \(\phi _r = \phi _k - \left( \phi _j \cap \phi _k \right)\).

Cell event detection

Cell tracking results can be represented using an acyclic oriented graph \(G=(V, E)\) that consists of a vertex set V and an edge set E such that \(E \subset V \times V\). The graph vertices represent the detected cells and its edges represent temporal relations between the cells. The condition for an ordered vertex pair \(E^{t_1,t_2}_{i,j}\left( \xi _i^{t_1}, \xi _j^{t_2} \right)\) to belong to the edge set E is expressed as:

The function \(P:\Xi \rightarrow \Xi\), where \(\Xi\) is the universe of cell states \(\xi _i\), returns the mother \(\xi _m\) of an entity \(\xi _i\) that is \(\xi _m = P(\xi _i)\). The first condition in the previous expression represents cell migration, while the second condition represents cell division. We assign graph node labels \(L_G(V)\) to cells in each indicator image that determines a global indicator function \(F_L: \Omega \rightarrow L_G\).

Experiments and results

In our performance evaluation experiments, we used Cell Tracking Challenge (CTC) datasets12. CTC datasets consist of time-lapse microscopy sequences and annotations that can serve as benchmarks for cell segmentation, detection and tracking. Our experiments can be divided into two main parts. In the first experiment, we evaluated the performance of our method on CTC training sequences. In the second experiment, we evaluated the performance of our technique in comparison to results of other published techniques that participated in CTC and produced results on the same sequences. Next, we describe the CTC datasets, the evaluation measures, and the results for each experiment.

Datasets

We utilized 4 datasets of fluorescence (Fluo) microscopy and 2 phase-contrast (PhC) microscopy for training and testing. Each dataset contains two sequences labeled 01 and 02, along with two sets of reference masks named gold standard corpus or gold-truth (GT) and silver standard corpus or silver truth (ST). The CTC organizers provide the participating teams with the GT and ST datasets for the training sequences, but reference datasets for the challenge datasets are only available to CTC organizers for evaluation.

We evaluated our method on fluorescence microscopy sequences of low resolution cytoplasm of rat mesenchymal stem cells Fluo-C2DL-MSC (MSC), low resolution nuclei of cervical cancer cells Fluo-N2DL-HeLa (HeLa), high resolution nuclei of mouse embryonic stem cells Fluo-N2DH-GOWT1 (GOWT1) and high resolution simulated nuclei of human leukemia cells (HL-60) Fluo-N2DH-SIM+ (SIM+) from fluorescence microscopy. The phase contrast microscopy datasets include high resolution cytoplasm of glioblastoma-astrocytoma U373 cells PhC-C2DH-U373 (U373) and low resolution cytoplasm of pancreatic stem cells PhC-C2DL-PSC (PSC). The datasets vary in noise levels, cell densities, numbers of cells leaving and entering the field of view, resolution, and numbers of mitotic events.

The reference label set consists of sparse GT and dense ST reference datasets. The GT reference maps were manually annotated by experts with background in biology at three different institutions, but do not necessarily contain masks for all cells and all frames. ST reference datasets contain computer-generated annotations from the top results submitted by former CTC participants. The CTC organizers used segmentation fusion techniques to produce the ST masks. These datasets contain cell masks for all frames and all cells. Because GT and ST reference datasets offer complementary information, we use both of them for our evaluations.

Performance evaluation measures

To evaluate the cell segmentation detection, and tracking performance of our method and the baseline methods, we utilized the Dice Similarity Coefficient (DSC), Jaccard index, and the CTC implementations of the SEG, DET, and TRA measures. The SEG, DET, and TRA measures are particularly useful as they serve as common bases for comparisons with other techniques participating in the CTC challenge. We denote by \(R_s\) the set of all binary cell regions delineated by our cell segmentation method, while \(R_{Ref}\) is the set of cell pixels from the reference region.

SEG measure – It measures the Jaccard index between test and reference data, for each cell that has a reference mask. Then the method sets to zero the indices of cells for which \(\left| {R_s}\cap R_{Ref} \right| \le 0.5 \cdot \left| R_{Ref} \right|\). It finally computes the average of all the individual indices to yield SEG.

DET measure – DET calculates detection accuracy by comparing the nodes of the acyclic graphs generated by the tested algorithm with nodes of the acyclic graphs of the reference masks. It is defined as

Here \(AOGM_D\) is the cost of transforming a set of nodes produced by the tested method into the set of reference nodes. \(AOGM_{D0}\) is the cost of creating the set of reference nodes.

TRA measure – It is based on comparison of acyclic oriented graphs produced by the tested and reference tracking methods. TRA is defined as a normalized Acyclic Oriented Graph Matching (AOGM) measure:

where \(AOGM_0\) is the AOGM value required for creating the reference graph from scratch.

Results on CTC training data: train on sequence 1 and test on sequence 2

First, we present the results on CTC training data. In this experiment, we trained the foreground/background classifier on sequence 01 of each dataset and performed segmentation and tracking on sequence 02. Table 1 contains segmentation, detection and tracking measurements against GT and ST reference data. The dataset Fluo-N2DH-SIM+ has the same gold and silver truth labels because it is simulated. In addition, there is no ST reference data for detection and tracking, so we list the DET-GT and TRA-GT results only. From these results we observe general agreement among Jaccard and SEG for most of the datasets. A bit higher agreement is observed between DET and TRA measures. Overall, our technique yields the following average rates: Jaccard-ST: 0.741, SEG-ST: 0.785, SEG-GT: 0.729, DET-GT: 0.895, TRA-GT: 0.881. We note that the SEG-ST and SEG-GT rates are not directly comparable because ST contains dense annotations, but GT contains sparse annotations. We display segmentation label maps on a frame of three sequences in Fig. 4. We observe the good agreement between the cell label maps produced by our method and the reference label maps. In Fig. 5, we show a visualization of tracking output that enables monitoring of the main cell migration and mitosis events.

Segmentation comparisons: (a) Intensity frame, (b) GT reference segmentation, (c) ST reference segmentation, (d) Segmentation by the proposed method. Fluo-C2DL-MSC-02 (first row): This sequence contains overlapping elongated cells and has low temporal resolution. Our technique produced results that are notably close to the ST and GT references. PhC-C2DL-PSC-02 (second row): PSC has numerous small cells in each frame, with fine shape details, and several cell divisions occur over time. Our technique produced very good detection and segmentation on this dataset. Fluo-N2DH-GOWT1-02 (third row): This sequence is characterized by significant variability in contrast to noise ratios within each frame and over multiple time frames, that make cell detection difficult. The neural network classifier helped to detect cells of low contrast.

Results on CTC challenge data

Next, we present results from our experiments on CTC challenge data. Our method’s code and results were submitted to CTC and re-produced by the challenge organizers for all participants. The organizers did not share reference data for these sequences. Hence, they calculated and posted evaluation performance measures of each method on the challenge website. Table 2 contains SEG, DET, and TRA results of our method on each test sequence.

Furthermore, in Tables 3 and 4 we list the SEG and TRA measures of all CTC participant techniques that were evaluated on all of the 6 datasets utilized in our test set. We also include the average performance measures of the 9 techniques that met the inclusion criterion, and our results for reference. Figure 6 shows boxplots of SEG and TRA values produced by the methods listed in Tables 3 and 4. Among the techniques that produced segmentation and tracking results for the same datasets, our technique ranked above the 70th percentile for 3 datasets in SEG and TRA values. Our technique scored mean SEG of 0.738 and mean TRA of 0.895 over all the tested sequences, ranking among the top 4 and top 3 techniques, respectively. It also achieves the top SEG and TRA performances among all compared methods for Fluo-C2DL-MSC.

Performance evaluation on external datasets

To assess the generalizability of this framework, we evaluated our segmentation and tracking performance on external datasets acquired in the context of tissue engineering research, and specifically cyber-physical organoid formation sequences42,43. In this downstream application, the task is to segment and track cells and micro-robots. We display segmentation and tracking results in Figs. 7 and 8, respectively. These results indicate the good segmentation accuracy and the capacity to track the cells and micro-robots that could help to enable a visual control loop for cyberphysical organoid formation.

Discussion

By comparing the box plots of Fig. 6 we observe that the proposed technique yields very good performances for all test datasets. These results indicate the generalizability of our technique to diverse datasets in terms of cell size and shape, density of environments, spatial and temporal scales, and contrast mechanisms. We summarize the main challenges presented by the utilized datasets next. The Fluo-C2DL-MSC sequences have overlapping elongated cells, with varying shapes and intensity profiles. These sequences have low spatial and temporal resolution. HeLa sequences present challenges for cell separation in a dense environment. PhC-C2DH-U373 has similar intensity levels inside the cell in cytoplasm and background with thick cell borders complicating cell boundary delineation. Fluo-N2DH-GOWT1 is characterized by significant variability in contrast to noise ratios within each frame and over multiple time frames, that make cell detection difficult. PhC-C2DL-PSC has numerous small cells in each frame, with fine shape details, and several cell divisions occur over time. To illustrate challenging cases, in Fig. 9, we show examples from two sequences, where there is variability in contrast and noise. To quantify the image characteristics, we computed Signal-to-Noise Ratio and Contrast-to-Noise Ratios34. For the Fluo-N2DH-SIM+02 sequence, frames 3 and 34, the SNR values are 1.876 and 0.988, and the CNR values are 6.447 and 3.252, respectively. In this dataset, we can visually observe the significant discrepancy in signal and contrast levels. For the Fluo-N2DH-GOWT1-02 sequence, frames 3 and 89, the SNR values are 24.138 and 8.912, and the CNR values are 25.467 and 7.254, respectively. In this dataset, there is noticable variability among the contrast levels of individual cells. The scale selection module helps to address these challenges by selecting the optimal scale of feature representation for each frame. Our machine learning-based cell detection module learns to separate cells from background efficiently despite contrast variations. Another challenge that may be encountered is high cell density. In Fig. 10, we display cell confluency and segmentation accuracy graphs for two datasets, Fluo-N2DL-HeLa-02 and PhC-C2DL-PSC-02, that include many mitotic events and clustered cells. Of note is that while cell confluency increases, the DSC and Jaccard scores do not decrease, hence indicating a good level of robustness with respect to cell density variations. A key factor for this characteristic is the cell boundary refinement stage. With respect to computational time, increasing cell density implies increased run times, mainly observed in the tracking stage. The segmentation run time depends on multiple factors such as the image matrix size, the number of generated scales, and the boundary refinement iterations. Table 5 lists the average segmentation and tracking run times per frame for the CTC test datasets for Matlab implementation running on a system with Intel Xeon E5-2630 CPU at 2.4 GHz.

Examples of variability in signal, contrast and noise levels within a dataset and between datasets. First row: Fluo-N2DH-SIM+02, (a) frame 3 (SNR: 1.876, CNR: 6.447), and (b) frame 34 (SNR: 0.988, CNR: 3.252). Second row: Fluo-N2DH-GOWT1-02, (a) frame 3 (SNR: 24.138, CNR: 8.912), and (b) frame 89 (SNR: 25.467, CNR: 7.254.).

The high TRA rates show that our methodology can accurately create cell tracks and identify cell migration and cell mitosis. As seen in Fig. 5, our method yields results that enable the monitoring and quantification of cell events in high throughput studies. These capabilities are significant for diagnosis of diseases such as cancer, drug development, and personalized medicine. However, some over-segmentation may lead to false positive detection of cell mitosis events that limit tracking accuracy. Such inaccuracies may be addressed by inclusion of additional features in the cell matching stage implying increased computational cost.

An original contribution of this work is the incorporation of multi-scale spatio-temporal features to perform automated scale selection, cell detection and segmentation. This approach helps to select a scale that automatically adjusts to the visual content of each frame, that addresses changes in contrast and noise levels, and reduces the need for hyperparameter tuning. Another key component of this approach is neural network-based detection of cell regions with class prototype balancing. We first identify the main cluster prototypes formed by the features to balance the class representations in the training sample set. Our results showed that our neural network with DBSCAN-based re-sampling improves cell detection and segmentation compared to our previous approaches that employed parametric and nonparametric statistical tests40,41. Relative to a previous version of our work that employed nonparametric foreground/background separation41, the neural network approach resulted in the following performance improvements over the six CTC datasets: 0.713 to 0.754 in average SEG-GT, 0.886 to 0.895 in average DET-GT, and 0.871 to 0.881 in average TRA-GT.

In conclusion, we introduced a comprehensive and interpretable cell segmentation and tracking framework. Our extensive performance evaluation experiments showed its applicability to analysis of heterogeneous cell microscopy sequences. Future research directions include the development of a joint cell segmentation and classification approach, and graph neural network44,45,46 based optimization techniques for cell tracking and cell event detection.

Data availability

The code of our method is publicly available on https://github.com/smakrogi/CSTQ_Pub/, (release v.3.2). The research study has been conducted on open-access data publicly available at https://celltrackingchallenge.net.

References

Franz, C. M., Jones, G. E. & Ridley, A. J. Cell migration in development and disease. Dev. Cell 2, 153–158 (2002).

Eils, R. & Athale, C. Computational imaging in cell biology. J. Cell Biol. 161, 477–481. https://doi.org/10.1083/jcb.200302097 (2003).

Meijering, E., Dzyubachyk, O. & Smal, I. Methods for cell and particle tracking. Methods Enzymol. 504, 183–200. https://doi.org/10.1016/B978-0-12-391857-4.00009-4 (2012).

Meijering, E. Cell Segmentation: 50 Years Down the Road [Life Sciences]. IEEE Signal Process. Mag. 29, 140–145. https://doi.org/10.1109/MSP.2012.2204190 (2012).

Yazdi, R. & Khotanlou, H. A survey on automated cell tracking: challenges and solutions. Multim. Tools Appl. 1–37 (2024).

Ulman, V. et al. An objective comparison of cell-tracking algorithms. Nat. Methods 14, 1141–1152 (2017).

Wang, Y., Williams, O., Annasamudram, N. & Makrogiannis, S. Live cell segmentation and tracking techniques. In Intelligent Image and Video Analytics (ed. Wang, Y.) 129–172 (CRC Press, 2023).

Moen, E. et al. Deep learning for cellular image analysis. Nat. Methods 16, 1233–1246 (2019).

Wang, A. et al. A novel deep learning-based 3d cell segmentation framework for future image-based disease detection. Sci. Rep. 12, 342 (2022).

Ershov, D. et al. Trackmate 7: Integrating state-of-the-art segmentation algorithms into tracking pipelines. Nat. Methods 19, 829–832 (2022).

Holme, B. et al. Automated tracking of cell migration in phase contrast images with celltraxx. Sci. Rep. 13, 22982 (2023).

Maska, M. et al. The cell tracking challenge: 10 years of objective benchmarking. Nat. Methods 20, 1010–1020. https://doi.org/10.1038/s41592-023-01879-y (2023).

Dzyubachyk, O., van Cappellen, W. A., Essers, J., Niessen, W. J. & Meijering, E. Advanced level-set-based cell tracking in time-lapse fluorescence microscopy. IEEE Trans. Med. Imaging 29, 852–867. https://doi.org/10.1109/TMI.2009.2038693 (2010).

Maska, M. et al. Segmentation and shape tracking of whole fluorescent cells based on the chan-vese model. IEEE Trans. Med. Imaging 32, 995–1006. https://doi.org/10.1109/TMI.2013.2243463 (2013).

Magnusson, K. E. G., Jalden, J., Gilbert, P. M. & Blau, H. M. Global linking of cell tracks using the viterbi algorithm. IEEE Trans. Med. Imaging 34, 911–929. https://doi.org/10.1109/TMI.2014.2370951 (2015).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, 234–241 (Cham, 2015).

Alegro, M. et al. Automating cell detection and classification in human brain fluorescent microscopy images using dictionary learning and sparse coding. J. Neurosci. Methods 282, 20–33 (2017).

Lux, F. & Matula, P. DIC image segmentation of dense cell populations by combining deep learning and watershed. In: 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019). 236–239, https://doi.org/10.1109/ISBI.2019.8759594 (2019).

Yi, J. et al. Multi-scale Cell Instance Segmentation with Keypoint Graph based Bounding Boxes. Preprint at arXiv:1907.09140 [cs] (2019).

Sixta, T., Cao, J., Seebach, J., Schnittler, H. & Flach, B. Coupling cell detection and tracking by temporal feedback. Mach. Vis. Appl. 31, 1–18. https://doi.org/10.1007/s00138-020-01072-7 (2020).

Löffler, K. & Mikut, R. Embedtrack–simultaneous cell segmentation and tracking through learning offsets and clustering bandwidths. IEEE Access 10, 77147–77157. https://doi.org/10.1109/ACCESS.2022.3192880 (2022).

Laptev, I. On space-time interest points. Int. J. Comput. Vision 64, 107–123 (2005).

Li, Y., Tax, D. M. & Loog, M. Scale selection for supervised image segmentation. Image Vis. Comput. 30, 991–1003. https://doi.org/10.1016/j.imavis.2012.08.010 (2012).

Zhou, Y., Li, J., Feng, L., Zhang, X. & Hu, X. Adaptive scale selection for multiscale segmentation of satellite images. IEEE J. Select. Top. Appl. Earth Observ. Remote Sens. 10, 3641–3651. https://doi.org/10.1109/JSTARS.2017.2693993 (2017).

Pont-Tuset, J., Arbeláez, P., Barron, J. T., Marques, F. & Malik, J. Multiscale combinatorial grouping for image segmentation and object proposal generation. IEEE Trans. Pattern Anal. Mach. Intell. 39, 128–140. https://doi.org/10.1109/TPAMI.2016.2537320 (2017).

Li, Y., Xia, R., Huang, Q., Xie, W. & Li, X. Survey of spatio-temporal interest point detection algorithms in video. IEEE Access 5, 10323–10331 (2017).

Lindeberg, T. Spatio-temporal scale selection in video data. J. Math. Imaging Vis. 60, 525–562 (2018).

Al-Huda, Z., Peng, B., Yang, Y. & Ahmed, M. Object scale selection of hierarchical image segmentation using reliable regions. In 2019 IEEE 14th International Conference on Intelligent Systems and Knowledge Engineering (ISKE), 1081–1088, https://doi.org/10.1109/ISKE47853.2019.9170333 (2019).

Ester, M., Kriegel, H.-P., Sander, J. & Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In: Proc. Second International Conference on Knowledge Discovery in Databases and Data Mining, 226–231 (1996).

Schubert, E., Sander, J., Ester, M., Kriegel, H. P. & Xu, X. DBSCAN revisited, revisited: why and how you should (still) use DBSCAN. ACM Transactions on Database Systems (TODS) 42, 1–21 (2017).

Beer, A. et al. Connecting the dots – density-connectivity distance unifies dbscan, k-center and spectral clustering. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, KDD ’23, 80–92, https://doi.org/10.1145/3580305.3599283 (Association for Computing Machinery, New York, NY, USA,) (2023).

Levi, R., Marzullo, A., Savini, G., Savevski, V. & Politi, L. S. An unet boosting training strategy for the brats-isbi 2024 goat challenge. In 2024 IEEE International Symposium on Biomedical Imaging (ISBI), 1–4 (IEEE, 2024).

Makrogiannis, S. & Bourbakis, N. G. Motion analysis with application to assistive vision technology. In IEEE Int. Conference on Tools for Artificial Intelligence, 344–352 (2004).

Boukari, F. & Makrogiannis, S. Joint level-set and spatio-temporal motion detection for cell segmentation. BMC Med. Genom. 9(Suppl 2), 49. https://doi.org/10.1186/s12920-016-0206-5 (2016).

Byun, J. et al. Automated tool for the detection of cell nuclei in digital microscopic images: application to retinal images. Mol. Vis. 12, 949–60 (2006).

Usaj, M., Torkar, D., Kanduser, M. & Miklavcic, D. Cell counting tool parameters optimization approach for electroporation efficiency determination of attached cells in phase contrast images. J. Microsc. 241, 303–314 (2011).

Brox, T., Bruhn, A., Papenberg, N. & Weickert, J. High accuracy optical flow estimation based on a theory for warping. In European Conference on Computer Vision (ECCV), vol. 3024 of Lecture Notes in Computer Science, 25–36 (Springer, 2004).

Bruhn, A., Weickert, J. & Schnörr, C. Lucas/kanade meets horn/schunck: Combining local and global optic flow methods. Int. J. Comput. Vision 61, 211–231. https://doi.org/10.1023/B:VISI.0000045324.43199.43 (2005).

Guo, D., van de Ven, A. L. & Zhou, X. Red blood cell tracking using optical flow methods. IEEE J. Biomed. Health Inform. 18, 991–998. https://doi.org/10.1109/JBHI.2013.2281915 (2014).

Boukari, F. & Makrogiannis, S. Automated cell tracking using motion prediction-based matching and event handling. IEEE/ACM Trans. Computat. Biol. Bioinform. 17, 959–971. https://doi.org/10.1109/TCBB.2018.2875684 (2020).

Makrogiannis, S., Annasamudram, N., Wang, Y., Miranda, H. & Zheng, K. A system for spatio-temporal cell detection and segmentation in time-lapse microscopy. In 2021 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), 2266–2273, https://doi.org/10.1109/BIBM52615.2021.9669421 (2021).

Das, S., Steager, E. B., Hsieh, M. A., Stebe, K. J. & Kumar, V. Experiments and open-loop control of multiple catalytic microrobots. J. Micro-Bio Robot. 14, 25–34 (2018).

Das, S. et al. Cellular expression through morphogen delivery by light activated magnetic microrobots. J. Micro-Bio Robot. 15, 79–90 (2019).

Ben-Haim, T. & Raviv, T. R. Graph neural network for cell tracking in microscopy videos. In Eur. Conf. Comput. Vis. (ed. Ben-Haim, T.) 610–626 (Springer, 2022).

Pineda, J. et al. Geometric deep learning reveals the spatiotemporal features of microscopic motion. Nat. Mach. Intell. 5, 71–82 (2023).

Pylvänäinen, J. W., Gómez-de Mariscal, E., Henriques, R. & Jacquemet, G. Live-cell imaging in the deep learning era. Curr. Opin. Cell Biol. 85, 102271 (2023).

Arbelle, A. & Riklin-Raviv, T. Microscopy cell segmentation via convolutional lstm networks. 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019) 1008–1012 (2018).

Ben-Haim, T. & Riklin-Raviv, T. Graph neural network for cell tracking in microscopy videos. In European Conference on Computer Vision (2022).

Zhao, X., Gai, N., Hu, S. & Huang, K. CAS-CN, segmentation and tracking. https://public.celltrackingchallenge.net/participants/CAS-CN.pdf (2021).

Aho, L., Yung, R. & Cohen, A. R. C. DREX-US, segmentation and tracking. https://leverjs.net/ctc2021 (2021).

Xiao, P. & Yu, W. IMCB-SG, segmentation and tracking. https://celltrackingchallenge.net/participants/IMCB-SG/ (2014).

Broaddus, C., Weigert, M., Schmidt, U. & Myers, G. MPI-GE (CBG), segmentation and tracking. https://celltrackingchallenge.net/participants/MPI-GE-CBG/ (2021).

Bao, R., Al-Shakarji, N. M., Bunyak, F. & Palaniappan, K. Dmnet: Dual-stream marker guided deep network for dense cell segmentation and lineage tracking. In: Proc. IEEE/CVF International Conference on Computer Vision, 3361–3370 (2021).

Al-Shakarji, N. M., Seetharaman, G., Bunyak, F. & Palaniappan, K. Robust multi-object tracking with semantic color correlation. In 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), 1–7 (IEEE, 2017).

Al-Shakarji, N. M., Bunyak, F., Seetharaman, G. & Palaniappan, K. Multi-object tracking cascade with multi-step data association and occlusion handling. 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS) 1–6 (2018).

Xie, X., Chen, J. & Li, Y. SZU-CN, segmentation and tracking. https://public.celltrackingchallenge.net/participants/SZU-CN.pdf (2020).

Esteves, T., Quelhas, P., Mendonça, A. M. & Campilho, A. Gradient convergence filters and a phase congruency approach for in vivo cell nuclei detection. Machine Vision and Applications23, https://doi.org/10.1007/s00138-012-0407-7 (2012).

Esteves, T., Oliveira, M. J. & Quelhas, P. Cancer cell detection and tracking based on local interest point detectors. In Proceedings of the 10th International Conference on Image Analysis and Recognition,, https://doi.org/10.1007/978-3-642-39094-4_49 (2013).

Acknowledgements

This research was supported by the Army Research Office under grant #W911NF2010095, National Institute of General Medical Sciences of the National Institutes of Health (NIH) award #SC3GM113754, #1U54MD015959-01A1 and the National Science Foundation under award #2234871. We thank Dr. Sambeeta Das and Ms. Ceren Kirmizitas from University of Delaware for providing the cell and microrobot datasets.

Author information

Authors and Affiliations

Contributions

N.A. and S.M. developed the techniques, N.A., J.Z., and S.M. designed the experiments, N.A., J.Z., O.O., and A.P. conducted the experiments, N.A., J.Z., O.O, and A.P. analyzed the results. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Annasamudram, N., Zhao, J., Oluwadare, O. et al. Scale selection and machine learning based cell segmentation and tracking in time lapse microscopy. Sci Rep 15, 11717 (2025). https://doi.org/10.1038/s41598-025-95993-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-95993-w