Abstract

X-ray image-based prohibited item detection plays a crucial role in modern public security systems. Despite significant advancements in deep learning, challenges such as feature extraction, object occlusion, and model complexity remain. Although recent efforts have utilized larger-scale CNNs or ViT-based architectures to enhance accuracy, these approaches incur substantial trade-offs, including prohibitive computational overhead and practical deployment limitations. To address these issues, we propose Xray-YOLO-Mamba, a lightweight model that integrates the YOLO and Mamba architectures. Key innovations include the CResVSS block, which enhances receptive fields and feature representation; the SDConv downsampling block, which minimizes information loss during feature transformation; and the Dysample upsampling block, which improves resolution recovery during reconstruction. Experimental results demonstrate that the proposed model achieves superior performance across three datasets, exhibiting robust performance and excellent generalization ability. Specifically, our model attains mAP50–95 of 74.6% (CLCXray), 43.9% (OPIXray), and 73.9% (SIXray), while demonstrating lightweight efficiency with 4.3 M parameters and 10.3 GFLOPs. The architecture achieves real-time performance at 95.2 FPS on the GPUs. In summary, Xray-YOLO-Mamba strikes a favorable balance between precision and computational efficiency, demonstrating significant advantages.

Similar content being viewed by others

Introduction

With accelerating urbanization and expanding public transportation networks, security checks has become pivotal in safeguarding public spaces. X-ray image-based security checks for prohibited items are widely deployed in critical infrastructure (e.g., airports, rail hubs) and mass gathering venues (e.g., stadiums, convention centers), effectively mitigating security risks in high-density population scenarios. Conventional methodologies primarily rely on manual inspection, where trained operators conduct visual interpretation of X-ray images with an empirical accuracy of approximately 80%. Nevertheless, this approach faces dual challenges arising from severe occlusion patterns in X-ray imagery that hinder object identification, and chronic operator fatigue combined with task-induced laxity due to the low-probability nature of contraband occurrences, both of which substantially lead to declines in the efficiency and accuracy of prohibited item identification.

To address security inspection demands for prohibited item recognition, object detection algorithms have been introduced into X-ray image analysis to enhance detection efficiency. Early studies primarily employed Bag of Visual Words (BoVW) methods1, which accomplished prohibited item classification through feature extraction, clustering, and classifiers such as Support Vector Machines (SVM) or sparse representation2. With breakthroughs in deep learning, CNN-based detection techniques gradually became mainstream in this domain3: Convolutional Neural Networks (CNNs)4 initially demonstrated potential in binary classification tasks like firearm presence determination5. Subsequent models including sliding window-based CNNs, Faster R-CNN, and R-FCN were extended to multi-category hazardous item detection tasks, achieving significant performance improvements. Studies further validated the real-time detection capabilities of CNNs models in aviation security X-ray images, where YOLOv2 achieved exceptional mean average precision and real-time performance in detecting targets like scissors and aerosols on the SASC dataset6. With the continuous evolution of the YOLO series, its applications in prohibited item detection have progressively deepened, substantially advancing technological development in this field.

Contemporary deep learning-based object detection research primarily evolves along two architectural paradigms: CNNs and Vision Transformers (ViTs)7. While CNNs leverage intrinsic inductive biases including locality and translation equivariance for effective local feature extraction8, their hierarchical receptive field expansion fundamentally limits long-range dependency preservation9—particularly detrimental to prohibited item detection requiring simultaneous localization of fine-grained textures and global contextual patterns. In contrast, ViTs address these limitations through self-attention mechanisms enabling global contextual modeling, yet the quadratic complexity of self-attention mechanisms remains a critical drawback10, severely impacting scalability and inference speed, particularly in real-time detection scenarios. Recent hybrid approaches (QAGA-Net11, HMKD-Net12, MHT-X13) attempt to balance these trade-offs by cascading CNN feature extractors with Transformer modules. However, such designs still inherit the quadratic complexity bottlenecks of ViTs in critical path operations. The emerging Mamba14 architecture, which is based on State Space Models (SSMs), introduces a novel solution to this issue by excelling in long-range interactions while maintaining a linear-time computational complexity. This approach effectively resolves the quadratic complexity problem faced by ViTs models, making it a promising candidate for real-time prohibited item detection in X-ray images.

Building on the Mamba architecture, we propose a lightweight detection model named Xray-YOLO-Mamba, designed to balance performance and efficiency. The main contributions of this paper are summarized as follows:

-

(1)

We propose a novel lightweight Xray-YOLO-Mamba model based on the Mamba framework, which combines the strengths of Mamba and YOLO. This novel approach applies the Mamba architecture to prohibited item detection in X-ray images, opening new avenues for research in this field.

-

(2)

We design an innovative backbone and neck network to enhance the model’s feature extraction capabilities and detection accuracy. In the backbone, we propose the CResVSS Block and SDConv Block to optimize the model’s performance. In the neck, we propose the Dysample Block, which improves the capture of fine-grained features and further enhances detection accuracy.

-

(3)

We conduct experiments on three different datasets to validate the performance of the Xray-YOLO-Mamba model. The experimental results show that Xray-YOLO-Mamba, as a lightweight model, achieves superior performance compared to other methods, effectively balancing precision and computational efficiency.

Related work

Detection of prohibited items in X-ray images

The task of detecting prohibited items in X-ray images faces the following three challenges: first, the limited texture information in the images; second, the severe occlusion of items within luggage; and third, the significant intra-class variations. To address these challenges in X-ray prohibited item detection, Miao et al.15 proposed leveraging foreground information across different Feature Pyramid Network (FPN) layers to eliminate background interference and mitigate overlapping issues. They designed a CHR model for validation and released a subway security inspection dataset, SIXray, which laid the foundation for prohibited item detection tasks. Liu et al.16 approached the problem from an image processing perspective, first enhancing the texture information of prohibited items through color processing and then addressing the detection of prohibited items. Wei et al.17 simultaneously considered color and contour information, introducing an attention mechanism to tackle the challenges in X-ray prohibited item detection. To address the issues of overlapping and large intra-class variations, Zhao et al.18 released a novel dataset, CLCXray, which includes knives and liquids, thereby expanding the scope of hazardous item detection. They also proposed a novel Label-Aware (LA) mechanism, achieving an mAP50 of 71.8% on the CLCXray dataset and 88.26% on the OPIXray dataset, representing state-of-the-art performance.

Real-time object detectors

With the advancement of deep learning technology, various real-time object detection models have been proposed. Among these, the YOLO series, which follows the single-stage detector design methodology, has garnered significant attention due to its efficient real-time performance.

YOLOv119, as the pioneer of the YOLO series, introduced the concept of transforming object detection tasks into regression problems, establishing the foundation for single-stage object detection models. Subsequently, YOLOv320 improved the feature extraction network architecture by adopting a deeper network, Darknet-53, and incorporating residual connections to address the vanishing gradient problem, thereby enhancing both detection speed and accuracy. To further enhance speed and accuracy, YOLOv421 introduced extensive residual structure designs, reducing redundant computations and proposing the CSPDarknet53 backbone network. YOLOv5 adopted more efficient computational methods and hardware acceleration, balancing real-time performance and accuracy. YOLOv8 integrated features from previous YOLO versions, proposing a C2f structure with richer gradient flow, effectively extracting and fusing image features, achieving higher detection accuracy. YOLOv1022 improved inference speed through a consistent dual assignment strategy for non-maximum suppression training and integrated attention mechanisms to enhance detection accuracy. YOLOv1123 optimized the model architecture by designing a new C3k2 feature extraction module and incorporating a parallel spatial attention module, C2PSA (Convolutional block with Parallel Spatial Attention), after the SPPF (Spatial Pyramid Pooling Fast), thereby improving the overall performance of the model. However, these models still exhibit certain limitations in extracting features from X-ray images with complex backgrounds and overlapping objects, necessitating the design of deeper networks with more comprehensive receptive fields and hierarchical structures.

Visual state space models in machine vision

Recently, VMamba has emerged as a novel object detection model, gradually becoming a research hotspot in the field due to its innovative architecture and methodologies. To address the challenges of slow training convergence, high computational costs, and difficulties in detecting overlapping objects, Liu et al.24 proposed VMamba, a novel visual backbone network based on SSMs, which has emerged as a promising approach in object detection and attracted significant research attention.

VMamba employs a linear-time complexity representation learning method, effectively resolving the computational inefficiency of transformers in handling long-sequence state spaces. Chen et al.25 further optimized the VMamba model and introduced Res-VMamba, which achieved state-of-the-art results in fine-grained food image classification tasks. Additionally, Ma et al.26 innovatively developed the FER-YOLO-Mamba model, incorporating an SSMs-based backbone network for facial expression recognition tasks, thereby enhancing the accuracy of facial expression recognition. By leveraging VMamba as a foundational framework, novel methods have been developed for diverse scenarios, demonstrating the effectiveness of VMamba in addressing challenges in machine vision.

Inspired by the success of VMamba in machine vision, we propose Xray-YOLO-Mamba, a model that integrates the strengths of YOLOv11 and Mamba, leveraging a global receptive field for improved object detection.

Methods

Overall architecture

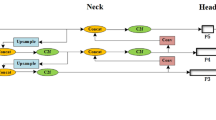

Figure 1 provides an overview of the architecture of Xray-YOLO-Mamba. The proposed detection model integrates Mamba with the YOLOv11-n framework, redesigning the backbone network by incorporating the Visual State Space (VSS)24 and spatial to depth downsampling, while integrating a dynamic upsampling into the neck network. Specifically, the architecture begins with the Stem in the backbone, which performs downsampling to generate a 2D feature map with a resolution of 160 × 160. The Stem layer is a streamlined layer that utilizes two convolutional layers with a stride of 2 and a kernel size of 3 to divide the input image into non-overlapping patches. The feature extraction phase consists of three stages, each composed of an SDConv Block and a CResVSS Block, for extract multi-level deep 2D feature maps. The SDConv Block is a spatial to depth downsampling block that replaces the original Conv block. The CResVSS Block is a feature extraction block that integrates the VSS and the 2D Selective Scan (SS2D)24 mechanism, replacing the original C3K2. In the neck, the design framework of YOLOv11-n is retained, and a dynamic upsampling27 approach is incorporated through the design of the Dysample Block. This optimizes the upsampling layer, enhances the model’s ability to capture feature maps, and improves accuracy.

Visual state space models

The core of VMamba consists of a stack of VSS blocks and SS2D blocks. The VSS blocks are the visual backbone network based on SSMs with linear complexity for visual representation learning. By traversing along four scanning routes, SS2D bridges the gap between the ordered nature of 1D selective scan and the non-sequential structure of 2D vision data, and obtains the global receptive field of the image.

State Space Models (SSMs). SSMs can be regarded as linear time-invariant (LTI) systems that map an input sequence \(x\left( t \right) \in {\mathbb{R}}^{L}\) to an output sequence \(y\left( t \right) \in {\mathbb{R}}^{L}\) via an implicit latent state \(h\left( t \right) \in {\mathbb{R}}^{N}.\) Mathematically, continuous-time SSMs can be formulated as linear ordinary differential equations (ODEs) as follows:

In Eqs. (1) and (2), \(A \in {\mathbb{R}}^{N \times N}\) denotes the state transition matrix, which governs how the hidden state evolves over time; \(B \in {\mathbb{R}}^{N \times L}\) denotes the weight matrix, which indicates the relationship between input stimulation and hidden states; \(C \in {\mathbb{R}}^{L \times N}\) denotes the observation matrix, which maps the hidden intermediate state to the output; \(D \in {\mathbb{R}}^{L \times L}\) denotes the feedforward matrix, which is often omitted (\(D = 0\)) to decouple the direct input–output dependency; \(x\left( t \right)\), \(y\left( t \right)\) and \(h\left( t \right)\) represent the input sequence, output sequence, and state vector, respectively.

While continuous-time SSMs provide theoretical advantages, their direct implementation in deep learning frameworks faces computational challenges due to incompatible time domains. To address this, it is necessary to discretize the ODEs through a discretization process, with the primary aim of converting continuous ODEs into discrete functions. The discretization of SSMs essentially converts the system’s continuous-time ordinary differential equation system into an equivalent discrete-time representation. The process of discretization employs fixed discretization rules \(f_{A}\) and \(f_{B}\) to transform continuous parameters \(A\) and \(B\) into the discrete parameters \(\overline{A}\) and \(\overline{B}\) . Following the Zero-Order Hold (ZOH) principle, Eqs. (1) and (2) are discretized as:

The discretization formulas for \(A\), \(B\), \(C\) and \(D\) are:

In Eqs. (3–8), \(\overline{A}\) and \(\overline{B}\) denote the discretized matrices of \(A\) and \(B\), respectively; \(\Delta\) represents discretization step size, which controls the temporal resolution of the model; \(\Delta A\) and \(\Delta B\) respectively denote the discrete-time counterparts of the continuous parameters \(A\) and \(B\) over the given time interval; \(I\) represents the identity matrix; \(\overline{C}\) and \(\overline{D}\) are the same as \(C\) and \(D\) of the continuous system.

2D Selective Scan Mechanism (SS2D). The incompatibility between 2D visual data and 1D language data renders the Mamba model unsuitable for direct application in visual tasks. Therefore, the VMamba model introduces a novel Selective Scan Mechanism (S6), which infuses the system with contextual responsiveness and weight dynamism. As illustrated in Fig. 2, the data forwarding process in SS2D consists of three steps: cross-scanning, selective scanning with S6 blocks, and scan merging. First, SS2D expands the input patches into four sequences along four distinct traversal paths. The scanning approach ensures that each element in the feature map contains information from all directions, establishing a comprehensive global receptive field. Subsequently, each feature sequence is processed by the selective scan space state sequential model (S6). Finally, the results are merged to construct the 2D feature map as the final output.

CResVSS block

The CResVSS Block is the core component of Xray-YOLO-Mamba, as illustrated in Fig. 3b. Overall, the framework adopts a composite residual VSS architecture, hence it is referred to as the CResVSS Block. The residual connection design of the CResVSS Block is inspired by the framework style of the Transformer, where the input of the sub-layer is directly added to its output. This design minimizes the loss of feature information during deep stacking, facilitates efficient gradient flow, and enhances network stability. The calculation formulas for the CResVSS Block are as follows:

In Eqs. (9) and (10), \(X_{in}\) and \(X_{out} \in {\mathbb{R}}^{H \times W \times C}\) denote the input and output feature maps, respectively, and \(X_{1} \in {\mathbb{R}}^{H \times W \times C}\) denotes the intermediate feature maps, \(\sigma_{SiLU} \left( . \right)\) denotes the nonlinear SiLU activation function.

At the input stage, \(X_{in}\) is first processed by the Conv Block, the calculation formula of which is shown in Eq. (9). Subsequently, \(X_{1}\) passes through a dilation residual block (DRes Block), the SS2D Block, and an inverted residual block (IRes Block), with SS2D being the key component of this stage. The calculation formulas for this part are shown in Eq. (10).

The primary workflow of SS2D is illustrated in Fig. 3a. By implementing complementary 1D traversal paths, SS2D not only effectively integrates information from all other pixels across various directions, thereby establishing a global receptive field in 2D space, but also enhances subsequent features with multi-dimensional characteristics through directional transformations. This approach significantly improves the efficiency and comprehensiveness of multidimensional image feature extraction.

DRes Block. The design of the receptive field is crucial for object detection tasks since it is related to accuracy. Before the SS2D Block, a DRes Block is introduced, which adopts multi-rate dilated depthwise convolutions to filter regional features of different sizes. This design features a scalable receptive field, enabling it to more effectively acquire multi-scale contextual information. The DRes Block is designed in a residual fashion, as illustrated in Fig. 3c, and its calculation formula is as follows:

In Eqs. (11) and (12), both \(X_{2} \in {\mathbb{R}}^{H \times W \times C}\) and \(X_{1}^{\prime} \in {\mathbb{R}}^{{H \times W \times \frac{C}{2}}}\) denote the intermediate feature maps.

A larger receptive field can establish longer-range spatial connections, but an excessively large receptive field may yield diminishing returns. Therefore, the dilation rates for the three branches are set to 1, 3, and 5, respectively. Since long-range connections in convolutions require the assistance of short-range connections, and smaller receptive fields are more critical for capturing fine-grained features, the output channel capacity of the first branch is designed to be twice that of the other branches.

IRes block. The multi-layer perceptron architecture of Mamba follows the design of Transformers, utilizing residual network structures for nonlinear transformations. However, its ability to enhance feature representation remains limited. Inspired by the inverted residual network structure, a lightweight inverted residual block (IRes Block) is employed in the output stage to replace the original residual module, as illustrated in Fig. 3d and its calculation formula is as follows:

In Eq. (13), \(X_{3}\) and \(X_{out} \in {\mathbb{R}}^{H \times W \times C}\) denote the intermediate and output feature maps, respectively, and \(\sigma_{GELU} \left( . \right)\) denotes the nonlinear GELU activation function. The IRes Block employs inverted residuals and residual connections to enhance feature extraction, mitigate the vanishing gradient problem, and improve the model’s performance.

SDConv block

CNNs architectures, which implement downsampling through strided convolutions or pooling layers, can filter out a large amount of redundant pixel information in high-resolution images, thereby enhancing convolution efficiency. However, this approach also discards fine-grained feature information of the image, which poses significant challenges for detecting blurry or small-target objects. In prohibited item detection, a significant number of images contain blurry objects or small targets, making detection more challenging. To address this issue, a Spatial to Depth (SDConv) block is proposed to achieve effective downsampling of feature maps while capturing fine-grained feature information.

As illustrated in Fig. 4, the feature map transformation process of the SDConv Block is divided into three main stages: slicing, concatenation, and convolutional fusion. The calculation formulas for the block are as follows:

The definitions of \(F_{0}\), \(F_{1}\), \(F_{2}\) and \(F_{3}\) are:

In Eqs. (14–18), \(F_{0}\) , \(F_{1}\) , \(F_{2}\) and \(F_{3} \in {\mathbb{R}}^{{\frac{H}{2} \times \frac{W}{2} \times C}}\) denote the four sub-feature maps divided by \(F_{in}\) , \(F_{in} \in {\mathbb{R}}^{H \times W \times C}\) and \(F_{out} \in {\mathbb{R}}^{{\frac{H}{2} \times \frac{W}{2} \times 2C}}\) denote the input and output feature maps, respectively, and \(\sigma_{SiLU} \left( . \right)\) denotes the nonlinear SiLU activation function.

Dysample block

The quality of feature map upsampling significantly impacts the accuracy of object detection. Traditional upsampling methods employ fixed rules for interpolation, lacking flexibility. To address this limitation, we have integrated a lightweight and efficient Dysample Block. This block is designed from the perspective of point sampling, rather than utilizing convolutional kernels for upsampling, offering superior performance in terms of latency, parameter count, and accuracy.

The upsampling process of this block is divided into six steps, with the pseudo-code for the Dysample Block detailed in Algorithm 1. Firstly, a linear projection is applied to the original feature map \(X\) to generate initial offset values. To prevent output artifacts caused by overlapping local sampling positions, the offset values are multiplied by 0.25, thereby satisfying the theoretical boundary condition between overlapping and non-overlapping regions. Subsequently, the offset values \(O\) undergo pixel rearrangement and are added to the original sampling grid \(G\) to obtain the sampling set \(S\). Finally, the upsampling function resamples the feature map \(X\) based on the sampling points \(S\) to produce \(X^{\prime}.\)

Algorithm 1 Pseudo-code for dynamic upsampling.

Experiments

Datasets and implementation details

This section first provides an overview of the three datasets used in the experiments: CLCXray18, OPIXray17, and SIXray15, followed by a detailed description of the experimental implementation. The basic information of the datasets is presented in Table 1.

The CLCXray dataset comprises 9,565 X-ray images, specifically designed to address the challenge of overlapping object recognition in X-ray imagery. The dataset consists of 12 categories, with the following distribution: blade (3,539), dagger (988), knife (700), scissors (2,496), Swiss Army knife (1,041), cans (789), carton drinks (1,926), glass bottle (540), plastic bottle (5,998), vacuum cup (2,166), spray cans (1,077), and tin (856). The OPIXray dataset contains 8,885 X-ray images, with a particular focus on frequently encountered prohibited cutting tools. The dataset consists of 5 categories, with the following distribution: folding knife (FO, 1,993), straight knife (ST, 1,044), scissors (SC, 1,863), utility knife (UT, 1,978), and multi-tool knife (MU, 2,042). The SIXray dataset includes 8,929 manually annotated images of prohibited items. The dataset consists of 5 categories, with the following distribution: gun (3,131), knife (1,943), wrench (2,199), pliers (3,961), and scissors (983). In accordance with general dataset division practices, the experimental datasets are divided into training, validation, and testing sets at an 8:1:1 ratio.

All our models were evaluated on a single NVIDIA A100 GPU. In terms of the training strategy, the models were trained from scratch without using pre-trained weights. The input image size was uniformly set to 640 × 640 pixels throughout training and evaluation. The training process consisted of 600 epochs, with strong data augmentation techniques such as Mosaic and Mixup applied. Data augmentation was disabled during the final 10 epochs. All models utilized the SGD optimization algorithm with a batch size of 64. The initial learning rate was set to 0.01, and the final learning rate was also 0.01. The optimizer was configured with a momentum of 0.937 and a weight decay of 0.0005.

Evaluation metrics

In the experiment, the evaluation metrics for the model include mAP50, mAP75, mAP50–95, Parameters, FLOPs (floating-point operations), and FPS (Frames Per Second). The Parameters refer to the total number of trainable parameters in the model. FLOPs refers to the total number of floating-point operations required for one forward pass of the model. FPS refer to the number of input images the model can process per second. The formulas for calculating mAP are as follows:

In Eqs. (19–21), \(mAP\) represents the mean average precision, \(AP_{c}\) represents the average precision for class \(c\) , \(N\) is the number of classes; \(P\left( R \right)\) represents the precision-recall curve, and \(AP\) represents the average precision, which is the area under the precision-recall curve. mAP50 represents the mean average precision calculated at an IoU threshold of 0.5, while mAP75 is calculated at an IoU threshold of 0.75. mAP50-95 is the primary evaluation metric, computed across 10 IoU thresholds, with a step size of 0.05.

Comparisons with state-of-the-art methods

In this section, we first compare our Xray-YOLO-Mamba model with the ATTS + Lacls and FCOS + DOAM methods, proposed by the creators of the CLCXray and OPIXray datasets, to validate its effectiveness. We then compare our method with several state-of-the-art object detection approaches across all datasets to further assess its robustness and generalization capabilities.

Table 2 presents the performance of Xray-YOLO-Mamba on the CLCXray and OPIXray datasets, compared with other state-of-the-art object detectors. our method achieves improvements of 12.2%, 14%, and 15.3% in mAP50, mAP75, and mAP50–95, respectively, compared to ATTS + Lacls. When compared to the top-performing YOLOv10-s, our method achieves improvements of 0.2%, 0.3%, and 0.7% in mAP50, mAP75, and mAP50–95, respectively. In terms of computational complexity, our method reduces the parameter count by 40.3% and the computational load by 52.3% compared to YOLOv10-s. On the OPIXray dataset, our method also exhibits excellent performance: compared to FCOS + DOAM, mAP50 increased by 8.49%; compared to the best-performing YOLOv10-s, our method shows improvements of 0.2%, 0.1%, and 0.3% in mAP50, mAP75, and mAP50–95, respectively, consistent with the results on the CLCXray dataset. In terms of computational efficiency, although our method shows a 65.4% increase in the number of parameters and a 58.5% increase in FLOPs compared to the baseline YOLOv11-n, with a decrease of 9 frames per second in inference speed, our method demonstrates significant improvements in mAP metrics. Specifically, compared to YOLOv11-n, our method improves by 2.0%, 2.1%, and 2.7% in mAP50, mAP75, and mAP50–95, respectively. Despite the slight decrease in inference speed, our method still meets real-time detection requirements with a processing speed of 95 frames per second.

The experimental results demonstrate that the proposed method achieves the best performance across all mAP metrics. Overall, the Xray-YOLO-Mamba model strikes a good balance between accuracy, computational complexity, and real-time performance, showing significant advantages.

To further validate the model’s performance, we conducted a comprehensive comparison of our proposed method with baseline classification performance on the OPIXray dataset, as shown in Table 3. Specifically, when compared to the best-performing YOLOv10-s model, our method shows a 1.2% improvement in mAP50 for the MU category, while also achieving slight improvements in most other categories. The results align with the model performance shown in Table 2.

To validate the robustness and generalization capability of the proposed model, we conducted experiments on the SIXray dataset, as shown in Table 4. Our method outperforms the best-performing lightweight YOLOv11-s model across key performance metrics, exhibiting significant enhancements of 0.6% in mAP50, 0.4% in mAP75, and 0.2% in mAP50–95. Additionally, it achieves a 54.3% reduction in parameters and a 52.1% reduction in FLOPs, demonstrating a slight accuracy advantage while significantly improving computational efficiency. These results are consistent with the model’s performance on the CLCXray and OPIXray datasets.

Ablation study

This section conducts component-wise ablation studies on the CLCXray dataset, quantifying the contributions of individual blocks in Xray-YOLO-Mamba. YOLOv11-n serves as the baseline model, achieving an mAP50–95 value of 71.9%. As shown in Table 5, the incorporation of the SS2D Block elevates the mAP50–95 from 71.9 to 73.1%, highlighting its critical role in enhancing model performance. The addition of DRes Block and IRes Block further improves performance, achieving mAP50–95 values of 73.9% and 73.6% respectively. The integration of SDConv Block and Dysample Block also makes positive contributions to performance enhancement, achieving mAP50–95 values of 73.3% and 73.6% respectively. When all blocks are used together, the model achieves its best performance, with an mAP50–95 value of 74.6%, representing a 2.7% improvement over the baseline model. These results demonstrate that integrating the SSM and its associated blocks successfully preserves more visual features, thereby significantly enhancing model performance.

Visualization

Figure 5 illustrates the detection outcomes of the Xray-YOLO-Mamba model on the CLCXray test dataset. The detection results demonstrate the model’s robust detection capabilities, as it successfully identifies all 12 categories of prohibited items even in complex scenarios involving overlapping objects and X-ray images. Figure 6 illustrates the heatmaps corresponding to the test samples. The heatmaps reveal that the model accurately localizes prohibited items and effectively extracts key features from cluttered image environments. These visualization results confirm that the model is capable of efficiently detecting prohibited items in X-ray images and exhibits strong recognition performance under practical application conditions.

Conclusion

In this study, we investigated YOLO and VMamba models and proposed a lightweight Xray-YOLO-Mamba model that integrates state space methods with the YOLO framework. This model effectively combines the efficient feature extraction capabilities of deep learning with the ability of SSMs, enabling the capture of long-range dependencies. In the proposed model, we introduced the DRes Block to overcome the limitations of local receptive fields in the Mamba architecture and the constraints of traditional MLPs, which significantly enhances feature extraction. Additionally, we proposed the IRes Block to mitigate the vanishing gradient problem and enrich contextual information by leveraging multi-scale features. To address the limitations of upsampling and downsampling in capturing fine-grained features, we introduced the Dysample Block and the SDConv Block, which improve the model’s ability to retain detailed information. Experimental results demonstrate that the proposed model achieves optimal performance across three datasets, attaining the best levels in key evaluation metrics such as mAP, Params, and FLOPs, while exhibiting robust performance characteristics and excellent generalization capabilities.

Although there are certain limitations in terms of inference speed, the model still meets the basic requirements for real-time detection, with further potential for improvement through pruning optimization techniques. Overall, the proposed model has achieved a favorable balance among precision and computational efficiency, effectively addressing the core challenges associated with the detection of prohibited items in X-ray images.

Data availability

The data that support the findings of this study are available from the corresponding author, lutianliang@ppsuc.edu.cn, upon reasonable request.

References

Schmidt-Hackenberg, L., Yousefi, M. R. & Breuel, T. M. Visual cortex inspired features for object detection in X-ray images. In 2021 21st International Conference on Pattern Recognition (ICPR) 2573–2576 (IEEE, 2012).

Mery, D., Svec, E. & Arias, M. Object recognition in baggage inspection using adaptive sparse representations of X-ray images. In Pacific-Rim Symposium on Image and Video Technology (PSIVT) 709–720 (Springer, 2016).

Mery, D. et al. Modern computer vision techniques for X-ray testing in baggage inspection. IEEE Trans. Syst. Man. Cybern Syst. 47, 682–692 (2017).

Song, H. & Zhou, Y. Simple is best: a single-CNN method for classifying remote sensing images. Netw. Heterogen. Media 18, 4 (2023).

Akçay, S., Kundegorski, M. E., Devereux, M. & Breckon, T. P. Transfer learning using convolutional neural networks for object classification within X-ray baggage security imagery. In 2016 IEEE International Conference on Image Processing (ICIP) 1057–1061 (IEEE, 2016).

Liu, Z., Li, J., Shu, Y. & Zhang, D. Detection and recognition of security detection object based on Yolo9000. In 2018 International Conference on Systems and Informatics (ICSAI) 278–282 (IEEE, 2018).

Dosovitskiy, A. et al. An image is worth 16x16 words: transformers for image recognition at scale. Int. Conf. Learn. Represent (ICLR) (2021).

Song, H. A leading but simple classification method for remote sensing images. Ann. Emerg. Technol. Comput. 7, 3 (2023).

Song, H., Wei, C. & Yong, Z. Efficient knowledge distillation for remote sensing image classification: a CNN-based approach. Int. J. Web Inf. Syst. 20, 129–158 (2024).

Song, H. et al. Quantitative regularization in robust vision transformer for remote sensing image classification. Photogramm. Rec. 39, 340–372 (2024).

Song, H. et al. QAGA-Net: enhanced vision transformer-based object detection for remote sensing images. Int. J. Intell. Comput. Cybern. 7, 3 (2024).

Song, H. et al. Efficient knowledge distillation for hybrid models: a vision transformer-convolutional neural network to convolutional neural network approach for classifying remote sensing images. IET Cyber‐Systems Rob. 6, e12120 (2024).

Alansari, M. et al. Multi-scale hierarchical contour framework for detecting cluttered threats in baggage security. IEEE Access. (2024).

Gu, A. & Dao, T. Mamba Linear-time sequence modeling with selective state spaces. arXiv:2312.00752 (2023).

Miao, C. et al. SIXray: a large-scale security inspection X-ray benchmark for prohibited item discovery in overlapping images. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2119–2128 (2019).

Liu, J., Leng, X. & Liu, Y. Deep convolutional neural network based object detector for X-ray baggage security imagery. In 2009, 31st International Conference on Tools with Artificial Intelligence (ICTAI) 1757–1761 (IEEE, 2019).

Wei, Y. et al. Occluded prohibited items detection: an X-ray security inspection benchmark and de-occlusion attention module. In 28th ACM International Conference on Multimedia 138–146 (2020).

Zhao, C., Zhu, L., Dou, S., Deng, W. & Wang, L. Detecting overlapped objects in X-ray security imagery by a label-aware mechanism. IEEE Trans. Inf. Forensics Secur. 17, 998–1009 (2022).

Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You only look once: unified, real-time object detection. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 779–788 (IEEE, 2016).

Redmon, J. & Farhadi, A. YOLOv3: an incremental improvement. arXiv:1804 (2018).

Bochkovskiy, A., Wang, C. Y. & Liao, H. Y. M. YOLOv4: optimal speed and accuracy of object detection. arXiv:2004.10934 (2020).

Wang, A. et al. YOLOv10: real-time end-to-end object detection. arXiv:2405.14458 (2024).

Khanam, R. & Hussain, M. YOLOv11: an overview of the key architectural enhancements. arXiv:2410.17725 (2024).

Liu, Y. et al. Vmamba: visual state space model. arXiv:2401.10166 (2024).

Chen, C. S. et al. Res-vmamba: fine-grained food category visual classification using selective state space models with deep residual learning. arXiv:2402.15761 (2024).

Ma, H. et al. FER-YOLO-Mamba: facial expression detection and classification based on selective state space. arXiv:2405.01828 (2024).

Liu, W. et al. Learning to upsample by learning to sample. In 2023 IEEE/CVF International Conference on Computer Vision (ICCV) 1–10 (2023).

Wang, N. et al. NAS-FCOS: fast neural architecture search for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 11943–11951 (2020).

Kim, K. & Lee, H. S. Probabilistic anchor assignment with IoU prediction for object detection. In Computer Vision–ECCV 2020: 16th European Conference 355–371 (2020).

Acknowledgements

This work was supported by the Development and Application of Intelligence Analysis System Based on Large Language Model (2024300050036), and partially by the Henan Province Science and Technology Research Project (242102320065) and the Key Research Projects of Higher Education Institutions in Henan Province (23B520022).

Author information

Authors and Affiliations

Contributions

K.Z: manuscript text, software; S.P: methodology; Y.L: data preparation; T.L: research design. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhao, K., Peng, S., Li, Y. et al. A lightweight Xray-YOLO-Mamba model for prohibited item detection in X-ray images using selective state space models. Sci Rep 15, 13171 (2025). https://doi.org/10.1038/s41598-025-96035-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-96035-1