Abstract

Expert knowledge can help fill gaps in quantitative empirical information about complex ecological phenomena. We examined the level of agreement between 21 studies that collected expert ratings of the sensitivity of species and habitats to human activities and their pressures as input data for mapping the human impact on marine ecosystems. Our analyses revealed broad agreement about which human activities and pressures many species and habitats are sensitive to. These agreements reflect a common view of the main threats to ocean ecosystems. In contrast, scores provided by individual experts varied both within and across studies. Sensitivity scores collected with the same method for different regions were often more similar than scores collected for the same region but with different methods. These results highlight how inconsistencies in the design of many expert surveys can lead to variable outcomes. It is important to employ more consistent and theoretically grounded methods and protocols when eliciting expert ratings of species’ sensitivity to pressures, to ensure compatibility across studies and maintain rigour in analyses supporting effective ocean management.

Many studies have investigated the effects of single or multiple pressures in the laboratory, in the field, or through various modeling approaches1. However, even single pressures can have diverging effects across ecological scales2. Ecosystems often respond nonlinearly to stress3,4, and there are complex interactions between pressures5,6,7,8. Furthermore, many types of interactions have been poorly studied. Given such complexity and missing information, ecological studies sometimes employ simpler methods that rely on expert knowledge to fill data gaps9,10.

The European Union’s Marine Strategy Framework Directive requires Member States to analyse human impacts from multiple pressures in marine waters under their jurisdictions11, and the Maritime Spatial Planning (MSP) Framework Directive requires this knowledge for allocating human uses at sea within MSP initiatives12. These requirements have led to improved spatial analysis of pressures and ecosystems across the European Seas13; however, modeling approaches reflecting the complexity of ecosystems under stress – such as end-to-end ecosystem models and sophisticated statistical models – are rare14 and seldom applied in real planning15. Methods addressing ecosystem dynamics are only now becoming accessible to many scientists and practitioners16,17. Therefore, simple models combining spatial data about human activities or pressures and ecosystem components (species or habitats) with ‘sensitivity weights’ remain widespread18. These weights have many names in the literature, but they all refer to species’ or habitats’ sensitivity or vulnerability to human activities and pressures, with the aim of estimating potential impacts of these activities and pressures on specific ecosystem components. Typically, they are derived from expert surveys19,20 or workshops21,22. Henceforth, we use the term ‘sensitivity scores’.

Here, we analyse the sensitivity scores collected in 21 marine studies worldwide, asking (1) which (if any) ecosystem components are consistently rated highly sensitive or insensitive to which human activities and pressures and (2) how much (if at all) experts agree within and across studies and marine regions. Coherence in sensitivity scores within and across studies and marine regions would indicate that (1) there is a common view of the main hazards to marine ecosystems and that (2) expert elicitation provides a robust basis for the spatially explicit modeling of the human footprint on marine ecosystems. Analysing sensitivity scores across marine regions shows whether there are regional differences in estimated ecosystem sensitivity.

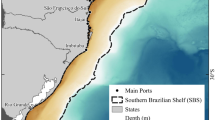

We obtained sensitivity scores from published scientific papers and supplementary materials and by request from authors of non-published reports and projects (Table 1; Fig. 1). We included only journal articles, reports, and projects (henceforth, ‘studies’) that fulfilled the following criteria: (1) the study reported sensitivity scores for marine habitats or species and multiple human activities or pressures; (2) the sensitivity scores were obtained by expert judgment; and (3) the data were available in English. We found 21 studies meeting these criteria, of which 6 reported scores for individual experts, whereas the others reported only scores averaged across experts (Table 1). One study reported individual experts’ replies but anonymization prevented the reconstruction of a full data set for each expert. We thus included only the average sensitivity scores of this study19. Most studies were from Europe, six were from other continents, and one was global (Fig. 1).

Results

Sensitivity scores across regions

Several ecosystem components were consistently rated as sensitive to specific human activities and pressures across studies (Fig. 2). To identify especially high and low sensitivity scores, we used the 10th and 90th percentiles of the scores as thresholds, which correspond to sensitivity scores ≤ 0.22 and ≥ 0.78 (Supplement Table S1). We also considered their interquartile ranges (hereafter, iqr) as a measure of variation, but did not set any definitive criterion for a low or high iqr. High sensitivity scores ≥ 0.78 occurred mostly for bottom trawling (on deep habitats and demersal fish with iqr < 0.24), climate change (on coastal habitats with iqr 0.08–0.31 and corals with iqr 0.20), extraction of species (on fish and their habitats as well as bycatch of marine mammals), hazardous substances (on birds, mammals, deep soft habitats and vent habitats with relatively high iqr 0.20–0.46), and physical damage including dredging and dumping (iqr < 0.3 only for estuaries, phytoplankton, corals and seagrass and iqr > 0.3 for many habitats). All the median and iqr values are in Table S1. The large interquartile ranges of some high median scores, e.g., physical damage to macroflora 0.64, may indicate that the experts had varying views of the sensitivity or, alternatively, different understandings of the survey question.

The lower end of the spectrum, with median values ≤ 0.22, consisted mostly of artificial combinations such as hunting impacts on underwater species, or highly indirect impacts such as pelagic fishery on coastal habitats (Fig. 2, Table S1). These low scores also reflected the fact that many human activities take place in coastal waters (e.g., recreation) and do not impact deep-sea habitats or species. However, some scores may also reflect low sensitivity to direct exposure, such as the sensitivity of sharks to offshore energy production (median 0.2, iqr 0.06) or of kelps to aquaculture (median 0.18, iqr 0.17, Table S1). The interquartile ranges for these pressures were generally small (< 0.2), suggesting good agreement between studies.

Regarding ecosystem components, high sensitivity scores occurred for several ecosystem components, although most were sensitive only to specific pressures. For example, estuaries were highly sensitive to physical damage (iqr 0), corals to climate change, physical damage and dumping (iqr 0.2–0.4) and macroflora to hydrological changes (iqr 0.06). Most studies also agreed that at least some marine mammal species are sensitive to noise and hazardous substances, and that shallow benthic habitats (both soft and hard bottoms) were sensitive to climate change, physical damage (e.g., from bottom trawling, dredging and dumping), and inputs of nutrients, organic matter, and hazardous substances. The lowest sensitivity scores occurred for deep pelagic habitats, seamounts, canyons and ridges, and invertebrates. These ecosystem components had low interquartile ranges for most pressures.

Agreement and disagreement between and within studies

We explored the similarities and dissimilarities of the scores by inspecting the similarity matrices calculated for the scores given by individual experts (Fig. 3) and the scores averaged for all experts within a study (Fig. 4). In some studies, experts agreed well (especially HOLAS I, and to a lesser extent RALAHA, ECOMAR, the ETC Mediterranean, and HOLAS II) (Fig. 3). Regarding agreement within and across geographical regions, the results were mainly inconclusive. For example, expert agreement across the various studies for the Baltic Sea was often weak to moderate. As another example, RALAHA and ECOMAR both collected scores for Danish marine waters, but the resulting scores were no more similar than scores collected for neighbouring regions. Finally, three pairs of experts stand out in Fig. 3 for their high agreement across studies (two between the ETC surveys for the Baltic Sea and the Atlantic, and one between the ETC survey for the Black Sea and the Atlantic). Each of these ‘pairs’ was a single expert who responded to the ETC survey for the two regions.

Similarity of sensitivity scores given by individual experts. Rows and columns represent experts grouped by study (and for the ETC study, by region). The cell colours below the diagonal show similarity measured as rank correlation. The cell colours above the diagonal show a similarity measure based on absolute differences. Greater numbers denote higher agreement of experts. For example, the third row and second column show the correlation between third and second expert in the ECOMAR study. The figure shows only experts with at least 30 scores that could be compared with all others.

When averaged across experts, sensitivity scores collected within the same study for different regions were more similar than scores for the same or nearby regions collected in different studies (Fig. 4). The SYMPHONY scores for different regions were the most similar (Fig. 4), and the same pattern held for the ETC scores for different regions (for example, the ETC scores for the northeast Atlantic and the Mediterranean Sea were similar, despite these being very different ecosystems). Scores collected by different studies for the same or nearby regions were sometimes, but not always, similar. For example, scores for the Baltic Sea from HOLAS I, HOLAS II, and the ETC were similar. However, the scores from HOLAS I were also similar to the ETC scores for the Mediterranean and the Atlantic. As another example, scores for Spencer Gulf, Australia19 were similar to the scores for many European regions. The scores that were the least similar to most others came from studies focusing on specific locations, e.g., Selkoe et al. for Hawaii and Gissi et al. for the Adriatic Sea.

Similarity of sensitivity scores averaged across experts for each study. Rows and columns represent studies. Cell colours below the diagonal show similarity measured as rank correlation. The cell colours above the diagonal show a similarity measure based on absolute differences. Greater numbers denote higher agreement of experts. The figure shows only studies with at least 30 scores that could be compared with all others.

Discussion

Analysing sensitivity scores in 21 studies from four continents yielded three main results. First, averaged scores across studies and regions broadly agreed on the sensitivity to many pressures. Second, regional differences did not adequately explain the variation in sensitivity scores given by individual experts or averaged within studies. Third, sensitivity scores collected in the same study for different regions (by mainly different experts) were relatively similar. In the following subsections, we discuss these key results in the context of other literature, outline the limitations of our analyses, discuss implications for models (e.g., cumulative effects assessments) and conservation, and argue that the collection of sensitivity scores must use more reliable and theoretically grounded methods.

When averaging experts’ ratings, our analyses revealed consistent sensitivity ratings of many species and habitats to human activities and pressures. The highest sensitivity scores occurred for bottom trawling, climate change, hazardous substances, physical damage to the seafloor, and for specific combinations like effects of noise on marine mammals and hydrological changes on macroflora. These results broadly reflect the scientific consensus. For example, climate change effects have been reported across marine regions and taxa39, and species extraction and physical damage from bottom trawling have well-documented impacts40,41,42. In coastal seas, hazardous substances pose a major threat to top predators43. Our results are also consistent with regional assessments. For example, according to the OSPAR Quality Status Report44, the most threatening pressures for marine mammals in the northeastern Atlantic are the extraction of species, noise, and marine litter.

The analysed studies further agreed that deep-water or offshore ecosystem components have low to moderate sensitivity to marine recreation or hydrological alterations, whose impacts occur mainly in shallow-water ecosystems. However, effects reported in the literature are mixed45. Some low sensitivity scores may reflect remaining knowledge gaps about the pressures’ effects46,47. For example, the effects of underwater noise on marine mammals and fish have been closely studied, but there are gaps for other species such as invertebrates48. Furthermore, low sensitivity scores for deep-sea habitats (e.g., seamounts, ridges, canyons) might reflect limited evidence due to the inaccessibility of these locations35.

Four main limitations of this study arise from its joint analysis of many data sets that were collected with different methods for different regions and purposes.

First, the studies asked the experts to define ecosystem sensitivity to pressure intensity with different and often unclear terms. For example, the MarLIN approach was to numerically describe pressure levels, whereas many Baltic surveys referred to a “typical pressure level” and the ETC survey referred to “a relatively high pressure intensity”. Hence, questions about sensitivity to a pressure must come with clear information about its intensity – for example, how many degrees of warming over which period of time, or which level of underwater noise at which frequencies?

Second, our results suggest – albeit not conclusively – that methodological differences were the main driver of disagreeing scores between studies. This was seen in the lack of a regional effect but a possible study effect. For example, we found some regional similarities within the same studies but not between studies. These may arise from the methodological choices made: in HOLAS I, experts provided scores after discussions at a workshop, and some experts from the same country provided a joint set of scores. In RALAHA, experts could optionally use a pre-filled template, only changing the scores with which they disagreed. Many surveys relied solely on online questionnaires (e.g., the ETC surveys). MarLIN-MarESA scores were produced in a stepwise process in which a literature review was followed by an expert group meeting, two workshops and one more expert group49. Each of these methodological choices impacts the variability of the scores. Yet, completely separating the methodological effects from other effects (e.g., specifics of the study region and composition of the expert group) was beyond the scope of this study.

Third, differences in terminology blur the interpretation of our results. For example, many studies conflate sensitivity (the degree to which an ecosystem component responds to a pressure) and vulnerability (the probability that it will be exposed to the pressure)50. As another example, we found that most studies agreed that many ecosystem components were sensitive to climate-change-related pressures, but differences in the wording of the original surveys might guide experts to consider future climate risks or current pressures (e.g., asking experts to rate ‘threats’ to marine ecosystems versus asking them to rate sensitivity to pressures at one or more specified levels). There have been many attempts to homogenize wording and concepts in cumulative effect assessments5,30, but comparability remains a problem.

Fourth, to make the data comparable across studies, we combined the species, habitats, human activities, and pressures for which they reported sensitivity scores into broad categories (like ‘climate change’ and ‘marine mammals’). This broad categorization neglects the complex, context-dependent nature of pressure effects in marine systems. For example, climate change encompasses several pressures, such as chronic warming, heat waves, acidification, and more frequent storms51. Each of these pressures has dozens of effects across species and ecological scales, from changes in individual physiology and metabolism to broad-scale effects on geographical ranges and biodiversity. Furthermore, these effects are context dependent. For example, a species might be negatively affected by global warming near the equatorward limit of its geographical range, but benefit near its poleward limit2.

Given these limitations, our results provide a broad overview of expert agreement: high scores across studies reflect experts’ widespread concerns about these issues. However, high sensitivity only implies a large risk if the ecosystem component is exposed to the pressure. For example, some impacts might be avoided by ecosystem based spatial planning5,52,53 that separates human activities and pressures from sensitive species and habitats. Conversely, low sensitivity scores across studies suggest that experts were little concerned, but do not imply that the respective human activities and pressures are harmless. Our analyses support marine spatial planning by providing a broad summary of which species and habitats would ideally be protected from specific human activities and pressures, but must be interpreted in the context of more nuanced, region-specific knowledge.

The sensitivity scores analysed were originally collected to support spatial assessments of multiple pressures in marine systems. Such assessments remain commonly based on an additive model18. This model uses sensitivity scores to link spatial data describing human activities or pressures and ecosystem components54. Using expert surveys is a main concern about this method55, yet sensitivity analyses have repeatedly shown that other sources of uncertainty are more important35,56,57,58,59. Thus, methodological flaws in collecting sensitivity scores do not invalidate this body of literature, but given the importance of methods evident in our analyses, future research must use the best methods available. Furthermore, the broad agreement of average scores across regions suggests that global scores may be reliable or scores from other regions can be an acceptable substitute if no resources for region-specific surveys are available.

Sound elicitation methods are critical for obtaining reliable and valid expert ratings9. Various structured approaches exist to make expert elicitation more reliable but remain underused. For example, the Delphi and IDEA protocols are structured approaches that address common issues, such as ‘group think’ and ambiguous questions60,61. In these approaches, researchers individually reply to questionnaires and then revise their replies based on an anonymized summary of others’ replies (possibly including a round of discussions between experts). For example, Wåhlström et al. (2022)21 applied the Delphi protocol when establishing climate change sensitivity scores for Swedish waters, which substantially reduced the variation among responses, thus generating more reliable sensitivity scores.

Despite the availability of such well-established approaches, several studies we analysed used ad-hoc approaches, and only a few studies grounded their methods in decision science, established fundamentals of survey design, or other theoretical foundations31,50. Most studies ignored well-known sources of bias62, and some asked leading questions (for example, referring to pressures as “threats”, which might prime respondents to assign higher sensitivity scores than more neutral terms). Furthermore, many methodological choices have been documented inadequately. Such flaws reflect a broader trend of natural scientists using social science methods and reviewing the resulting research without adequate training and knowledge of the literature and theoretical foundations63,64. Therefore, we urge natural scientists eliciting sensitivity scores to engage social scientists to lead surveys and workshops.

Taken together, we found broad agreement about which human activities and pressures are most severe for marine ecosystems. This result was in line with our hypothesis and strengthens the scientific understanding that climate change, hazardous substances and physical damage to benthic ecosystems from towed mobile fishing gears are threats that must be globally addressed. Our results can also help guide limited conservation resources towards protecting the most vulnerable species and habitats. While there was global agreement on some aspects of ecosystem sensitivity, our analysis also revealed severe methodological shortcomings in many published studies, which prevent robust conclusions on ecosystem sensitivity to many other pressures and limit our understanding of region-specific ecosystem sensitivity. We showed that many survey designs did not follow state-of-the-art standards and that the methods were not always adequately reported. We recommend that future studies (1) use a professional and experienced survey designer, (2) select the experts for each question separately (rather than asking one pool of experts to answer all questions, including those outside their expertise), (3) use resources to increase common understanding of the survey objectives, questions and technical issues, and (4) define the levels of human activities or pressures clearly in the survey questions (so-called pressure benchmarking)9,50,65.

Methods

Data preprocessing and reclassifying human activities, pressures, and ecosystem components

We searched the scientific literature for cumulative effect (or impact) assessments in Google Scholar and supplemented the search by asking within our own networks of colleagues to identify additional studies. This also allowed us to find project reports. We included studies that used weight coefficients to estimate impacts of an anthropogenic pressure on an ecosystem component (or ‘receptor’, see definitions in ref. 66). The coefficients were typically called ‘sensitivity weights’ or ‘vulnerability factors’, following, e.g., ref. 67. In this study we call them ‘sensitivity scores’. We converted all studies’ reported average sensitivity scores to a unified format listing the study, pressure or human activity, ecosystem component (habitat or species), and the score. For the studies where individual scores were available, we compiled the same data per expert (as opposed to the average across each study’s experts).

The studies included different ecosystem components, human activities, and pressures. In this manuscript an ‘ecosystem component’ is a habitat, species, or species group and a ‘pressure’ refers to an anthropogenic pressure, not naturally occurring stress on an ecosystem component. To compare sensitivity scores across studies, we reclassified the scores into broad categories of (1) human activity, (2) pressure, (3) habitats, (4) biogenic habitats and habitat-forming species, and (5) other species (Tables S1-S5). For example, all fish species were classified as “fish,” and all climate-related pressures like warming, acidification, and sea level rise were classified as “climate.” Some studies reported sensitivity scores for activities and the resulting pressures (e.g., “Noise from shipping”). In such situations, the sensitivity score was assigned to both the human activity and the broad pressure class, i.e., including the scores under the pressure ‘noise’ and the activity ‘shipping’.

To reduce the effects of this coarse generalization, we always picked the maximum sensitivity score within each combination of broad pressure and ecosystem component categories. For example, for a given study or expert, a high sensitivity score for the combination of species group “fish” and pressure group “climate” means that at least one rated fish species had a high score for at least one climate-related pressure.
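The maximum rule described above can be illustrated with a minimal sketch. The lookup tables, species names, and scores below are hypothetical examples, not the classification used in the study (which is given in Tables S1-S5).

```python
# Hypothetical lookup tables mapping reported items to broad categories.
PRESSURE_CLASS = {
    "warming": "climate",
    "acidification": "climate",
    "shipping noise": "noise",
}
COMPONENT_CLASS = {
    "cod": "fish",
    "herring": "fish",
    "harbour porpoise": "marine mammals",
}


def aggregate_max(records):
    """Keep the maximum score per (study, broad pressure, broad component).

    records: iterable of (study, pressure, component, score) tuples.
    """
    out = {}
    for study, pressure, component, score in records:
        key = (study, PRESSURE_CLASS[pressure], COMPONENT_CLASS[component])
        out[key] = max(score, out.get(key, score))
    return out


# Made-up scores for one study.
records = [
    ("study A", "warming", "cod", 0.4),
    ("study A", "acidification", "herring", 0.9),
    ("study A", "shipping noise", "harbour porpoise", 0.7),
]
broad = aggregate_max(records)
print(broad[("study A", "climate", "fish")])  # highest fish/climate score
```

In this example, the "fish" and "climate" combination receives the score of the single most sensitive species and pressure pair, which matches the interpretation given in the text.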

Data analysis

Our analyses focused on non-parametric data exploration and visualization because the sensitivity scores are ratings and sample sizes within groups of interest (studies and geographical regions) were small.

To explore the average sensitivity scores, we rescaled them in each study by dividing them by the study’s maximum. We then calculated the median and interquartile range across studies for each combination of (1) activity and (2) pressure versus (a) physical habitats, (b) biogenic habitats, and (c) species. We excluded combinations for which fewer than three studies reported scores. Finally, we qualitatively interpreted the plots of these medians and ranges to identify combinations with consistently high or low sensitivity scores.
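As an illustration of these steps, the following sketch rescales each study by its maximum and then summarises each combination across studies. The function names and the example data are assumptions, and the quartile interpolation (Python's "inclusive" method) is one of several common conventions; the text does not state which one was used.

```python
from collections import defaultdict
from statistics import median, quantiles


def rescale_by_study_max(scores):
    """Divide every score in a study by that study's maximum score.

    scores: {study: {(pressure, component): raw score}}
    Assumes each study has at least one positive score.
    """
    rescaled = {}
    for study, combos in scores.items():
        top = max(combos.values())
        rescaled[study] = {key: value / top for key, value in combos.items()}
    return rescaled


def summarise(rescaled, min_studies=3):
    """Median and interquartile range per combination across studies."""
    by_combo = defaultdict(list)
    for combos in rescaled.values():
        for key, value in combos.items():
            by_combo[key].append(value)
    summary = {}
    for key, values in by_combo.items():
        if len(values) < min_studies:
            continue  # combinations reported by too few studies are dropped
        q1, _, q3 = quantiles(values, n=4, method="inclusive")
        summary[key] = (median(values), q3 - q1)
    return summary


# Made-up raw scores on different scales for three hypothetical studies.
raw = {
    "A": {("climate", "fish"): 4, ("noise", "mammals"): 2},
    "B": {("climate", "fish"): 4, ("trawling", "seabed"): 5},
    "C": {("climate", "fish"): 3, ("noise", "mammals"): 3},
}
summary = summarise(rescale_by_study_max(raw))
print(summary)
```

Only the climate and fish combination is reported by three studies here, so it is the only one that survives the exclusion rule.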

We explored agreement and disagreement between average scores from pairs of studies with two measures of similarity: (1) the Kendall correlation coefficient τ of the sensitivity scores provided in each pair of studies and (2) an absolute-difference-based measure. While theoretically appropriate, rank-order-based dissimilarity measures like Kendall’s τ have two shortcomings. First, although Kendall’s τ can handle ties, the limited number of levels that sensitivity scores could take in some studies (e.g., no impact, low, medium, or high) caused an unusually large number of ties. Second, while sensitivity scores are ratings, their absolute values and differences are meaningful. For example, the scores (0.1, 0.2, 0.2) have the same rank order as (0.8, 1.0, 1.0), yet they reflect a strong disagreement about sensitivity. Therefore, for two vectors of scores (s1,1, …, s1,n) and (s2,1, …, s2,n), we calculated an alternative similarity σ abs based on the mean absolute differences between scores:

This measure intuitively captures the differences of interest but has a weaker theoretical justification than Kendall’s τ for rating-scaled data. We therefore report both measures.
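The contrast between the two measures can be reproduced with the example vectors above. The sketch below is illustrative only: it implements Kendall's τ in its tie-corrected τ-b form, and it assumes that σ abs equals one minus the mean absolute difference between scores rescaled to [0, 1]. That exact form and the function names are assumptions, not the authors' implementation.

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b, which corrects for ties in either vector."""
    concordant = discordant = ties_x = ties_y = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0:
            ties_x += 1
        if dy == 0:
            ties_y += 1
        if dx * dy > 0:
            concordant += 1
        elif dx * dy < 0:
            discordant += 1
    n_pairs = len(x) * (len(x) - 1) // 2
    denom = sqrt((n_pairs - ties_x) * (n_pairs - ties_y))
    return (concordant - discordant) / denom if denom else float("nan")


def similarity_abs(x, y):
    """ASSUMED form: 1 - mean absolute difference, for scores in [0, 1]."""
    return 1 - sum(abs(a - b) for a, b in zip(x, y)) / len(x)


a = (0.1, 0.2, 0.2)
b = (0.8, 1.0, 1.0)
tau = kendall_tau_b(a, b)
sim = similarity_abs(a, b)
print(tau, sim)
```

Under these assumptions the rank correlation is perfect, because both vectors have the same rank order, while the absolute-difference similarity is low, which is the disagreement that a rank-based measure alone would miss.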

As the studies addressed different categories of pressures and ecosystem components and included scores for only a subset of possible combinations, it was impossible to calculate pairwise similarities for all studies. Instead, we used a stepwise algorithm to identify a subset of studies such that each had at least 30 combinations in common with all others. The algorithm started with all the studies. One by one, it removed the study that could be compared with the fewest others. If this criterion yielded several candidates for removal, the resulting paths were recursively evaluated. Finally, the path yielding the most studies that could be compared was retained. The resulting subset contained 21 studies. The similarity between individual experts’ scores was calculated in the same way.
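One way to read the stepwise algorithm is sketched below. The data structure (a set of combination identifiers per study), the function names, and the toy threshold are assumptions made for illustration; the authors' code may differ, and the recursion over ties can become slow when many studies tie.

```python
def largest_comparable_subset(combos, min_shared=30):
    """Stepwise removal until all remaining studies are mutually comparable.

    combos: {study: set of (pressure, component) combinations with a score}
    Two studies are comparable if they share at least min_shared combinations.
    """

    def n_comparable(study, studies):
        return sum(
            len(combos[study] & combos[other]) >= min_shared
            for other in studies
            if other != study
        )

    def search(studies):
        counts = {s: n_comparable(s, studies) for s in studies}
        if all(c == len(studies) - 1 for c in counts.values()):
            return studies  # every study can be compared with all others
        fewest = min(counts.values())
        best = frozenset()
        # Ties for removal are explored recursively; the largest result wins.
        for candidate in (s for s, c in counts.items() if c == fewest):
            result = search(studies - {candidate})
            if len(result) > len(best):
                best = result
        return best

    return search(frozenset(combos))


# Toy example with integer ids standing in for combinations.
toy = {
    "A": {1, 2, 3},
    "B": {2, 3, 4},
    "C": {3, 4, 5},
    "D": {9},
}
subset = largest_comparable_subset(toy, min_shared=2)
print(sorted(subset))
```

In the toy example, study D shares nothing with the others and is removed first; A and C then tie for removal, and either path leaves a pair of mutually comparable studies that includes B.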

Data availability

The data on which this study is based were extracted from published papers and their Supplementary Materials or were provided by the corresponding authors on request.

References

Hodgson, E. E. & Halpern, B. S. Investigating cumulative effects across ecological scales. Conserv. Biol. 33 (1). https://doi.org/10.1111/cobi.13125 (2019).

Woodburn, E. et al. The many pathways of climate change affecting coastal ecosystems: a case study of Western Vancouver Island, Canada. FACETS https://doi.org/10.1139/facets-2024-0043 (in press).

Hunsicker, M. E. et al. Characterizing driver–response relationships in marine pelagic ecosystems for improved ocean management. Ecol. Appl., 26(3), 651–663 (2016).

Parravicini, V. et al. Understanding relationships between conflicting human uses and coastal ecosystems status: A Geospatial modeling approach. Ecol. Ind. 19, 253–263. https://doi.org/10.1016/j.ecolind.2011.07.027 (2012).

Gissi, E. et al. A review of the combined effects of climate change and other local human stressors on the marine environment. Sci. Total Environ. 755 https://doi.org/10.1016/j.scitotenv.2020.142564 (2021).

Côté, I. M., Darling, E. S. & Brown, C. J. Interactions among ecosystem stressors and their importance in conservation. Proceedings of the Royal Society B: Biological Sciences, 283(1824), Article 1824. (2016).

Crain, C. M., Kroeker, K. & Halpern, B. S. Interactive and cumulative effects of multiple human stressors in marine systems. Ecol. Lett. 11 (12), 1304–1315. https://doi.org/10.1111/j.1461-0248.2008.01253.x (2008).

Darling, E. S. & Côté, I. M. Quantifying the evidence for ecological synergies. Ecol. Lett., 11(12), 1278–1286 (2008).

Drescher, M. et al. Toward rigorous use of expert knowledge in ecological research. Ecosphere 4 (7), art83. https://doi.org/10.1890/ES12-00415.1 (2013).

Burgman, M. et al. Redefining expertise and improving ecological judgment. Conserv. Lett. 4 (2), 81–87. https://doi.org/10.1111/j.1755-263X.2011.00165.x (2011).

EC Directive 2008/56/EC of the European Parliament and of the Council of 17 June 2008 Establishing a framework for community action in the field of marine environmental policy (Marine strategy framework Directive). OJ L 164, (2008). 25.6.2008.

EC. Directive 2014/89/EU of the European Parliament and of the Council of 23 July 2014 Establishing a framework for maritime Spatial planning. OJ L. 257, 28 (2014). 8.2014.

Korpinen, S. et al. Combined effects of human pressures on Europe’ s marine ecosystems. Ambio https://doi.org/10.1007/s13280-020-01482-x (2021).

Korpinen, S. & Andersen, J. H. A global review of cumulative pressure and impact assessments in marine environments. Front. Mar. Sci. 3, 153 (2016).

Gissi, E., Fraschetti, S. & Micheli, F. Incorporating change in marine Spatial planning: A review. Environ. Sci. Policy. 92, 191–200. https://doi.org/10.1016/j.envsci.2018.12.002 (2019).

Steenbeek, J. et al. Making ecosystem modeling Operational–A novel distributed execution framework to systematically explore ecological responses to divergent climate trajectories. Earth’s Future. 12 (3). https://doi.org/10.1029/2023EF004295 (2024). e2023EF004295.

Stock, A. et al. Exploring cumulative human impacts with Ecopath, Ecosim, and ecospace: research designs, modeling techniques, and future opportunities. Sci. Total Environ. 869 (161719). https://doi.org/10.1016/j.scitotenv.2023.16171 (2023). Article 161719.

Simeoni, C. et al. Evaluating the combined effect of climate and anthropogenic stressors on marine coastal ecosystems: insights from a systematic review of cumulative impact assessment approaches. Sci. Total Environ. 861, 160687. https://doi.org/10.1016/j.scitotenv.2022.160687 (2023).

Doubleday, Z. A., Jones, A. R., Deveney, M. R., Ward, T. M. & Gillanders, B. M. Eight habitats, 38 threats and 55 experts: assessing ecological risk in a multi-use marine region. PLOS ONE. 12 (5), e0177393. https://doi.org/10.1371/journal.pone.0177393 (2017).

Halpern, B. S., Selkoe, K. A., Micheli, F. & Kappel, C. V. Evaluating and ranking the vulnerability of global marine ecosystems to anthropogenic threats. Conserv. Biol. 21 (5), 1301–1315. https://doi.org/10.1111/j.1523-1739.2007.00752.x (2007).

Wåhlström, I. et al. Projected climate change impact on a coastal sea – As significant as all current pressures combined. Glob. Change Biol. 28, 5310–5319. https://doi.org/10.1111/gcb.16312 (2022).

WIO Symphony. WIO Symphony User Manual and Demo, edition 2023-11-20. Nairobi Convention and Swedish Agency for Marine and Water Management. Nairobi. (2023). https://www.nairobiconvention.org/wio-symphony/

Selkoe, K. A., Halpern, B. S. & Toonen, R. J. Evaluating anthropogenic threats to the Northwestern Hawaiian Islands. Aquat. Conservation: Mar. Freshw. Ecosyst. 18 (7), 1149–1165. https://doi.org/10.3354/meps08345 (2008).

Korpinen, S., Meski, L., Andersen, J. H. & Laamanen, M. Human pressures and their potential impact on the Baltic Sea ecosystem. Ecol. Ind. 15 (1), 105–114. https://doi.org/10.1016/j.ecolind.2011.09.023 (2012).

Andersen, J. H. et al. Relative impacts of multiple human stressors in estuaries and coastal waters in the North Sea–Baltic Sea transition zone. Sci. Total Environ. 704, 135316. https://doi.org/10.1016/j.scitotenv.2019.135316 (2019).

HELCOM. Thematic assessment of cumulative impacts on the Baltic Sea 2011–2016. Baltic Sea Environment Proceedings No. 159. (2018). Available at: http://www.helcom.fi/baltic-sea-trends/holistic-assessments/state-of-the-baltic-sea-2018/reports-and-materials/

Andersen, J. H. & Stock, A. (eds), Mannerla, M., Heinänen, S. & Vinther, M. Human uses, pressures and impacts in the eastern North Sea. Aarhus University, DCE – Danish Centre for Environment and Energy. 136 pp. Technical Report from DCE – Danish Centre for Environment and Energy No. 18. (2013). http://www.dmu.dk/Pub/TR18.pdf

Hammar, L. et al. Cumulative impact assessment for ecosystem-based marine spatial planning. Sci. Total Environ. 734, 139024. https://doi.org/10.1016/j.scitotenv.2020.139024 (2020).

Tillin, H. & Tyler-Walters, H. Assessing the sensitivity of subtidal sedimentary habitats to pressures associated with marine activities. Phase 1 Report: Rationale and proposed ecological groupings for Level 5 biotopes against which sensitivity assessments would be best undertaken. JNCC Report No. 512A (2013).

Stelzenmüller, V., Lee, J., South, A. & Rogers, S. I. Quantifying cumulative impacts of human pressures on the marine environment: a geospatial modelling framework. Ecol. Appl. 20 (5), 1402–1416. https://doi.org/10.1890/09-1173.1 (2010).

Teck, S. J. et al. Using expert judgment to estimate marine ecosystem vulnerability in the California Current. Mar. Ecol. Prog. Ser. 398, 19–32. https://doi.org/10.3354/meps08345 (2010).

MacDiarmid, A. et al. Assessment of anthropogenic threats to New Zealand marine habitats. N. Z. Aquat. Environ. Biodivers. Rep. 93, 1–255 (2012).

Kappel, C. V. & Halpern, B. S. Mapping cumulative impacts of human activities on marine ecosystems. Coastal. Mar. Spat. Plann. https://doi.org/10.31230/osf.io/6exng (2012). 3.

La Rivière, M. et al. Assessing benthic habitats’ sensitivity to human pressures: a methodological framework. Nat. Herit. Service. 87. https://doi.org/10.13140/RG.2.2.19763.22565 (2016).

Gissi, E. et al. Addressing uncertainty in modelling cumulative impacts within maritime spatial planning in the Adriatic and Ionian region. PLOS ONE. 12 (7) (2017).

Kenny, A. J. et al. Assessing cumulative human activities, pressures, and impacts on North Sea benthic habitats using a biological traits approach. ICES J. Mar. Sci. 75 (3), 1080–1092. https://doi.org/10.1093/icesjms/fsx205 (2018).

Willaert, T., Garcia-Alegre, A., Queiroga, H., Cunha-e-Sá, M. & Lillebø, A. I. Measuring vulnerability of marine and coastal habitats’ potential to deliver ecosystem services: complex Atlantic region as case study. Front. Mar. Sci. 6. https://doi.org/10.3389/fmars.2019.00199 (2019).

Andersen, J. H. et al. Are European blue economy ambitions in conflict with European environmental visions? Ambio 52, 1981–1991. https://doi.org/10.1007/s13280-023-01896-3 (2023).

Poloczanska, E. S. et al. Global imprint of climate change on marine life. Nat. Clim. Change. 3 (10). https://doi.org/10.1038/nclimate1958 (2013).

Coll, M., Libralato, S., Tudela, S., Palomera, I. & Pranovi, F. Ecosystem overfishing in the ocean. PLOS ONE. 3 (12), e3881. https://doi.org/10.1371/journal.pone.0003881 (2008).

Jackson, J. B. C. et al. Historical overfishing and the recent collapse of coastal ecosystems. Science 293 (5530) (2001).

Pusceddu, A. et al. Chronic and intensive bottom trawling impairs deep-sea biodiversity and ecosystem functioning. Proc. Natl. Acad. Sci. 111 (24), 8861–8866. https://doi.org/10.1073/pnas.1405454111 (2014).

Alygizakis, N. Network analysis to reveal the most commonly detected compounds in predator-prey pairs in freshwater and marine mammals and fish in Europe. Sci. Total Environ. 950, 175303. https://doi.org/10.1016/j.scitotenv.2024.175303 (2024).

OSPAR. Marine Mammals Thematic Assessment, (2024). https://oap.ospar.org/en/ospar-assessments/quality-status-reports/qsr-2023/thematic-assessments/marine-mammals/pressures/

Martínez, M. L. et al. A systemic view of potential environmental impacts of ocean energy production. Renew. Sustain. Energy Rev. 149, 111332. https://doi.org/10.1016/j.rser.2021.111332 (2021).

Vargas-Fonseca, O. A., Frazier, M., Lombard, A. T. & Halpern, B. S. Knowns and unknowns in future human pressures on the ocean. Earth’s Future. 12 (9). https://doi.org/10.1029/2024EF004559 (2024).

Kraan, C. et al. Thresholds of seascape fauna composition along gradients of human pressures and natural conditions to inform marine spatial planning. Sci. Total Environ. 914, 169940. https://doi.org/10.1016/j.scitotenv.2024.169940 (2024).

Merchant, N. D. et al. A decade of underwater noise research in support of the European Marine Strategy Framework Directive. Ocean Coast. Manag. 228, 106299. https://doi.org/10.1016/j.ocecoaman.2022.106299 (2022).

Tillin, H. M., Hull, S. C. & Tyler-Walters, H. Development of a Sensitivity Matrix (pressures-MCZ/MPA features). Report to the Department of Environment, Food and Rural Affairs from ABPmer, Southampton and the Marine Life Information Network (MarLIN) Plymouth: Marine Biological Association of the UK. Defra Contract No. MB0102 Task 3A, Report No. 22. (2010).

Zacharias, M. A. & Gregr, E. J. Sensitivity and vulnerability in marine environments: an approach to identifying vulnerable marine areas. Conserv. Biol. 19 (1), 86–97. https://doi.org/10.1111/j.1523-1739.2005.00148.x (2005).

Piet, G., Grundlehner, A., Jongbloed, R., Tamis, J. & de Vries, P. SCAIRM: A spatial cumulative assessment of impact risk for management. Ecol. Ind. 157 (15), 111157. https://doi.org/10.1016/j.ecolind.2023.111157 (2023).

Frazão Santos, C. et al. Integrating climate change in ocean planning. Nat. Sustain. 3 (7), 505–516. https://doi.org/10.1038/s41893-020-0513-x (2020).

Galparsoro et al. (Under revision).

Halpern, B. S. et al. A global map of human impact on marine ecosystems. Science 319 (5865). https://doi.org/10.1126/science.1149345 (2008).

Halpern, B. S. & Fujita, R. Assumptions, challenges, and future directions in cumulative impact analysis. Ecosphere 4 (10). https://doi.org/10.1890/ES13-00181.1 (2013).

Jones, A. R. et al. Capturing expert uncertainty in spatial cumulative impact assessments. Sci. Rep. 8 (1). https://doi.org/10.1038/s41598-018-19354-6 (2018).

Murphy, G. E. P., Stock, A. & Kelly, N. E. From land to deep sea: A continuum of cumulative human impacts on marine habitats in Atlantic Canada. Ecosphere 15 (9), e4964. https://doi.org/10.1002/ecs2.4964 (2024).

Stock, A., Crowder, L. B., Halpern, B. S. & Micheli, F. Uncertainty analysis and robust areas of high and low modeled human impact on the global oceans. Conserv. Biol. 32 (6). https://doi.org/10.1111/cobi.13141 (2018).

Stock, A. & Micheli, F. Effects of model assumptions and data quality on spatial cumulative human impact assessments. Glob. Ecol. Biogeogr. 25 (11). https://doi.org/10.1111/geb.12493 (2016).

Hemming, V., Burgman, M., Hanea, A., McBride, M. & Wintle, B. A practical guide to structured expert elicitation using the IDEA protocol. Methods Ecol. Evol. 9. https://doi.org/10.1111/2041-210x.12857 (2017).

Mukherjee, N. et al. The Delphi technique in ecology and biological conservation: applications and guidelines. Methods Ecol. Evol. 6 (9), 1097–1109. https://doi.org/10.1111/2041-210X.12387 (2015).

Choi, B. C. K. & Pak, A. W. P. A catalog of biases in questionnaires. Prev. Chronic Dis. 2 (1), A13 (2004).

Castillo, A., Bullen-Aguiar, A. A., Peña-Mondragón, J. L. & Gutiérrez-Serrano, N. G. The social component of social-ecological research: moving from the periphery to the center. Ecol. Soc. 25 (1), art6. https://doi.org/10.5751/ES-11345-250106 (2020).

Martin, V. Y. Four common problems in environmental social research undertaken by natural scientists. BioScience 70 (1), 13–16. https://doi.org/10.1093/biosci/biz128 (2020).

Knol, A. B., Slottje, P., van der Sluijs, J. P. & Lebret, E. The use of expert elicitation in environmental health impact assessment: a seven step procedure. Environ. Health. 9, 19. https://doi.org/10.1186/1476-069X-9-19 (2010).

Judd, A. D., Backhaus, T. & Goodsir, F. An effective set of principles for practical implementation of marine cumulative effects assessment. Environ. Sci. Policy. 54, 254–262. https://doi.org/10.1016/j.envsci.2015.07.008 (2015).

Halpern, B. S., Selkoe, K. A., Micheli, F. & Kappel, C. V. Evaluating and ranking the vulnerability of global marine ecosystems to anthropogenic threats. Conserv. Biol. 21 (5), 1301–1315. https://doi.org/10.1111/j.1523-1739.2007.00752.x (2007).

Acknowledgements

This study is the outcome of the work of a Synthesis Working Group at the Aquatic Synthesis Research Centre (AquaSYNC). JHA, CM, JAC, NK, SK, VS, and AS were partly supported by the Horizon Europe project GES4SEAS (Grant Agreement No. 101059877). We thank Emilie Kallenbach, Therese Harvey, Monika Peterlin and Johnny Reker, as well as the corresponding authors of the original studies of ecosystem sensitivity, for their kind help.

Author information

Contributions

Conceived and designed the analysis: J.H.A., S.K. Collected the data: S.K., C.M., J.H.A., L.H., N.K. Contributed data or analysis tools: S.K., A.S., C.M., L.H., N.K., E.G., V.S., B.S.H. Performed the analysis: A.S., N.K. Wrote the first draft: N.K., J.H.A., A.S., S.K. All authors contributed to editing and revising the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kallio, N., Andersen, J.H., Carstensen, J. et al. Challenges in expert ratings of marine habitat and species sensitivity to anthropogenic pressures. Sci Rep 15, 12546 (2025). https://doi.org/10.1038/s41598-025-96913-8

DOI: https://doi.org/10.1038/s41598-025-96913-8