Abstract

With the rapid development of digital communications, the secure storage of sensitive digital data, especially images, remains a significant challenge. Most existing hash algorithms cannot simultaneously meet the requirements of security, efficiency, and adaptability in image-based cryptographic applications. We address this gap by proposing a new hybrid hash algorithm that integrates three cryptographic concepts: Cellular Automata (CA), sponge functions, and Elliptic Curve Cryptography (ECC). We exploit the fact that CA are naturally unpredictable and have high entropy to improve the diffusion properties of the hash, which makes it less vulnerable to differential cryptanalysis. The sponge construction allows variable-length input to be handled with fixed-length output, ensuring scalability across diverse image data sizes. Finally, elliptic curve operations add further nonlinearity and collision resistance, strengthening the scheme against preimage and collision attacks. The novelty of this work lies in the synergy between the chaotic behaviour of CA, the flexibility of sponge functions, and the lightweight but strong security of ECC, resulting in a hash algorithm that is both secure and efficient. This hybrid design is especially suitable for lightweight cryptographic scenarios where traditional methods fall short. The proposed scheme provides a viable platform for secure image transactions and shows promise in resisting advanced cryptanalytic attacks.


Introduction

Cryptography is a fascinating area that incorporates elements of history, mathematics, creativity, and science. Encryption has been employed for centuries to safeguard secrecy, by merchants protecting their physical and intellectual assets and by military commanders communicating with their troops. Cryptography is the practice of concealing the meaning of data or messages and subsequently recovering that meaning. Cryptology is a formal science that focuses on the study and application of mathematics, linguistics, and other sciences to comprehend and create cryptographic systems. These systems consist of integrated elements, components, and processes that are designed to carry out specific cryptographic functions or operations. In today’s world, encryption methods are essential to safeguard communication, protect information, and uphold privacy. Cryptographic hash functions are an important concept in this field, as they enable data integrity verification, support digital signatures, and form the basis for more advanced cryptographic protocols. These functions are one-way functions that take any amount of input data and generate a fixed-size hash value that acts as a representation of the data. The security of these hash functions depends on their ability to resist collisions, pre-image attacks and second pre-image attacks1. Long before modern cryptography emerged, computer science used the concept of hashing to retrieve data efficiently using hash tables. The focus began to shift towards cryptographic hash functions in the 1970s, as the need for integrity-protected, secure communications emerged; Diffie and Hellman2 discuss how the theories of communication and computation were beginning to offer the means to resolve longstanding cryptographic difficulties.
In 1975, the National Bureau of Standards, now called the National Institute of Standards and Technology (NIST), published the Data Encryption Standard (DES), which indirectly influenced hash function development. Ralph Merkle and Ivan Damgård3,4 later independently proposed what is now known as the Merkle–Damgård construction, a cornerstone in building secure hash functions.

These days, the majority of applications require hash functions that are lightweight and suitable for use in contexts with limited resources. However, the lightweight nature of a hash algorithm must not compromise its security, so the design involves a trade-off between efficiency and protection. To achieve this, one can combine cryptographic primitives such as CA, ECC, and sponge functions when designing a collision-resistant hash function. This study presents a novel hash function that utilises ECC, CA, and a sponge function. The contributions of this paper are:

Novel hash function development

A new cryptographic hash function is proposed that incorporates these three advanced cryptographic primitives: ECC, CA, and sponge functions.

Lightweight and secure design

The proposed hash function is lightweight in terms of the operations performed, yet it remains robust, resisting collision, pre-image, and second pre-image attacks.

Using cryptographic principles together

The suggested hash function combines ECC, CA, and sponge functions so as to exploit the strengths of each in both speed and security.

Focus on contemporary needs

The new approach will meet the demands of contemporary cryptography by providing lightweight and adaptable hash functions without compromising security, catering to the wide range of applications in today’s and future technological environments.

Preliminaries and related works

The following section provides an introduction to the above-mentioned concepts.

Cryptographic hash functions

A hash function is a mathematical function or algorithm that transforms a variable-length message into a fixed-length string, known as a hash value or hash. The first widely adopted cryptographic hash function was MD4, designed by Rivest5 in 1990. MD4 introduced the basic structure for secure hash functions but was very soon found to have vulnerabilities. Rivest6 followed up with MD5 in 1992, which became very popular despite later proofs of insecurity. The Secure Hash Algorithm (SHA)7 is a set of cryptographic hash functions developed by the United States government from the early 1990s. SHA-0 was the initial hash standard. Due to its significant inherent weaknesses, it was promptly withdrawn and replaced with SHA-1 in 1995. SHA-1 uses a 512-bit block size and generates a 160-bit message digest using 80 rounds of operation. SHA-1 lacks sufficient resilience against attacks such as the birthday attack and is no longer regarded as secure. In 2001, the SHA-2 family of hash algorithms was developed and introduced as a replacement. A specific SHA-2 variant is commonly named after the size of the resulting message digest. The message digests in SHA-2 can have lengths of 224, 256, 384, and 512 bits. SHA-224 and SHA-256 have a block length of 512 bits, whereas SHA-384 and SHA-512 have a block length of 1024 bits. NIST announced the Secure Hash Algorithm 3 (SHA-3) on August 5, 2015. It is a subset of the Keccak cryptographic family, which was created by Guido Bertoni, Joan Daemen, Michael Peeters, and Gilles Van Assche, and it is often referred to simply as Keccak. Although it is part of the SHA standard, it differs significantly in its internal structure from earlier versions of SHA. SHA-3 employs the sponge construction8, which relies on a wide random function or permutation.
The sponge construction enables the algorithm to absorb any quantity of input data and to squeeze out any quantity of output, providing more flexibility than previous SHA methods. SHA-3 is not intended to replace SHA-2; rather, it can be directly substituted for it where needed. At present, there are no plans to remove SHA-2 from the standard.
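The block and digest lengths quoted above can be checked against any standard implementation. The following is a minimal sketch using Python's standard `hashlib` module; the helper name `sizes` is an illustrative choice and is not part of the proposed scheme.

```python
import hashlib


def sizes(name: str) -> tuple[int, int]:
    """Return (block length, digest length) in bits for a hashlib algorithm."""
    h = hashlib.new(name)
    return h.block_size * 8, h.digest_size * 8


# Whatever the input length, each algorithm produces a digest of fixed length.
for name in ("sha1", "sha224", "sha256", "sha384", "sha512", "sha3_256"):
    block_bits, digest_bits = sizes(name)
    print(f"{name:9s} block = {block_bits:4d} bits, digest = {digest_bits:3d} bits")
```

For the SHA-3 functions, the reported block length corresponds to the sponge rate rather than to a Merkle–Damgård block.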

Elliptic curve cryptography

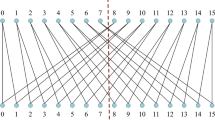

ECC, a type of public-key cryptography, is built on the algebraic structure of elliptic curves over finite fields, defined by an equation of the form \(y^2 = x^3+cx+d\). ECC offers the same security level as traditional cryptographic systems such as RSA but with much smaller key sizes, which is particularly valuable where computational resources are minimal9,10,11. Many cryptographic applications, such as key exchange protocols, digital signatures, and encryption methods, have widely adopted ECC because of its efficiency and strength. Adding ECC to a cryptographic hash function adds a further level of security. A graphical representation of an elliptic curve is shown in Fig. 1. The security of ECC is based on the difficulty of the Elliptic Curve Discrete Logarithm Problem (ECDLP): given an elliptic curve, a point P on the curve, and the point \(Q = KP\), it is hard to find the scalar K. For curves over large prime fields, it is not computationally feasible to recover K from P and Q using the best technology available today. The ECDLP is considered harder than the integer factorization problem that underlies RSA; thus, ECC achieves equivalent security with much smaller keys12. Several algorithms have been designed to improve the performance of ECC operations. Among these operations, scalar multiplication is the essential computation in ECC. The double-and-add algorithm is one of the basic approaches and is similar to binary exponentiation in RSA. Another important algorithm, which provides resistance to side-channel attacks, is the Montgomery ladder, which keeps the time and power consumption of scalar multiplication independent of the value of the scalar13.

For image encryption using ECC, the pixel values of an image can be mapped to coordinates on an elliptic curve. The resulting points are then encrypted with the public key to produce ciphertext, which should provide confidentiality and integrity. The idea behind this approach is to encode the image contents into points on an elliptic curve and then encrypt the points to yield an encrypted image14. ECC also plays an important role in internet security, where it is used in the SSL/TLS protocols that form the backbone of secure web traffic. In blockchains and cryptocurrencies, ECC is critical for generating and validating cryptographic keys, as implemented in Bitcoin and other cryptocurrencies15. Additionally, Hankerson and Menezes16 discuss the sliding window and fixed window methods, which further optimise scalar multiplication by pre-computing several points to reduce the number of operations required. Murugeshwari et al.17 address individual data privacy concerns in privacy-preserving data mining by using elliptic curve cryptography in multi-cloud environments. Arunkumar et al.18 proposed a design methodology that integrates logistic regression machine learning with ECC, termed LRECC, to develop a secure Internet of Things architecture for wireless sensor networks (WSN). Krishnamoorthy et al.19 discuss methods for enhancing data security in cloud computing using elliptic curve cryptography, aiming to manage and safeguard sensitive information shared in the cloud without compromising safety or integrity. Adeniyi et al.20 discuss how ECC is highly efficient at protecting resource-limited Internet of Things (IoT) devices, offering robust security with smaller key sizes than conventional cryptosystems.
However, with the rise of quantum computing and the computational cost of scalar operations, researchers are now exploring the integration of CA to complement ECC, aiming to boost performance and randomness in key operations. The mathematical operations of ECC are shown in Figs. 2, 3 and 4.

Sponge structures

Reference21 introduced the sponge function as a cryptographic primitive in 2007. A sponge construction processes data with a fixed permutation or transformation function in two stages: absorb and squeeze. In the absorbing phase, each block of incoming data is XORed into part of the internal state and the permutation function is applied, until all incoming data has been absorbed. The squeezing phase iteratively applies the transformation to the internal state, extracting the output from a portion of it. The two essential parameters of a sponge function are the bit rate r, the amount of input absorbed or output squeezed in every iteration, and the capacity c, which is related to the security level of the construction. The sponge provides a plug-and-play platform on which to build a wide variety of cryptographic functions, from simple cryptographic hash functions and stream ciphers to Message Authentication Codes (MACs). The SHA-3 cryptographic hash function, based on Keccak, which won the NIST hash function competition in 2012, is the most prominent application of the sponge construction to date.

The key characteristic of sponge functions is that they take input of arbitrary length and produce output of arbitrary length, making them highly versatile across a wide variety of cryptographic applications22. The most well-known example is SHA-3, based on the Keccak sponge function. SHA-3 has some advantages compared with traditional hash functions like SHA-2: it is resistant to length extension attacks, and its design is straightforward and well-understood23. Apart from hash functions, sponge constructions also form the basis for authenticated encryption schemes such as Keyak and Ketje, which were submitted to the CAESAR competition for authenticated encryption schemes. Sponge functions are also used to generate pseudo-random bit sequences and to construct MACs with provable security properties under certain conditions24.
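The arbitrary-length output of a sponge can be observed with the SHAKE extendable-output functions, which are built on the Keccak sponge and ship with Python's standard `hashlib`. The sketch below is only an illustration of squeezing; the helper name `squeeze` is an assumption for this example and the proposed hash does not use SHAKE.

```python
import hashlib


def squeeze(message: bytes, n_bytes: int) -> bytes:
    """Absorb `message` into SHAKE128 and squeeze out `n_bytes` of output."""
    return hashlib.shake_128(message).digest(n_bytes)


short = squeeze(b"pixel data", 16)
long_ = squeeze(b"pixel data", 64)

# Squeezing more output only extends the stream; the earlier bytes are unchanged.
print(short.hex())
print(long_[:16].hex())
```

By contrast, `hashlib.sha3_256` stops squeezing after 32 bytes, which is the fixed-length behaviour that a hash function needs.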

Cellular automata in cryptography

CA are mathematical models consisting of a grid of cells, each of which takes one of finitely many states. The state of each cell in the grid evolves according to a set of rules that depend on the states of neighbouring cells. CA therefore have a high degree of parallelism and can exhibit complex behaviour from simple rules, which has led to many applications in cryptography25. The most well-known example of a CA is Conway’s Game of Life, devised by the British mathematician John Horton Conway in 197026. This CA consists of a two-dimensional grid in which every cell is either alive or dead. Reference27 studied Elementary Cellular Automata (ECA), which are 1D, 2-state, 3-neighbourhood CA. CA can be divided into several types based on their dimensionality, the nature of the cell states, and the rules governing their evolution. For example, a one-dimensional CA is a line of cells where each cell’s state depends on that of its immediate neighbours. A two-dimensional CA, such as the Game of Life, is a grid of cells where each cell depends on its immediate neighbours in both dimensions. CA can be extended to higher dimensions, but because of the added complexity these have received less research. Periodic and closed boundary conditions are the most commonly used in CA. Due to their inherent simplicity, parallelism, and capacity to produce complex, unpredictable behaviour from simple rules, researchers have explored CA as a basis for designing cryptographic hash functions. Wolfram’s 3-neighbourhood CA has been used in many cryptosystems because of its pseudo-random number generation (PRNG) capability. The idea of using CA in this way, however, was first proposed in Ref.4. It has since been widely used in cryptographic hash function applications28,29,30,31. While ECC and CA have each been researched in depth separately, the incorporation of CA into ECC frameworks is underdeveloped and fragmented in the literature.
Some researchers have suggested using CA to enhance key generation, improve randomness, or serve as control logic in scalar multiplication. However, a systematic approach that combines both fields for enhanced encryption, particularly in image security and IoT applications, remains a challenge. This gap creates an opportunity for hybrid cryptographic models that integrate the lightweight, parallel nature of CA with the mathematically grounded security of ECC. This work aims to bridge the gap by presenting a new model that integrates ECC and CA, examining its performance, and designing it as a future-proof cryptographic solution.
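To make the notion of a 1D, 2-state, 3-neighbourhood CA concrete, the following sketch evolves an elementary CA under periodic boundary conditions. Wolfram's rule 30 is used purely as a familiar example; the function name `eca_step` and the choice of rule are assumptions for illustration and are not taken from the proposed design.

```python
def eca_step(cells: list[int], rule: int = 30) -> list[int]:
    """Apply one step of an elementary CA with periodic boundaries.

    The 3-cell neighbourhood (left, centre, right) is read as a 3-bit
    index into the 8-bit rule number, following Wolfram's numbering.
    """
    n = len(cells)
    nxt = []
    for i in range(n):
        left, centre, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right
        nxt.append((rule >> index) & 1)
    return nxt


row = [0, 0, 0, 1, 0, 0, 0]
for _ in range(3):
    print("".join("#" if c else "." for c in row))
    row = eca_step(row)
```

Every cell is updated from the previous generation only, so all cells can be computed in parallel, which is the property that makes CA attractive for lightweight hardware.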

Proposed approach

In this section, we discuss the detailed methodologies used in the proposed hash algorithm, which is based on the combination of CA and sponge functions. We divide the methodology into subsections, each covering a crucial aspect of algorithm design and implementation: message padding and initialisation, the absorbing phase, state evolution via CA, permutation operations, and the squeezing phase.

Sponge construction: message padding

First, the input message M has to be padded to a multiple of the bit rate r before processing. The padded message \(M'\) is divided into blocks \(M_1, M_2, \ldots , M_n\), each of length r bits. Padding is performed by appending a ‘1’ bit, followed by a sequence of ‘0’ bits to complete the block, and ending with an additional ‘1’ bit that marks the end of the block. If the input message is an image, the image is first converted into a one-dimensional bitstream. For an \(m\times n\) grayscale image, each pixel value is 8 bits; for a colour image, the pixel values of the three channels are concatenated, giving 24 bits per pixel. The image data, held as a matrix of size \(m \times n\), is flattened and reshaped into a sequence of data (pixel bits):

The block diagram for the sponge function is shown in Fig. 5. We now define the internal state S, of total length \(b = r + c = 256 + 512 = 768\) bits, where r is the bit rate and c is the capacity, which govern the efficiency and security of the algorithm, respectively32. The initial state is

Absorbing phase

In the absorption phase, the padded input is XORed into the rate portion of the state block by block (\(M_1, M_2, \ldots , M_n\)). The blocks are absorbed as follows:

where \(M_i\) is the i-th block of the input message, which is XORed with the first r bits of the current state \(S_i\); the permutation and transformation of the internal function are then applied to obtain the next internal state \(S_{i+1}\).
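The padding and absorbing steps described above can be sketched as follows, with \(r = 256\) and \(c = 512\) as stated. Several details are assumptions made only for this illustration: the initial state is taken to be all zeros, the "first r bits" are taken to be the most significant bits of the state, and `placeholder_f` is a trivial stand-in for the internal function, not the permutation and CA transformation of the proposed scheme.

```python
RATE_BITS = 256
CAPACITY_BITS = 512
STATE_BITS = RATE_BITS + CAPACITY_BITS  # b = r + c = 768


def pad10star1(message: bytes, rate_bits: int = RATE_BITS) -> str:
    """Append '1', then zeros, then '1' so the length is a multiple of r."""
    bits = "".join(f"{byte:08b}" for byte in message)
    zeros = (-(len(bits) + 2)) % rate_bits
    return bits + "1" + "0" * zeros + "1"


def placeholder_f(state: int) -> int:
    """Stand-in internal function (assumption): rotate left by 1, XOR a constant."""
    mask = (1 << STATE_BITS) - 1
    rotated = ((state << 1) | (state >> (STATE_BITS - 1))) & mask
    return rotated ^ 0x5A


def absorb(message: bytes, f=placeholder_f) -> int:
    """XOR each r-bit block into the rate part of the state, then apply f."""
    padded = pad10star1(message)
    state = 0  # assumption: all-zero initial state
    for start in range(0, len(padded), RATE_BITS):
        block = int(padded[start:start + RATE_BITS], 2)
        state ^= block << CAPACITY_BITS  # the rate is the top r bits here
        state = f(state)
    return state


print(len(pad10star1(b"abc")), hex(absorb(b"abc"))[:18])
```

The capacity bits are never touched directly by the message, which is what ties the security level of a sponge to c.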

Permutation

Our permutation process is based on the CROSS permutation33. The CROSS permutation swaps or re-arranges elements across the internal state matrix to maximise diffusion and confusion. First, the 256-bit state, viewed as a 2D matrix, is divided into 16 sub-blocks (4 \(\times\) 4). After dividing it into sub-blocks, we define three matrix-based swapping operations: diagonal swapping, anti-diagonal swapping, and cyclic shifting. In each round we apply a different swapping technique to increase the randomness and diffusion of the input blocks. The internal state undergoes 15 rounds of permutation, performed iteratively. The choice of 15 rounds is justified by the main goal of the CROSS permutation, which is to provide high diffusion across the state. The number of rounds was chosen according to the cryptographic principle that even a small change in the input should cause large changes throughout the state after the permutation rounds. Most lightweight cryptographic designs use between 10 and 20 rounds to provide enough diffusion; for example, AES uses 10 rounds for 128-bit keys. Based on this common practice, 15 rounds achieve a trade-off between diffusion and computational efficiency. We also carried out randomness tests using the NIST SP 800-22 statistical test suite on the outputs while varying the number of permutation rounds (5, 10, 15, and 20). The tests indicate that randomness metrics, such as uniformity and autocorrelation, stabilise considerably by the 15th round, after which the improvements from further rounds are hardly noticeable. At 10 rounds, diffusion was inadequate in a few test cases, as the randomness metrics indicated a slight bias. At 20 rounds, the computational overhead is 30% higher than at 15 rounds, with no evident improvement in the statistical security metrics.
At 15 rounds, the design maintained an excellent trade-off in terms of computational efficiency while passing all tests of randomness and diffusion.
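One possible reading of the sub-block swapping is sketched below on a 4 \(\times\) 4 grid of sub-block labels. The exact definitions of the three operations are not given in the text, so the interpretations here (transposition about the main diagonal, transposition about the anti-diagonal, and a row-wise cyclic shift, applied in rotation over 15 rounds) are assumptions for illustration only, as are all function names.

```python
ROUNDS = 15
SIZE = 4  # 4 x 4 grid of sub-blocks


def diagonal_swap(grid):
    """Swap sub-blocks across the main diagonal (a transpose)."""
    return [[grid[j][i] for j in range(SIZE)] for i in range(SIZE)]


def anti_diagonal_swap(grid):
    """Swap sub-blocks across the anti-diagonal."""
    return [[grid[SIZE - 1 - j][SIZE - 1 - i] for j in range(SIZE)]
            for i in range(SIZE)]


def cyclic_shift(grid):
    """Rotate row i to the left by i positions."""
    return [row[i:] + row[:i] for i, row in enumerate(grid)]


def cross_like_permutation(grid, rounds=ROUNDS):
    """Apply the three operations in rotation, one per round."""
    steps = (diagonal_swap, anti_diagonal_swap, cyclic_shift)
    for r in range(rounds):
        grid = steps[r % len(steps)](grid)
    return grid


# Label the 16 sub-blocks 0..15 so their movement is easy to follow.
blocks = [[SIZE * i + j for j in range(SIZE)] for i in range(SIZE)]
for row in cross_like_permutation(blocks):
    print(row)
```

Because each operation only moves sub-blocks, the step is a bijection on the state; the non-linearity of the overall round has to come from the CA transformation that follows it.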

CA based transformation

The internal function transformation is carried out by a two-dimensional cellular automaton (2DCA) to achieve a high level of diffusion and non-linearity. The state is treated as a block of size \(m \times n\), to which the CA transformation is applied. This CA-based scrambling is applied iteratively to the entire state for several steps, so that the message is dispersed throughout the state and the state approaches maximal entropy. The internal state undergoes 15 rounds of iterative transformations. Each round consists of a permutation operation followed by the CA transformation, which mixes the data across the full state. This keeps the state highly sensitive to input changes and provides significant resistance to various types of cryptanalysis, including differential and linear cryptanalysis. How cellular automata contribute to diffusion in comparison with traditional techniques such as Feistel networks is shown in Table 1.
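The way a 2D CA spreads a local change across the state can be illustrated with a very simple rule. The rule used below (each cell becomes the XOR of itself and its four von Neumann neighbours, with periodic boundaries) is an assumption chosen for brevity; it is linear, so it shows diffusion only and is not the non-linear rule of the proposed scheme, which is not specified in this section.

```python
def ca2d_step(grid: list[list[int]]) -> list[list[int]]:
    """One step of a 2D CA: XOR of a cell and its 4 neighbours (periodic)."""
    rows, cols = len(grid), len(grid[0])
    new = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            new[i][j] = (
                grid[i][j]
                ^ grid[(i - 1) % rows][j]
                ^ grid[(i + 1) % rows][j]
                ^ grid[i][(j - 1) % cols]
                ^ grid[i][(j + 1) % cols]
            )
    return new


# A single set bit in the middle of a 5 x 5 state.
state = [[0] * 5 for _ in range(5)]
state[2][2] = 1

after_one = ca2d_step(state)
for row in after_one:
    print("".join(str(bit) for bit in row))
```

After one step the single bit has influenced five cells, and repeated steps carry that influence to the rest of the grid.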

Squeezing phase

After the absorption phase, the function enters the squeezing phase, in which the hash output is drawn from the state. Each time output is drawn, the state is first permuted and the CA transformation is applied iteratively; each squeezed output block therefore reflects the complex mixing of the internal state accumulated up to the final absorbed block. Since the required hash output length is 256 bits, which equals the rate, 256 bits are extracted from the rate portion of the state, and output finalisation yields the required 256-bit output.

ECC implementation

The ECC system has much stronger security compared to classical systems, such as RSA, for an equivalent key length. A 256-bit key in ECC gives similar security to a 3072-bit key in RSA. As a result, ECC achieves much better efficiency in terms of performance and resource usage, which is quite desirable in applications dealing with constrained computational capabilities. This paper leverages the strength of the ECC to boost security by incorporating scalar multiplication over elliptic curves into the proposed hash function. In our approach, we select the elliptic curve according to its cryptographic strength and efficiency.

From Table 2, for our current design we use secp256k1, a Koblitz curve31, because it has been used in many cryptographic systems, such as Bitcoin and other blockchains, and offers a high level of security and wide industrial acceptance. The elliptic curve used here is the 256-bit SEC 2 curve, defined over the finite field \(F_p\), where p is a large prime. For each input message block, the scalar multiplication process generates a point on the elliptic curve. The curve is defined by the sextuple \(T = (p,a,b,G,n,h)\).

- Prime field (p): \(p = 2^{256}-2^{32}-977\), a large prime, equal to 115792089237316195423570985008687907853269984665640564039457584007908834671663.

- Curve equation: for secp256k1, the curve equation is

$$\begin{aligned} y^2=x^3 +7 \pmod p, \end{aligned}$$(2)

where \(a=0\) and \(b=7\).

- Base point (G): G = (55066263022277343669578718895168534326250603453777594175500187360389116729240, 32670510020758816978083085130507043184471273380659243275938904335757337482424).

- Order (n): n = 115792089237316195423570985008687907852837564279074904382605163141518161494337, where n is the cyclic order of G.

- Cofactor (h): \(h = 1\).

The hash value produced by the sponge is denoted by \(H_S\) and serves as the input to the ECC stage, where the 256-bit hash output \(H_S\) is mapped to a point on the elliptic curve secp256k1. This mapping can be done with a direct hash-to-point approach, such as the try-and-increment method, Elligator, or a hash-to-curve method, which ensures that the result is a valid point on the elliptic curve. Once we obtain a point \(P = (x_P, y_P)\) on the curve, we perform elliptic curve scalar multiplication to add cryptographic complexity. Scalar multiplication involves repeated point doubling and point addition.

Point addition

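For two points \(P_1 = (x_1, y_1)\) and \(P_2 = (x_2, y_2)\) on the curve with \(x_1 \ne x_2\), the slope of the line through them is:

$$\begin{aligned} \lambda = \frac{y_2 - y_1}{x_2 - x_1} \pmod p \end{aligned}$$

New point \(P_3 = (x_3, y_3) = P_1 + P_2\):

$$\begin{aligned} x_3 = \lambda ^2 - x_1 - x_2 \pmod p, \qquad y_3 = \lambda (x_1 - x_3) - y_1 \pmod p \end{aligned}$$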

Point doubling

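For doubling a point \(P = (x_1, y_1)\) on the curve with \(y_1 \ne 0\), the slope of the tangent at P is:

$$\begin{aligned} \lambda = \frac{3x_1^2 + a}{2y_1} \pmod p \end{aligned}$$

which reduces to \(3x_1^2/(2y_1)\) for secp256k1, since \(a = 0\).

New point \(P_3 = (x_3, y_3) = 2P\):

$$\begin{aligned} x_3 = \lambda ^2 - 2x_1 \pmod p, \qquad y_3 = \lambda (x_1 - x_3) - y_1 \pmod p \end{aligned}$$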

We accomplish this by deriving a scalar k from the original hash \(H_S\) such that \(k\in [1,n-1]\), where n is the cyclic order of the generator point G, and multiplying G by k to obtain \(Q = k \cdot G = (x_Q, y_Q)\). Scalar multiplication is one of the most important operations in ECC because its outcome is nonlinear and hard to reverse. The x-coordinate \(x_Q\) is used as the final 256-bit hash output.
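The ECC finalisation step can be sketched in plain Python with the secp256k1 parameters listed above (written here in hexadecimal). This is a minimal sketch, not the authors' implementation: the double-and-add loop is not constant-time, SHA3-256 stands in for the sponge output \(H_S\), and the reduction \(k = (H_S \bmod (n-1)) + 1\) is just one assumed way of forcing k into \([1, n-1]\).

```python
import hashlib

P = 2**256 - 2**32 - 977
A, B = 0, 7
GX = 0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798
GY = 0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (GX, GY)


def point_add(p1, p2):
    """Add two curve points; None represents the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None  # P + (-P) = infinity
    if p1 == p2:
        lam = (3 * x1 * x1 + A) * pow(2 * y1 % P, -1, P) % P  # doubling
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P  # addition
    x3 = (lam * lam - x1 - x2) % P
    y3 = (lam * (x1 - x3) - y1) % P
    return (x3, y3)


def scalar_mult(k, point):
    """Double-and-add scalar multiplication (not side-channel resistant)."""
    result, addend = None, point
    while k:
        if k & 1:
            result = point_add(result, addend)
        addend = point_add(addend, addend)
        k >>= 1
    return result


def ecc_finalise(h_s: bytes) -> bytes:
    """Map a 256-bit digest to k in [1, n-1] and return x_Q of Q = k.G."""
    k = int.from_bytes(h_s, "big") % (N - 1) + 1  # assumed derivation of k
    x_q, _ = scalar_mult(k, G)
    return x_q.to_bytes(32, "big")


h_s = hashlib.sha3_256(b"example image bytes").digest()  # stand-in for H_S
print(ecc_finalise(h_s).hex())
```

Requires Python 3.8 or later for the modular inverse `pow(x, -1, P)`. A production implementation would use a constant-time ladder, such as the Montgomery ladder mentioned earlier, from a vetted library.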

Security analysis

Statistical analysis

If the output distribution of a hash function is not uniform, collisions become easier to find and the function is more exposed to brute-force attacks. In the statistical analysis, an input plaintext is picked at random and hashed; the value of one randomly chosen input bit is then altered, the hash is recomputed, and the number of output bits that changed is recorded. We carry out the procedure N = 256, 512, 1024, 2048 and 4096 times (N being the total number of tests) in the experiment. Equations (3)–(8)36 provide the following quantities for measurement:

The ideal average variation ratio P is 50%, and the ideal average number of changed bits B is 128 for a hash output of 256 bits. It is highly desirable to have \(\triangle\)B and \(\triangle\)P values below 5%. For N = 256, 512, 1024, 2048 and 4096, the algorithm’s performance characteristics are listed in Table 3. The table shows that both B and P are close to their optimal values, so the statistical properties of the suggested function are favourable. The comparative analysis in Table 4 shows that the proposed scheme has good statistical properties compared with existing schemes. The statistical analysis comparison is provided in Fig. 6.
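The experiment can be reproduced in outline as follows. Since the display equations are not shown here, the definitions used below are the ones customary in the hash-function literature and are an assumption about Eqs. (3)–(8): \(B_{min}\) and \(B_{max}\) are the minimum and maximum number of changed bits, \(\bar{B}\) is their mean, \(P = (\bar{B}/256) \times 100\%\), and \(\triangle B\) and \(\triangle P\) are the sample standard deviations of the changed-bit count and of the changed-bit ratio. SHA-256 is used as a stand-in 256-bit hash, not the proposed function.

```python
import hashlib
import math
import random

DIGEST_BITS = 256


def stand_in_hash(data: bytes) -> bytes:
    """Stand-in 256-bit hash (assumption); replace with the function under test."""
    return hashlib.sha256(data).digest()


def bit_change_stats(n_tests: int, msg_len: int = 64, seed: int = 0) -> dict:
    """Flip one random input bit per test and summarise the changed output bits."""
    rng = random.Random(seed)
    changed = []
    for _ in range(n_tests):
        msg = bytearray(rng.getrandbits(8) for _ in range(msg_len))
        h1 = int.from_bytes(stand_in_hash(bytes(msg)), "big")
        bit = rng.randrange(msg_len * 8)
        msg[bit // 8] ^= 1 << (bit % 8)
        h2 = int.from_bytes(stand_in_hash(bytes(msg)), "big")
        changed.append(bin(h1 ^ h2).count("1"))
    b_mean = sum(changed) / n_tests
    p_mean = b_mean / DIGEST_BITS
    var_b = sum((b - b_mean) ** 2 for b in changed) / (n_tests - 1)
    var_p = sum((b / DIGEST_BITS - p_mean) ** 2 for b in changed) / (n_tests - 1)
    return {
        "B_min": min(changed),
        "B_max": max(changed),
        "B_mean": b_mean,
        "P": p_mean * 100,
        "delta_B": math.sqrt(var_b),
        "delta_P": math.sqrt(var_p) * 100,
    }


for n in (256, 512, 1024):
    print(n, bit_change_stats(n))
```

For a well-behaved 256-bit hash, \(\bar{B}\) should sit near 128 and P near 50%, with \(\triangle B\) around 8 bits.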

Resistance to known attacks

The resistance to known attacks of a cryptographic system evaluates how it can withstand a range of well-known approaches employed by attackers to break encryption or hash functions. Obviously, if a system is to be secure, it should be able to hold out against these tried and tested cryptographic attacks.

Types of attack

- Brute force attack: An attacker checks every possible key until the correct one is found. Due to the enormous amount of processing power required, brute-force attacks are not viable. This is especially true for secp256k1, whose key length is 256 bits.

- Quantum attack: Using methods such as Shor’s algorithm, a sufficiently large quantum computer could solve the ECDLP in polynomial time, which affects all ECC-based cryptographic systems. No quantum computer currently available is large enough to weaken secp256k1, so there is no practical threat for now, but this security will not be permanent, because advances in quantum computing could threaten ECC systems in the long run.

- Chosen plaintext/ciphertext attack: In this type of cryptographic attack, the attacker uses knowledge of certain plaintexts and their corresponding ciphertexts to deduce the key used for encryption, with the aim of breaking the encryption system and accessing secret information. The use of strong hash functions and digital signature schemes, like the one implemented in this paper using ECC, CA, and the sponge function, makes such attacks hard to carry out effectively.

- Replay attack: A replay attack is a type of network attack in which an attacker intercepts and retransmits a valid message between two parties, often without being detected by the network. The attacker can then assume the sender’s identity and duplicate transactions, authenticate fraudulently, or carry out other fraudulent activity. In our work, ECC allows a signature to be included with each message so that every signature is unique, which reduces this risk.

- Collision attack: A collision attack is a cryptographic attack in which the attacker finds two different inputs that have the same output hash value. Such an attack has the potential to undermine the reliability of hash functions and, more broadly, of other schemes that rely on them. The hybrid approach incorporates additional transformations through cellular automata that further enhance resistance. We hashed many images drawn from repositories and online sources and compared the resulting digests; no two distinct inputs produced the same output hash value.

Avalanche criteria

One of the most important features of hash algorithms is the avalanche effect. This property states that small input changes must result in large variations in the output bits of the hash digest. If H is a function that has the avalanche effect, the Hamming distance between its output for a random binary string x and its output after randomly changing one bit of x should be about half of the output size35. Mathematically, a function \(H: \{0,1\}^p \rightarrow \{0,1\}^q\) has the avalanche effect if it holds

$$\begin{aligned} \forall x, y \in \{0,1\}^p: \ \textrm{hamming}(x,y)=1 \Rightarrow \textrm{average}\left( \textrm{hamming}(H(x),H(y))\right) = \frac{q}{2}, \end{aligned}$$

in which the Hamming distance between two bit blocks x and y of equal length is denoted as hamming(x, y). We gathered a sample of 5000 messages of varied lengths to evaluate the avalanche effect of the proposed hash algorithm. For each message, we introduced a one-bit modification into the original message and calculated the Hamming distance between the hash values of the original and modified messages. For the 256-bit digest, these Hamming distances range from 101 to 160, with an average of 126, as shown in Fig. 7. This indicates that a one-bit change in the input changes about half of the output bits, so the avalanche effect of the suggested hash function is substantial.

NIST randomness test

In cryptography, randomness plays a very significant role in the security and dependability of cryptographic techniques. A sequence that is indistinguishable from random is necessary to ensure that encrypted data resist different forms of cryptographic attack, such as brute force or pattern-based analysis. For image encryption and hashing, randomness means that the processed version of an image should appear as unpredictable as possible, with no discernible patterns from which an attacker could gain an advantage. Ensuring high-quality randomness calls for a set of rigorous statistical tests. The National Institute of Standards and Technology designed the NIST Statistical Test Suite34, a series of intensive tests, to assess the randomness of binary sequences. The suite is well known and consists of a number of tests, each targeting a different statistical property that contributes to a sequence’s unpredictability. Each test calculates a test statistic, such as a chi-square statistic, for a specific parameter by comparing the value measured on the generated bit stream with its ideal value, which is derived from the theoretical behaviour of a truly random sequence of the same length. The statistic is then transformed into a probability value known as the p-value. We used the hash function as a pseudorandom number generator to generate a 16-MB data stream for the test suite execution. We produce the stream by applying the hash function to an initial seed, S, and then to each successive value obtained by incrementing the seed by one. The resulting digests are concatenated to obtain the 16-MB sequence, which serves as input for the NIST statistical test suite. Table 5 illustrates that the hash function produced a binary stream that exhibited favourable p-values and pass rates for the relevant tests.
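The stream generation and the simplest test of the suite can be sketched as follows. SHA-256 stands in for the proposed hash, and the 32-byte big-endian encoding of the counter is an assumption; only the frequency (monobit) test of SP 800-22 is shown, whose p-value is \(\textrm{erfc}(|S_n| / \sqrt{2n})\), where \(S_n\) is the sum of the bits mapped to \(\pm 1\).

```python
import hashlib
import math


def hash_stream(seed: int, n_bytes: int) -> bytes:
    """Concatenate H(S), H(S+1), ... until n_bytes are available."""
    out = bytearray()
    counter = seed
    while len(out) < n_bytes:
        out += hashlib.sha256(counter.to_bytes(32, "big")).digest()  # stand-in hash
        counter += 1
    return bytes(out[:n_bytes])


def monobit_p_value(bits: str) -> float:
    """NIST SP 800-22 frequency (monobit) test p-value for a '0'/'1' string."""
    n = len(bits)
    s_n = sum(1 if b == "1" else -1 for b in bits)
    s_obs = abs(s_n) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))


stream = hash_stream(seed=1, n_bytes=4096)
bit_string = "".join(f"{byte:08b}" for byte in stream)
p = monobit_p_value(bit_string)
print(f"monobit p-value = {p:.4f} ->", "pass" if p >= 0.01 else "fail")
```

A sequence passes a test at the suite's default significance level when its p-value is at least 0.01; the full suite should be run with the official NIST tool rather than this single check.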

Performance analysis

The proposed hash algorithm was implemented in Python 3.10 (64-bit) on a personal computer with 8 GB of main memory and an AMD Ryzen 5 3450U with Radeon Vega Mobile Gfx CPU running at 2.10 GHz, under Microsoft Windows 11 Home (64-bit). The known attacks were analysed, and the hashing and avalanche behaviour was evaluated on 5000 data samples, 10 of which are shown in Figs. 10 and 11; they show that a change as small as one bit in the input produces very large changes in the output.

The results of the NIST test suite for the sequence, given in Table 5 and Fig. 8, demonstrate strong randomness features: most tests, including frequency, block frequency, cumulative sums, runs, and rank, achieved perfect pass rates, and the p-values were generally good, ranging from 0.35 to 0.91, indicating no suspicious bias or pattern. The non-overlapping template and approximate entropy tests also yielded high p-values, indicating a high level of unpredictability due to the absence of repetitive structures. The random excursions and random excursions variant tests each passed 9 out of 10 times, which means that there were some minor but acceptable deviations, most probably caused by characteristics of the sequence such as an insufficient number of excursions. The results from the universal test and the FFT test further confirmed the sequence’s non-compressibility and the absence of significant periodicity, respectively. Overall, the sequence exhibits a high level of randomness, and we found no serious problems that would make it unsuitable for cryptographic purposes. Figure 9 shows the speed comparison between existing hash functions and the proposed hash function; the results indicate good performance for cryptographic use. Real-world scenarios where the proposed hash function outperforms traditional ones include the following.

Constrained environments

The proposed hash function can provide effective security with reduced computational overhead on devices with little processing power or memory, such as IoT devices. Its lightweight nature keeps resource usage low without compromising security, something that conventional hash functions such as SHA-2 do not offer, as they can be very heavy for such applications.

Blockchain and cryptocurrency

As blockchain-based applications become more popular, the proposed hash function with elliptic curve cryptography could be very useful for improving transaction verification and block creation so that they run quickly and use little energy. This is in contrast to older algorithms like SHA-256, which need more computing power.

Mobile and edge computing

As we move towards mobile and edge computing, where most devices operate in a low-power state, the efficiency of the proposed hash function is important for fast hashing in applications like digital signatures and integrity checks. This is an area where traditional hash functions that require a lot of computing power are difficult to use without weakening security.

Secure communication

The proposed hash function combines ECC and sponge functions, which makes it well suited to encryption and key exchange protocols in situations where speed and security are both very important. For example, low latency is a must in real-time messaging apps like Signal.

Conclusion

In this paper, we propose a novel hash algorithm that effectively integrates CA, cross-permutation, and the sponge function, demonstrating a resilient design that improves cryptographic security. We achieved strong diffusion and non-linearity through message padding, the absorbing phase, permutation operations, and the distinctive use of 2DCA for state transformation. ECC strengthens the method by protecting it against multiple attack paths, such as brute force and certain plaintext attacks. A thorough security analysis reveals that the suggested system has good cryptographic properties. The algorithm consistently demonstrated high randomness and minimal bias in the NIST statistical tests, supporting its effectiveness. Additionally, extensive testing validated the avalanche effect, confirming that even minor input changes result in significant output variations. Overall, the proposed hash algorithm demonstrates excellent potential for secure image encryption and hashing applications, providing a reliable solution for contemporary cryptographic challenges.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Menezes, A. J., Van Oorschot, P. C. & Vanstone, S. A. Handbook of Applied Cryptography (CRC Press, 2018).

Diffie, W. & Hellman, M. E. New directions in cryptography. In Democratizing Cryptography: The Work of Whitfield Diffie and Martin Hellman 365–390 (2022).

Merkle, R. C. One way hash functions and DES. In Conference on the Theory and Application of Cryptology 428–446 (Springer, 1989).

Damgard, I. B. A design principle for hash functions. In Conference on the Theory and Application of Cryptology 416–427 (Springer, 1989).

Rivest, R. L. RFC 1186: MD4 Message Digest Algorithm (1990).

Rivest, R. L. RFC 1321: The MD5 Message-Digest Algorithm (1992).

Secure Hash Standard. FIPS PUB 180-1, National Institute of Standards and Technology (NIST) (US Department of Commerce, 1995).

Bertoni, G., Daemen, J., Peeters, M. & Van Assche, G. The making of Keccak. Cryptologia 38, 26–60 (2014).

Miller, V. S. Use of elliptic curves in cryptography. In Conference on the Theory and Application of Cryptographic Techniques 417–426 (Springer, 1985).

Koblitz, N. Elliptic curve cryptosystems. Math. Comput. 48, 203–209 (1987).

Ullah, S. et al. Elliptic curve cryptography; Applications, challenges, recent advances, and future trends: A comprehensive survey. Comput. Sci. Rev. 47, 100530 (2023).

Blake, I. Elliptic Curves in Cryptography (Cambridge University Press, 1999).

Montgomery, P. L. Speeding the Pollard and elliptic curve methods of factorization. Math. Comput. 48, 243–264 (1987).

Singh, L. D. & Singh, K. M. Image encryption using elliptic curve cryptography. Procedia Comput. Sci. 54, 472–481 (2015).

Narayanan, A. Bitcoin and Cryptocurrency Technologies: A Comprehensive Introduction (Princeton University Press, 2016).

Hankerson, D. & Menezes, A. Elliptic curve cryptography. In Encyclopedia of Cryptography, Security and Privacy 1–2 (Springer, 2021).

Murugeshwari, B., Selvaraj, D., Sudharson, K. & Radhika, S. Data mining with privacy protection using precise elliptical curve cryptography. Intell. Autom. Soft Comput. 35, 1 (2023).

Arunkumar, J. et al. Logistic regression with elliptical curve cryptography to establish secure iot. Comput. Syst. Sci. Eng. 46, 1 (2023).

Krishnamoorthy, N. & Umarani, S. Implementation and management of security for sensitive data in cloud computing environment using elliptical curve cryptography. J. Theor. Appl. Inf. Technol. 102, 1 (2024).

Adeniyi, A. E., Jimoh, R. G. & Awotunde, J. B. A systematic review on elliptic curve cryptography algorithm for internet of things: Categorization, application areas, and security. Comput. Electric. Eng. 118, 109330 (2024).

Bertoni, G., Daemen, J., Peeters, M. & Van Assche, G. Sponge functions. In ECRYPT Hash Workshop (2007).

Bertoni, G., Daemen, J., Peeters, M. & Van Assche, G. On the indifferentiability of the sponge construction. In Annual International Conference on the Theory and Applications of Cryptographic Techniques 181–197 (Springer, 2008).

NIST. SHA-3 Standard: Permutation-based hash and extendable-output functions. FIPS PUB 202 (2015).

Bertoni, G., Daemen, J., Peeters, M. & Van Assche, G. Duplexing the sponge: Single-pass authenticated encryption and other applications. In Selected Areas in Cryptography: 18th International Workshop, SAC. Toronto, ON, Canada, August 11–12, 2011, Revised Selected Papers 320–337 (Springer, 2012).

Von Neumann, J. et al. Theory of Self-Reproducing Automata (1966).

Gardner, M. Mathematical games. Sci. Am. 222, 132–140 (1970).

Wolfram, S. & Gad-el-Hak, M. A New Kind of Science. Appl. Mech. Rev. 56, B18–B19 (2003).

Mukhopadhyay, D. & RoyChowdhury, D. Cellular automata: An ideal candidate for a block cipher. In Distributed Computing and Internet Technology: First International Conference, ICDCIT. Bhubaneswar, India, December 22–24, 2004. Proceedings 1 452–457 (Springer, 2005).

Jamil, N., Mahmood, R. & Muhammad, R. A new cryptographic hash function based on cellular automata rules 30, 134 and omega-flip network. ICICN 27, 163–169 (2012).

Kuila, S., Saha, D., Pal, M., Chowdhury, D. R. Cash: Cellular automata based parameterized hash. In Security, Privacy, and Applied Cryptography Engineering: 4th International Conference, SPACE. Pune, India, October 18–22, 2014. Proceedings 4 59–75 (Springer, 2014).

Sadak, A., Ziani, F. E., Echandouri, B., Hanin, C. & Omary, F. Hcahf: A new family of ca-based hash functions. Int. J. Adv. Comput. Sci. Appl. 10, 502–510 (2019).

Chen, L., Moody, D., Regenscheid, A. & Randall, K. Recommendations for Discrete Logarithm-Based Cryptography: Elliptic Curve Domain Parameters. Tech. Rep (National Institute of Standards and Technology, 2019).

Shi, Z. & Lee, R. B. Bit permutation instructions for accelerating software cryptography. In Proceedings IEEE International Conference on Application-Specific Systems, Architectures, and Processors 138–148 (IEEE, 2000).

NIST SP 800-22. https://csrc.nist.gov/projects/random-bit-generation/documentation-and-software (Accessed 30 September 2024).

Castro, J. C. H., Sierra, J. M., Seznec, A., Izquierdo, A. & Ribagorda, A. The strict avalanche criterion randomness test. Math. Comput. Simul. 68(1), 1–7 (2005).

Todorova, M., Stoyanov, B. & Szczypiorski, K. BentSign: Keyed hash algorithm based on bent Boolean function and chaotic attractor. Bull. Pol. Acad. Sci. Tech. Sci. 1, 557–569 (2019).

Teh, J. S., Alawida, M. & Ho, J. J. Unkeyed hash function based on chaotic sponge construction and fixed-point arithmetic. Nonlinear Dyn. 100(1), 713–729 (2020).

Xiao, D., Liao, X. & Deng, S. One-way Hash function construction based on the chaotic map with changeable-parameter. Chaos Solitons Fractals 24(1), 65–71 (2005).

Li, Y., Ge, G. & Xia, D. Chaotic hash function based on the dynamic S-Box with variable parameters. Nonlinear Dyn. 84(4), 2387–2402 (2016).

Wu, S. T. & Chang, J. R. Secure one-way hash function using cellular automata for IoT. Sustainability 15(4), 3552 (2023).

Alawida, M., Teh, J. S., Oyinloye, D. P., Ahmad, M. & Alkhawaldeh, R. S. A new hash function based on chaotic maps and deterministic finite state automata. IEEE Access 8, 113163–113174 (2020).

Acknowledgements

The authors extend their appreciation to the Deanship of Scientific Research and Graduate Studies at King Khalid University for funding this work through the Large Groups Project under grant number RGP.2/482/45 (academic year 1445H).

Funding

The current work was supported financially by the Dean of Science and Research at King Khalid University via the Large Group Project under grant number RGP.2/482/45.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ibrahim, M.M., Venkatesan, R., Ali, N. et al. Enhanced image hash using cellular automata with sponge construction and elliptic curve cryptography for secure image transaction. Sci Rep 15, 14148 (2025). https://doi.org/10.1038/s41598-025-98027-7

DOI: https://doi.org/10.1038/s41598-025-98027-7
