Abstract

Early and accurate diagnosis of Alzheimer’s disease (AD) is crucial for effective treatment. While the integration of deep learning techniques for AD classification is not entirely new, this study introduces CAPCBAM—a framework that extends prior approaches by combining Capsule Networks with a Convolutional Block Attention Module (CBAM). In CAPCBAM, standardized preprocessing of MRI images is followed by feature extraction using Capsule Networks, which preserve spatial hierarchies and capture intricate relationships among image features. The subsequent application of CBAM, employing both channel and spatial attention mechanisms, refines the feature maps to highlight the most clinically relevant regions. This dual-attention strategy offers clear advantages over conventional CNN methods, particularly in enhancing model generalization and mitigating information loss due to pooling. On the ADNI dataset, CAPCBAM achieved an impressive accuracy of 99.95%, with precision and recall both at 99.8%, an AUC of 0.99, and an F1-Score of 99.92%. Although the use of Capsule Networks and attention mechanisms has been explored previously, CAPCBAM distinguishes itself by its robust integration of these components. The study’s advantages include improved feature extraction, faster convergence, and superior classification performance, making it a promising tool for the early detection of Alzheimer’s disease.

Introduction

Alzheimer’s disease (AD) is among the leading causes of dementia, affecting millions worldwide. It is a progressive neurodegenerative disorder characterized by cognitive decline, memory loss, and changes in behavior and personality. AD not only impacts the quality of life of patients but also places a significant emotional and financial burden on families and healthcare systems. The identification and classification of AD using brain MRI images can significantly aid in early diagnosis and intervention, potentially slowing the progression of the disease. Traditional methods for AD classification are often time-consuming and subjective, relying heavily on manual interpretation by medical experts. This variability in diagnosis underscores the need for more objective and reproducible methods.

Recent advancements in deep learning offer promising solutions to automate and improve the accuracy of AD classification. Deep learning models, particularly Convolutional Neural Networks (CNNs), have revolutionized the field of medical imaging by providing powerful tools for image analysis and pattern recognition. CNNs are adept at learning hierarchical feature representations from raw image data, which can be crucial for detecting subtle changes in brain structure associated with AD. However, CNNs have inherent limitations. They often struggle with capturing spatial hierarchies and relationships between features due to their reliance on pooling operations, which can lead to a loss of spatial information.

To address these limitations, attention mechanisms have been introduced into deep learning models. Attention mechanisms, such as the Convolutional Block Attention Module (CBAM), enhance the performance of CNNs by allowing the network to focus on the most relevant parts of the input image. CBAM operates by applying channel and spatial attention modules sequentially to the feature maps, which helps in emphasizing important features while suppressing less relevant ones. This selective attention mechanism can significantly improve the feature extraction capabilities of the network, leading to better classification performance.

In addition to attention mechanisms, Capsule Networks (CapsNets) have emerged as a novel architecture that addresses some of the key shortcomings of traditional CNNs. CapsNets, introduced by Sabour et al., are designed to preserve the spatial hierarchies and relationships between features through the use of capsules. Capsules are groups of neurons that represent various properties of an object, and their outputs are vectors rather than scalar values. The dynamic routing mechanism between capsules ensures that the network learns robust feature representations, which can lead to more accurate and interpretable classification results.

In this paper, we present a novel deep learning model named CAPCBAM, which integrates the Convolutional Block Attention Module with Capsule Networks to enhance feature extraction and classification capabilities for AD. This model is designed to leverage the strengths of both CBAM and CapsNets. CBAM enhances important features while suppressing irrelevant ones, and CapsNets effectively handle spatial hierarchies and relationships between features. The combination of these two methods aims to create a more robust and accurate model for classifying Alzheimer’s disease from brain MRI images.

Background and motivation

The rising prevalence of Alzheimer’s disease (AD) has prompted extensive research into its early detection and diagnosis. AD is characterized by the accumulation of amyloid-beta plaques and tau tangles in the brain, leading to neuronal damage and cognitive decline. Traditional diagnostic methods, including clinical assessments and cognitive testing, are often supplemented with neuroimaging techniques such as MRI, which provides detailed images of brain structures. However, manual interpretation of MRI scans is subject to variability and requires considerable expertise.

The advent of deep learning has opened new avenues for the automatic analysis of medical images. CNNs, in particular, have demonstrated impressive capabilities in extracting features from complex image data. These networks consist of multiple layers that progressively learn to recognize patterns, edges, textures, and shapes, which are crucial for distinguishing between healthy and diseased tissues. Despite their success, CNNs have limitations in capturing the spatial relationships between features, which can be critical for accurately identifying regions affected by AD.

Attention mechanisms in deep learning

Attention mechanisms have been developed to address some of the shortcomings of traditional CNNs. These mechanisms allow models to focus on the most pertinent parts of an image, improving feature extraction and enhancing performance. The Convolutional Block Attention Module (CBAM) is a particularly effective attention mechanism that applies both channel and spatial attention. Channel attention focuses on ‘what’ is important by selecting relevant feature maps, while spatial attention focuses on ‘where’ by highlighting significant regions within the feature maps. By sequentially applying these attention modules, CBAM can improve the representation of salient features in an image, leading to better classification outcomes.

Capsule networks for improved spatial hierarchies

Capsule Networks (CapsNets) were introduced to overcome the limitations of CNNs in preserving spatial hierarchies and relationships. CapsNets consist of capsules, which are groups of neurons that encapsulate various properties of objects in vector form. The dynamic routing algorithm used in CapsNets allows for the iterative adjustment of connections between capsules, ensuring that the most relevant spatial hierarchies are preserved. This approach enables CapsNets to capture intricate patterns and relationships within the data, making them well-suited for complex tasks such as medical image classification.

Integrating CBAM with capsule networks

The integration of CBAM with Capsule Networks, as proposed in our CAPCBAM model, aims to leverage the strengths of both approaches. CBAM enhances the feature extraction process by focusing on the most relevant parts of the image, while CapsNets ensure that the spatial hierarchies and relationships between features are preserved. This combination is expected to improve the robustness and accuracy of AD classification models.

In summary, our proposed CAPCBAM model represents a novel approach to Alzheimer’s disease classification using brain MRI images. By integrating attention mechanisms with Capsule Networks, we aim to create a model that can effectively extract and utilize relevant features while preserving important spatial relationships. This has the potential to significantly enhance the accuracy and reliability of AD classification, ultimately aiding in early diagnosis and treatment planning.

The rest of the paper is organized as follows. Section “Related work” reviews related work in the field of AD classification. Section “Methodology” describes the methodology, the mathematical formulations, and the ablation study used in this work. The ADNI dataset, the preprocessing steps, and the results are presented in Section “Results and discussion”. Finally, Section “Conclusion and Future Work” concludes the paper and outlines directions for future research.

Related work

The classification of Alzheimer’s Disease (AD) using deep learning has seen rapid advancements, with various architectures being proposed to enhance feature extraction, attention mechanisms, and computational efficiency. Table 1 provides a comparative analysis of prominent models, highlighting their architectural frameworks, key innovations, and classification accuracies.

The proposed CapCBAM model, which integrates Capsule Networks with CBAM (Convolutional Block Attention Module), achieves 99.95% accuracy, surpassing other state-of-the-art models. The dynamic routing in Capsule Networks ensures effective spatial hierarchies, while CBAM’s spatial attention mechanism refines feature selection, enhancing AD classification performance. Compared to conventional CNN-based models, CapCBAM effectively captures spatial dependencies, leading to superior accuracy and generalization. DenseNet (Desai et al., 2024)1 employs multimodal fusion of MRI and PET scans, leveraging a deep CNN framework. While achieving a 90.00% accuracy, the model is limited by computational overhead and the challenge of optimal modality integration. Similarly, the Hardware-Accelerated CNN (Sujathakumari et al., 2024)2 incorporates Discrete Wavelet Transform for feature extraction and GPU acceleration, attaining 91.56% accuracy. Despite its efficiency, the reliance on predefined frequency components may constrain adaptability to complex AD patterns. The SE-DenseNet (Liang et al., 2024)3 enhances feature representation by combining Squeeze-and-Excitation (SE) modules with DenseNet connectivity, achieving 98.12% accuracy. However, its reliance on channel attention alone may limit spatial feature interactions, a limitation addressed by CapCBAM’s integrated spatial and channel attention mechanisms. The Deep Q-Network (DQN) Transfer Learning model (Hao & Chen, 2024)4 extracts resting-state fMRI features but achieves only 86.66% accuracy, likely due to the complexity of temporal brain activity representation in AD progression. Additionally, MADNet (Li et al., 2024)5 introduces a multimodal CNN combining sMRI and DTI-MD features, leveraging long-range dependencies and attention-based feature fusion. This model improves AD vs. CN classification but requires extensive data preprocessing and computational power.
Early Detection CNN (Naidu, 2024)6 applies MobileNet and VGG16 to the augmented ADNI dataset, achieving high classification accuracy, though specific performance metrics were not reported. Finally, EfficientNet + Deep ResUNet (Rao et al., 2024)7 applies a multi-scale attention mechanism within a Siamese network, reaching 99.38% accuracy. While highly competitive, its network complexity and computational cost pose challenges, especially in real-time clinical applications. Furthermore, Slimi et al. (2024)8 propose a combinatorial deep learning approach, integrating DenseNet121 and Xception pretrained convolutional networks for Alzheimer’s disease classification. This method enhances feature extraction by leveraging diverse model architectures, optimizing classification performance while ensuring robust generalization. The study demonstrates state-of-the-art accuracy, further solidifying the significance of hybrid deep learning techniques in AD detection. The study by Shanto et al. (2024)9 employs a simple and cost-effective Convolutional Neural Network (CNN) architecture trained on T1-weighted 2D MRI scans from the Alzheimer’s Disease Neuroimaging Initiative (ADNI), which includes data from cognitively normal individuals, stable mild cognitive impairment cases, and Alzheimer’s dementia patients. The model achieved an accuracy of 98.97%, demonstrating its effectiveness in the early diagnosis and classification of Alzheimer’s Disease while surpassing many previous studies with its low-cost design. Ishaaq et al. (2024)10 introduce a novel CNN model specifically designed for the early diagnosis of Alzheimer’s disease. It outperforms established models like ResNet50, DenseNet201, and VGG16, achieving an accuracy of 99% on the test dataset.
The research emphasizes that using high-quality medical imaging data is key to the model’s effectiveness, suggesting that this approach could significantly enhance diagnostic sensitivity and lead to better early treatment strategies for Alzheimer’s disease. Daher et al. (2024)11 focus on enhancing the early diagnosis of Alzheimer’s disease (AD) through a deep convolutional neural network (CNN) model, which is central to their methodology. The researchers utilized a publicly available comprehensive MRI dataset, categorizing images into four classes: Non-Dementia (ND), Very Mild Dementia (VMD), Mild Dementia (MD), and Moderate Dementia (MOD). The CNN architecture is designed for effective feature extraction and classification of these stages, achieving an accuracy of 99%, which surpasses previous studies. Pandiyaraju et al. (2024)12 present a dual attention enhanced deep learning framework for classifying Alzheimer’s disease (AD) using neuroimaging data. It employs combined spatial and self-attention mechanisms to focus on neurofibrillary tangles and amyloid plaques in MRI images, achieving an accuracy of 99.1%. The model showed superiority over existing state-of-the-art convolutional neural networks, highlighting its potential for improving early AD diagnosis. Zhao et al. (2024)13 present a comparative study on machine learning approaches for Alzheimer’s disease diagnosis using 2D MRI slices. The study highlights the effectiveness of ConvNeXt, which achieved an average accuracy of 95.74% in classifying Alzheimer’s, mild cognitive impairment, and normal controls. In the same year, Abubakar et al. (2024)14 present a deep learning framework for early Alzheimer’s disease diagnosis using MRI scans, emphasizing the importance of timely interventions. The framework employs transfer learning with CNNs like ResNet50 and DenseNet201 for local feature extraction, complemented by the Vision Transformer (ViTB32) for global context.
The hybrid model achieved accuracy rates of 96.1%, 96.4%, and 98.0% in distinguishing normal images from those indicating mild cognitive impairment and severe AD, showcasing the effectiveness of global features in enhancing diagnostic efficiency. Sangeetha & Raghavendran (2024)15 propose a technique that employs a pre-trained multi-layer convolutional residual transfer Random Forest with an Inception V3 model for image processing, achieving a training accuracy of 96%, mean average precision of 93%, sensitivity of 95%, and an AUC of 90% in the experimental analysis. Yang et al. (2025)16 present a multi-label deep learning model that utilizes a dot-product attention mechanism and an innovative labeling system to improve the diagnosis and classification of Alzheimer’s disease (AD) subtypes and severity levels, addressing the limitations of traditional binary classification systems. The proposed model demonstrated significant improvements in diagnostic accuracy, achieving 83.1% for identifying AD subtypes and 83.3% for severity prediction, compared to 72.9% and 73.5% respectively for a baseline fully connected neural network, indicating its potential to enhance personalized treatment strategies in neurodegenerative diseases. Li et al. (2025)17 address the urgent need for early diagnosis and intervention in Alzheimer’s Disease (AD) by proposing a deep learning model called Enhanced Residual Attention Network (ERAN), which improves the classification of medical images through a combination of residual learning, attention mechanisms, and soft thresholding. The model achieves a high accuracy of 99.36% with a low loss of 0.0264.

However, despite significant advancements, several gaps remain in existing works that hinder optimal Alzheimer’s disease classification. Many models, such as DenseNet (Desai et al., 2024) and SE-DenseNet (Liang et al., 2024), rely heavily on CNN-based architectures, which inherently struggle with preserving spatial hierarchies due to their dependence on pooling operations. This limits their ability to model complex relationships between brain regions, reducing classification robustness. Additionally, multimodal fusion approaches, such as those in MADNet (Li et al., 2024), require extensive data preprocessing and large-scale computational resources, making them less feasible for real-time clinical deployment. Similarly, while Transformer-based methods, including Swin Transformer (Illakiya & Karthik, 2023), capture long-range dependencies, they exhibit high computational costs and require large training datasets to achieve stable performance. Models employing handcrafted features, such as the DQN Transfer Learning model (Hao & Chen, 2024), demonstrate limited accuracy due to the challenges of representing complex temporal brain activity patterns. Furthermore, GAN-based methods like 3D DCGAN (Kang et al., 2023) suffer from instability issues such as mode collapse, affecting classification reliability. Hybrid approaches, such as those proposed by Slimi et al. (2024), improve feature diversity but increase network complexity, making training and optimization challenging. These limitations highlight the need for a model that balances spatial hierarchy preservation, attention-enhanced feature selection, computational efficiency, and generalization capability—gaps effectively addressed by the proposed CapCBAM model, which integrates Capsule Networks for transformation invariance and CBAM for refined feature selection, achieving state-of-the-art performance in AD classification. Fig. 1

In summary, the CapCBAM model outperforms all reviewed architectures by effectively integrating dynamic routing with spatial attention, demonstrating the highest accuracy (99.95%). Its ability to capture fine-grained spatial hierarchies while maintaining robust feature selection underscores its potential for clinical deployment in early and accurate AD detection.

Methodology

CAPCBAM framework overview

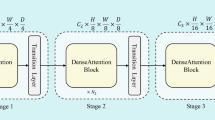

The proposed CAPCBAM framework is designed to enhance AD classification by preserving spatial hierarchies and refining feature selection. The overall architecture consists of two main parts:

- Capsule Network: Maintains spatial relationships through dynamic routing, encoding important features into capsules.
- CBAM Module: Applies sequential channel and spatial attention to selectively enhance relevant features.

Figures 2, 3, and 4 show the architectures of the CapsNet Block, the CBAM Block, and the proposed model, respectively.

Mathematical formulations

Capsule neural networks

Capsule networks represent an advancement over traditional convolutional neural networks (CNNs) by encapsulating spatial hierarchies in feature learning. Capsules are groups of neurons whose activities represent various properties of objects or object parts. The length of the output vector of a capsule indicates the probability of the presence of the feature, and the orientation of the vector captures the instantiation parameters. This section provides the mathematical formulations underlying the capsule network architecture.

The primary capsule layer is created from the input of the proposed model (here the input shape is (128, 128, 3)). The primary capsule layer reshapes its input and applies a squashing function to produce capsule outputs \({v}_{i}\):

where \({{\varvec{s}}}_{{\varvec{i}}}\) is the input to the capsule and \({v}_{i}\) is the output vector of the capsule. The squashing function ensures that the length of the vector \({v}_{i}\) is between 0 and 1, making it suitable for representing probabilities.

Capsules in one layer predict the outputs of capsules in the next layer using a dynamic routing mechanism. Each capsule i in the lower layer makes a prediction for the output of each capsule j in the next layer:

where \({{\varvec{W}}}_{{\varvec{i}}{\varvec{j}}}\) is a weight matrix. The routing coefficients \({c}_{ij}\) are determined by a “routing softmax” that depends on the agreement between predicted outputs and actual outputs:

where \({b}_{ij}\) are log prior probabilities that are iteratively refined. The output of capsule j is a weighted sum of the prediction vectors, followed by a squash function:

The agreement between the prediction vectors and the actual outputs is used to update \({b}_{ij}\):
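The displayed equations for the capsule steps above are not reproduced in this text. As a hedged reconstruction, assuming the model follows the standard dynamic-routing formulation of Sabour et al. (the symbol \({\hat{u}}_{j|i}\) for the prediction vector is notation introduced here, not taken from the original), the squashing function, prediction vectors, routing softmax, capsule output, and agreement update would read:

$${v}_{i}=\frac{{\Vert {{\varvec{s}}}_{i}\Vert }^{2}}{1+{\Vert {{\varvec{s}}}_{i}\Vert }^{2}}\,\frac{{{\varvec{s}}}_{i}}{\Vert {{\varvec{s}}}_{i}\Vert }$$

$${\hat{u}}_{j|i}={{\varvec{W}}}_{ij}\,{v}_{i}$$

$${c}_{ij}=\frac{\text{exp}\left({b}_{ij}\right)}{\sum_{k}\text{exp}\left({b}_{ik}\right)}$$

$${{\varvec{s}}}_{j}=\sum_{i}{c}_{ij}\,{\hat{u}}_{j|i},\qquad {v}_{j}=\text{squash}\left({{\varvec{s}}}_{j}\right)$$

$${b}_{ij}\leftarrow {b}_{ij}+{\hat{u}}_{j|i}\cdot {v}_{j}$$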

In our case, we use two capsule layers, where the output of the second one is forwarded to the input of the CBAM module.
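The routing procedure described in this subsection can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the capsule counts, vector dimensions, weight scale, and the choice of three routing iterations are all assumptions made for the example.

```python
import numpy as np

def squash(s, axis=-1, eps=1e-9):
    """Shrink vector s so its length lies in [0, 1) while keeping its direction."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)

def dynamic_routing(u_hat, num_iters=3):
    """Route prediction vectors u_hat of shape (n_lower, n_upper, dim).

    Returns the upper-capsule outputs v (n_upper, dim) and the coupling
    coefficients c (n_lower, n_upper) from the last iteration.
    """
    n_lower, n_upper, _ = u_hat.shape
    b = np.zeros((n_lower, n_upper))               # routing logits b_ij
    for _ in range(num_iters):
        e = np.exp(b - b.max(axis=1, keepdims=True))
        c = e / e.sum(axis=1, keepdims=True)       # routing softmax over upper capsules j
        s = np.einsum("ij,ijd->jd", c, u_hat)      # weighted sum of prediction vectors
        v = squash(s)                              # upper-capsule outputs v_j
        b = b + np.einsum("ijd,jd->ij", u_hat, v)  # agreement update of b_ij
    return v, c

rng = np.random.default_rng(0)
v_lower = rng.normal(size=(6, 8))                  # 6 lower capsules with 8-D outputs (hypothetical)
W = rng.normal(size=(6, 3, 16, 8)) * 0.1           # W_ij: 8-D -> 16-D for 3 upper capsules (hypothetical)
u_hat = np.einsum("ijkd,id->ijk", W, v_lower)      # prediction vectors for every (i, j) pair
v, c = dynamic_routing(u_hat)
```

Each row of `c` sums to one, so every lower capsule distributes its output across the upper capsules, and the length of each row of `v` stays below one, which is what lets it be read as a presence probability.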

Convolutional block attention module (CBAM)

The process involves:

- Global average pooling and max pooling.
- Passing the pooled features through two dense (fully connected) layers.
- Combining the attention maps generated from average and max pooled features.

$${F}_{\text{avg}}={\text{GlobalAveragePooling2D}}\left(F\right) \tag{7}$$

$${M}_{\text{avg}}=\sigma \left({{\varvec{W}}}_{2}\left({\text{ReLU}}\left({{\varvec{W}}}_{1}\left({F}_{\text{avg}}\right)\right)\right)\right) \tag{8}$$

$${F}_{\text{max}}={\text{MaxPooling2D}}\left(F\right) \tag{9}$$

$${M}_{\text{max}}=\sigma \left({{\varvec{W}}}_{2}\left({\text{ReLU}}\left({{\varvec{W}}}_{1}\left({F}_{\text{max}}\right)\right)\right)\right) \tag{10}$$

$${M}_{c}\left(F\right)={M}_{\text{avg}}\otimes F+{M}_{\text{max}}\otimes F \tag{11}$$

Where:

- F is the input feature map.
- \({{\varvec{W}}}_{1}\) and \({{\varvec{W}}}_{2}\) are the weights of the first and second dense layers, respectively.
- σ denotes the sigmoid function.
- ⊗ denotes element-wise multiplication.
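The channel-attention computation in Eqs. (7) to (11) can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions: the max pooling in Eq. (9) is taken to be global (as the step list suggests), the two dense layers are shared between the average and max branches, biases are omitted, and the feature-map size and reduction ratio `r` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(F, W1, W2):
    """Channel attention following Eqs. (7)-(11) for a feature map F of shape (H, W, C)."""
    f_avg = F.mean(axis=(0, 1))                         # Eq. (7): global average pooling -> (C,)
    f_max = F.max(axis=(0, 1))                          # Eq. (9): global max pooling -> (C,)
    m_avg = sigmoid(np.maximum(f_avg @ W1, 0.0) @ W2)   # Eq. (8): dense -> ReLU -> dense -> sigmoid
    m_max = sigmoid(np.maximum(f_max @ W1, 0.0) @ W2)   # Eq. (10): same weights, max branch
    return m_avg * F + m_max * F                        # Eq. (11): broadcast over H and W

rng = np.random.default_rng(0)
C, r = 16, 4                                            # channels and reduction ratio (hypothetical)
F = rng.normal(size=(8, 8, C))
W1 = rng.normal(size=(C, C // r)) * 0.1
W2 = rng.normal(size=(C // r, C)) * 0.1
out = channel_attention(F, W1, W2)
```

Note that the original CBAM formulation sums the two branch outputs before a single sigmoid, whereas Eq. (11) as written applies each attention map to F separately and adds the results; the sketch follows the equations as given here.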

Ablation study

To rigorously evaluate the individual contributions of each component in our proposed CAPCBAM framework and assess model generalization, we conducted an ablation study. This study compares the performance of two configurations:

- CapsNet Only (without CBAM)
- CAPCBAM (CapsNet integrated with CBAM)

The quantitative results for both configurations are summarized in Table 1 below.

Accuracy analysis

- CapsNet Only Configuration: The accuracy curve for the standalone Capsule Network increases gradually over the epochs, with the validation accuracy plateauing around 80%. Additionally, a notable disparity exists between the training and validation accuracy curves, suggesting that the network may struggle with inadequate feature focus and is susceptible to overfitting.
- CAPCBAM Configuration (with CBAM): When the Convolutional Block Attention Module is integrated, the accuracy curve exhibits a rapid ascent within the initial epochs, achieving over 99% accuracy. Importantly, both the training and validation accuracies converge almost perfectly, which indicates a robust generalization and enhanced feature extraction capability introduced by CBAM.

Loss analysis

- CapsNet Only Configuration: In the absence of CBAM, the loss metric decreases slowly and remains comparatively high throughout the training process. A persistent gap between the training and validation loss curves is observed, further highlighting the challenges in optimizing feature representations and the propensity for overfitting.
- CAPCBAM Configuration (with CBAM): The addition of CBAM results in a steep decline in both training and validation loss values, with both curves stabilizing near zero after only a few epochs. This overlapping behavior demonstrates that CBAM not only accelerates convergence but also ensures that the network learns optimal feature representations with minimal generalization errors.

Discussion and conclusion

The ablation study clearly illustrates the substantial benefit of integrating CBAM with Capsule Networks:

- Performance Enhancement: The full CAPCBAM model achieves a dramatic improvement in accuracy (from 82.2% to 99.95%), along with superior precision, recall, and F1-score, and an AUC-ROC of 0.99.
- Improved Feature Selection and Convergence: While the Capsule Network alone preserves spatial hierarchies, it fails to effectively prioritize relevant features, leading to slower learning and overfitting. The dual attention mechanism of CBAM compensates for this by selectively enhancing disease-relevant regions in MRI scans, which leads to faster convergence and a marked reduction in loss.
- Robust Generalization: The near-perfect alignment of training and validation metrics in the CAPCBAM model confirms its high level of generalization, making it highly suitable for the accurate and reliable classification of Alzheimer’s disease.

In conclusion, the ablation study supports that incorporating CBAM into the Capsule Network architecture is critical for achieving state-of-the-art performance in Alzheimer’s disease diagnosis. The integration significantly refines feature extraction and minimizes overfitting, thereby establishing CAPCBAM as a robust and high-accuracy framework for clinical applications. The performance of the two models is compared further in Fig. 6.

Results and discussion

- ADNI Dataset

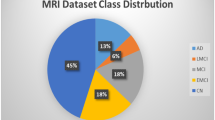

The Alzheimer’s Disease Neuroimaging Initiative (ADNI)18 offers datasets pertaining to Alzheimer’s disease in either Nifti or DICOM formats, representing three-dimensional volumetric data. Engaging directly with three-dimensional datasets presents certain complexities; therefore, the dataset in question was specifically developed to facilitate the application of image processing algorithms with greater ease. This dataset is comprised of two-dimensional axial images derived from the baseline dataset of ADNI, which originally included Nifti images. It encompasses three distinct categories, namely Alzheimer’s Disease (AD), Mild Cognitive Impairment (CI), and Cognitively Normal (CN) individuals. The images were extracted from the ADNI Baseline dataset (NIFTI format), which consisted of a total of 199 instances. The original images are accessible for download at https://ida.loni.usc.edu/login.jsp?project=ADNI.

To evaluate the performance of the suggested model, a comparative study with a few pretrained networks has been carried out using the same methodology as the proposed design, as shown in Table 1.

- Preprocessing

Preprocessing was done on each dataset image as follows:

Resizing images to expedite the execution of applications and use less processing power.

Data augmentation, which generates new training images derived from the source images. Rotation, horizontal and vertical flipping, and width and height shifts are among the techniques that can be applied to improve recognition accuracy. In this study, we used zoom, brightness adjustment, and horizontal flipping as our data augmentation strategies. The aim is to enlarge the training set as much as possible.

Oversampling, which addresses the issue of unbalanced classes by utilizing the SMOTE approach19.

We divided the ADNI dataset images into 80% for training and 20% for testing.
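The preprocessing steps above can be sketched as follows. This is a simplified illustration rather than the pipeline used in the study: the brightness range, the neighbour count `k`, and the toy data are assumptions, zoom is omitted for brevity, and `smote_like` is a minimal re-implementation of the SMOTE idea (interpolating between a minority sample and one of its nearest same-class neighbours) rather than a library call. The text does not state whether oversampling precedes the split; the sketch splits first so that synthetic samples never reach the test set.

```python
import numpy as np

def augment(img, rng):
    """Random horizontal flip and brightness change for an image in [0, 1], shape (H, W, C)."""
    if rng.random() < 0.5:
        img = img[:, ::-1, :]                       # horizontal flip
    factor = rng.uniform(0.8, 1.2)                  # hypothetical brightness range
    return np.clip(img * factor, 0.0, 1.0)

def split_80_20(X, y, rng):
    """Shuffle, then keep 80% for training and 20% for testing."""
    idx = rng.permutation(len(X))
    cut = int(0.8 * len(X))
    return X[idx[:cut]], y[idx[:cut]], X[idx[cut:]], y[idx[cut:]]

def smote_like(X, y, rng, k=5):
    """Oversample each minority class up to the majority count by interpolation."""
    classes, counts = np.unique(y, return_counts=True)
    X_out, y_out = [X], [y]
    for cls, n in zip(classes, counts):
        need = counts.max() - n
        Xc = X[y == cls]
        if need == 0 or len(Xc) < 2:
            continue
        d = np.linalg.norm(Xc[:, None, :] - Xc[None, :, :], axis=-1)
        np.fill_diagonal(d, np.inf)                 # a sample is not its own neighbour
        nn = np.argsort(d, axis=1)[:, :min(k, len(Xc) - 1)]
        base = rng.integers(0, len(Xc), size=need)
        neigh = nn[base, rng.integers(0, nn.shape[1], size=need)]
        lam = rng.random((need, 1))                 # interpolation weight in [0, 1)
        X_out.append(Xc[base] + lam * (Xc[neigh] - Xc[base]))
        y_out.append(np.full(need, cls))
    return np.concatenate(X_out), np.concatenate(y_out)

rng = np.random.default_rng(42)
X = rng.random((100, 12))                           # 100 flattened toy "images"
y = np.array([0] * 60 + [1] * 30 + [2] * 10)        # imbalanced toy labels
X_tr, y_tr, X_te, y_te = split_80_20(X, y, rng)
X_bal, y_bal = smote_like(X_tr, y_tr, rng)          # balance the training portion only
flipped = augment(rng.random((32, 32, 3)), rng)
```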

- All parameters used in this methodology

The training parameter values are presented in Table 2.

- Evaluation

Several evaluation criteria, including accuracy, precision, recall, F1-score, and AUC, are used to assess how well the deep learning architectures perform.

Accuracy measures the proportion of correct predictions among all instances evaluated. Precision measures the proportion of instances predicted as positive that are truly positive. Recall measures the fraction of actual positive patterns that are correctly classified, and the F1-score is the harmonic mean of precision and recall22.
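The displayed formulas for these metrics are not reproduced in this text. Assuming the usual definitions are intended, they are:

$$\text{Accuracy}=\frac{TP+TN}{TP+TN+FP+FN}$$

$$\text{Precision}=\frac{TP}{TP+FP}$$

$$\text{Recall}=\frac{TP}{TP+FN}$$

$$\text{F1}=\frac{2\times \text{Precision}\times \text{Recall}}{\text{Precision}+\text{Recall}}$$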

Here, TN, TP, FN, and FP denote true negatives, true positives, false negatives, and false positives, respectively. Another useful statistic is the Area Under the Curve (AUC), which takes values in the range [0, 1]. The AUC equals 1 when the model discriminates perfectly between instances of the two classes. Conversely, the AUC equals 0 when every instance is assigned to the wrong class, that is, when all positive instances are classified as negative and vice versa.

Table 3 summarizes the performance of several deep learning models on the ADNI dataset, highlighting key metrics such as accuracy, F1-score, AUC, precision, and recall. The results indicate that while traditional models like VGG16 and ResNet50 achieve moderate performance (with VGG16 attaining only around 78% accuracy and ResNet50 close to 89%), more advanced architectures such as Xception, InceptionV3, and DenseNet121 perform better with accuracy figures ranging from 93% to 96%. Notably, the proposed CAPCBAM model dramatically outperforms all other models, reaching an accuracy of 99.95%, F1-score of 99.92%, and near-perfect precision and recall values of 99.8% along with an AUC of 99%. These results underscore the effectiveness of integrating Capsule Networks with the Convolutional Block Attention Module (CBAM), demonstrating its superior capability in extracting and emphasizing the most relevant features for Alzheimer’s disease detection, which consequently leads to enhanced overall classification performance compared to existing state-of-the-art deep learning models.

Table 4 presents a comparative analysis of our proposed CAPCBAM model against several state-of-the-art models on the ADNI dataset, highlighting distinct architectures, performance metrics, strengths, and limitations. While CAPCBAM (which integrates Capsule Networks with CBAM) achieves an impressive 99.95% accuracy and excels in preserving spatial hierarchies and enhancing feature selection through dynamic routing, its major drawback is its high computational expense. In contrast, the Ensemble CNN model by Fathi et al. (2024) also shows strong classification performance (99.83% accuracy) with robust generalization; however, it suffers from complex architecture. Other approaches such as Conv-Swinformer, which combines CNNs with Transformers, benefit from shift window attention for spatial feature extraction but require extensive training data, while 3D DCGAN offers improvements in learning on small datasets yet is vulnerable to issues like mode collapse. The Feature Fusion Network utilizes a blend of GSDW, GCN, and EfficientNet-B0 to target MCI detection but underperforms relative to CNN-based models, and the hybrid ML-DL approach by Thayumanasamy & Ramamurthy (2022) and the Swin Transformer-based model by Illakiya & Karthik (2023) achieve good accuracy levels but are offset by either high computational costs or resource dependency. Overall, the table emphasizes that while each model has its own merits, CAPCBAM uniquely balances accuracy and feature representation—albeit at the expense of higher computational demands—making it a strong candidate for future clinical application in Alzheimer’s disease detection.

The confusion matrix presented by Fig. 5 reveals a near-perfect classification performance across the three classes—AD (Alzheimer’s Disease), CI (Cognitive Impairment), and CN (Cognitively Normal)—with almost all instances correctly predicted. Specifically, 552 out of 553 AD cases and 504 out of 505 CN cases were accurately classified, while the CI class achieved a flawless 100% accuracy with all 496 instances correctly identified. The only misclassifications were a single AD case and a single CN case being erroneously labeled as CI, illustrating a minimal error rate. This high accuracy and low misclassification rate underscore the model’s strong discriminative power and its potential for reliable clinical application in early disease detection and patient stratification.
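As a worked example, the per-class metrics can be recomputed from the counts quoted in the paragraph above. The matrix layout (rows as true classes, columns as predicted classes, in the order AD, CI, CN) is an assumption about how Fig. 5 is arranged.

```python
import numpy as np

# Rows are true classes, columns are predicted classes; order: AD, CI, CN.
# Counts are those quoted in the text; the layout itself is assumed.
cm = np.array([
    [552,   1,   0],   # one AD case labeled as CI
    [  0, 496,   0],   # all CI cases correct
    [  0,   1, 504],   # one CN case labeled as CI
])

tp = np.diag(cm).astype(float)
precision = tp / cm.sum(axis=0)          # per class: TP / (TP + FP)
recall = tp / cm.sum(axis=1)             # per class: TP / (TP + FN)
f1 = 2 * precision * recall / (precision + recall)
accuracy = tp.sum() / cm.sum()           # overall fraction of correct predictions
```

With these counts, overall accuracy on this test split is 1552/1554, about 99.87%, recall is 100% for CI, and CI precision is 496/498, about 99.6%, since both misclassified cases were assigned to CI.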

To evaluate the robustness and generalizability of the proposed model, a fivefold cross-validation method [29] was used, as outlined in Table 5. The dataset was divided into five random subsets. During each iteration, one subset was used as the test set, while the remaining four subsets were used for training. This process was repeated five times, with shuffling enabled to ensure randomness and a fixed seed to maintain reproducibility. After each fold, the accuracy was recorded, and the overall performance was determined by computing the mean and standard deviation of the accuracy across all folds. The cross-validation results demonstrated a mean accuracy of 99.84% with a standard deviation of 0.00017, highlighting the model’s consistency and reliability.
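
The protocol described above (shuffle once with a fixed seed, hold out each of five folds in turn, then report the mean and standard deviation) can be sketched with the standard library alone. This is an illustrative sketch under stated assumptions: `kfold_splits`, `cross_validate`, the seed value 42, and the toy majority-class scorer are hypothetical stand-ins, and the use of the population standard deviation is an assumption, since the text does not say which variant was computed.

```python
import random
import statistics
from collections import Counter


def kfold_splits(n_samples, n_splits=5, seed=42):
    """Yield (train_idx, test_idx) pairs after one seeded shuffle."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)   # fixed seed -> reproducible folds
    base, extra = divmod(n_samples, n_splits)
    folds, start = [], 0
    for k in range(n_splits):
        size = base + (1 if k < extra else 0)  # spread the remainder
        folds.append(indices[start:start + size])
        start += size
    for k in range(n_splits):
        test_idx = folds[k]
        train_idx = [i for j, fold in enumerate(folds) if j != k for i in fold]
        yield train_idx, test_idx


def cross_validate(n_samples, train_and_score, n_splits=5, seed=42):
    """Run train_and_score on every fold; return scores, mean and std."""
    scores = [train_and_score(train_idx, test_idx)
              for train_idx, test_idx in kfold_splits(n_samples, n_splits, seed)]
    # Population standard deviation is an assumption made for this sketch.
    return scores, statistics.mean(scores), statistics.pstdev(scores)


# Toy stand-in for model training: predict the majority training label.
TOY_LABELS = [i % 3 for i in range(30)]


def majority_baseline(train_idx, test_idx):
    majority = Counter(TOY_LABELS[i] for i in train_idx).most_common(1)[0][0]
    return sum(TOY_LABELS[i] == majority for i in test_idx) / len(test_idx)


scores, mean_acc, std_acc = cross_validate(len(TOY_LABELS), majority_baseline)
print("fold accuracies:", [round(s, 3) for s in scores])
print(f"mean={mean_acc:.4f} std={std_acc:.4f}")
```

In a real run, `train_and_score` would train the model on `train_idx` and return its accuracy on `test_idx`. Only the fold bookkeeping is meant to carry over.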

In the top row of Fig. 6, the Capsule-only model shows a gradual increase in accuracy over epochs but plateaus below 85%, with a noticeable gap between training and validation curves indicating potential overfitting and suboptimal feature extraction. Its loss decreases slowly, never reaching particularly low values. In contrast, the bottom row reveals that the CAPCBAM model converges much more rapidly and achieves near-perfect accuracy (close to 100%) by around the fifth epoch, while both training and validation losses drop sharply to almost zero. The alignment of the training and validation curves in CAPCBAM further suggests robust generalization, with minimal signs of overfitting. Overall, these plots illustrate how the attention-based architecture integrated into Capsule Networks (via CBAM) dramatically accelerates learning and enhances classification performance compared to the standalone Capsule model.

In Fig. 7, we investigate the robustness of our ADNI image classification model by injecting several types of noise, with the goal of understanding how different noise types and levels affect performance across classes. We applied three perturbations to the original images: Gaussian blur with standard deviations (σ) of 0.2, 0.4, and 0.6 to simulate different levels of blurring; salt-and-pepper noise with probabilities (p) of 0.05, 0.1, and 0.15 to mimic pixel corruption; and speckle noise with standard deviations (σ) of 0.1, 0.2, and 0.3 to simulate multiplicative noise. Testing multiple parameter values for each noise type allowed us to observe their effects on the classification performance of the model. Evaluating robustness against such perturbations is crucial for developing more reliable deep learning models for Alzheimer’s disease diagnosis.
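
The three perturbations can be sketched in NumPy as follows. This is a hedged illustration rather than the authors' pipeline. It assumes grayscale images scaled to [0, 1], treats p as the total fraction of corrupted pixels split evenly between salt and pepper, and uses a hand-written separable blur. The function names and the random test image are hypothetical.

```python
import numpy as np


def gaussian_blur(image, sigma):
    """Separable Gaussian blur with reflect padding (2D grayscale image)."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()                      # normalise to unit sum
    padded = np.pad(image, radius, mode="reflect")
    # Convolve along rows, then along columns; 'valid' undoes the padding.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def salt_and_pepper(image, p, rng):
    """Set about p/2 of the pixels to 0 (pepper) and p/2 to 1 (salt)."""
    noisy = image.copy()
    mask = rng.random(image.shape)
    noisy[mask < p / 2] = 0.0
    noisy[mask > 1 - p / 2] = 1.0
    return noisy


def speckle(image, sigma, rng):
    """Multiplicative noise: image * (1 + n), n ~ N(0, sigma^2), clipped."""
    noise = rng.normal(0.0, sigma, image.shape)
    return np.clip(image * (1.0 + noise), 0.0, 1.0)


rng = np.random.default_rng(0)
image = rng.random((64, 64))                    # stand-in for an MRI slice

for sigma in (0.2, 0.4, 0.6):
    delta = np.abs(gaussian_blur(image, sigma) - image).mean()
    print(f"gaussian blur  sigma={sigma}: mean |change| = {delta:.4f}")
for p in (0.05, 0.1, 0.15):
    delta = np.abs(salt_and_pepper(image, p, rng) - image).mean()
    print(f"salt-pepper    p={p}: mean |change| = {delta:.4f}")
for sigma in (0.1, 0.2, 0.3):
    delta = np.abs(speckle(image, sigma, rng) - image).mean()
    print(f"speckle        sigma={sigma}: mean |change| = {delta:.4f}")
```

To reproduce a robustness curve, each perturbed copy of the test set would be passed through the trained classifier and the accuracy recorded per noise level.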

Figure 8 demonstrates the Grad-CAM-based generation of attention maps [30], illustrating how the model highlights crucial regions for Alzheimer’s classification. The underlying code first performs a forward pass through the model to collect feature maps, then computes gradients via a backward pass for the target class score. These gradients are pooled to derive weights, which are then combined with the activation maps to create a raw attention map. After normalization, resizing, and Gaussian blurring, this map is overlaid on the original MRI images to visualize regions of focus. In the figure, the original image is displayed alongside its corresponding heatmap and an overlaid image, where vivid attention regions coincide with clinically significant areas such as the hippocampus and cortical structures. This demonstrates that the integrated CBAM mechanism within the CAPCBAM framework effectively directs the model’s attention to the most relevant features for differentiating AD, CI, and CN cases, enhancing interpretability and supporting reliable clinical assessment.
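
The pooling and weighting steps described above can be sketched in NumPy, assuming the activations and gradients of one convolutional layer have already been extracted as arrays of shape (channels, height, width). This is a sketch of the standard Grad-CAM computation, not the authors' code. The ReLU comes from the Grad-CAM formulation rather than from the text, the Gaussian blurring step is omitted, and the function names, the integer upsampling factor, and the blending weight `alpha` are assumptions.

```python
import numpy as np


def grad_cam_map(activations, gradients):
    """Combine feature maps (C, H, W) with gradient-derived channel weights."""
    weights = gradients.mean(axis=(1, 2))              # pool gradients per channel
    cam = np.tensordot(weights, activations, axes=1)   # weighted sum -> (H, W)
    cam = np.maximum(cam, 0.0)                         # keep positive evidence (ReLU)
    peak = cam.max()
    return cam / peak if peak > 0 else cam             # normalise to [0, 1]


def upsample_nearest(cam, factor):
    """Nearest-neighbour resize by an integer factor (assumed for simplicity)."""
    return np.kron(cam, np.ones((factor, factor)))


def overlay(image, heatmap, alpha=0.4):
    """Blend a grayscale image in [0, 1] with a heatmap of the same shape."""
    return (1.0 - alpha) * image + alpha * heatmap


# Tiny synthetic example: two channels on a 2x2 feature map.
activations = np.array([[[1.0, 0.0], [0.0, 0.0]],
                        [[0.0, 0.0], [0.0, 2.0]]])
gradients = np.array([np.ones((2, 2)), -np.ones((2, 2))])

cam = grad_cam_map(activations, gradients)
heatmap = upsample_nearest(cam, 2)                     # 2x2 -> 4x4
blended = overlay(np.full((4, 4), 0.5), heatmap)
print(cam)
print(blended.shape)
```

In the synthetic example, the second channel receives a negative weight, so its activation is suppressed and only the top-left location remains highlighted.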

In Fig. 9, both subplots depict 2D UMAP [31] projections of feature representations learned by two different models: the proposed model (left) and DenseNet121 (right). Each point represents an image feature embedded into two dimensions for visualization, with color indicating the class. In the left plot, points of the same color appear more compact and better separated from the other colors, suggesting that the proposed model extracts more discriminative features. By contrast, while DenseNet121’s feature representations (right plot) also form distinct regions, there is greater overlap and mixing of different colors, implying lower separability of classes. Overall, this comparison highlights that the proposed model’s learned features yield stronger class separation, potentially leading to more accurate classification or clustering performance.

Conclusion and future work

In conclusion, this work presents a novel CAPCBAM framework that integrates Capsule Networks with the Convolutional Block Attention Module to advance Alzheimer’s disease detection from MRI scans. By leveraging the dynamic routing capabilities of Capsule Networks and the selective feature enhancement provided by CBAM, the model preserves spatial hierarchies and emphasizes clinically significant regions, thereby achieving near-perfect classification metrics on the ADNI dataset. Comparative evaluations against traditional transfer learning models and state-of-the-art approaches demonstrate the superior accuracy, robustness, and interpretability of CAPCBAM, as evidenced by comprehensive performance metrics, Grad-CAM visualizations, and UMAP feature projections. Although the proposed method exhibits impressive diagnostic performance and resilience to various noise conditions, its computational complexity and dependency on high-performance hardware remain notable limitations. Future work should therefore focus on developing more computationally efficient routing algorithms and exploring model compression techniques such as pruning and quantization to reduce resource consumption without sacrificing accuracy. Expanding the evaluation to diverse, clinically representative datasets will be crucial to assess the model’s robustness and adaptability across varied imaging environments. Furthermore, integrating multimodal approaches, such as combining MRI data with PET scans or clinical information, could enhance diagnostic performance and provide a more comprehensive tool for early Alzheimer’s detection, ultimately paving the way for more effective clinical translation and improved patient outcomes.

Data availability

The dataset used is publicly available on the Kaggle platform: ADNI Dataset (https://www.kaggle.com/datasets/katalniraj/adni-extracted-axial).

References

Desai, M. B., Kumar, Y. & Pandey, S. Efficient Approach for Diagnosis and Detection of Alzheimer Diseases Using Deep Learning. In 2024 International Conference on Advances in Computing Research on Science Engineering and Technology (ACROSET), 1–5 (IEEE, 2024).

Sujathakumari, B. A., Kulkarni, S. S. P. & Hallikeri, V. Brain magnetic resonance imaging image classification for Alzheimer’s disease and its hardware acceleration. IAES Int. J. Artificial Intelligence https://doi.org/10.11591/ijai.v13.i2.pp1272-1281 (2024).

Shengbin, L., Haoran, S., Fuqi, S. et al. Alzheimer’s disease classification algorithm based on fusion of channel attention and densely connected networks. Journal of Intelligent & Fuzzy Systems, preprint, 1–21 (2024).

Ma, H. et al. Classification of Alzheimer’s disease: application of a transfer learning deep Q-network method. Europ. J. Neurosci. 59(8), 2118–2127 (2024).

Li, Y. et al. Dominating Alzheimer’s disease diagnosis with deep learning on sMRI and DTI-MD. Front. Neurol. 15, 1444795 (2024).

Naidu, N. B. Early Detection of Alzheimers Disease through Deep Learning in MRI Scans. Int. J. For Sci. Technol. Eng. https://doi.org/10.22214/ijraset.2024.59137 (2024).

Rao, B. S., Aparna, M., Harikiran, J. & Reddy, T. S. An effective Alzheimer’s disease segmentation and classification using Deep ResUnet and Efficientnet. J. Biomol. Struc. Dyn. https://doi.org/10.1080/07391102.2023.2294381 (2023).

Slimi, H. et al. A combinatorial deep learning method for Alzheimer’s disease classification-based merging pretrained networks. Front. Comput. Neurosci. 18, 1444019 (2024).

Shanto, S. & Haque, N. Early Diagnosis and Classification of Alzheimer’s Disease Using Convolutional Neural Network Based on MRI Images. 199–203. https://doi.org/10.1109/icict64387.2024.10839698 (2024).

Ishaaq, N., Nafis, M. T., & Reyaz, A. Leveraging Deep Learning for Early Diagnosis of Alzheimer’s Using Comparative Analysis of Convolutional Neural Network Techniques. In A. Khang (Ed.), Driving Smart Medical Diagnosis Through AI-Powered Technologies and Applications (pp. 142–155). IGI Global Scientific Publishing. https://doi.org/10.4018/979-8-3693-3679-3.ch009 (2024).

Daher, M. & Elewi, A. Enhancing Early Diagnosis of Alzheimer’s Disease in MRI Images using Deep CNN Model. 1–6. https://doi.org/10.1109/idap64064.2024.10710771 (2024).

Pandiyaraju, V., Venkatraman, S., Abeshek, A., Aravintakshan, S. A., Kumar S, P. & Kannan, A. A Dual-Attention Aware Deep Convolutional Neural Network for Early Alzheimer’s Detection. https://doi.org/10.48550/arxiv.2407.10921 (2024).

Zhao, Z. et al. Machine learning approaches in comparative studies for Alzheimer’s diagnosis using 2D MRI slices. Turkish J. Elect. Eng. Comput. Sci. https://doi.org/10.55730/1300-0632.4057 (2024).

Abubakar, A., Jibrin, Y., Maina, M. B. & Maina, A. B. Classification of Alzheimer’s Disease Using CNN-Based Features and ViT-Global Contextual Patterns from MRI Images. Available at SSRN: https://doi.org/10.2139/ssrn.4811438

Devi, T. S. & Raghavendran, V. Alzheimer’s Disease Classification and Severity Level Identification in Image Processing Using Deep Learning Algorithms. In 2024 2nd International Conference on Sustainable Computing and Smart Systems (ICSCSS), Coimbatore, India, 1161–1166. https://doi.org/10.1109/icscss60660.2024.10625413 (2024).

Yang, M. et al. A Multi-Label Deep Learning Model for Detailed Classification of Alzheimer’s Disease. Actas Espanolas De Psiquiatria 53(1), 89–99. https://doi.org/10.62641/aep.v53i1.1728 (2025).

Li, X., Gong, B., Chen, X., Li, H. & Yuan, G. Alzheimer’s disease image classification based on enhanced residual attention network. PLoS ONE 20(1), e0317376. https://doi.org/10.1371/journal.pone.0317376 (2025).

ADNI Dataset: https://www.kaggle.com/datasets/katalniraj/adni-extracted-axial

Chawla, N. V. et al. SMOTE: synthetic minority over-sampling technique. J. Artificial Int. Res. 16, 321–357 (2002).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 1–15 (2014).

Marin, I., Kuzmanić Skelin, A. & Grujić, T. Empirical evaluation of the effect of optimization and regularization techniques on the generalization performance of deep convolutional neural network. Appl. Sci. 10(21), 7817. https://doi.org/10.3390/APP10217817 (2020).

Hossin, M. & Sulaiman, M. N. A review on evaluation metrics for data classification evaluations. Int. J. Data Min. Knowl. Manag. Process. 5(2), 1 (2015).

Fathi, S. et al. A deep learning-based ensemble method for early diagnosis of Alzheimer’s disease using MRI images. Neuroinformatics 22(1), 89–105 (2024).

Hu, Z. et al. Conv-Swinformer: Integration of CNN and shift window attention for Alzheimer’s disease classification. Comput. Biol. Med. 164, 107304 (2023).

Kang, W. et al. Three-round learning strategy based on 3D deep convolutional GANs for Alzheimer’s disease staging. Sci. Rep. 13(1), 5750 (2023).

Illakiya, T., Karthik, R., Alzheimer’s Disease Neuroimaging Initiative et al. A deep feature fusion network with global context and cross-dimensional dependencies for classification of mild cognitive impairment from brain MRI. Image and Vision Computing 144, 104967 (2024).

Thayumanasamy, I. & Ramamurthy, K. Performance analysis of machine learning and deep learning models for classification of Alzheimer’s disease from brain MRI. Traitement du Signal. 39(6), 1961 (2022).

Illakiya, T. & Karthik, R. Dimension centric proximate attention network and swin transformer for age-based classification of mild cognitive impairment from Brain MRI. IEEE Access 11, 128018–128031 (2023).

Anguita, D., Ghelardoni, L., Ghio, A., Oneto, L. & Ridella, S. The “K” in K-fold Cross Validation. The European Symposium on Artificial Neural Networks, 441–446. https://arpi.unipi.it/handle/11568/962587 (2012).

Selvaraju, R. R., Das, A., Vedantam, R. et al. Grad-CAM: Why did you say that? arXiv preprint arXiv:1611.07450 (2016).

Ghojogh, B., Ghodsi, A., Karray, F. et al. Uniform manifold approximation and projection (UMAP) and its variants: tutorial and survey. arXiv preprint arXiv:2109.02508 (2021).

Author information

Contributions

Conceptualization, H.S., S.A., and M.S.; Methodology, H.S., S.A., M.S.; Software, H.S., S.A., M.S.; Validation, S.A., M.S.; Formal analysis, H.S.; Investigation, S.A., M.S.; Writing—original draft preparation, H.S., S.A.; Project administration, M.S.

Ethics declarations

Competing interest

The authors state that no known competing financial interest or personal relationship may have had any influence on any of the work disclosed in this study.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Slimi, H., Abid, S. & Sayadi, M. Revolutionizing Alzheimer’s disease detection with a cutting-edge CAPCBAM deep learning framework. Sci Rep 15, 13925 (2025). https://doi.org/10.1038/s41598-025-98476-0

DOI: https://doi.org/10.1038/s41598-025-98476-0