Abstract

Visually impaired individuals suffer many problems in handling their everyday activities like road crossing, writing, finding an object, reading, and so on. However, many navigation methods are available, and efficient object detection (OD) methods for visually impaired people (VIP) need to be improved. OD is the most crucial contribution of computer vision (CV) and plays an important part in recognizing and finding objects in the image. The aged and visually challenged people can identify different objects accurately under some constraint features like scaled, occlusion, illuminated, and blurred nature in different real world. A considerable number of studies are performed in the domain of real-time object recognition using deep learning (DL). The DL-based methods remove the features autonomously and classify and detect the objects. This paper proposes a novel Object Detection Model for Visually Impaired Individuals with a Metaheuristic Optimization Algorithm (ODMVII-MOA) technique. The proposed ODMVII-MOA technique aims to improve OD methods in real-time with advanced techniques to detect and recognize objects for disabled people. At first, the image pre-processing stage applies the Weiner filter (WF) method to enhance image quality by eliminating the unwanted noise from the data. Furthermore, the RetinaNet technique is utilized for the OD process to recognize and locate objects within an image. Besides, the proposed ODMVII-MOA method employs the EfficientNetB0 method for the feature extraction process. For the classification process, the LSTM-AE method is employed. Finally, the Dandelion Optimizer (DO) method adjusts the hyperparameter range of the LSTM-AE method optimally and results in better performance of classification. The experimental validation of the ODMVII-MOA model is verified under the indoor OD dataset and the outcomes are determined regarding different measures. The comparison study of the ODMVII-MOA model portrayed a superior accuracy value of 99.69% over existing techniques.

Similar content being viewed by others

Introduction

A human being’s essential feature is often his vision ability1. The capacity of being able to see objects with the eyes is considered a gift and a major factor in everyday activities. The significant challenge for numerous VIPs is not able to be completely independent and being restricted by their vision2. Visually impaired individuals mostly encounter significant threats with everyday activities, and object recognition is a significant feature they depend on regularly. They specifically face difficulty with recognizing movement and objects in their environment, particularly while walking on the streets3. Vision loss usually occurs around the age of 50, a concern that is becoming more prevalent due to the ageing population. Vision impairment can cause diverse reasons like diabetic retinopathy, uncorrected refractive errors, unaddressed presbyopia, glaucoma, corneal opacity, and cataracts4. Numerous methods are intended to assist VIPs and enhance their living standards. Unfortunately, most of these methods are restricted in their capability. Artificial intelligence (AI) is initiating novel methods for individuals with disabilities to access the globe. OD is a significant task of CV, which handles identifying samples of visual objects of certain classes in digital images5. The purpose of OD is to progress computational techniques and models that offer the most fundamental pieces of knowledge required by the application of CV: What objects are where? The dual important metrics for OD are speed and precision6.

OD acts as an origin for multiple other tasks of CV, like object tracking, instance segmentation, image captioning, and more. Over recent years, the faster growth of DL models has greatly endorsed the enhancement of OD, resulting in remarkable innovations and propelling it to an investigation hotspot with unprecedented attention7. OD is broadly employed in various real-time applications namely robot vision, video surveillance, autonomous driving, and so on. Different methodologies have been utilized to identify objects effectively and accurately in various applications. The DL models remove the features individually to classify and detect the objects. Since a model is proficient with multiple objects, a DL-based OD method offers a precise outcome8. Internet of Things (IoT) gadgets, for example, microcontrollers can run neural networks effectively. Therefore, a DL technique is combined with IoT methods to progress it as an assistive gadget for visually challenged individuals. Numerous effective DL-based OD structures are accessible. The growing population of VIPs faces growing challenges in daily life, specifically in navigating their environment and recognizing objects9. With improvements in AI and CV, there is a significant opportunity to develop systems that can assist these individuals in real-time, improving their independence. Object detection, significant for situational awareness, is optimized with advanced techniques to give reliable feedback. Metaheuristic optimization algorithms and advanced object detection models can improve assistive technologies for VIPs, enhancing accuracy, safety, and autonomy. These innovations enable real-time object recognition, boosting confidence and independence in navigating their environment10.

This paper proposes a novel Object Detection Model for Visually Impaired Individuals with a Metaheuristic Optimization Algorithm (ODMVII-MOA) technique. The proposed ODMVII-MOA technique aims to improve OD methods in real-time with advanced techniques to detect and recognize objects for disabled people. At first, the image pre-processing stage applies the Weiner filter (WF) method to enhance image quality by eliminating the unwanted noise from the data. Furthermore, the RetinaNet technique is utilized for the OD process to recognize and locate objects within an image. Besides, the proposed ODMVII-MOA method employs the EfficientNetB0 method for the feature extraction process. For the classification process, the LSTM-AE method is employed. Finally, the Dandelion Optimizer (DO) method adjusts the hyperparameter range of the LSTM-AE method optimally and results in better performance of classification. The experimental validation of the ODMVII-MOA model is verified under the indoor OD dataset and the outcomes are determined regarding different measures. The key contribution of the ODMVII-MOA model is listed below.

-

The ODMVII-MOA model employs WF-based image pre-processing to enhance image quality by mitigating noise and enhancing clarity. This step confirms more accurate OD by giving cleaner input data. By minimizing noise interference, the approach strengthens the overall performance of the object recognition process.

-

The ODMVII-MOA technique utilizes RetinaNet-based OD to effectually detect and localize objects within images. This approach improves the accuracy of the detection process by concentrating on the focal loss function, which addresses class imbalance issues. It allows for precise object recognition, even in complex environments with varying object sizes.

-

The ODMVII-MOA method implements EfficientNetB0-based feature extraction to generate effectual and high-quality feature representations. This method significantly mitigates computational costs while maintaining performance. By optimizing the feature extraction process, it improves the capability of the model to detect and classify objects with greater accuracy.

-

The ODMVII-MOA methodology employs DO-based tuning to fine-tune the parameters and improve detection accuracy. This optimization technique effectively enhances convergence speed and model performance. By adjusting parameters in a dynamic and adaptive manner, it results in more precise object detection in diverse scenarios.

-

Integrating the DO with RetinaNet and EfficientNetB0 for OD, integrated with WF-based pre-processing, creates a novel approach to improving accuracy and efficiency. This unique integration addresses challenges such as noise reduction, class imbalance, and computational efficiency. The use of DO additionally improves optimization, allowing for enhanced performance in dynamic and complex environments.

Literature survey

In11, a real-world edge-based hazardous OD method is projected that employs a lightweight DL method for classifying objects taken by a camera positioned on Raspberry Pi edge gadgets. By alerting and identifying VIPs to the existence of hazardous objects in their settings, this method can considerably enhance their independence and safety. Chinni et al.12presented a transformative OD method intended to upgrade the navigational capability of VIPs over the application of sophisticated CV technology. Employing the YOLO technique, connected to the comprehensive object collection (COCO) dataset, this method offers real-world, precise classification and OD. The core capability of the application permits the processing of either static images or live video feeds, allowing blind users to receive auditory announcements of neighbouring objects. In13, a smart assistive navigation method is projected that integrates voice-over with OD. The mobile application employs audio and voice only to offer navigational guidance to consumers. Likewise identifying objects, the built prototype permits the user and access the environmental data namely surface wetness, item distance, and orientation. Talaat et al.14 projected SightAid. This novel wearable vision method employs a DL-based structure. SightAid includes a seven-stage architecture with sophisticated DNN with many convolutional and FC layers. Interaction with users assisted through haptic feedback or audio. A continuous learning mechanism, combining new data and user feedback, guaranteeing the system’s adaptability and ongoing refinement. More et al.15developed an OD model particularly described for VIP utilizing the YOLO-v8. The application of this OD enlarges multiple features of day-to-day life, accessibility, and promoting inclusivity for VIPs and bridging the gap between progressive investigation and practical assistive technology with the assistance of auditory feedback and edge devices. Sugashini and Balakrishnan16 projected an innovative economically effective, robust, handy, and simple solution with the help of smartphones. After identifying another stage is to evaluate the distance among the objects and VIPs to interact with them utilizing the headphones or audio devices. Arifando et al.17presented the Improved-YOLO-v10, an innovative method intended to address challenges in pov classification and bus identification by combining Adaptive Kernel Convolution (AKConv) and co-ordinate attention (CA) into the YOLO-v10 structure. The Improved YOLO-v10 progresses the YOLO-v10 framework over the integration of CA that improves spatial awareness, AKConv, and longer-range dependency modelling that are dynamically fine-tuned convolutional kernels for extracting features. Gomaa and Saad18 propose a novel residual channel-attention (RCA) network for scene classification, integrating a lightweight residual structure for multi-scale feature capture with a channel attention mechanism to highlight relevant features. Furthermore, a squeeze-and-excitation (SE) mechanism is integrated to prioritize key features and minimize background noise.

In19, a resilient semi-automatic method for unsupervised object detection, incorporating YOLOv8 with background subtraction and clustering is introduced. The approach eliminates manual labelling by refining background subtraction results and utilizing them to fine-tune YOLOv8 for OD and classification. Navya et al.20utilize ML and CV to automatically detect hypochromic microcytes in PBS images for improved IDA diagnosis, utilizing ResNet50 for classification and YOLOv7 for localization. Gomaa, Abdelwahab, and Abo-Zahhad21 propose a real-time approach for vehicle detection and tracking in aerial videos, incorporating Top-hat and Bottom-hat transformations for detection and motion analysis utilizing KLT tracker and K-means clustering. An efficient algorithm assigns vehicle labels to corresponding trajectories. Wei et al.22propose a Refined-Dilated Hybrid Bridge Feature Pyramid Network-Small Object Detection YOLOv8 (RHS-YOLOv8) method, which improves feature extraction with Refined-Dilated convolution, Hybrid Bridge Feature Pyramid Network (HBFPN), and Efficient Localization Attention (ELA), improves spatial relationships with an Involution module, and adds a small OD branch for improved performance. Gomaa and Abdalrazik23present a semi-automatic method integrating modified YOLOv4 and background subtraction for unsupervised object detection, eliminating manual labelling by using motion information to generate automatically labelled data. Zhao, Feng, and Wang24present a remote sensing object detection network (SCENE-YOLO) technique, a remote sensing OD network built on YOLOv8 with scene supervision. It integrates a scene information gathering and distribution network (SGD) model for high-level semantic injection, an omni-dimensional dynamic convolution (ODConv) method for feature weight redistribution, a scene label generation algorithm (SLGA) technique for model supervision, and a scene-assisted detection head (SADHead) method for improved performance in complex backgrounds. Abdalrazik, Gomaa, and Afifi25 propose a tri-band elliptical patch antenna for L2, L5, and L1 GNSS bands, utilizing a probe-fed patch for circular polarization and an eye-shaped aperture to improve beamwidth, with a stacked parasitic element improving performance at the L1 band. Wang et al.26 present a dynamic adversarial attack with transferable patches for non-rigid objects, optimized utilizing a cascade module and momentum technique, and improved with distance-adaptive generation and perspective transformation for improved robustness. Patil et al.27 improve OD by mitigating image size with Gaussian Blur and utilizing colour descriptors and Fourier transforms for feature extraction, surpassing conventional restricted Boltzmann machine (RBM) and deep neural network (DNN) methods.

Despite crucial improvements in OD systems for VIPs, various limitations still exist. Many methods still face difficulty with real-time processing and accuracy in dynamic environments, specifically in complex backgrounds and diverse object types. Existing models often need precise labelling and manual interventions, restricting their scalability and adaptability. Moreover, the integration of advanced techniques such as RBM and DNN in OD models is still constrained by computational complexity and feature extraction inefficiency. Moreover, many systems encounter threats in object classification under varying conditions such as lighting or occlusions. The requirement for more robust, real-time, and adaptable systems remains evident across several domains, from surveillance to assistive technologies for VIPs.

The proposed methodology

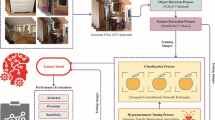

In this study, a novel ODMVII-MOA technique is introduced. The proposed ODMVII-MOA technique aims at improving the OD method in real-time with advanced techniques to detect and recognize objects for disabled people. It holds image preprocessing, OD, feature extractor, hybrid classification, and parameter tuning. Figure 1 depicts the entire flow of the ODMVII-MOA model.

Image preprocessing

At first, the image pre-processing stage applies the WF method to enhance image quality by eliminating the unwanted noise from the data28. This method is chosen for its capability to effectively mitigate noise and enhance image quality, which is significant for accurate OD. Unlike other methods, the WF adapts to local variations in the image, making it highly effective for removing noise without blurring crucial features. This is particularly beneficial in real-world scenarios where images may have varying levels of distortion or noise. The method is computationally efficient, enabling faster preprocessing while maintaining high-quality input for the detection model. Its capacity to improve image clarity enhances the overall performance of downstream models, making it more reliable in complex and noisy environments. Additionally, the WF model is a well-established technique, ensuring robustness and consistency across diverse datasets. Figure 2 illustrates the overall structure of the WF technique.

WF is a signal processing method applied for image restoration and denoising, making it beneficial in OD applications. In OD, WF aids in increased feature extraction by improving edges and significant details, resulting in improved detection precision. It is mainly beneficial in pre-processing stages for DL methods such as RetinaNet, whereas higher-quality input images increase performance. The filter adjusts to local image variants, making it efficient in processing real-time noisy environments. Its combination with feature extraction methods can result in enhanced OD in complex environments.

OD using retinanet

Next, the RetinaNet technique is utilized for the OD process to recognize and locate objects within an image29. This technique is chosen due to its efficiency in handling the class imbalance issue, which is a general threat in many real-world datasets. Its use of the focal loss function allows it to concentrate more on hard-to-detect objects, enhancing detection accuracy, particularly for small or rare classes. Unlike other models, RetinaNet strikes a balance between speed and accuracy, making it appropriate for real-time applications. It also performs well in both dense and sparse object scenarios, making it versatile for a wide range of detection tasks. The capability of the architecture to efficiently detect objects at diverse scales additionally improves its performance. Its proven track record in multiple domains, comprising autonomous driving and surveillance, underscores its reliability and robustness. Figure 3 represents the architecture of RetinaNet.

DL-based OD methods are separated into dual families. The 1 st family is made of dual‐phase recognition methods, like R‐CNN and its elements. This type of model splits the OD task into a dual phase that includes making candidate areas before implementing regression and classification. Therefore, they naturally can attain higher detection precision, however, they frequently have slower detection speed. The 2nd family comprises ‐phase DL‐based OD methods, like YOLO and its elements. These models explain OD to the end-to-end regression and classification task without making a candidate area. Therefore, they are normally faster but are frequently more uncertain in comparison with two‐phase systems. How to attain either higher accuracy or speed became a difficult task in the development of DL‐based OD analyses. Observed that these challenges mostly originate from the great imbalance normally discovered in sample class allocations. They presented the new function of loss called focal loss (FL) as a replacement for the conventional cross-entropy loss, and they established the RetinaNet networks for testing this FL task. FL is described in Eqs.(1-3)

Whereas, \(\:y\) means the label, \(\:y=1\) signifies that the instance constitutes the positive class. \(\:p\in\:\left[\text{0,1}\right]\) for binary classification epitomizes the possibility that the samples should be forecast as the positive instance. \(\:{p}_{t}\) denotes the method’s projected possibility \(\:p\) for positive class, or else is the l-\(\:p\) for negative class. \(\:\alpha\:\in\:\left[\text{0,1}\right]\) refers to the weighting factor applied to fine-tune the presentations to the FL from negative and positive samples. Akin to the description of \(\:{p}_{t},\) \(\:{\alpha\:}_{t}\) refers to \(\:\alpha\:\) for the positive class, else is the \(\:1-\alpha\:\) for the negative class. \(\:\gamma\:>0\) represents the modulation feature applied to overwhelm the sample weight which is classified and to manage the method to concentrate on instances that are in addition problematic to categorize. Fixed\(\:\alpha\:=0.25\) and \(\:\gamma\:=2\) in this research. Due to advancements in FL, it was ultimately shown that RetinaNet can perform real-world object detection with either higher speed or accuracy.

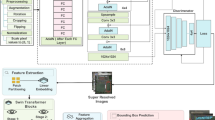

EfficientNetB0 feature extractor

Besides, the proposed ODMVII-MOA method implements the EfficientNetB0 method for the feature extraction process30. This technique is chosen due to its superior efficiency in balancing the performance and computational cost of the model. By utilizing a compound scaling method, it optimizes depth, width, and resolution simultaneously, attaining advanced accuracy with lesser parameters compared to other architectures. This makes EfficientNetB0 ideal for scenarios with restricted computational resources while maintaining high-quality feature extraction. Additionally, its lightweight nature allows for faster inference, which is significant in real-time applications. Unlike other deep models, EfficientNetB0 is designed to scale well, making it highly adaptable to diverse dataset sizes and complexities. Its efficiency confirms robust performance without the trade-offs in accuracy or speed commonly found in other models. Figure 4 demonstrates the EfficientNetB0 framework.

EfficientNet-B0 is the primary method of the EfficientNet family that begins with the layer of stem convolution. It is followed by numerous MBConv blocks that apply depth-wise separable convolutional. This convolution divided the normal convolution 1 into depth-wise convolutional individually filters the input channel for reading, and point-wise convolutional which combines the output of the depth-wise convolution. These separation type considerably reduces the parameter counts and computing cost by the features mapping, however, the feature extraction capacity stays very effective. The MBConv blocks in EfficientNet-B0 are more accurately allowed to identify the best features from the images like the minute parts in the images which are extremely valuable. Eventually, to combine each of the features gained from the extraction procedure, it combines Global Average Pooling. This layer down-samples the provided feature mapping from the preceding convolution layer in the average method and preserves simply the significant features. The result of these models is the lower comprehensive vector still concentrates on significant features. The last output is then transmitted to the dense classification layer but the presence should be recognized.

Hybrid LSTM-AE classification

For the classification process, the LSTM-AE method is employed31. This model is chosen for its capacity to effectually capture both temporal dependencies and spatial features in sequential data. LSTM outperforms at learning long-range dependencies, making it ideal for tasks comprising time series or sequential data. The AE component gives unsupervised feature learning, enabling the model to extract meaningful patterns from raw input data, which enhances the classification process. Integrating these two techniques allows the model to improve both feature extraction and sequence modelling capabilities, outperforming conventional methods that may face difficulty with complex, high-dimensional data. Furthermore, the hybrid approach mitigates overfitting by utilizing both supervised and unsupervised learning, making it more robust and efficient. This results in superior classification accuracy, particularly in dynamic environments with growing data. Figure 5 specifies the LSTM-AE approach.

AE is the form of ANN that learns how to effectively encode unlabeled data. It incorporates the succeeding dual portions: decoder and encoder. The initial portion addresses the reduction and generation of a few models of the characteristics from the first input \(\:x\) over the hidden layer (HL) \(\:h\). The overall objective of the AE represents the transformation of higher- to lower‐dimensional data. Determine the input layer \(\:X\in\:{R}^{l}\), the HL \(\:h\in\:{R}^{p}\), and the layer of output as \(\:x\in\:{R}_{l}\). The input data maps are implemented based on Eq. (4), while to condense the concealed representations \(\:p<l\), and \(\:\phi\:\) means the function of activation, \(\:W,\) and \(\:b\) refers to the weighted matrix and biased vector. These representations of the concealed data are applied to rebuild the input data afterwards seeing Eq. (5).

The LSTM-AE is an incorporation of the AE and the encoding-decoding LSTM structure. The AE tries to identify the most related special designs within the training data by minimizing the latent area at the input \(\:n<m\). It includes the succeeding 3 layers: the output layer, the input layer, and the hidden area. The training process contains various phases. During this next phase, the system learning for decoding moves back to the new input. The error of mean reconstruction is measured. The weighting is altered by the BP model.

The input is \(\:X\in\:{R}^{m}\), whereas the encoding compress \(\:x\) to get the encoding representation \(\:z=e\left(x\right)\in\:{R}^{n}\). Formerly, the decoder rebuilds this model to get \(\:X=d\left(z\right)\in\:{R}^{m}\) as the output. The training procedure aims to reduce the reconstruction error, as presented in Eq. (6).

Parameter tuning using DO

Finally, the DO approach fine-tunes the hyperparameter range of the LSTM-AE model optimally and results in better performance of classification32. This approach is chosen due to its capability to effectually optimize complex, non-convex objective functions. Unlike conventional optimization algorithms like gradient descent, DO does not depend on derivative information, making it appropriate for problems where gradient-based methods struggle, such as in noisy or multimodal landscapes. It gives a robust global search capability that averts local minima, resulting in improved solutions in challenging optimization scenarios. Additionally, DO adapts dynamically, adjusting its search strategy during optimization to improve convergence speed and accuracy. The capability to fine-tune model parameters in real-time enhances overall model performance and generalization. By utilizing DO, the parameter tuning process becomes more effectual, ensuring optimal model configurations even in highly variable environments. Figure 6 indicates the steps involved in the DO model.

The DO is a bio-inspired optimizer model derived from the dispersion procedure of dandelion seeds. The DO model transforms these biological phenomena into the optimizer structure which balances exploitation and exploration, making it suitable for resolving composite and higher‐dimensional optimizer problems. The DO works in the following 3 major steps: rising, decline, and landing phases. All phases symbolize an alternative phase of the optimizer procedure, facilitating either exploration or exploitation of the searching region.

Rising phase

Dandelion seeds are spread in the air, imitating the exploration stage. The model presents uncertainty over parameters like the scaling feature \(\:\lambda\:\), angle \(\:\theta\:\), and random vectors NEW. The location of every seed is upgraded as presented in Eq. (7):

Whereas \(\:\alpha\:\) refers to the scaling factor; \(\:{v}_{x}\) and \(\:{v}_{y}\) mean velocity elements; \(\:\lambda\:\) stands for random scaling feature; and NEW denotes randomly produced vector.

Decline phase

Once the seeds have grown, they start to run down, representing the migration from exploration to exploitation. Here, the population is fine-tuned according to the mean location of the seeds and a random feature \(\:\beta\:\), guaranteeing that seeds determine nearer feasible locations, as demonstrated in Eq. (8):

Here, \(\:{p}_{mean}\) characterizes the mean location of the population, and \(\:\beta\:\) denotes randomly formed features which control the fall. This phase improves the search and utilizes the best-discovered solutions.

Landing phase

During this last phase, the seeds land and settle into the best locations. This phase is focused on a Lévy flight (LF) that improves global exploration by presenting large, arbitrary stages. The location upgrade within the landing phase is provided, as exposed in Eq. (9):

Whereas Elite \(\:(i,j)\) characterizes the top individuals inside the population; Step length \(\:(i,j)\) refers to step size established by the distribution of LF; \(\:l\) denotes present iteration, and Max \(\:iter\) signifies maximal iteration counts.

Exploration and exploitation balance

The DO successfully balances exploitation and exploration over its 3-phase procedure. The increasing phase underlines exploration by permitting the model to search new areas of the searching region. The landing and decline phase move the concentration toward exploitation, refines the solutions, and converges near the best outcomes.

LF mechanism

LFs present randomness with a likelihood distribution which allows for larger, arbitrary stages, assisting the model escaping local ideals and exploring novel regions of the search area. This method is mainly useful in higher-dimensional and multi-modal optimizer issues, while the searching region is vast and complex. The DO is summarized accurately over the succeeding stages: Rising phase

Decline phase

Landing phase

The DO has proven to function well on various benchmark optimizer difficulties, mainly those that are higher-dimensional and multi-modal. Its capability to balance exploitation and exploration, incorporated with the LF method, makes it a stronger and more useful model to resolve composite real‐time difficulties. The DO model initiates a fitness function (FF) to reach the boosted outcome of classifications. It establishes a progressive number to characterize the greater performance of the candidate solutions.

Result analysis and discussion

The experimental analysis of the ODMVII-MOA model is examined under the indoor OD dataset33. This dataset contains 6642 samples under 10 objects. The complete details of the dataset are given below in Table 1.

Figure 7 presents the classifier outcomes of the ODMVII-MOA approach. Figure 7a and b shows the confusion matrices with truthful classification of each class label under 70%TRAPHA and 30%TESPHA. Figure 7c shows the PR values, showing optimal performance over every class. Besides, Fig. 7d demonstrates the ROC values, signifying accomplished outcomes with maximum ROC values for dissimilar classes.

Table 2; Fig. 8 established the OD of the ODMVII-MOA technique under 70:30 of TRAPHA/TESPHA. Based on 70% TRAPHA, the proposed ODMVII-MOA technique gains an average \(\:acc{u}_{y}\) of 99.69%, \(\:pre{c}_{n}\) of 94.40%, \(\:rec{a}_{l}\) of 89.63%, \(\:{F1}_{score}\:\)of 91.22%, and \(\:MCC\) of 91.39%. Besides, depending upon 30%TESPHA, the proposed ODMVII-MOA approach accomplishes an average \(\:acc{u}_{y}\) of 99.67%, \(\:pre{c}_{n}\) of 96.43%, \(\:rec{a}_{l}\) of 87.72%, \(\:{F1}_{score}\:\)of 90.53%, and \(\:MCC\) of 91.07%.

In Fig. 9, the training (TRAN) \(\:acc{u}_{y}\) and validation (VALN) \(\:acc{u}_{y}\) outcomes of the ODMVII-MOA model are demonstrated. The figure highlighted that both \(\:acc{u}_{y}\) analyses exhibit a growing trend which informed the capability of the ODMVII-MOA with better performance across distinct iterations. Moreover, both \(\:acc{u}_{y}\) remain closer across the epochs, which shows lesser overfitting and displays the greater solution of the ODMVII-MOA.

In Fig. 10, the TRAN loss (TRANLOSS) and VALN loss (VALNLOSS) analysis of the ODMVII-MOA model is exhibited. It is denoted that both values illustrate a lessening tendency, updating the capacity of the ODMVII-MOA in balancing a trade-off between data fitting and generalization. The continual reduction in loss values additionally promises the enhanced performance of the ODMVII-MOA model and tunes the prediction outcomes.

Table 3; Fig. 11illustrate the comparative results of the ODMVII-MOA technique with existing models34,35,36. The results emphasized that the Yolo-V8, Faster R-CNN, Yolo-v5n, GSDN, FCAF3D, Instant-NGP, and 3DGS-DR models have reported lesser performance. Whereas, the ODMVII-MOA approach stated the highest performance with high \(\:acc{u}_{y},\:\:pre{c}_{n}\), \(\:rec{a}_{l},\)and \(\:{F1}_{score}\) of 99.69%, 94.40%, 89.63%, and 91.22%, respectively.

The time complexity (TC) result of the ODMVII-MOA technique is illustrated in Table 4; Fig. 12. Based on TC, the ODMVII-MOA method presents a minimal TC of 10.86 s while the Yolo-V8, Faster R-CNN, Yolo-v5n, GSDN, FCAF3D, Instant-NGP, and 3DGS-DR methodologies achieve higher TC values of 21.28 s, 18.37 s, 13.98 s, 17.35 s, 25.43 s, 18.13 s, and 18.40 s, correspondingly.

Conclusion

In this paper, a novel ODMVII-MOA methodology is introduced. The proposed ODMVII-MOA methodology aims at improving the OD method in real-time with advanced techniques to detect and recognize objects for disabled people. At first, the image pre-processing stage applies the WF method to enhance image quality by eliminating the unwanted noise from the data. Furthermore, the RetinaNet technique is utilized for the OD process to recognize and locate objects within an image. Besides, the proposed ODMVII-MOA method employs the EfficientNetB0 method for the feature extraction process. For the classification process, the LSTM-AE method is employed. Finally, the DO method adjusts the hyperparameter range of the LSTM-AE method optimally and results in better performance of classification. The experimental validation of the ODMVII-MOA model is verified under the indoor OD dataset and the outcomes are determined regarding different measures. The comparison study of the ODMVII-MOA model portrayed a superior accuracy value of 99.69% over existing techniques. The limitations of the ODMVII-MOA model comprise various aspects that could affect its generalizability and performance in real-world applications. First, the approach may be sensitive to discrepancies in environmental conditions, such as lighting changes or sensor noise, which could mitigate its robustness. Second, the reliance on a fixed dataset limits the adaptability of the technique to unseen scenarios or diverse environments. Furthermore, the computational complexity of the algorithm may affect real-time processing in resource-constrained systems. The requirement for large training datasets and the time-consuming nature of model tuning additionally restrict its scalability. Future works may concentrate on improving the efficiency of the model through transfer learning and integrating adaptive mechanisms for enhanced robustness in dynamic environments. Additionally, exploring real-time deployment on edge devices and combining multi-modal data sources could result in a more versatile system.

Data availability

The data that support the findings of this study are openly available in the Kaggle repository at https://www.kaggle.com/datasets/thepbordin/indoor-object-detection, reference number [33].

References

Bhandari, A., Prasad, P. W. C., Alsadoon, A. & Maag, A. Object detection and recognition: using deep learning to assist the visually impaired. Disabil. Rehabilitation: Assist. Technol. 16 (3), 280–288 (2021).

Naqvi, K., Hazela, B., Mishra, S. & Asthana, P. Employing real-time object detection for visually impaired people. In Data Analytics and Management: Proceedings of ICDAM (pp. 285–299). Springer Singapore. (2021).

Theodorou, L. et al. October. Disability-first dataset creation: Lessons from constructing a dataset for teachable object recognition with blind and low vision data collectors. In Proceedings of the 23rd International ACM SIGACCESS Conference on Computers and Accessibility (pp. 1–12). (2021).

Abid Siddique, R. V. & Naik, S. A Survey on Gesture Control Techniques for Smart Object Interaction in Disability Support. Electronics, 12, p.512. (2023).

Lecrosnier, L. et al. Deep learning-based object detection, localisation and tracking for smart wheelchair healthcare mobility. International journal of environmental research and public health, 18(1), p.91. (2021).

Joshi, R. C., Yadav, S., Dutta, M. K. & Travieso-Gonzalez, C. M. Efficient multi-object detection and smart navigation using artificial intelligence for visually impaired people. Entropy, 22(9), p.941. (2020).

Masud, U., Saeed, T., Malaikah, H. M., Islam, F. U. & Abbas, G. Smart assistive system for visually impaired people obstruction avoidance through object detection and classification. IEEE Access. 10, 13428–13441 (2022).

Jubair, M. A. et al. Multi-objective optimization in Satellite-Assisted UAVs. J. Intell. Syst. Internet Things, 16(1). (2025).

Nasreen, J., Arif, W., Shaikh, A. A., Muhammad, Y. & Abdullah, M. December. Object detection and narrator for visually impaired people. In 2019 IEEE 6th international conference on engineering technologies and applied sciences (ICETAS) (pp. 1–4). IEEE. (2019).

Madhuri, A. & Umadevi, T. Role of context in visual Language models for object recognition and detection in irregular scene images. Fusion: Pract. Appl., 15(1). (2024).

Kadam, U. et al. Hazardous object detection for visually impaired people using edge device. SN Comput. Sci. 6 (1), 1–13 (2025).

Chinni, N. P. K. et al. July. Vision Sense: Real-Time Object Detection And Audio Feedback System For Visually Impaired Individuals. In 2024 2nd World Conference on Communication & Computing (WCONF) (pp. 1–6). IEEE. (2024).

Okolo, G. I., Althobaiti, T. & Ramzan, N. Smart Assistive Navigation System for Visually Impaired People. Journal of Disability Research, 4(1), p.20240086. (2025).

Talaat, F. M., Farsi, M., Badawy, M. & Elhosseini, M. SightAid: empowering the visually impaired in the Kingdom of Saudi Arabia (KSA) with deep learning-based intelligent wearable vision system. Neural Comput. Appl. 36 (19), 11075–11095 (2024).

More, S. S. et al. Empowering the visually impaired: YOLOv8-based object detection in android applications. Procedia Comput. Sci. 252, 457–469 (2025).

Sugashini, T. & Balakrishnan, G. Visually impaired object segmentation and detection using hybrid Canny edge detector, Hough transform, and improved momentum search in YOLOv7. Signal, Image and Video Processing, 18(Suppl 1), pp.251–265. (2024).

Arifando, R., Eto, S., Tibyani, T. & Wada, C. January. Improved YOLOv10 for visually impaired: balancing model accuracy and efficiency in the case of public transportation. In Informatics (Vol. 12, No. 1, 7). MDPI. (2025).

Gomaa, A. & Saad, O. M. Residual Channel-attention (RCA) network for remote sensing image scene classification. Multimedia Tools Appl., pp.1–25. (2025).

Gomaa, A. October. Advanced domain adaptation technique for object detection leveraging semi-automated dataset construction and enhanced yolov8. In 2024 6th Novel Intelligent and Leading Emerging Sciences Conference (NILES) (pp. 211–214). IEEE. (2024).

Verma, K. T. N., Prasad, S. & Kumar Singh, B. M. K. and Efficient diagnostic model for iron deficiency anaemia detection: a comparison of CNN and object detection algorithms in peripheral blood smear images. Automatika, 66(1), pp.1–15. (2025).

Gomaa, A., Abdelwahab, M. M. & Abo-Zahhad, M. Efficient vehicle detection and tracking strategy in aerial videos by employing morphological operations and feature points motion analysis. Multimedia Tools Appl. 79 (35), 26023–26043 (2020).

Wei, Y., Tao, J., Wu, W., Yuan, D. & Hou, S. RHS-YOLOv8: A Lightweight Underwater Small Object Detection Algorithm Based on Improved YOLOv8. Applied Sciences, 15(7), p.3778. (2025).

Gomaa, A. & Abdalrazik, A. Novel deep learning domain adaptation approach for object detection using semi-self building dataset and modified yolov4. World Electric Vehicle Journal, 15(6), p.255. (2024).

Zhao, T., Feng, R. & Wang, L. SCENE-YOLO: A One-stage remote sensing object detection network with scene supervision. IEEE Trans. Geosci. Remote Sens. (2025).

Abdalrazik, A., Gomaa, A. & Afifi, A. Multiband circularly-polarized stacked elliptical patch antenna with eye-shaped slot for GNSS applications. Int. J. Microw. Wirel. Technol. 16 (7), 1229–1235 (2024).

Wang, X. et al. Transferable and robust dynamic adversarial attack against object detection models. IEEE Internet Things J. (2025).

Patil, S. et al. May. Object Recognition and Tracking System to Assist Visually Impaired: A Neural Network-Based Deep RBM Technique. In 2024 International Conference on Electronics, Computing, Communication and Control Technology (ICECCC) (pp. 1–6). IEEE. (2024).

Yu, M., Su, J., Wang, Y. & Han, C. A noise reduction method for rolling bearing based on improved wiener filtering. Rev. Sci. Instrum., 96(2). (2025).

Wang, M., Liu, R., Luttrell, I. V., Zhang, J. & Xie, J. C. and Detection of masses in mammogram images based on the enhanced retinanet network with inbreast dataset. J. Multidisciplinary Healthc., pp.675–695. (2025).

Sambasivam, G., Prabu kanna, G., Chauhan, M. S., Raja, P. & Kumar, Y. A hybrid deep learning model approach for automated detection and classification of cassava leaf diseases. Scientific Reports, 15(1), p.7009. (2025).

Duraj, A., Szczepaniak, P. S. & Sadok, A. Detection of Anomalies in Data Streams Using the LSTM-CNN Model. Sensors, 25(5), p.1610. (2025).

Al Hwaitat, A. K., Fakhouri, H. N., Zraqou, J. & Sirhan, N. Hybrid Optimization Algorithm for Solving Attack-Response Optimization and Engineering Design Problems. Algorithms, 18(3), p.160. (2025).

https://www.kaggle.com/datasets/thepbordin/indoor-object-detection

Nimma, D. et al. Object detection in real-time video surveillance using attention based transformer-YOLOv8 model. Alexandria Eng. J. 118, 482–495 (2025).

Yang, Y. Q. et al. Swin3d: A pretrained transformer backbone for 3d indoor scene Understanding. Comput. Visual Media. 11 (1), 83–101 (2025).

Cao, J., Cui, J. & Schwertfeger, S. GlassGaussian: extending 3D Gaussian splatting for realistic imperfections and glass materials. IEEE Access. (2025).

Acknowledgements

The authors extend their appreciation to the King Salman center for Disability Research for funding this work through Research Group no KSRG-2024-070.

Author information

Authors and Affiliations

Contributions

Alaa O. Khadidos: Conceptualization, methodology, validation, investigation, writing—original draft preparation, fundingAyman Yafoz: Conceptualization, methodology, writing—original draft preparation, writing—review and editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Khadidos, A.O., Yafoz, A. Leveraging retinanet based object detection model for assisting visually impaired individuals with metaheuristic optimization algorithm. Sci Rep 15, 15979 (2025). https://doi.org/10.1038/s41598-025-99903-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-99903-y