Abstract

Large language models (LLMs) have the potential to enhance evidence synthesis efficiency and accuracy. This study assessed LLM-only and LLM-assisted methods in data extraction and risk of bias assessment for 107 trials on complementary medicine. Moonshot-v1-128k and Claude-3.5-sonnet achieved high accuracy (≥95%), with LLM-assisted methods performing better (≥97%). LLM-assisted methods significantly reduced processing time (14.7 and 5.9 min vs. 86.9 and 10.4 min for conventional methods). These findings highlight LLMs’ potential when integrated with human expertise.

Similar content being viewed by others

Introduction

Evidence syntheses require independent reviewers to extract data and assess the risk of bias (ROB)1, resulting in a labor-intensive and time-consuming process2, especially particularly in complementary and alternative medicine (CAM) research3,4. CAM has gained prominence due to its efficacy and safety profiles, leading to increased adoption by both clinicians and patients5,6. However, the lack of high-quality evidence necessitates efficient syntheses to support clinical practice7. Challenges include CAM’s complex, discipline-specific terminology and multilingual literature, complicating data extraction8.

Recent advances in generative artificial intelligence have produced outstanding large language models (LLMs) capable of analyzing vast text corpora, capturing complex contexts, and adapting to specialized domains, making them suitable for evidence synthesis9. Preliminary studies suggest LLMs’ potential in systematic reviews and meta-analyses10,11,12,13. However, their application in CAM is limited due to difficulties in creating CAM-specific prompts, maintaining precision in terminology, and handling diverse languages and study designs14. Moreover, the potential for LLM-assisted methods, where AI and human expertise work in tandem, remains largely unexplored. This study aimed to develop structured prompts for guiding LLMs in extracting both basic and CAM-specific data and assessing ROB in randomized controlled trials (RCTs) on CAM interventions. We compared LLM-only and LLM-assisted methods to conventional approaches, seeking to enhance efficiency and quality in data extraction and ROB assessment, ultimately supporting clinical practice and guidelines.

We randomly selected 107 RCTs (Supplementary Table 1) from 12 Cochrane reviews15,16,17,18,19,20,21,22,23,24,25,26, spanning 1979–2024, with 27.1% in English and 72.9% in Chinese; 44.9% were published post-2013. Studies focused on mind-body practices (41.1%), herbal decoctions (34.6%), and natural products (24.3%). Based on OCR recognizability, 94.4% of RCTs had higher recognizability (≥70% of text and data accurately detected), while 5.6% had lower recognizability (<70%).

Two LLMs—Claude-3.5-sonnet and Moonshot-v1-128k—were employed to extract data and assess ROB. Supplementary Notes 1 to 4 document all responses from two models. Supplementary Tables 2 to 7 present the analysis results of LLM-only and LLM-assisted extractions and assessments.

From 107 RCTs, both models produced 12,814 extractions. As shown in Fig. 1, Claude-3.5-sonnet showed superior overall accuracy (96.2%, 95% CI: 95.8–96.5%) compared to Moonshot-v1-128k (95.1%, 95% CI: 94.7–95.5%), with a statistically significant difference (RD: 1.1%, 95% CI: 0.6–1.6%; p < 0.001). Claude outperformed in the Baseline Characteristics domain, while both models had similar accuracy across other domains. For Moonshot-v1-128k, the highest correctness rate was in the Outcomes domain (97.6%), and the lowest was in the Methods domain (90.9%). Errors in Moonshot-v1-128k’s extractions often resulted from incorrectly labeling data as “Not reported.” Commonly missed information included start/end dates (44 RCTs), baseline balance descriptions (39), number analyzed (40), demographics (22), theoretical basis (21), treatment frequency (8), all outcomes in 3 RCTs, and specific outcome data in 45 RCTs. However, Moonshot-v1-128k successfully extracted CAM-specific data, such as traditional Chinese medicine terminology. The inter-model agreement rate between Claude and Moonshot-v1-128k was 93.8%, with 83.3% of Claude’s errors also present in Moonshot-v1-128k’s results.

This figure compares the accuracy of two language models, Claude-3.5-sonnet and Moonshot-v1-128k, in data extraction and risk-of-bias (ROB) assessments across multiple domains in 107 RCTs. Claude-3.5-sonnet demonstrated higher overall accuracy in data extraction (96.2%, 95% CI: 95.8% to 96.5%) than Moonshot-v1-128k (95.1%, 95% CI: 94.7% to 95.5%), with a statistically significant difference of 1.1% (p < 0.001). In the ROB assessment, Claude-3.5-sonnet also achieved slightly higher accuracy (96.9% vs. 95.7%), though the difference was not statistically significant. The greatest difference in domain-specific accuracy was observed in the Baseline Characteristics for data extraction, where Claude-3.5-sonnet outperformed Moonshot-v1-128k.

Investigators refined Moonshot-v1-128k’s extractions, achieving a corrected accuracy of 97.9% (95% CI: 97.7–98.2%), higher than the expected 95.3% for conventional methods (RD: 2.6%, 95% CI: 2.2–3.1%; p < 0.001; Fig. 2). The RD between LLM-assisted and LLM-only extractions was 2.8% (95% CI: 2.4–3.2%; p < 0.001; Table 1). Accuracy improvements were most notable in the Methods domain (+7.4%) and the Data and Analysis domain (+6.0%). Subgroup analyses revealed that higher PDF recognizability positively impacted Moonshot-v1-128k’s accuracy (p interaction = 0.023) but had no significant effect on LLM-assisted accuracy (p interaction = 0.100). Claude achieved higher accuracy in extracting data from English RCTs compared to Chinese RCTs (p interaction = 0.000).

This figure presents a comparison of the accuracy (correct rate) and efficiency (time spent) of three methods for data extraction and risk of bias (ROB) assessment: conventional, LLM-only, and LLM-assisted. For data extractions, the conventional method had an estimated accuracy of 95.3% and took 86.9 min per RCT. The LLM-only method achieved an accuracy of 95.1% and took only 96 s per RCT, while the LLM-assisted method had the highest accuracy at 97.9% and took 14.7 min per RCT. For ROB assessments, the conventional method had an estimated accuracy of 90.0% and took 10.4 min per RCT. The LLM-only method achieved an accuracy of 95.7% and took only 42 s per RCT, while the LLM-assisted method had the highest accuracy at 97.3% and took 5.9 min per RCT. These results demonstrate that LLM-assisted methods can achieve higher accuracy than conventional methods while being substantially more efficient.

Both models conducted 1,070 ROB assessments. As shown in Fig. 1, Claude achieved 96.9% accuracy (95% CI: 95.7–97.9%), slightly higher than Moonshot-v1-128k’s 95.7% (95% CI: 94.3–96.8%), though the difference was not statistically significant (RD: 1.2%, 95% CI: –0.4–2.8%). Moonshot-v1-128k’s lowest accuracy was in the Sequence generation domain (87.9%), while other domains ranged from 94.4% to 100.0%. Sensitivities in Selective outcome reporting and Other bias were relatively low (0.50 and 0.40), with corresponding F-scores of 0.67 and 0.44, but other domains had F-scores between 0.97 and 1.00. Of the 46 incorrect assessments, 62.1% were due to missing supporting information, while 37.9% involved correct data extraction but erroneous judgments. Cohen’s kappa values indicated substantial to almost perfect agreement in most domains, except for Selective outcome reporting (0.66) and Other bias (0.42), likely due to high true negative rates (>93%). The inter-model agreement between Claude-3.5-sonnet and Moonshot-v1-128k was almost perfect (Cohen’s kappa = 0.88), with 66.7% of Claude’s errors also present in Moonshot-v1-128k’s results.

After refinement based on Moonshot-v1-128k’s assessments, the mean correctness rate of LLM-assisted ROB assessments increased to 97.3% (95% CI: 96.1–98.2%), significantly surpassing the expected 90.0% accuracy of conventional methods (RD: 7.3%, 95% CI: 6.2–8.3%; p < 0.001; Fig. 2). The RD between LLM-assisted and LLM-only assessments was 1.6% (95% CI: 0.0–3.2%; p = 0.05; Table 2), indicating that human review corrected some errors, leading to improved accuracy. The PABAK (Prevalence-Adjusted Bias-Adjusted Kappa) among the four investigators was 0.88, signifying an almost perfect agreement. The Sequence generation domain exhibited the greatest improvement in accuracy (+8.4%), and all errors in the Allocation sequence concealment domain were rectified, achieving a 100% correctness rate. Subgroup analysis revealed that Claude-3.5-sonnet achieved significantly higher accuracy in assessing ROB for English-language RCTs compared to Chinese-language RCTs (p interaction = 0.000). Conversely, LLM-assisted assessments showed higher accuracy for RCTs published in Chinese (p interaction = 0.023), suggesting that the investigators’ native language influenced assessment accuracy.

For both data extraction and ROB assessment, the LLM models demonstrated significant time savings compared to conventional methods. Data extraction took an average of 96 s per RCT with Moonshot-v1-128k and 82 s with Claude-3.5-sonnet, while refinement extended Moonshot-v1-128k-assisted extractions to 14.7 min per RCT—still much faster than the 86.9 min required by traditional approaches. Similarly, ROB assessments averaged 42 s per RCT with Moonshot-v1-128k and 41 s with Claude, with Moonshot-v1-128k-assisted assessments, including refinement, taking just 5.9 min per RCT compared to 10.4 min for conventional methods.

Overall, both Claude-3.5-sonnet and Moonshot-v1-128k demonstrated high accuracy, with LLM-assisted methods significantly outperforming conventional approaches in both accuracy and efficiency (Fig. 2). Claude-3.5-sonnet achieved slightly higher accuracy than Moonshot-v1-128k for data extraction (96.2% vs. 95.1%) and ROB assessment (96.9% vs. 95.7%), though the difference was statistically significant only for data extraction. Errors in both models often stemmed from failing to identify reported data, such as start/end dates and participant numbers, rather than misinterpreting extracted information. ROB assessment errors were most frequent in the Sequence generation domain, where inconsistent judgments arose despite correct justifications. For example, Moonshot-v1-128k accurately identified the randomization method in Mao, 2014 (Supplementary Note 2) but incorrectly classified it, suggesting challenges in applying rule-based criteria.

LLM-assisted methods proved particularly effective in addressing these issues. Human reviewers identified and corrected common error patterns, significantly improving accuracy, especially in the Methods domain (+7.4%) for data extraction and the Sequence generation domain (+8.4%) for ROB assessment. By addressing these recurring issues, reviewers not only enhanced the reliability of individual assessments but also provided insights into systematic weaknesses in LLM outputs. This process highlighted the critical role of human expertise in working with LLMs, as reviewers could identify specific areas needing improvement and ensure the conclusions were accurate and reliable.

Efficiency gains were substantial. For data extraction, time per RCT decreased from 86.9 min to 14.7 min, while ROB assessment times dropped from 10.4 min to 5.9 min. The combination of time savings and improved accuracy significantly enhances evidence synthesis, particularly in complex domains like CAM that demand specialized knowledge.

Subgroup analyses revealed that higher PDF recognizability improved Moonshot-v1-128k’s extraction accuracy (p interaction = 0.023), but Claude-3.5-sonnet outperformed in extracting data from English-language RCTs compared to Chinese-language ones (p interaction = 0.000). This subgroup difference may be attributed to variations in the training datasets of the models, underscoring the need to consider document quality and language characteristics when applying LLMs to evidence synthesis.

This study aligns with previous research demonstrating high accuracy for LLMs like Claude 2 and GPT-4 in data extraction when guided by structured prompts27,28,29. Our findings build on earlier work by optimizing prompts to enhance domain-specific judgment logic, step-wise reasoning, and few-shot learning, leading to significantly higher accuracy in ROB assessments compared to prior studies30. Additionally, incorporating confidence estimates and justifications for each domain allowed investigators to more effectively identify errors.

Strengths of this study include validated prompts, a diverse sample of RCTs, and the inclusion of less experienced reviewers to estimate practical effects. Limitations include potential language-dependent biases, as all reviewers were native Chinese speakers, and reliance on benchmark estimates for conventional methods, which may not fully reflect current practices across diverse settings. The RCTs included in our study had first authors affiliated with institutions in 12 countries and regions, but 83 studies (77.6%) were from mainland China. While we analyzed the publication language of the RCTs, it is possible that the primary language background of researchers was predominantly Chinese, which may pose challenges for generalizing these findings. While this study demonstrated the feasibility and effectiveness of LLM-assisted methods using Claude-3.5-sonnet, future research should explore the application of other high-ranking models to ensure broader generalizability. Incorporating models from authoritative rankings or widely recognized sources could validate whether the approach is robust across different architectures and datasets, enhancing its applicability in diverse research contexts.

Methods

This study was conducted between November 3, 2023, and September 30, 2024, adhering to the AAPOR reporting guideline31. The Medical Ethics Review Committee of Lanzhou University’s School of Public Health exempted the study from requesting approval since all data originated from published studies.

This study employed two LLMs: Moonshot-v1-128k, an open-access model developed by Moonshot AI32, and Claude-3.5-sonnet, developed by Anthropic33. Our hypothesis posits that a two-step process involving (1) data extraction and ROB assessment by LLMs guided by structured prompts, followed by (2) verification and refinement by a single researcher, would yield results non-inferior in accuracy and superior in efficiency to conventional methods requiring two independent researchers.

Tools and Prompts for Data Extraction and Risk-of-Bias Assessment

We drafted prompts for data extraction and ROB assessment using a few-shot learning strategy. For data extraction, we followed the Cochrane Handbook (Supplementary Table 8)34, aiming to include general core items in the following domains: Methods, Participants, Intervention groups, Outcomes, Data and analysis, and Others. Additionally, we extended these prompts to include CAM-specific elements following Xia et al.35, such as Chinese diagnostic patterns, therapeutic principles and methods, herbal formula compositions, non-pharmacological therapy details, and theoretical basis.

For ROB assessment, we drafted a prompt following a modified version of the Cochrane 1.0 instrument (ROB 1; Supplementary Note 5)36. It comprises ten domains: Sequence generation, Allocation sequence concealment, Blinding (including blinding of patients, healthcare providers, data collectors, outcome assessors, and data analysts), Missing outcome data, Selective outcome reporting, and Other bias. Each domain can be rated as “definitely or probably yes” (low risk of bias) or “probably or definitely no” (high risk of bias). We refined the prompts through iterative pilot tests with five selected RCTs until the two LLMs could continuously and entirely correctly extract data and assess ROB. Supplementary Notes 6 and 7 present the finalized prompts, consisting of five core components: instruction and role setting, general, specific, output formatting, and supplementary guidelines.

Selection of samples and investigators

We estimated the accuracy of human reviewers for data extraction to be 95.3%, with a mean time per RCT of 86.9 min (inclusive of time for data extraction, verification, and consensus) according to Buscemi et al.37. The accuracy of human reviewers using the ROB 1 tool for assessment was assumed to be 90% according to Arno et al.38, with a mean time per RCT of 10.4 min. Assuming an expected accuracy of 95% for both LLM-assisted extractions and ROB assessments, and a non-inferiority threshold of 0.10, we determined a sample size of 104 RCTs, based on a 2.5% type I error rate and 90% power39. We searched the Cochrane Database of Systematic Reviews using MeSH terms for complementary therapies and keywords related to randomized controlled trials to identify Cochrane reviews (Supplementary Box 1). We included Cochrane reviews that included at least 5 RCTs with evidence synthesis, provided detailed data extraction, and performed ROB assessments, with no language restrictions. Retracted studies or those with unavailable full texts were excluded. Using Excel software, we generated random numbers and selected reviews from the eligible pool. For each selected review, we employed a stratified random sampling approach. Reviews including 10 or fewer RCTs had all their studies included, while for those with more than 10 RCTs, we randomly selected 10 using an Excel-generated randomization sequence. This process continued sequentially across reviews until we attained our target sample of 104 RCTs.

Four Chinese-speaking investigators with limited experience (one to 1.5 years in evidence synthesis) participated in the manual reviews. Before starting, they underwent one month of standardized training to ensure consistency.

Study Procedures

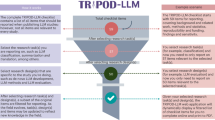

Figure 3 illustrates the main study process, led and supervised by a senior researcher (LG).

The study process began with the random selection of 107 randomized controlled trials (RCTs) on complementary and alternative medicine interventions. An investigator checked the PDF files of these RCTs to ensure their eligibility and completeness. Two large language models (LLMs)—Moonshot-v1-128k and Claude-3.5-sonnet—were employed, with tailored prompts iteratively tested and refined until both LLMs could achieve continuous, correct extractions and assessments across five consecutive RCTs. A single investigator then independently conducted extractions and risk-of-bias (ROB) assessments for each RCT using these finalized prompts. Simultaneously, four reviewers, after one month of training, used the lower-accuracy LLM outputs as initial references. Each reviewer independently checked and, if necessary, modified the LLM results to create four separate LLM-assisted assessments and extractions per RCT. To validate these results, two methodologists compared the LLM-only, LLM-assisted, and conventional manual assessments and reached a consensus to establish a benchmark reference. The study was conducted between November 2023 and September 2024, adhering to AAPOR guidelines and exempt from ethics approval by Lanzhou University’s Medical Ethics Review Committee due to its use of publicly available data.

Extraction and Assessment by the Large Language Model Independently

Two investigators (HHL and LYH) conducted extractions and assessments for all RCTs using Moonshot-v1-128k in mainland China from March 5 to 15, 2024, and Claude-3.5-sonnet in Canada from September 15 to 30, 2024. For each RCT, the investigator checked the recognizability of the portable document format (PDF) files, employing optical character recognition (OCR) software to convert them to text and quantifying the proportion of unrecognizable text and data. The investigator uploaded each PDF and prompt simultaneously to both LLMs and then exported their complete outputs. We accessed both models through their respective APIs, set the temperature parameter to 0 to ensure the models strictly followed the prompt, used a wired 100 Mbps network, and repeated any outputs invalidated by unrelated issues, such as network or server failures.

Extraction and Assessment by Reviewers with Large Language Model Assistance

Based on the outputs from the LLM with relatively lower accuracy (either Moonshot-v1-128k or Claude-3.5-sonnet), four reviewers (CYB, WLZ, JYL, and DNX) independently extracted data and assessed ROB for all RCTs. The reviewers first examined the LLM-derived results and then either agreed with them or modified them to form four LLM-assisted extraction and assessment results. The reviewers had the option to consult the original articles at their discretion throughout this process. The reviewers recorded the total time spent on each RCT, which included the time taken by the LLMs to generate the initial results plus the time spent by the reviewers to verify and modify those results.

Outcome measures

We evaluated the performance of both LLM-only and LLM-assisted methods in terms of accuracy and efficiency, comparing them against conventional manual methods as well as assessing the accuracy improvement from LLM-only to LLM-assisted methods. Two methodologists (HHL, LG) independently compared the results from three sources: the direct output from the LLMs (LLM-only), the results after human review and modification of the LLM output (LLM-assisted), and their own manual extraction and assessment. Through careful comparison and discussion of these three sets of results, the methodologists collaboratively established a reference standard for each extraction and assessment. Any discrepancies were resolved through consensus, ensuring a robust and accurate benchmark against which to evaluate the LLM-based methods.

For data extraction, we considered extractions incorrect if they had substantial omissions or erroneous extractions, while minor differences in phrasing or formatting that did not affect the content’s accuracy were disregarded. For ROB assessment, “definitely/probably yes” is categorized as having a low risk of bias and “definitely/probably no” as having a high risk of bias, considering the overall intent and implications of assessors’ judgments rather than strictly adhering to semantic distinctions in response options. To assess efficiency, we measured the total time spent on each RCT, which included the LLM’s generation time and the investigators’ verification and modification time.

Data analysis

We conducted data analysis using R version 4.3.340. We quantified accuracy as the percentage of correct evaluations (correctness rate). We calculated the rate difference (RD) with 95% confidence intervals (CIs) to compare the overall correct correctness rates between LLM-assisted and conventional methods, considering an RD > 0.10 as an indication of superiority and an RD < -0.10 as an indication of inferiority. The RDs between LLM-assisted and LLM-only methods were also calculated for both overall and for each domain. For ROB, we also calculated domain-specific and overall sensitivity, specificity, and F-score, considering “high risk” as positive and “low risk” as negative. To assess consistency, we calculated the agreement rates between the LLM-only extractions across models. For ROB assessments, Cohen’s kappa measured agreement between LLM-only results from each model and between these results and the reference standard. For both data extraction and ROB assessment, we utilized the prevalence-adjusted bias-adjusted kappa (PABAK) to assess inter-rater agreement among the four investigators’ LLM-assisted results. We chose PABAK over Fleiss’s Kappa due to the high prevalence of correct extractions and assessments, which can yield paradoxically low kappa values despite high observed agreement.

We conducted subgroup analyses of potential influencing factors, including PDF recognizability (dichotomized as higher recognizability, with ≥70% of text and data elements accurately detected by OCR in their original layout and context, versus lower recognizability, with <70% accurately detected), publication language (English versus non-English), and year of publication (prior to 2013 versus 2013 and later). These subgroup analyses were performed based on a priori hypotheses that studies with higher PDF recognizability, published in English, and more recently published would be associated with greater accuracy.

Data availability

All data generated or analyzed during this study are included in this published article and its supplementary information files. Additional details or specific datasets from the analysis can be made available by the corresponding author upon reasonable request.

Code availability

The custom code used for prompt development and data extraction/assessment processes involving Moonshot-v1-128k and Claude-3.5-sonnet LLMs is available from the corresponding author upon reasonable request. The analysis scripts, along with the LLM interaction pipelines, were developed using open-source R packages.

References

Page, M. J. et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 372, n71 (2021).

Veginadu, P., Calache, H., Gussy, M., Pandian, A. & Masood, M. An overview of methodological approaches in systematic reviews. J. Evid. Based Med. 15, 39–54 (2022).

Shekelle, P. G. et al. Challenges in systematic reviews of complementary and alternative medicine topics. Ann. Intern Med 142, 1042–1047 (2005).

Wider, B. & Boddy, K. Conducting systematic reviews of complementary and alternative medicine: common pitfalls. Eval. Health Prof. 32, 417–430 (2009).

Tangkiatkumjai, M., Boardman, H. & Walker, D.-M. Potential factors that influence usage of complementary and alternative medicine worldwide: a systematic review. BMC Complement. Med. Ther. 20, 363 (2020).

Phutrakool, P. & Pongpirul, K. Acceptance and use of complementary and alternative medicine among medical specialists: a 15-year systematic review and data synthesis. Syst. Rev. 11, 10 (2022).

Fischer, F. H. et al. High prevalence but limited evidence in complementary and alternative medicine: guidelines for future research. BMC Complement. Altern. Med. 14, 46 (2014).

Neimann Rasmussen, L. & Montgomery, P. The prevalence of and factors associated with inclusion of non-English language studies in Campbell systematic reviews: a survey and meta-epidemiological study. Syst. Rev. 7, 129 (2018).

Lin, Z. How to write effective prompts for large language models. Nat Hum Behav https://doi.org/10.1038/s41562-024-01847-2 (2024).

Li, Z. et al. Ensemble pretrained language models to extract biomedical knowledge from literature. J Am Med Inform Assoc ocae061 https://doi.org/10.1093/jamia/ocae061 (2024).

Nashwan, A. J. & Jaradat, J. H. Streamlining Systematic Reviews: Harnessing Large Language Models for Quality Assessment and Risk-of-Bias Evaluation. Cureus 15, e43023 (2023).

Hasan, B. et al. Integrating large language models in systematic reviews: a framework and case study using ROBINS-I for risk of bias assessment. BMJ Evidence-Based Medicine https://doi.org/10.1136/bmjebm-2023-112597 (2024).

Lai, H. et al. Assessing the Risk of Bias in Randomised Controlled Trials with Large Language Models: A Feasibility Study. JAMA Netw Open.

Kumar, P. Large language models (LLMs): survey, technical frameworks, and future challenges. Artif. Intell. Rev. 57, 260 (2024).

Chen, X., Deng, L., Jiang, X. & Wu, T. Chinese herbal medicine for oesophageal cancer. Cochrane Database Syst. Rev. 2016, CD004520 (2016).

Chan, E. S. et al. Traditional Chinese herbal medicine for vascular dementia. Cochrane Database Syst. Rev. 12, CD010284 (2018).

Zhang, H. W., Lin, Z. X., Cheung, F., Cho, W. C.-S. & Tang, J.-L. Moxibustion for alleviating side effects of chemotherapy or radiotherapy in people with cancer. Cochrane Database Syst. Rev. 11, CD010559 (2018).

Ngai, S. P. C., Jones, A. Y. M. & Tam, W. W. S. Tai Chi for chronic obstructive pulmonary disease (COPD). Cochrane Database Syst. Rev. 2016, CD009953 (2016).

Mu, J. et al. Acupuncture for chronic nonspecific low back pain. Cochrane Database Syst. Rev. 12, CD013814 (2020).

Lim, C. E. D., Ng, R. W. C., Cheng, N. C. L., Zhang, G. S. & Chen, H. Acupuncture for polycystic ovarian syndrome. Cochrane Database Syst. Rev. 2019, CD007689 (2019).

Hartley, L. et al. Qigong for the primary prevention of cardiovascular disease. Cochrane Database Syst. Rev. 2015, CD010390 (2015).

Zhu, X., Liew, Y. & Liu, Z. L. Chinese herbal medicine for menopausal symptoms. Cochrane Database Syst. Rev. 3, CD009023 (2016).

Zhou, K., Zhang, J., Xu, L. & Lim, C. E. D. Chinese herbal medicine for subfertile women with polycystic ovarian syndrome. Cochrane Database Syst. Rev. 6, CD007535 (2021).

Kong, D. Z. et al. Xiao Chai Hu Tang, a herbal medicine, for chronic hepatitis B. Cochrane Database Syst. Rev. 2019, CD013090 (2019).

Flower, A., Wang, L.-Q., Lewith, G., Liu, J. P. & Li, Q. Chinese herbal medicine for treating recurrent urinary tract infections in women. Cochrane Database Syst. Rev. 2015, CD010446 (2015).

Deng, H. & Xu, J. Wendan decoction (Traditional Chinese medicine) for schizophrenia. Cochrane Database Syst. Rev. 6, CD012217 (2017).

Konet, A. et al. Performance of two large language models for data extraction in evidence synthesis. Res Synth Methods https://doi.org/10.1002/jrsm.1732 (2024).

Khraisha, Q., Put, S., Kappenberg, J., Warraitch, A. & Hadfield, K. Can large language models replace humans in systematic reviews? Evaluating GPT-4’s efficacy in screening and extracting data from peer-reviewed and grey literature in multiple languages. Res Synth. Methods 15, 616–626 (2024).

Gartlehner, G. et al. Data extraction for evidence synthesis using a large language model: A proof-of-concept study. Res Synth. Methods 15, 576–589 (2024).

Lai, H. et al. Assessing the Risk of Bias in Randomized Clinical Trials With Large Language Models. JAMA Netw. Open 7, e2412687 (2024).

Pitt, S. C., Schwartz, T. A. & Chu, D. AAPOR Reporting Guidelines for Survey Studies. JAMA Surg. 156, 785–786 (2021).

Kimi.ai. https://kimi.moonshot.cn/.

Home. Anthropic https://docs.anthropic.com/en/home.

Chapter 5: Collecting data. https://training.cochrane.org/handbook/current/chapter-05.

Xia, Y. et al. A precision-preferred comprehensive information extraction system for clinical articles in traditional Chinese Medicine. Int. J. Intell. Syst. 37, 4994–5010 (2022).

Tool to Assess Risk of Bias in Randomized Controlled Trials DistillerSR. DistillerSR https://www.distillersr.com/resources/methodological-resources/tool-to-assess-risk-of-bias-in-randomized-controlled-trials-distillersr.

Buscemi, N., Hartling, L., Vandermeer, B., Tjosvold, L. & Klassen, T. P. Single data extraction generated more errors than double data extraction in systematic reviews. J. Clin. Epidemiol. 59, 697–703 (2006).

Arno, A. et al. Accuracy and Efficiency of Machine Learning-Assisted Risk-of-Bias Assessments in ‘Real-World’ Systematic Reviews: A Noninferiority Randomized Controlled Trial. Ann. Intern Med 175, 1001–1009 (2022).

Flight, L. & Julious, S. A. Practical guide to sample size calculations: non-inferiority and equivalence trials. Pharm. Stat. 15, 80–89 (2016).

The Comprehensive R Archive Network. https://cran.rstudio.com/.

Acknowledgements

This study was jointly supported by the Fundamental Research Funds for the Central Universities (No. lzujbky-2024-oy11), the National Natural Science Foundation of China (No. 82204931) and the Scientific and Technological Innovation Project of the China Academy of Chinese Medical Sciences (No. CI2021A05502).

Author information

Authors and Affiliations

Consortia

Contributions

H.H.L., J.Y.L., and L.G. conceptualized the study, developed the structured prompts for large language models (LLMs), and drafted the manuscript. H.H.L., J.Y.L., C.Y.B., H.L., B.P., X.F.L., and D.N.X. refined and optimized the prompts for data extraction and risk-of-bias (ROB) assessments. H.H.L. ran the LLM-based data extraction and ROB assessments. H.H.L., J.Y.L., and W.L.Z. performed statistical analyses. L.Y.H., J.H.T., Y.L.C., X.L., J.L., N.N.S., X.S., and L.G. assisted in the collection and analysis of randomized controlled trials (RCTs) data, including reviewing the extraction and assessment results from LLMs and human reviewers. J.E. and L.Z. provided guidance on systematic review methodologies and contributed to the interpretation of the accuracy and efficiency results. H.C.S., Z.X.B., and L.Q.H. reviewed the study design, provided domain-specific expertise in complementary and alternative medicine (CAM), and contributed to the interpretation of CAM-specific results. K.H.Y. and L.Q.H. supervised the entire research process, overseeing both the technical aspects of LLM application and the clinical relevance of the findings. L.G. was responsible for overall project administration, ensuring integration across the study’s various components, and contributed to the final approval of the manuscript. All authors reviewed, edited, and approved the final version of the manuscript for submission.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lai, H., Liu, J., Bai, C. et al. Language models for data extraction and risk of bias assessment in complementary medicine. npj Digit. Med. 8, 74 (2025). https://doi.org/10.1038/s41746-025-01457-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41746-025-01457-w