Abstract

To screen for careless responding, researchers have a choice between several direct measures (i.e., bogus items, requiring the respondent to choose a specific answer) and indirect measures (i.e., unobtrusive post hoc indices). Given the dearth of research in the area, we examined how well direct and indirect indices perform relative to each other. In five experimental studies, we investigated whether the detection rates of the measures are affected by contextual factors: severity of the careless response pattern, type of item keying, and type of item presentation. We fully controlled the information environment by experimentally inducing careless response sets under a variety of contextual conditions. In Studies 1 and 2, participants rated the personality of an actor that presented himself in a 5-min-long videotaped speech. In Studies 3, 4, and 5, participants had to rate their own personality across two measurements. With the exception of maximum longstring, intra-individual response variability, and individual contribution to model misfit, all examined indirect indices performed better than chance in most of the examined conditions. Moreover, indirect indices had detection rates as good as and, in many cases, better than the detection rates of direct measures. We therefore encourage researchers to use indirect indices, especially within-person consistency indices, instead of direct measures.

Similar content being viewed by others

Explore related subjects

Discover the latest articles and news from researchers in related subjects, suggested using machine learning.Avoid common mistakes on your manuscript.

Questionnaire measures are convenient and are used regularly to assess psychological phenomena. However, credible results can be obtained by the survey instrument only if respondents have answered the questions attentively. Unfortunately, it is rare that all respondents will be attentive, especially if their motivation to participate is low (Goldammer et al., 2020). Respondents often rush through the questionnaire without paying attention to item content or instructions (Goldammer et al., 2020; Meade & Craig, 2012). That kind of response behavior has been commonly labeled insufficient effort responding (Huang et al., 2012) or careless responding (Meade & Craig, 2012).

The consequences of undetected careless responding can be severe. Simulations suggest that even small proportions, 10% (Hong et al., 2020; Woods, 2006) or even 5% (Credé, 2010) of careless respondents in the data, can bias results; moreover, bias increases with the rate of careless respondents (Hong et al., 2020). In addition, careless responding has a biasing effect on a range of estimates, including item covariances (Credé, 2010; Goldammer et al., 2020), item means (Goldammer et al., 2020), reliability estimates (Hong et al., 2020; Huang et al., 2012), factor loadings (Kam & Meyer, 2015; Meade & Craig, 2012), and the testing of construct dimensionality (Arias et al., 2020; Goldammer et al., 2020; Woods, 2006). As a consequence, careless responding detection research has gained traction, and several methods for detecting careless responding have been proposed (for an overview, see Curran, 2016). How good are the methods?

A common strategy for detecting careless responding is the use of bogus screening items, such as “I have never used a computer” (Huang, et al., 2015, p. 303), or instructed response items (e.g., “Answer with ‘Disagree’ for this item”; Curran & Hauser, 2019; Edwards, 2019). However, the utility of these explicit or direct measures is questionable for at least two reasons. They may confuse careful respondents and undermine the spirit of cooperation between researchers and respondents (Edwards, 2019; Ward & Meade, 2022). Moreover, respondents could legitimately justify selecting an infrequent response option or not selecting the instructed response option (Curran & Hauser, 2019), which may explain why some studies reported a high rate of participants falsely classified as careless responders (Huang et al., 2012; Niessen et al., 2016).

A better approach might therefore be the use of indirect or unobtrusive measures. Indirect indices make use of a respondent’s deviant or unlikely response pattern. Indirect careless response indices are therefore not salient to respondents, and their use does not alter the questionnaire. Several indirect indices have been proposed for careless responding detection, such as average response time per item (Huang et al., 2012), intra-individual response variability (Marjanovic et al., 2015), and standardized log-likelihood (Niessen et al., 2016).

How is careless responding studied? Many of the measures were developed in an ad hoc manner, and the use of experiments—where the information environment is fully controlled—is unfortunately rare (e.g., Goldammer et al., 2020; Huang et al., 2012; Niessen et al., 2016). In addition, it is unclear for many indices whether their detection performance depends on contextual factors, such as severity of the careless response pattern (i.e., full vs. partial careless responding), type of item keying (i.e., bidirectionally keyed items vs. unidirectionally keyed items only), and type of item presentation (one item per page vs. several items per page [matrix presentation]).

This paper has three aims. In five experiments, we examine (a) how 14 indirect careless responding detection indices perform compared to each other, (b) how the indirect indices perform (individually and jointly) compared to direct careless responding measures (i.e., bogus items and infrequency items), and (c) to what extent the detection performance of the indices is affected by the contextual factors mentioned above. By shedding light on these issues, we provide researchers with guidelines for detecting careless respondents.

In the following, we describe the theoretical basis of the 14 indirect indices that we examined (for an overview, see Table 1). The indices were selected either because they have already been successfully applied for careless responding detection or because they were effective in previous studies for detecting other types of aberrant responding (e.g., faking, cheating). These measures can be grouped into three broad categories: measures of response time, measures of response invariability, and measures of response inconsistency.

Measures of response time

If respondents are not well motivated to complete a questionnaire, it is likely that they speed up their response rate (i.e., to get through it as quickly as possible). The response time for the total survey and the page-specific response time are measures that may reflect speeded-up response behavior. If the questionnaire is conducted via an online survey tool (e.g., Qualtrics), these measures can usually be extracted automatically. In previous experimental studies, response time measures were found to be very effective in differentiating between careful and careless respondents (Goldammer et al., 2020; Huang et al., 2012; Niessen et al., 2016). We therefore expect careless respondents to complete the total survey or specific parts of a survey much faster than careful respondents (Goldammer et al., 2020; Huang et al., 2012; Niessen et al., 2016).

Measures of response invariability

To minimize their effort while completing the questionnaire, careless respondents may also use more efficient but content-irrelevant response strategies (i.e., irrelevant to the item content). Using the same response option (e.g., “agree”) or the same adjacent response options (e.g., “agree” and “strongly agree”) over a row of consecutive items would be two such effort-saving response strategies. For instance, these strategies go along with the least cursor or pencil movement needed to complete the questionnaire (Ward & Meade, 2022). Two indices have been commonly used to detect this invariant careless response patterns, maximum longstring index (Johnson, 2005; Meade & Craig, 2012) and intra-individual response variability (Dunn et al., 2018; Marjanovic et al., 2015). Except for Goldammer et al. (2020), previous experimental studies (Huang et al., 2012; Niessen et al., 2016) and simulation studies (Hong et al., 2020; Wind & Wang, 2022) have supported the utility of these invariability indices in detecting careless respondents. Compared to careful respondents, careless respondents might therefore produce response sets with longer strings of identical responses and sets in which the variability of responses is reduced.

Measures of response inconsistency

Careless respondents answering items irrespective of their content may result in three different low-probability response patterns. First, the pattern will be inconsistent within itself (items of similar content are answered differently). Psychometric antonyms (Goldberg, 2000, cited in Johnson, 2005, p. 111), psychometric synonyms (Meade & Craig, 2012, p. 443), and personal reliability (Curran, 2016; DeSimone et al., 2015; Jackson, 1976) reflect this within-person inconsistency. Second, the pattern is inconsistent with the normative response pattern (the sample norm of careful respondents). The Mahalanobis distance (Mahalanobis, 1936) and the person-total/personal-biserial correlation coefficient (rpbis; Donlon & Fischer, 1968) reflect this norm-based inconsistency. Third, the pattern is inconsistent with the measurement model. Depending on the measurement model chosen, different types of indices may be calculated that reflect different aspects of this model-based inconsistency. In this paper, we highlight three types of model-based inconsistency indices: (a) nonparametric person-fit statistics such as the normed Guttman error index for polytomous items (Emons, 2008; Molenaar, 1991) and the person or transposed scalability index (Mokken, 1971; Sijtsma, 1986), (b) parametric item response theory (IRT)-based person-fit statistics such as the standardized log-likelihood for polytomous items (Drasgow et al., 1985) and the infit and outfit mean squared error statistics (Wright & Stone, 1979), and (c) a covariance structure-based person-fit index—the individual contribution to the model misfit or χ2 (Reise & Widaman, 1999).

Within-person inconsistency

Careful respondents are expected to choose similar response options when rating items with similar content and to choose opposite response options when rating items with opposite content. Thus, response vectors of synonym pairs should correlate positively, and it follows that response vectors of antonym pairs should correlate negatively. This principle is used for calculating the psychometric synonym index and the psychometric antonym index (DeSimone et al., 2015). For this purpose, the within-person correlation is calculated between the response vectors of strongly correlated synonym or antonym pairs (e.g., greater than |.60| [Meade & Craig, 2012, pp. 442–443]). Optimally, such items pairs are obtained from the correlation matrix of careful respondents. Inconsistency in a respondent’s response pattern is indicated by low values on the synonym index or high values on the antonym index (DeSimone et al., 2015). Previous experimental studies (Goldammer et al., 2020; Huang et al., 2012) and simulation studies (Hong et al., 2020; Meade & Craig, 2012) support the utility of these two indices in detecting careless responding. Compared to careful respondents, we therefore expect careless respondents to have lower values on the synonym index and higher values on the antonym index (e.g., Goldammer et al., 2020).

Careful respondents are also expected to choose similar response options when they rate items of the same unidimensional scale. Accordingly, a score that is based on half the scale items (e.g., even items) should correlate positively with a score based on the rest of the items. This principle is applied when calculating personal reliability, which is also referred to as even–odd consistency (Curran, 2016; DeSimone et al., 2015; Jackson, 1976). If a questionnaire includes at least three scales, personal reliability can be obtained by calculating the within-person correlation between the two vectors of even–odd scale score halves (DeSimone et al., 2015). Inconsistency in a respondent’s response pattern is indicated by a low personal reliability score (DeSimone et al., 2015). In previous experimental work (Goldammer et al., 2020; Huang et al., 2012; Niessen et al., 2016) and a simulation (Meade & Craig, 2012), this index was very effective in classifying respondents. We therefore expect careless respondents to have a lower personal reliability score than that of careful respondents (e.g., Goldammer et al., 2020).

Inconsistency with the sample norm

Typically, the Mahalanobis distance (MD) has been used in regression analyses for detecting multivariate outliers (see Tabachnick & Fidell, 2007, pp. 73–77). A small distance value indicates that a respondent’s response pattern is closely in line with that of the sample norm; a large distance value indicates that a respondent’s response pattern deviates substantially from the sample norm (Goldammer et al., 2020). In previous experimental (Goldammer et al., 2020; Huang et al., 2012; Niessen et al., 2016) and simulation studies (Hong et al., 2020; Meade & Craig, 2012; Wind & Wang, 2022), the MD was very effective in detecting careless responding. We therefore expect careless respondents to have larger MD values than those of careful respondents.

Another measure, the person-total/personal-biserial correlation coefficient (rpbis), is comparable to an item-total or item-rest correlation in the context of a scale reliability analysis (Curran, 2016, pp. 12–13). This measure captures whether response patterns of individuals correlate positively with the response pattern of the sample norm. Low or even negative correlation coefficients are a point of concern. Thus, if the normative response pattern is set by careful respondents, we expect careless respondents to have produced with their content-irrelevant responses a response pattern that correlates only minimally with that of the norm (Goldammer et al., 2020); a simulation study shows likewise (Karabatsos, 2003).

Model-based inconsistency: Nonparametric person-fit indices

The idea behind Guttman errors is that respondents should answer test items in accordance with their total score (e.g., Meijer et al., 2016; Niessen et al., 2016). In the context of polytomous items, respondents produce a Guttman error if they take an unpopular item step after not taking a more popular item step previously (Niessen et al., 2016, pp. 10–11). Thus, it is very likely that careless respondents produce Gutman errors with their content-irrelevant response pattern. Initial support for the utility of Gnormed (i.e., the normed and polytomous version of the Guttman error index) in detecting careless responding was found in an experimental study (Niessen et al., 2016) and two simulation studies (Karabatsos, 2003; Wind & Wang, 2022). We therefore expect careless respondents to have larger Gnormed values than those of careful respondents.

The person or transposed scalability index (Ht) represents an extension of the conventional Guttman error index and can be interpreted as the ratio of the frequencies of observed to expected Guttman errors (Wind & Wang, 2022). Previous simulation studies (Karabatsos, 2003; Wind & Wang, 2022) suggested the utility of the Ht in detecting careless responding. Compared to careful respondents, we therefore expect careless respondents to have poorer scalability and thus smaller Ht values.

Model-based inconsistency: Parametric IRT-based person-fit indices

Compared to nonparametric person-fit statistics, which are only calculated from the respondents’ scored responses, parametric person-fit statistics require the estimation of a measurement model to be calculated (Karabatsos, 2003). In the case of an IRT model, the respondent’s latent trait level or theta is one of the core parameters and the basis for IRT-based person-fit statistics (Karabatsos, 2003), such as the standardized log-likelihood for polytomous items (lz), and the infit and outfit mean squared error (MSE) statistics. In general, such parametric IRT-based person-fit indices quantify to what extent a respondent’s response pattern is in line with the response pattern that would be expected under the person’s latent trait level (Glas & Khalid, 2017). Whereas consistently responding respondents are expected to respond to items according to their latent trait level, inconsistently responding respondents are expected to provide a response pattern that is very unlikely under the person’s latent trait level.

In the case of the lz, a misfit between the observed and expected response pattern is indicated by large negative values (Reise & Widaman, 1999, p. 6). An experimental study (Niessen et al., 2016) and simulation studies (Hong et al., 2020; Karabatsos, 2003) found support for the utility of this index in detecting careless responding. We therefore expect careless respondents to have lower lz values than those of careful respondents.

The Rasch model (a specific IRT model)-based infit and outfit MSE statistics represent two other indices that indicate deviations between the observed response pattern and the one that would be expected under the person’s latent trait level (Wright & Stone, 1979). Both these error indices range from 0 to infinity, and large positive infit and outfit MSE values are generally taken as indication of a poor fit between the observed and expected response pattern (Linacre, 2002). Because previous simulation studies (Jones et al., 2023; Karabatsos, 2003) suggested the utility of these two indices in detecting careless responding, we expect careless respondents to have larger infit and outfit MSE values than those of careful respondents.

Model-based inconsistency: Parametric covariance structure-based person-fit index

In contrast to parametric IRT-based person-fit indices, the individual contribution to the model misfit or χ2 (INDCHI) is based on the comparison of two covariance structure models—the saturated model and the substantive factor model (Reise & Widaman, 1999). This results in a statistic, INDCHI, that reflects the individual contribution to the overall factor model misfit (Reise & Widaman, 1999). Respondents with a response pattern that is rather unlikely under the estimated factor model will have larger INDCHI values than respondents that have produced a response pattern that is consistent with the estimated factor model (Reise & Widaman, 1999). Because this index performed well in detecting participants that were instructed to fake-bad (Goldammer et al., 2023), we expect this index to also have utility in detecting careless responding. Thus, we assume that careless respondents will have larger INDCHI values than those of careful respondents.

Indirect careless responding measures: Unknowns

As the review of the 14 indices shows, there are several promising indirect indices available for detecting careless responding in data sets. However, researchers still have little guidance about the relative effectiveness of different indirect careless responding indices. Even though some previous experimental studies have examined the utility of careless response indices, the analyses of these studies were limited to a selected group of indices (e.g., Goldammer et al. (2020) examined seven indices, Huang et al. (2012) examined four indices, and Niessen et al. (2016) examined six indices). By examining a selection of 14 indirect careless response indices, we provide a more informative picture of the indices’ relative performance. Our first research question was the following:

-

Research question 1: How accurate are the 14 indirect indices designed to detect careless responding compared to each other?

Even less is known about how indirect indices perform in comparison to a direct careless response measure. For instance, experimental research has not examined how the intra-individual response variability (IRV), psychometric synonyms and antonyms, rpbis, Ht, infit and outfit MSE, and INDCHI indices perform compared to a direct measure (e.g., bogus and infrequency items) in detecting careless responding. We therefore investigated the following research question:

-

Research question 2: How accurately do these 14 indirect indices detect careless responding compared to a direct careless response measure?

If several of these indirect indices turn out to be effective in detecting careless responding and increase classification accuracy beyond a direct careless responding measure, it may be fruitful to examine to what extent a selected group of indirect indices, as a set, can outperform a direct careless responding measure. We therefore addressed the following research question:

-

Research question 3: How accurately does a set of effective indirect indices detect careless responding compared to a direct measure?

In addition—and most importantly regarding our contribution—little is known on how the detection performance of the careless response indices is affected by contextual factors, such as the severity of the careless response pattern (i.e., partial vs. full careless responding), the type of item keying (i.e., bidirectionally keyed items vs. unidirectionally keyed items only), and the type of item presentation (one item per page vs. several items per page [matrix presentation]). Even though some simulation studies (Hong et al., 2020; Meade & Craig, 2012) partially examined the effects of contextual factors on the detection rate of careless response indices, the generalizability of such simulation findings may be limited, because computer-generated careless response data may not fully reflect human-generated careless response data. Niessen et al. (2016), for instance, found detection rates of indirect indices to be remarkably lower when human-generated careless response data were used, compared to when computer-generated random data were used; simulated careless response data may lead to detection rates that are too optimistic. Thus, examining the following three research questions with human-generated careless response data may bring us novel insights:

-

Research question 4: How does the severity of the careless response pattern (i.e., partial vs. careless responding) affect the detection rate of careless response indices?

-

Research question 5: How does the type of item keying (i.e., bidirectionally keyed items vs. unidirectionally keyed items only) affect the detection rate of careless response indices?

-

Research question 6: How does the type of item presentation (one item per page vs. several items per page [as matrix]) affect the detection rate of careless response indices?

To examine the six research questions, we conducted five studies in which the detection accuracy of the indices was examined based on experimentally induced careless response sets. In Studies 1 and 2, we used a between-subject factor design in which participants had to rate the personality of an actor that presented himself in a 5-min-long videotaped speech. In Studies 3, 4, and 5, we used a within- and between-subject factor design in which participants rated their own personality across two measurements. Notably, and in contrast to previous experimental studies on careless responding detection (Goldammer et al., 2020; Huang et al., 2012; Niessen et al., 2016), each of our five studies was designed in such a way that we could conduct a true test of the effect of the response instructions on participants’ response behavior (e.g., by comparing the ratings of the experimental groups with that of a norm group).

Study 1

In Study 1, we addressed research questions 1 to 4.

Method

Participants

The participants in Study 1 were 357 German-speaking conscripts doing military service in the fall of 2021 in three randomly drawn basic training camps. The participants in this sample were on average 20.33 years old (SD = 1.31) and predominantly men (n = 355, 99.4%). The educational level of the participants in the study was as follows: More than a third (n = 123, 34.5%) had completed upper secondary school, and the majority (n = 220, 61.6%) had completed a certified apprenticeship. Only a minority of the participants (n = 14, 3.9%) indicated nine years of compulsory schooling as their highest educational level.

Experimental conditions and survey arrangement

The data were gathered platoon-wise. After providing the members of each platoon with a general introduction to the study, we randomly assigned them to one of three experimental conditions—the careful responding condition (n = 119) or one of the two careless responding conditions (i.e., partially careless—careless responding to the last third of the questionnaire items [n = 120]; fully careless—careless responding to all questionnaire items [n = 118]). Civilian instructors, who were randomly assigned to one of the three conditions, then led the three subgroups into separate labs. All participants were then told that they would take part in an experiment that was about how to best identify careless responding in survey data and that after completing the survey they would all receive 10 Swiss francs (about US $10) as compensation for their efforts.

Participants were further told that they would first have to attentively watch a 5-min-long videotaped self-presentation by a non-commissioned officer (NCO) candidate and would then have to complete a questionnaire (i.e., Big-Five Inventory [BFI-2]; Soto & John, 2017) about that NCO candidate. The self-presentation was played by a non-professional actor and was designed such that he would receive high scores on the dimensions extraversion, conscientiousness, agreeableness, and open-mindedness, and low scores on the dimension negative emotionality (see supplements for video and translated script). After watching the video, participants in the careful responding group were asked to complete the questionnaire on the NCO candidate accurately and honestly (i.e., they were told to simply give their honest, personal view of the candidate); participants in the partially careless responding condition were asked to complete the first survey part (i.e., 66% of the items) accurately and honestly and the second survey part (i.e., 33% of the items) with the following attitude: They should show no great interest in completing the rest of the survey. Accordingly, they were to get the survey over with as quickly as possible, without being caught by our careless responding detection method. Participants in the fully careless responding condition were asked to complete the full questionnaire with the following attitude: They should respond as if they had no great interest in completing the survey. Accordingly, they were to get the survey over with as quickly as possible, without being caught by our careless responding detection methods. After receiving the instructions, the participants started completing the online questionnaire, beginning with three sociodemographic questions. They then completed the main part of the questionnaire, in which the order of the items was randomized and each item was displayed on a single web page.

Substantive measures

The participants completed the German translation (Danner et al., 2019) of the BFI-2 (Soto & John, 2017) to rate the NCO candidate in the video. The BFI-2 is composed of the five trait scales extraversion (αCareful = 0.78, αPartially careless = 0.49, αFully careless = 0.62), agreeableness (αCareful = 0.88, αPartially careless = 0.65, αFully careless = 0.73), conscientiousness (αCareful = 0.86, αPartially careless = 0.64, αFully careless = 0.77), negative emotionality (αCareful = 0.80, αPartially careless = 0.51, αFully careless = 0.71), and open-mindedness (αCareful = 0.80, αPartially careless = 0.39, αFully careless = 0.56). All 60 bidirectionally keyed items (i.e., 30 positively and 30 negatively worded items) of the BFI-2 were rated on a Likert scale ranging from 1 = completely disagree to 6 = completely agree.

Direct careless responding measure

We used three bogus items as a direct careless responding measure. The two items “I have been to every country in the world” and “I have never brushed my teeth” were taken from Meade and Craig (2012, p. 441), and the item “I have never used a computer” was taken from Huang et al., (2015, p. 303). We chose these three bogus items because they turned out to be the only items for which participants in the two studies by Curran and Hauser (2019, p. 4) could not find valid justification when selecting infrequent response options. In addition, using three bogus items per 60 substantial items seemed reasonable to give this direct measure a fair chance to perform well (see Huang et al., 2015, p. 303). These three bogus items were embedded in the regular questionnaire and randomly displayed. As with the substantial items, respondents rated the three bogus items on a Likert scale from 1 = completely disagree to 6 = completely agree. For the main analyses, an average score was computed across the three items (αCareful = 0.43, αPartially careless = 0.34, αFully careless = 0.71). If a respondent’s response was missing, the average score was based on the remaining non-missing responses. However, this average score could not be computed for one respondent in the careful responding group, because this respondent did not answer any of the bogus items.

Indirect careless responding measures

We calculated the 14 indirect indices from our representative selection. For computing these indirect indices, only the 60 items of the BFI-2 were used. Detailed information on how these indices were computed is provided in the supplementary material.

Careless responding criterion and analytical procedure

The indices’ classification accuracy of careless and careful responders was our outcome variable in Study 1. We therefore plotted for every index (and selected combinations of indices) a receiver operating characteristic (ROC) curve (e.g., Swets, 1986) and examined the corresponding area under the curve (AUC). We used the tie-corrected nonparametric method (i.e., trapezoidal approximation) with bootstrap standard errors (i.e., 1000 replications) for estimating the AUCs and its standard errors. All these analyses were performed in Stata (StataCorp, 2021).

Results

Manipulation check

To examine whether our manipulation worked, we compared the scores of the five substantive scales and three indirect careless response indices (i.e., average response time per item, IRV, resampled personal reliability [RPR]) of the experimental groups with those of a norm group. The comparisons revealed that our manipulation was successful (see supplementary material and Table S1). Thus, we proceeded with the main analyses.

Descriptive statistics

The condition-specific correlation matrices as well as the condition-specific means and standard deviations of the substantive measures and careless responding indices are reported in the supplementary material (see Tables S2–S4).

Comparisons between the indirect indices

The omnibus test indicated a significant inequality between the AUCs for detecting partially careless respondents (F[13, 4.9*109] = 18.28, p < .001) and between the AUCs for detecting fully careless respondents (F[13, 2.3*1010] = 25.91, p < .001). These global inequalities were then further explored through Bonferroni-corrected pairwise comparisons.

For detecting partially careless respondents (i.e., Careless 33%), the following indices turned out to be effective (i.e., the confidence interval of their AUC did not include 0.5): RPR (i.e., repeated calculation across several random splits of scale halves), MD, lz, psychometric synonyms, Gnormed, outfit MSE, infit MSE, rpbis, Ht, INDCHI, psychometric antonyms, and average response time per item (see Table S5 for AUCs). Of these 12 effective indices, the RPR and the MD tended to be the best—they significantly outperformed some of the other effective indices in terms of AUC. In contrast, maximum longstring and IRV did not perform well in detecting partially careless respondents. The accuracy of these indices was not better than chance (i.e., the confidence interval of their AUC included 0.5).

For detecting fully careless respondents, all 14 examined indices turned out to be effective (see Table S6). Again, the RPR tended to be the best-performing index. It outperformed more than half the indices in terms of AUC.

Lastly, we addressed research question 4 and compared each index’s performance in detecting partially careless respondents with its performance in detecting fully careless respondents. For each index, the condition-specific univariate logit estimates (i.e., estimate for Careless 33% and estimate for Careless 100%) were therefore stacked into one model, simultaneously estimated, and tested for equality by using seemingly unrelated estimation (i.e., SUEST; Weesie, 1999). The following seven indices performed equally well in detecting partially and fully careless responding according to the Bonferroni-corrected critical χ2 or F-value of 8.49 (i.e., α = 0.05/14): Ht (χ2[1] = 0.03), RPR(χ2[1] = 0.44), psychometric synonyms (χ2[1] = 1.05), INDCHI (χ2[1] = 1.71), rpbis (χ2[1] = 2.79), MD (χ2[1] = 3.64), and psychometric antonyms (F[1, 1.7*106] = 4.72).

In contrast, the following indices performed better in detecting one specific careless responding form (i.e., partially vs. fully careless responding). For one, Gnormed (χ2[1] = 9.11), lz (χ2[1] = 10.91), infit MSE (χ2[1] = 12.51), and outfit MSE (χ2[1] = 13.18) were found to better detect partially careless responding than fully careless responding. For another, the average response time per item (χ2[1] = 12.11) and the IRV (χ2[1] = 18.63) were found to better detect fully careless responding than partially careless responding. Even though the Bonferroni-corrected critical χ2 value was not passed in the case of maximum longstring (χ2[1] = 7.23), it nevertheless seems legitimate to also claim that this index better detects fully careless responding than partially careless responding, especially when taking into account the condition-specific AUCs and their confidence intervals (see Tables S5 and S6). Whereas the 95% confidence interval of the AUC in the partially careless responding condition included 0.5, that of the AUC in the fully careless responding condition did not.

Comparisons between the average score on bogus items and indirect indices

We then compared the detection performance of each effective indirect index with that of the average score on the three bogus items (see Table 2). For detecting partially careless respondents, the Bonferroni-corrected pairwise comparisons revealed the following results: Whereas one half of the examined indices (i.e., RPR, MD, lz, psychometric synonyms, Gnormed, outfit MSE, infit MSE) performed significantly better than the average score on the bogus items, the other half (i.e., rpbis, Ht, INDCHI, psychometric antonyms, average response time per item) performed as well as the average score on the bogus items. For detecting fully careless respondents, the Bonferroni-corrected pairwise comparisons revealed the following results: Whereas the RPR and the average response time per item performed significantly better than the average score on the bogus items, the majority of indices (i.e., MD, lz, psychometric synonyms, Gnormed, rpbis, Ht, INDCHI, psychometric antonyms, outfit MSE, infit MSE, maximum longstring) were as accurate as the average score on the bogus items. Only the IRV turned out to have a significantly lower detection accuracy than the average score on the bogus items. In addition, we also examined the indices’ incremental validity beyond the average score of bogus items. These estimates are reported in the supplementary material (see Table S7).

Comparisons between the average score of bogus items and sets of indirect indices

Lastly, we compared the performance of three sets with that of the average score on the three bogus items. In the first set, all effective indirect indices were used in combination. In the second set, the three most accurate indices were used in combination. In the third set, the three indices that needed the fewest code lines until the final index was obtained (see supplementary Table S8) were used in combination. For these comparisons, we used linear multiple imputation predictions of logit models as input for the ROC analyses.

Most notably, each of the three examined sets performed significantly better than the average score on the three bogus in terms of AUC, no matter which form of careless responding was detected (see Table 3). In addition, some of the sets even outperformed the average score on bogus items in terms of sensitivity at low-false positive rates (see Table 3). For instance, at a false-positive rate of 5%, the set in which all effective indirect indices were used in combination allowed a detection rate of 69% of the partially careless respondents, whereas the average score on bogus items only detected 35%. This difference in sensitivity at a false-positive rate of 5% also turned out to be significant for fully detected careless respondents. Whereas the set of all indirect indices detected 73% of the fully careless respondents, the average score on the bogus items detected only 47%. These analyses showed that—independently of the careless responding pattern—the combined use of indirect indices leads to significantly better identification of careless responding participants than frequently used bogus items do.

Study 2

Study 1 revealed that indirect indices are effective in detecting different types of careless responding and may even outperform direct measures (i.e., bogus items). Study 2 aimed to replicate these findings in another sample and with a different set of item keying, and thus helped us to gain further insights regarding research questions 1 to 4. In contrast to Study 1, in which respondents were asked to rate the NCO in the video with a set of bidirectionally keyed items (i.e., items of the same unidimensional construct were positively and negatively worded/keyed), the respondents in Study 2 were asked to rate the NCO with a set of unidirectionally keyed items (i.e., all items of same unidimensional construct were scored in the same direction). This design allowed us to compare the indices’ detection performance across Study 1 and 2, and thus to examine research question 5 as well.

Method

Participants

The participants in Study 2 were 341 German-speaking conscripts doing military service in the fall of 2021 in three randomly drawn basic training camps. The participants in this sample were on average 20.15 years old (SD = 1.49) and predominantly men (n = 328, 96.2%). The educational level of the participants in the study was as follows: Most of the participants had either completed upper secondary school (n = 166, 48.7%) or completed a certified apprenticeship (n = 168, 49.3%). Only seven participants (2.0%) indicated the nine years of compulsory schooling as their highest educational level.

Experimental conditions and survey arrangement

The experimental conditions and the survey arrangement were identical to those in Study 1. The participants in Study 2 were randomly assigned to one of three experimental conditions— the careful responding condition (n = 112), or one of the two careless responding conditions (i.e., partially careless—careless responding to the last third of the questionnaire items [n = 114], fully careless—careless responding to all questionnaire items [n = 115]).

Substantive measures

To rate the NCO candidate in the video, the participants completed a selection of unidirectionally keyed items (i.e., all items of the same construct were keyed in the same direction) of the German adaptation (IPIP-5F-30F-R1; Iller et al., 2020) of the International Personality Item Pool (IPIP; Goldberg et al., 2006). In the complete version of the IPIP-5F-30F-R1, each of the five dimensions extraversion, agreeableness, conscientiousness, neuroticism, and openness to experience is measured with six facets, and each facet is measured with six items. To build a questionnaire of unidirectionally keyed items for Study 2 that was comparable to the questionnaire that we used in Study 1 in terms of content and length, we proceeded as follows: First, we selected from each of the five dimensions those three facets that most closely matched those of the BFI-2. Second, we selected from each of these 15 facets those four items that we thought could be most easily assessed by the participants after the video presentation. Finally, this selection resulted in a questionnaire of 60 unidirectionally keyed items (see supplementary Table S9), each of which had to be rated on a Likert scale ranging from 1 = completely disagree to 6 = completely agree. The condition-specific Cronbach’s alpha for the five personality dimensions was as follows: Extraversion (αCareful = 0.69, αPartially careless = 0.52, αFully careless = 0.56), agreeableness (αCareful = 0.78, αPartially careless = 0.64, αFully careless = 0.77), conscientiousness (αCareful = 0.77, αPartially careless = 0.62, αFully careless = 0.72), neuroticism (αCareful = 0.84, αPartially careless = 0.64, αFully careless = 0.76), and openness to experience (αCareful = 0.76, αPartially careless = 0.53, αFully careless = 0.62). These consistency values were somewhat lower than those reported by Iller et al. (2020); of course, this result makes sense given that alpha depends on the number of items in the scale, and we used fewer items (Carmines & Zeller, 1979).

Direct careless responding measure

We used the same three bogus items as in Study 1. Like the substantive measure, these three items were rated on a Likert scale that ranged from 1 = completely disagree to 6 = completely agree. For the main analyses, an average score was computed across the three items (αCareful = 0.28, αPartially careless = 0.09, αFully careless = 0.63). If a respondent’s response was missing, the average score was based on the remaining non-missing responses.

Indirect careless responding measures

We used the same indirect careless responding indices as in Study 1. For computing these indirect indices, only the 60 items of the IPIP-5F-30F-R1 were used. Detailed information on how these indices were computed is provided in the supplementary material.

Careless responding criterion and analytical procedure

We used the same criterion and analytical procedure as in Study 1.

Results

Manipulation check

To examine whether our manipulation worked, we compared the scores of the five substantive scales and three indirect careless response indices (i.e., average response time per item, IRV, RPR) of the experimental groups with those of a norm group. The comparisons revealed that our manipulation was successful (see supplementary material and Table S10). Thus, we proceeded with the main analyses.

Descriptive statistics

The condition-specific correlation matrices as well as the condition-specific means and standard deviations of the substantive measures and careless responding indices are reported in the supplementary material (see Tables S11–S13).

Comparisons between the indirect indices

The omnibus test indicated significant inequality between the AUCs for detecting partially careless respondents (F[13, 6.5*108] = 11.70, p < .001) and between the AUCs for detecting fully careless respondents (F[13, 7.0*108] = 26.49, p < .001). These global inequalities were then further explored through Bonferroni-corrected pairwise comparisons.

For detecting partially careless respondents (i.e., Careless 33%), the following 11 indices performed better than chance (i.e., the confidence interval of their AUC did not include 0.5): lz, Gnormed, MD, RPR, Outfit MSE, psychometric synonyms, rpbis, Ht, infit MSE, INDCHI, and psychometric antonyms (see Table S14). In contrast, average response per item, maximum longstring, and IRV did not perform well in detecting partially careless respondents, and they were outperformed by all other indices. Whereas average response time per item and maximum longstring did not perform better than chance (i.e., the confidence interval of their AUC included 0.5), IRV even screened in the wrong direction (i.e., it was more indicative for careful responding).

For detecting fully careless respondents, the following 12 indices performed better than chance (i.e., the confidence interval of their AUC did not include 0.5): rpbis, average response time per item, RPR, Ht, psychometric synonyms, psychometric antonyms, INDCHI, MD, lz, Gnormed, outfit MSE, and infit MSE (see Table S15). Among these effective indices, rpbis, average response time per item, RPR, and Ht tended to be the best, as they significantly outperformed indices with mediocre performance (i.e., MD, lz, Gnormed, outfit MSE, infit MSE). In contrast, maximum longstring and IRV were the only indices that turned out to be ineffective in detecting fully careless responding (i.e., the confidence interval of their AUC included 0.5).

Lastly, we compared each index’s performance in detecting partially careless respondents with its performance in detecting fully careless respondents. For each index, the condition-specific univariate logit estimates (i.e., estimate for Careless 33% and estimate for Careless 100%) were therefore stacked into one model, simultaneously estimated, and tested for equality by using seemingly unrelated estimation (i.e., SUEST; Weesie, 1999). The following nine indices were equally effective (or ineffective in the case of the maximum longstring) in detecting partially and fully careless responding according to the Bonferroni-corrected critical χ2 or F-value of 8.49 (i.e., α = 0.05/14): RPR(χ2[1] = 0.13), INDCHI (χ2[1] = 0.59), MD (χ2[1] = 2.46), Ht (χ2[1] = 3.05), psychometric synonyms (χ2[1] = 3.49), rpbis (χ2[1] = 3.83), longstring (χ2[1] = 4.64), psychometric antonyms (F[1, 292′368.4] = 4.69), and Gnormed (χ2[1] = 7.46).

In contrast, the following indices performed better in detecting one specific careless responding form. For one, infit MSE (χ2[1] = 8.73), outfit MSE (χ2[1] = 9.77), and lz (χ2[1] = 9.93) were found to better detect partially careless responding than fully careless responding. For another, average response time per item (χ2[1] = 38.59) and IRV (χ2[1] = 11.45) were found to better detect fully careless responding than partially careless responding. In the case of IRV, however, it needs to be mentioned that this difference in accuracy did not really reflect an improvement in classification accuracy. It mainly occurred because IRV did not perform that badly (i.e., being as good as chance) when fully careless responding should be detected and not as badly as it did (i.e., being more indicative for careful responding) when partially careless responding should be detected.

Comparisons between the average score on bogus items and indirect indices

We then compared the detection performance of each effective indirect index with that of the average score of the three bogus items (see Table 4). For detecting partially careless respondents, the Bonferroni-corrected pairwise comparisons revealed the following results: Whereas INDCHI and psychometric antonyms turned out to be as accurate as the average score on the bogus items, the majority of the examined indices (i.e., lz, Gnormed, MD, RPR, outfit MSE, psychometric synonyms, rpbis, Ht, infit MSE) performed significantly better. For detecting fully careless respondents, the Bonferroni-corrected pairwise comparisons revealed the following results: The majority of examined indirect indices (i.e., MD, RPR, psychometric synonyms, rpbis, Ht, INDCHI, psychometric antonyms, average response time per item) were as accurate as the average score on the bogus items. Only lz, Gnormed, outfit MSE, and infit MSE had a significantly lower detection accuracy. In addition, we also examined the indices’ incremental validity beyond the average score on bogus items. These estimates are reported in the supplementary material (see Table S16).

Comparisons between the average score of bogus items and sets of indirect indices

We then compared the performance of three sets of indirect indices with that of the average score on the three bogus items. In the first set, all effective indirect indices were used in combination. In the second set, the three most accurate indices were used in combination. In the third set, the three indices that needed the fewest code lines until the final index was obtained (see supplementary Table S8) were used in combination. For these comparisons, we used linear multiple imputation predictions of logit models as input for the ROC analyses.

For detecting partially careless respondents, all examined sets turned out to have a significantly larger AUC than the average score on bogus items (see Table 5). In addition, the set in which all effective indices were combined also outperformed the average score on bogus items in terms of sensitivity at a false-positive rate of 5%. Whereas this set of indirect indices detected 51% of the partially careless respondents, the average score on bogus items only detected 12% (see Table 5). For detecting fully careless responding, the first and second set turned out to have a significantly larger AUC than the average score on bogus items.

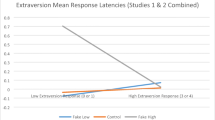

Detection performance of indices at bidirectionally and unidirectionally keyed items

We then addressed research question 5 and compared the indices’ performance in Study 1 (in which items of same unidimensional construct were positively and negatively keyed) with their performance in Study 2 (in which all items of same unidimensional construct were scored in the same direction). We therefore appended the data of Study 2 to the data of Study 1 and used the by option of the roccomp command in Stata.

However, none of the pairwise AUC comparisons for detecting partially and fully careless respondents reached the Bonferroni-corrected critical value of 9.88 (i.e., α = 0.05/30). Nevertheless, it seemed legitimate to claim that three indices functioned differently across the two studies, especially if the confidence intervals of the indices’ AUCs were used for making the judgment. For instance, when partially careless responding should be detected, the average response time per item performed significantly better than chance in Study 1 and did not do so in Study 2 (see AUCs reported in Table S5 and Table S14, respectively). For another, when fully careless responding should be detected, the maximum longstring and the IRV performed significantly better than chance in Study 1 and did not do so in Study 2 (see AUCs reported in Table S6 and Table S15, respectively).

Study 3

In Study 3, the manipulation turned out to be unsuccessful and thus prevented us from conducting the main analyses. For transparency reasons, and to inform researchers interested in conducting studies on careless responding in online populations like MTurk, we report the methods of Study 3 and the results of the manipulation check in the supplementary material (including Table S17).

Study 4

Studies 1 and 2 showed that indirect indices are effective in detecting different types of careless responding and even outperform direct measures (i.e., bogus items). Study 4 aimed to replicate these findings with a sample of Prolific workers and with a shift in the rating perspective (i.e., respondents were asked to rate their own personality). In addition, we used a different direct careless responding measure—the eight items of the infrequency scale by Huang et al. (2015). Thus, Study 4 allowed us to address research questions 1 to 4 and to examine how the comparison between direct and indirect careless responding measures turns out if a validated bogus item scale is used that contains more than twice as many bogus items as in Studies 1 and 2.

Methods

Participants

The data for Study 4 were gathered in January 2024. The participants were Prolific workers who had Prolific accounts in the United States or Great Britain and an approval rate of at least 98%. On the Prolific website and the starting web page of the online survey, Prolific workers were informed that the study would examine the impact of careless (i.e., unmotivated) responding on the quality of personality test results. For this purpose, potential participants would have to complete our personality questionnaire twice. In the first session, all participants would be asked to complete the survey accurately. In the second session, however, some participants would be asked to imitate careless responding and would be instructed to complete the survey or parts thereof according to our specific “careless responding” instructions, whereas others would be instructed to complete the questionnaire accurately. Lastly, we announced that we would pay each participant £3 for participating in our study.

A total of N = 481 Prolific workers were recruited for Study 4. The participants in this sample were on average 44.30 years old (SD = 13.97) and half of them were women (n = 249, 51.8%). Almost two thirds of the participants (n = 292, 60.7%) had a bachelor’s or higher degree, and 40% of them had an associate’s degree or a lower education level (n = 189, 39.3%). Approximately three quarters of the participants were currently employed (n = 347, 72.1%).

Experimental conditions

At the first measurement, which also included the four sociodemographic variables, all participants were instructed to respond to the questionnaire items as they applied to them. They were further informed that there were no right or wrong answers and that they just had to respond to each item as honestly as possible.

Immediately after the participants had completed the first measurement, they completed the same questionnaire again. At this second measurement, however, they were randomly assigned to one of the four experimental conditions—either to one of the two careful responding conditions (nCareful unincentivized = 120; nCareful incentivized = 121)Footnote 1 or to one of the two careless responding conditions (i.e., partially careless—careless responding to the last third of the questionnaire items [nPartially careless = 118], fully careless—careless responding to all questionnaire items [nFully careless = 122]).

Participants assigned to the unincentivized careful responding condition were again instructed to respond to the questionnaire items as they applied to them and as honestly as possible. Participants assigned to the incentivized careful responding condition received the same instruction as participants in the unincentivized careful responding condition. However, they were additionally told that we acknowledged that answering the same questions with the same instruction may be a less attractive task, which is why they would receive a bonus of £1 as compensation, so that they would nevertheless pay full attention when completing the second measurement. In contrast, participants in the partially careless responding condition were asked to complete the first survey part (i.e., 66% of the items) accurately and honestly and the second survey part (i.e., 33% of the items) with the following instruction: They should pretend that they were not really interested in the survey and just wanted to get the job done as quickly as possible. Thus, they should deliberately respond without effort to every item of the remaining questionnaire. They were further informed that there would be no risk of any penalty and that we would pay them in any case. Participants in the fully careless responding condition received the following instruction: They should pretend that they were not really interested in the survey and just wanted to get this job done as quickly as possible. Thus, they should deliberately respond without effort to every item of the questionnaire. They were further informed that there would be no risk of any penalty and that we would pay them in any case.

Substantive measures

At both measurements, the participants completed the Big Five Inventory–2 (BFI-2; Soto & John, 2017), which contains the five trait scales extraversion (αCareful unincentivized = 0.87t1/0.88t2, αCareful incentivized = 0.88t1/0.89t2, αPartially careless = 0.89t1/0.63t2, αFully careless = 0.86t1/NAt2), agreeableness (αCareful unincentivized = 0.86t1/0.87t2, αCareful incentivized = 0.83t1/0.84t2, αPartially careless = 0.81t1/0.70t2, αFully careless = 0.88t1/0.46t2), conscientiousness (αCareful unincentivized = 0.91t1/0.91t2, αCareful incentivized = 0.90t1/0.92t2, αPartially careless = 0.91t1/0.72t2, αFully careless = 0.91t1/0.62t2), negative emotionality (αCareful unincentivized = 0.94t1/0.94t2, αCareful incentivized = 0.94t1/0.94t2, αPartially careless = 0.91t1/0.77t2, αFully careless = 0.90t1/0.27t2), and open-mindedness (αCareful unincentivized = 0.87t1/0.89t2, αCareful incentivized = 0.89t1/0.91t2, αPartially careless = 0.89t1/0.77t2, αFully careless = 0.92t1/0.29t2). The respondents rated all 60 bidirectionally keyed items (i.e., 30 positively and 30 negatively worded items) of the BFI-2 on a Likert scale ranging from 1 = completely disagree to 6 = completely agree.

Direct careless responding measure

We used Huang et al.’s (2015) infrequency scale as a direct careless responding measure. The eight bogus items of this scale were embedded in the regular questionnaire and randomly displayed. As with the substantial items, respondents rated the eight bogus items on a Likert scale from 1 = completely disagree to 6 = completely agree. For the main analyses, an average score was computed across the eight items (αCareful unincentivized = 0.88t1/0.97t2, αCareful incentivized = 0.90t1/0.92t2, αPartially careless = 0.68t1/0.53t2, αFully careless = 0.78t1/0.88t2). If a respondent’s response was missing, the average score was based on the remaining non-missing responses. However, this average score could not be computed for one respondent in the fully careful responding group at the second measurement, because they did not answer any of the bogus items.

Indirect careless responding measures

We used the same indirect careless responding indices as in Studies 1 and 2. For computing these indirect indices, only the 60 items of the BFI-2 were used. Detailed information on how these indices were computed is provided in the supplementary material.

Careless responding criterion and analytical procedure

The within-subject design of Study 4 allowed us to examine whether the direct and indirect careless response indices correctly distinguished between ratings that were given by careless responders (i.e., rating at t2) and ratings that were given by careful responders (i.e., rating at t1). Accordingly, only participants that were assigned to the partially or fully careless responding condition at the second measurement were used for examining the indices’ detection accuracy.Footnote 2 As in the previous studies, we plotted for every index (and selected combinations of indices) an ROC curve (e.g., Swets, 1986) and examined the corresponding AUC. We used the tie-corrected nonparametric method (i.e., trapezoidal approximation) with bootstrap standard errors (i.e., 1000 replications) for estimating the AUCs and standard errors. Because the data for all ROC analyses were arranged in long format, the standard errors were additionally adjusted for clustering. All these analyses were performed in Stata 18 (StataCorp, 2021).

Results

Manipulation check

To examine whether the respondents followed our response instructions, we compared the t1–t2 mean differences of the five substantive scales and of three indirect careless response indices (i.e., average response time per item, IRV, RPR) across the four response conditions. The comparisons revealed that our manipulation was successful (see supplementary material and Table S18). Thus, we proceeded with the main analyses.

Descriptive statistics

The condition-specific correlation matrices at the first and second measurement as well as the condition-specific means and standard deviations at the first and second measurement of the substantive measures and careless responding indices are reported in the supplementary material (see Tables S19–S26).

Comparisons between the indirect indices

The omnibus test indicated significant inequality between the AUCs for detecting partially careless responding (χ2[13] = 966.47, p < .001) and between the AUCs for detecting fully careless responding (F[13, 35,601] = 43.29, p < .001). These global inequalities were then further explored through Bonferroni-corrected pairwise comparisons.

For detecting partially careless responding (i.e., Careless 33%), the following 12 indices turned out to be effective (i.e., the confidence interval of their AUC did not include 0.5): MD, psychometric synonyms, RPR, psychometric antonyms, Gnormed, rpbis, Ht, average response time per item, outfit MSE, lz, infit MSE, and maximum longstring (see Table S27 for AUCs). Of these effective indices, the MD and the three within-person consistency indices (psychometric synonyms, psychometric antonyms, and RPR) tended to be the best: They significantly outperformed at least five of the other eight effective indices in terms of AUC. In contrast, INDCHI and IRV did not perform well in detecting partially careless responding. The accuracy of these indices was not better than chance (i.e., the confidence interval of their AUC included 0.5).

For detecting fully careless responding, all examined indices except INDCHI turned out to be effective (see Table S28). Of the 13 effective indices, psychometric synonyms, rpbis, and RPR tended to be the best-performing indices. They significantly outperformed seven of the other ten effective indices in terms of AUC.

Lastly, we addressed research question 4 and compared each index’s performance in detecting partially careless respondents with its performance in detecting fully careless respondents. For each index, the condition-specific univariate logit estimates (i.e., estimate for Careless 33% and estimate for Careless 100%) were therefore stacked into one model, simultaneously estimated, and tested for equality by using seemingly unrelated estimation (SUEST; Weesie, 1999). Although we observed a tendency for several indices to perform better when fully instead of partially careless responding had to be detected, the Bonferroni-corrected critical value (i.e., a χ2 of 8.49 or a F-value of 8.56; α = 0.05/14) was only reached in the case of the IRV. This index was significantly better in detecting fully careless responding than partially careless responding (χ2[1] = 11.04). The remaining 13 indices were equally effective (or ineffective in the case of INDCHI) in detecting partially and fully careless responding according to the Bonferroni-corrected critical value: INDCHI (χ2[1] = 0.04), RPR(χ2[1] = 0.06), Gnormed (χ2[1] = 0.37), Ht (χ2[1] = 0.38), psychometric antonyms (F[1, 554] = 0.62), psychometric synonyms (F[1, 128,642.2] = 0.93), maximum longstring (χ2[1] = 2.12), MD (χ2[1] = 2.61), outfit MSE (χ2[1] = 3.86), lz (χ2[1] = 4.80), rpbis (F[1, 3.9*107] = 5.01), infit MSE (χ2[1] = 5.51), and average response time per item (χ2[1] = 8.04).

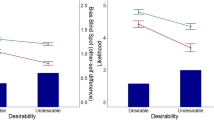

Comparisons between the average score of bogus items and indirect indices

We then compared the detection performance of each effective indirect index with that of the average score of the eight bogus items (see Table 6). For detecting partially careless responding, the Bonferroni-corrected pairwise comparisons revealed the following results: The MD and the three within-person consistency indices (psychometric synonyms, psychometric antonyms, RPR) turned out to be as accurate as the average score of the bogus items, but the majority of the examined indices (i.e., Gnormed, rpbis, Ht, average response time per item, outfit MSE, lz, infit MSE, maximum longstring) performed significantly worse. For detecting fully careless responding, the Bonferroni-corrected pairwise comparisons revealed the following results: Psychometric synonyms, rpbis, and psychometric antonyms turned out to be as accurate as the average score of bogus items, but the majority of examined indirect indices (i.e., MD, RPR, Gnormed, Ht, average response time per item, outfit MSE, lz, infit MSE, maximum longstring, and IRV) performed significantly worse. In addition, we also examined the indices’ incremental validity beyond the average score on bogus items. These estimates are reported in the supplementary material (see Table S29).

Comparisons between the average score of bogus items and sets of indirect indices

We then compared the performance of three sets of indirect indices with that of the average score of the eight bogus items. In the first set, all effective indirect indices were used in combination. In the second set, the three most accurate indices were used in combination. In the third set, the three indices that needed the fewest code lines until the final index was obtained (see supplementary Table S8) were used in combination. We used linear predictions of logit models as input for the ROC analyses in the partially careless responding condition and linear multiple imputation predictions of logit models (across 72 to 100 imputations because convergence was not achieved for estimating logit models in some imputation samples) as input for the ROC analyses in the fully careless responding condition.

For detecting partially careless responding, the analyses revealed the following: Although the second and third set were as accurate as the average score of bogus items, the first set performed significantly better than the average score of bogus items in terms of AUC and sensitivity at a false-positive rate of 1% (see Table 7). The difference in sensitivity at a false-positive rate of 1% between the first set and average score of bogus items was impressive. Moreover, the first set detected every partially careless respondent; however, the average score of bogus items only detected 17% of them.

For detecting fully careless responding, the analyses revealed the following results (see Table 7): First, the second set turned out to be as accurate as the average score of bogus items in terms of AUC and sensitivity. Second, even though the third set reached high levels of AUC (0.93) and sensitivity (0.75 at a false-positive rate of 5% and 0.70 at a false-positive rate of 1%), it performed significantly worse than the average score of bogus items, which reached an almost perfect detection accuracy. Lastly, only the first set outperformed the average score of bogus items. As for detecting partially careless responding, the first set reached perfect detection accuracy and performed significantly better than the average score of bogus items in terms of sensitivity at a false-positive rate of 1%. Whereas the first set detected every fully careless respondent, the average score of bogus items detected “only” 85% of them.

Study 5

Studies 1, 2, and 4 showed that indirect indices were effective in detecting different types of careless responding and may even outperform different sets of bogus items, and thus one popular type of direct careless responding measure. In Study 5 we aimed to replicate these findings with another sample of Prolific workers and another popular direct careless responding measure: instructed response items. Thus, Study 5 allowed us to address research questions 1 to 4 and to examine how the comparison between direct and indirect careless responding measures turns out if an index of eight instructed response items is used as a direct careless responding measure instead of an average score of bogus items.

Methods

Participants

The data for Study 5 were gathered in January 2024. The participants were Prolific workers who had Prolific accounts in the United States or Great Britain and an approval rate of at least 98%. In addition, they were excluded from participation if they were already participating in Study 4. On the Prolific website and the starting web page of the online survey, Prolific workers were informed that the study would examine the impact of careless (unmotivated) responding on the quality of personality test results. For this purpose, potential participants would have to complete our personality questionnaire twice. In the first session, all participants would be asked to complete the survey accurately. In the second session, some participants would be asked to imitate careless responding and would be instructed to complete the survey or parts thereof according to our specific “careless responding” instructions, while others would be instructed to complete the questionnaire accurately. Lastly, we announced that we would pay every participant £3 for participating in our study.

A total of N = 481 Prolific workers were recruited for Study 5. The participants in this sample were on average 42.40 years old (SD = 13.76), and almost half of them were women (n = 225, 46.8%). More than half of the participants (n = 279, 58.0%) had a bachelor’s or higher degree, and a bit more than 40% of them had an associate’s degree or a lower education level (n = 202, 42.0%). Approximately three quarters of the participants were currently employed (n = 363, 75.5%).

Experimental conditions

The experimental conditions in Study 5 were identical to those in Study 4. As in Study 4, the participants in Study 5 were randomly assigned to one of the four experimental conditions—either to one of the two careful responding conditions (nCareful unincentivized = 120; nCareful incentivized = 120) or to one of the two careless responding conditions (i.e., partially careless—careless responding to the last third of the questionnaire items [nPartially careless = 120], fully careless—careless responding to all questionnaire items [nFully careless = 121]).

Substantive measures

At both measurements, the participants completed the BFI-2 (Soto & John, 2017) which contains the five trait scales extraversion (αCareful unincentivized = 0.86t1/0.87t2, αCareful incentivized = 0.88t1/0.90t2, αPartially careless = 0.85t1/0.73t2, αFully careless = 0.90t1/0.08t2), agreeableness (αCareful unincentivized = 0.88t1/0.93t2, αCareful incentivized = 0.86t1/0.89t2, αPartially careless = 0.84t1/0.67t2, αFully careless = 0.85t1/0.39t2), conscientiousness (αCareful unincentivized = 0.89t1/0.92t2, αCareful incentivized = 0.90t1/0.92t2, αPartially careless = 0.91t1/0.78t2, αFully careless = 0.91t1/0.41t2), negative emotionality (αCareful unincentivized = 0.94t1/0.94t2, αCareful incentivized = 0.94t1/0.94t2, αPartially careless = 0.93t1/0.79t2, αFully careless = 0.94t1/0.33t2), and open-mindedness (αCareful unincentivized = 0.86t1/0.88t2, αCareful incentivized = 0.90t1/0.91t2, αPartially careless = 0.90t1/0.79t2, αFully careless = 0.87t1/0.08t2). The respondents rated all 60 bidirectionally keyed items (i.e., 30 positively and 30 negatively worded items) of the BFI-2 on a Likert scale ranging from 1 = completely disagree to 6 = completely agree.

Direct careless responding measure

We used eight instructed response items as a direct careless responding measure (e.g., Meade & Craig, 2012). These eight instructed response items were embedded in the regular questionnaire and randomly displayed. For each of the eight items, the respondents were instructed to select one particular response option on a six-point Likert scale from 1 = completely disagree to 6 = completely agree. If the instructed response option was chosen, the participant’s response was coded as 0; if uninstructed response option was chosen, the participant’s response was coded as 1. For main analyses, an average score was computed across the eight items (αCareful unincentivized = NAt1/NAt2, αCareful incentivized = NAt1/0.01t2, αPartially careless = 0.01t1/0.26t2, αFully careless = 0.45t1/0.60t2).Footnote 3 If a respondent’s response was missing, the average score was based on the remaining non-missing responses.

Indirect careless responding measures

We used the same indirect careless responding indices as in Studies 1, 2, and 4. For computing these indirect indices, only the 60 items of the BFI-2 were used. Detailed information on how these indices were computed is provided in the supplementary material.

Careless responding criterion and analytical procedure

We used the same criterion and analysis procedure as in Study 4.

Results

Manipulation check

To examine whether the respondents followed our response instructions, we compared the t1–t2 mean differences of the five substantive scales and of three indirect careless response indices (i.e., average response time per item, IRV, RPR) across the four response conditions. The comparisons revealed that our manipulation was successful (see supplementary material and Table S30). Thus, we proceeded with the main analyses.

Descriptive statistics

The condition-specific correlation matrices at the first and second measurement as well as the condition-specific means and standard deviations at the first and second measurement of the substantive measures and careless responding indices are reported in the supplementary material (see Tables S31–S38).

Comparisons between the indirect indices

The omnibus test indicated significant inequality between the AUCs for detecting partially careless responding (χ2[13] = 931.49, p < .001) and between the AUCs for detecting fully careless responding (F[13, 99′423.2] = 39.95, p < .001). These global inequalities were then further explored through Bonferroni-corrected pairwise comparisons.

For detecting partially careless responding (i.e., Careless 33%), the following indices turned out to be effective (i.e., the confidence interval of their AUC did not include 0.5): psychometric synonyms, MD, RPR, psychometric antonyms, Gnormed, Ht, rpbis, average response time per item, and maximum longstring (see Table S39 for AUCs). Of these nine effective indices, psychometric synonyms, MD, and RPR tended to be the best: They significantly outperformed four of the other nine effective indices in terms of AUC. In contrast, the following five indices did not perform well in detecting partially careless responding: IRV, infit MSE, outfit MSE, lz, and INDCHI. The accuracy of these indices was not better than chance (i.e., the confidence interval of their AUC included 0.5).

For detecting fully careless responding, all examined indices turned out to be effective (see Table S40). Among the 14 effective indices, the three within-person consistency indices (psychometric synonyms, psychometric antonyms, and RPR), rpbis, average response time per item, and MD tended to perform best: They significantly outperformed seven to eight other effective indices in terms of AUC.

Lastly, we addressed research question 4 and compared each index’s performance in detecting partially careless respondents with its performance in detecting fully careless respondents. For each index, the condition-specific univariate logit estimates (i.e., estimate for Careless 33% and estimate for Careless 100%) were therefore stacked into one model, simultaneously estimated, and tested for equality by using seemingly unrelated estimation (SUEST; Weesie, 1999). The following seven indices were equally effective in detecting partially and fully careless responding according to the Bonferroni-corrected critical χ2 of 8.49 or F-value of 8.53 (i.e., α = 0.05/14): Ht (χ2[1] = 0.08), psychometric synonyms (F[1, 7′974.6] = 0.35), MD (χ2[1] = 0.53), RPR(χ2[1] = 0.91), Gnormed (χ2[1] = 2.20), maximum longstring (χ2[1] = 3.16), and psychometric antonyms (F[1, 1096.8] = 5.98). In contrast, the following seven indices were more effective when fully instead of partially careless responding had to be detected: INDCHI (χ2[1] = 9.37), infit MSE (χ2[1] = 11.70), outfit MSE (χ2[1] = 12.47), IRV (χ2[1] = 13.40), average response time per item (χ2[1] = 13.48), lz (χ2[1] = 18.17), and rpbis (F[1, 1.7*106] = 18.84).

Comparisons between the average score of instructed response items and indirect indices

We then compared the detection performance of each effective indirect index with that of the average score of the eight instructed response items (see Table 8). For detecting partially careless responding, the Bonferroni-corrected pairwise comparisons revealed the following results: Of the nine effective indirect indices, eight of them performed as accurately as the average score of the instructed response items. The only exception was maximum longstring, which was significantly less accurate than the average score of instructed response items.

For detecting fully careless responding, the Bonferroni-corrected pairwise comparisons revealed the following: First, two indices performed significantly better than the average score of instructed response items—RPR and average response time per item. Second, a group of six indices (psychometric synonyms, psychometric antonyms, MD, Gnormed, Ht, rpbis) turned out to be as accurate as the average score of the instructed response items. Third, a group of another six indices (maximum longstring, IRV, infit MSE, outfit MSE, lz, INDCHI) performed significantly worse than the average score of instructed response items. In addition, we also examined the indices’ incremental validity beyond the average score of instructed response items. These estimates are reported in the supplementary material (see Table S41).

Comparisons between the average score of instructed response items and sets of indirect indices

We then compared the performance of three sets of indirect indices with that of the average score of the eight instructed response items (see Table 9). In the first set, all effective indirect indices were used in combination. In the second set, the three most accurate indices were used in combination. In the third set, the three indices that needed the fewest code lines until the final index was obtained (see supplementary Table S8) were used in combination. We used linear predictions of logit models as input for the ROC analyses in the partially careless responding condition and linear multiple imputation predictions of logit models (across 69 to 100 imputations because convergence was not achieved for estimating logit models in some imputation samples) as input for the ROC analyses in the fully careless responding condition.

When detecting partially careless responding, all sets of indirect indices outperformed the average score of instructed response items in terms of AUC. However, none of the three sets outperformed the average score of instructed response items at the two sensitivity levels.